Enterprise DevOps Skills Report: A Deep Dive

Navigating the ever-evolving landscape of enterprise DevOps requires a keen understanding of the skills, tools, and practices that drive success. This report dives deep into the core competencies needed to thrive in this dynamic field, from essential technical skills to the soft skills that foster collaboration and innovation within high-performing teams. We’ll look at the tools and technologies shaping the modern enterprise DevOps environment and examine their strengths and weaknesses to help you make informed decisions for your organization.

From implementing agile methodologies and mastering infrastructure as code to establishing robust security measures and scaling DevOps practices across the enterprise, we’ll cover the key strategies and best practices that ensure efficiency, resilience, and continuous improvement. We’ll also explore the future of enterprise DevOps, examining emerging technologies and the evolving skill sets that will be critical for future success. Get ready to level up your DevOps game!

Defining Enterprise DevOps Skills

Enterprise DevOps, unlike its smaller-scale counterpart, demands a broader and deeper skillset. It’s not just about automating processes; it’s about orchestrating complex systems, managing risk across diverse teams, and ensuring continuous delivery at scale. This requires a blend of technical proficiency, operational expertise, and crucial soft skills.

Core DevOps Skills in Enterprise Environments

A robust enterprise DevOps skillset encompasses a wide range of technical capabilities. These skills are essential for effective automation, monitoring, and collaboration across the entire software delivery lifecycle. Individuals should possess expertise in various areas to contribute meaningfully to the enterprise DevOps environment.

- Version Control Systems (e.g., Git): Proficient use of Git for code management, branching strategies, and collaborative development is fundamental.

- CI/CD Pipelines (e.g., Jenkins, GitLab CI, Azure DevOps): Building, configuring, and maintaining automated pipelines for continuous integration and continuous delivery is crucial for efficient deployments.

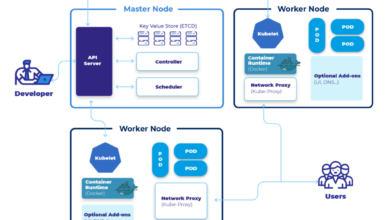

- Cloud Computing Platforms (e.g., AWS, Azure, GCP): Understanding cloud infrastructure, services, and deployment strategies is essential for scalability and flexibility.

- Containerization (e.g., Docker, Kubernetes): Experience with containerization technologies is key for efficient packaging, deployment, and management of applications.

- Infrastructure as Code (IaC) (e.g., Terraform, Ansible, CloudFormation): Automating infrastructure provisioning and management through code improves consistency and repeatability.

- Monitoring and Logging (e.g., Prometheus, Grafana, ELK stack): Effective monitoring and logging are vital for identifying and resolving issues quickly.

- Scripting and Automation (e.g., Bash, Python, PowerShell): Automation skills are essential for streamlining repetitive tasks and improving efficiency.

- Security Best Practices: Understanding and implementing security measures throughout the DevOps lifecycle is paramount.

Skills Comparison Across DevOps Roles

The specific skills required vary significantly depending on the role within an enterprise DevOps team. While some overlap exists, each role emphasizes different areas of expertise.

| Skill | Developer | Operations Engineer | Security Engineer |
|---|---|---|---|
| Coding/Scripting | High | Medium | Medium |
| CI/CD Pipeline Management | Medium | High | Medium |
| Cloud Infrastructure | Medium | High | Medium |
| Security Best Practices | Medium | Medium | High |
| System Administration | Low | High | Medium |
| Security Auditing | Low | Low | High |

The Importance of Soft Skills in Enterprise DevOps

Technical expertise alone is insufficient for success in enterprise DevOps. Strong soft skills are crucial for effective collaboration, communication, and problem-solving within large, cross-functional teams.

- Communication: Clearly conveying technical information to both technical and non-technical audiences is essential.

- Collaboration: Working effectively with developers, operations engineers, security engineers, and business stakeholders is critical.

- Problem-solving: Identifying, analyzing, and resolving complex technical issues requires a systematic and collaborative approach.

- Adaptability: The ability to adapt to changing priorities and technologies is crucial in a dynamic environment.

Enterprise DevOps Skills Matrix

This matrix illustrates proficiency levels required for different roles, using a scale of 1 (Beginner) to 5 (Expert).

| Skill | Developer | Operations Engineer | Security Engineer |
|---|---|---|---|
| Git | 4 | 3 | 2 |
| Jenkins | 3 | 4 | 2 |
| AWS | 3 | 4 | 3 |
| Kubernetes | 3 | 4 | 2 |
| Security Auditing | 1 | 2 | 5 |
| Communication | 3 | 3 | 3 |
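A matrix like this is easy to encode as data, which makes it possible to compare an individual's self-assessment against the target for their role. The sketch below is only an illustration: the `skill_gaps` function, the self-assessment values, and the choice to treat unrated skills as level 0 are assumptions, not part of any standard framework.

```python
# Target proficiency per role (1 = Beginner, 5 = Expert), copied from the matrix above.
SKILL_MATRIX = {
    "Git": {"Developer": 4, "Operations Engineer": 3, "Security Engineer": 2},
    "Jenkins": {"Developer": 3, "Operations Engineer": 4, "Security Engineer": 2},
    "AWS": {"Developer": 3, "Operations Engineer": 4, "Security Engineer": 3},
    "Kubernetes": {"Developer": 3, "Operations Engineer": 4, "Security Engineer": 2},
    "Security Auditing": {"Developer": 1, "Operations Engineer": 2, "Security Engineer": 5},
    "Communication": {"Developer": 3, "Operations Engineer": 3, "Security Engineer": 3},
}


def skill_gaps(role, self_assessment):
    """Return {skill: shortfall} for every skill rated below the role's target.

    Skills missing from the self-assessment count as level 0 (an assumption).
    """
    gaps = {}
    for skill, targets in SKILL_MATRIX.items():
        shortfall = targets[role] - self_assessment.get(skill, 0)
        if shortfall > 0:
            gaps[skill] = shortfall
    return gaps


# Hypothetical self-assessment for an operations engineer.
example = {
    "Git": 3,
    "Jenkins": 2,
    "AWS": 4,
    "Kubernetes": 1,
    "Security Auditing": 2,
    "Communication": 3,
}
print(skill_gaps("Operations Engineer", example))
```

The result lists only the skills that fall short, which can feed directly into a training plan.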

Essential Tools and Technologies

Navigating the complex landscape of enterprise DevOps requires a deep understanding of the tools and technologies that underpin its success. This section explores the core components of a robust DevOps environment, categorizing them by function and examining their strengths and weaknesses within the context of enterprise-level needs. The choice of tools is often dictated by factors like existing infrastructure, team expertise, and the scale of operations.

Effective DevOps relies on a carefully selected suite of tools integrated to create a seamless workflow. The right tools can significantly improve efficiency, reduce errors, and accelerate the software delivery lifecycle. Conversely, poorly chosen or poorly integrated tools can create bottlenecks and hinder progress.

Infrastructure as Code (IaC) Tools

IaC tools automate the provisioning and management of infrastructure, enabling consistent and repeatable deployments. This approach reduces manual configuration errors and improves scalability.

- Terraform: A popular open-source tool that uses declarative configuration to manage infrastructure across various cloud providers and on-premises environments. It excels in managing complex infrastructure and promoting collaboration through its state management capabilities. However, it can have a steeper learning curve than some other tools.

- Ansible: An agentless configuration management and automation tool known for its simplicity and ease of use. It uses YAML-based playbooks to define infrastructure configurations and automate tasks. While straightforward for smaller deployments, managing very large and complex infrastructures can become challenging.

- CloudFormation (AWS): A cloud-specific IaC service offered by Amazon Web Services. It’s tightly integrated with other AWS services, making it a natural choice for AWS-centric deployments. However, its functionality is limited to the AWS ecosystem.
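The declarative idea shared by these tools can be sketched in a few lines: you describe the desired state, and the tool works out which changes are needed to get there. The example below is a simplified illustration, not how any of the tools above is implemented. The `plan` function and the resource names are hypothetical.

```python
def plan(desired, actual):
    """Compare desired and actual resources and list the changes required.

    Both arguments map a resource name to its configuration dict.
    """
    changes = {"create": [], "update": [], "delete": []}
    for name, spec in desired.items():
        if name not in actual:
            changes["create"].append(name)
        elif actual[name] != spec:
            changes["update"].append(name)
    for name in actual:
        if name not in desired:
            changes["delete"].append(name)
    return changes


# Hypothetical desired state (what the code says) and actual state (what exists).
desired = {
    "web-1": {"size": "medium"},
    "web-2": {"size": "medium"},
    "db-1": {"size": "large"},
}
actual = {
    "web-1": {"size": "small"},
    "db-1": {"size": "large"},
    "old-cache": {"size": "small"},
}

print(plan(desired, actual))
# {'create': ['web-2'], 'update': ['web-1'], 'delete': ['old-cache']}
```

Because the plan is computed from state rather than from a list of commands, running it twice against an already correct environment produces no changes, which is what makes deployments repeatable.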

CI/CD Pipeline Tools

CI/CD pipelines automate the building, testing, and deployment of software. These tools are crucial for accelerating software delivery and improving quality. The choice of tool depends on factors such as the complexity of the application, the team’s expertise, and integration with other tools.

- Jenkins: A widely used open-source automation server that provides a flexible and extensible platform for building CI/CD pipelines. Its plugin ecosystem allows for customization to fit various needs, but this flexibility can also lead to increased complexity.

- GitLab CI/CD: Integrated into the GitLab platform, this CI/CD solution simplifies the process by tightly coupling code management and deployment. It’s particularly convenient for teams already using GitLab for version control. However, the level of customization might be less extensive than Jenkins.

- Azure DevOps: Microsoft’s cloud-based CI/CD platform offers a comprehensive suite of tools for managing the entire software development lifecycle. It integrates well with other Microsoft technologies but might be less suitable for organizations heavily invested in other ecosystems.
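Whatever the tool, the core behavior of a pipeline is the same: stages run in order, and a failure stops everything downstream. The following is a minimal sketch of that fail-fast behavior. The `run_pipeline` function and the stage callables are hypothetical stand-ins for real build, test, and deploy steps.

```python
def run_pipeline(stages):
    """Run (name, callable) stages in order and stop at the first failure.

    Each callable returns True on success. The return value is a tuple of
    (succeeded, names of completed stages, name of the failed stage or None).
    """
    completed = []
    for name, step in stages:
        if not step():
            return False, completed, name
        completed.append(name)
    return True, completed, None


executed = []


def make_step(name, succeeds):
    """Build a fake stage that records that it ran and returns a fixed result."""
    def step():
        executed.append(name)
        return succeeds
    return step


stages = [
    ("build", make_step("build", True)),
    ("test", make_step("test", False)),  # simulated test failure
    ("deploy", make_step("deploy", True)),
]

ok, done, failed = run_pipeline(stages)
print(ok, done, failed, executed)
```

In this run the deploy stage never executes, because the test stage failed first. That ordering guarantee is what keeps broken builds out of production.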

Configuration Management Tools

Configuration management tools ensure that systems are consistently configured and updated across environments. They play a critical role in maintaining stability and reducing configuration drift.

- Chef: Uses a Ruby-based DSL to define infrastructure configurations and automate tasks. It is known for its robustness and scalability but can have a steeper learning curve.

- Puppet: Another popular configuration management tool that utilizes a declarative approach to manage infrastructure. It’s known for its strong community support and extensive documentation.
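Configuration drift is simply the difference between what a baseline says and what a host actually has. The sketch below shows one way to detect it, assuming configurations are available as flat key-value dictionaries. The `find_drift` function, the host names, and the settings are hypothetical, and real tools gather this data from the hosts themselves.

```python
def find_drift(baseline, hosts):
    """Return {host: {key: (expected, found)}} for settings that differ from the baseline.

    A setting missing on a host is reported with a found value of None.
    """
    drift = {}
    for host, config in hosts.items():
        diffs = {
            key: (expected, config.get(key))
            for key, expected in baseline.items()
            if config.get(key) != expected
        }
        if diffs:
            drift[host] = diffs
    return drift


# Hypothetical baseline and per-host configuration snapshots.
baseline = {"ntp_server": "time.example.internal", "ssh_root_login": "no"}
hosts = {
    "app-01": {"ntp_server": "time.example.internal", "ssh_root_login": "no"},
    "app-02": {"ntp_server": "time.example.internal", "ssh_root_login": "yes"},
    "app-03": {"ssh_root_login": "no"},
}

for host, diffs in find_drift(baseline, hosts).items():
    print(host, diffs)
```

Hosts that match the baseline are left out of the result, so an empty dictionary means no drift was found.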

Monitoring and Logging Tools

Monitoring and logging tools provide real-time visibility into the performance and health of applications and infrastructure. They are essential for identifying and resolving issues quickly.

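- Prometheus: An open-source monitoring system that collects metrics from instrumented targets, stores them as time series, and supports querying and alerting on them. It includes a basic built-in expression browser and is commonly paired with Grafana for dashboards. Its flexibility makes it a common choice for large-scale, containerized deployments.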

- Datadog: A cloud-based monitoring and analytics platform offering comprehensive monitoring capabilities for applications, infrastructure, and logs. Its user-friendly interface and extensive integrations make it a popular choice for many organizations.

- Elastic Stack (ELK): Combines Elasticsearch, Logstash, and Kibana to provide a powerful solution for log management and analytics. It’s highly scalable and capable of handling large volumes of log data.

CI/CD Tool Comparison

| Feature | Jenkins | GitLab CI/CD | Azure DevOps |
|---|---|---|---|
| Ease of Use | Moderate | High | High |
| Flexibility/Extensibility | High | Moderate | Moderate |
| Integration | Extensive (via plugins) | Tight GitLab integration | Strong Microsoft ecosystem integration |
| Cost | Open source (self-hosted, so infrastructure and maintenance costs apply) | Free and paid tiers | Free tier and paid plans (cloud-hosted) |

DevOps Practices in the Enterprise

Successfully implementing DevOps in an enterprise requires a strategic approach that goes beyond simply adopting new tools. It necessitates a cultural shift, a commitment to automation, and a focus on continuous improvement across the entire software delivery lifecycle. This section delves into key DevOps practices crucial for enterprise-level success.

Agile Methodologies in Enterprise DevOps

Agile methodologies, with their iterative and incremental approach, are fundamental to successful enterprise DevOps. Instead of lengthy waterfall cycles, agile promotes shorter development sprints, frequent feedback loops, and continuous adaptation. In an enterprise setting, this often translates to scaling agile frameworks like Scrum or Kanban across multiple teams, requiring careful coordination and communication. Effective implementation involves establishing clear roles and responsibilities, implementing robust tracking and reporting mechanisms (like Jira or Azure DevOps), and fostering a collaborative environment where teams can quickly adapt to changing requirements and priorities.

Successful enterprise-level agile adoption often necessitates a phased approach, starting with pilot projects and gradually expanding across the organization as best practices are established and refined. This allows for continuous learning and improvement, minimizing disruption and maximizing value.

Infrastructure as Code Best Practices in Large Organizations

Infrastructure as Code (IaC) is a cornerstone of enterprise DevOps, enabling automated provisioning, configuration, and management of infrastructure. In large organizations, this translates to managing potentially thousands of servers, networks, and other resources. Best practices include using version control (like Git) for infrastructure code, employing configuration management tools (like Ansible, Chef, or Puppet) to ensure consistency across environments, and leveraging Infrastructure-as-a-Service (IaaS) platforms (like AWS, Azure, or GCP) for scalability and flexibility.

A crucial aspect is modularity – breaking down infrastructure into reusable components that can be easily deployed and managed. Robust testing strategies are also vital, ensuring that changes to infrastructure code don’t introduce unexpected issues. This includes automated testing using tools that validate the configuration and functionality of the deployed infrastructure. Finally, comprehensive documentation is essential for maintainability and collaboration within large teams.

Implementing Robust Security Measures in a DevOps Pipeline

Security is paramount in any DevOps pipeline, particularly in the enterprise. Implementing robust security measures requires a shift-left approach, integrating security practices throughout the entire software delivery lifecycle, rather than as an afterthought. This involves incorporating security scanning tools into the CI/CD pipeline to automatically detect vulnerabilities in code and infrastructure. Secure coding practices must be enforced, and regular security audits should be conducted.

Access control mechanisms need to be meticulously managed, utilizing least privilege principles to limit potential damage from compromised accounts. Automation plays a crucial role, automating security tasks such as vulnerability scanning, penetration testing, and compliance checks. A well-defined incident response plan is also vital, outlining procedures for handling security breaches and minimizing their impact. Regular security training for developers and operations teams is essential to foster a security-conscious culture.
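One common way to integrate scanning into a pipeline is a gate that fails the build when a finding reaches a chosen severity. The sketch below assumes scanner output has already been parsed into a list of dictionaries. The `security_gate` function, the severity scale, and the finding IDs are hypothetical and do not reflect the output format of any particular scanner.

```python
# Assumed severity scale; real scanners may use different labels or scores.
SEVERITY_ORDER = {"low": 1, "medium": 2, "high": 3, "critical": 4}


def security_gate(findings, fail_at="high"):
    """Return (passed, blocking_findings) for a list of scan findings.

    A finding blocks the pipeline when its severity is at or above `fail_at`.
    """
    threshold = SEVERITY_ORDER[fail_at]
    blocking = [f for f in findings if SEVERITY_ORDER[f["severity"]] >= threshold]
    return not blocking, blocking


# Hypothetical findings from a dependency and source-code scan.
findings = [
    {"id": "DEP-001", "severity": "low"},
    {"id": "DEP-002", "severity": "critical"},
    {"id": "SRC-003", "severity": "medium"},
]

passed, blocking = security_gate(findings)
print("gate passed:", passed)
print("blocking findings:", [f["id"] for f in blocking])
```

Making the threshold a parameter lets teams start with a lenient gate and tighten it as the backlog of existing findings shrinks.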

Challenges in Scaling DevOps Across an Enterprise

Scaling DevOps across a large enterprise presents several significant challenges. One common hurdle is overcoming organizational silos and fostering collaboration between development, operations, and security teams. Resistance to change from individuals or teams accustomed to traditional workflows can also hinder adoption. Integrating existing legacy systems with new DevOps tools and processes can be complex and time-consuming. Maintaining consistency and standardization across multiple teams and environments requires careful planning and execution.

Measuring the success of DevOps initiatives and demonstrating their value to the business can also be challenging, requiring the establishment of clear metrics and reporting mechanisms. Finally, ensuring sufficient training and support for teams adopting new tools and practices is essential for long-term success.

Measuring and Improving DevOps Performance

Effective DevOps implementation isn’t just about adopting new tools and processes; it’s about demonstrably improving performance and efficiency. Measuring key metrics allows us to track progress, identify bottlenecks, and ultimately optimize our DevOps pipeline for greater speed, reliability, and value delivery. This section explores crucial metrics, their application, and techniques for continuous improvement.

Key Metrics for DevOps Effectiveness

Understanding the effectiveness of your DevOps practices requires a focus on quantifiable metrics. These metrics provide objective insights into areas needing attention and allow for data-driven decision-making. Key metrics often include deployment frequency, lead time for changes, mean time to recovery (MTTR), and change failure rate. By tracking these metrics over time, we can identify trends and pinpoint areas for improvement.

For instance, a low deployment frequency might indicate a slow or cumbersome release process, while a high MTTR suggests weaknesses in incident detection and response.
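These four metrics can be computed from a simple log of deployments. The sketch below is a rough illustration with made-up records. The field names and the `delivery_metrics` function are assumptions, and recovery time is measured from the moment of deployment for simplicity, whereas a real setup would usually measure from when the incident was detected.

```python
from datetime import datetime
from statistics import mean

# Hypothetical deployment log covering a 14-day period.
deployments = [
    {"committed": datetime(2024, 1, 1, 9), "deployed": datetime(2024, 1, 2, 9),
     "failed": False, "restored": None},
    {"committed": datetime(2024, 1, 3, 9), "deployed": datetime(2024, 1, 5, 9),
     "failed": True, "restored": datetime(2024, 1, 5, 11)},
    {"committed": datetime(2024, 1, 8, 9), "deployed": datetime(2024, 1, 9, 9),
     "failed": False, "restored": None},
    {"committed": datetime(2024, 1, 10, 9), "deployed": datetime(2024, 1, 13, 9),
     "failed": True, "restored": datetime(2024, 1, 13, 13)},
]


def hours(delta):
    """Convert a timedelta to hours."""
    return delta.total_seconds() / 3600


def delivery_metrics(deployments, period_days):
    """Compute the four delivery metrics for a non-empty list of deployments."""
    if not deployments:
        raise ValueError("at least one deployment is required")
    failures = [d for d in deployments if d["failed"]]
    recovery = [hours(d["restored"] - d["deployed"]) for d in failures]
    return {
        "deployments_per_week": len(deployments) / (period_days / 7),
        "mean_lead_time_hours": mean(hours(d["deployed"] - d["committed"]) for d in deployments),
        "change_failure_rate": len(failures) / len(deployments),
        "mttr_hours": mean(recovery) if recovery else 0.0,
    }


print(delivery_metrics(deployments, period_days=14))
```

For this sample data the result is 2 deployments per week, a mean lead time of 42 hours, a change failure rate of 50%, and an MTTR of 3 hours.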

Using Metrics to Identify Areas for Improvement

Let’s consider a scenario where a company observes a consistently high lead time for changes (e.g., several weeks). This suggests inefficiencies in the software development lifecycle (SDLC). Analyzing this further might reveal bottlenecks in code review, testing, or deployment processes. By breaking down the lead time into its constituent stages, the team can identify the specific stage contributing most to the delay.

This targeted approach allows for focused improvement efforts, such as streamlining code reviews or automating testing processes. Similarly, a high change failure rate indicates a need for improved testing strategies, better code quality, or more robust rollback mechanisms.
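Breaking lead time into stages is straightforward once each change has a timestamp per stage. The sketch below averages the time spent in each stage and reports the largest contributor. The stage names, the durations, and the `find_bottleneck` function are hypothetical.

```python
def find_bottleneck(stage_hours):
    """Return (stage, share) for the stage with the highest average duration.

    `stage_hours` maps a stage name to a list of hours spent in that stage,
    one entry per change. `share` is that stage's fraction of the total
    average lead time.
    """
    averages = {stage: sum(values) / len(values) for stage, values in stage_hours.items()}
    total = sum(averages.values())
    worst = max(averages, key=averages.get)
    return worst, averages[worst] / total


# Hypothetical hours spent per stage for two recent changes.
stage_hours = {
    "coding": [10, 14],
    "code_review": [40, 56],
    "testing": [20, 28],
    "deployment": [6, 10],
}

stage, share = find_bottleneck(stage_hours)
print(f"{stage} accounts for {share:.0%} of average lead time")
```

Here code review averages 48 of the 92 total hours, so it is the first place to look for improvements.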

DevOps Performance Dashboard Design

A well-designed dashboard provides a consolidated view of key performance indicators (KPIs), enabling quick identification of trends and anomalies. Imagine a dashboard with several key sections:

- Deployment Frequency: A line graph showing the number of deployments per week or month over the past year. This visual quickly reveals trends in release velocity.

- Lead Time for Changes: A bar chart comparing the lead time for different types of changes (e.g., bug fixes, new features). This highlights potential bottlenecks in specific change types.

- Mean Time to Recovery (MTTR): A histogram displaying the distribution of recovery times across incidents in the selected period. This shows whether most incidents are resolved quickly or whether a long tail of slow recoveries is hurting service availability.

- Change Failure Rate: A pie chart showing the percentage of deployments resulting in failures. This provides a clear picture of the overall reliability of the release process.

- Customer Satisfaction (CSAT): A line graph tracking customer satisfaction scores over time, correlating them with deployment frequency and MTTR. This demonstrates the business impact of DevOps improvements.

The dashboard should use clear, concise visualizations, avoiding overwhelming the user with excessive data. Color-coding can be used to highlight areas needing immediate attention (e.g., high MTTR or failure rates). The dashboard should be easily accessible to all relevant stakeholders, providing a shared understanding of DevOps performance.
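Color-coding usually comes down to comparing each metric against a pair of thresholds. The sketch below shows one possible mapping. The threshold values and the `status` function are placeholders chosen for illustration and should be replaced with targets agreed by your own teams.

```python
# Hypothetical thresholds as (amber_above, red_above); not industry standards.
THRESHOLDS = {
    "mttr_hours": (1.0, 4.0),
    "change_failure_rate": (0.15, 0.30),
}


def status(metric, value):
    """Map a metric value to a dashboard color; higher values are worse."""
    amber_above, red_above = THRESHOLDS[metric]
    if value > red_above:
        return "red"
    if value > amber_above:
        return "amber"
    return "green"


# Hypothetical current readings.
current = {"mttr_hours": 2.0, "change_failure_rate": 0.35}
for metric, value in current.items():
    print(metric, value, status(metric, value))
```

Keeping the thresholds in one table makes them easy to review and adjust without touching the dashboard logic.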

Techniques for Continuous Improvement

Continuous improvement is central to a successful DevOps strategy. Several techniques can be employed to drive ongoing optimization:

- Regular Retrospectives: Conducting regular retrospectives allows the team to reflect on past performance, identify areas for improvement, and create actionable plans. This fosters a culture of continuous learning and adaptation.

- A/B Testing: Experimenting with different approaches to processes (e.g., different testing strategies, deployment pipelines) allows for data-driven optimization of the DevOps pipeline.

- Automation: Automating repetitive tasks such as testing, deployment, and infrastructure provisioning frees up team members to focus on higher-value activities, increasing efficiency and reducing errors.

- Monitoring and Alerting: Implementing robust monitoring and alerting systems allows for proactive identification and resolution of issues, minimizing downtime and improving MTTR.

By embracing these techniques and consistently measuring and analyzing performance, organizations can continuously improve their DevOps practices, driving greater efficiency, reliability, and business value.

Future Trends in Enterprise DevOps

The landscape of enterprise DevOps is in constant flux, driven by the relentless pace of technological innovation. Emerging technologies are not merely augmenting existing practices; they’re fundamentally reshaping the way organizations build, deploy, and manage software. Understanding these shifts is crucial for any enterprise aiming to maintain a competitive edge. AI, machine learning, and serverless computing are poised to revolutionize various aspects of the DevOps lifecycle, from automated testing and deployment to proactive infrastructure management.

However, these advancements also bring new challenges, necessitating a proactive approach to skills development and process adaptation.

The Impact of Emerging Technologies on Enterprise DevOps

The integration of AI and machine learning (ML) into DevOps workflows promises significant improvements in efficiency and reliability. AI-powered tools can automate repetitive tasks, predict potential failures, and optimize resource allocation. For example, ML algorithms can analyze historical data to identify patterns indicating impending outages, allowing DevOps teams to proactively address issues before they impact users. Serverless computing further streamlines development by abstracting away infrastructure management, enabling faster deployments and reduced operational overhead.

Teams can focus on writing code, leaving the complexities of scaling and resource provisioning to the cloud provider. This shift reduces the burden on DevOps engineers, allowing them to focus on higher-value activities like automation and optimization.
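The idea of spotting patterns that precede an outage can be illustrated with a much simpler technique than machine learning. The sketch below flags metric values that deviate sharply from a trailing window, using a plain z-score as a statistical stand-in for a trained model. The `find_anomalies` function, the window size, the threshold, and the latency samples are all assumptions.

```python
from statistics import mean, stdev


def find_anomalies(values, window=5, threshold=3.0):
    """Return indexes of values that deviate strongly from the preceding window.

    A value is flagged when it lies more than `threshold` standard deviations
    from the mean of the previous `window` values.
    """
    flagged = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            # A perfectly flat history: any change at all counts as anomalous.
            if values[i] != mu:
                flagged.append(i)
            continue
        if abs(values[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Hypothetical response-time samples in milliseconds, with one obvious spike.
latency_ms = [100, 102, 98, 101, 99, 100, 103, 97, 250, 101]
print(find_anomalies(latency_ms))
```

Only the spike at index 8 is flagged. A production system would combine many signals and learn seasonal patterns, but the principle of comparing current behavior with recent history is the same.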

Potential Skills Gaps and Future Skill Requirements

The adoption of AI, ML, and serverless computing necessitates a shift in the skillset required for enterprise DevOps engineers. While traditional skills like scripting and system administration remain important, there’s a growing need for expertise in areas such as data science, machine learning, and cloud-native architectures. The ability to interpret and utilize data generated by AI-powered monitoring tools is becoming increasingly crucial.

Furthermore, understanding the security implications of deploying applications in serverless environments is paramount. A significant skills gap exists in these areas, highlighting the need for robust training and upskilling initiatives within organizations.

Evolution of DevOps Practices and Adaptation to New Technologies

DevOps practices are constantly evolving to adapt to new technologies and changing business needs. The shift towards microservices architectures, for example, necessitates a more granular approach to deployment and monitoring. The rise of GitOps, a methodology that manages infrastructure as code through Git repositories, further emphasizes automation and collaboration. Continuous integration and continuous delivery (CI/CD) pipelines are becoming increasingly sophisticated, incorporating AI-powered testing and automated rollbacks.

The adoption of cloud-native technologies is driving the need for greater agility and resilience in DevOps practices, pushing organizations to embrace principles of observability and automation more fully.

Hypothetical Scenario: AI-Powered Predictive Maintenance in a DevOps Workflow

Imagine a large e-commerce company using a microservices architecture. Their DevOps team is struggling to manage the increasing complexity of their application landscape, leading to frequent outages and slow deployment cycles. They implement an AI-powered monitoring system that analyzes logs, metrics, and other data to predict potential failures in their microservices. This system identifies a specific service exhibiting unusual behavior, predicting a potential outage within the next 24 hours.

The AI system automatically generates an alert, notifying the DevOps team. The team uses the AI’s insights to proactively deploy a fix, preventing a major outage and ensuring business continuity. The AI also suggests improvements to the service’s architecture to prevent similar issues in the future. This proactive approach, driven by AI, drastically improves the reliability and efficiency of their DevOps workflow.

Outcome Summary

Ultimately, mastering enterprise DevOps is about more than just technical proficiency; it’s about building high-performing teams that embrace collaboration, continuous learning, and a shared commitment to delivering exceptional value. This report has served as a roadmap, highlighting the essential skills, tools, and practices needed to navigate the complexities of enterprise DevOps and thrive in this ever-changing technological landscape. By understanding the key metrics, embracing continuous improvement, and anticipating future trends, organizations can unlock the full potential of DevOps and achieve sustainable competitive advantage.

Let’s build the future of DevOps, together!

FAQs

What are the biggest challenges in implementing DevOps in a large enterprise?

Common challenges include overcoming legacy systems, integrating disparate tools, fostering a culture of collaboration across different teams, and managing the complexity of large-scale deployments.

How can I measure the success of my DevOps initiatives?

Key metrics include deployment frequency, lead time for changes, mean time to recovery (MTTR), and change failure rate, alongside business measures such as customer satisfaction. Track these over time to gauge progress.

What’s the difference between DevOps and Site Reliability Engineering (SRE)?

While closely related, DevOps focuses on the cultural and process aspects of software delivery, while SRE applies engineering principles to improve the reliability and performance of systems.

How can I upskill my team for enterprise DevOps?

Invest in training programs, workshops, certifications, and mentorship opportunities. Encourage continuous learning and experimentation.