Demystifying Serverless Security: Safeguarding Cloud Computing’s Future

Demystifying serverless security and safeguarding the future of cloud computing isn’t just a catchy title; it’s a mission statement for this post. We’re diving headfirst into the exciting, yet often confusing, world of serverless architecture and how to keep it secure. Forget the jargon-heavy white papers: we’ll explore the core concepts, the shared responsibilities, and the practical steps you need to take to build secure and robust serverless applications.

Think of this as your survival guide to navigating the sometimes treacherous landscape of cloud security.

Serverless computing, with its promise of scalability and cost-effectiveness, is rapidly transforming how we build and deploy applications. However, this innovative approach brings its own set of unique security challenges. Unlike traditional infrastructure, where you manage the entire server, serverless shifts responsibility, requiring a new understanding of vulnerabilities and protective measures. We’ll unpack the shared responsibility model, explore common vulnerabilities like injection attacks and insecure configurations, and delve into securing data both at rest and in transit.

We’ll also cover essential aspects like Identity and Access Management (IAM) and the importance of robust monitoring and logging. Get ready to level up your serverless security game!

Introduction

Serverless computing is rapidly transforming the cloud landscape, offering a compelling alternative to traditional infrastructure management. It’s a paradigm shift where developers focus solely on writing code, offloading the complexities of server provisioning, scaling, and maintenance to a cloud provider. This allows for increased agility, reduced operational overhead, and potentially lower costs. However, this shift also introduces a unique set of security challenges that require careful consideration.

Serverless architecture, at its core, involves executing code in response to events without managing servers directly. Functions are triggered by events like HTTP requests, database changes, or messages in a queue. The cloud provider handles the underlying infrastructure, including scaling resources dynamically based on demand. This “pay-per-use” model eliminates the need for constantly running servers, which can lead to significant cost savings, but it also necessitates a different approach to security.
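To make the event-driven model concrete, here is a minimal sketch of a function handler. It assumes an AWS Lambda-style `handler(event, context)` signature and an HTTP event shaped like an API Gateway proxy request (`httpMethod`, `body` as a string); the greeting logic and the `_response` helper are hypothetical. The platform calls the handler once per event, and nothing in the code provisions or manages a server.

```python
import json
from typing import Any


def handler(event: dict[str, Any], context: Any = None) -> dict[str, Any]:
    """Entry point the platform invokes once per event (Lambda-style signature assumed)."""
    # This function only serves POST requests; anything else is rejected.
    if event.get("httpMethod") != "POST":
        return _response(405, {"error": "method not allowed"})

    # In the assumed proxy-event shape, the HTTP body arrives as a string (or None).
    try:
        payload = json.loads(event.get("body") or "{}")
    except json.JSONDecodeError:
        return _response(400, {"error": "invalid JSON"})
    if not isinstance(payload, dict):
        return _response(400, {"error": "expected a JSON object"})

    name = payload.get("name", "world")
    return _response(200, {"message": f"hello, {name}"})


def _response(status: int, body: dict[str, Any]) -> dict[str, Any]:
    """Shape the return value the way an HTTP proxy integration expects (hypothetical helper)."""
    return {"statusCode": status, "body": json.dumps(body)}
```

Locally, calling `handler({"httpMethod": "POST", "body": "{\"name\": \"serverless\"}"})` returns a response with `statusCode` 200; in production the platform builds the event from the incoming request.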

Serverless Security Challenges

The decentralized and event-driven nature of serverless introduces complexities absent in traditional infrastructure. Unlike traditional servers with well-defined perimeters, serverless functions are ephemeral and often lack the persistent state associated with virtual machines. This makes traditional security practices, like network firewalls and intrusion detection systems, less effective. Moreover, the shared responsibility model between the cloud provider and the developer can be a source of confusion and potential security gaps if not clearly defined and managed.

Misconfigurations of function permissions, insecure code, and vulnerabilities in dependent services are all significant concerns. Data breaches, unauthorized access, and denial-of-service attacks remain relevant threats, but their manifestation in a serverless environment differs substantially.

Comparative Analysis: Serverless vs. Traditional Infrastructure Security

Traditional infrastructure security focuses heavily on perimeter defense, relying on firewalls, intrusion detection systems, and virtual private networks (VPNs) to protect servers and networks. Security patching and updates are typically managed centrally. In contrast, serverless security emphasizes code security, identity and access management (IAM), and the secure configuration of services. The responsibility for security is distributed between the cloud provider and the developer.

While the cloud provider handles the underlying infrastructure security, the developer is responsible for the security of their code, configurations, and data. This shared responsibility model necessitates a deeper understanding of the security implications of serverless architecture and a proactive approach to securing code and configurations. The absence of persistent servers also means that traditional security tools need to be adapted or replaced with solutions designed for the ephemeral nature of serverless functions.

For instance, traditional vulnerability scanning tools might need augmentation with runtime security monitoring.

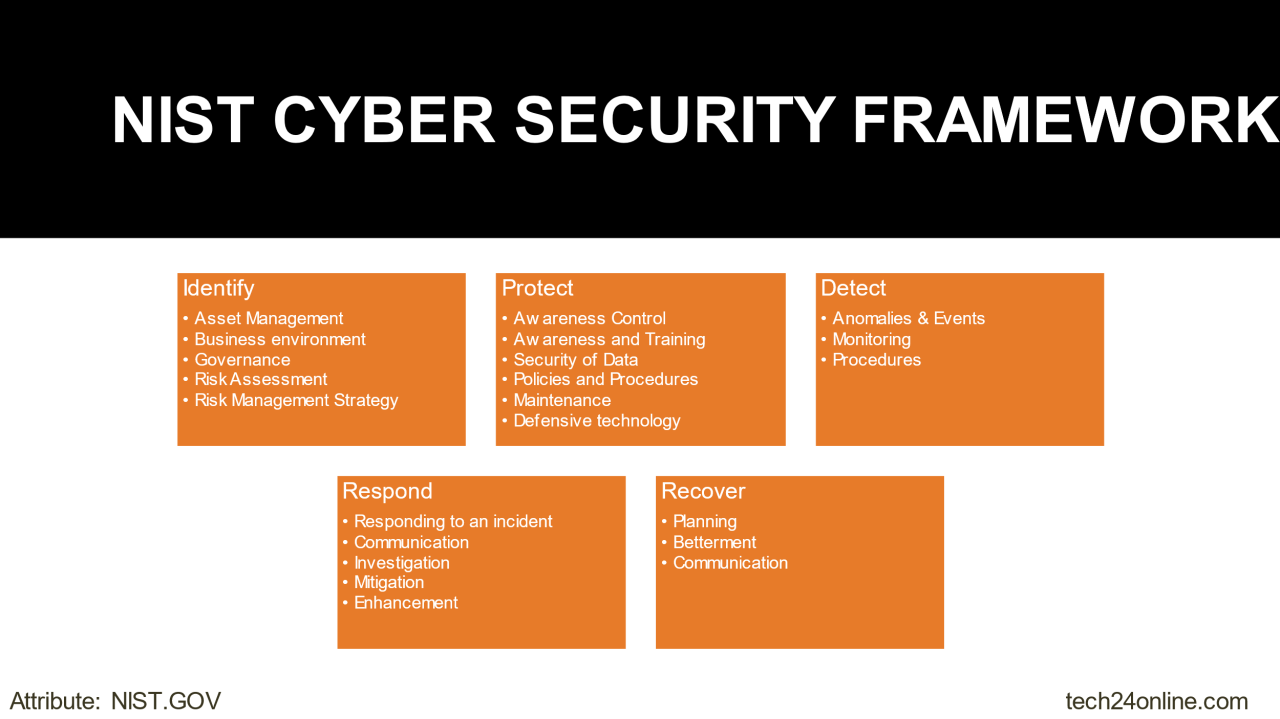

Shared Responsibility Model in Serverless Environments

Serverless computing, while offering incredible scalability and cost-efficiency, introduces a nuanced shared responsibility model between the cloud provider and the user. Understanding this model is crucial for securing your serverless applications and avoiding potential vulnerabilities. This shared responsibility isn’t a simple 50/50 split; instead, it’s a layered approach where responsibilities vary depending on the service level.

The fundamental principle revolves around the division of concerns between managing the underlying infrastructure and managing the applications and data deployed on that infrastructure. The cloud provider is responsible for the underlying infrastructure’s security, while the user is responsible for securing their applications and data running on that infrastructure. However, the lines can sometimes blur, leading to potential misunderstandings and security gaps.

Shared Responsibility Matrix in Serverless

The following table illustrates the shared responsibility model in a typical serverless deployment. Note that specific responsibilities can vary slightly depending on the specific cloud provider and services used.

| Responsibility | Provider (e.g., AWS, Azure, GCP) | User | Example |
|---|---|---|---|
| Physical Security of Data Centers | Provider manages the physical security of its data centers, including access control, environmental controls, and disaster recovery. | Not applicable | Provider maintains physical safeguards that protect servers and network infrastructure. |
| Global Infrastructure Security | Provider secures the underlying network infrastructure, including firewalls, load balancers, and DDoS protection. | Not applicable | Provider implements and maintains network security measures to prevent unauthorized access. |
| Operating System and Hypervisor Security | Provider is responsible for the security of the operating system and hypervisor. | Not applicable | Provider regularly patches and updates operating systems and hypervisors to address vulnerabilities. |
| Serverless Function Security | Provider secures the runtime environment for serverless functions. | User is responsible for secure coding practices, IAM roles, and access control within the function. | Provider supplies a secure execution environment, but the user must configure IAM roles and permissions that restrict access to resources. |
| Data Security at Rest | Provider offers encryption at rest for its storage services, often enabled by default. | User decides which data needs encryption, chooses the key management option (provider-managed or customer-managed keys), and controls access to those keys. | The user configures server-side encryption (SSE) for data stored in services such as Amazon S3, for example SSE-KMS with a customer managed key. |
| Data Security in Transit | Provider secures network communication across its own infrastructure. | User is responsible for securing data transmitted between their applications and services, using HTTPS and other secure protocols. | The user ensures all communication between their application and other services uses HTTPS. |
| Application Security | Not applicable | User is responsible for secure coding practices, vulnerability scanning, and regular security audits of their application code. | The user follows secure coding practices, conducts regular penetration testing, and implements appropriate security controls within the application code. |
| Identity and Access Management (IAM) | Provider supplies the IAM infrastructure. | User is responsible for managing user accounts, roles, and permissions that control access to their serverless functions and resources. | The user creates and manages IAM roles with least-privilege access to prevent unauthorized access to resources. |

Potential Conflicts and Misunderstandings

A major source of conflict stems from the often-blurred lines between infrastructure security and application security. For example, a misconfiguration of IAM roles by the user could expose their serverless functions to unauthorized access, even though the provider’s infrastructure is secure. Similarly, a failure to implement appropriate data encryption techniques by the user can lead to data breaches, despite the provider’s efforts to secure data at rest.

Another area of potential misunderstanding lies in the responsibility for security updates and patching. While the provider handles OS and hypervisor updates, the user is responsible for updating their application code and dependencies to address vulnerabilities. A lack of clarity on these responsibilities can lead to security gaps and increased risk.
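Keeping dependencies patched is easier when the check is automated. The sketch below compares installed package versions against a table of minimum patched versions. The package names and versions in `MINIMUM_SAFE` are hypothetical placeholders; in practice that data would come from a vulnerability database or a scanner such as `pip-audit`. The simple numeric version parser is also an assumption: real version strings (pre-releases, local versions) need a full parser such as the `packaging` library.

```python
from importlib import metadata


def parse_version(version: str) -> tuple[int, ...]:
    """Simplified parser: handles plain dotted numeric versions like '2.31.0' only."""
    return tuple(int(part) for part in version.split("."))


def find_outdated(installed: dict[str, str], minimum_safe: dict[str, str]) -> list[str]:
    """Return names of installed packages whose version is below the patched minimum."""
    outdated = []
    for name, min_version in minimum_safe.items():
        current = installed.get(name)
        if current is not None and parse_version(current) < parse_version(min_version):
            outdated.append(name)
    return sorted(outdated)


def installed_versions(names: list[str]) -> dict[str, str]:
    """Look up installed versions via importlib.metadata, skipping absent packages."""
    found = {}
    for name in names:
        try:
            found[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            continue
    return found


# Hypothetical advisory data: package name -> first version that fixes a known issue.
MINIMUM_SAFE = {"examplelib": "2.4.1", "otherlib": "1.0.0"}

if __name__ == "__main__":
    print(find_outdated(installed_versions(list(MINIMUM_SAFE)), MINIMUM_SAFE))
```

Run in CI, a non-empty result could fail the build before an unpatched dependency reaches a deployed function.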

Key Security Considerations for Serverless Functions

Serverless functions, while offering incredible scalability and cost efficiency, introduce a unique set of security challenges. Unlike traditional applications where you manage the entire infrastructure, serverless shifts some responsibility to the cloud provider, but significant security burdens remain with the developer. Understanding and mitigating these vulnerabilities is crucial for building secure and reliable serverless applications.

Common Vulnerabilities in Serverless Functions

Serverless functions, due to their event-driven nature and reliance on shared infrastructure, are susceptible to several specific vulnerabilities. These often stem from improper configuration, insecure coding practices, and a lack of comprehensive security testing. Addressing these vulnerabilities proactively is paramount to preventing breaches and data loss.

- Injection Attacks: Similar to traditional applications, serverless functions are vulnerable to SQL injection, command injection, and cross-site scripting (XSS) attacks if input validation and sanitization are not rigorously implemented. An attacker could exploit vulnerabilities in your function’s code to execute malicious commands or access sensitive data. For example, if a function directly uses user-supplied data in a database query without proper escaping, an SQL injection attack could compromise the entire database.

- Insecure Configurations: Misconfigurations in the serverless platform itself, such as overly permissive IAM roles or inadequate network security settings, can expose functions to unauthorized access. For instance, granting a function excessive permissions beyond what it needs to operate can create a significant security risk. An attacker gaining access to this overly permissive role could compromise other parts of your cloud infrastructure.

- Unpatched Dependencies: Serverless functions often rely on external libraries and dependencies. If these dependencies contain known vulnerabilities and are not regularly updated, attackers could exploit these weaknesses to gain access to your function or data. Failing to maintain up-to-date dependencies significantly increases the attack surface of your application.

- Lack of Logging and Monitoring: Inadequate logging and monitoring can hinder the detection and response to security incidents. Without comprehensive logging, identifying the root cause of a security breach can be extremely difficult, delaying remediation efforts and potentially leading to further damage.
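The SQL injection risk described above is easiest to see side by side. This sketch uses Python’s built-in `sqlite3` with an in-memory database as a stand-in for whatever database a real function would call; the `users` table and its rows are hypothetical. The first query splices user input into the SQL text, while the second passes it as a bound parameter.

```python
import sqlite3


def setup() -> sqlite3.Connection:
    """Create a throwaway in-memory database with a hypothetical users table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, email TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "alice@example.com"), ("bob", "bob@example.com")],
    )
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list[tuple]:
    # VULNERABLE: user input becomes part of the SQL text itself.
    return conn.execute(f"SELECT email FROM users WHERE name = '{name}'").fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list[tuple]:
    # SAFE: the value is bound as a parameter, so it is never parsed as SQL.
    return conn.execute("SELECT email FROM users WHERE name = ?", (name,)).fetchall()


if __name__ == "__main__":
    conn = setup()
    attack = "x' OR '1'='1"
    print(find_user_unsafe(conn, attack))  # returns every row in the table
    print(find_user_safe(conn, attack))    # returns no rows
```

The same idea applies to other databases and drivers: keep the query text fixed and pass user input only through the driver’s parameter mechanism.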

Securing Serverless Function Code and Dependencies

Implementing robust security practices throughout the development lifecycle is crucial for mitigating the risks associated with serverless functions. This includes secure coding practices, dependency management, and rigorous testing.

- Input Validation and Sanitization: Always validate and sanitize all inputs to prevent injection attacks. Never trust user-provided data; always assume it could be malicious. Use parameterized queries for database interactions and escape user input before using it in any context that could lead to code execution.

- Principle of Least Privilege: Configure IAM roles with the minimum necessary permissions. Only grant access to the resources and actions your function absolutely requires. This limits the impact of a potential compromise.

- Regular Security Audits and Penetration Testing: Conduct regular security audits and penetration testing to identify and address vulnerabilities in your serverless functions and infrastructure. This proactive approach helps prevent security breaches before they occur.

- Dependency Management: Use a dependency management system to track and update your dependencies regularly. Utilize tools to scan for vulnerabilities in your dependencies and automatically update them when patches are released. This helps to maintain a secure application by addressing vulnerabilities in external libraries.

- Secure Deployment Practices: Employ secure deployment practices such as using infrastructure-as-code (IaC) to manage your serverless infrastructure and CI/CD pipelines for automated and secure deployments. This ensures consistency and repeatability in your deployment process, reducing the risk of human error.
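As a sketch of the input-validation advice above, the function below checks an incoming payload against an allowlist before anything else touches it. The field names (`username`, `quantity`), the username pattern, and the quantity limit are hypothetical; the point is the pattern of rejecting anything that does not match an explicit expectation, rather than trying to strip out known-bad characters.

```python
import re
from typing import Any

# Hypothetical rules: 3-32 characters drawn from letters, digits, underscore, or hyphen.
USERNAME_RE = re.compile(r"[A-Za-z0-9_-]{3,32}")
MAX_QUANTITY = 100
ALLOWED_FIELDS = {"username", "quantity"}


class ValidationError(ValueError):
    """Raised when a payload does not match the expected shape."""


def validate_order(payload: Any) -> dict[str, Any]:
    """Return a cleaned copy of the payload, or raise ValidationError."""
    if not isinstance(payload, dict):
        raise ValidationError("payload must be a JSON object")

    unexpected = set(payload) - ALLOWED_FIELDS
    if unexpected:
        raise ValidationError(f"unexpected fields: {sorted(unexpected)}")

    username = payload.get("username")
    # fullmatch requires the whole string to match, not just a prefix.
    if not isinstance(username, str) or not USERNAME_RE.fullmatch(username):
        raise ValidationError("invalid username")

    quantity = payload.get("quantity")
    # bool is a subclass of int in Python, so exclude it explicitly.
    if not isinstance(quantity, int) or isinstance(quantity, bool):
        raise ValidationError("quantity must be an integer")
    if not 1 <= quantity <= MAX_QUANTITY:
        raise ValidationError(f"quantity must be between 1 and {MAX_QUANTITY}")

    return {"username": username, "quantity": quantity}
```

Validation like this complements parameterized queries rather than replacing them: it narrows what reaches the database, while parameters ensure that whatever does reach it is treated as data.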

Security Checklist for Serverless Application Developers

This checklist provides a concise summary of essential security measures to incorporate when building serverless applications.

- Input validation and sanitization: Implement robust input validation and sanitization to prevent injection attacks.

- Least privilege principle: Configure IAM roles with the minimum necessary permissions.

- Secure coding practices: Follow secure coding guidelines to prevent common vulnerabilities.

- Regular security updates: Keep all dependencies and runtime environments up-to-date.

- Comprehensive logging and monitoring: Implement detailed logging and monitoring to detect and respond to security incidents.

- Security testing: Conduct regular security audits and penetration testing.

- Secure deployment practices: Utilize IaC and CI/CD for secure and repeatable deployments.

- Regular vulnerability scanning: Regularly scan your codebase and dependencies for vulnerabilities.

- Encryption at rest and in transit: Encrypt sensitive data both at rest and in transit.

- Access control: Implement strong access control mechanisms to restrict access to your serverless functions and data.

Securing Serverless Data and Storage

Serverless architectures, while offering incredible scalability and cost efficiency, introduce unique challenges to data security. The distributed nature of serverless functions and the reliance on managed services mean that traditional security paradigms need adaptation. Understanding the security implications of various data storage options and implementing robust security practices are crucial for protecting sensitive information in a serverless environment.

Data security in serverless deployments hinges on a layered approach, encompassing data at rest (while stored) and data in transit (while moving). Choosing the right storage service and implementing appropriate access controls are key components of this strategy.

Serverless Data Storage Options: A Comparison

Serverless applications benefit from a variety of data storage options, each with its own strengths and weaknesses concerning security. The choice depends heavily on the application’s specific needs and the sensitivity of the data being stored.

- Databases (e.g., Amazon DynamoDB, Azure Cosmos DB, Google Cloud Firestore): Managed databases offer scalability and built-in features like encryption and access control. However, they require careful configuration to ensure data is appropriately protected. Overly broad access policies or insufficient encryption can lead to data breaches.

- Object Storage (e.g., Amazon S3, Azure Blob Storage, Google Cloud Storage): Object storage is ideal for unstructured data like images, videos, and logs. Security is achieved through access policies (bucket policies and IAM permissions; legacy object-level access control lists, or ACLs, are generally best left disabled), encryption (at rest, and in transit using HTTPS), and lifecycle policies for data deletion. A misconfigured bucket policy, for example, can unintentionally expose sensitive data.

Security Implications of Different Storage Options

The security implications of choosing one storage option over another are significant. For instance, using a database with weak encryption settings exposes data to potential decryption attacks. Similarly, improperly configured object storage buckets can lead to data exposure and unauthorized access.

| Storage Type | Security Implications (if misconfigured) | Mitigation Strategies |
|---|---|---|
| Databases | Injection vulnerabilities (SQL or NoSQL), insufficient encryption, weak access controls | Input validation and parameterized queries, strong encryption (at rest and in transit), granular access control, regular security audits |
| Object Storage | Publicly accessible buckets, weak encryption, insufficient logging and monitoring | Strict bucket policies and public-access blocking, encryption at rest (server-side encryption, SSE) and in transit, detailed logging, regular security audits |

Securing Data at Rest and in Transit

Protecting data at rest and in transit requires a multi-faceted approach. Data at rest needs to be encrypted using strong algorithms, while data in transit requires secure protocols like HTTPS.

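Data at Rest: Encryption at rest is crucial. Use the server-side encryption your cloud provider offers, which lets the provider handle most of the key management work. Rotate encryption keys on a regular schedule and review who is allowed to use them. For databases, enable encryption at the database level. For object storage, consider server-side encryption with AWS KMS keys (SSE-KMS) or its equivalent on other clouds: it adds per-key access policies and an audit trail of key usage on top of the provider’s default encryption.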
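For Amazon S3, default encryption with SSE-KMS is expressed as a bucket encryption configuration. The sketch below only builds that configuration as a Python dict in the shape accepted by the S3 `PutBucketEncryption` API (for example via boto3’s `put_bucket_encryption`); the key ARN and bucket name are hypothetical placeholders, and nothing is sent to AWS.

```python
import json


def kms_default_encryption(kms_key_arn: str) -> dict:
    """Build an S3 default-encryption configuration that uses SSE-KMS with the given key."""
    return {
        "Rules": [
            {
                "ApplyServerSideEncryptionByDefault": {
                    "SSEAlgorithm": "aws:kms",
                    # Hypothetical customer managed key; rotation is configured on the key in KMS.
                    "KMSMasterKeyID": kms_key_arn,
                },
                # S3 Bucket Keys reduce the number of requests S3 makes to KMS.
                "BucketKeyEnabled": True,
            }
        ]
    }


if __name__ == "__main__":
    key_arn = "arn:aws:kms:us-east-1:111122223333:key/EXAMPLE-KEY-ID"  # placeholder
    config = kms_default_encryption(key_arn)
    print(json.dumps(config, indent=2))
    # With boto3 installed and credentials configured, this could be applied with:
    # boto3.client("s3").put_bucket_encryption(
    #     Bucket="example-bucket", ServerSideEncryptionConfiguration=config)
```

Keeping configurations like this in code (or in an IaC template) makes the encryption setting reviewable and repeatable across environments.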

Data in Transit: Always use HTTPS to encrypt data transmitted between your serverless functions and other services. Implement proper certificate management and ensure all communication channels are secured. Consider using VPNs for additional security layers when accessing data from outside the cloud environment. For example, a company might use a VPN to allow employees to securely access data stored in Amazon S3 from their home computers.
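One common way to enforce HTTPS for object storage on AWS is a bucket policy that denies any request made without TLS, using the `aws:SecureTransport` condition key. The sketch builds such a policy as a Python dict; the bucket name is a hypothetical placeholder, and the policy would still need to be attached (for example with boto3’s `put_bucket_policy`, which takes the policy as a JSON string).

```python
import json


def deny_insecure_transport_policy(bucket: str) -> dict:
    """Bucket policy that rejects any S3 request not sent over TLS."""
    bucket_arn = f"arn:aws:s3:::{bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                # Cover both the bucket itself and every object in it.
                "Resource": [bucket_arn, f"{bucket_arn}/*"],
                # aws:SecureTransport evaluates to "false" for plain-HTTP requests.
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(deny_insecure_transport_policy("example-bucket"), indent=2))
```

Because it is an explicit `Deny`, this statement overrides any `Allow` elsewhere, so even a principal with broad S3 permissions cannot read or write the bucket over plain HTTP.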

Identity and Access Management (IAM) in Serverless

Robust Identity and Access Management (IAM) is the cornerstone of serverless security. Without a well-defined and strictly enforced IAM strategy, your serverless architecture becomes vulnerable to unauthorized access, data breaches, and compromised functionality. Effective IAM ensures that only authorized users, services, and applications can access specific serverless resources, limiting the blast radius of any potential security incident.

Implementing granular access control in a serverless environment requires a deep understanding of IAM roles and policies. These mechanisms allow you to define precisely what actions specific entities are permitted to perform on your serverless resources. This fine-grained approach minimizes risk by limiting privileges to only what’s absolutely necessary, adhering to the principle of least privilege.

IAM Roles and Policies for Fine-Grained Access Control

An IAM role is an identity with attached permission policies; trusted users, services, or applications assume the role to obtain temporary credentials for the actions it allows. IAM policies are documents that specify which actions are allowed or denied on which resources. Combining roles and policies allows for highly granular control. For example, a Lambda function might be assigned a role that grants it permission only to read data from a specific S3 bucket and write logs to CloudWatch, preventing it from accessing other sensitive resources or performing unintended actions.

This approach significantly reduces the attack surface compared to granting overly permissive access.
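The Lambda example above (read one S3 bucket, write logs to CloudWatch) might translate into an identity policy like the following. It is a sketch: the bucket name, Region, account ID, and function name are hypothetical placeholders, and it assumes the function’s log group is created ahead of time (for example by IaC), so the role does not need `logs:CreateLogGroup`.

```python
import json


def lambda_least_privilege_policy(bucket: str, region: str, account_id: str, function_name: str) -> dict:
    """Identity policy: read objects from one bucket and write to one function's log group."""
    log_group_arn = f"arn:aws:logs:{region}:{account_id}:log-group:/aws/lambda/{function_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadOneBucket",
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": [f"arn:aws:s3:::{bucket}/*"],
            },
            {
                "Sid": "WriteOwnLogs",
                "Effect": "Allow",
                "Action": ["logs:CreateLogStream", "logs:PutLogEvents"],
                # ":*" covers the log streams inside this one log group.
                "Resource": [f"{log_group_arn}:*"],
            },
        ],
    }


if __name__ == "__main__":
    policy = lambda_least_privilege_policy("example-reports", "us-east-1", "111122223333", "report-reader")
    print(json.dumps(policy, indent=2))
```

If the function is compromised, this role lets an attacker read one bucket and write log lines, which is a far smaller blast radius than a role with wildcard permissions.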

Examples of IAM Misconfigurations and Their Consequences

A common misconfiguration is assigning overly permissive roles to Lambda functions or other serverless components. For instance, granting a Lambda function full administrator access to your AWS account would be catastrophic: a compromised function could then be used to take over your entire cloud infrastructure. Another example is failing to regularly rotate long-lived credentials such as IAM user access keys. If an attacker obtains such credentials, they can maintain unauthorized access for an extended period, which significantly increases the risk of data breaches and other security incidents. A less obvious, but equally dangerous, misconfiguration involves temporary credentials. They remain valid until they expire, even after the legitimate session that requested them has ended, so overly long session durations give an attacker who steals them a longer window of access; keep session durations short and be prepared to revoke active role sessions. Finally, improperly configured cross-account access, where one account grants access to resources in another, can create security holes if not carefully monitored and restricted.

Such misconfigurations can result in unauthorized access, data exfiltration, and significant financial losses. In short, even small IAM oversights can have major implications for the security of your serverless application.
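Some of these misconfigurations can be caught before deployment by linting policy documents. This sketch flags `Allow` statements that use a bare `*` action, a service-wide wildcard such as `s3:*`, or a `*` resource. It is a hypothetical, deliberately small check: it ignores conditions, `NotAction`, and resource-based policies, and managed tools such as IAM Access Analyzer policy validation go much further.

```python
from typing import Any


def _as_list(value: Any) -> list:
    """IAM allows a single value or a list for Statement, Action, and Resource."""
    return value if isinstance(value, list) else [value]


def find_overly_broad(policy: dict) -> list[str]:
    """Return human-readable findings for risky Allow statements."""
    findings = []
    for index, stmt in enumerate(_as_list(policy.get("Statement", []))):
        if stmt.get("Effect") != "Allow":
            continue
        label = stmt.get("Sid", f"statement {index}")
        for action in _as_list(stmt.get("Action", [])):
            if action == "*":
                findings.append(f"{label}: allows every action")
            elif action.endswith(":*"):
                findings.append(f"{label}: allows every action in {action.split(':')[0]}")
        if "*" in _as_list(stmt.get("Resource", [])):
            findings.append(f"{label}: applies to every resource")
    return findings
```

Wired into a CI pipeline, any non-empty result could block the deployment until a reviewer either narrows the policy or explicitly accepts the risk.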

Monitoring and Logging in Serverless Environments

Effective monitoring and logging are paramount for maintaining the security of your serverless applications. Unlike traditional architectures, serverless functions are ephemeral, meaning they don’t exist continuously. This makes traditional monitoring methods insufficient, demanding a proactive and comprehensive approach to ensure visibility into your application’s behavior and identify potential security breaches. Centralized logging and robust monitoring are essential for understanding application performance, detecting anomalies, and responding swiftly to security incidents.

Centralized logging aggregates logs from various sources within your serverless application into a single, unified view. This simplifies the process of identifying patterns, correlations, and anomalies that might indicate a security threat. A comprehensive logging strategy facilitates efficient troubleshooting, incident response, and security auditing.
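Centralized analysis is far easier when every function writes structured, machine-parseable log lines. The sketch below uses Python’s standard `logging` module to emit one JSON object per line; the field names (`function`, `request_id`, `event`) are hypothetical conventions. On AWS Lambda, whatever a function writes to standard output or standard error is collected into CloudWatch Logs.

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Structured fields passed as extra={"context": {...}} become top-level keys.
        context = getattr(record, "context", None)
        if isinstance(context, dict):
            entry.update(context)
        return json.dumps(entry, sort_keys=True)


def make_logger(name: str) -> logging.Logger:
    """Return a logger that writes JSON lines to stdout, configured only once."""
    logger = logging.getLogger(name)
    if not logger.handlers:  # warm invocations reuse module state; don't stack handlers
        handler = logging.StreamHandler(sys.stdout)
        handler.setFormatter(JsonFormatter())
        logger.addHandler(handler)
        logger.setLevel(logging.INFO)
        logger.propagate = False  # avoid duplicate lines via any root-logger handler
    return logger


if __name__ == "__main__":
    log = make_logger("orders")
    log.warning(
        "authorization failed",
        extra={"context": {"function": "create-order", "request_id": "req-123", "event": "auth_failure"}},
    )
```

Because every line is a JSON object with consistent keys, a log service or SIEM can filter and aggregate on fields such as `event` instead of parsing free text.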

Log Aggregation and Analysis

A well-designed logging strategy involves selecting the right tools for log collection and analysis. Popular choices include cloud-based logging services such as AWS CloudWatch, Azure Monitor, and Google Cloud Logging. These services provide features like log filtering, searching, and alerting, making it easier to identify security-relevant events. For example, you might configure alerts to notify you of unusual spikes in error rates, unauthorized access attempts, or data exfiltration attempts.

Effective analysis often involves using log management tools to correlate events across different services and functions, enabling the identification of sophisticated attacks that might not be immediately apparent from individual log entries. Consider employing security information and event management (SIEM) systems to further enhance log analysis capabilities and gain broader security insights. A SIEM system can aggregate logs from multiple sources, including your serverless environment, and provide advanced threat detection and response capabilities.
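As a small-scale illustration of the correlation and alerting described above, this sketch scans structured log entries and flags source IPs with repeated authentication failures inside a sliding time window. The entry fields (`ts`, `event`, `source_ip`) and the default thresholds are hypothetical; a SIEM or the provider’s log query service would do this over far larger volumes.

```python
from collections import defaultdict, deque


def flag_bruteforce(entries: list[dict], threshold: int = 5, window_seconds: int = 60) -> set[str]:
    """Return source IPs with at least `threshold` auth failures inside any time window.

    Entries are assumed to be sorted by their numeric `ts` field (seconds).
    """
    recent: dict[str, deque] = defaultdict(deque)
    flagged = set()
    for entry in entries:
        if entry.get("event") != "auth_failure":
            continue
        ip, ts = entry["source_ip"], entry["ts"]
        window = recent[ip]
        window.append(ts)
        # Drop failures that have fallen out of the sliding window.
        while window and ts - window[0] >= window_seconds:
            window.popleft()
        if len(window) >= threshold:
            flagged.add(ip)
    return flagged
```

The flagged set could feed an alert, or an automated response such as adding the address to a deny list at the API gateway.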

Security Incident Detection and Response

Detecting and responding to security incidents in a serverless environment requires a proactive approach. This includes implementing robust security measures from the outset, establishing clear incident response plans, and regularly testing your security posture. Real-time monitoring, coupled with automated alerting, is crucial for immediate detection of suspicious activities. For example, detecting unusual access patterns to your data storage, unexpected increases in function invocations, or unusual API calls can be early indicators of a compromise.
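Detecting an unexpected increase in function invocations can start with something as simple as comparing the latest count against a recent baseline. The sketch below applies a mean-plus-k-standard-deviations rule to per-minute invocation counts; the `k` value, the minimum baseline length, and the deviation floor are hypothetical choices, and managed options such as CloudWatch anomaly detection may be a better fit in production.

```python
import statistics


def is_invocation_spike(history: list[int], latest: int, k: float = 3.0, min_points: int = 10) -> bool:
    """Flag `latest` if it exceeds the baseline mean by more than k standard deviations."""
    if len(history) < min_points:
        return False  # not enough data for a meaningful baseline
    mean = statistics.fmean(history)
    stdev = statistics.pstdev(history)
    # A floor on the deviation avoids alerting on tiny changes over a perfectly flat baseline.
    threshold = mean + k * max(stdev, 1.0)
    return latest > threshold
```

A rule this simple will miss slow-building attacks and may fire on legitimate traffic surges, so it works best as one signal among several rather than as the sole trigger for remediation.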

Once an incident is detected, a well-defined incident response plan should be followed. This plan should outline roles, responsibilities, communication protocols, and escalation procedures. Regular security audits and penetration testing are essential to identify vulnerabilities and improve your overall security posture. By simulating attacks, you can gain valuable insights into your system’s weaknesses and improve your ability to detect and respond to real-world threats.

Consider incorporating automated remediation strategies to mitigate the impact of security incidents as quickly as possible. For instance, automatically disabling compromised functions or restricting access to sensitive data can minimize the damage caused by an attack.
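On AWS Lambda, one way to disable a suspected-compromised function without deleting it (and losing evidence) is to set its reserved concurrency to zero, which throttles all new invocations. The sketch takes the Lambda client as a parameter so it can be exercised without AWS; with boto3 you would pass `boto3.client("lambda")`. The alert handler that would call it, and the function name, are hypothetical.

```python
from typing import Any, Protocol


class LambdaClient(Protocol):
    """The single boto3 Lambda client method this sketch relies on."""

    def put_function_concurrency(self, **kwargs: Any) -> dict: ...


def quarantine_function(client: LambdaClient, function_name: str) -> dict:
    """Throttle every new invocation of `function_name` by reserving zero concurrency.

    The function's code, configuration, and logs are left intact for investigation.
    """
    return client.put_function_concurrency(
        FunctionName=function_name,
        ReservedConcurrentExecutions=0,
    )
```

Once the investigation is complete, boto3’s `delete_function_concurrency(FunctionName=...)` removes the reservation and restores normal scaling.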

Serverless Security Best Practices and Future Trends

Serverless computing offers incredible scalability and cost efficiency, but its unique architecture introduces new security challenges. Understanding and proactively addressing these vulnerabilities is crucial for harnessing the full potential of serverless while mitigating risks. This section explores emerging threats, the role of automation and AI in bolstering security, and key future trends in serverless security.

Emerging security threats in serverless environments are evolving rapidly. Traditional perimeter-based security models are less effective, requiring a shift towards a more granular, function-level approach.

Emerging Serverless Security Threats

The decentralized nature of serverless introduces complexities. Supply chain attacks targeting dependencies used within functions are a significant concern. Compromised third-party libraries or frameworks can provide attackers with unauthorized access. Furthermore, misconfigurations in serverless platforms, such as overly permissive IAM roles or inadequate resource limits, can lead to vulnerabilities that are easily exploited. Another threat is the potential for data breaches through insecure function code, particularly those handling sensitive data without proper encryption or validation.

Finally, the ephemeral nature of serverless functions can make forensic analysis challenging, hindering incident response efforts. For example, a compromised function might disappear after execution, leaving little trace of malicious activity.
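One practical defense against the tampered dependencies mentioned above is to pin every artifact to a known cryptographic hash and refuse anything that does not match; pip’s `--require-hashes` mode does this for Python packages. The sketch shows the core check with `hashlib`; the artifact name and pinned digest are hypothetical, and real pins would normally come from a lock file generated when the dependency was first vetted.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of the given bytes."""
    return hashlib.sha256(data).hexdigest()


def verify_artifact(name: str, data: bytes, pinned: dict[str, str]) -> bool:
    """Return True only if `name` is pinned and its SHA-256 digest matches the pin."""
    expected = pinned.get(name)
    if expected is None:
        return False  # unpinned artifacts are rejected rather than trusted by default
    return sha256_hex(data) == expected.lower()
```

Rejecting unpinned artifacts by default is the key design choice: a new or swapped dependency has to be reviewed and pinned before it can reach a deployment package.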

Automation and AI in Enhancing Serverless Security

Automation and AI are instrumental in enhancing serverless security. Automated security testing tools can continuously scan code for vulnerabilities, identify misconfigurations, and enforce security best practices. AI-powered threat detection systems can analyze vast amounts of runtime data to identify anomalies and potential attacks in real time. For example, an AI system might detect unusual spikes in function invocations or unexpected data access patterns, indicating a potential compromise.

Machine learning models can also be trained to predict potential vulnerabilities based on historical data and code patterns. This proactive approach allows for early identification and mitigation of threats before they can cause significant damage. Automated remediation processes, triggered by AI-driven alerts, can further enhance security posture by automatically patching vulnerabilities or disabling compromised functions.

Future Trends and Challenges in Serverless Security

The serverless security landscape is constantly evolving. Several trends and challenges are likely to shape its future, including the increasing complexity of serverless deployments, the need for more sophisticated threat detection and response mechanisms, and the growing importance of automated security solutions:

- Increased Focus on Supply Chain Security: As reliance on third-party libraries and frameworks grows, securing the entire serverless application ecosystem becomes paramount. This requires robust vetting processes and continuous monitoring of dependencies.

- Enhanced Runtime Security: Advanced runtime protection techniques, such as runtime application self-protection (RASP), are likely to become crucial for detecting and mitigating attacks during function execution. RASP works from inside the application, typically through a library or runtime extension packaged with the function, rather than at a network perimeter.

- Serverless-Specific Security Standards and Regulations: Industry standards and regulations specific to serverless security would provide a much-needed framework for secure deployments and help establish a baseline level of security across the industry.

- Rise of Serverless Security Platforms: Specialized platforms offering comprehensive serverless security solutions, including vulnerability scanning, threat detection, and automated remediation, are likely to gain popularity.

- Integration with DevSecOps: Seamless integration of security practices into the DevOps pipeline will be essential for ensuring continuous security throughout the serverless application lifecycle. This involves automating security testing and incorporating security considerations at every stage of development.

Outcome Summary

So, there you have it – a whirlwind tour through the world of serverless security. While the technology continues to evolve, and new threats emerge, the core principles remain consistent: understanding the shared responsibility model, proactively addressing vulnerabilities, and implementing robust security measures are paramount. By embracing best practices, leveraging automation, and staying informed about emerging trends, we can confidently harness the power of serverless computing while safeguarding our applications and data.

The future of cloud computing is serverless, and with a proactive security approach, that future is bright.

FAQ Resource

What are the biggest misconceptions about serverless security?

A common misconception is that serverless is inherently more secure than traditional infrastructure. While it offers some advantages, it simply shifts the responsibility; it doesn’t eliminate the need for robust security practices.

How do I choose the right data storage option for my serverless application?

The best option depends on your specific needs, considering factors like scalability, cost, and data sensitivity. Carefully evaluate managed databases (e.g., DynamoDB, Cosmos DB) and object storage (e.g., S3, Azure Blob Storage) before making a decision.

What are some examples of IAM misconfigurations?

Examples include overly permissive IAM roles that ignore the principle of least privilege, failure to rotate long-lived access keys regularly, overly long session durations for temporary credentials, and loosely restricted cross-account access.

How can I effectively monitor and log serverless functions?

Utilize cloud provider logging services (e.g., CloudWatch, Azure Monitor) to collect logs from your functions. Implement centralized log aggregation and analysis tools for easier monitoring and incident response.