Elon Musk’s OpenAI: AI-Fueled Fake News

Elon Musk’s OpenAI proves that AI can spin fake news, a chilling reality that’s rapidly reshaping our information landscape. This isn’t just about clever algorithms; it’s about the potential for widespread manipulation and the erosion of trust in everything we read online. We’ll dive into OpenAI’s capabilities, the ethical quagmire it creates, and what we can do to fight back against this tide of AI-generated disinformation.

From Musk’s initial involvement in OpenAI to its current technological prowess, we’ll explore how this powerful tool can be weaponized to create incredibly convincing, yet entirely fabricated, news stories. We’ll examine the technical aspects, the societal impact, and the crucial role of media literacy in navigating this new era of information warfare. Get ready to question everything you see online.

Elon Musk’s Involvement with OpenAI

Elon Musk’s relationship with OpenAI is a complex and fascinating chapter in the history of artificial intelligence. From its co-founding to his eventual departure, his influence on the organization’s trajectory is undeniable, leaving a lasting impact on its development and the broader AI landscape. Understanding this relationship requires examining his initial involvement, his reasons for leaving, and the subsequent divergence in approaches to AI safety.

Elon Musk was one of the founding members of OpenAI, a non-profit artificial intelligence research organization established in 2015. He contributed significantly to its initial funding and provided crucial guidance in its early stages.

His vision, at the time, aligned with OpenAI’s mission: to ensure that artificial general intelligence (AGI) benefits all of humanity. However, this collaborative relationship eventually fractured.

Musk’s Departure from OpenAI and its Implications

In 2018, Elon Musk resigned from OpenAI’s board, citing a potential conflict of interest with Tesla, whose self-driving work depends heavily on AI. His departure, while ostensibly due to this conflict, also coincided with growing disagreements over the organization’s strategic direction and the pace of its research. Musk’s concerns about the potential dangers of unchecked AI development were increasingly at odds with OpenAI’s evolving approach, which in 2019 led it to adopt a capped-profit structure and accept significant funding from Microsoft.

This shift toward a more commercially focused model arguably altered OpenAI’s priorities, a point of contention for Musk, who had envisioned a more cautiously paced, research-driven approach. His departure cost the organization significant financial and intellectual capital and shifted its overall governance and direction. It also left Musk with far less direct influence over OpenAI’s research priorities and safety protocols.

Comparison of Musk’s and OpenAI’s Views on AI Safety

Musk has consistently expressed strong concerns about the potential existential risks posed by advanced AI. He has publicly advocated for the proactive regulation of AI development and has warned against the unchecked advancement of powerful AI systems. His views often emphasize the importance of prioritizing safety and carefully considering the long-term consequences of AI breakthroughs. OpenAI, while acknowledging the potential risks of AI, has adopted a more pragmatic approach, advancing AI capabilities while developing safety mechanisms in parallel.

While OpenAI has invested in AI safety research, its commercial partnerships and focus on cutting-edge capabilities suggest a less cautious approach than Musk’s more alarmist stance. This divergence reflects differing priorities: Musk’s emphasis on mitigating existential risk versus OpenAI’s pursuit of both progress and responsible development. It is further exemplified by Musk’s founding of xAI in 2023, a company he has presented as a more truth-seeking alternative and which competes directly with OpenAI’s ambitions.

OpenAI’s Technological Capabilities

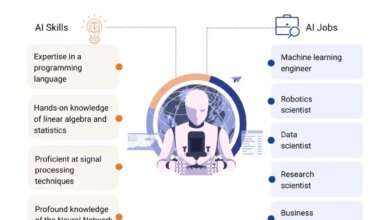

OpenAI’s prowess in generating realistic text stems from its advanced language models, trained on massive datasets of text and code. These models use deep learning, specifically the transformer architecture, to understand and generate human-like text with remarkable fluency and coherence. The potential for misuse, particularly in the creation of convincing fake news, is a significant concern.

OpenAI’s key technologies are large language models (LLMs), most prominently its generative pre-trained transformer (GPT) series.

These models are trained on enormous datasets, allowing them to learn complex patterns in language and generate text that mimics human writing styles. The scale of these models is a crucial factor in their ability to produce highly realistic and believable outputs, even on complex or nuanced topics. This ability, while groundbreaking for many applications, presents a clear pathway for malicious actors to create and disseminate misinformation.
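
The word-by-word mechanism behind that fluency can be sketched briefly. A GPT-style model assigns a score (a logit) to every candidate next token, converts the scores into probabilities, and samples one; repeating this produces whole articles. The Python sketch below shows only that final sampling step, with a temperature parameter controlling randomness. The candidate words and scores are invented for illustration and do not come from any OpenAI model.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Convert raw model scores (logits) into probabilities.

    Lower temperature sharpens the distribution toward the top-scoring token;
    higher temperature flattens it, producing more varied text.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next_token(vocab, logits, temperature=1.0, rng=None):
    """Pick one next token according to the temperature-scaled probabilities."""
    rng = rng or random.Random()
    probs = softmax(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]

# Hypothetical candidates and scores after "Officials confirmed the ..."
# (invented for illustration, not taken from a real model)
vocab = ["report", "rumor", "outbreak", "banana"]
logits = [3.1, 2.4, 2.2, -1.0]

rng = random.Random(0)
print(softmax(logits, temperature=0.5))
print([sample_next_token(vocab, logits, 0.8, rng) for _ in range(5)])
```

Nothing in this step checks facts: the model picks whatever continuation is probable in context, which is why fluent output and false output can look identical.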

Specific OpenAI Models Used for Fake News Generation

Several OpenAI models possess the capability to generate convincing fake news. GPT-3 and its successors, such as GPT-4, are prime examples. These models can produce coherent and grammatically correct text on a wide range of topics, adapting their style to mimic different writing voices and perspectives. The ease with which these models can generate realistic text makes them a powerful tool for creating convincing, yet false, news stories.

The sophistication of these models allows for the creation of fake news that is difficult to distinguish from legitimate reporting, requiring advanced detection methods.

Challenges in Detecting AI-Generated Fake News

Detecting AI-generated fake news presents significant technical challenges. The sophistication of current LLMs means that traditional methods of detecting fake news, such as looking for grammatical errors or inconsistencies in writing style, are often ineffective. AI-generated text can be highly polished and grammatically correct, making it difficult to identify its artificial origin. Furthermore, the ability of these models to mimic specific writing styles makes it challenging to pinpoint the source of the text.

Researchers are actively developing new techniques, such as analyzing subtle patterns in word choice, sentence structure, and even the underlying statistical properties of the text, to improve the detection rate of AI-generated fake news. This is an ongoing arms race, with advancements in AI generation techniques constantly pushing the boundaries of detection capabilities. One example of a challenge is the difficulty in detecting subtle biases or inconsistencies that might be present in the training data used to create the AI models, which could inadvertently influence the generated text.
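
As a rough illustration of the statistical signals researchers examine, the sketch below computes two simple stylometric features: burstiness (variation in sentence length, based on the assumption, popularized by some detectors, that human writers mix short and long sentences more than models do) and type-token ratio (vocabulary diversity). Both are illustrative heuristics chosen for this example; neither is reliable enough on its own to label a text as AI-generated, and modern models can be prompted to vary their style.

```python
import re
import statistics

# Illustrative heuristics only; not a reliable AI-text detector on their own.

def sentence_lengths(text):
    """Split on ., ! or ? and return the word count of each non-empty sentence."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]

def burstiness(text):
    """Coefficient of variation of sentence length (std dev / mean).

    Values near 0 mean every sentence is about the same length.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)

def type_token_ratio(text):
    """Share of distinct words among all words (vocabulary diversity)."""
    words = re.findall(r"[a-z']+", text.lower())
    return len(set(words)) / len(words) if words else 0.0

uniform = "The vote was delayed today. The board gave no reason. Critics asked for answers."
varied = "Delayed. Again. Nobody on the board would explain why the vote, promised for months, slipped."

for label, text in [("uniform", uniform), ("varied", varied)]:
    print(label, round(burstiness(text), 2), round(type_token_ratio(text), 2))
```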

The Spread of AI-Generated Fake News

The ability of AI, particularly models like those developed by OpenAI, to generate realistic and convincing text presents a significant threat to the integrity of information online. The ease with which AI can create convincing fake news articles, social media posts, and even deepfakes poses a considerable challenge to discerning truth from falsehood, potentially impacting elections, public health initiatives, and social cohesion.

The potential for misuse is alarming.

Imagine a scenario where a sophisticated AI is used to create and disseminate false information about a political candidate just before an election, or to spread misinformation about a public health crisis, causing panic and undermining trust in authorities. The scale and speed at which this could happen are unprecedented, making it difficult to contain and counteract.

Potential Misuse Scenarios of AI in Fake News Creation and Dissemination

AI-generated fake news can be strategically deployed across various platforms to achieve malicious goals. For example, a coordinated campaign could utilize numerous AI-generated accounts on social media to spread false narratives, creating the illusion of widespread public support for a particular viewpoint. This could be amplified through the use of bots and automated posting schedules, creating a powerful echo chamber that reinforces the fake news and drowns out legitimate counter-arguments.

Furthermore, AI could be used to personalize fake news, tailoring its content to specific demographics and individual users to maximize its impact and believability. This level of targeted disinformation would be exceptionally difficult to detect and combat.
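
Coordinated campaigns like the one described above leave a footprint that platforms can look for even when each individual post looks plausible. A minimal sketch, assuming posts arrive as (account, timestamp, text) records: normalize each message, group identical ones, and flag any message that several distinct accounts post within a short window. The thresholds are arbitrary assumptions, and because this version matches text exactly, personalized or paraphrased variants would slip past it; real systems rely on fuzzier similarity measures and account-level signals.

```python
import re
from collections import defaultdict
from dataclasses import dataclass

@dataclass
class Post:
    account: str
    timestamp: int  # seconds since epoch
    text: str

def normalize(text):
    """Lowercase, drop URLs and punctuation, collapse whitespace."""
    text = re.sub(r"https?://\S+", "", text.lower())
    text = re.sub(r"[^a-z0-9\s]", "", text)
    return " ".join(text.split())

def flag_coordinated(posts, min_accounts=3, window_seconds=600):
    """Map each flagged message to the accounts in its first qualifying window.

    A message is flagged when at least min_accounts distinct accounts post it
    within window_seconds. Both thresholds are arbitrary assumptions.
    """
    groups = defaultdict(list)
    for post in posts:
        groups[normalize(post.text)].append(post)

    flagged = {}
    for message, group in groups.items():
        group.sort(key=lambda p: p.timestamp)
        start = 0
        for end in range(len(group)):
            # Slide the window's left edge until it spans at most window_seconds.
            while group[end].timestamp - group[start].timestamp > window_seconds:
                start += 1
            accounts = {p.account for p in group[start:end + 1]}
            if len(accounts) >= min_accounts:
                flagged[message] = sorted(accounts)
                break
    return flagged

# Hypothetical posts for illustration
posts = [
    Post("acct_1", 0, "BREAKING: Candidate X arrested! https://example.com/a"),
    Post("acct_2", 120, "Breaking - candidate X arrested"),
    Post("acct_3", 300, "breaking: Candidate X ARRESTED!!"),
    Post("acct_4", 5000, "Lovely weather at the rally today."),
]
print(flag_coordinated(posts))
```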

Impact of AI-Generated Fake News on Public Opinion and Social Discourse

The impact of AI-generated fake news on public opinion and social discourse is potentially devastating. The spread of misinformation can erode trust in institutions, polarize society, and even incite violence. For example, AI-generated fake news about a specific ethnic or religious group could fuel prejudice and discrimination. Similarly, fabricated stories about impending economic collapse or natural disasters could cause widespread panic and social unrest.

The constant bombardment of carefully crafted falsehoods can make it increasingly difficult for individuals to distinguish fact from fiction, leading to a climate of distrust and uncertainty. This undermines rational decision-making, both on an individual and societal level. The constant questioning of the validity of information sources erodes the very foundation of informed public discourse.

Methods for Identifying and Mitigating the Spread of AI-Generated Fake News

Combating AI-generated fake news requires a multi-pronged approach. This includes developing more sophisticated detection methods, improving media literacy education, and implementing stricter regulations on social media platforms. Advanced AI detection tools are being developed that can analyze the style and patterns of text to identify potential signs of AI authorship. However, the technology is in a constant arms race with the ever-evolving capabilities of AI-generated content.

Education plays a crucial role in equipping individuals with the critical thinking skills needed to evaluate information sources and identify misinformation. Finally, social media companies need to take greater responsibility for the content on their platforms and implement more robust mechanisms for identifying and removing AI-generated fake news.

Comparison of Traditional and AI-Generated Fake News

| Source | Content Style | Detection Difficulty | Impact |
|---|---|---|---|
| Human-generated | May contain grammatical errors, inconsistencies, emotional biases | Relatively easy with fact-checking and source verification | Can still be impactful, but spread is generally slower |
| AI-generated | Grammatically correct, stylistically consistent, can mimic specific writing styles | More difficult; requires advanced AI detection tools | Potentially far-reaching and rapid dissemination; high impact |

Ethical Considerations and Responsibility

The ability of AI to generate realistic, yet entirely fabricated, news presents a profound ethical dilemma. OpenAI’s technology, while undeniably powerful and potentially beneficial, carries a significant risk of being exploited for malicious purposes, undermining trust in information sources and potentially influencing real-world events. The question isn’t just about the technology itself, but about the responsibility of its creators and the broader societal implications of its misuse.

The ethical implications extend beyond simple deception.

AI-generated fake news can manipulate public opinion, incite violence, damage reputations, and even interfere with democratic processes. The speed and scale at which this misinformation can spread are unparalleled, making it exceptionally difficult to contain and counteract. This necessitates a serious examination of OpenAI’s role in mitigating these risks.

OpenAI’s Responsibility in Preventing Misuse

OpenAI has a clear responsibility to actively work towards preventing the misuse of its technology for the creation of fake news. This responsibility extends beyond simply releasing a disclaimer; it requires a proactive and multi-faceted approach. This includes developing and implementing robust detection mechanisms, collaborating with fact-checking organizations and media outlets, and engaging in ongoing research to understand and counter the evolving tactics of those who seek to exploit AI for malicious purposes.

Furthermore, OpenAI should invest in educational initiatives to raise public awareness about AI-generated content and how to identify it. A reactive approach is insufficient; a proactive, preventative strategy is crucial.

A Framework for Ethical Guidelines and Safeguards

Establishing a robust framework for ethical guidelines and safeguards for AI text generation is paramount. This framework should encompass several key components. First, it should prioritize transparency: users should be clearly informed when interacting with AI-generated content. Second, it should incorporate robust verification mechanisms, such as advanced detection algorithms capable of identifying AI-generated text with high accuracy. Third, it should promote accountability: mechanisms should be in place to trace the origin of AI-generated content and hold those responsible for its misuse accountable. Finally, the framework should foster collaboration: OpenAI should actively work with researchers, policymakers, and other stakeholders to develop and implement effective solutions.

The creation of a global standard for responsible AI development and deployment would be a significant step towards mitigating the risks associated with AI-generated fake news. The success of such a framework hinges on its adaptability to the ever-evolving landscape of AI technology and its malicious applications.
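
The accountability component, tracing content back to its origin, is often discussed in terms of signed provenance metadata; the C2PA (Coalition for Content Provenance and Authenticity) standard is one real-world effort in this direction. The sketch below is a much simpler, hypothetical scheme built only on Python’s standard library: the generator attaches a manifest containing a SHA-256 hash of the text and an HMAC signature, and a verifier holding the same secret key can confirm that the text is unaltered and came from that generator. A production system would more likely use public-key signatures so verifiers never need the secret; the key, field names, and generator ID here are placeholders.

```python
import hashlib
import hmac
import json

SECRET_KEY = b"demo-key-do-not-use"  # hypothetical shared secret, for illustration only

def _payload(generator_id, digest):
    """Canonical JSON bytes that the signature covers."""
    return json.dumps({"generator": generator_id, "sha256": digest}, sort_keys=True).encode("utf-8")

def sign_content(text, generator_id, key=SECRET_KEY):
    """Build a provenance manifest binding the text's hash to its generator."""
    digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
    signature = hmac.new(key, _payload(generator_id, digest), hashlib.sha256).hexdigest()
    return {"generator": generator_id, "sha256": digest, "signature": signature}

def verify_content(text, manifest, key=SECRET_KEY):
    """Return True only if the text is unmodified and the manifest is authentic."""
    digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
    if digest != manifest["sha256"]:
        return False  # the text was edited after signing
    expected = hmac.new(key, _payload(manifest["generator"], digest), hashlib.sha256).hexdigest()
    # compare_digest avoids leaking information through timing differences
    return hmac.compare_digest(expected, manifest["signature"])

article = "City council approves new budget."
manifest = sign_content(article, generator_id="example-model-v1")  # hypothetical ID
print(verify_content(article, manifest))                       # True
print(verify_content(article + " Mayor resigns.", manifest))   # False
```

Provenance only proves where signed content came from: anyone can strip the manifest and repost the bare text, so this kind of labeling complements detection rather than replacing it.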

The Role of Media and Fact-Checking

The rise of AI-generated fake news presents a significant challenge to traditional media and fact-checking organizations. The speed and sophistication with which AI can create convincing misinformation demand a re-evaluation of existing strategies and the adoption of new technologies to combat this threat. The responsibility falls not only on media outlets but also on individuals to critically engage with the information they consume.

The ability of AI to generate realistic-looking fake news calls for a multi-pronged approach to detection and mitigation.

Media outlets must invest in advanced detection tools and training for their journalists, while individuals need to develop a stronger sense of media literacy and critical thinking skills. The collaborative effort between media organizations, fact-checkers, and the public is crucial in curbing the spread of AI-generated misinformation.

Improving Media Fact-Checking Processes

Media outlets can significantly enhance their fact-checking processes by implementing several key strategies. This includes investing in AI-powered detection tools that can analyze text, images, and videos for inconsistencies and anomalies indicative of AI-generated content. Furthermore, training journalists in identifying subtle cues of AI-generated content, such as unnatural phrasing or inconsistencies in narrative, is crucial. Cross-referencing information with multiple reliable sources and employing a more rigorous verification process before publication will also improve accuracy.

Finally, establishing clear and transparent fact-checking protocols, publicly available for scrutiny, builds trust and accountability.

Strategies for Critical Evaluation of Information

Individuals can significantly reduce their vulnerability to AI-generated fake news by adopting a critical approach to information consumption. This includes verifying the source’s credibility and reputation; checking for bias or overt political agendas; examining the supporting evidence presented and cross-referencing it with multiple sources; being wary of emotionally charged language designed to manipulate; and paying attention to the overall context and narrative presented, looking for inconsistencies or logical fallacies.

Fact-checking websites and organizations can provide valuable assistance in verifying information. Developing a healthy skepticism towards information found online is also crucial.

Visual Representation of AI-Generated Fake News Lifecycle

Imagine a flowchart. The process begins with Creation, represented by a computer screen displaying AI software generating text and images. This feeds into Dissemination, depicted as a branching network, illustrating the rapid spread of the fake news across social media platforms, news websites, and messaging apps. Next is Interaction, shown as people engaging with the content – liking, sharing, and commenting.

Finally, Detection, illustrated by a magnifying glass over the network, represents the efforts of fact-checkers and media outlets to identify and debunk the fake news. The flowchart highlights the speed at which AI-generated fake news can spread and the challenge in effectively countering its dissemination. The cycle illustrates how a single piece of AI-generated fake news can rapidly propagate and impact public perception before it can be effectively addressed.
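
The lifecycle can also be written as a toy model to show why the timing of detection matters. The sketch below assumes, purely for illustration, that every newly exposed person passes the story to a fixed number of new people per step until fact-checkers flag it, after which spread stops. The parameters are invented, not measured; the point is only that total reach grows geometrically with each step of delay.

```python
def total_reach(initial_posts, shares_per_person, detection_step):
    """Count everyone exposed before the story is flagged.

    Step 0 is creation; each later step multiplies the newest audience by
    shares_per_person (dissemination and interaction). Spread stops once
    detection_step is reached. Toy assumptions, not real-world data.
    """
    newly_exposed = initial_posts
    total = initial_posts
    for _ in range(detection_step):
        newly_exposed *= shares_per_person
        total += newly_exposed
    return total

for step in (1, 3, 5):
    print(f"flagged after step {step}: {total_reach(10, 4, step):,} exposures")
# flagged after step 1: 50 exposures
# flagged after step 3: 850 exposures
# flagged after step 5: 13,650 exposures
```

The loop is just a geometric series, so the same figure follows from initial_posts × (k^(d+1) − 1) / (k − 1) with k shares per person and detection at step d; in this toy model, flagging a story two steps earlier cuts its reach roughly sixteenfold.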

Future Implications and Mitigation Strategies

The unchecked proliferation of AI-generated fake news presents a chilling future where trust in information erodes completely, impacting everything from elections and public health to international relations and economic stability. Failure to develop and implement robust mitigation strategies will lead to a world increasingly susceptible to manipulation and societal fracturing. We need to proactively address this challenge before it becomes insurmountable.

The potential for harm from sophisticated AI-generated fake news is immense.

Imagine hyper-realistic deepfakes seamlessly integrated into news broadcasts, social media feeds, or even personal communications, spreading misinformation at an unprecedented scale. This could trigger widespread panic, incite violence, or manipulate public opinion on a global scale, undermining democratic processes and social cohesion. The 2022 US midterm elections provided a glimpse into this future, with AI-generated content already being used to spread disinformation, albeit in a less sophisticated form.

Future iterations will be far more convincing and harder to detect.

AI Detection Technology Advancements

Advancements in AI detection technology offer a crucial line of defense against the spread of fake news. These technologies leverage machine learning algorithms to analyze various aspects of media content, identifying subtle inconsistencies or anomalies indicative of AI manipulation. For instance, algorithms can analyze the fine details of images and videos for inconsistencies in lighting, shadows, or facial expressions that betray digital manipulation.

Similarly, text analysis algorithms can identify patterns in language, style, and sentiment that are characteristic of AI-generated text, flagging potentially fabricated content. These technologies are constantly evolving, with ongoing research focused on improving their accuracy and speed, making them increasingly effective in identifying and neutralizing AI-generated fake news. The development of explainable AI (XAI) is also crucial, allowing us to understand how these detection systems arrive at their conclusions, increasing trust and transparency.
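
Explainability is easiest to see with a linear model, where each feature’s contribution to the final score can be read off directly. The sketch below is hypothetical: the feature names, weights, and bias are invented for illustration, not learned from data or taken from any real detector. It shows the kind of output an explainable system can give a fact-checker: a risk score plus the reasons behind it, ranked by influence.

```python
import math

# Hypothetical weights; a real detector would learn these from labeled examples.
WEIGHTS = {
    "low_burstiness": 1.8,       # unusually uniform sentence lengths
    "low_vocab_diversity": 0.9,  # repetitive word choice
    "no_named_sources": 1.2,     # claims without attributable sources
}
BIAS = -2.0

def explain_score(features):
    """Return a logistic risk score and each feature's contribution to it."""
    contributions = {name: WEIGHTS[name] * value for name, value in features.items()}
    logit = BIAS + sum(contributions.values())
    risk = 1 / (1 + math.exp(-logit))
    # Sort so the biggest drivers of the decision come first.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return risk, ranked

risk, reasons = explain_score(
    {"low_burstiness": 1.0, "low_vocab_diversity": 0.5, "no_named_sources": 1.0}
)
print(f"fabrication risk = {risk:.2f}")
for name, contribution in reasons:
    print(f"  {name}: {contribution:+.2f}")
```

Deep detectors do not decompose this neatly, which is why XAI research relies on attribution techniques to approximate the same kind of per-feature explanation.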

Proactive Measures to Prevent AI Misuse

The development and deployment of effective AI detection technologies are only part of the solution. A multi-pronged approach involving proactive measures is essential to prevent the misuse of AI for generating fake news. This requires a collaborative effort from technology developers, policymakers, educators, and the public.

- Strengthening AI development ethics: Integrating ethical considerations into the design and development of AI systems from the outset is paramount. This includes establishing clear guidelines and standards for responsible AI development, focusing on transparency, accountability, and preventing malicious applications.

- Developing robust watermarking techniques: Implementing invisible watermarks in AI-generated content can help trace its origin and identify potential manipulation. These watermarks should be resistant to tampering and easily detectable by verification systems.

- Improving media literacy education: Educating the public on how to identify and critically evaluate information is crucial. This involves equipping individuals with the skills to discern genuine information from fabricated content, fostering a more discerning and informed citizenry.

- Enhancing international cooperation: The global nature of AI-generated fake news necessitates international collaboration to establish shared standards, best practices, and enforcement mechanisms. This includes fostering information sharing and coordinated efforts to combat the spread of disinformation across borders.

- Promoting responsible AI deployment policies: Governments and regulatory bodies need to develop and implement policies that govern the development and deployment of AI technologies, emphasizing transparency, accountability, and the prevention of misuse. This could include regulations on the use of AI for generating synthetic media and the development of robust verification systems.
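
The watermarking idea in the list above has a concrete, published form for text: the "green list" scheme of Kirchenbauer et al. (2023), in which generation is nudged toward a pseudo-random subset of the vocabulary chosen from the previous token, and a detector counts how often that subset appears. The sketch below is a heavily simplified, word-level version for illustration only: the hash-based green list, the toy generator standing in for a language model, the tiny vocabulary, and the GAMMA value are all assumptions, and real schemes operate on model tokens and logits.

```python
import hashlib
import math
import random

GAMMA = 0.5  # assumed share of the vocabulary that is "green" at each step

def is_green(prev_word, word, gamma=GAMMA):
    """Pseudo-randomly place `word` on the green list, seeded by the previous word."""
    digest = hashlib.sha256(f"{prev_word}|{word}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") / 2**64 < gamma

def watermark_z_score(words, gamma=GAMMA):
    """How far the green-word count sits above what unwatermarked text would give.

    Without a watermark each scored word is green with probability gamma, so
    among T words the green count has mean gamma*T and variance T*gamma*(1-gamma).
    """
    pairs = list(zip(words, words[1:]))
    if not pairs:
        return 0.0
    t = len(pairs)
    green = sum(is_green(prev, word, gamma) for prev, word in pairs)
    return (green - gamma * t) / math.sqrt(t * gamma * (1 - gamma))

def toy_generate(candidates, length, watermark, seed=0):
    """Hypothetical stand-in for a language model: choose words at random,
    restricted to green candidates when watermarking is on."""
    rng = random.Random(seed)
    words = ["the"]
    for _ in range(length):
        pool = candidates
        if watermark:
            pool = [w for w in candidates if is_green(words[-1], w)] or candidates
        words.append(rng.choice(pool))
    return words

vocab = ["city", "council", "budget", "vote", "report", "officials", "said", "new",
         "plan", "today", "delay", "mayor", "public", "record", "tax", "school"]
print("watermarked:", round(watermark_z_score(toy_generate(vocab, 200, True)), 2))
print("plain:      ", round(watermark_z_score(toy_generate(vocab, 200, False)), 2))
```

Because the green list depends only on a hashing rule, anyone who knows that rule can run the detector without access to the model. Conversely, paraphrasing reshuffles the word pairs and weakens the signal, which is one reason watermark robustness remains an open problem.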

Ultimate Conclusion

The ability of AI to generate realistic fake news, as demonstrated by OpenAI’s capabilities, presents a significant challenge to our society. While the technology itself is neutral, its potential for misuse is undeniable. The future hinges on our collective ability to develop robust detection methods, promote media literacy, and establish ethical guidelines for AI development and deployment. It’s a fight for truth in a world increasingly blurred by artificial intelligence, and it’s a fight we must all join.

FAQ Compilation

What specific OpenAI models are capable of generating fake news?

Several OpenAI models, particularly those focused on text generation like GPT-3 and its successors, possess the capacity to create realistic-sounding fake news articles. The exact models used would depend on the sophistication of the fake news campaign.

Can OpenAI detect its own AI-generated fake news?

OpenAI is actively researching methods to detect AI-generated text, but perfect detection is an ongoing challenge. The technology is constantly evolving, making it a cat-and-mouse game between creators and detectors.

What role does OpenAI itself play in preventing the misuse of its technology?

OpenAI has implemented safety measures and guidelines, but complete prevention is difficult. They are actively researching detection methods and working to mitigate the risks associated with their technology’s misuse.