Generative AI Broadening Cybersecurity Readiness

By bringing cybersecurity readiness to the broader market, generative AI is changing how we think about digital protection. Advanced security measures are no longer a luxury reserved for large corporations with extensive IT budgets; they are becoming accessible to smaller organizations and individuals as well. The shift is driven by generative AI’s ability to automate complex tasks, analyze large datasets for threats, and create personalized training programs, which makes cybersecurity more efficient and affordable.

We’re entering a new era where even small businesses and individuals can significantly bolster their defenses against increasingly sophisticated cyberattacks.

This potential stems from generative AI’s capacity to learn from massive amounts of data and identify patterns and anomalies that might escape human notice. That capacity enables more proactive threat detection, faster incident response, and security protocols that adapt over time. Generative AI can also personalize cybersecurity training, tailoring it to individual needs and skill levels for a more effective learning experience and a better-prepared workforce.

Generative AI’s Role in Enhancing Cybersecurity

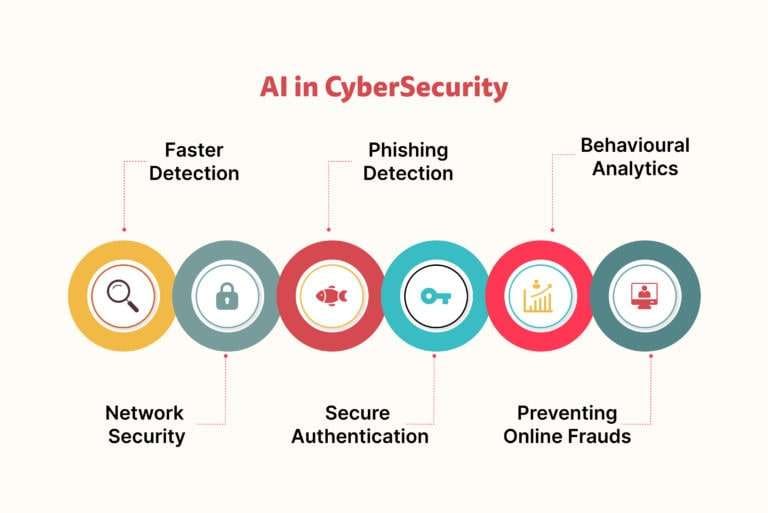

The rise of sophisticated cyber threats calls for a shift in how we build cybersecurity defenses. Generative AI, with its ability to learn from vast datasets and generate novel outputs, offers a powerful new toolset for strengthening digital security. Its capacity to analyze complex patterns, predict threats, and automate responses promises to improve our ability to protect against increasingly advanced attacks.

Improved Threat Detection Capabilities

Generative AI models can analyze massive volumes of security logs, network traffic data, and other sources to identify subtle anomalies indicative of malicious activity. Traditional signature-based detection systems struggle to identify zero-day exploits or novel attack vectors. Generative AI, however, can learn the patterns of normal system behavior and identify deviations that might signal a threat, even if it’s unlike anything seen before.

For example, a generative AI model trained on network traffic data could identify unusual communication patterns between internal systems and external IP addresses, flagging potential data exfiltration attempts. The model’s ability to learn and adapt allows it to continuously improve its threat detection capabilities over time.
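A full generative model is beyond a short example, but the core idea of learning “normal” behavior and flagging deviations can be sketched with a simple per-host statistical baseline. The sketch below assumes you already have historical outbound byte counts per internal host (the host addresses and numbers are hypothetical) and flags hosts whose current outbound volume is far above their own history. It uses a z-score, not a generative model.

```python
from statistics import mean, stdev


def flag_exfiltration(baseline, current, threshold=3.0):
    """Flag hosts whose current outbound byte count is more than `threshold`
    standard deviations above that host's own historical mean.

    baseline: dict mapping host -> list of past outbound byte counts
    current:  dict mapping host -> outbound byte count for the current window
    """
    flagged = []
    for host, history in baseline.items():
        # Need the current value and at least two samples to estimate spread.
        if host not in current or len(history) < 2:
            continue
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            # Perfectly flat history: treat any increase as anomalous.
            if current[host] > mu:
                flagged.append(host)
        elif (current[host] - mu) / sigma > threshold:
            flagged.append(host)
    return flagged


# Hypothetical outbound volumes (e.g., kilobytes per hour) for two internal hosts.
baseline = {
    "10.0.0.5": [120, 130, 125, 118, 122],
    "10.0.0.7": [300, 310, 295, 305, 290],
}
current = {"10.0.0.5": 124, "10.0.0.7": 5000}

print(flag_exfiltration(baseline, current))  # ['10.0.0.7']
```

In practice the threshold and features (destinations, ports, time of day) would be tuned on your own traffic, and a learned model would replace the single z-score.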

Creation of More Robust Security Protocols

Generative AI can assist in the design and implementation of more resilient security protocols. By analyzing vulnerabilities in existing systems and simulating potential attacks, generative AI can identify weaknesses and suggest improvements. This can lead to the creation of more robust authentication mechanisms, stronger encryption protocols, and more effective intrusion detection systems. For instance, a generative AI could be used to design a new firewall rule set based on an analysis of past attacks, optimizing the rules to minimize false positives while maximizing protection against known and unknown threats.

The result would be a more adaptive and resilient security posture.
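Whatever proposes a candidate rule set, whether a generative model or an analyst, the claim that it minimizes false positives while blocking known attacks can be checked by replaying it against labeled historical traffic. The sketch below assumes a deliberately simplified, hypothetical rule format (protocol plus optional port, first match wins, default allow); it does not model any particular firewall’s syntax.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    action: str           # "block" or "allow"
    protocol: str         # "tcp", "udp", or "*" for any protocol
    port: Optional[int]   # None matches any port


@dataclass(frozen=True)
class Event:
    protocol: str
    port: int
    malicious: bool       # label from past incident analysis


def decide(rules, event, default="allow"):
    """First-match semantics: the first matching rule decides; otherwise use the default."""
    for rule in rules:
        if rule.protocol in ("*", event.protocol) and rule.port in (None, event.port):
            return rule.action
    return default


def score(rules, events):
    """Return (attacks blocked, benign connections blocked) over labeled history."""
    blocked = [e for e in events if decide(rules, e) == "block"]
    true_pos = sum(1 for e in blocked if e.malicious)
    false_pos = sum(1 for e in blocked if not e.malicious)
    return true_pos, false_pos


# Hypothetical labeled history.
history = [
    Event("tcp", 23, True),    # telnet brute force
    Event("tcp", 445, True),   # SMB exploit attempt
    Event("tcp", 443, False),  # normal HTTPS
    Event("tcp", 445, False),  # legitimate file share
    Event("udp", 53, False),   # DNS
]
broad = [Rule("block", "tcp", None)]
narrow = [Rule("block", "tcp", 23), Rule("block", "tcp", 445)]

print(score(broad, history))   # (2, 2)
print(score(narrow, history))  # (2, 1)
```

Here the narrower rule set catches the same two historical attacks while blocking one fewer legitimate connection. The remaining false positive (benign traffic on port 445) shows why port-only rules are coarse; a real evaluation would use richer fields such as source, destination, and direction.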

Assistance in Vulnerability Assessments and Penetration Testing

Generative AI can automate and enhance vulnerability assessments and penetration testing. It can generate a large number of test inputs to probe for weaknesses in software and systems, identifying vulnerabilities that might be missed by manual testing. Furthermore, it can create realistic attack scenarios to simulate real-world threats, allowing security teams to better understand and mitigate potential risks. For example, a generative AI could automatically generate thousands of SQL injection queries to test the robustness of a web application’s database security, identifying potential vulnerabilities far more efficiently than manual testing.
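The payload-generation idea can be illustrated with a small, template-based generator standing in for a generative model. The sketch below assumes a throwaway in-memory SQLite database with a hypothetical `users` table. It combines a few quoting styles, tautologies, and comment markers into test inputs, then checks which of them bypass a login query built by string concatenation and which bypass the parameterized version. Only run tests like this against systems you own or are authorized to test.

```python
import itertools
import sqlite3


def make_db():
    """Create a disposable in-memory database with one known user."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, password TEXT)")
    conn.execute("INSERT INTO users VALUES ('alice', 's3cret')")
    return conn


def login_vulnerable(conn, name, password):
    # BAD: user input is pasted directly into the SQL text.
    query = (
        "SELECT COUNT(*) FROM users "
        f"WHERE name = '{name}' AND password = '{password}'"
    )
    try:
        return conn.execute(query).fetchone()[0] > 0
    except sqlite3.Error:
        return False  # malformed SQL counts as a failed login


def login_safe(conn, name, password):
    # GOOD: placeholders keep user input out of the SQL text.
    query = "SELECT COUNT(*) FROM users WHERE name = ? AND password = ?"
    return conn.execute(query, (name, password)).fetchone()[0] > 0


def generate_payloads():
    """Combine quote styles, tautologies, and trailing comment markers."""
    quotes = ["'", '"']
    tautologies = [" OR '1'='1", " OR 1=1"]
    endings = ["", " --"]
    for q, t, e in itertools.product(quotes, tautologies, endings):
        yield f"x{q}{t}{e}"


def find_bypasses(login, payloads):
    """Return payloads that log in as a user who does not exist."""
    conn = make_db()
    return [p for p in payloads if login(conn, "nobody", p)]


payloads = list(generate_payloads())
print("vulnerable:", find_bypasses(login_vulnerable, payloads))
print("parameterized:", find_bypasses(login_safe, payloads))
```

Only some of the generated variants succeed against the vulnerable query, because small syntactic differences (such as a trailing comment marker) decide whether the injected SQL parses; generating many variations is what surfaces the ones that work.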


Enhanced Incident Response Procedures

Generative AI can significantly improve incident response. By analyzing the data related to a security incident, a generative AI model can help identify the root cause, the extent of the damage, and the best course of action, so that security teams can respond more quickly and limit the impact of a breach. For instance, after a ransomware attack, a generative AI system could analyze the encrypted files and ransom notes, help identify the ransomware family, and recommend remediation steps, such as restoring from backups or checking whether a known decryptor exists, based on past incidents and successful remediation techniques.

This speed and efficiency are crucial in minimizing the damage caused by cyberattacks.
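One concrete signal an analysis tool can use when triaging a suspected ransomware incident is byte entropy: encrypted data looks close to random, so its Shannon entropy approaches the maximum of 8 bits per byte. The sketch below is a common heuristic, not a way to identify the ransomware family; compressed formats such as ZIP or JPEG also score high, so the threshold (an assumption here) produces false positives on its own.

```python
import math
import os
from collections import Counter


def shannon_entropy(data: bytes) -> float:
    """Return the Shannon entropy of `data` in bits per byte (0.0 to 8.0)."""
    if not data:
        return 0.0
    n = len(data)
    return -sum((c / n) * math.log2(c / n) for c in Counter(data).values())


def looks_encrypted(data: bytes, threshold: float = 7.5) -> bool:
    """Heuristic: near-maximal entropy suggests encrypted (or compressed) content."""
    return shannon_entropy(data) >= threshold


plain = b"Quarterly report: revenue up, costs down. " * 100
random_like = os.urandom(4096)  # stands in for an encrypted file's contents

print(round(shannon_entropy(plain), 2), looks_encrypted(plain))
print(round(shannon_entropy(random_like), 2), looks_encrypted(random_like))
```

In practice such a check would run over file samples across a share, looking for a sudden jump in the proportion of high-entropy files and combining it with other signals such as renamed extensions or ransom notes.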

Broadening Market Access through Generative AI Cybersecurity Solutions

For years, sophisticated cybersecurity solutions have been largely inaccessible to small businesses and individuals. The cost, complexity, and specialized expertise required have created a significant barrier to entry, leaving many vulnerable to cyber threats. Generative AI is changing this landscape by democratizing access to advanced cybersecurity protections.

Generative AI lowers the barrier to entry by automating many traditionally labor-intensive tasks, including threat detection, vulnerability assessment, and incident response. By using machine learning to analyze large amounts of data for patterns and anomalies indicative of malicious activity, it reduces the reliance on highly skilled security personnel. This automation makes advanced protection more affordable and manageable for smaller organizations and individuals who lack the resources to hire dedicated security teams.

Cost-Effectiveness of Generative AI-Based Solutions

Traditional cybersecurity solutions often involve significant upfront investment in hardware, software, and personnel. Ongoing maintenance and updates also add to the overall cost. Generative AI-based solutions, while still requiring an initial investment, offer a more cost-effective approach in the long run. The automation capabilities reduce the need for extensive human intervention, minimizing labor costs. Furthermore, the ability of generative AI to proactively identify and mitigate threats reduces the financial impact of potential breaches, which can be substantial in terms of data recovery, legal fees, and reputational damage.

For example, a small business might spend thousands of dollars a year on traditional antivirus software and managed security services. A generative AI-powered solution could offer comparable or better protection at lower cost, and it may pay for itself if it prevents even one costly breach.
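Whether a solution “pays for itself” can be estimated rather than assumed, using the standard risk-management formulas for annualized loss expectancy (ALE = single loss expectancy × annual rate of occurrence) and return on security investment (ROSI = (ALE × mitigation ratio − solution cost) / solution cost). Every figure in the sketch below is hypothetical and chosen only to show the arithmetic; it says nothing about any real product’s effectiveness.

```python
def annualized_loss_expectancy(single_loss: float, annual_rate: float) -> float:
    """ALE: expected loss per incident times expected incidents per year."""
    return single_loss * annual_rate


def rosi(ale: float, mitigation_ratio: float, annual_cost: float) -> float:
    """Return on security investment: avoided loss net of cost, relative to cost."""
    if annual_cost <= 0:
        raise ValueError("annual_cost must be positive")
    return (ale * mitigation_ratio - annual_cost) / annual_cost


# Hypothetical small-business figures.
ale = annualized_loss_expectancy(single_loss=50_000, annual_rate=0.25)
print(ale)                                                 # 12500.0
print(rosi(ale, mitigation_ratio=0.5, annual_cost=2_500))  # 1.5
```

A ROSI above zero means the expected avoided losses exceed the cost. With a lower mitigation ratio (say, 0.15) the same tool would not pay for itself, which is why the answer depends on the specific solution and threat profile.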

Marketing Strategy for Generative AI Cybersecurity Products

A successful marketing strategy for generative AI cybersecurity products must focus on simplicity and ease of use. The messaging should emphasize the accessibility and affordability of the technology and its ability to provide enterprise-grade security to a broader market. A multi-channel approach is essential, combining online marketing (social media, targeted advertising) with offline strategies such as webinars, industry events, and partnerships with technology providers.

In particular, marketing materials that clearly articulate the return on investment (ROI) in terms of cost savings and risk mitigation will resonate with potential customers. For example, a campaign could feature case studies of small businesses that used the solution to prevent costly data breaches. Educational content such as blog posts, white papers, and short videos that explain the technology in simple terms would further build understanding and trust.

Generative AI and the Evolution of Cybersecurity Training

The cybersecurity landscape is constantly evolving, demanding professionals with adaptable skills and up-to-date knowledge. Traditional training methods often struggle to keep pace with this rapid change, leading to skills gaps and vulnerabilities. Generative AI offers a powerful solution, enabling the creation of dynamic, personalized, and highly effective cybersecurity training programs that better prepare individuals for real-world threats.

Generative AI’s ability to create realistic and diverse scenarios, coupled with its capacity for personalized learning paths, significantly improves the effectiveness of cybersecurity training. This technology moves beyond static modules to create immersive and engaging learning experiences, fostering a deeper understanding of cybersecurity principles and practices.

Methods for Creating Realistic Cybersecurity Training Simulations using Generative AI

Generative AI can produce realistic phishing emails, simulated (non-functional) malware samples, and mock network intrusion attempts. These simulated threats can be used within interactive training modules, allowing trainees to practice identifying and responding to attacks in a safe environment. For example, a generative AI model could create hundreds of variations of phishing emails, each with subtle differences in wording and formatting, forcing trainees to hone their critical thinking skills.

Similarly, it could generate simulated network traffic patterns reflecting various attack vectors, allowing trainees to practice analyzing network logs and identifying malicious activity. The key is to leverage AI’s ability to generate diverse and unpredictable scenarios, ensuring trainees are prepared for a wide range of threats.
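A generative model would write the variations itself; the underlying idea of producing many labeled variants from a few building blocks can be sketched with simple template combination. In the sketch below, the senders, messages, and red flags are hypothetical, links use the reserved `.test` domain so they can never resolve, and each variant records which red flag it contains so a training platform could grade trainees’ answers.

```python
import itertools
import random

SENDERS = ["IT Helpdesk", "Payroll Team", "Shipping Notice"]
HOOKS = [
    "Your password expires today.",
    "A payment to your account is on hold.",
    "Your package could not be delivered.",
]
CALLS = ["Verify your account", "Review the attached invoice", "Confirm your details"]
# Each variant carries exactly one red flag that trainees should spot.
RED_FLAGS = {
    "lookalike_domain": "Sign in at https://login.examp1e-corp.test to continue.",
    "urgent_deadline": "Action required within 2 hours or access will be suspended.",
    "generic_greeting": "Dear valued user,",
}


def generate_variants(n, seed=0):
    """Yield up to n distinct, labeled training emails in a reproducible order."""
    rng = random.Random(seed)
    combos = list(itertools.product(SENDERS, HOOKS, CALLS, RED_FLAGS))
    rng.shuffle(combos)
    for sender, hook, call, flag in combos[:n]:
        yield {
            "from": sender,
            "body": f"{RED_FLAGS[flag]} {hook} {call}.",
            "red_flag": flag,  # answer key for grading
        }


for email in generate_variants(3):
    print(email["from"], "|", email["body"], "|", email["red_flag"])
```

Seeding the shuffle keeps an exercise reproducible across trainees, while changing the seed produces a fresh mix for the next session.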

Comparison of Generative AI-Based and Traditional Cybersecurity Training

The following table compares generative AI-based training with traditional methods in terms of cost, effectiveness, and scalability.

| Method | Cost | Effectiveness | Scalability |
|---|---|---|---|
| Traditional Training (e.g., classroom lectures, pre-recorded videos) | High (instructor fees, venue costs, materials) | Moderate (limited personalization, static content) | Low (limited number of trainees per session) |
| Generative AI-Based Training | Moderate (initial AI model development, ongoing maintenance) | High (personalized learning paths, dynamic scenarios, immediate feedback) | High (can train large numbers of individuals simultaneously) |

Personalizing Cybersecurity Training Based on Individual Skill Levels

Generative AI can analyze a trainee’s performance on simulations and assessments to tailor the training content to their specific needs. For example, a trainee who struggles with identifying phishing emails might receive additional training modules focused on email security best practices, while a trainee who excels in this area can move on to more advanced topics like malware analysis.

This personalized approach ensures that each trainee receives the appropriate level of challenge, maximizing their learning and retention. This adaptive learning system, powered by AI, continuously adjusts the difficulty and content based on individual progress, ensuring optimal learning outcomes.
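The adaptive loop described above can be sketched as a small rule-based selector: track recent scores per topic, pick the weakest topic below a pass mark, and choose a harder module as mastery grows. The curriculum, pass mark, and scoring window below are hypothetical; a production system might use a learned model to choose content, or have a generative model create fresh scenarios at the chosen level.

```python
from dataclasses import dataclass, field

# Hypothetical curriculum: modules per topic, ordered from basic to advanced.
MODULES = {
    "phishing": ["Phishing basics", "Spotting lookalike domains", "Reporting suspicious email"],
    "malware": ["Malware types", "Safe attachment handling", "Intro to malware analysis"],
}


@dataclass
class Learner:
    scores: dict = field(default_factory=dict)  # topic -> list of scores in [0, 1]

    def record(self, topic, score):
        self.scores.setdefault(topic, []).append(score)

    def mastery(self, topic, window=3):
        """Average of the most recent `window` scores (0.0 if no attempts yet)."""
        recent = self.scores.get(topic, [])[-window:]
        return sum(recent) / len(recent) if recent else 0.0


def next_module(learner, pass_mark=0.8):
    """Return (topic, module) for the weakest unmastered topic, or None if all are mastered."""
    weak = [t for t in MODULES if learner.mastery(t) < pass_mark]
    if not weak:
        return None  # ready for advanced material outside this curriculum
    topic = min(weak, key=learner.mastery)
    levels = MODULES[topic]
    # Higher mastery selects a later (harder) module within the topic.
    index = min(int(learner.mastery(topic) * len(levels)), len(levels) - 1)
    return topic, levels[index]


trainee = Learner()
trainee.record("phishing", 0.4)
trainee.record("malware", 0.9)
print(next_module(trainee))  # ('phishing', 'Spotting lookalike domains')
```

Averaging over a short window lets the selector respond to recent improvement instead of being anchored to a trainee’s first attempts.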

Potential Scenarios for Generative AI-Driven Cybersecurity Training Modules

Generative AI can create a vast array of training scenarios, significantly enhancing the learning experience. The possibilities are extensive, ranging from basic to advanced modules.

- Phishing Simulation: Trainees receive and analyze various phishing emails generated by the AI, learning to identify malicious links and attachments.

- Malware Analysis: Trainees examine simulated malware samples, learning to identify malicious code and understand its behavior.

- Network Intrusion Detection: Trainees analyze simulated network traffic to identify and respond to intrusion attempts.

- Incident Response Simulation: Trainees practice responding to simulated security incidents, from initial detection to containment and recovery.

- Vulnerability Assessment: Trainees identify and assess vulnerabilities in simulated systems, learning to apply security patches and mitigations.

- Security Awareness Training: Trainees participate in interactive scenarios that test their knowledge of social engineering tactics and security best practices.

Addressing Ethical and Societal Implications

The rapid advancement of generative AI in cybersecurity presents a double-edged sword. While it offers unprecedented opportunities for threat detection and response, it also introduces complex ethical and societal challenges that demand careful consideration and proactive mitigation. Failing to address these implications could undermine the benefits generative AI promises and even worsen existing cybersecurity vulnerabilities.

Generative AI models, trained on vast datasets, can inherit and amplify biases present in that data. This can lead to discriminatory outcomes, such as unfairly targeting certain user groups or misidentifying threats based on skewed patterns learned from the training data. For instance, a model trained primarily on data from one geographical region might perform poorly when applied to cybersecurity threats originating elsewhere, potentially leading to delayed responses or missed attacks.


Potential Biases in Generative AI Models and Mitigation Strategies

Addressing biases requires a multi-pronged approach. Firstly, careful curation of training datasets is crucial. This involves actively seeking diverse and representative data to minimize the impact of skewed information. Secondly, algorithmic fairness techniques can be integrated into the model development process to detect and correct biases. This might involve techniques like adversarial debiasing or fairness-aware learning.

Finally, ongoing monitoring and evaluation of the model’s performance across different demographics and threat landscapes are essential to identify and rectify any emerging biases. Regular audits and independent assessments can further enhance transparency and accountability.
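The monitoring step above can be made concrete by computing a detection model’s recall (the share of real attacks it flags) separately for each segment, such as the region an attack originates from, and alerting when the gap between segments grows too large. The group labels, records, and 0.1 gap threshold in this sketch are hypothetical.

```python
from collections import defaultdict


def recall_by_group(records):
    """records: iterable of (group, is_attack, flagged) tuples.
    Returns {group: fraction of that group's attacks the model flagged}."""
    attacks = defaultdict(int)
    hits = defaultdict(int)
    for group, is_attack, flagged in records:
        if is_attack:
            attacks[group] += 1
            if flagged:
                hits[group] += 1
    return {g: hits[g] / attacks[g] for g in attacks}


def disparity_alert(rates, max_gap=0.1):
    """True if the best- and worst-served groups differ by more than max_gap."""
    return bool(rates) and max(rates.values()) - min(rates.values()) > max_gap


# Hypothetical evaluation results: (origin region, was it an attack?, did the model flag it?)
records = [
    ("EU", True, True), ("EU", True, True), ("EU", True, True), ("EU", True, True),
    ("APAC", True, True), ("APAC", True, False), ("APAC", True, True), ("APAC", True, False),
    ("EU", False, False), ("APAC", False, True),
]
rates = recall_by_group(records)
print(rates)                   # {'EU': 1.0, 'APAC': 0.5}
print(disparity_alert(rates))  # True
```

Running a check like this on every retraining cycle, and on fresh labeled incidents, turns “ongoing monitoring” into a concrete regression test for bias.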

Implications of Generative AI for Privacy and Data Security

The use of generative AI in cybersecurity often involves processing sensitive data, raising significant privacy concerns. For example, models trained on network traffic data might inadvertently learn and reproduce patterns that reveal sensitive user information. Similarly, the generation of realistic phishing emails or malware samples requires access to real-world data, increasing the risk of data breaches and misuse.

Robust data anonymization techniques, differential privacy mechanisms, and secure data storage practices are crucial to mitigate these risks. Furthermore, clear and transparent data governance policies are essential to ensure compliance with relevant privacy regulations, such as GDPR and CCPA.
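Differential privacy can be illustrated with its simplest building block, the Laplace mechanism: before releasing an aggregate such as “number of internal hosts that contacted a flagged domain,” add noise drawn from a Laplace distribution with scale 1/ε, since adding or removing one host changes a count by at most 1. The count and ε below are hypothetical, and a vetted differential-privacy library is preferable in production, because naive floating-point noise sampling has known weaknesses.

```python
import random


def laplace_noise(scale, rng):
    # The difference of two independent exponential draws with mean `scale`
    # is Laplace-distributed with location 0 and the same scale.
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def dp_count(true_count, epsilon, rng=None):
    """Release a count with epsilon-differential privacy via the Laplace mechanism.
    A counting query has sensitivity 1, so the noise scale is 1 / epsilon."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    return true_count + laplace_noise(1 / epsilon, rng)


# Hypothetical: 42 hosts contacted a flagged domain.
# Smaller epsilon means more noise and stronger privacy.
print(dp_count(42, epsilon=0.5))
```

Each released value is noisy, but the noise averages out over many queries, so the privacy budget (the total ε spent) has to be tracked across all releases.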


Potential for Misuse of Generative AI in Cyberattacks and Countermeasures

The same capabilities that make generative AI powerful for defensive purposes can also be exploited by malicious actors. Attackers could use generative models to create highly convincing phishing emails, craft sophisticated malware variants, or generate realistic deepfakes for social engineering attacks. This necessitates a proactive approach to counter these threats. This includes developing robust detection mechanisms capable of identifying AI-generated attacks, improving security awareness training to educate users about these new attack vectors, and strengthening existing security infrastructure to withstand these advanced attacks.

Research into AI-based countermeasures, such as AI-powered threat intelligence platforms and deception technologies, is crucial in this ongoing arms race.
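Deception technologies take a different angle from content analysis: instead of trying to recognize whether a phishing email or login attempt was AI-generated, they plant decoys that no legitimate user ever touches, so any use of one is a high-confidence alert. The sketch below checks authentication events against a set of decoy account names; the account names, log format, and addresses (from the 203.0.113.0/24 documentation range) are hypothetical.

```python
from typing import NamedTuple


class AuthEvent(NamedTuple):
    timestamp: str
    username: str
    source_ip: str


# Hypothetical decoy accounts, planted (for example) in an old config file.
# No legitimate user or service ever logs in with them.
DECOY_ACCOUNTS = frozenset({"svc_backup_old", "admin_legacy"})


def honeytoken_alerts(events, decoys=DECOY_ACCOUNTS):
    """Return one alert message per authentication attempt that uses a decoy account."""
    return [
        f"{e.timestamp} decoy account '{e.username}' used from {e.source_ip}"
        for e in events
        if e.username in decoys
    ]


events = [
    AuthEvent("2024-05-01T09:12:03Z", "alice", "203.0.113.10"),
    AuthEvent("2024-05-01T09:15:41Z", "admin_legacy", "203.0.113.77"),
]
for alert in honeytoken_alerts(events):
    print(alert)
```

Because the alert depends only on the decoy being touched, it works the same whether the attacker’s lure was written by a person or a model.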

Legal and Regulatory Challenges Associated with Generative AI in Cybersecurity

The rapid evolution of generative AI presents significant legal and regulatory challenges. Questions around liability in case of AI-related security failures, data ownership and intellectual property rights related to AI-generated content, and the ethical implications of using AI for surveillance and profiling require careful consideration and clear legal frameworks. International collaboration and the development of harmonized regulations are crucial to navigate these complex issues and ensure responsible innovation in this field.

Existing laws, such as those concerning data protection and cybersecurity, may need to be updated to adequately address the unique challenges posed by generative AI. The establishment of clear guidelines and best practices for the development and deployment of generative AI in cybersecurity is paramount to foster trust and responsible innovation.

Future Trends and Predictions

The integration of generative AI into cybersecurity is likely to grow rapidly, changing how we approach threat detection, response, and prevention. Although the technology is still nascent, its potential to transform the field is significant, with consequences for both the technological landscape and the workforce. The following sections explore key predictions for the near future.

Generative AI Adoption Rate Across Market Segments

The adoption rate of generative AI in cybersecurity will vary significantly across market segments. Large enterprises with substantial IT budgets and a higher tolerance for risk will likely be early adopters, leveraging AI to automate complex tasks and enhance their existing security infrastructure. Smaller businesses and organizations with limited resources may lag behind, facing challenges in terms of cost, expertise, and integration.

Government agencies and critical infrastructure sectors are expected to show moderate to high adoption rates, driven by the need for enhanced security and compliance. The healthcare sector, due to its sensitive data and regulatory requirements, will also experience a gradual but significant uptake. We can expect a tiered adoption, with larger, more resource-rich organizations leading the charge, followed by smaller organizations and eventually widespread adoption across all segments.

This adoption curve mirrors similar technological shifts, such as cloud computing, where early adopters paved the way for broader market penetration.

Generative AI’s Impact on the Cybersecurity Job Market

Generative AI’s impact on the cybersecurity job market will be transformative, but not necessarily detrimental. While some routine tasks, like vulnerability scanning and log analysis, will be automated, this will free up human experts to focus on more complex and strategic aspects of cybersecurity. The demand for cybersecurity professionals with AI expertise will skyrocket, creating new roles focused on AI model development, deployment, and management.

Existing roles will also evolve, requiring professionals to possess a strong understanding of AI’s capabilities and limitations. For example, security analysts will need to learn to interpret and validate AI-generated insights, while ethical hackers will need to understand how to exploit and defend against AI-powered attacks. This shift mirrors the historical evolution of technology, where automation has led to the creation of new and higher-skilled jobs.

Generative AI’s Reshaping of Cybersecurity Threats and Defenses

Advancements in generative AI will reshape the landscape of both cybersecurity threats and defenses. On the threat side, attackers will leverage generative AI to create more sophisticated and personalized phishing campaigns, develop novel malware variants, and automate large-scale attacks. The ability to generate realistic and convincing content will make social engineering attacks far more effective. On the defensive side, generative AI will empower security teams to proactively identify and mitigate threats by analyzing vast amounts of data to detect anomalies and predict potential attacks.

AI-powered systems may also be able to generate security patches and update defenses in near real time, significantly reducing response times. The arms race between attackers and defenders will continue, with both sides using generative AI. This dynamic mirrors the historical evolution of cyber warfare, where attackers constantly seek new ways to circumvent defenses, prompting security measures to evolve in turn.

Projected Evolution of Generative AI in Cybersecurity (Five-Year Forecast)

Imagine a graph charting the evolution of generative AI in cybersecurity over the next five years. The X-axis represents time (years), and the Y-axis represents the level of AI integration and impact. Year 1 shows a relatively low level of integration, primarily focused on automating basic tasks. Year 2 sees a significant increase, with AI-powered threat detection and response systems becoming more prevalent.

Year 3 marks the widespread adoption of AI-driven security information and event management (SIEM) systems, capable of analyzing massive datasets in real-time. Year 4 witnesses the emergence of sophisticated AI-powered threat prediction models, capable of anticipating future attacks based on historical data and emerging trends. By Year 5, the graph shows a plateau, indicating widespread integration of generative AI across all aspects of cybersecurity, from threat detection and response to vulnerability management and incident investigation.

This evolution reflects a gradual but significant shift towards a more proactive and intelligent approach to cybersecurity, mirroring the adoption curve of other transformative technologies.

Final Wrap-Up

The democratization of cybersecurity through generative AI is not just a technological advancement; it’s a societal imperative. As cyber threats become more prevalent and complex, ensuring everyone has access to effective protection is crucial. While challenges remain, particularly regarding ethical considerations and potential misuse, the benefits of generative AI in enhancing cybersecurity readiness far outweigh the risks. The future of cybersecurity is undeniably intertwined with the continued development and responsible implementation of generative AI, promising a safer and more secure digital world for all.

FAQ Insights: Generative AI Bringing Cybersecurity Readiness to the Broader Market

What are the limitations of using generative AI in cybersecurity?

While powerful, generative AI models are not perfect. They can be susceptible to adversarial attacks, meaning malicious actors could try to manipulate them. Additionally, biases in the training data can lead to inaccurate or unfair results. Careful model selection, validation, and ongoing monitoring are essential.

How can I ensure the privacy of my data when using generative AI for cybersecurity?

Data privacy is paramount. Choose solutions that prioritize data anonymization and encryption. Understand the data handling practices of the AI provider and ensure they comply with relevant privacy regulations like GDPR or CCPA.

Is generative AI-based cybersecurity more expensive than traditional methods?

The initial investment might seem higher, but generative AI often offers long-term cost savings through automation, improved efficiency, and reduced human error. The overall cost-effectiveness depends on the specific solution and the scale of deployment.