How to Defend Your Company Against Deepfake Scams

How can you defend your company against deepfake scams? More and more businesses are asking themselves that question. Deepfakes, convincingly realistic fake videos and audio recordings, are becoming increasingly sophisticated and pose a serious threat to company reputations and finances. The potential for harm is immense, ranging from CEO impersonations that lead to fraudulent wire transfers to damaging disinformation campaigns.

This post dives into the strategies you need to protect your business from this emerging threat, offering practical advice and actionable steps you can take today.

We’ll explore the technology behind deepfakes, examine real-world examples of successful attacks, and delve into preventative measures like employee training, robust cybersecurity, and the use of cutting-edge deepfake detection technology. We’ll also cover how to respond to an attack, including damage control and communicating effectively with stakeholders. Get ready to equip your company with the knowledge and tools to stay ahead of this evolving threat landscape.

Understanding Deepfake Technology and its Threats to Businesses

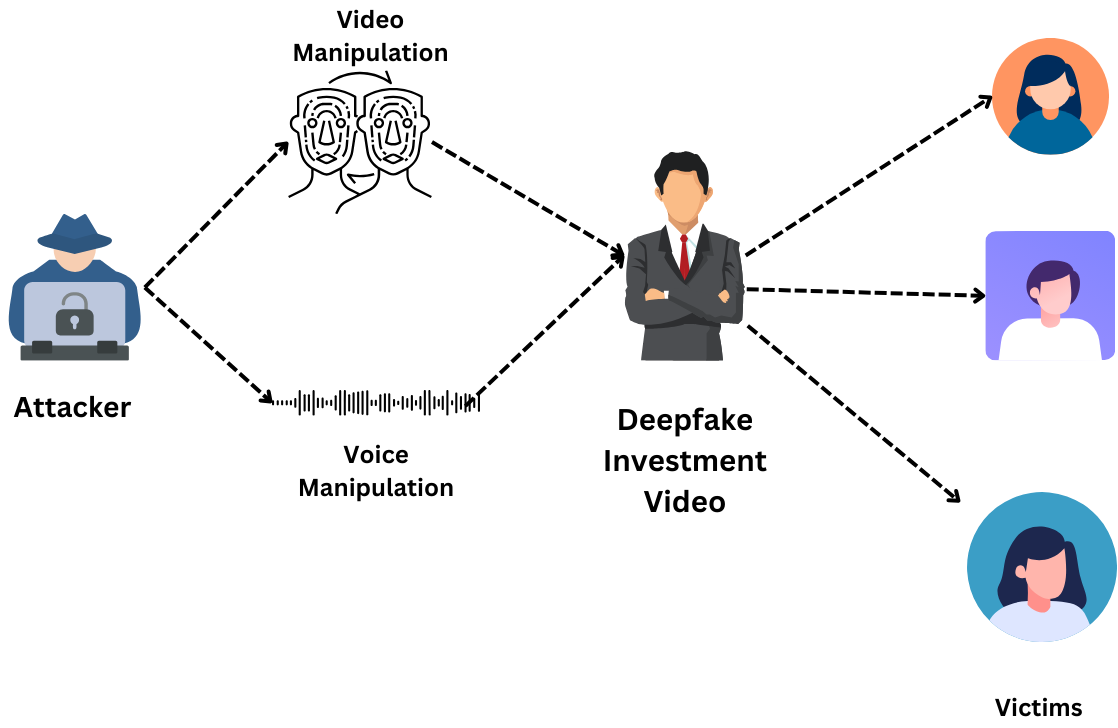

Deepfakes, hyperrealistic manipulated videos and audio recordings, pose a significant and growing threat to businesses. Because they can convincingly impersonate individuals and alter information, they can cause severe financial losses and lasting damage to a company’s reputation. Effective defense depends on understanding the technology behind deepfakes and the methods used to deploy them.

Deepfake creation relies on artificial intelligence, specifically deep learning models such as autoencoders and generative adversarial networks (GANs).

These algorithms are trained on vast datasets of images and videos of a target individual, learning their facial expressions, voice patterns, and mannerisms. Once trained, the algorithm can generate new videos and audio clips featuring the target person saying or doing things they never actually did. The potential impact on a company is substantial; a deepfake of a CEO authorizing a fraudulent transaction, for instance, could result in significant financial losses and erode investor confidence.

The resulting reputational damage can be long-lasting and difficult to repair.

Methods of Deepfake Creation and Deployment Targeting Businesses

Attackers use several methods to create and deploy deepfakes against businesses. CEO impersonation is a common tactic: a deepfake video or audio recording instructs employees to transfer funds or reveal sensitive information. Attackers often pair this with social engineering techniques that exploit human trust and weaknesses in internal communication protocols. Another method is to create deepfakes of company spokespeople that spread false information or damage the company’s brand.

These deepfakes might be disseminated through social media, email, or even through manipulated news reports. The goal is to manipulate public opinion, damage stock prices, or create general chaos. The sophistication of these attacks continues to evolve, making them increasingly difficult to detect.

Real-World Examples of Deepfake Scams Affecting Companies

Many deepfake scams go unreported because of their sensitivity, but several high-profile cases have come to light. In one widely reported 2019 case, fraudsters used AI-generated audio to mimic the voice of the chief executive of a German parent company. The imitation persuaded the CEO of its UK-based energy subsidiary to transfer about €220,000 to a fraudulent supplier account. The incident showed how vulnerable companies are to this emerging threat.

Another example involved a deepfake video of a company spokesperson making false claims about a product, leading to a significant drop in stock prices and investor panic. These real-world examples underscore the urgent need for businesses to develop robust security protocols to mitigate the risks associated with deepfakes.

Deepfake Detection Methods

Several methods are available for detecting deepfakes, each with its strengths and weaknesses. These methods can be broadly categorized into those that examine visual artifacts, those that analyze audio characteristics, and those that leverage behavioral cues. The effectiveness of each method depends on the sophistication of the deepfake and the quality of the available data.

| Method | Strengths | Weaknesses | Application |
|---|---|---|---|
| Visual Artifact Analysis | Can detect inconsistencies in facial expressions, lighting, and other visual cues. | Less effective against high-quality deepfakes. Requires specialized software. | Video analysis |
| Audio Analysis | Can detect inconsistencies in voice tone, pitch, and other audio characteristics. | Requires sophisticated algorithms and clean audio samples. | Audio calls, voicemails |
| Behavioral Analysis | Can detect inconsistencies in body language, speech patterns, and other behavioral cues. | Relies on human observation and may be subjective. | Live video conferences |
| AI-powered Detection | Can combine multiple methods for a more comprehensive approach. | Requires large datasets for training and can be computationally expensive. | Broad range of media |
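The following minimal Python sketch makes visual artifact analysis more concrete. It checks one classic cue: an abnormally low blink rate, which some early deepfakes showed. The sketch assumes a separate tool (not shown) has already extracted facial landmarks for each frame using the common six-point eye layout. From those landmarks it computes the eye aspect ratio (EAR) per frame, counts blinks, and flags clips whose blink rate falls below a threshold. The threshold values (`EAR_CLOSED_THRESHOLD`, `MIN_CLOSED_FRAMES`, `min_blinks_per_minute`) are illustrative assumptions, not calibrated figures.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]

# Illustrative thresholds (assumptions, not calibrated values).
EAR_CLOSED_THRESHOLD = 0.2  # EAR below this treats the eye as closed
MIN_CLOSED_FRAMES = 2       # consecutive closed frames that count as one blink


def eye_aspect_ratio(p: Sequence[Point]) -> float:
    """EAR for six eye landmarks p1..p6 (eye corners at p1 and p4)."""
    vertical = math.dist(p[1], p[5]) + math.dist(p[2], p[4])
    horizontal = math.dist(p[0], p[3])
    return vertical / (2.0 * horizontal)


def count_blinks(ear_values: Sequence[float],
                 threshold: float = EAR_CLOSED_THRESHOLD,
                 min_frames: int = MIN_CLOSED_FRAMES) -> int:
    """Count runs of at least `min_frames` consecutive 'closed' frames."""
    blinks = 0
    closed_run = 0
    for ear in ear_values:
        if ear < threshold:
            closed_run += 1
        else:
            if closed_run >= min_frames:
                blinks += 1
            closed_run = 0
    if closed_run >= min_frames:  # clip ended while the eye was closed
        blinks += 1
    return blinks


def flag_low_blink_rate(ear_values: Sequence[float], fps: float,
                        min_blinks_per_minute: float = 5.0) -> bool:
    """Return True if the clip's blink rate is suspiciously low."""
    minutes = len(ear_values) / fps / 60.0
    if minutes == 0:
        return False  # nothing to judge
    return count_blinks(ear_values) / minutes < min_blinks_per_minute
```

As the table notes, high-quality deepfakes can blink naturally. A check like this is at best one weak signal to combine with others.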

Implementing Preventative Measures

Proactive measures are crucial in defending your company against increasingly sophisticated deepfake scams. To minimize vulnerability, combine employee education, robust security protocols, and a well-defined response plan in a multi-layered approach. This section outlines key steps to build a strong defense against deepfake attacks.

Employee Training on Deepfake Identification

Effective employee training is the first line of defense. Deepfakes often contain subtle visual and auditory inconsistencies that, with proper training, employees can learn to detect. Training should focus on recognizing these anomalies, empowering employees to critically assess the authenticity of any communication received, especially those of a sensitive or urgent nature. For example, employees should be taught to look for inconsistencies in lighting, shadows, and background details in videos.

Training should also highlight audiovisual and auditory cues, such as poor lip synchronization or inconsistencies in voice tone and inflection. Regular refresher courses and simulated phishing exercises that incorporate deepfakes will further strengthen employee awareness and preparedness.
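Training is easier to apply when the "sensitive or urgent" criterion becomes an explicit checklist. The sketch below encodes a few common pressure tactics as red flags. It then requires confirmation through a known, separate channel before anyone acts, for example a callback to a number taken from the company directory. The `IncomingRequest` fields and the rule itself are hypothetical illustrations, not a standard.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class IncomingRequest:
    """Hypothetical summary of a request received by call, video, or email."""
    claimed_sender: str
    channel: str                          # e.g. "voice_call", "video_call", "email"
    asks_for_payment: bool = False
    changes_payment_details: bool = False
    asks_for_credentials: bool = False
    marked_urgent: bool = False
    asks_for_secrecy: bool = False
    verified_out_of_band: bool = False    # confirmed via a known, separate channel


def red_flags(req: IncomingRequest) -> List[str]:
    """List the warning signs present in a request."""
    checks = [
        (req.asks_for_payment, "requests a payment or transfer"),
        (req.changes_payment_details, "changes bank or payment details"),
        (req.asks_for_credentials, "asks for passwords or access codes"),
        (req.marked_urgent, "applies time pressure"),
        (req.asks_for_secrecy, "asks you to keep it confidential"),
    ]
    return [reason for present, reason in checks if present]


def must_verify_before_acting(req: IncomingRequest) -> bool:
    """Any red flag requires out-of-band confirmation before acting."""
    return bool(red_flags(req)) and not req.verified_out_of_band
```

For example, an urgent "CEO" voice call asking for a transfer yields two red flags. The employee should not act until the request has been confirmed through the separate channel.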

Password Policies and Multi-Factor Authentication

Strong password policies and multi-factor authentication (MFA) are fundamental to protecting company accounts from unauthorized access, a critical component in mitigating deepfake attacks. A robust password policy should require long, unique passwords or passphrases and block easily guessable or previously breached passwords. It should also require a change whenever an account may have been compromised; current NIST guidance (SP 800-63B) advises against forcing routine changes on a fixed schedule. MFA adds an extra layer of security by requiring multiple forms of verification, such as a password and a one-time code sent to a mobile device.

This makes it significantly harder for attackers, even those using deepfakes to impersonate authorized personnel, to gain access to sensitive systems and data. For example, combining a password, a hardware security token, and a biometric scan provides robust MFA.
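App-based MFA commonly generates its one-time codes with the time-based one-time password (TOTP) algorithm from RFC 6238. The Python sketch below derives such a code from a shared secret and the current time. It assumes the common defaults of HMAC-SHA1 and a 30-second time step. The sketch only illustrates the mechanism; a production system should rely on a vetted library.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) for a given counter."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over the time-step counter."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify_totp(secret: bytes, submitted: str, at: Optional[float] = None,
                step: int = 30, digits: int = 6, window: int = 1) -> bool:
    """Accept codes from the current step and `window` steps either side."""
    now = time.time() if at is None else at
    counter = int(now // step)
    for c in range(counter - window, counter + window + 1):
        if c < 0:
            continue
        if hmac.compare_digest(hotp(secret, c, digits), submitted):
            return True
    return False
```

The `window` parameter tolerates small clock drift between the server and the user's device by also accepting codes from adjacent time steps.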

Robust Cybersecurity Infrastructure

A robust cybersecurity infrastructure is paramount in mitigating deepfake attacks and other cyber threats. This includes firewalls to control network traffic, intrusion detection systems (IDS) to monitor for malicious activity, and intrusion prevention systems (IPS) to actively block threats. Regular security audits and penetration testing should be conducted to identify vulnerabilities and ensure the effectiveness of security measures.

Advanced threat detection technologies can further strengthen your security posture. They analyze network traffic and flag anomalies, such as unusual logins or data transfers, that may accompany a deepfake-enabled attack. These technologies use machine learning and artificial intelligence to detect patterns and behaviors associated with malicious activity, providing early warning of potential attacks.
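Production threat detection products use far richer models. The core idea of flagging deviations from a learned baseline, however, can be sketched with a simple z-score. The example below assumes a hypothetical metric: megabytes sent externally per day by each account. It flags any account whose value today lies more than three standard deviations from its own history. The metric, the data, and the threshold are all illustrative assumptions.

```python
from statistics import mean, stdev
from typing import Dict, List, Sequence


def is_anomalous(baseline: Sequence[float], observed: float,
                 threshold: float = 3.0) -> bool:
    """True if `observed` is more than `threshold` std devs from the baseline mean."""
    if len(baseline) < 2:
        raise ValueError("need at least two baseline observations")
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        return observed != mu  # any change from a perfectly flat baseline stands out
    return abs(observed - mu) / sigma > threshold


def flag_accounts(history: Dict[str, Sequence[float]],
                  today: Dict[str, float],
                  threshold: float = 3.0) -> List[str]:
    """Accounts whose value today is anomalous relative to their own history.

    Accounts with no history are skipped here; a real system might treat
    them as suspicious instead.
    """
    return sorted(account for account, value in today.items()
                  if account in history
                  and is_anomalous(history[account], value, threshold))
```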

Implementing a Comprehensive Deepfake Prevention Strategy

Implementing a comprehensive deepfake prevention strategy requires a systematic approach.

- Assess Vulnerability: Conduct a thorough risk assessment to identify potential vulnerabilities within the organization, focusing on areas susceptible to deepfake attacks, such as financial transactions, sensitive data access, and executive communication.

- Develop a Training Program: Create a comprehensive training program that educates employees on identifying deepfakes and reporting suspicious activities. The program should include regular updates to address evolving deepfake techniques.

- Enhance Security Measures: Strengthen security measures by implementing strong password policies, MFA, robust firewalls, IDS/IPS, and advanced threat detection technologies.

- Establish Response Protocols: Develop clear incident response protocols to handle suspected deepfake attacks. This should include procedures for reporting, investigation, and remediation.

- Regularly Review and Update: Regularly review and update the deepfake prevention strategy to adapt to emerging threats and technological advancements. This ensures the effectiveness of the strategy in the long term.
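The "Assess Vulnerability" step is often carried out with a likelihood × impact risk matrix. The sketch below rates each exposure on 1 to 5 scales and multiplies the two ratings. It then returns the areas at or above a cut-off, highest first. The scales, the cut-off of 12, and the example areas are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Exposure:
    area: str
    likelihood: int  # 1 (rare) to 5 (almost certain)
    impact: int      # 1 (minor) to 5 (severe)

    def __post_init__(self) -> None:
        for name in ("likelihood", "impact"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def prioritize(exposures: List[Exposure], cutoff: int = 12) -> List[Exposure]:
    """Exposures scoring at or above `cutoff`, highest risk first."""
    return sorted((e for e in exposures if e.score >= cutoff),
                  key=lambda e: e.score, reverse=True)


# Hypothetical example: wire approvals outrank executive video messages.
risks = [
    Exposure("Executive video messages", likelihood=3, impact=4),
    Exposure("Wire transfer approvals", likelihood=4, impact=5),
    Exposure("Internal newsletter", likelihood=2, impact=1),
]
top = prioritize(risks)
```

The resulting list can drive the later steps, for instance by focusing training and verification rules on the highest-scoring areas first.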

Responding to a Deepfake Attack

A deepfake attack can severely damage a company’s reputation and financial stability. Swift and decisive action is crucial to mitigate the harm and restore trust. A well-defined response protocol is essential for navigating this complex situation effectively. This section outlines the key steps involved in responding to a suspected deepfake attack.

Deepfake Incident Response Protocol

Responding to a deepfake attack requires a structured approach. The initial steps focus on containment and damage control, followed by investigation and remediation. A dedicated crisis management team should be assembled immediately. This team should include legal counsel, public relations professionals, IT specialists, and senior management.

- Immediate Actions: The first priority is to identify and remove the deepfake content from all platforms where it appears. This involves issuing takedown notices to social media companies, hosting providers, and other relevant parties. Simultaneously, begin tracking the spread of the deepfake and identify its origin if possible.

- Investigation and Analysis: A thorough investigation should be launched to determine the source of the deepfake, its purpose, and the extent of its impact. This may involve forensic analysis of the deepfake itself, as well as an examination of internal systems to identify any potential vulnerabilities that may have been exploited.

- Communication Strategy Development: A clear and consistent communication strategy should be developed to address stakeholders. This plan should outline key messages, target audiences, and communication channels. Transparency and honesty are crucial in managing the situation.

- Remediation and Prevention: Once the immediate threat is contained, focus shifts to remediation. This involves repairing any damaged systems, strengthening security protocols, and implementing measures to prevent future deepfake attacks. This might include employee training on deepfake detection and enhanced security measures for sensitive data.

- Documentation and Preservation of Evidence: Meticulous documentation of all aspects of the incident is vital for potential legal action. This includes preserving the deepfake content itself, communications related to the incident, and any evidence related to the source and perpetrators. Maintain a chain of custody for all collected evidence.

Effective Communication Strategies

Open and honest communication is critical in managing a deepfake crisis. A well-defined communication strategy should address the concerns of employees, customers, and investors. Transparency builds trust and helps mitigate the negative impact of the attack.

- Acknowledge the incident promptly: Don’t delay in confirming the deepfake attack. A swift response demonstrates responsibility and proactive management.

- Provide factual information: Avoid speculation and stick to the facts. Clearly state what is known and what is being done to address the situation.

- Emphasize the company’s commitment to security: Reassure stakeholders that the company is taking steps to enhance security measures and prevent future incidents.

- Maintain regular updates: Keep stakeholders informed of the progress of the investigation and any significant developments.

- Offer support and resources: Provide contact information for those who need assistance or have questions.

Legal and Public Relations Support Resources

Navigating a deepfake crisis requires specialized expertise, so seeking help from legal and public relations professionals is essential.

Companies should have pre-established relationships with legal firms experienced in handling cybersecurity incidents and reputational damage. Similarly, having a strong relationship with a reputable public relations firm is crucial for effective crisis communication.

Evidence Documentation and Preservation

Preserving evidence is paramount for potential legal action. A detailed record of the deepfake incident, including its discovery, spread, and impact, is necessary. This includes screenshots, videos, metadata, and any other relevant information.

It is crucial to maintain a chain of custody for all collected evidence to ensure its admissibility in court. This involves documenting every step in the evidence handling process, from collection to storage. Engage with forensic experts to ensure the integrity and authenticity of the evidence.
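A chain of custody is easier to defend when each handling step records a cryptographic hash of the evidence, because anyone can later show the file has not changed. This minimal Python sketch records who handled an item, what they did, and when. It also stores the SHA-256 digest of the content at that moment and reports whether the digest still matches the one taken at collection. The class and function names are hypothetical. A real process would also need secure, access-controlled storage and guidance from your forensic experts.

```python
import hashlib
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List


@dataclass
class CustodyEvent:
    timestamp: str  # UTC, ISO 8601
    handler: str
    action: str
    sha256: str


@dataclass
class EvidenceItem:
    evidence_id: str
    description: str
    original_sha256: str
    history: List[CustodyEvent] = field(default_factory=list)

    def record(self, handler: str, action: str, content: bytes) -> bool:
        """Log a handling step and report whether the content is unchanged."""
        digest = hashlib.sha256(content).hexdigest()
        self.history.append(CustodyEvent(
            datetime.now(timezone.utc).isoformat(), handler, action, digest))
        return digest == self.original_sha256


def collect(evidence_id: str, description: str, content: bytes,
            handler: str) -> EvidenceItem:
    """Create an evidence record, hashing the content at collection time."""
    item = EvidenceItem(evidence_id, description,
                        hashlib.sha256(content).hexdigest())
    item.record(handler, "collected", content)
    return item
```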

Leveraging Technology for Deepfake Detection

The rise of deepfake technology demands a proactive approach to detection and prevention. Businesses can no longer rely solely on traditional security measures; integrating advanced technologies is crucial for safeguarding their reputation and operations. This section explores technological solutions designed to identify and mitigate the risks of deepfake attacks.

Deepfake detection software and tools are evolving rapidly, offering businesses a range of options for identifying manipulated media.

These tools leverage various techniques, from analyzing subtle inconsistencies in video and audio to employing sophisticated AI algorithms to detect anomalies. The choice of the right tool depends on the specific needs and resources of the organization.

Deepfake Detection Software and Tools

Several companies offer deepfake detection software, each using different methods. Some focus on subtle visual cues such as inconsistent blinking patterns or lighting, while others concentrate on detecting audio anomalies. For example, one tool might compare lip movements with the spoken audio, while another might look for inconsistencies in the subtle muscle movements around the eyes.

A comprehensive approach often involves using multiple tools in conjunction to improve detection accuracy. The selection of specific software should be guided by factors like cost, ease of integration with existing systems, and the types of deepfakes the business is most vulnerable to.
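One simple way to use several tools together is to merge their individual scores into a single weighted score. The sketch assumes each tool returns a probability-like score between 0 and 1 that the media is manipulated. The tool names, weights, and 0.5 decision threshold are hypothetical and would need tuning against labeled examples.

```python
from typing import Dict


def fuse_scores(scores: Dict[str, float],
                weights: Dict[str, float]) -> float:
    """Weighted average of per-tool manipulation scores in [0, 1].

    Tools missing from `weights` get a default weight of 1.0.
    """
    total, weight_sum = 0.0, 0.0
    for tool, score in scores.items():
        if not 0.0 <= score <= 1.0:
            raise ValueError(f"score for {tool} must be in [0, 1], got {score}")
        w = weights.get(tool, 1.0)
        total += w * score
        weight_sum += w
    if weight_sum <= 0:
        raise ValueError("no positively weighted scores to combine")
    return total / weight_sum


def is_suspicious(scores: Dict[str, float], weights: Dict[str, float],
                  threshold: float = 0.5) -> bool:
    """Flag media whose fused score reaches the (assumed) threshold."""
    return fuse_scores(scores, weights) >= threshold
```

A weighted average is only one design choice. It lets a more trusted tool count for more, but it cannot capture interactions between signals the way a trained combining model could.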

Blockchain Technology for Enhanced Authenticity

Blockchain technology offers a promising way to strengthen the authenticity and integrity of company communications. Because it creates a tamper-evident record of digital assets, blockchain can help verify the origin and history of videos and audio recordings. Imagine a system that records the cryptographic fingerprint (hash) of each official company video on a blockchain when the video is published. Any alteration to the video would change its hash, so it would no longer match the recorded fingerprint, immediately signaling a potential deepfake.

This approach adds a layer of trust and verifiability, making it much harder for deepfakes to gain traction.
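The fingerprinting idea can be illustrated without a full blockchain. The Python sketch below keeps an append-only, hash-chained ledger. Each entry stores the SHA-256 hash of an official media file plus the hash of the previous entry, so editing any past entry breaks the chain. It is a single-machine illustration of the principle, not a distributed blockchain, and the names (`MediaLedger`, `register`, `is_authentic`) are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import List


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


@dataclass(frozen=True)
class Block:
    index: int
    media_id: str
    media_hash: str  # fingerprint of the official media file
    prev_hash: str   # hash of the previous block, linking the chain

    def block_hash(self) -> str:
        payload = json.dumps([self.index, self.media_id,
                              self.media_hash, self.prev_hash]).encode()
        return sha256_hex(payload)


class MediaLedger:
    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.blocks: List[Block] = []

    def register(self, media_id: str, content: bytes) -> Block:
        """Append the fingerprint of an officially published file."""
        prev = self.blocks[-1].block_hash() if self.blocks else self.GENESIS
        block = Block(len(self.blocks), media_id, sha256_hex(content), prev)
        self.blocks.append(block)
        return block

    def is_authentic(self, media_id: str, content: bytes) -> bool:
        """True only if this exact content was registered under media_id."""
        digest = sha256_hex(content)
        return any(b.media_id == media_id and b.media_hash == digest
                   for b in self.blocks)

    def chain_is_intact(self) -> bool:
        """Recompute the links; any edited block breaks them."""
        prev = self.GENESIS
        for i, block in enumerate(self.blocks):
            if block.index != i or block.prev_hash != prev:
                return False
            prev = block.block_hash()
        return True
```

Because exact hashes change with any re-encoding, a video that a social platform recompresses would also fail this check. Matching only works against the original published file.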

AI and Machine Learning in Deepfake Detection and Prevention

Artificial intelligence (AI) and machine learning (ML) are at the forefront of deepfake detection. AI algorithms can be trained to identify patterns and anomalies characteristic of deepfakes. These algorithms analyze various features, including facial expressions, micro-movements, and inconsistencies in lighting and background, to determine the likelihood of manipulation. Furthermore, ML models can continuously learn and adapt to new deepfake techniques, making them a powerful tool in the fight against this evolving threat.

Companies can integrate AI-powered detection systems into their existing security infrastructure to automatically flag suspicious content for human review.

Integrating Deepfake Detection Technology

Integrating deepfake detection technology into a company’s existing security systems can be pictured as a flowchart with the following stages:

- Suspicious Media Detected: the starting point of the process.
- Initial Screening with Automated Deepfake Detection Software: media that the software does not flag as potentially manipulated proceeds to Archive/Use Media.
- Human Review and Verification: any media the software flags is reviewed by a person. If the review deems it genuine, it proceeds to Archive/Use Media.
- Incident Response Protocol: confirmed deepfakes trigger the response protocol, including legal action, public relations management, and internal investigation.
- Feedback loops: outcomes feed back into the detection system for continuous improvement.
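The same workflow can be expressed as a small routing function. In this sketch, `detector` and `human_review` are placeholders for whatever detection tool and review process a company actually uses, and the 0.5 threshold is an assumption. The optional `feedback` list records each reviewer verdict on flagged media, so the team can track how often the tool's flags are confirmed.

```python
from enum import Enum
from typing import Callable, List, Optional, Tuple


class Outcome(Enum):
    INCIDENT_RESPONSE = "incident_response"
    ARCHIVE_OR_USE = "archive_or_use"


def triage(media: bytes,
           detector: Callable[[bytes], float],
           human_review: Callable[[bytes], bool],
           threshold: float = 0.5,
           feedback: Optional[List[Tuple[float, bool]]] = None) -> Outcome:
    """Route media through automated screening and, if flagged, human review."""
    score = detector(media)
    if score < threshold:
        return Outcome.ARCHIVE_OR_USE  # not flagged by the software
    confirmed = human_review(media)    # True means the reviewer confirms a deepfake
    if feedback is not None:
        feedback.append((score, confirmed))
    return Outcome.INCIDENT_RESPONSE if confirmed else Outcome.ARCHIVE_OR_USE


def confirmation_rate(feedback: List[Tuple[float, bool]]) -> float:
    """Share of flagged items that reviewers confirmed as deepfakes."""
    if not feedback:
        return 0.0
    return sum(1 for _, confirmed in feedback if confirmed) / len(feedback)
```

A persistently low confirmation rate suggests the threshold is flagging too much genuine media, while reviewers' verdicts can also serve as labeled examples when retraining or re-tuning the detector.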

Building a Culture of Security Awareness

Deepfakes pose a significant threat to businesses, and technical solutions alone aren’t enough to mitigate the risk. Effective deepfake defense needs a strong security culture built on employee awareness and proactive reporting. That culture requires consistent training, clear communication, and easily accessible reporting channels.

A robust security awareness program needs to go beyond simple email alerts. It should integrate deepfake awareness into existing security protocols and promote a culture of vigilance and proactive reporting.

Employees should understand their role in identifying and reporting suspicious activity. This proactive approach is significantly more effective than relying solely on reactive measures after a deepfake attack has occurred.

Methods for Fostering Cybersecurity Awareness

Creating a culture of cybersecurity awareness requires a multi-faceted approach. Regular training, engaging communication, and clear reporting mechanisms are key components. We need to move beyond simply informing employees about the threat; we need to empower them to actively participate in mitigating the risk. This includes incorporating deepfake awareness into existing security training, using relatable examples, and regularly reinforcing key concepts.

Establishing Clear Reporting Channels

A clear and accessible reporting system is essential for quickly addressing potential deepfake incidents. Employees need to know exactly whom to contact and how to report suspicious communications or activities. Options include dedicated email addresses, internal reporting platforms, or a designated point of contact on the security team. The reporting process should be simple, allow anonymous reports where needed, and ensure that every reported incident is investigated promptly.

The goal is to minimize the impact of a potential attack by addressing it swiftly and effectively. For example, a company could establish a dedicated “Deepfake Incident Report” email address or a section within their existing internal reporting system.

Company-Wide Training Program on Deepfake Threats

A comprehensive training program should be implemented to educate employees about deepfake technology, its potential impact, and effective prevention techniques. The training should go beyond simple awareness; it should provide employees with the skills to identify potential deepfakes and understand the implications of such attacks. This could involve interactive workshops, online modules, or even simulated phishing exercises using deepfake examples.

Regular refresher courses should also be provided to maintain employee awareness and adapt to evolving deepfake techniques. For instance, a training module could showcase examples of real deepfake videos, highlighting subtle visual cues and inconsistencies that can indicate manipulation.

Examples of Internal Communications Materials

Effective internal communications are vital for reinforcing deepfake awareness. Various methods can be used to engage employees and maintain a high level of vigilance.

- Posters: Posters displayed in common areas could feature eye-catching visuals and concise information about deepfake threats and reporting procedures. One example might show a split image – one side a genuine image, the other a subtle deepfake, with a caption highlighting the differences.

- Videos: Short, engaging videos can effectively communicate complex information. A video could showcase a realistic deepfake scenario, followed by tips on how to identify and report such incidents. The video could also include interviews with security experts or employees who have encountered deepfake attempts.

- Emails: Regular email updates can reinforce key messages and provide updates on deepfake threats. These emails could include quizzes, interactive elements, or links to helpful resources. For example, a monthly newsletter could include a short deepfake awareness quiz and highlight recent deepfake scams that have affected other companies.

Summary

Protecting your company from deepfake scams isn’t just about implementing the latest technology; it’s about cultivating a culture of security awareness. By combining robust security measures with well-trained employees and a proactive approach, you can significantly reduce your vulnerability to these sophisticated attacks. Remember, staying informed and adapting to the ever-changing landscape of deepfake technology is crucial for safeguarding your business’s reputation and bottom line.

Don’t wait until it’s too late – start building your defense today!

FAQ Guide

What are the telltale signs of a deepfake?

Look for inconsistencies in lighting, blinking patterns, unnatural lip synchronization, unusual background details, and subtle distortions in facial expressions. Listen for audio glitches or inconsistencies in voice tone.

Can I completely prevent deepfake attacks?

While complete prevention is difficult, a multi-layered approach combining technology, employee training, and robust security protocols significantly minimizes the risk.

What should I do if I suspect a deepfake attack?

Immediately cease any requested actions, secure all affected systems, preserve evidence, and contact your legal and cybersecurity teams. Follow your established incident response plan.

What is the role of insurance in mitigating deepfake losses?

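Cybersecurity insurance policies may cover losses related to data breaches and fraud, including fraud enabled by deepfakes. However, coverage for social engineering losses, such as a wire transfer authorized after an impersonation call, is often limited or requires a specific endorsement. Review your policy carefully to understand your coverage.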