A Faster Way to Evaluate DevOps Software

Finding a faster way to evaluate DevOps software, without evaluating it badly, is a real advantage in a fast-moving tech landscape. The right tools can make or break your development workflow, affecting everything from deployment speed to team collaboration. This post covers practical strategies for streamlining DevOps software selection so you save time and end up with a tool that fits your needs.

We’ll explore efficient methods, automation tools, and key considerations to help you navigate this critical decision with confidence.

From defining what “faster” really means in this context to leveraging automation and focusing on essential features, we’ll cover a range of techniques for tightening your evaluation. We’ll also look at how to make the most of trial periods, analyze vendor information effectively, and keep optimizing after implementation.

Defining “Faster Evaluation” in DevOps Software

Evaluating DevOps software can be time-consuming, often involving extensive testing, integration work, and performance analysis. A quicker evaluation lets teams adopt useful tools sooner, but getting there means rethinking traditional approaches and streamlining the evaluation workflow. This post looks at what a “faster” evaluation actually is and how to achieve it.

Defining “faster” isn’t simply about rushing through the process; it’s about optimizing the evaluation methodology to maximize the information gained per unit of time. This requires a strategic approach that prioritizes key features and leverages efficient testing techniques.

Criteria for Defining Speed in DevOps Software Evaluation

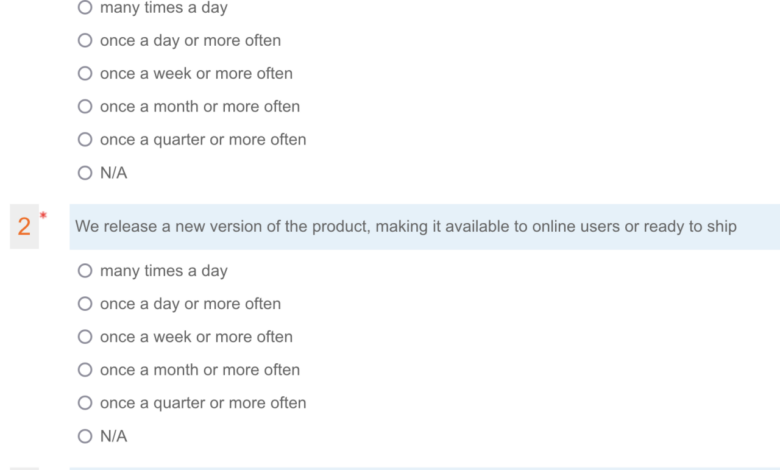

Several key metrics contribute to a faster and more efficient evaluation. The following table outlines these metrics, their descriptions, their importance, and how each can be measured.

| Metric | Description | Importance | Measurement Method |
|---|---|---|---|
| Evaluation Time | The total time from starting the evaluation to reaching a decision. | High | Track start and end dates in a project management tool. |
| Number of Test Cases Executed | The number of test cases run to assess functionality and performance. | Medium | Record the number of tests executed in a test management system. |
| Defect Detection Rate | How many defects the evaluation surfaces relative to the number of tests run. A higher rate suggests a more thorough evaluation, which may slow the process but improves confidence in the outcome. | High | Divide the number of defects found by the number of test cases executed. |
| Resource Utilization | How efficiently people, tools, and infrastructure are allocated to the evaluation. | Medium | Track the time team members spend on specific tasks and analyze resource usage logs from the test environments. |
| Automation Level | The extent to which the evaluation is automated (e.g., automated testing, automated deployment). | High | Measure the percentage of automated versus manual tasks. |
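To make the measurement column concrete, here is a minimal Python sketch that derives three of the table’s metrics from raw counts. The `EvaluationRecord` class, its field names, and the example figures are hypothetical; in practice the inputs would come from your project management and test management tools. Defect detection is expressed as defects per test executed, matching the measurement method above.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class EvaluationRecord:
    """Raw figures gathered for one tool evaluation (field names are illustrative)."""
    started: date
    decided: date
    tests_executed: int
    defects_found: int
    automated_tasks: int
    manual_tasks: int

    @property
    def evaluation_days(self) -> int:
        # Evaluation Time: calendar days from kickoff to decision
        return (self.decided - self.started).days

    @property
    def defects_per_test(self) -> float:
        # Defect Detection Rate, expressed as defects found per test executed
        if self.tests_executed == 0:
            return 0.0
        return self.defects_found / self.tests_executed

    @property
    def automation_level(self) -> float:
        # Automation Level: percentage of evaluation tasks that were automated
        total = self.automated_tasks + self.manual_tasks
        return 100 * self.automated_tasks / total if total else 0.0


# Hypothetical figures for a two-week evaluation
record = EvaluationRecord(date(2024, 3, 1), date(2024, 3, 15),
                          tests_executed=120, defects_found=6,
                          automated_tasks=18, manual_tasks=6)
print(record.evaluation_days, record.defects_per_test, record.automation_level)
# 14 0.05 75.0
```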

Trade-offs Between Speed and Thoroughness

There’s an inherent trade-off between speed and thoroughness in software evaluation. A rapid evaluation might miss critical flaws, while an excessively thorough one might delay deployment unnecessarily. The optimal balance depends on several factors, including project risk tolerance, time constraints, and the criticality of the software. For example, a quick evaluation might suffice for a low-risk, internal tool, while a more comprehensive approach is necessary for mission-critical applications.

To mitigate this trade-off, a risk-based approach can be adopted. Prioritize testing efforts on high-risk areas identified through threat modeling or previous experience. This focused approach can allow for a faster evaluation without compromising critical aspects of the software.
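One way to apply this risk-based approach is to score each area as likelihood × impact and fill a fixed testing budget from the top of the list. The sketch below assumes 1 to 5 scales and hour estimates that you would supply yourself; the area names are hypothetical. It uses a simple greedy pass, which is easy to reason about but not guaranteed to find the optimal selection.

```python
from dataclasses import dataclass


@dataclass
class RiskArea:
    name: str
    likelihood: int    # 1 (rare) to 5 (almost certain) that this area hides a serious flaw
    impact: int        # 1 (minor) to 5 (critical) if it does
    test_hours: float  # estimated effort to test this area

    @property
    def risk(self) -> int:
        return self.likelihood * self.impact


def plan_tests(areas, budget_hours):
    """Greedily pick the highest-risk areas that still fit in the time budget."""
    selected, used = [], 0.0
    for area in sorted(areas, key=lambda a: a.risk, reverse=True):
        if used + area.test_hours <= budget_hours:
            selected.append(area.name)
            used += area.test_hours
    return selected, used


areas = [
    RiskArea("secrets handling", 4, 5, 6),
    RiskArea("rollback on failed deploy", 3, 5, 8),
    RiskArea("UI theming", 2, 1, 3),
    RiskArea("SSO integration", 3, 4, 5),
]
print(plan_tests(areas, budget_hours=20))
# (['secrets handling', 'rollback on failed deploy', 'SSO integration'], 19.0)
```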

Impact of Team Size and Skill Sets on Evaluation Speed

Team size and skill sets significantly influence evaluation speed. A larger team with diverse expertise can parallelize tasks, potentially accelerating the process. However, larger teams can also lead to communication overhead and coordination challenges, potentially negating the speed advantage. Conversely, a smaller team might be slower but may exhibit better coordination and reduced communication friction.

Similarly, a team with specialized skills in DevOps tools and methodologies can conduct evaluations more efficiently. Experience with automation frameworks, performance testing tools, and security best practices allows for a more focused and streamlined process. For instance, a team proficient in using tools like Kubernetes and Jenkins can automate many testing and deployment steps, significantly reducing the overall evaluation time.

Lack of expertise, on the other hand, necessitates more manual effort and extended evaluation periods.

Streamlining the DevOps Software Selection Process

Choosing the right DevOps software can feel like navigating a minefield. The sheer number of options, combined with the complexity of each tool, often leads to long, inefficient evaluations. A streamlined approach can significantly cut the time and resources spent without compromising the quality of your decision. It rests on focusing on essential criteria, communicating efficiently, and leveraging existing knowledge, so that you gain speed without giving up the thoroughness that matters.

In practice, that means concentrating your effort on the most critical aspects of each tool, eliminating unnecessary steps, and reusing existing resources wherever possible. Done well, this lets you quickly identify the best fit for your organization’s needs and get your team up and running sooner.

Stakeholder Requirement Gathering

Gathering comprehensive stakeholder requirements upfront is crucial for a successful software selection. Inefficient methods, such as lengthy surveys or unstructured interviews, can lead to wasted time and unclear expectations. Instead, employ focused workshops with key stakeholders representing different teams (development, operations, security). These workshops should use a structured approach, possibly employing a prioritization matrix to rank requirements by importance and feasibility.

For example, a simple matrix could list requirements along one axis (e.g., CI/CD pipeline automation, infrastructure-as-code support, security integration) and rate them on a scale of 1-5 for both importance and feasibility, allowing for a clear visualization of priorities. This ensures everyone is on the same page and avoids later conflicts arising from unmet expectations.
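A minimal sketch of such a matrix in Python, assuming the 1 to 5 scales described above and ranking by the product of importance and feasibility (one reasonable choice among several). The requirement names and ratings are illustrative only.

```python
# Hypothetical ratings: requirement -> (importance 1-5, feasibility 1-5)
requirements = {
    "CI/CD pipeline automation": (5, 4),
    "Infrastructure-as-code support": (4, 4),
    "Security integration": (5, 2),
    "Chat notifications": (2, 5),
}


def prioritize(reqs):
    """Rank by importance x feasibility; on ties, the more important item wins."""
    return sorted(reqs.items(),
                  key=lambda item: (item[1][0] * item[1][1], item[1][0]),
                  reverse=True)


for name, (importance, feasibility) in prioritize(requirements):
    print(f"{name:32} importance={importance} feasibility={feasibility} "
          f"score={importance * feasibility}")
```

Note that “Security integration” scores the same as “Chat notifications” despite being far more important. A product score can hide a hard-to-deliver must-have, so it is worth reviewing high-importance, low-feasibility items explicitly rather than letting the score alone push them down the list.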

Vendor Communication and Information Gathering

Effective vendor communication is essential for a quick and informed decision. Simply requesting lengthy demos from each vendor is inefficient. Instead, prioritize a structured approach. Start with a Request for Information (RFI) to pre-qualify vendors based on your defined criteria. This RFI should focus on key features and capabilities, allowing you to quickly eliminate unsuitable options.

Follow this with targeted, concise demos that cover only the features the RFI responses showed to be essential. For instance, if your RFI highlights the need for seamless integration with your existing monitoring system, ask vendors to focus their demos on that capability, and avoid getting bogged down in features irrelevant to your immediate needs. Record your findings in a structured comparison matrix so that key features and capabilities can be compared easily across vendors; this keeps the evaluation consistent and objective.
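The RFI filter and comparison matrix can be kept as plain data. This sketch assumes each vendor’s RFI answer has been reduced to a set of confirmed capabilities; the vendor names and capability labels are hypothetical. Vendors missing any must-have are set aside along with the reason, and the shortlist goes into a simple yes/no grid for the demo round.

```python
# Hypothetical RFI answers: which capabilities each vendor confirmed in writing
rfi_responses = {
    "Vendor A": {"sso", "kubernetes_deploy", "monitoring_integration", "audit_log"},
    "Vendor B": {"sso", "kubernetes_deploy"},
    "Vendor C": {"sso", "kubernetes_deploy", "monitoring_integration"},
}
MUST_HAVES = {"sso", "kubernetes_deploy", "monitoring_integration"}


def prequalify(responses, must_haves):
    """Split vendors into a shortlist and a dict of their missing must-haves."""
    shortlist, rejected = [], {}
    for vendor, capabilities in sorted(responses.items()):
        missing = must_haves - capabilities
        if missing:
            rejected[vendor] = sorted(missing)
        else:
            shortlist.append(vendor)
    return shortlist, rejected


def comparison_matrix(responses, vendors):
    """Plain-text yes/no grid of every capability mentioned by the given vendors."""
    features = sorted(set().union(*(responses[v] for v in vendors)))
    lines = ["feature".ljust(24) + "".join(v.ljust(10) for v in vendors)]
    for feature in features:
        cells = ("yes" if feature in responses[v] else "no" for v in vendors)
        lines.append(feature.ljust(24) + "".join(c.ljust(10) for c in cells))
    return "\n".join(lines)


shortlist, rejected = prequalify(rfi_responses, MUST_HAVES)
print(shortlist)  # ['Vendor A', 'Vendor C']
print(rejected)   # {'Vendor B': ['monitoring_integration']}
print(comparison_matrix(rfi_responses, shortlist))
```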

Leveraging Automation in the DevOps Software Evaluation Process

Automating the evaluation of DevOps software speeds up the selection process and makes the assessment more thorough. Manual testing and benchmarking are time-consuming and prone to human error; automating them reduces evaluation time, improves accuracy, and yields more comprehensive data about each tool’s capabilities, which supports a more data-driven choice of DevOps tools.

Automation also allows faster iteration across candidate tools. It covers setting up test environments, running performance benchmarks, and analyzing the results, and its benefits extend beyond speed to greater consistency and less bias than manual methods.

Automation Tools and Techniques for DevOps Software Evaluation

Automating the evaluation process involves using various tools and techniques. A strategic approach to automation is key to maximizing efficiency and effectiveness.

- Infrastructure as Code (IaC): Tools like Terraform and Ansible can automate the provisioning and configuration of test environments, ensuring consistency across evaluations.

- Configuration Management Tools: Puppet and Chef can automate the installation and configuration of the DevOps software under evaluation, reducing manual effort and ensuring a standardized setup.

- Continuous Integration/Continuous Delivery (CI/CD) Pipelines: Jenkins, GitLab CI, and Azure DevOps can automate the build, testing, and deployment processes, enabling rapid iteration and feedback.

- Performance Testing Tools: JMeter, k6, and Gatling can automate performance benchmarks, providing quantitative data on response times, throughput, and resource utilization.

- Monitoring and Logging Tools: Prometheus, Grafana, and the ELK stack can automate the collection and analysis of performance metrics and logs, providing insights into the software’s behavior under load.

- Automated Testing Frameworks: Selenium, Cypress, and pytest can automate functional and integration tests, ensuring the software meets the required functionalities.

Streamlining Testing and Performance Benchmarking Through Automation

Automation significantly streamlines testing and performance benchmarking by enabling the execution of numerous tests and benchmarks concurrently and repeatedly. For example, using a CI/CD pipeline, we can automatically trigger performance tests whenever new code is deployed, providing immediate feedback on the impact of changes. This allows for early detection of performance bottlenecks and ensures continuous monitoring of the software’s performance.
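As an illustration of such a check, the sketch below compares the 95th-percentile latency of a new run against a stored baseline and reports a regression when it grows by more than a tolerance. It assumes latency samples in milliseconds have already been exported by a tool such as k6 or JMeter; the 10% tolerance and the sample data are arbitrary placeholders.

```python
import statistics


def p95(samples_ms):
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile
    return statistics.quantiles(samples_ms, n=20)[-1]


def check_regression(baseline_ms, current_ms, tolerance=0.10):
    """Return (regressed, baseline_p95, current_p95).

    `regressed` is True when the current p95 latency exceeds the baseline p95
    by more than `tolerance` (10% by default, an arbitrary starting point).
    """
    base, cur = p95(baseline_ms), p95(current_ms)
    return cur > base * (1 + tolerance), base, cur


# Hypothetical latency samples (ms), e.g. exported from a k6 or JMeter run
baseline = [100 + i for i in range(100)]
current = [sample * 1.2 for sample in baseline]  # every request 20% slower

regressed, base, cur = check_regression(baseline, current)
print(f"baseline p95={base:.1f} ms, current p95={cur:.1f} ms, regressed={regressed}")
```

A CI job could call `check_regression` after each benchmark run and exit with a non-zero status when `regressed` is true, failing the pipeline stage so the slowdown is noticed immediately.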

Similarly, automated testing frameworks can execute a comprehensive suite of functional and integration tests, confirming that the software meets the required functionality and quality standards.

Consider a team evaluating three different CI/CD platforms. Instead of manually setting up, configuring, testing, and analyzing each platform in turn, the team can use IaC to provision the test environments automatically and a CI/CD pipeline to run the tests and generate performance reports. This significantly reduces the time and effort required, leaving the team free to focus on analyzing the results and making an informed decision.
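A rough sketch of that loop, driving Terraform from Python. It assumes a hypothetical Terraform module in `eval-env/` that accepts a `candidate` variable, and a hypothetical `run-benchmarks.sh` script that runs the same test suite against whichever platform is deployed. By default it only prints the commands; pass `dry_run=False` to execute them.

```python
import subprocess

# Hypothetical candidate names and Terraform module directory; the module is
# assumed to accept a `candidate` variable that selects which platform to deploy.
CANDIDATES = ["platform-a", "platform-b", "platform-c"]
MODULE_DIR = "eval-env"


def run(cmd, log, dry_run):
    """Record a command and, unless dry_run is set, execute it (failing loudly)."""
    log.append(cmd)
    print("$", " ".join(cmd))
    if not dry_run:
        subprocess.run(cmd, check=True)


def evaluate(candidate, dry_run=True):
    """Provision one candidate, run the shared benchmark script, always tear down."""
    tf = ["terraform", f"-chdir={MODULE_DIR}"]
    var = f"-var=candidate={candidate}"
    log = []
    run(tf + ["init", "-input=false"], log, dry_run)
    try:
        run(tf + ["apply", "-auto-approve", "-input=false", var], log, dry_run)
        # Hypothetical script that runs the same test suite against any candidate
        run(["./run-benchmarks.sh", candidate], log, dry_run)
    finally:
        run(tf + ["destroy", "-auto-approve", "-input=false", var], log, dry_run)
    return log


for name in CANDIDATES:
    evaluate(name)  # dry run: prints the commands instead of executing them
```

The `try`/`finally` ensures each environment is destroyed even if the benchmarks fail, which also lets candidates share one Terraform state when they are evaluated one after another.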

Challenges and Limitations of Automating the Evaluation Process

While automation offers significant advantages, it also presents certain challenges and limitations.

- Initial Setup and Configuration: Setting up the necessary automation infrastructure and integrating different tools can be complex and time-consuming.

- Maintenance and Updates: Keeping the automation scripts and tools up-to-date requires ongoing maintenance and effort.

- Cost: Implementing and maintaining an automated evaluation process can incur significant costs, especially for complex systems.

- Complexity of the Software: Automating the evaluation of highly complex software can be challenging and may require specialized expertise.

- Unforeseen Issues: Automation cannot always account for every possible scenario, and unforeseen issues may still arise during the evaluation process.

Focusing on Key Features and Functionality

Choosing the right DevOps software can feel overwhelming given the sheer number of options. The key to a faster evaluation is to focus your effort on the features that are truly essential to your team’s workflow and priorities. Don’t get bogged down in bells and whistles; concentrate on the core functionality that will have the biggest impact.

Prioritizing features this way streamlines the selection process and avoids wasting time on tools that don’t meet your immediate needs. By testing the crucial functionality first, you can quickly see which solutions deserve a deeper look and which can be discarded.

Essential DevOps Features and Their Importance

A prioritized list of essential features should be tailored to your specific context, but some consistently rank high in importance for most DevOps teams. Consider these examples, remembering to adjust based on your organization’s unique requirements and scale.

- CI/CD Pipeline Automation: This is arguably the most crucial feature. Automated build, test, and deployment processes drastically reduce manual effort and increase deployment frequency, leading to faster feedback loops and quicker releases. Without robust CI/CD, your DevOps efforts will be significantly hampered.

- Infrastructure as Code (IaC): IaC allows for the automated provisioning and management of infrastructure, ensuring consistency and repeatability across environments. This is vital for scalability and reduces the risk of human error in configuration management.

- Configuration Management: Tools that manage and automate configuration across your infrastructure are essential for maintaining consistency and reducing configuration drift. They help keep all your systems configured correctly and securely.

- Monitoring and Logging: Comprehensive monitoring and logging capabilities are crucial for identifying and resolving issues quickly. Real-time insights into application performance and system health are essential for maintaining uptime and responding to incidents effectively.

- Collaboration and Communication Tools: Effective communication and collaboration are the bedrock of successful DevOps. Integrated tools that facilitate seamless communication between development, operations, and security teams are essential for streamlining workflows and preventing bottlenecks.

Quick Assessment of Crucial DevOps Features

Assessing functionality efficiently involves targeted testing rather than exhaustive exploration of every feature. For example, to evaluate a CI/CD pipeline, create a simple application with automated tests and deploy it through the pipeline. Time the process and assess the ease of use and integration with other tools. For monitoring tools, deploy a test application, generate some simulated load, and observe how effectively the tool tracks performance metrics and alerts on potential issues.

Focus on the core functionalities relevant to your prioritized list.
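Timing the steps can be as simple as a small context manager. In this sketch the `time.sleep` calls are placeholders for triggering the candidate’s pipeline and waiting for it to finish; how you do that depends on the tool, so that part is left as an assumption.

```python
import time
from contextlib import contextmanager

timings = {}


@contextmanager
def stage(name):
    """Record the wall-clock seconds spent inside the `with` block under `name`."""
    start = time.perf_counter()
    try:
        yield
    finally:
        timings[name] = time.perf_counter() - start


# The sleeps are stand-ins: replace them with triggering the candidate's pipeline
# and waiting for it to finish (for example by polling its API or CLI).
with stage("commit to green build"):
    time.sleep(0.1)
with stage("deploy to staging"):
    time.sleep(0.05)

for name, seconds in timings.items():
    print(f"{name}: {seconds:.2f}s")
```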

Utilizing Proof-of-Concept Projects for Accelerated Evaluation

Proof-of-concept (POC) projects provide a practical and efficient way to evaluate DevOps software in a realistic environment. Instead of relying solely on vendor demos or documentation, a POC allows you to use the software to address a specific challenge or implement a small-scale version of your target workflow. This approach offers hands-on experience and provides valuable insights into the tool’s usability, integration capabilities, and overall effectiveness within your unique context.

For example, you could use a POC to test whether the CI/CD pipeline handles your programming language and deployment environment, or whether the monitoring tool integrates with your existing infrastructure. The results give you concrete data points for your decision.

Effective Use of Trial Periods and Demos

Trial periods and demos are invaluable for evaluating DevOps software. They let you experience the software firsthand, test its functionality in your own environment, and assess its fit for your needs before committing to a purchase. Getting the most out of these limited-time opportunities requires careful planning and execution.

That means defining your evaluation criteria beforehand, identifying the critical test scenarios, and setting up efficient ways to gather and analyze feedback, so that none of the limited trial time is wasted.

Best Practices for Maximizing Trial Periods and Demos

To maximize the value of a trial period or demo, create a detailed test plan upfront. This plan should outline specific goals and objectives for the evaluation, focusing on the features and functionality most critical to your team. For instance, if CI/CD pipeline integration is a priority, dedicate a significant portion of the trial period to rigorously testing this aspect.

Similarly, if security features are paramount, focus on evaluating those features with diverse test cases. Prioritize testing features relevant to your organization’s most pressing needs. A well-structured plan helps avoid wasting time on less crucial aspects. Consider using a checklist to track progress and ensure all key areas are covered.
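A checklist does not need a dedicated tool. This minimal sketch, with hypothetical area names, tracks which critical areas have been tested so far, so it is obvious mid-trial what still needs attention.

```python
from dataclasses import dataclass


@dataclass
class CheckItem:
    area: str
    critical: bool
    done: bool = False


# Hypothetical trial-period checklist; adjust the areas to your own test plan
checklist = [
    CheckItem("CI/CD pipeline integration", critical=True, done=True),
    CheckItem("Secrets and access control", critical=True),
    CheckItem("Rollback of a failed deploy", critical=True, done=True),
    CheckItem("Custom dashboard themes", critical=False),
]


def critical_progress(items):
    """Return (completed, total, still_open) for the critical items only."""
    critical = [item for item in items if item.critical]
    still_open = [item.area for item in critical if not item.done]
    return len(critical) - len(still_open), len(critical), still_open


done, total, still_open = critical_progress(checklist)
print(f"{done}/{total} critical areas tested; still open: {still_open}")
# 2/3 critical areas tested; still open: ['Secrets and access control']
```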

Identifying Critical Testing Scenarios

Prioritizing testing scenarios is crucial within a limited timeframe. Instead of trying to test every feature exhaustively, focus on the functionalities that directly address your organization’s pain points. For example, if your current deployment process is slow and error-prone, prioritize testing the deployment features of the DevOps software. Similarly, if collaboration and communication are challenges, focus on testing the collaboration tools within the software.

Remember to include both positive and negative test cases to get a comprehensive understanding of the software’s capabilities and limitations. This targeted approach ensures you gain valuable insights into the software’s strengths and weaknesses in areas most important to your team.

Efficiently Collecting and Analyzing Feedback from Trial Users

Gathering and analyzing feedback from trial users is essential for a thorough evaluation. Encourage active participation from your team by providing clear instructions and templates for documenting their experiences. Regular feedback sessions, perhaps weekly, can help identify issues early and ensure everyone’s perspectives are considered. Use a standardized feedback form to collect consistent data across users. This could include rating scales for various aspects of the software, open-ended questions for detailed comments, and a section for reporting bugs.

Analyze the collected data to identify trends and patterns. For example, if multiple users report difficulties with a particular feature, it may indicate a significant usability issue. This systematic approach to feedback ensures a comprehensive understanding of the software’s strengths and weaknesses from the perspective of your actual users.
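Here is a minimal sketch of that analysis, assuming each feedback form has been reduced to a row of (user, feature, rating, reported difficulty). The rows are hypothetical, and the rule that two or more distinct users reporting a difficulty flags a feature is an arbitrary threshold to adjust.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical rows from a standardized feedback form:
# (user, feature, rating 1-5, reported a difficulty?)
feedback = [
    ("ana", "pipeline editor", 2, True),
    ("ben", "pipeline editor", 3, True),
    ("chen", "pipeline editor", 4, False),
    ("ana", "log search", 5, False),
    ("ben", "log search", 4, False),
]


def summarize(rows, min_reporters=2):
    """Mean rating per feature, flagging features that several users found difficult."""
    ratings = defaultdict(list)
    reporters = defaultdict(set)
    for user, feature, rating, difficulty in rows:
        ratings[feature].append(rating)
        if difficulty:
            reporters[feature].add(user)
    return {
        feature: {
            "mean_rating": round(mean(values), 2),
            "flagged": len(reporters[feature]) >= min_reporters,
        }
        for feature, values in ratings.items()
    }


for feature, stats in summarize(feedback).items():
    print(feature, stats)
```

Counting distinct users rather than raw reports means one frustrated tester filing the same issue repeatedly does not, on its own, flag a feature.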

Analyzing Vendor Documentation and Reviews

Choosing the right DevOps software is a critical decision, and sales pitches alone won’t get you there. A thorough analysis of vendor documentation and independent user reviews gets you past the marketing and gives you a realistic picture of the software’s capabilities, limitations, and overall suitability for your needs.

Effective analysis combines careful scrutiny of official materials with a critical reading of user experiences. This balanced perspective reduces the risk of a costly mistake.

Vendor Documentation Checklist

A well-structured review of vendor documentation should be systematic and comprehensive. The following checklist helps ensure you cover all the crucial aspects.

- Feature Matrix: Verify the advertised features are genuinely available and align with your requirements. Look for specifics, not just general statements.

- Integration Capabilities: Check for compatibility with your existing infrastructure and tools. Pay close attention to the level of integration support offered.

- Scalability and Performance: Assess the software’s ability to handle your current and projected workloads. Look for benchmarks, case studies, or performance data.

- Security Measures: Examine the security features and protocols in detail. This includes authentication, authorization, encryption, and compliance certifications.

- Pricing and Licensing: Understand the cost structure, including any hidden fees or limitations. Compare different licensing models to find the best fit.

- Support and Documentation: Evaluate the quality and comprehensiveness of the provided documentation and support channels. Look for readily available resources, responsive support teams, and clear service level agreements.

- API Documentation: If you plan to integrate with other systems, thoroughly examine the API documentation for completeness and clarity.

Identifying and Interpreting Unbiased User Reviews

User reviews offer invaluable insights into the real-world experience of using the software, but biased or misleading reviews need to be filtered out. To stay objective, read reviews from multiple sources, including independent review sites and forums, and look for patterns: consistent positive or negative comments across several platforms are more reliable indicators than isolated ones.

Pay attention to the detail level provided in reviews; vague or overly enthusiastic comments should be treated with caution. Consider the reviewer’s context; a review from a large enterprise might have different priorities than one from a small startup. For example, a large enterprise might prioritize scalability and security more heavily than a small startup.
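To look for patterns across sources rather than within one, you can tag themes as you read and count how many distinct sources raise each one. This sketch assumes you record (source, theme, sentiment) tuples by hand; the sources and themes are hypothetical. Counting distinct sources, not individual reviews, keeps one busy forum thread from dominating.

```python
from collections import defaultdict

# Hypothetical notes taken while reading reviews: (source, theme, sentiment)
tagged_reviews = [
    ("review site A", "slow UI", "negative"),
    ("forum B", "slow UI", "negative"),
    ("review site C", "slow UI", "negative"),
    ("forum B", "great support", "positive"),
    ("forum B", "great support", "positive"),
]


def consistent_themes(reviews, min_sources=2):
    """Themes raised with the same sentiment on at least `min_sources` distinct sources."""
    sources = defaultdict(set)
    for source, theme, sentiment in reviews:
        sources[(theme, sentiment)].add(source)
    return {key: sorted(found) for key, found in sources.items()
            if len(found) >= min_sources}


print(consistent_themes(tagged_reviews))
# {('slow UI', 'negative'): ['forum B', 'review site A', 'review site C']}
```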

Categorizing and Weighting Vendor Information

To build a comprehensive assessment, organize the information you gather into a structured system so that vendors can be compared clearly. One approach is a weighted scoring system: assign each category a weight based on its importance to your specific needs. For example, security might carry more weight than a specific niche feature.

Then score each vendor in each category. For instance, you might use these weights: Security (40%), Scalability (30%), Ease of Use (20%), Cost (10%). If each category is scored from 0 to 100 and the weights sum to 100%, the weighted sum is also a score out of 100, giving a clear numerical comparison between vendors and a more objective basis for the decision.
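A worked example of the weighting above, as a short Python sketch. The category scores for the two vendors are illustrative, not real data.

```python
WEIGHTS = {"security": 0.40, "scalability": 0.30, "ease_of_use": 0.20, "cost": 0.10}

# Illustrative category scores on a 0-100 scale (not real vendor data)
vendor_scores = {
    "Vendor A": {"security": 90, "scalability": 70, "ease_of_use": 60, "cost": 50},
    "Vendor B": {"security": 70, "scalability": 90, "ease_of_use": 80, "cost": 80},
}


def weighted_score(scores, weights=WEIGHTS):
    """Weighted sum of 0-100 category scores; stays on a 0-100 scale if weights sum to 1."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return sum(weights[category] * scores[category] for category in weights)


ranking = sorted(vendor_scores, key=lambda v: weighted_score(vendor_scores[v]), reverse=True)
for vendor in ranking:
    print(f"{vendor}: {weighted_score(vendor_scores[vendor]):.1f}")
# Vendor B: 79.0
# Vendor A: 74.0
```

Vendor A leads on the most heavily weighted category (security) yet finishes behind Vendor B overall, which is exactly the kind of trade-off the weighted total is meant to surface.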

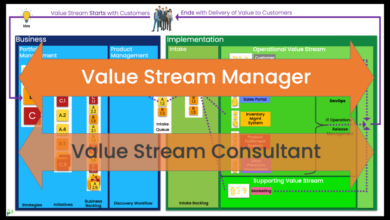

Post-Implementation Evaluation and Optimization

The honeymoon period is over; your chosen DevOps software is live. Now comes the crucial phase: making sure it delivers the promised benefits and finding areas for improvement. Post-implementation evaluation isn’t a one-off task but an ongoing process that is vital for maximizing ROI and refining your DevOps pipeline.

Effective post-implementation evaluation depends on establishing clear metrics and tracking them diligently. That makes it possible to assess objectively how the software performs and how it affects your team’s efficiency and overall project delivery.

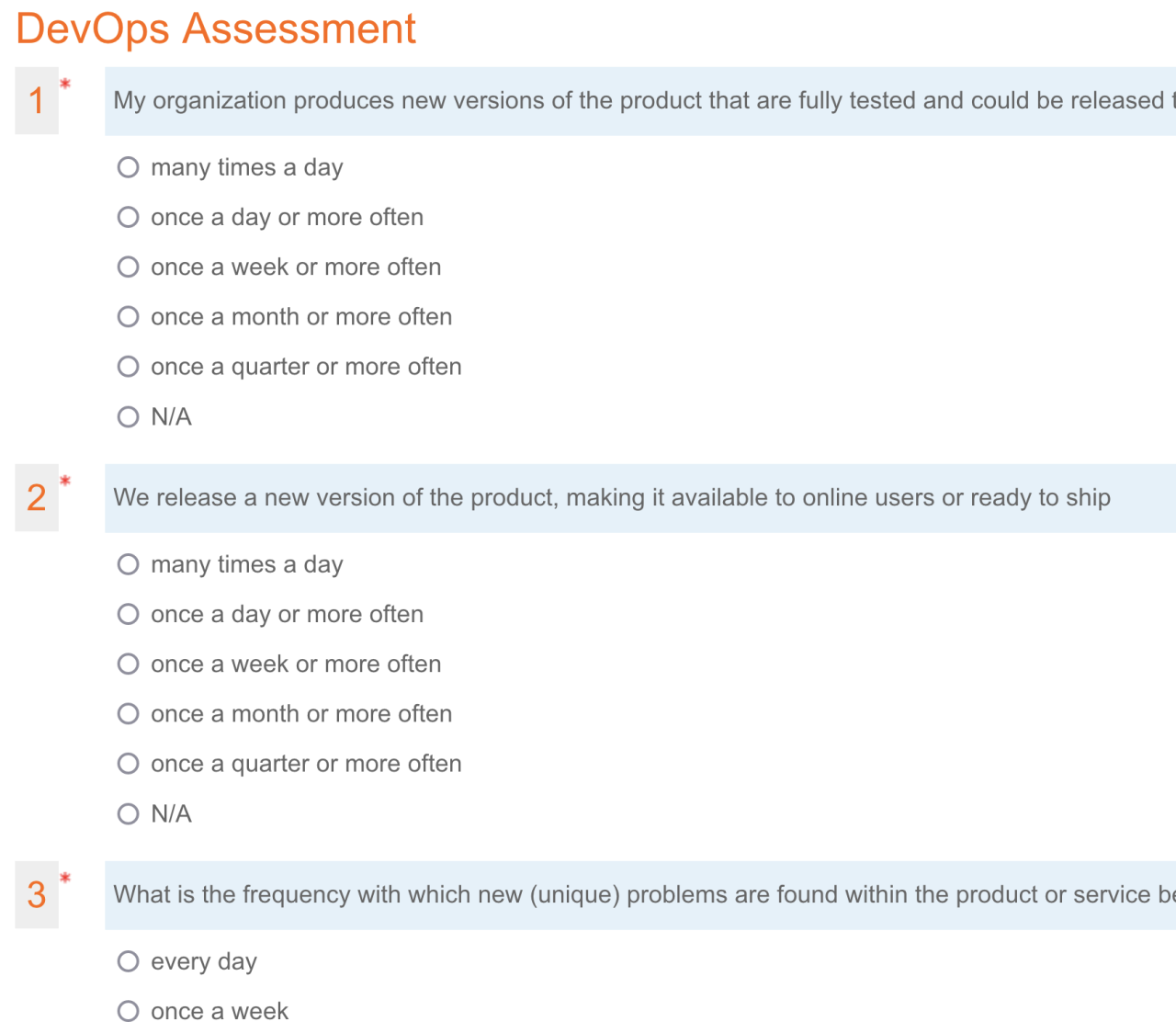

Key Metrics Tracking for DevOps Software Performance

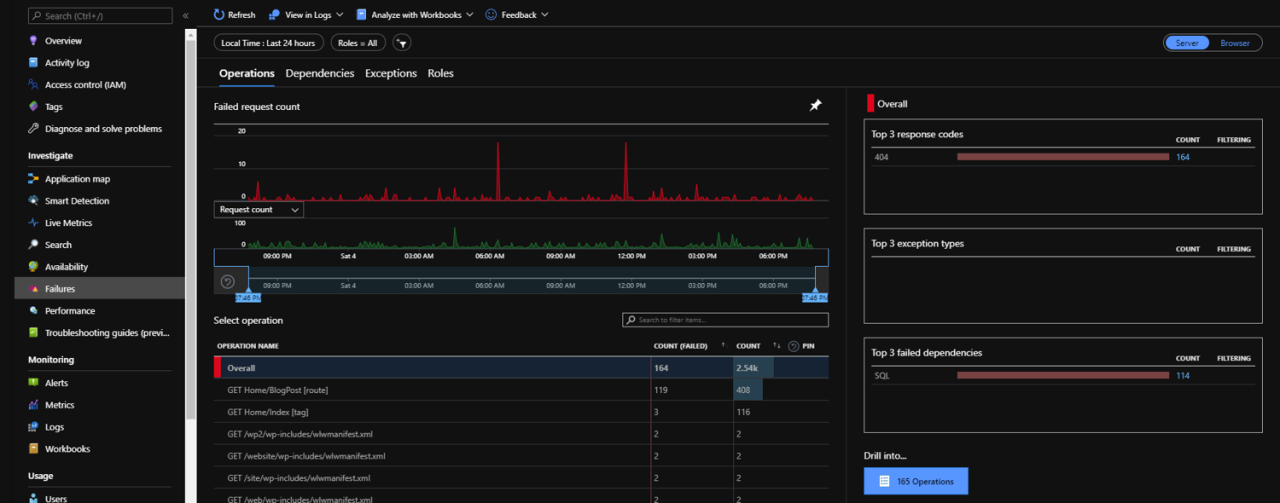

Tracking key metrics provides quantifiable data to evaluate the success of the implemented DevOps software. This data-driven approach enables informed decisions regarding optimization and further improvements. Without this step, improvements are merely guesswork. Examples of crucial metrics include deployment frequency, lead time for changes, mean time to recovery (MTTR), change failure rate, and application performance metrics such as response time and error rates.

Regularly monitoring these metrics with dashboards and reporting tools gives a clear picture of the software’s effectiveness. For example, a significant increase in deployment frequency coupled with a decrease in MTTR suggests the software is improving operational efficiency. Conversely, a persistently high change failure rate might indicate a need for further training or configuration adjustments.
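These figures can be computed from a simple deployment log. The sketch below assumes each production deployment is recorded with its first-commit time, deploy time, whether it failed, and when service was restored; the `Deployment` class and the data are hypothetical. It simplifies MTTR by measuring from the failed deployment to recovery, whereas many teams measure from incident detection.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import List, Optional


@dataclass
class Deployment:
    committed: datetime                  # first commit of the change
    deployed: datetime                   # when it reached production
    failed: bool = False                 # caused an incident or rollback
    restored: Optional[datetime] = None  # when service recovered, if it failed


def dora_metrics(deploys: List[Deployment], period_days: int) -> dict:
    """Rough DORA-style figures for a period; MTTR is measured from deploy to restore."""
    lead_hours = [(d.deployed - d.committed).total_seconds() / 3600 for d in deploys]
    failures = [d for d in deploys if d.failed]
    recovery_hours = [(d.restored - d.deployed).total_seconds() / 3600
                      for d in failures if d.restored is not None]
    return {
        "deploys_per_week": len(deploys) * 7 / period_days,
        "median_lead_time_h": median(lead_hours),
        "change_failure_rate": len(failures) / len(deploys),
        "mttr_h": sum(recovery_hours) / len(recovery_hours) if recovery_hours else 0.0,
    }


# Hypothetical two weeks of production deployments
t0, h, d = datetime(2024, 5, 1, 9), timedelta(hours=1), timedelta(days=1)
deploys = [
    Deployment(t0, t0 + 4 * h),
    Deployment(t0 + d, t0 + d + 2 * h, failed=True, restored=t0 + d + 3 * h),
    Deployment(t0 + 3 * d, t0 + 3 * d + 6 * h),
    Deployment(t0 + 5 * d, t0 + 5 * d + 8 * h),
]
print(dora_metrics(deploys, period_days=14))
# {'deploys_per_week': 2.0, 'median_lead_time_h': 5.0, 'change_failure_rate': 0.25, 'mttr_h': 1.0}
```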

Identifying and Addressing Shortcomings

Even the best-chosen software may reveal unforeseen shortcomings after implementation. Proactive identification and remediation of these issues are essential for maintaining the smooth operation of your DevOps pipeline. Regularly reviewing the tracked metrics, as mentioned above, is the first step. A sudden spike in MTTR, for instance, could signal a problem with the software’s monitoring capabilities or an issue with the automated rollback process.

Analyzing error logs and conducting regular team feedback sessions can further highlight areas needing attention. For example, if feedback reveals the software’s user interface is cumbersome, causing delays in workflow, this warrants immediate action, potentially involving user training or even exploring software customization options.

Continuous Optimization Process

Continuous optimization is not a one-time event but an iterative cycle of monitoring, analysis, and improvement. This ongoing process ensures the DevOps software continues to deliver optimal value. This process typically involves regular review meetings where the team analyzes the collected metrics, discusses observed issues, and proposes solutions. These solutions might include adjustments to the software configuration, implementation of new integrations, or even exploring alternative tools or workflows.

For instance, if the initial deployment frequency target isn’t met, the team could explore automating additional tasks within the pipeline, enhancing the software’s integration with other tools, or refining the deployment process itself. This iterative approach allows for continuous improvement and adaptation, ensuring the software remains a valuable asset to the organization.

Closing Notes

Ultimately, finding a faster way to evaluate DevOps software isn’t just about saving time; it’s about making informed decisions that directly affect your team’s productivity and your project’s success. By applying the strategies outlined here, from streamlining your process to leveraging automation and focusing on key features, you can significantly reduce evaluation time without compromising the thoroughness that matters. The right tools are instrumental in achieving your DevOps goals, and this approach will help you find them efficiently and effectively.

Frequently Asked Questions

What if I don’t have a dedicated DevOps team?

Even smaller teams can benefit from streamlined evaluation. Focus on essential features, leverage free trials effectively, and involve key stakeholders early in the process.

How do I handle conflicting stakeholder requirements?

Prioritize requirements based on business impact and feasibility. Use a weighted scoring system to compare different software options against these priorities.

What are the risks of rushing the evaluation process?

Choosing the wrong software can lead to integration issues, reduced productivity, increased costs, and ultimately, project failure. A balanced approach prioritizes speed without sacrificing thoroughness.

How can I ensure the chosen software integrates with our existing systems?

Thoroughly check vendor documentation for compatibility information. Request demos that show integration with your current infrastructure and tools, and test those integrations during the trial period.