A Growing Nuisance: How to Fend Off Bad Bots 2

Bad bots are a growing nuisance, and that’s the harsh reality facing many website owners today. These pesky programs, from simple scrapers to sophisticated DDoS attackers, are constantly evolving, finding new ways to wreak havoc. This post dives deep into identifying, understanding, and ultimately defeating these digital villains, equipping you with the knowledge and strategies to protect your online presence.

We’ll explore the various types of bad bots, examining their methods and the devastating consequences of their actions – from financial losses and reputational damage to crippling service disruptions. Then, we’ll arm you with both technical and non-technical defenses, from implementing robust CAPTCHAs and rate limiting to conducting regular security audits and educating your users. We’ll even peek into the future of bot warfare, anticipating emerging threats and exploring cutting-edge preventative measures.

Identifying Bad Bots

The internet, a boundless landscape of opportunity, is unfortunately also a haven for malicious actors. These actors deploy sophisticated bots to wreak havoc, from stealing data to crippling online services. Understanding how these bad bots operate is the first step in effectively defending against them. This involves recognizing their common traits, infiltration methods, and the diverse forms they take.

Malicious bots share several common characteristics. They often exhibit unusual patterns of activity, such as making extremely rapid requests, accessing pages without human-like browsing behavior, or targeting specific data points repeatedly. They typically mask their origins through proxies or VPNs, making tracing their source difficult. Their actions are usually automated, lacking the variability and unpredictability of human users. They also often ignore robots.txt directives, demonstrating a blatant disregard for website rules.
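One of these traits, ignoring robots.txt, is fairly easy to check from your own logs. The sketch below uses Python’s standard `urllib.robotparser` to count how often each client requests a disallowed path. The robots.txt rules, the log format, and the helper names are assumptions made up for illustration, and a hit on a disallowed path is only a signal, not proof of a bad bot.

```python
from collections import Counter
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules, used only for this illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /private/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content that is already in memory."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def count_violations(parser, log_entries):
    """Count requests per client that hit a disallowed path.

    log_entries is an iterable of (client_ip, user_agent, path) tuples,
    an assumed log format for this sketch.
    """
    violations = Counter()
    for client_ip, user_agent, path in log_entries:
        if not parser.can_fetch(user_agent, path):
            violations[client_ip] += 1
    return violations


parser = build_parser(ROBOTS_TXT)
sample_log = [
    ("203.0.113.7", "ExampleCrawler/1.0", "/admin/login"),
    ("203.0.113.7", "ExampleCrawler/1.0", "/private/report"),
    ("198.51.100.4", "Mozilla/5.0", "/products"),
]
violations = count_violations(parser, sample_log)
```

Clients with a high violation count are good candidates for closer inspection or for the rate limiting and blocking techniques covered later.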

Methods of Infiltration

Bad bots employ various techniques to infiltrate systems. Many leverage vulnerabilities in web applications or outdated software to gain unauthorized access. Others exploit known weaknesses in security protocols or use brute-force attacks to guess passwords. Some sophisticated bots use social engineering tactics, mimicking legitimate user behavior to gain trust and access sensitive information. Advanced bots may even employ machine learning techniques to adapt to evolving security measures.

Types of Bad Bots

Scraping bots, spam bots, and DDoS bots represent distinct categories of malicious bots, each with unique characteristics and objectives. Scraping bots automatically extract large amounts of data from websites, often violating terms of service and potentially causing performance issues. Spam bots flood websites, comment sections, or email inboxes with unwanted content, disrupting user experience and potentially spreading malware. DDoS (Distributed Denial of Service) bots overwhelm a target server with traffic, rendering it inaccessible to legitimate users. While all are harmful, their targets and methods differ significantly.

Challenges in Detecting Sophisticated Bad Bots

Identifying sophisticated bad bots presents significant challenges. These bots often mimic human behavior closely, making it difficult to distinguish them from legitimate users. They employ advanced techniques like rotating IP addresses, using headless browsers, and employing CAPTCHA-solving services to bypass detection mechanisms. The constantly evolving nature of these bots necessitates continuous adaptation and improvement of detection strategies.

Detection Methods for Various Bot Types

| Bot Type | Detection Method | Example | Effectiveness |
|---|---|---|---|
| Scraping Bots | Unusual request patterns, high-frequency requests to specific URLs, ignoring robots.txt | Repeated requests for product data or user profiles within a short time frame. | High, but can be circumvented by sophisticated bots. |
| Spam Bots | Automated submissions of identical comments or forms, suspicious email patterns, use of proxy servers | Posting identical spam comments across multiple websites. | Moderate to high, depending on the sophistication of the spam bot. |
| DDoS Bots | Sudden surges in traffic from multiple IP addresses, unusual traffic patterns, targeting specific server ports | A large number of requests originating from geographically diverse locations targeting a single server. | High, often requires specialized DDoS mitigation techniques. |
| Sophisticated Bots | Behavioral analysis, machine learning models, CAPTCHAs, IP reputation databases | Bots using rotating IPs, headless browsers, and CAPTCHA-solving services. | Moderate, requires a multi-layered approach. |

The Impact of Bad Bots

The rise of sophisticated bad bots poses a significant threat to businesses and individuals alike. Their impact extends far beyond simple annoyance, leading to substantial financial losses, reputational damage, and operational disruptions. Understanding the full scope of this damage is crucial for implementing effective countermeasures.

Bad bots inflict a wide range of harms, impacting businesses across multiple dimensions. These impacts can be categorized into financial, reputational, and operational losses, each with potentially devastating consequences.

Financial Losses Caused by Bad Bots

Malicious bots are responsible for significant financial losses across various sectors. Credit card fraud, fueled by bots that automate the process of stealing and using card details, is a major concern. Similarly, bots can manipulate online pricing, generating fraudulent transactions and draining resources. Ticket scalpers use bots to buy up large quantities of event tickets, then resell them at inflated prices, depriving legitimate customers and causing significant revenue loss for event organizers.

Furthermore, bots can engage in sophisticated ad fraud, generating fake clicks and impressions that cost advertisers billions of dollars annually. These activities represent a considerable drain on resources and profits. For example, Juniper Research estimated that advertisers would lose $42 billion to ad fraud in 2019.

Reputational Damage Caused by Bad Bots

Beyond the direct financial losses, bad bot activity can severely damage a company’s reputation. Account takeovers, often facilitated by credential stuffing attacks using bots, can lead to data breaches and compromised customer information. This can erode customer trust, leading to lost business and negative publicity. Furthermore, bots can be used to generate fake reviews or spread misinformation about a company or its products, harming its brand image and consumer perception.

A single large-scale data breach or a series of negative fake reviews can have long-term, damaging effects on a company’s ability to attract and retain customers.

Security Breaches Attributed to Bad Bots

Bad bots are frequently instrumental in major security breaches. They can be used to perform brute-force attacks, systematically attempting various password combinations to gain unauthorized access to accounts. This is often compounded by vulnerabilities in websites and applications, allowing bots to exploit weaknesses and gain access to sensitive data. For example, the 2017 Equifax data breach, which exposed the personal information of roughly 147 million people, began with the exploitation of a known, unpatched vulnerability in the Apache Struts web framework. Automated scanning for known vulnerabilities like this one is a common way for attackers to find targets at scale. The resulting legal costs, reputational damage, and loss of customer trust were enormous.

Disruption to Service Caused by Bot Attacks

Bots can overwhelm websites and online services with massive amounts of traffic, leading to denial-of-service (DoS) attacks. These attacks render websites inaccessible to legitimate users, disrupting business operations and causing significant losses in revenue and productivity. This can be particularly damaging for e-commerce businesses, where even short periods of downtime can result in lost sales and frustrated customers. Furthermore, some bots are designed to scrape data from websites, overwhelming the server resources and slowing down the site for all users.

Categorized Impacts of Bad Bots

- Financial: Ad fraud, credit card fraud, price manipulation, ticket scalping, data breaches leading to financial penalties and legal costs.

- Reputational: Account takeovers, data breaches leading to loss of customer trust, fake reviews and negative publicity, spread of misinformation.

- Operational: Denial-of-service attacks, data scraping causing service disruptions, increased security costs and time spent managing bot threats.

Defensive Strategies: Technical Solutions

So, you’ve identified the bad bots targeting your website. Now what? The good news is that there are several effective technical solutions you can implement to significantly reduce their impact. This isn’t about completely eliminating bots – some are legitimate – but about creating a robust defense against malicious actors. Let’s explore some key strategies.

Robust CAPTCHA Implementation

A CAPTCHA (Completely Automated Public Turing test to tell Computers and Humans Apart) is a first line of defense. A well-designed CAPTCHA presents a challenge easily solved by humans but difficult for bots to overcome. Simple text-based CAPTCHAs are easily bypassed by advanced bots, so consider more sophisticated options. These might include image-based CAPTCHAs requiring identification of objects or solving simple math problems, or even reCAPTCHA, which leverages Google’s advanced bot detection technology.

Remember to choose a CAPTCHA that’s accessible to users with disabilities, adhering to WCAG guidelines. Regularly update your CAPTCHA system to stay ahead of evolving bot techniques.
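Whichever CAPTCHA you choose, the answer has to be checked on the server, never only in the browser. As a rough sketch, here is how a server-side check against reCAPTCHA’s `siteverify` endpoint might look using only the Python standard library. The function names and the injectable `post` parameter are assumptions for illustration. The secret key comes from your own reCAPTCHA configuration, and the sketch fails closed if the verification service cannot be reached.

```python
import json
import urllib.parse
import urllib.request

VERIFY_URL = "https://www.google.com/recaptcha/api/siteverify"


def _http_post(url, fields):
    """POST form fields and return the decoded JSON response."""
    data = urllib.parse.urlencode(fields).encode("ascii")
    with urllib.request.urlopen(url, data=data, timeout=5) as resp:
        return json.loads(resp.read().decode("utf-8"))


def verify_captcha(token, secret, post=_http_post):
    """Return True only if the verification service confirms the token.

    `post` is injectable so the function can be exercised without
    network access (an assumption of this sketch, not part of any API).
    """
    if not token:
        return False  # no CAPTCHA response was submitted at all
    try:
        result = post(VERIFY_URL, {"secret": secret, "response": token})
    except (OSError, ValueError):
        return False  # network or parsing problem: fail closed
    return bool(result.get("success"))
```

In a form handler you would call `verify_captcha(submitted_token, your_secret_key)` before processing the submission and reject the request when it returns `False`.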

Rate Limiting Implementation

Rate limiting is a crucial technique for controlling the number of requests your server receives from a single IP address or user agent within a specific time frame. By setting thresholds, you can identify and block suspicious activity. For example, if a single IP address sends hundreds of requests per second, it’s highly likely to be a bot.

Here’s a step-by-step guide for implementing basic rate limiting:

- Identify the Request Source: Use your server’s logging capabilities to identify the IP address and user agent of each request.

- Set Thresholds: Determine acceptable request rates per IP address or user agent within a defined time window (e.g., 10 requests per minute).

- Implement a Counter: Track the number of requests received from each source within the time window.

- Block Exceeding Requests: If a source exceeds the predefined threshold, block further requests from that source for a specified duration.

- Monitor and Adjust: Regularly monitor your rate limiting system and adjust thresholds as needed based on observed traffic patterns.
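The steps above can be sketched as a small in-memory sliding-window limiter. This is a minimal illustration, assuming a single server process. The class name, default thresholds, and block duration are made up for the example, and a production setup would more likely rely on the web server, a reverse proxy, or a shared store.

```python
import time
from collections import defaultdict, deque


class RateLimiter:
    """Sliding-window limiter keyed by request source (e.g. an IP address)."""

    def __init__(self, max_requests=10, window_seconds=60.0, block_seconds=300.0):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.block_seconds = block_seconds
        self._hits = defaultdict(deque)   # source -> timestamps of recent requests
        self._blocked_until = {}          # source -> time the block expires

    def allow(self, source, now=None):
        """Return True if the request may proceed, False if it is blocked."""
        now = time.monotonic() if now is None else now

        # Still inside a block period from an earlier violation.
        blocked_until = self._blocked_until.get(source)
        if blocked_until is not None and now < blocked_until:
            return False

        # Counter: drop timestamps that have fallen out of the window.
        hits = self._hits[source]
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()

        # Threshold exceeded: block this source for a while.
        if len(hits) >= self.max_requests:
            self._blocked_until[source] = now + self.block_seconds
            return False

        hits.append(now)
        return True
```

Calling `limiter.allow(client_ip)` at the start of each request handler is enough to try it out. The optional `now` argument exists only so the behavior can be tested without waiting for real time to pass.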

Honeypot Deployment

Honeypots are cleverly disguised elements on your website designed to attract and trap bots. These are typically hidden form fields or links that are not visible to human users. If a bot interacts with a honeypot, it reveals its automated nature. You can use this information to identify and block the bot. For instance, you could include a form field with a tempting name like “email” that is hidden from human visitors with CSS. A bot might automatically fill this field, while a human would never see it and so would leave it empty.
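On the server side, the honeypot check itself is tiny. Here is a minimal sketch, assuming the submitted form arrives as a dictionary of strings and that the hidden field is named `website` (a hypothetical name chosen for this example):

```python
# Name of the hidden form field; a hypothetical choice for this sketch.
# The matching <input> would be hidden from human visitors with CSS.
HONEYPOT_FIELD = "website"


def is_probable_bot(form_data: dict) -> bool:
    """Return True if the hidden honeypot field was filled in."""
    value = form_data.get(HONEYPOT_FIELD, "")
    return bool(value.strip())


human_submission = {"name": "Ada", "comment": "Great post!", "website": ""}
bot_submission = {"name": "x", "comment": "Buy now", "website": "http://spam.example"}
```

A submission flagged this way can be silently discarded, so the bot gets no hint about why it failed.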

Effective IP Address Blocking

IP address blocking is a straightforward method to prevent requests from known malicious IP addresses. However, it’s crucial to use this method cautiously, as it can inadvertently block legitimate users. Dynamic IP addresses further complicate this approach. Effective IP address blocking requires monitoring your server logs, identifying suspicious IP addresses associated with bot activity, and adding them to a blocklist.

Many web servers offer built-in mechanisms for IP address blocking, or you can use third-party tools and services. Regularly review and update your blocklist.
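If you maintain the blocklist in application code instead, Python’s standard `ipaddress` module handles both single addresses and whole ranges. The class below is a minimal sketch; its name and the sample addresses (taken from the reserved documentation ranges) are assumptions for illustration.

```python
import ipaddress


class IpBlocklist:
    """Blocklist of single IP addresses and CIDR ranges."""

    def __init__(self, entries=()):
        self._networks = []
        for entry in entries:
            self.add(entry)

    def add(self, entry: str) -> None:
        """Add an address ("198.51.100.23") or a range ("203.0.113.0/24")."""
        self._networks.append(ipaddress.ip_network(entry, strict=False))

    def is_blocked(self, ip: str) -> bool:
        """Return True if the address falls inside any blocked network."""
        try:
            address = ipaddress.ip_address(ip)
        except ValueError:
            return False  # malformed input: handle it elsewhere
        return any(address in network for network in self._networks)


blocklist = IpBlocklist(["203.0.113.0/24", "198.51.100.23"])
```

Blocking a whole range is where legitimate users are most easily caught by accident, so keep ranges as narrow as your logs justify.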

Utilizing Bot Detection Services

Many services specialize in identifying and mitigating bot traffic. These services often employ advanced machine learning algorithms to analyze user behavior and identify bots with high accuracy. They typically offer features such as IP address reputation checks, user agent analysis, and behavioral pattern detection. These services often integrate with your website through APIs, providing real-time bot detection and mitigation capabilities.

Examples include Imperva, DataDome, and Distil Networks (acquired by Imperva in 2019). Choosing a service depends on your specific needs and budget. Consider factors such as the accuracy of detection, ease of integration, and level of support offered.

Defensive Strategies: Non-Technical Measures

Beyond the technical solutions, a robust defense against bad bots requires a multi-faceted approach encompassing proactive measures and a commitment to ongoing vigilance. Ignoring the non-technical aspects leaves your website vulnerable, even with the most sophisticated firewalls in place. A layered security approach, incorporating these non-technical strategies, significantly enhances overall protection.

Regular Security Audits

Regular security audits are crucial for identifying vulnerabilities before bad bots can exploit them. These audits should be more than just a cursory check; they need to be thorough examinations of your website’s code, infrastructure, and security practices. Think of it like a yearly health check-up for your website. A comprehensive audit might include penetration testing (simulated attacks to find weaknesses), vulnerability scanning (automated checks for known vulnerabilities), and a review of your security policies and procedures.

The frequency of these audits depends on your website’s size, complexity, and the sensitivity of the data it handles. For high-traffic, e-commerce sites, quarterly audits might be necessary, while smaller sites might manage with annual reviews. The goal is to identify and address weaknesses before they become points of entry for malicious bots.

User Education and Awareness

Educating your users about the threat of bad bots is a surprisingly effective defensive strategy. While it won’t stop all attacks, it can significantly reduce the success rate of phishing attempts and other socially engineered attacks. This involves clearly communicating the risks associated with clicking suspicious links, downloading unknown files, and entering personal information on untrusted websites. Consider creating informative blog posts, FAQs, or even short videos explaining these risks in plain language.

Regularly updating this information is crucial, as new threats constantly emerge. Training your staff is also critical, as they are often the first line of defense against social engineering attacks targeting your website. A well-informed user base is less likely to fall victim to bot-driven scams, thereby reducing the impact of bot attacks.

Improving Website Security Protocols

Strengthening your website’s security protocols goes beyond simply installing security software. It requires a holistic approach to secure coding practices, robust password policies, and the implementation of multi-factor authentication (MFA) where possible. For example, enforcing strong password requirements, using HTTPS, and regularly updating your website’s software and plugins are fundamental steps. Implementing MFA adds an extra layer of security by requiring users to provide multiple forms of authentication, such as a password and a one-time code sent to their phone, making it significantly harder for bots to gain unauthorized access.

Regularly reviewing and updating your website’s security protocols, based on the latest security best practices and emerging threats, is essential for maintaining a robust defense.

Website Security Enhancement Checklist

Before implementing any changes, it’s crucial to have a clear understanding of your current security posture. This checklist provides a starting point for enhancing your website’s security:

- Implement HTTPS to encrypt all communications between your website and users.

- Use a strong, unique password for each website account.

- Enable multi-factor authentication (MFA) for all administrative accounts.

- Regularly update all website software and plugins.

- Install and maintain a web application firewall (WAF).

- Conduct regular security audits and penetration testing.

- Implement robust input validation to prevent injection attacks.

- Monitor website logs for suspicious activity.

- Educate users about the risks of phishing and other online threats.

- Develop and implement a comprehensive incident response plan.

Employing a Security Expert

While many security measures can be implemented in-house, employing a security expert offers significant advantages. A skilled professional can provide a comprehensive assessment of your website’s security posture, identify vulnerabilities that might be missed by internal teams, and recommend and implement appropriate security measures. They also stay abreast of the latest threats and vulnerabilities, ensuring your website remains protected against emerging attacks.

Consider it an investment in protecting your website’s reputation, data, and ultimately, your business. The cost of a security breach can far outweigh the cost of hiring an expert to prevent one.

Emerging Threats and Future Defenses

The battle against bad bots is a constantly evolving arms race. As bot technology advances, so too must our defenses. Understanding emerging threats and proactively developing future defenses is crucial to maintaining a secure online environment. This section explores the latest trends in bad bot technology, the impact of AI on bot detection, and potential strategies for future-proofing our systems against increasingly sophisticated attacks.

Emerging Trends in Bad Bot Technology

Bad bots are becoming increasingly sophisticated, employing advanced techniques to evade detection. We’re seeing a rise in the use of residential proxies, making it harder to pinpoint the bot’s origin. Bots are also leveraging machine learning to adapt their behavior, mimicking human interactions more effectively. Furthermore, the use of serverless functions and decentralized networks allows bots to operate with greater anonymity and resilience, making them harder to track and block.

The integration of bots with legitimate services, such as social media platforms, also presents a significant challenge. This allows bots to blend seamlessly into normal user activity, further complicating detection efforts.

The Impact of AI on Bot Detection and Prevention

The rise of AI presents both challenges and opportunities in the fight against bad bots. On one hand, AI-powered bots are becoming more sophisticated, employing techniques like deep learning to generate realistic and unpredictable behavior. On the other hand, AI also offers powerful tools for detection and prevention. Machine learning algorithms can analyze vast amounts of data to identify patterns indicative of bot activity.

AI-driven systems can learn and adapt to new bot techniques, making them more effective over time. For example, AI can be used to analyze user behavior patterns, identifying anomalies that suggest automated activity. This could involve tracking mouse movements, typing speeds, and navigation patterns. Real-time analysis coupled with historical data allows the system to refine its detection capabilities and improve accuracy.
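Real behavioral analysis relies on trained models, but the underlying idea can be shown with a much simpler statistic. The sketch below measures how regular the gaps between a client’s requests are, on the assumption that automated traffic tends to be more evenly spaced than human browsing. The function names and the 0.1 threshold are illustrative assumptions, not tuned values.

```python
from statistics import mean, pstdev


def timing_variability(timestamps):
    """Coefficient of variation of the gaps between requests.

    Returns None when there are too few requests to judge.
    """
    if len(timestamps) < 3:
        return None
    ordered = sorted(timestamps)
    gaps = [later - earlier for earlier, later in zip(ordered, ordered[1:])]
    average_gap = mean(gaps)
    if average_gap == 0:
        return 0.0  # all requests arrived at the same instant
    return pstdev(gaps) / average_gap


def looks_automated(timestamps, threshold=0.1):
    """Flag clients whose request timing is suspiciously regular."""
    variability = timing_variability(timestamps)
    return variability is not None and variability < threshold


bot_like = [0.0, 1.0, 2.0, 3.0, 4.0]      # one request every second
human_like = [0.0, 2.5, 3.1, 9.8, 11.0]   # irregular pauses between pages
```

A sophisticated bot can add random delays to defeat this single check, which is why it makes sense only as one signal among several.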

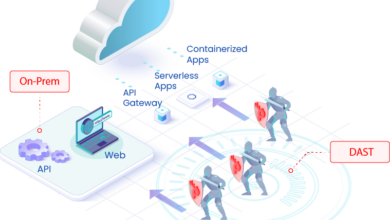

A Hypothetical Proactive Bot Defense System

Imagine a system that uses a multi-layered approach combining several AI-powered components. The first layer would be a behavioral analysis engine, constantly monitoring user interactions for anomalies. This engine would analyze a wide range of metrics, including request frequency, navigation patterns, and the timing of interactions.

A second layer would involve a machine learning model trained to identify known bot signatures and patterns. This layer would leverage a constantly updated database of known bot techniques and characteristics. The third layer would focus on anomaly detection using unsupervised learning algorithms, capable of identifying novel bot behaviors that haven’t been seen before.

Finally, a feedback loop would allow the system to learn from its mistakes and refine its detection capabilities, continuously improving its accuracy over time. This feedback loop would be essential for maintaining the system’s effectiveness against evolving bot techniques.
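To make the layering concrete, here is a deliberately simplified sketch in which fixed rules stand in for the machine learning components described above. The class names, weights, thresholds, and the `timing_variability` input are all assumptions invented for this illustration. The point is only the structure: several independent signals feed one score, and confirmed detections feed back into the signature layer.

```python
from dataclasses import dataclass, field


@dataclass
class RequestProfile:
    user_agent: str
    requests_per_minute: float
    timing_variability: float  # lower means more machine-like regularity


@dataclass
class LayeredDetector:
    known_bad_agents: set = field(default_factory=set)
    rate_threshold: float = 120.0
    variability_threshold: float = 0.1

    def score(self, profile: RequestProfile) -> float:
        """Combine the layers into a single suspicion score."""
        total = 0.0
        # Signature layer: user agents already confirmed as bots.
        if profile.user_agent.lower() in self.known_bad_agents:
            total += 0.5
        # Behavioral layer: unusually high request rate.
        if profile.requests_per_minute > self.rate_threshold:
            total += 0.3
        # Anomaly layer (stand-in): unusually regular request timing.
        if profile.timing_variability < self.variability_threshold:
            total += 0.3
        return total

    def is_bot(self, profile: RequestProfile, cutoff: float = 0.5) -> bool:
        return self.score(profile) >= cutoff

    def feedback(self, profile: RequestProfile, was_bot: bool) -> None:
        """Feedback loop: remember the agents of confirmed bots."""
        if was_bot:
            self.known_bad_agents.add(profile.user_agent.lower())


detector = LayeredDetector()
fast_client = RequestProfile("ExampleScraper/2.0", 200.0, 0.8)
```

With these weights a single weak signal is not enough to flag a client, but once an operator confirms a detection through `feedback`, the same user agent is flagged on its next visit.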

Future Strategies for Combating Advanced Bot Attacks

Future strategies must focus on proactive measures and collaboration. This includes developing more sophisticated behavioral analysis techniques, utilizing advanced machine learning models, and fostering greater information sharing among organizations. Blockchain technology could also play a role in verifying user identities and tracking bot activity. Furthermore, integrating bot detection systems with other security measures, such as web application firewalls and intrusion detection systems, is essential for a comprehensive defense.

The development of decentralized and distributed bot detection networks could further enhance resilience against sophisticated attacks. This would involve multiple organizations sharing data and collaborating to identify and mitigate bot threats.

Visual Representation of a Future Bot Defense System

Imagine a dynamic, multi-layered shield. The outermost layer represents the behavioral analysis engine, constantly scanning incoming traffic. The next layer is a complex network of interconnected nodes, each representing a machine learning model trained on different aspects of bot detection. These nodes are constantly exchanging information and adapting to new threats. At the core lies a central intelligence system, analyzing data from all layers and coordinating the overall defense.

This system constantly learns and adapts, dynamically adjusting its defenses based on real-time threat analysis. The shield is not static; it constantly evolves, expanding and strengthening to counter emerging bot threats.

Conclusion

The battle against bad bots is an ongoing arms race, but by understanding their tactics and employing a multi-layered defense strategy, you can significantly reduce your vulnerability. Remember, a proactive approach combining technical solutions, strong security practices, and user awareness is your best bet. Don’t let bad bots win – take control of your online security and safeguard your website’s future.

Question Bank

What is the difference between a scraper bot and a spam bot?

Scrapers automate data collection from websites, often for unethical purposes. Spam bots flood websites with unwanted comments or registrations.

Can I completely eliminate bad bot activity?

Complete elimination is nearly impossible, but a robust strategy significantly reduces their impact.

How often should I update my website’s security plugins?

Regularly, ideally whenever updates are released. Outdated plugins are prime targets for exploitation.

Are free bot detection services reliable?

Free services offer basic protection, but paid services often provide more comprehensive features and support.