A Recipe for Avoiding Software Failures

Ever felt the stomach-churning dread of a major software crash? Most of us have been there, watching helplessly as carefully crafted code crumbles. Avoiding failures isn’t just about dodging embarrassing glitches; it’s about building robust, reliable systems that hold up over time and under real user demand. This post dives into the practical strategies, design principles, and testing methodologies that help keep your projects not only functional but also resilient.

From understanding the root causes of common failures – be it design flaws, coding errors, or infrastructure hiccups – to implementing robust design principles and rigorous testing, we’ll cover it all. We’ll explore effective deployment and monitoring strategies, incident management plans, and the critical role of human factors in preventing those dreaded software meltdowns. Get ready to level up your software development game!

Understanding Software Failure Causes

Software failures, those frustrating moments when our carefully crafted applications crash, malfunction, or simply don’t behave as expected, are unfortunately a common occurrence. Understanding the root causes of these failures is crucial for building more robust and reliable software. This involves a multifaceted approach, encompassing everything from the initial design phase to the ongoing maintenance and support of the application.

Ignoring these causes can lead to significant financial losses, reputational damage, and even safety hazards in critical systems.

Common Software Failure Categories

Software failures rarely stem from a single, isolated cause. Instead, they are often the result of a complex interplay of factors. We can broadly categorize these causes into three major groups: design flaws, coding errors, and infrastructure issues. Let’s explore each category in more detail. Design flaws represent fundamental weaknesses in the architecture or logic of the software.

Coding errors are mistakes made during the implementation phase, introducing bugs and vulnerabilities into the codebase. Infrastructure issues encompass problems with the underlying hardware, network, or operating system that support the software.

Examples of Real-World Software Failures

Learning from past mistakes is a powerful tool in preventing future failures. Examining real-world examples helps us understand the consequences of neglecting proper software development practices. The following table illustrates several notable instances, highlighting the failure type, description, root cause, and resulting impact.

| Failure Type | Description | Root Cause | Impact |
|---|---|---|---|
| Design Flaw | The Year 2000 problem (Y2K) | Insufficient space allocated for storing dates (using only two digits for the year) in many software systems. | Widespread fears of global system failures; costly remediation efforts. |
| Coding Error | The Therac-25 radiation therapy machine accidents | A race condition in the software controlling the machine’s radiation dose, leading to massive overdoses. | Patient deaths and serious injuries. |
| Infrastructure Issue | The 2012 London Olympics website crash | The website infrastructure was unable to handle the high volume of concurrent users attempting to access it. | Significant disruption to ticket sales and public access to information. |
| Design Flaw & Coding Error | The Ariane 5 rocket failure | A software error related to the conversion of a 64-bit floating-point number to a 16-bit integer, causing an exception and system failure. This was exacerbated by a lack of robust error handling in the design. | Complete loss of the rocket and its payload. |

Proactive Identification of Failure Points

Preventing software failures requires a proactive approach that integrates quality assurance throughout the entire software development lifecycle (SDLC). This includes rigorous testing at each stage, code reviews to catch errors early, and static and dynamic analysis tools to detect vulnerabilities. Robust error handling, designing for scalability and resilience, and adherence to established coding best practices further reduce the risk of failures.

Regular security audits and penetration testing are also vital for identifying and mitigating potential security vulnerabilities. Adopting a culture of continuous improvement, where feedback from users and developers is actively sought and incorporated, is key to building more resilient software systems.

Robust Design Principles

Building software that doesn’t crumble under pressure requires more than just writing code; it demands a robust design philosophy. This involves proactively anticipating potential problems and building systems that can gracefully handle unexpected situations, minimizing disruptions and data loss. This section explores key principles and best practices for achieving robust software.

Robust software design centers around three pillars: modularity, fault tolerance, and defensive programming. Modularity breaks down complex systems into smaller, independent modules, making them easier to understand, test, and maintain. Fault tolerance incorporates mechanisms to detect and recover from errors, preventing cascading failures. Defensive programming involves writing code that anticipates and handles potential errors gracefully, preventing crashes and unexpected behavior.

Modularity and its Benefits

Modularity is the cornerstone of robust software. By decomposing a large application into smaller, self-contained modules, we improve code organization, reduce complexity, and enhance testability. Each module has a specific responsibility, reducing the likelihood of unintended side effects. Changes within one module are less likely to impact others, simplifying maintenance and reducing the risk of introducing new bugs.

Consider a large e-commerce platform: modularity allows for independent development and deployment of features like the shopping cart, payment gateway, and user accounts. If a bug arises in the payment gateway, it won’t necessarily bring down the entire platform.

Fault Tolerance Mechanisms

Fault tolerance goes beyond simply handling errors; it aims to prevent failures from escalating and impacting the entire system. This involves techniques like redundancy (having backup systems ready to take over if one fails), error detection (monitoring for anomalies and potential problems), and error recovery (mechanisms to automatically restore functionality after a failure). For example, a database system might use replication to create multiple copies of the data, ensuring availability even if one server fails.

Load balancing distributes traffic across multiple servers, preventing overload on a single machine. These mechanisms significantly enhance the resilience of the software.
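To make redundancy, error detection, and recovery concrete, here is a minimal sketch, assuming each replica can be modelled as a Python callable that raises `ConnectionError` when its server is unreachable. `FailoverPool` and `AllReplicasFailed` are hypothetical names. The pool rotates its starting replica on every call (a simple round-robin form of load balancing) and falls through to the next replica when one fails.

```python
import itertools
from typing import Callable, List, Sequence


class AllReplicasFailed(Exception):
    """Raised when every replica in the pool failed for a single request."""


class FailoverPool:
    """Round-robin load balancing with failover across redundant replicas."""

    def __init__(self, replicas: Sequence[Callable[[str], str]]) -> None:
        if not replicas:
            raise ValueError("at least one replica is required")
        self._replicas = list(replicas)
        # Cycles 0, 1, 2, ... so each request starts at a different replica.
        self._next_start = itertools.cycle(range(len(self._replicas)))

    def call(self, request: str) -> str:
        start = next(self._next_start)
        errors: List[Exception] = []
        for offset in range(len(self._replicas)):
            replica = self._replicas[(start + offset) % len(self._replicas)]
            try:
                return replica(request)
            except ConnectionError as exc:
                # Error detection: note the failure, then recover by trying the next replica.
                errors.append(exc)
        raise AllReplicasFailed(f"all {len(errors)} replicas failed for {request!r}")


# Usage: one of three hypothetical database replicas is down.
def healthy(name: str) -> Callable[[str], str]:
    return lambda request: f"{name} handled {request}"


def down(request: str) -> str:
    raise ConnectionError("server unreachable")


pool = FailoverPool([healthy("db-1"), down, healthy("db-3")])
print(pool.call("q1"))  # db-1 handled q1
print(pool.call("q2"))  # db-3 handled q2 (the second replica is down)
```

A production load balancer would also track replica health between requests instead of discovering failures only when a call is made.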

Defensive Programming Techniques

Defensive programming is about anticipating potential problems and writing code that handles them gracefully. This includes input validation (checking user input for errors and unexpected values), error handling (using try-catch blocks or similar mechanisms to handle exceptions), and assertions (checking assumptions about the program’s state). For example, before performing a calculation, you might check if a divisor is zero to prevent a division-by-zero error.

Clear, specific error messages speed up debugging and give users useful information. By anticipating potential issues and incorporating checks, we prevent unexpected crashes and data corruption.
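A minimal sketch of the three defensive techniques above (input validation, an explicit zero-divisor check, and an assertion), using a hypothetical `average_rate` helper. Returning `0.0` for a zero count is an assumed policy for illustration; raising an error may be the better choice where a zero count signals a bug.

```python
import math


def average_rate(total: float, count: int) -> float:
    """Return total / count after validating both inputs."""
    # Input validation: reject wrong types and impossible values with clear messages.
    if isinstance(count, bool) or not isinstance(count, int):
        raise TypeError(f"count must be an int, got {type(count).__name__}")
    if count < 0:
        raise ValueError(f"count must be non-negative, got {count}")
    if not math.isfinite(total):
        raise ValueError(f"total must be a finite number, got {total!r}")

    # Explicit zero-divisor check instead of letting ZeroDivisionError escape.
    # Returning 0.0 here is an assumed policy for this example.
    if count == 0:
        return 0.0

    result = total / count
    # Assertion: checks an internal assumption during development.
    assert math.isfinite(result), "dividing a finite total should stay finite"
    return result


print(average_rate(120.0, 4))  # 30.0
try:
    average_rate(120.0, -3)
except ValueError as err:
    print(f"Rejected input: {err}")  # Rejected input: count must be non-negative, got -3
```

Python strips `assert` statements when run with `-O`, so assertions document internal assumptions but should never replace input validation.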


Documentation and Maintainability Best Practices

Well-documented and easily maintainable code is crucial for long-term success. This involves writing clear, concise comments explaining the purpose and functionality of the code, using consistent naming conventions, and adhering to coding standards. Regular code reviews help catch potential problems early on, and automated testing ensures that changes don’t introduce new bugs. A well-structured codebase, employing meaningful variable names and clear function definitions, is easier to understand and modify, leading to reduced maintenance costs and improved reliability.

Comprehensive documentation, including API specifications and user manuals, facilitates the use and maintenance of the software.

Microservices vs. Monolithic Architecture: A Resilience Comparison

The choice between microservices and monolithic architectures significantly impacts resilience. Monolithic architectures, where all components are tightly coupled, are vulnerable to cascading failures: a bug in one component can bring down the entire system. Microservices, on the other hand, decompose the application into independent services. A failure in one microservice typically doesn’t affect others, improving overall resilience.

However, microservices introduce complexities in terms of inter-service communication and data consistency management. The optimal choice depends on the specific application’s requirements and scale. For high-availability, fault-tolerant systems, microservices often offer a more resilient approach. However, smaller applications might find monolithic architectures simpler to manage.
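The resilience benefit of microservices depends on callers containing a dependency’s failure rather than passing it on. A minimal sketch, assuming a hypothetical product page that calls an optional recommendation service: `with_fallback` and `render_product_page` are illustrative names, and the service is modelled as a callable that may raise `ConnectionError` or `TimeoutError`.

```python
import logging
from typing import Callable, List

logger = logging.getLogger("storefront")


def with_fallback(call: Callable[[], List[str]], fallback: List[str]) -> List[str]:
    """Call a dependent service, returning `fallback` if it is unavailable."""
    try:
        return call()
    except (ConnectionError, TimeoutError) as exc:
        # Contain the failure at the service boundary instead of propagating it.
        logger.warning("recommendation service unavailable: %s", exc)
        return fallback


def render_product_page(product_id: str,
                        fetch_recommendations: Callable[[], List[str]]) -> dict:
    # The core page does not depend on the optional service succeeding.
    recommendations = with_fallback(fetch_recommendations, fallback=[])
    return {"product": product_id, "recommendations": recommendations}


def broken_service() -> List[str]:
    raise TimeoutError("no response within 2s")


print(render_product_page("sku-42", broken_service))
# {'product': 'sku-42', 'recommendations': []}
```

In a monolith the same call would typically be an in-process function, so the boundary where a failure can be contained is less explicit.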

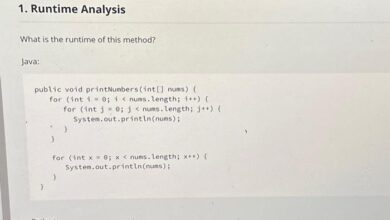

Testing and Quality Assurance

Rigorous testing is the bedrock of reliable software. Without a comprehensive testing strategy, even the most brilliantly designed software is vulnerable to unexpected failures. This section covers the role of testing and quality assurance in preventing software failures, explores different testing methodologies, and outlines a practical approach to building robust and dependable software. Testing isn’t just a final step; it’s an integral part of the entire development lifecycle.

Early and frequent testing helps identify and resolve issues before they escalate, saving time and resources and avoiding costly fixes later on. A well-defined testing strategy significantly reduces the risk of software failures in production.

Types of Software Testing

Effective software testing involves a multi-layered approach, encompassing various testing types to cover different aspects of the software. These include unit testing, integration testing, and system testing. Unit testing focuses on individual components, integration testing verifies the interaction between components, and system testing evaluates the entire system as a whole. Each layer plays a vital role in ensuring the overall quality and stability of the software.

Think of it like building a house: you wouldn’t skip checking individual bricks (unit testing) before putting up the walls (integration testing) and finally inspecting the entire structure (system testing).
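A minimal sketch of the difference between the first two layers, using Python’s built-in `unittest`. `parse_amount` and `Cart` are hypothetical components: the unit tests exercise `parse_amount` alone, while the integration test checks that `Cart` and `parse_amount` work together. Saved as a module, it runs with `python -m unittest` followed by the module name. A system test would drive the whole application and is not shown.

```python
import unittest
from decimal import Decimal
from typing import List


def parse_amount(text: str) -> int:
    """Parse a price such as '12.50' into integer cents."""
    value = Decimal(text.strip())
    if value < 0:
        raise ValueError(f"negative amount: {text!r}")
    return int(value * 100)


class Cart:
    """A second component that depends on parse_amount."""

    def __init__(self) -> None:
        self.items: List[int] = []

    def add(self, price_text: str) -> None:
        self.items.append(parse_amount(price_text))

    def total_cents(self) -> int:
        return sum(self.items)


class ParseAmountUnitTest(unittest.TestCase):
    """Unit tests: parse_amount checked on its own."""

    def test_parses_decimal_price(self) -> None:
        self.assertEqual(parse_amount("12.50"), 1250)

    def test_rejects_negative_amount(self) -> None:
        with self.assertRaises(ValueError):
            parse_amount("-1")


class CartIntegrationTest(unittest.TestCase):
    """Integration test: Cart and parse_amount working together."""

    def test_total_of_several_items(self) -> None:
        cart = Cart()
        cart.add("12.50")
        cart.add(" 0.99 ")
        self.assertEqual(cart.total_cents(), 1349)
```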

A Comprehensive Testing Strategy

A comprehensive testing strategy combines various testing methods to maximize coverage and identify a wide range of potential issues. Black-box testing assesses functionality without looking at the internal code, focusing on inputs and outputs. White-box testing, conversely, examines the internal structure and logic of the code, enabling more thorough checks for potential flaws. Regression testing is crucial for ensuring that new code changes haven’t introduced unintended consequences or broken existing functionality.

For example, imagine a banking application. Black-box testing would focus on verifying that a deposit transaction correctly updates the account balance, while white-box testing would examine the code to ensure that the database update is handled securely and efficiently. Regression testing would be performed after each new feature is added to ensure that existing deposit and withdrawal functionalities still work correctly.
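The banking example can be sketched as a table of black-box cases that is re-run after every change, which is what turns it into a regression suite. `Account`, `REGRESSION_CASES`, and `run_regression_suite` are hypothetical; `Decimal` is used instead of `float` so that monetary sums are exact. A real project would more likely express these cases with a test framework, for example pytest’s parametrized tests.

```python
from decimal import Decimal
from typing import List


class Account:
    """Hypothetical bank account used as the system under test."""

    def __init__(self, balance: Decimal = Decimal("0")) -> None:
        self.balance = balance

    def deposit(self, amount: Decimal) -> Decimal:
        if amount <= 0:
            raise ValueError("deposit must be positive")
        self.balance += amount
        return self.balance

    def withdraw(self, amount: Decimal) -> Decimal:
        if amount <= 0 or amount > self.balance:
            raise ValueError("invalid withdrawal amount")
        self.balance -= amount
        return self.balance


# Black-box cases: only inputs and the observable result are specified.
# (starting balance, operation, amount, expected balance afterwards)
REGRESSION_CASES = [
    (Decimal("100.00"), "deposit", Decimal("25.50"), Decimal("125.50")),
    (Decimal("0.00"), "deposit", Decimal("0.01"), Decimal("0.01")),
    (Decimal("100.00"), "withdraw", Decimal("40.00"), Decimal("60.00")),
]


def run_regression_suite() -> List[str]:
    """Re-run every recorded case and describe any that no longer pass."""
    failures = []
    for start, operation, amount, expected in REGRESSION_CASES:
        actual = getattr(Account(start), operation)(amount)
        if actual != expected:
            failures.append(f"{operation} {amount} from {start}: got {actual}, expected {expected}")
    return failures


# Run after every change; an empty list means existing behaviour still holds.
print(run_regression_suite() or "all regression cases pass")
```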

Quality Assurance Checklist

A robust quality assurance process involves multiple checkpoints to ensure software reliability and stability. This checklist provides a starting point for building a comprehensive QA process.

- Code Reviews: Regular code reviews by peers help identify potential issues early in the development cycle. This collaborative approach allows for diverse perspectives and helps catch errors that individual developers might miss.

- Static Analysis: Automated tools can analyze code without executing it, identifying potential bugs, security vulnerabilities, and style inconsistencies. This process can significantly improve code quality and reduce the number of defects that make it into testing phases.

- Automated Testing: Implementing automated tests for unit, integration, and regression testing accelerates the testing process, increases test coverage, and reduces the risk of human error. This is especially beneficial for large projects with frequent code changes.

- Performance Testing: Performance testing evaluates the software’s response time, scalability, and stability under various load conditions. This ensures that the software performs optimally even under high demand.

- Security Testing: Security testing identifies vulnerabilities and weaknesses that could be exploited by malicious actors. This includes penetration testing and vulnerability scanning to ensure the software’s security posture.

- Usability Testing: Usability testing involves observing users interacting with the software to identify areas for improvement in terms of user experience and ease of use. This helps ensure that the software is intuitive and easy to navigate.

- Documentation: Comprehensive documentation is crucial for maintainability and troubleshooting. This includes user manuals, technical documentation, and API specifications.
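Performance testing usually relies on dedicated load-testing tools, but the core idea of checking a latency budget can be sketched with the standard library. The function names and the 95th-percentile budget are assumptions for illustration, and timings taken on a single machine say nothing about behaviour under concurrent load.

```python
import statistics
import time
from typing import Callable


def p95_latency_ms(operation: Callable[[], object], runs: int = 200) -> float:
    """Run `operation` repeatedly and return its 95th-percentile latency in ms."""
    if runs < 2:
        raise ValueError("need at least two runs to estimate a percentile")
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        operation()
        samples.append((time.perf_counter() - start) * 1000.0)
    # n=20 yields 19 cut points; the last one is the 95th percentile.
    # The "inclusive" method keeps the estimate within the observed samples.
    return statistics.quantiles(samples, n=20, method="inclusive")[-1]


def within_budget(operation: Callable[[], object], budget_ms: float) -> bool:
    p95 = p95_latency_ms(operation)
    print(f"p95 latency {p95:.3f} ms, budget {budget_ms} ms")
    return p95 <= budget_ms


# Example: a hypothetical hot path that must stay under 50 ms at p95.
assert within_budget(lambda: sorted(range(10_000)), budget_ms=50.0)
```

Wiring a check like this into the automated test suite turns a performance regression into a failing build rather than a production surprise.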

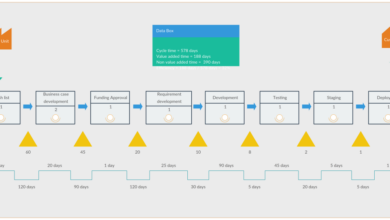

Deployment and Monitoring

Deploying software to production is the culmination of all your hard work, but it’s also a critical juncture where things can easily go wrong. A well-planned deployment strategy, coupled with robust monitoring, is crucial for ensuring a smooth transition and minimizing the impact of unforeseen issues. This section covers best practices for deployment and the essential monitoring needed to keep your software running smoothly. Deployment to production environments requires a meticulous approach to minimize risk and maximize stability.

A poorly executed deployment can lead to downtime, data loss, and a severely damaged user experience. Therefore, careful planning and execution are paramount.

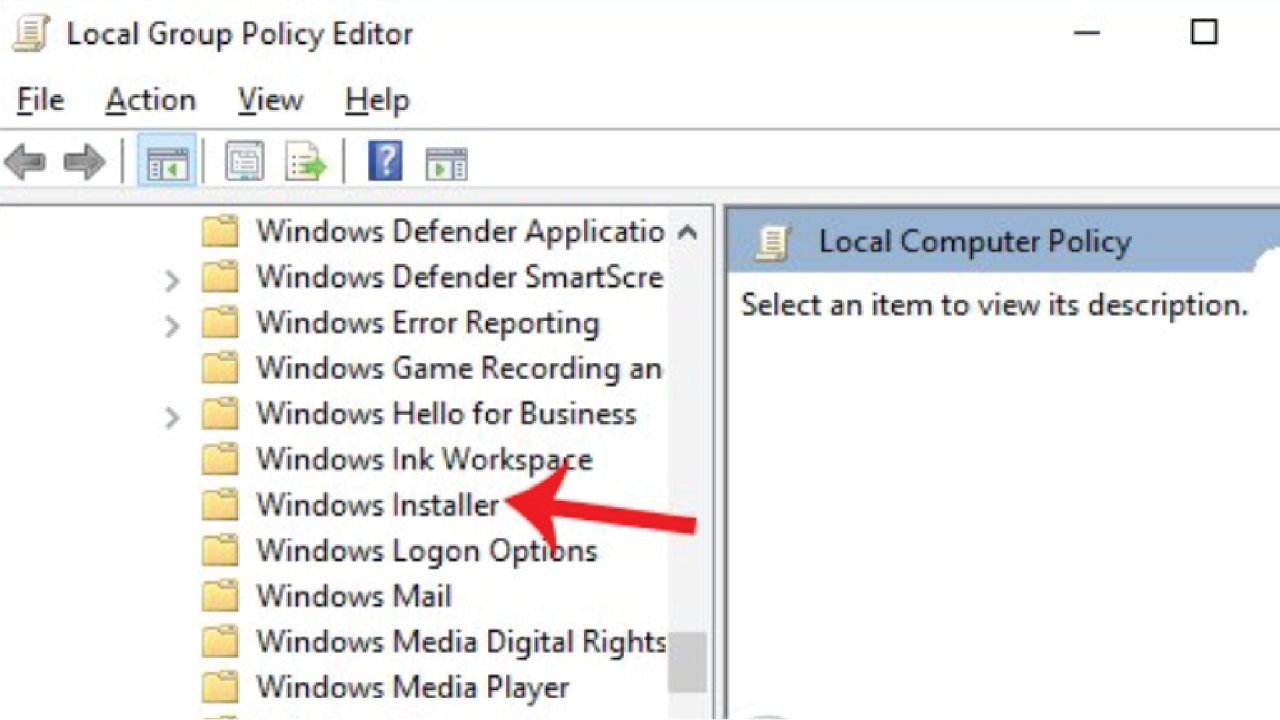

Version Control and Rollback Strategies

Effective version control is the cornerstone of reliable deployments. Using a system like Git allows you to track changes, revert to previous versions easily, and collaborate effectively with your team. A robust branching strategy (like Gitflow) helps to isolate development work from production, preventing accidental deployment of unstable code. Furthermore, a well-defined rollback strategy is essential. This plan should detail how to quickly revert to a previously stable version in case of a deployment failure.

This might involve automated scripts that revert to a previous version within minutes, minimizing downtime. For example, if a new version introduces a critical bug causing widespread outages, a rollback strategy ensures a rapid recovery to a working state. This rapid recovery minimizes business disruption and maintains user trust.
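One common way to make rollback take seconds is to keep each release in its own directory and point a `current` symlink at the live one. A minimal sketch, assuming a POSIX system and release directories whose names sort in release order (for example `v001`, `v002`); `activate` and `rollback` are hypothetical helpers, not part of any particular deployment tool.

```python
import os
from pathlib import Path


def activate(release_dir: Path, current_link: Path) -> None:
    """Atomically point the `current_link` symlink at `release_dir`."""
    # Build the new link beside the old one, then rename it into place.
    # rename(2) is atomic on POSIX within one filesystem, so readers see
    # either the old release or the new one, never a missing link.
    tmp_link = current_link.with_name(current_link.name + ".tmp")
    if tmp_link.is_symlink() or tmp_link.exists():
        tmp_link.unlink()
    tmp_link.symlink_to(release_dir.resolve(), target_is_directory=True)
    os.replace(tmp_link, current_link)


def rollback(releases_root: Path, current_link: Path) -> Path:
    """Re-activate the newest release that is older than the live one."""
    live_name = current_link.resolve().name
    # Assumes release directory names sort in release order (v001, v002, ...).
    releases = sorted(p for p in releases_root.iterdir() if p.is_dir())
    older = [p for p in releases if p.name < live_name]
    if not older:
        raise RuntimeError("no earlier release to roll back to")
    activate(older[-1], current_link)
    return older[-1]
```

A deploy script would call `activate` after unpacking a new release and `rollback` when post-deploy health checks fail; because the previous release is still on disk, nothing needs to be rebuilt.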

Blue/Green Deployments

Blue/green deployments are a powerful technique for minimizing downtime during deployments. In this method, you maintain two identical environments: a “blue” environment currently serving production traffic and an idle “green” environment. The new version is deployed to the green environment and thoroughly tested, and then traffic is switched from blue to green. If issues arise, traffic can be switched back to the blue environment quickly and with minimal disruption.

This approach significantly reduces the risk of deploying new code to a live production system. Imagine a large e-commerce site deploying a new checkout system. With blue/green deployment, the new system is tested in the green environment and, once approved, receives the traffic. If a problem occurs, the switch is simply reversed, limiting revenue loss and customer frustration.
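The traffic switch at the heart of blue/green deployment can be sketched as a router that holds a pointer to the live environment. `BlueGreenRouter` is hypothetical; each environment is modelled as a request-handling callable, and `health_check` stands in for whatever smoke tests a team runs against the idle environment before cutting over. In practice the switch usually lives in a load balancer or DNS rather than in application code.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict

Handler = Callable[[str], str]


@dataclass
class BlueGreenRouter:
    """Send all traffic to whichever of two identical environments is live."""

    environments: Dict[str, Handler]          # "blue" and "green"
    health_check: Callable[[Handler], bool]   # assumed smoke test for an environment
    live: str = "blue"
    previous: str = field(default="", init=False)

    @property
    def idle(self) -> str:
        return "green" if self.live == "blue" else "blue"

    def handle(self, request: str) -> str:
        return self.environments[self.live](request)

    def switch(self) -> bool:
        """Cut over to the idle environment only if it passes the health check."""
        candidate = self.idle
        if not self.health_check(self.environments[candidate]):
            return False  # keep serving from the current environment
        self.previous, self.live = self.live, candidate
        return True

    def switch_back(self) -> None:
        """Roll back by pointing traffic at the previously live environment."""
        if not self.previous:
            raise RuntimeError("no previous environment to switch back to")
        self.live, self.previous = self.previous, self.live


router = BlueGreenRouter(
    environments={"blue": lambda r: f"v1:{r}", "green": lambda r: f"v2:{r}"},
    health_check=lambda env: env("ping").endswith(":ping"),
)
router.switch()                   # green passes the smoke test and goes live
print(router.handle("checkout"))  # v2:checkout
router.switch_back()              # a problem is found: blue takes traffic again
print(router.handle("checkout"))  # v1:checkout
```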

Key Metrics for Software Performance Monitoring

Monitoring is crucial for proactively identifying and addressing potential problems. By tracking key metrics, you can gain valuable insights into your software’s performance and health.

It’s vital to continuously monitor these metrics to maintain application health and quickly identify and resolve issues.

- Uptime: Percentage of time the application is available.

- Response Time: Time taken for the application to respond to requests.

- Error Rate: Share of requests that fail, or number of errors per unit of time.

- Resource Utilization (CPU, Memory, Disk I/O): Monitoring these resources helps prevent performance bottlenecks.

- Throughput: Number of requests processed per unit of time.

- Database Performance: Query execution times, connection pool usage.

- Log Analysis: Examining application logs to identify error patterns and anomalies.
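As a rough illustration, several of these metrics can be computed from a window of request records. `Request` and `summarize` are hypothetical; downtime is assumed to be measured separately (for example by an external uptime probe), and resource, database, and log metrics are left out.

```python
import statistics
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Request:
    latency_ms: float
    ok: bool


def summarize(requests: List[Request], window_s: float, downtime_s: float) -> Dict[str, float]:
    """Uptime, error rate, throughput and p95 response time for one time window."""
    if not requests or window_s <= 0:
        raise ValueError("need at least one request and a positive window")
    latencies = [r.latency_ms for r in requests]
    if len(latencies) > 1:
        # Last of 19 cut points = 95th percentile of observed response times.
        p95 = statistics.quantiles(latencies, n=20, method="inclusive")[-1]
    else:
        p95 = latencies[0]
    return {
        "uptime_pct": 100.0 * (window_s - downtime_s) / window_s,
        "error_rate": sum(not r.ok for r in requests) / len(requests),
        "throughput_rps": len(requests) / window_s,
        "p95_latency_ms": p95,
    }


window = [Request(120, True), Request(80, True), Request(300, False), Request(95, True)]
print(summarize(window, window_s=60.0, downtime_s=0.6))
```

For this sample window the error rate is 0.25 and the p95 response time is 273.0 ms; percentiles are usually more telling than averages because a few slow requests can hide behind a healthy mean.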

Handling and Resolving Software Failures

A well-defined incident response plan is crucial for effectively handling and resolving software failures in production. This plan should outline clear roles, responsibilities, and procedures for dealing with various types of failures.

A step-by-step procedure ensures a consistent and efficient response to incidents, minimizing downtime and impact.

- Detect the Failure: Automated alerts from monitoring systems are key for early detection.

- Diagnose the Root Cause: Utilize logs, metrics, and debugging tools to pinpoint the problem.

- Implement a Fix: This might involve deploying a hotfix, rolling back to a previous version, or performing a temporary workaround.

- Deploy the Fix: Utilize your deployment strategy (e.g., blue/green) to deploy the fix with minimal disruption.

- Monitor Recovery: Closely monitor the system to ensure the fix is effective and the system is stable.

- Post-Incident Review: Analyze the incident to identify areas for improvement in your processes and prevent similar issues in the future. This review should document the cause, impact, response, and lessons learned.
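Automated detection, the first step above, often means alerting when the error rate over a recent window crosses a threshold. A minimal sketch with hypothetical names and defaults: `ErrorRateAlert` keeps the last `window` outcomes, stays quiet until `min_samples` requests have arrived, and reports only the moment the alert starts firing so that responders are not paged repeatedly for the same incident.

```python
from collections import deque
from typing import Deque


class ErrorRateAlert:
    """Fire when the failure rate over the last `window` requests exceeds `threshold`."""

    def __init__(self, window: int = 100, threshold: float = 0.05,
                 min_samples: int = 20) -> None:
        self.outcomes: Deque[bool] = deque(maxlen=window)  # True means the request failed
        self.threshold = threshold
        self.min_samples = min_samples
        self.firing = False

    def record(self, failed: bool) -> bool:
        """Record one outcome; return True only at the moment the alert starts firing."""
        self.outcomes.append(failed)
        if len(self.outcomes) < self.min_samples:
            return False  # too little data to judge, which avoids noisy alerts at startup
        rate = sum(self.outcomes) / len(self.outcomes)
        was_firing, self.firing = self.firing, rate > self.threshold
        return self.firing and not was_firing
```

Feeding it the outcome of every request and paging the on-call engineer whenever `record` returns `True` covers detection; diagnosis and the remaining steps stay with the humans.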

Incident Management and Recovery

A robust incident management plan is the final, critical line of defense against software failures. It’s not about preventing failures entirely (though we strive for that!), but about minimizing their impact and learning from them. A well-defined plan ensures swift response, efficient recovery, and valuable post-mortem analysis, ultimately leading to a more resilient system. Effective incident management is about more than just fixing the bug; it’s about managing the entire lifecycle of a failure, from initial detection to post-incident review.

This includes clear communication, well-defined escalation paths, and a structured approach to problem resolution. The goal is to reduce downtime, maintain customer trust, and extract valuable lessons to prevent similar incidents in the future.

Key Components of an Effective Incident Management Plan

An effective incident management plan hinges on several key elements. These elements work together to ensure a coordinated and efficient response to software failures. A well-defined plan provides clear roles, responsibilities, and procedures, reducing confusion and accelerating resolution times. It should be regularly reviewed and updated to reflect changes in the system and team structure.

Communication Protocols and Escalation Procedures

Clear communication is paramount during an incident. The plan should specify communication channels (e.g., Slack, email, dedicated incident management system) and who is responsible for communicating with different stakeholders (e.g., customers, management, development team). Escalation procedures should define the steps to take when an incident exceeds the capabilities of the initial responders, ensuring that senior personnel are involved when necessary.

For example, a minor bug might be handled by the development team, while a major outage requiring immediate action would involve the operations team and potentially executive management. Regular drills and simulations can help teams practice these procedures and identify potential weaknesses.
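Escalation paths are easiest to follow under pressure when they are written down as data. A sketch of a time-based policy in which the tiers, severities, and waiting times are all hypothetical: while an incident stays unacknowledged, each tier’s wait elapses in turn and the next tier is paged in addition to the earlier ones.

```python
from typing import Dict, List, Tuple

# Hypothetical policy: each tier is (responder, minutes to wait for an
# acknowledgement before the next tier is paged as well).
ESCALATION_POLICY: Dict[str, List[Tuple[str, int]]] = {
    "minor": [("development team", 60)],
    "major": [
        ("on-call operations", 5),
        ("engineering manager", 15),
        ("executive on call", 30),
    ],
}


def who_to_page(severity: str, minutes_unacknowledged: float) -> List[str]:
    """Return every responder who should have been paged by now for an open incident."""
    paged: List[str] = []
    page_at = 0.0  # minute at which the current tier gets paged
    for responder, wait_minutes in ESCALATION_POLICY[severity]:
        if minutes_unacknowledged < page_at:
            break
        paged.append(responder)
        page_at += wait_minutes
    return paged


print(who_to_page("major", 10))  # ['on-call operations', 'engineering manager']
```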

Strategies for Minimizing Downtime and Restoring Service

Minimizing downtime requires a proactive approach. This includes having redundant systems, automated failover mechanisms, and readily available rollback plans. For example, a database cluster with automatic failover can ensure continued operation even if one server fails. A robust rollback plan allows for a quick reversion to a stable version of the software if a new release introduces a critical bug.

The plan should also detail procedures for quickly identifying the root cause of the failure and implementing the necessary fixes. Prioritizing critical functions and having a well-defined process for restoring services incrementally can also help minimize overall downtime.

Post-Incident Analysis and Learning

Post-incident analysis, also known as a post-mortem, is crucial for continuous improvement. It involves a thorough review of the incident to identify the root cause, understand the impact, and determine how to prevent similar incidents in the future. Root cause analysis (RCA) techniques, such as the “5 Whys” method, can help uncover the underlying issues. The analysis should be documented and shared with the relevant teams to promote learning and prevent recurrence.

The goal is not to assign blame, but to identify systemic weaknesses and implement preventative measures. For instance, a post-mortem might reveal a lack of sufficient monitoring, leading to the implementation of new monitoring tools and alerts.

Preventing Data Loss and Corruption

Data loss and corruption represent significant threats to any software system, potentially leading to financial losses, reputational damage, and legal repercussions. Implementing robust strategies to prevent and mitigate these risks is crucial for maintaining system integrity and business continuity. This section explores practical methods for protecting your valuable data.

Data Backup Strategies

Regular and reliable data backups are the cornerstone of any data loss prevention strategy. A well-defined backup plan should combine several backup types: full backups (copying all data), incremental backups (copying only data changed since the last backup of any kind), and differential backups (copying data changed since the last full backup). Full backups are the simplest to restore but the slowest to take; incremental backups are the fastest to take, but a restore needs the last full backup plus every incremental since; differential backups sit in between, needing only the last full backup plus the latest differential. Consider using a 3-2-1 backup strategy: three copies of your data, on two different media types, with one copy offsite.

This redundancy protects against various failure scenarios, such as hardware failure, natural disasters, or ransomware attacks. Regular testing of your backup and restore procedures is essential to ensure they function correctly when needed. Cloud-based backup solutions offer an additional layer of protection and offsite storage.
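The difference between the three backup types comes down to which reference time a run compares against. A minimal sketch, assuming files are selected by modification time (real backup tools may instead use change journals or block-level tracking); `files_to_back_up` and its parameters are hypothetical.

```python
from typing import Dict, List, Optional


def files_to_back_up(
    mtimes: Dict[str, float],
    kind: str,
    last_full: Optional[float] = None,
    last_backup: Optional[float] = None,
) -> List[str]:
    """Select which files a backup run should copy.

    `mtimes` maps file path -> last-modified time; `last_full` is when the most
    recent full backup finished, `last_backup` when the most recent backup of any kind did.
    """
    if kind == "full":
        return sorted(mtimes)
    if kind == "incremental":       # changed since the last backup of any kind
        since = last_backup
    elif kind == "differential":    # changed since the last full backup
        since = last_full
    else:
        raise ValueError(f"unknown backup kind: {kind!r}")
    if since is None:
        raise ValueError(f"a {kind} backup needs a previous backup time")
    return sorted(path for path, mtime in mtimes.items() if mtime > since)


mtimes = {"a.txt": 50.0, "b.txt": 150.0, "c.txt": 250.0}
# Hypothetical history: a full backup finished at t=100, an incremental at t=200.
print(files_to_back_up(mtimes, "full"))                                             # ['a.txt', 'b.txt', 'c.txt']
print(files_to_back_up(mtimes, "incremental", last_full=100.0, last_backup=200.0))  # ['c.txt']
print(files_to_back_up(mtimes, "differential", last_full=100.0, last_backup=200.0)) # ['b.txt', 'c.txt']
```

Selecting by modification time misses files whose timestamps were preserved or reset, which is one reason periodic full backups remain part of the plan.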

Data Redundancy and Replication

Redundancy minimizes the impact of single points of failure. Employing techniques like RAID (Redundant Array of Independent Disks) for storage, database replication across multiple servers, and geographically dispersed data centers ensures data availability even if one component fails. Replication involves creating exact copies of data and storing them in separate locations. This ensures that if one location is compromised, the data remains accessible from another.

The choice of redundancy method depends on the criticality of the data and the acceptable level of downtime. For instance, a high-availability system might use synchronous replication, ensuring immediate data consistency across all replicas, while asynchronous replication, with a slight delay, might be sufficient for less critical data.
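The trade-off between the two modes can be sketched with an in-memory primary and replica. `Primary` and `Replica` are hypothetical toy classes: in synchronous mode a write returns only after every replica has applied it, while in asynchronous mode the write is queued and replicas lag until `ship_pending` runs. Network failures, where the latency and availability cost of synchronous replication really shows, are not modelled.

```python
from collections import deque
from typing import Deque, Dict, List, Tuple


class Replica:
    def __init__(self) -> None:
        self.data: Dict[str, str] = {}

    def apply(self, key: str, value: str) -> None:
        self.data[key] = value


class Primary:
    """Toy primary that replicates writes synchronously or asynchronously."""

    def __init__(self, replicas: List[Replica], synchronous: bool) -> None:
        self.data: Dict[str, str] = {}
        self.replicas = replicas
        self.synchronous = synchronous
        self.pending: Deque[Tuple[str, str]] = deque()  # unshipped writes (async mode)

    def write(self, key: str, value: str) -> None:
        self.data[key] = value
        if self.synchronous:
            # The write completes only after every replica has applied it.
            for replica in self.replicas:
                replica.apply(key, value)
        else:
            # Acknowledge immediately; replicas catch up later (replication lag).
            self.pending.append((key, value))

    def ship_pending(self) -> None:
        """Background step in async mode: forward queued writes to every replica."""
        while self.pending:
            key, value = self.pending.popleft()
            for replica in self.replicas:
                replica.apply(key, value)


replica = Replica()
primary = Primary([replica], synchronous=False)
primary.write("balance:42", "100")
print(replica.data.get("balance:42"))  # None (the replica is lagging)
primary.ship_pending()
print(replica.data.get("balance:42"))  # 100
```

If the primary failed between `write` and `ship_pending`, the asynchronous write would be lost, which is why critical data tends to favour synchronous replication despite its extra write latency.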

Data Validation and Integrity Checks

Data validation, applied at every point where data enters the system, helps keep corrupted data out in the first place. This involves checks on data types, ranges, and formats. Regular data integrity checks, such as checksums or hash functions, verify the consistency and accuracy of stored data. These checks can detect subtle data corruption that might otherwise go unnoticed.

For example, a checksum is a numerical value calculated from the data; if the data changes, the checksum will also change, allowing detection of corruption. Implementing these checks proactively reduces the likelihood of data corruption and facilitates faster identification of issues if corruption does occur.
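Checksum verification can be sketched with Python’s standard `hashlib` module. The helper names are hypothetical; the idea is to record a SHA-256 digest when data is written or backed up and compare against it later, for example after a restore. A plain hash detects accidental corruption, but not deliberate tampering by someone who can also rewrite the stored digest.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file, read in 1 MiB chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        # Reading in chunks keeps memory use flat even for very large files.
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify(path: Path, expected_hex: str) -> bool:
    """True if the file still matches the digest recorded when it was written."""
    return sha256_of(path) == expected_hex
```

Storing the digest alongside each backup and calling `verify` as part of the restore procedure gives an objective check that the restored data is intact.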

Securing Sensitive Data

Protecting sensitive data requires a multi-layered approach. This includes implementing robust access control mechanisms, encrypting data both in transit and at rest, and adhering to data privacy regulations like GDPR or CCPA. Access control lists (ACLs) should restrict access to sensitive data based on the principle of least privilege, granting users only the necessary permissions. Encryption renders data unreadable without the appropriate decryption key, safeguarding it from unauthorized access even if a security breach occurs.

Regular security audits and penetration testing help identify and address vulnerabilities before they can be exploited. Employee training on data security best practices is also crucial to prevent human error, a frequent cause of data breaches.
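Least privilege is easiest to enforce with a deny-by-default check. This sketch uses a role-based permission table in place of per-resource ACLs for brevity; the roles and permission strings are hypothetical.

```python
from typing import Dict, FrozenSet, Set

# Hypothetical roles: each one is granted only what that job needs.
ROLE_PERMISSIONS: Dict[str, FrozenSet[str]] = {
    "support_agent": frozenset({"customer:read_contact"}),
    "billing": frozenset({"customer:read_contact", "payment:read_last4"}),
    "dba": frozenset({"customer:read_contact", "backup:restore"}),
}


def is_allowed(roles: Set[str], permission: str) -> bool:
    """Deny by default: allow only if an assigned role explicitly grants the permission."""
    # Unknown roles map to an empty set, so they grant nothing.
    return any(permission in ROLE_PERMISSIONS.get(role, frozenset()) for role in roles)


print(is_allowed({"support_agent"}, "payment:read_last4"))  # False
print(is_allowed({"billing"}, "payment:read_last4"))        # True
```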

Data Recovery Procedure

A well-defined data recovery procedure is essential for minimizing downtime and data loss in the event of a system failure. This procedure should detail steps for identifying the cause of the failure, accessing backup data, restoring data to a functional system, and verifying data integrity after restoration. Regularly testing this procedure ensures that staff is familiar with the process and that the backup and recovery mechanisms function as expected.

The procedure should also include contact information for support teams and escalation paths for critical situations. Clear communication protocols should be in place to keep stakeholders informed during the recovery process. A detailed post-incident review should be conducted to identify areas for improvement and prevent similar incidents in the future.

The Human Factor in Software Reliability

Let’s face it: software isn’t built by robots (yet!). Humans are at the heart of every stage of the software development lifecycle, from initial design to deployment and maintenance. This means human error, with all its variability and unpredictability, is a significant contributor to software failures. Understanding and mitigating this human factor is crucial for building truly reliable software. The role of human error in software failures is multifaceted.

It’s not just about typos or simple mistakes; it encompasses a broader range of issues, including flawed design choices stemming from misunderstandings of requirements, inadequate testing due to time constraints or insufficient skill, and poor communication leading to inconsistencies and integration problems. Even seemingly minor errors can cascade, creating significant problems later in the development process.

Minimizing the Impact of Human Error

Effective strategies for minimizing the impact of human error rely on a multi-pronged approach. This includes rigorous code reviews, where multiple developers scrutinize each other’s work, catching potential problems early. Automated testing tools can help identify many common errors, freeing up developers to focus on more complex issues. Furthermore, establishing clear coding standards and style guides promotes consistency and reduces ambiguity, making code easier to understand and maintain.

Finally, using version control systems allows for easy rollback to previous versions in case of errors, minimizing downtime and the impact of mistakes. A well-defined process for bug reporting and tracking helps identify recurring errors and trends, allowing developers to address systemic issues.

Effective Communication and Collaboration Enhance Software Reliability

Open and transparent communication is the bedrock of successful software development. Effective collaboration fosters a shared understanding of project goals, reduces misunderstandings, and prevents duplicated efforts. Regular team meetings, clear documentation, and the use of collaborative tools such as shared code repositories and project management software are essential. For example, a daily stand-up meeting where developers briefly discuss their progress, roadblocks, and plans for the day helps to identify and address potential issues early.

Using a shared project management tool allows for transparent task assignment and progress tracking, preventing confusion and missed deadlines. These collaborative practices contribute significantly to higher software reliability.

Best Practices for Developer Training and Professional Development

Investing in developer training and professional development is paramount for improving software development skills and reducing human error. A comprehensive training program should encompass a range of topics, including:

- Fundamentals of software engineering principles: This includes topics such as design patterns, data structures, algorithms, and software architecture.

- Best practices for coding style and standards: Consistent coding practices make code easier to read, understand, and maintain, reducing errors.

- Testing methodologies and techniques: Developers need to be proficient in various testing methods, including unit testing, integration testing, and system testing.

- Debugging and troubleshooting skills: Effective debugging is crucial for identifying and resolving errors efficiently.

- Version control systems: Proficiency in using version control systems like Git is essential for collaborative development and managing code changes.

- Security best practices: Developers need to be aware of common security vulnerabilities and how to prevent them.

- Continuous learning and professional development: Encouraging developers to stay up-to-date with the latest technologies and best practices is vital for long-term success.

Regular training sessions, workshops, and access to online learning resources are all effective ways to achieve these goals. Furthermore, mentorship programs, where experienced developers guide newer team members, can significantly improve skills and knowledge transfer. The investment in training translates directly into reduced errors, improved code quality, and increased software reliability.

Final Recap

Building reliable software isn’t just about writing clean code; it’s a holistic approach that encompasses design, testing, deployment, and incident management. By understanding the common pitfalls, implementing robust design principles, and embracing a culture of continuous improvement, you can significantly reduce the risk of software failures. Remember, a proactive approach, combined with rigorous testing and effective monitoring, is your secret weapon in creating software that’s not only functional but also resilient to the inevitable bumps in the road.

So, ditch the late-night debugging sessions and embrace the recipe for success!

FAQ

What’s the difference between unit testing and integration testing?

Unit testing focuses on individual components of your code, while integration testing checks how those components work together.

How often should I perform software updates?

Regular updates are crucial for patching security vulnerabilities and improving performance. The frequency depends on your software and the update’s nature, but a planned schedule is essential.

What’s the best way to communicate a software failure to users?

Transparency is key. Provide clear, concise updates about the issue, its impact, and the estimated time for resolution. Avoid technical jargon.

How can I improve my team’s collaboration to reduce software failures?

Establish clear communication channels, use collaborative tools, and foster a culture of open feedback and knowledge sharing.