Accelerating Your Test Automation with the Power of Containers

Imagine a world where setting up a test environment takes seconds, tests run in parallel without interfering with each other, and scaling a suite means adding more containers. This isn’t science fiction; it’s what containerized test automation offers. This post looks at how containers can improve your testing workflow, from setup and execution to maintenance and scaling.

Get ready to revolutionize your testing strategy!

We’ll cover everything from the basics of containerization and Docker to advanced techniques like orchestrating containers with Kubernetes for massive parallel testing. We’ll also tackle practical challenges, share real-world examples, and explore future trends in this rapidly evolving field. Prepare to boost your testing efficiency and say goodbye to those frustrating environment inconsistencies!

Containerization in Test Automation

Containerization is changing software testing by providing a consistent, isolated environment for running tests. It packages an application and all its dependencies (libraries, system tools, settings) into a single unit called a container. Tests therefore run the same way regardless of the underlying infrastructure, which removes the dreaded “works on my machine” problem. The benefits extend beyond consistency: containers also speed up test automation, primarily because they are lightweight and quick to start.

Unlike virtual machines (VMs), which emulate entire operating systems, containers share the host OS kernel, resulting in much smaller image sizes and faster startup times. This means you can spin up test environments in seconds, drastically reducing the time spent on environment setup and teardown. This translates directly into faster test execution cycles and quicker feedback for developers.

Parallel Test Execution with Containers

The ability to quickly and easily create and destroy containerized environments is especially advantageous for parallel test execution. Imagine running your test suite across multiple containers simultaneously—each container representing an independent, isolated test environment. This dramatically reduces overall test execution time, enabling faster release cycles and quicker identification of bugs. Orchestration tools like Kubernetes can manage and scale these containerized test environments efficiently, ensuring optimal resource utilization and parallel execution across a cluster of machines.

Comparison of Traditional and Containerized Testing Environments

The following table highlights the key differences between traditional testing environments and those leveraging containerization:

| Feature | Traditional | Containerized | Advantages of Containerization |
|---|---|---|---|
| Environment Setup | Complex, time-consuming, often manual configuration of hardware and software. | Automated, fast deployment using Docker or similar tools. | Reduced setup time, improved consistency, less manual intervention. |
| Environment Consistency | Prone to inconsistencies across different machines and developers. | Highly consistent environment across different platforms. | Eliminates “works on my machine” issues, increased reliability of test results. |
| Resource Utilization | Can be inefficient, especially for parallel testing. | Lightweight and efficient, allowing for parallel execution with minimal overhead. | Faster test execution, improved resource utilization, cost savings. |
| Scalability | Scaling up testing resources can be difficult and expensive. | Easily scalable using container orchestration tools like Kubernetes. | Easy scaling to meet demand, improved flexibility. |

Setting up a Containerized Test Environment

Containerizing your test environment offers significant advantages in test automation, including improved consistency, reproducibility, and scalability. By encapsulating your testing dependencies within Docker containers, you eliminate the “works on my machine” problem and ensure that tests run reliably across different environments. This section details how to leverage Docker to streamline your test automation workflow.

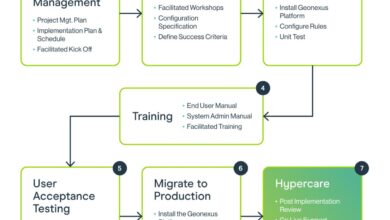

Setting up a robust containerized test environment involves several key steps, from installing Docker and creating efficient images to deploying and integrating them into your CI/CD pipeline. Let’s delve into each aspect.

Docker Installation and Configuration

Installing Docker is generally straightforward, varying slightly depending on your operating system. For Linux distributions, package managers like apt (Debian/Ubuntu) or dnf/yum (Red Hat/CentOS) are typically used. On macOS and Windows, you can download the official Docker Desktop application. After installation, ensure Docker is running and configured correctly. On Linux, you’ll likely want to add your user to the `docker` group to avoid prefixing every Docker command with `sudo`; be aware that membership in this group grants root-equivalent access to the host.

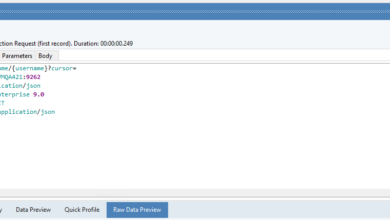

Verify your installation by running `docker version` in your terminal. This command will display the Docker version and other relevant information, confirming a successful installation.

Best Practices for Creating Docker Images for Testing

Creating efficient and well-structured Docker images is crucial for a smooth testing process. Prioritize using a minimal base image to reduce image size and attack surface. For example, instead of a full-blown operating system image, consider a minimal base such as `alpine`, a slim variant of a distribution image, or a language-specific image suited to your testing framework (e.g., a Node.js image for testing JavaScript applications).

Maintain a clear separation of concerns; use separate images for different testing stages or components if necessary. This approach facilitates better organization and easier debugging. Always leverage Docker’s layer cache to optimize image build times and reduce storage consumption. Docker caches each layer in order: when an instruction or its input files change, that layer and every layer after it are rebuilt, while the layers before it are reused. Put rarely changing steps (such as installing dependencies) early in the Dockerfile and frequently changing ones (such as copying test code) late.
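To make the caching behaviour concrete, here is a small Python model of it. It is a deliberate simplification, not Docker’s actual implementation: once the input of one instruction changes, that layer and every later layer are rebuilt, and everything before it is a cache hit. The Dockerfile steps listed are hypothetical.

```python
def layers_to_rebuild(instructions, changed_index):
    """Return the instructions that are re-run when the input of
    instructions[changed_index] changes (simplified cache model)."""
    if not 0 <= changed_index < len(instructions):
        raise IndexError("changed_index is out of range")
    # Layers before the change are reused from cache; the changed layer
    # and all layers after it are rebuilt.
    return instructions[changed_index:]


# Hypothetical Dockerfile steps, ordered from least to most frequently changed.
dockerfile = [
    "FROM python:3.12-slim",
    "COPY requirements.txt .",
    "RUN pip install -r requirements.txt",
    "COPY tests/ tests/",
]

# Editing a test file only invalidates the final COPY layer.
print(layers_to_rebuild(dockerfile, 3))
# Editing requirements.txt also forces the dependency install to run again.
print(layers_to_rebuild(dockerfile, 1))
```

This is why the order of instructions matters: if the test code were copied before the dependency install, every test edit would trigger a full reinstall.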

Deploying a Containerized Testing Environment

Deploying a containerized testing environment involves orchestrating the containers that comprise your test infrastructure. This might include a database container, an application server container, and a container specifically for running your tests. Docker Compose is an excellent tool for managing multi-container applications. A `docker-compose.yml` file defines the services (containers) and their dependencies, making it easy to spin up and tear down your entire test environment with a single command: `docker-compose up -d`.

The `-d` flag runs the containers in detached mode, allowing them to run in the background. Once your environment is running, you can execute your tests within the appropriate container.
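The start, test, and teardown cycle can be wrapped in a small script. The sketch below is one possible shape, under a few assumptions: it uses the `docker compose` plugin syntax rather than the older `docker-compose` binary, the service name `tests` is hypothetical, and the command runner is injectable so the logic can be exercised without Docker installed.

```python
import subprocess


def run_suite(run=subprocess.run, service="tests", test_cmd=("pytest",)):
    """Start the Compose environment, run the tests, and always tear down.

    `service` is a hypothetical service name from your docker-compose.yml.
    Returns the exit code of the test command.
    """
    run(["docker", "compose", "up", "-d"], check=True)
    try:
        # -T disables pseudo-TTY allocation, which CI runners usually lack.
        result = run(["docker", "compose", "exec", "-T", service, *test_cmd])
        return result.returncode
    finally:
        # Runs even if the tests fail or raise, so no containers are left behind.
        run(["docker", "compose", "down"], check=True)
```

Calling `run_suite()` from the directory that holds your Compose file returns the test runner’s exit code, which a CI job can pass straight to `sys.exit`.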

Configuring a CI/CD Pipeline with Docker Integration

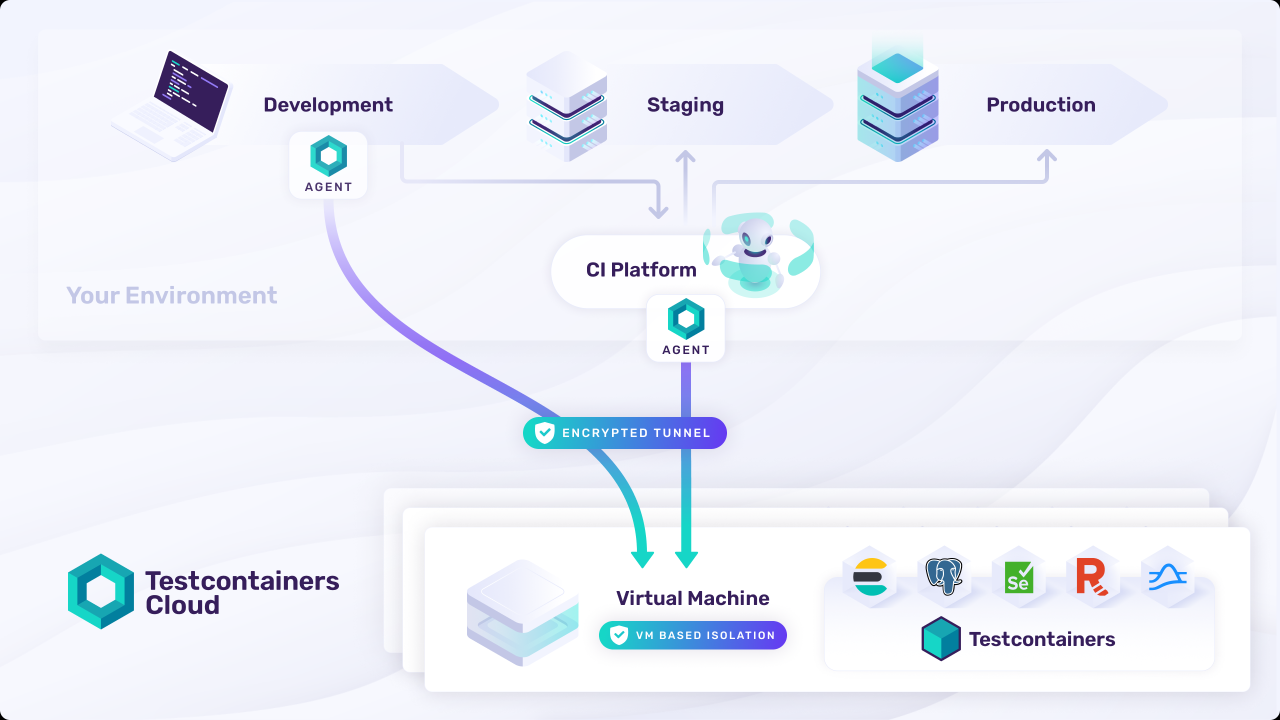

Integrating Docker into your CI/CD pipeline enables automated testing within consistent, isolated environments. Popular CI/CD tools like Jenkins, GitLab CI, and CircleCI seamlessly integrate with Docker. The general workflow involves:

First, your CI/CD system builds your Docker image from a Dockerfile during the build stage. Next, it pushes the image to a container registry (like Docker Hub or a private registry) for easy access by other stages. Then, in the test stage, the CI/CD system pulls the image and runs your tests inside a container instance of this image. Finally, the results of the tests are reported back to the CI/CD system, triggering further actions based on the outcome (e.g., deployment to production if tests pass).

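A sample Jenkins pipeline snippet illustrating this process (assuming you’ve already set up Jenkins and Docker plugins):

```groovy
pipeline {
    agent any
    stages {
        stage('Build') {
            steps {
                // Tag the image with the registry name so the push can find it
                sh 'docker build -t my-docker-registry/my-test-image .'
                sh 'docker push my-docker-registry/my-test-image'
            }
        }
        stage('Test') {
            steps {
                sh '''
                    # Start the container in the background and capture its ID
                    CONTAINER_ID=$(docker run -d my-docker-registry/my-test-image)
                    # Run your tests inside the container (or your test runner command)
                    docker exec "$CONTAINER_ID" pytest
                    docker stop "$CONTAINER_ID"
                '''
            }
        }
    }
}
```

Remember to replace placeholders like `my-docker-registry` and `my-test-image` with your own values. The container ID is captured in a shell variable here, and the image must keep a long-running process alive for `docker exec` to work.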

Utilizing Containers for Parallel Testing

Parallel testing, the simultaneous execution of multiple tests, is a game-changer for accelerating your test automation pipeline. By drastically reducing overall execution time, parallel testing allows for faster feedback cycles, quicker identification of bugs, and ultimately, faster releases. Containerization, through tools like Docker Compose and Kubernetes, provides a practical infrastructure for managing and scaling parallel test runs, and it greatly simplifies setting up and managing parallel test environments.

Each test can run in its own isolated container, ensuring consistent and reproducible results, regardless of the underlying system’s configuration. This eliminates the inconsistencies that often plague traditional parallel testing setups, resulting in more reliable test results.

Docker Compose for Parallel Test Management

Docker Compose streamlines the process of defining and running multi-container applications. For parallel testing, this means defining separate services for each test, or groups of tests, within a single `docker-compose.yml` file. Each service can specify its own image (containing the test framework and dependencies), environment variables, and volumes. This allows for easy scaling of parallel tests simply by adjusting the number of containers launched.

For example, a `docker-compose.yml` file might define three services, each running a different set of Selenium tests against a separate containerized application server. The tests in each service run concurrently, resulting in a significant reduction in overall testing time. Imagine running three sets of integration tests concurrently; Docker Compose makes this straightforward. Each test set resides in its own container, ensuring isolated environments and preventing conflicts.
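Whichever tool launches the containers, the test set has to be divided between them first. The following sketch shows one simple way to do that, round-robin sharding, and builds one `docker run` command per shard. The file names are hypothetical, `my-test-image` is a placeholder, and the sketch assumes the image lets you pass `pytest` plus file paths as its command.

```python
def shard(test_files, shard_count):
    """Split test files round-robin into `shard_count` groups."""
    if shard_count < 1:
        raise ValueError("shard_count must be at least 1")
    ordered = sorted(test_files)  # stable order so every run shards identically
    return [ordered[i::shard_count] for i in range(shard_count)]


def docker_commands(test_files, shard_count, image="my-test-image"):
    """Build one `docker run` command per non-empty shard."""
    return [
        ["docker", "run", "--rm", image, "pytest", *group]
        for group in shard(test_files, shard_count)
        if group
    ]


files = [
    "tests/test_cart.py",
    "tests/test_login.py",
    "tests/test_search.py",
    "tests/test_checkout.py",
]
for command in docker_commands(files, 3):
    print(" ".join(command))
```

Round-robin is the simplest policy; if test durations vary widely, sharding by recorded run time usually balances the containers better.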

Benefits of Parallel Testing and Impact on Test Execution Time

The primary benefit of parallel testing is a significant reduction in test execution time. If a test suite takes 1 hour to run sequentially, splitting it evenly across 10 parallel containers could bring the wall-clock time down to roughly 6 minutes plus container startup overhead (assuming relatively even test durations). This dramatically accelerates the feedback loop, allowing developers to address bugs more quickly. Moreover, because more tests fit into a given time budget, teams can afford to run broader suites on every change.

This leads to more comprehensive testing and a higher degree of confidence in the software’s quality. Consider a continuous integration/continuous delivery (CI/CD) pipeline: the faster the tests run, the faster the feedback cycle, and the faster new features can be deployed.
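The arithmetic behind that estimate is easy to check. This is a back-of-the-envelope sketch that assumes perfectly even test durations and a fixed per-container startup cost; the `startup_minutes` parameter is an illustrative assumption, and real suites are bounded by their slowest shard.

```python
def parallel_wall_clock(total_minutes, workers, startup_minutes=0.0):
    """Estimate wall-clock minutes when tests are split evenly across workers.

    Assumes even test durations and a fixed startup cost paid once per
    container, with all containers starting at the same time.
    """
    if workers < 1:
        raise ValueError("workers must be at least 1")
    return total_minutes / workers + startup_minutes


print(parallel_wall_clock(60, 10))       # 6.0
print(parallel_wall_clock(60, 10, 0.5))  # 6.5
```

Because the startup cost does not shrink as workers are added, the gain from each extra container gets smaller as the count grows.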

Containerized Testing Frameworks

Many popular testing frameworks integrate seamlessly with containerization. For example, Selenium tests, often used for web application testing, can easily be packaged within a Docker image along with the necessary browser drivers and dependencies. Similarly, frameworks like JUnit (Java), pytest (Python), and Mocha (JavaScript) are easily containerized. The container image becomes a self-contained unit, containing everything needed to run the tests, ensuring consistency across different environments.

This approach simplifies the setup and deployment of tests, making it easier to scale parallel testing.

Orchestrating Containers with Kubernetes for Large-Scale Parallel Testing

For very large-scale parallel testing, Kubernetes provides robust orchestration capabilities. Kubernetes can manage hundreds or even thousands of containers, automatically scaling resources based on demand. This is particularly beneficial when running tests across different environments or using different browser versions in parallel. Kubernetes handles the scheduling, deployment, and monitoring of containers, ensuring high availability and efficient resource utilization. This allows for running thousands of tests concurrently, a task that would be extremely difficult to manage manually.

Imagine a large e-commerce platform; Kubernetes would be instrumental in managing the parallel execution of thousands of tests across various browser versions and devices to ensure the platform’s stability and performance.
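As a rough illustration of how such a run might be described to Kubernetes, the sketch below builds a `batch/v1` Job manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The job name, image, and shard count are placeholders. In `Indexed` completion mode each pod receives a `JOB_COMPLETION_INDEX` environment variable, and the wiring that makes the test runner pick its shard from that index is assumed, not shown.

```python
import json


def build_test_job(name, image, shards):
    """Build a batch/v1 Job that runs `shards` test pods in parallel."""
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": name},
        "spec": {
            "completions": shards,        # total pods that must succeed
            "parallelism": shards,        # how many pods run at the same time
            "completionMode": "Indexed",  # each pod gets JOB_COMPLETION_INDEX
            "backoffLimit": 0,            # do not retry failed test pods
            "template": {
                "spec": {
                    "restartPolicy": "Never",
                    "containers": [
                        {"name": "tests", "image": image, "command": ["pytest"]}
                    ],
                }
            },
        },
    }


manifest = build_test_job("ui-tests", "my-docker-registry/my-test-image", 20)
print(json.dumps(manifest, indent=2))
```

Lowering `parallelism` below `completions` caps how many pods run at once, which is useful when the cluster is shared.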

Managing Dependencies and Environments with Containers

Containerization provides isolated and reproducible environments for test automation. This simplifies dependency management and keeps test execution consistent regardless of the underlying infrastructure. By encapsulating applications and their dependencies within containers, we eliminate the notorious “works on my machine” problem and pave the way for more reliable and efficient testing. Containers isolate dependencies by packaging the application, its libraries, and runtime environment into a single, self-contained unit.

This prevents conflicts between different versions of libraries or system configurations, a common source of errors in traditional testing environments. Although a container shares the host’s kernel rather than emulating a full machine, each one has its own filesystem, process space, and network stack, which gives your tests a consistent and predictable execution environment. This isolation means your tests are largely unaffected by changes to software installed on the host or in other containers.

Docker Volumes for Persistent Data

Docker volumes provide a mechanism for managing persistent data within containers. Instead of storing data directly within the ephemeral container filesystem, which is discarded when the container is removed, Docker volumes persist data independently. This is crucial for test automation because it allows you to maintain test data, database snapshots, or other persistent resources across multiple test runs. For example, you might use a Docker volume to store a database used for integration testing.

Even if you rebuild or remove the container, the data within the volume remains intact, allowing you to resume testing without data loss. This approach ensures data integrity and simplifies the management of test data across different stages of the testing process.
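A named volume is attached when the container is started. The helper below is a small sketch that builds the corresponding `docker run` command using the `--mount` flag; the image name, volume name, and target path are all hypothetical and should be replaced with the values your database image expects.

```python
def run_with_volume(image, volume, target, name=None):
    """Build a `docker run` command that mounts a named volume at `target`."""
    command = ["docker", "run", "-d"]
    if name:
        command += ["--name", name]
    # A named volume outlives the container, so data written under `target`
    # is still there when a new container mounts the same volume.
    command += ["--mount", f"type=volume,source={volume},target={target}", image]
    return command


print(" ".join(run_with_volume("my-test-db", "test-db-data", "/data", name="test-db")))
```

Removing the container with `docker rm` leaves the volume in place; it is only deleted when you remove it explicitly, for example with `docker volume rm`.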

Environment Variable Management in Containers

Several approaches exist for managing environment variables within containers. One common method is to set environment variables during container creation using the `-e` flag with the `docker run` command. This approach is suitable for simple configurations. For more complex scenarios, environment variables can be defined in a `.env` file and passed to the container with the `--env-file` flag of `docker run`, or loaded by the application itself with a library such as `dotenv`.

This allows for better organization and management of environment variables, particularly in larger projects. Another method involves using Docker Compose, which allows defining environment variables within the `docker-compose.yml` file, providing a centralized location for managing configuration settings across multiple containers. Each method offers different levels of flexibility and control, depending on the complexity of the testing environment.
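To show how the first two approaches relate, the sketch below reads simple `KEY=VALUE` lines and turns them into repeated `-e` arguments. The parser is a simplified stand-in written for illustration and does not reproduce every rule Docker applies to env files; the variable names and `my-test-image` are placeholders.

```python
def parse_env_file(text):
    """Parse simple KEY=VALUE lines, skipping blanks and # comments."""
    variables = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        variables[key.strip()] = value.strip()
    return variables


def env_flags(variables):
    """Turn a mapping into repeated `-e KEY=VALUE` arguments for `docker run`."""
    flags = []
    for key, value in sorted(variables.items()):
        flags += ["-e", f"{key}={value}"]
    return flags


sample = "# test settings\nBASE_URL=http://app:8080\nBROWSER=chrome\n"
print(["docker", "run", "--rm", *env_flags(parse_env_file(sample)), "my-test-image"])
```

Building the command as a list rather than a single string avoids shell-quoting problems when a value contains spaces.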

Security and Access Control in Containerized Testing Environments

Security is paramount in any testing environment, and containerization offers several mechanisms to enhance security. Employing non-root users within containers reduces the potential impact of vulnerabilities. Limiting network access to only necessary ports and using appropriate network isolation techniques (e.g., Docker networks) further strengthens security. Where your platform supports it (for example, Kubernetes RBAC), role-based access control ensures that only authorized users can create, modify, or delete containers and images.

Regularly scanning container images for vulnerabilities using tools like Clair or Trivy is essential for proactive security management. By implementing these security best practices, you can create a robust and secure containerized testing environment.

Optimizing Test Automation with Containerization

Containerization revolutionizes test automation by addressing many persistent bottlenecks. By isolating dependencies and providing consistent environments, containers significantly improve efficiency, reliability, and scalability of your testing pipeline. This section explores specific optimization strategies enabled by this technology.

Common Bottlenecks Addressed by Containerization

Test automation often suffers from environment inconsistencies, dependency conflicts, and resource limitations. Containers directly tackle these issues. Inconsistent environments, where tests fail on one machine but pass on another due to differing software versions or configurations, are eliminated because containers provide a consistent, reproducible environment for each test run. Dependency conflicts, arising from incompatible libraries or software versions, are resolved through containerized isolation; each container gets its own set of dependencies, preventing clashes.

Finally, resource limitations, such as insufficient memory or CPU, are mitigated by efficient resource allocation within the containerized infrastructure, enabling parallel testing and better utilization of available hardware.

Strategies for Optimizing Resource Utilization

Efficient resource utilization is crucial for cost-effective and high-throughput test automation. Container orchestration platforms like Kubernetes allow for dynamic scaling of resources based on demand. This means that you can spin up additional containers only when needed for testing, then scale down when finished, avoiding unnecessary resource consumption. Furthermore, utilizing smaller, more lightweight container images reduces the overhead associated with running tests, freeing up resources for more tests.

Techniques such as using multi-stage builds for Docker images can drastically reduce image size, leading to faster deployments and reduced resource consumption. For example, a multi-stage build allows you to separate the build environment from the runtime environment, resulting in a smaller final image containing only the necessary components.

Monitoring and Troubleshooting Containerized Tests

Effective monitoring is vital for identifying and resolving issues promptly. Tools like Prometheus and Grafana provide comprehensive monitoring capabilities for containerized environments. They allow you to track resource usage (CPU, memory, disk I/O), container health, and test execution times. This data is invaluable for identifying bottlenecks and optimizing resource allocation. For troubleshooting, logging is essential.

Containers should be configured to produce detailed logs, which can be aggregated and analyzed using tools like the ELK stack (Elasticsearch, Logstash, Kibana) for efficient debugging and problem resolution. For example, if a test consistently fails within a container, reviewing the container logs can pinpoint the root cause, whether it’s a dependency issue, a configuration problem, or a bug in the test itself.
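Before reaching for a full log stack, a first pass over the output of `docker logs` can be scripted. The sketch below counts log lines by suspected root cause; the patterns and sample log lines are hypothetical and would need to be adjusted to what your stack actually logs.

```python
import re

# Hypothetical patterns; adjust them to what your stack actually logs.
CAUSES = {
    "dependency": re.compile(r"ModuleNotFoundError|ImportError|No matching distribution"),
    "configuration": re.compile(r"Connection refused|Name or service not known"),
    "test failure": re.compile(r"AssertionError|^FAILED "),
}


def classify(log_text):
    """Count log lines matching each suspected root-cause category."""
    counts = {cause: 0 for cause in CAUSES}
    for line in log_text.splitlines():
        for cause, pattern in CAUSES.items():
            if pattern.search(line):
                counts[cause] += 1
                break  # first matching category wins
    return counts


sample = """\
ModuleNotFoundError: No module named 'selenium'
FAILED tests/test_login.py::test_redirect - AssertionError
requests.exceptions.ConnectionError: Connection refused
"""
print(classify(sample))
```

A count dominated by one category gives a quick hint about where to look first: the image, the environment configuration, or the tests themselves.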

Best Practices Checklist for Optimizing Test Automation with Containers

Effective containerization requires careful planning and execution. Here’s a checklist of best practices:

- Use lightweight container images: Minimize image size for faster deployments and reduced resource consumption.

- Implement multi-stage builds: Separate build and runtime environments for smaller, more efficient images.

- Leverage container orchestration: Utilize platforms like Kubernetes for dynamic resource allocation and scaling.

- Implement robust logging: Configure containers to produce detailed logs for effective debugging.

- Utilize monitoring tools: Employ tools like Prometheus and Grafana to track resource usage and test execution.

- Employ CI/CD integration: Integrate containerized testing into your CI/CD pipeline for continuous feedback.

- Regularly update container images: Ensure you’re using the latest versions of your dependencies and base images to benefit from security patches and performance improvements.

Real-world Examples and Case Studies

Containerization has changed software development and deployment, and its impact on test automation is just as significant. Seeing is believing, so let’s explore some real-world examples where containerized test automation has delivered improvements in efficiency, scalability, and reliability. These examples highlight the practical benefits and address common challenges encountered during implementation. We’ll examine several case studies, focusing on how different organizations leveraged containerization to streamline their testing processes and achieve faster time-to-market.

We’ll also analyze the selection of container orchestration tools and the practical lessons learned from these experiences.

A Large E-commerce Platform’s Experience

A major e-commerce company migrated its test automation suite to a containerized environment using Kubernetes. Previously, their testing infrastructure was complex and prone to inconsistencies, leading to flaky tests and delayed releases. By containerizing their tests and deploying them on Kubernetes, they achieved significant improvements. Their test execution time was reduced by 40% due to parallel execution capabilities.

Furthermore, the consistent and reproducible environment ensured more reliable test results, reducing the time spent investigating false positives. The standardized setup simplified onboarding for new team members, improving overall team efficiency.

Microservices Architecture and Containerized Testing

A financial technology company adopting a microservices architecture found containerization essential for their testing strategy. Each microservice had its own set of dependencies and required a specific environment for testing. Docker containers provided isolated environments for each microservice, allowing for independent and parallel testing. This significantly sped up the testing cycle and improved the overall quality of the microservices.

The team utilized Docker Compose for managing the interconnected services during testing, simplifying the setup and teardown process.

Comparative Analysis of Container Orchestration Tools

Choosing the right container orchestration tool is crucial for successful containerized test automation. Here’s a comparison of Kubernetes and Docker Swarm, two popular choices:

| Feature | Kubernetes | Docker Swarm |
|---|---|---|
| Scalability | Highly scalable, suitable for large-scale deployments | Scalable, but generally less complex than Kubernetes |
| Complexity | Steeper learning curve, requires more expertise | Simpler to learn and manage, good for smaller projects |
| Features | Rich feature set including self-healing, rolling updates, and advanced networking | Fewer features compared to Kubernetes, but sufficient for many use cases |
| Community Support | Large and active community, extensive documentation and resources | Smaller community compared to Kubernetes |

The choice between Kubernetes and Docker Swarm (or other tools like Nomad or Rancher) depends on the scale and complexity of the testing environment. For smaller projects with simpler needs, Docker Swarm might be sufficient. For large-scale deployments with complex requirements, Kubernetes offers greater scalability and advanced features.

Practical Tips and Advice

Implementing containerized test automation requires careful planning and execution. Here are some practical tips learned from real-world experiences:

- Start Small: Begin with a small subset of your tests to validate the approach before migrating your entire suite.

- Choose the Right Tools: Select container orchestration and CI/CD tools that align with your team’s skills and project requirements.

- Automate Everything: Automate the process of building, deploying, and managing your containerized test environment.

- Monitor and Optimize: Regularly monitor your containerized test environment to identify and address performance bottlenecks.

- Version Control Everything: Maintain version control for your Dockerfiles, test scripts, and container images to ensure reproducibility and traceability.

“The key to success with containerized test automation is to embrace automation and standardization throughout the entire process.”

Future Trends and Considerations

Containerization has changed software development and deployment, and its impact on test automation is only going to grow. As the technology matures, we’ll see even more sophisticated applications and integrations, alongside new challenges that need addressing. This section explores the emerging trends, potential hurdles, and future directions of containerized test automation. The landscape of containerization is constantly evolving, with new technologies and approaches emerging regularly.

These advancements will directly influence how we approach test automation, leading to more efficient and robust testing pipelines. Understanding these trends is crucial for staying ahead of the curve and leveraging the full potential of containerization in testing.

Emerging Trends in Containerization and Their Impact on Test Automation

Serverless computing, coupled with container orchestration platforms like Kubernetes, is becoming increasingly prevalent. This allows for highly scalable and cost-effective test environments, automatically scaling resources up or down based on demand. Imagine a scenario where your automated tests run on a serverless platform, only consuming resources when actively executing tests. This significantly reduces infrastructure costs and improves resource utilization compared to maintaining always-on virtual machines.

Another emerging trend is the increased use of container registries with enhanced security features, allowing for more robust and secure management of test images and dependencies. This minimizes the risk of vulnerabilities and ensures the integrity of the testing process. Finally, the integration of AI and machine learning into containerized testing pipelines promises to automate even more aspects of the testing process, from test case generation to result analysis.

This could lead to significantly reduced testing times and improved accuracy.

Challenges and Limitations of Containerized Testing

While containerization offers numerous benefits, it also presents some challenges. One significant hurdle is the complexity of managing and orchestrating containers, especially in large-scale testing environments. Setting up and maintaining a robust Kubernetes cluster requires specialized skills and can be time-consuming. Another challenge is debugging issues within containers. Troubleshooting problems can be more difficult compared to traditional testing environments due to the isolated nature of containers.

Furthermore, the need for specialized knowledge and expertise in Docker, Kubernetes, and related technologies can create a barrier to entry for some teams. Finally, network latency within containerized environments can sometimes impact test execution speeds, especially when dealing with distributed testing across multiple containers. Proper network configuration and optimization are crucial to mitigate this.

Future Directions of Containerized Test Automation

The future of containerized test automation points towards greater integration with DevOps and CI/CD pipelines. We can anticipate more seamless integration with tools like Jenkins, GitLab CI, and Azure DevOps, enabling automated test execution as part of the continuous integration and delivery process. This will lead to faster feedback loops and quicker identification of bugs. Furthermore, the rise of cloud-native testing platforms will provide managed container services, simplifying the deployment and management of containerized test environments.

These platforms will abstract away much of the underlying infrastructure complexity, making containerized testing accessible to a wider range of teams. Finally, expect to see more sophisticated container image management tools, offering features like automated image building, vulnerability scanning, and version control for test images. This will enhance the security and reliability of the testing process.

Recommendations for Adopting Containerization in Test Automation

- Start small: Begin by containerizing a single component of your test environment, gradually expanding to encompass more aspects of your testing pipeline. This allows for a phased approach, minimizing disruption and facilitating a smoother transition.

- Choose the right tools: Select containerization and orchestration tools that align with your team’s expertise and infrastructure. Don’t jump into Kubernetes immediately if your team lacks the necessary skills; start with simpler solutions like Docker Compose if needed.

- Invest in training: Ensure your team has the necessary skills to effectively utilize containerization technologies. Provide training on Docker, Kubernetes, and related tools to ensure successful adoption.

- Establish clear processes and best practices: Define standardized procedures for building, managing, and deploying containerized test environments to maintain consistency and reproducibility. This includes clear guidelines for image versioning, dependency management, and security best practices.

Final Thoughts

So, there you have it – a journey into the world of containerized test automation. By embracing containers, you’re not just streamlining your testing process; you’re future-proofing it. The benefits – increased speed, improved efficiency, consistent environments, and effortless scalability – are undeniable. Take the leap, explore the possibilities, and watch your test automation soar to new heights.

Happy testing!

Top FAQs

What are the main drawbacks of using containers for test automation?

While incredibly beneficial, containerization isn’t a silver bullet. Learning curves exist, especially with orchestration tools like Kubernetes. Resource management needs careful consideration, and debugging can sometimes be more complex than in traditional setups. Security also needs careful planning to prevent vulnerabilities.

Can I use containers with any testing framework?

Yes, most popular testing frameworks are compatible with containerization. You can easily containerize frameworks like Selenium, JUnit, pytest, and many others. The key is creating a Docker image that includes all the necessary dependencies for your chosen framework.

How do I choose the right container orchestration tool?

The best tool depends on your needs. Docker Compose is great for smaller projects, while Kubernetes shines for large-scale, complex deployments. Consider factors like team expertise, project complexity, and scalability requirements when making your choice.