After Italy, Germany to Ban AI Chatbot Use

After Italy, Germany is poised to issue a ban on the use of a popular AI chatbot. This move has sent ripples through the tech world, sparking debates about data privacy, economic impact, and the future of AI regulation. Will other European nations follow suit? What are the potential consequences for businesses and individuals? Let’s dive in and explore this rapidly evolving situation.

The potential economic fallout is significant, with businesses reliant on this technology facing disruption. Concerns over data privacy and misuse are also paramount, driving the regulatory push. The ban raises critical questions about job displacement, societal impact, and the balance between technological advancement and responsible innovation. It’s a complex issue with far-reaching implications.

Potential Reasons for the Ban

Italy’s ban on ChatGPT and the one Germany is weighing, while seemingly drastic, highlight growing concerns surrounding the rapid advancement of AI technologies. These actions are not isolated incidents but part of a broader European debate on the ethical, economic, and security implications of large language models (LLMs). Understanding the rationale behind them requires examining several key factors.

Economic Impacts of a ChatGPT Ban

The potential economic impact of a ChatGPT ban in Germany and Italy is multifaceted. While some might argue that it protects domestic industries from foreign competition, the reality is far more nuanced. The ban could stifle innovation within these countries, hindering the development of AI-related businesses and potentially slowing the adoption of AI technologies across various sectors. Furthermore, the loss of access to a powerful tool like ChatGPT could impact productivity in numerous industries, from customer service and marketing to research and development.

For example, Italian businesses relying on ChatGPT for automated customer support might face increased operational costs and reduced efficiency. The long-term effect could be a loss of competitive edge in the global market. Germany, with its strong manufacturing base, could also see reduced efficiency in design and prototyping processes if access to ChatGPT’s capabilities is restricted.

Data Privacy Violations and Security Concerns

A primary driver behind the bans is the concern over data privacy violations. ChatGPT, like other LLMs, relies on vast amounts of data for training and operation. The potential for this data to be misused, especially where it includes personally identifiable information (PII), is a significant concern. For instance, if a user inputs sensitive information during a conversation, there’s a risk that this data could be inadvertently stored or processed in ways that violate data protection regulations like GDPR.

The lack of complete transparency regarding data handling practices within these systems further exacerbates these worries. The Italian data protection authority, Garante, specifically cited concerns about the lack of age verification and consent mechanisms as a key reason for the ban.

Examples of Misuse Cases Justifying Regulatory Measures

Several misuse cases illustrate the need for regulatory oversight. The generation of deepfakes and misinformation using LLMs is a significant threat. ChatGPT, while not explicitly designed for malicious purposes, could be easily exploited to create convincing fake news articles, impersonate individuals, or spread propaganda. Furthermore, its ability to generate convincing text and working code could be leveraged for malicious activities like creating phishing scams or developing malware.

These scenarios highlight the potential for harm and underscore the need for safeguards to prevent misuse. The spread of such content could severely damage public trust and even impact political processes.

Job Displacement and Societal Impact Arguments for the Ban

Concerns regarding job displacement and societal impact also contribute to the debate surrounding the ban. The automation potential of LLMs like ChatGPT raises anxieties about the future of work, particularly for roles involving repetitive tasks or data processing. While ChatGPT may also create new jobs, the transition period could lead to significant unemployment and social disruption if not managed effectively.

Moreover, the potential for biased outputs from LLMs, reflecting biases present in the training data, raises concerns about fairness and equity. This bias could perpetuate existing societal inequalities, further amplifying existing social divisions. These arguments highlight the need for a broader societal discussion about the responsible development and deployment of AI technologies.

Comparison of Regulatory Approaches Across the EU

Italy’s approach, and the one Germany appears ready to adopt, differs somewhat from that of other EU nations. While several countries are actively exploring regulatory frameworks for AI, an immediate ban represents a more proactive and stringent response. Other EU nations might favor a more gradual approach, focusing on developing guidelines and standards rather than outright bans. This difference in approach reflects varying perspectives on the balance between innovation and risk mitigation.

The long-term outcome will likely involve a harmonized EU-wide approach, but the current divergence highlights the complexities involved in regulating rapidly evolving technologies.

Impact on Businesses and Users

Italy’s ban on ChatGPT, and the one expected in Germany, sends ripples through various sectors, affecting businesses that integrated the technology into their workflows and individual users who relied on it for various tasks. The extent of the impact depends heavily on the degree of reliance on ChatGPT and the availability of suitable alternatives.

The effects on businesses are multifaceted. Companies using ChatGPT for customer service, content creation, data analysis, or market research face immediate disruptions. For example, a startup relying on ChatGPT to automate customer support might experience a significant drop in efficiency and customer satisfaction if forced to switch to a less efficient system. Larger companies with more robust internal resources might fare better, but they will still incur costs associated with transitioning to new technologies and retraining staff. The ban also raises concerns about data privacy and security, particularly for businesses that processed sensitive information through ChatGPT.

Consequences for Individual Users

Imagine a university student relying on ChatGPT to generate essay outlines and summarize complex research papers. The ban immediately disrupts their workflow, potentially leading to missed deadlines and reduced academic performance. Similarly, a freelance writer using ChatGPT to overcome writer’s block or generate initial drafts faces a significant setback, affecting their productivity and income. Individuals with disabilities who use ChatGPT for communication or accessibility purposes experience a direct and potentially severe impact on their daily lives.

The sudden loss of a readily available tool could create significant challenges and frustrations.

Alternative Technologies and Solutions

Several alternative technologies exist, offering functionality similar to ChatGPT, although with varying capabilities and limitations. These include other large language model products such as Google Bard, Jasper, or Cohere, as well as specialized tools designed for specific tasks such as content creation or code generation. Open-source language models also offer a degree of independence from proprietary systems.

Comparison of Alternatives

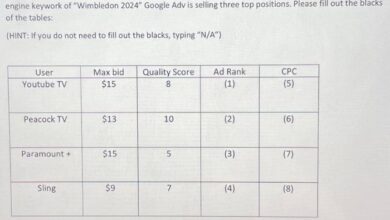

| Alternative | Advantages | Disadvantages | Cost |
|---|---|---|---|
| Google Bard | Widely accessible, integrates with Google ecosystem | Potentially less sophisticated than ChatGPT in certain areas, data privacy concerns | Free (currently) |
| Jasper | Specialized for marketing and content creation | Can be expensive, limited functionality outside its niche | Subscription-based, varying costs |
| Cohere | Strong focus on enterprise solutions, customizable models | Higher barrier to entry, requires technical expertise | Enterprise pricing, varies based on usage |
| Open-source LLMs (e.g., LLaMA) | Cost-effective, greater control over data and model | Requires technical expertise to deploy and maintain, potentially lower performance | Variable, mostly free but requires computing resources |

Potential for a Black Market

The ban on ChatGPT could inadvertently create a black market for access to the technology. Individuals and businesses might seek out unofficial methods to use ChatGPT, potentially compromising security and data privacy. This could lead to the proliferation of malicious actors offering compromised or insecure versions of the technology, exposing users to risks such as malware or data breaches.

The lack of regulation and oversight in such a market would create significant challenges for enforcement and consumer protection. This situation mirrors the historical development of black markets for other banned goods and services. For instance, the prohibition of alcohol in the US during the 1920s led to the rise of organized crime and widespread illegal distribution networks.

Similarly, a black market for ChatGPT could attract malicious actors and lead to unforeseen consequences.

Legal and Ethical Considerations

Italy’s ban on ChatGPT, and the one Germany is considering, raise complex legal and ethical questions surrounding artificial intelligence, data privacy, and freedom of speech. These measures, while seemingly drastic, highlight the urgent need for a robust legal framework to govern the development and deployment of powerful AI technologies. Understanding the legal basis for these actions, as well as the ethical implications, is crucial for navigating the future of AI.

The legal framework underlying the bans is multifaceted, drawing upon existing data protection laws and potentially extending into broader consumer protection and liability regulations.

In Italy’s case, the Garante per la protezione dei dati personali (Italian Data Protection Authority) cited violations of the General Data Protection Regulation (GDPR), specifically concerning the lack of transparency in data collection and processing, insufficient legal basis for data processing, and the absence of age verification mechanisms. Germany’s approach may differ slightly, but it is likely to rely on similar principles and legislation.

The specific laws and regulations involved will vary based on the jurisdiction, but the core concerns remain consistent across countries grappling with the regulation of AI.

Legal Challenges to the Ban

The bans are not without potential legal challenges. Companies like OpenAI could argue that the bans are disproportionate, lacking a sufficient legal basis, or infringe upon fundamental rights such as freedom of expression. Challenges could focus on the interpretation of existing laws in the context of AI, arguing that the current regulatory frameworks are inadequate for the nuances of AI technology.

For example, the GDPR focuses on the processing of personal data, but determining what constitutes “personal data” in the context of large language models (LLMs) like ChatGPT requires careful consideration. Further legal battles might involve the definition of responsibility in cases of misinformation or harmful outputs generated by the AI. The outcome of these challenges will likely shape the future regulatory landscape for AI.

Ethical Dilemmas Posed by the Ban

The bans present significant ethical dilemmas. Restricting access to a powerful tool like ChatGPT raises concerns about freedom of information and the potential for censorship. While concerns about misinformation and harmful content are valid, a complete ban may disproportionately affect users who rely on ChatGPT for legitimate purposes, such as research, education, or creative writing. Balancing the risks associated with AI with the benefits it offers is a critical ethical challenge.

The ban also raises questions about the pace of technological advancement and the ability of regulatory bodies to keep up with rapidly evolving technologies.

Comparison with Bans on Other Technologies

The bans on ChatGPT can be compared to past restrictions on other technologies, such as those imposed in the early days of the internet or the regulation of social media platforms. While the specific technologies differ, the underlying ethical considerations often overlap. Similar concerns about misinformation, harmful content, data privacy, and the potential for misuse have been raised in the past.

However, the scale and potential impact of AI technologies like ChatGPT are arguably greater, demanding more nuanced and comprehensive regulatory approaches. Learning from past experiences with technological regulation can inform the development of effective and ethically sound policies for AI.

Role of Data Protection Regulations

Data protection regulations, such as the GDPR, play a crucial role in the context of the ChatGPT bans. These regulations aim to protect individuals’ personal data from unauthorized collection, processing, and use. In the case of ChatGPT, concerns arise about the potential for the model to process and retain sensitive personal information, raising questions about compliance with data minimization, purpose limitation, and data security requirements.

The bans highlight the need for stronger enforcement of existing data protection regulations in the context of AI and the development of more specific guidelines for AI-related data processing. The ongoing debate about the application of GDPR to LLMs underscores the necessity for clear and consistent legal interpretations.

Political and Societal Implications

A ban on ChatGPT in Italy and Germany carries significant political and societal ramifications, extending far beyond the immediate impact on users and businesses. The ripple effects will be felt across public opinion, governmental trust, technological innovation, and international relations. Understanding these implications is crucial for navigating the complex landscape of AI regulation and its impact on democratic societies.

The potential political fallout is considerable. Public reaction will likely be divided, with some praising the ban as a necessary safeguard against misinformation and ethical concerns, while others will criticize it as an overreach of government power, stifling innovation and freedom of expression. This division could easily fuel political debate, potentially impacting upcoming elections and influencing policy decisions on future AI regulations.

Public Opinion and Political Debate

The ban’s impact on public trust will depend heavily on the government’s justification and communication strategy. Transparent and well-reasoned explanations emphasizing public safety and ethical considerations might garner support. Conversely, a poorly communicated or heavy-handed approach could erode public trust, fostering skepticism towards government regulation of technology and potentially leading to a decline in confidence in both the government’s competence and the technology itself.

We might see parallels to the public response to previous instances of government intervention in technology, such as the debates surrounding data privacy regulations or the censorship of online content.

Impact on Public Trust in Government and Technology

A poorly managed ban could create a scenario where citizens feel their digital freedoms are being curtailed without sufficient justification. This could lead to a decline in trust not only in the government’s ability to regulate technology effectively but also in the technology itself. For example, if the ban is perceived as arbitrary or disproportionate, it could fuel conspiracy theories and further distrust in AI and governmental oversight.

Conversely, a carefully considered and transparently implemented ban, coupled with clear communication about its rationale and ongoing review, could potentially increase public trust in the government’s commitment to responsible technological development.

Impact on Innovation and Technological Development

The ban could significantly hinder innovation within Germany and Italy’s tech sectors. Restricting access to a powerful AI tool like ChatGPT could stifle research and development in areas reliant on large language models. Startups and established companies might relocate their AI projects elsewhere, leading to a “brain drain” and a loss of competitive advantage in the global AI race.

This scenario could be similar to the impact of restrictive regulations in other industries, where companies choose to operate in more favorable jurisdictions.

Long-Term Societal Consequences

The long-term societal consequences of the ban are multifaceted and potentially far-reaching.

- Reduced access to information and educational resources: ChatGPT’s educational applications could be significantly hampered, limiting access to information and learning opportunities.

- Increased digital divide: The ban could disproportionately affect individuals and communities with limited access to alternative AI tools.

- Stifled technological advancement: The lack of access to and experimentation with advanced AI technologies could hinder the development of future innovations.

- Erosion of trust in AI: Overly restrictive regulations could lead to public distrust in the potential benefits of AI.

- Increased regulatory uncertainty: The ban could create uncertainty for businesses and researchers, making it difficult to plan for the future.

Impact on International Relations and Tech Sector Collaborations

The ban could strain international relations and collaborations in the tech sector. Other countries might view the ban as protectionist and retaliate with similar restrictions on German and Italian companies. This could lead to fragmentation in the global AI ecosystem, hindering international cooperation on AI safety and ethical guidelines. The EU’s efforts to establish a unified approach to AI regulation could also be complicated by differing national approaches to ChatGPT and similar technologies.

We might see a scenario similar to trade wars, where restrictive measures in one country trigger retaliatory actions from others, ultimately harming global economic growth and cooperation.

Future of AI Regulation

Italy’s ChatGPT ban, and the one Germany is poised to follow with, marks a significant turning point, forcing a re-evaluation of AI regulation’s trajectory. This isn’t simply about restricting one application; it’s about establishing a framework for managing the risks and potential benefits of increasingly powerful AI systems. The ripple effects will be felt globally, prompting a much-needed conversation about responsible AI development and deployment.

A Hypothetical Timeline for AI Regulation in Europe Following the Ban

The ban will likely accelerate the development of a comprehensive European AI Act. We can envision a phased approach: Within the next 12 months, expect intensified discussions and revisions to the proposed AI Act, incorporating lessons learned from the ChatGPT ban and similar incidents. Over the following two years (2024-2026), we’ll see the Act’s formal adoption and gradual implementation, starting with high-risk AI systems. The subsequent five years (2026-2031) will focus on enforcement, adaptation to technological advancements, and addressing emerging challenges.

This timeline is, of course, subject to political maneuvering and technological evolution. Similar timelines could be observed in other jurisdictions mirroring the EU’s approach.

Influence on Global AI Regulation

The European Union’s regulatory actions often set a precedent for other regions. This ban, coupled with the upcoming AI Act, could spur similar regulatory efforts in countries like Canada and Japan, as well as in other individual EU member states. We might see a global trend towards stricter data privacy rules and increased transparency requirements for AI systems. The US, while likely to adopt a less stringent approach initially, might face pressure to align with international standards to maintain competitiveness and avoid trade barriers.

This could lead to a fragmented regulatory landscape, where different regions have varying levels of control over AI, posing challenges for multinational companies.

A Visual Representation of the Future Regulatory Landscape

Imagine a world map. Europe is depicted with a clearly defined, multi-layered regulatory framework for AI, indicated by distinct color-coded zones representing different risk levels and regulatory requirements. North America shows a less structured approach, with some states implementing stricter rules than others, resulting in a patchwork of regulations. Asia and Africa exhibit a range of approaches, from relatively lax regulations to those mirroring the European model.

This illustrates the potential for a diverse and sometimes conflicting global regulatory landscape for AI.

International Cooperation in Establishing Global Standards

International cooperation will be crucial in navigating the complexities of AI regulation. Organizations like the OECD and the G20 will play a significant role in fostering dialogue and facilitating the development of common principles and standards. This will require addressing differing national interests and regulatory philosophies. Successful cooperation could lead to a more harmonized global approach, reducing regulatory fragmentation and promoting innovation.

However, significant challenges remain, including differing interpretations of ethical principles and the need for effective enforcement mechanisms.

Key Factors Shaping the Future of AI Regulation

Several key factors will shape the future of AI regulation: the pace of technological advancement, public perception and trust in AI, the lobbying efforts of technology companies, and the capacity of regulatory bodies to adapt to rapid changes. The balance between fostering innovation and mitigating risks will be a continuous challenge. Furthermore, the potential for AI to exacerbate existing societal inequalities will necessitate careful consideration and proactive measures to ensure equitable access and benefits.

The development and enforcement of effective regulatory frameworks are essential for guiding the future of AI responsibly.

Concluding Remarks

The impending ban in Germany, following Italy’s lead, marks a pivotal moment in the global conversation surrounding AI regulation. The economic and societal consequences are substantial, highlighting the need for careful consideration of both the benefits and risks of this rapidly advancing technology. The future of AI development and its integration into our lives hinges on finding a balance between innovation and responsible governance.

The coming months will be crucial in shaping the regulatory landscape, not just in Europe, but worldwide.

FAQ

What specific AI chatbot is being banned?

The ban targets ChatGPT, the large language model chatbot developed by OpenAI and known for its advanced capabilities.

How will the ban be enforced?

Enforcement details are still emerging, but it’s likely to involve a combination of legal action against companies offering the technology and blocking access through internet service providers.

What are the long-term implications for AI development in Europe?

The ban could slow down AI development in Europe, but it could also spur innovation in areas like responsible AI development and data privacy technologies.

Will this ban affect other countries?

The ban’s influence on other countries is uncertain. It might inspire similar regulations elsewhere, but it could also lead to a fragmented global AI landscape.