America to Offer Compensation to Deepfake Victims

America to offer compensation to victims of deepfake AI content: that’s a headline that grabbed my attention, and I bet it grabbed yours too! The rise of incredibly realistic deepfakes is terrifying, isn’t it? Imagine someone using AI to create a fake video of you doing something illegal or embarrassing, completely ruining your reputation and life.

This isn’t science fiction anymore; it’s a growing problem affecting real people, causing significant emotional distress and financial ruin, and even distorting political discourse. The US government is finally stepping up to address it and is exploring how to compensate those harmed by these sophisticated fakes. Let’s dive into the details.

This emerging crisis demands a multifaceted approach. We’ll look at the sheer scale of the deepfake problem in America, exploring the various legal loopholes and ethical dilemmas it presents. Then, we’ll examine potential compensation models – from government funds to civil lawsuits – and discuss the challenges in determining fair compensation amounts. We’ll also discuss the crucial role of tech companies in detecting and removing deepfakes, and the importance of public education to combat the spread of misinformation.

The Scale of the Problem

The proliferation of deepfake AI content in the United States presents a significant and growing threat to individuals and society. While precise figures are difficult to obtain because deepfakes are often created and distributed covertly, the problem is undeniably substantial and expanding rapidly, fueled by increasingly sophisticated AI technology and readily available online tools. The potential for misuse is vast, affecting everything from political discourse to personal relationships.

The harms caused by deepfake videos and images are multifaceted and far-reaching.

Reputational damage is perhaps the most immediate and devastating consequence. A single convincing deepfake video can irrevocably tarnish a person’s public image, leading to job loss, social ostracism, and even legal repercussions. Beyond reputational harm, deepfakes inflict significant emotional distress on victims. The violation of privacy and the feeling of being manipulated or impersonated can cause anxiety, depression, and even post-traumatic stress disorder.

Financially, deepfakes can be used to facilitate scams, fraud, and blackmail, resulting in substantial monetary losses for victims.

High-Profile Deepfake Cases in the US

Several high-profile cases illustrate the real-world impact of deepfakes in the United States. For instance, the spread of deepfake videos targeting prominent politicians during election cycles has raised concerns about the integrity of the democratic process. These videos, often designed to sow discord or influence voters, can significantly affect election outcomes. Similarly, deepfakes have been used in revenge porn scenarios, where intimate images or videos are manipulated and shared online without the victim’s consent, leading to severe emotional trauma and reputational damage.

In the business world, deepfakes have been used to impersonate executives in order to authorize fraudulent transactions or extract sensitive information. These cases highlight the urgent need for effective countermeasures and legal frameworks to address the growing threat of deepfakes.

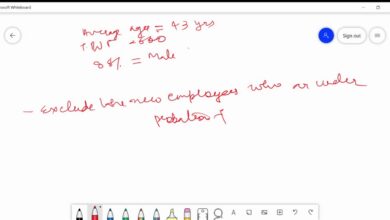

Deepfake Impact Across Demographics

The impact of deepfakes is not evenly distributed across different demographics in America. Certain groups may be disproportionately vulnerable due to factors such as social standing, access to resources, and existing societal biases. While comprehensive data is still limited, preliminary research suggests some trends.

| Demographic | Type of Deepfake | Impact | Number of Cases (Estimated) |
|---|---|---|---|
| Politicians | Political endorsements, fabricated speeches | Reputational damage, electoral influence | High (though exact numbers are difficult to verify) |
| Celebrities | Non-consensual pornography, fabricated scandals | Reputational damage, emotional distress, financial loss | Moderate to High (often unreported) |
| Business Executives | Fraudulent transactions, impersonation | Financial loss, reputational damage for companies | Moderate (often undisclosed due to reputational concerns) |
| Average Citizens | Revenge porn, blackmail, identity theft | Emotional distress, financial loss, reputational damage | High (vast majority likely unreported) |

Note: The “Number of Cases” column provides a qualitative assessment rather than precise figures, due to the underreporting and difficulty in tracking deepfake incidents. Further research is needed to accurately quantify the prevalence of deepfakes across different demographics.

Legal and Ethical Frameworks

The rise of deepfake technology presents a significant challenge to the existing legal and ethical frameworks in the United States. While some laws address aspects of deepfake misuse, significant gaps exist, necessitating a comprehensive reassessment of our legal and ethical responses to this rapidly evolving technology. The current landscape is a patchwork of existing legislation applied to new circumstances, leaving many victims without adequate recourse and perpetrators relatively unaccountable.

Current US laws addressing deepfakes are largely reactive rather than proactive.

Existing statutes, such as those related to defamation, fraud, and identity theft, are being used in attempts to prosecute those who create and distribute harmful deepfakes. However, the application of these laws is often complex and challenging, especially given the sophisticated nature of deepfake technology and the difficulties in establishing clear lines of causation and intent. For instance, proving that a deepfake was created with malicious intent to defraud or defame can be a considerable hurdle in court.

Jurisdictional questions surrounding online content distribution complicate matters further.

Existing Legal Mechanisms and Their Limitations

The existing legal landscape relies heavily on laws designed for other contexts. Defamation law, for example, requires public figures to prove actual malice (that the defendant knew the statement was false or acted with reckless disregard for the truth), which is hard to establish when the creator of a deepfake is anonymous. Similarly, fraud claims typically require proof of financial loss, a threshold that many instances of deepfake misuse, such as those causing reputational damage or emotional distress, may not meet.

The challenge lies in adapting these existing legal tools to the specific characteristics of deepfake technology, which often blurs the lines between reality and fabrication, making it difficult to determine culpability and establish harm. This is further complicated by the international nature of the internet, making it difficult to track down and prosecute perpetrators.

Loopholes and Shortcomings in Current Laws

A major loophole lies in the difficulty of identifying the creators and distributors of deepfakes. The anonymity offered by the internet, combined with the sophisticated techniques used to create and disseminate deepfakes, makes tracing the source of malicious content extremely challenging. Moreover, current laws often struggle to address the non-monetary harms caused by deepfakes, such as reputational damage, emotional distress, and the erosion of public trust.

Existing legal frameworks are often ill-equipped to handle the subtle yet significant ways in which deepfakes can manipulate public perception and influence political discourse. The lack of clear legal definitions of deepfakes and related terms further complicates the situation, leading to inconsistencies in enforcement and interpretation across different jurisdictions.

Ethical Considerations and the Need for Stricter Regulations

Beyond the legal challenges, deepfakes raise profound ethical concerns. The potential for misuse is vast, ranging from the creation of non-consensual pornography to the manipulation of elections and the spread of disinformation. The ease with which deepfakes can be created and disseminated raises concerns about the erosion of trust in media and the potential for widespread social disruption.

Ethical considerations necessitate a proactive approach to regulation, one that goes beyond simply reacting to individual instances of misuse. This includes addressing the ethical responsibilities of developers, distributors, and users of deepfake technology, fostering media literacy, and promoting the development of effective detection and mitigation technologies.

Comparison of Legal Approaches to Deepfakes in the US and Other Countries

The US legal approach to deepfakes, while still developing, is largely reactive and relies on adapting existing legislation. Other jurisdictions, such as the UK and the EU, are exploring more proactive regulatory frameworks, including specific laws targeting the creation and distribution of malicious deepfakes. Some countries are also investing heavily in detection technologies and public awareness campaigns.


A comparative analysis of these different approaches can offer valuable insights into potential strategies for strengthening the US legal framework and addressing the unique challenges posed by deepfake technology. The absence of a unified global approach, however, complicates efforts to regulate the technology effectively.

Compensation Models

The creation of a fair and effective compensation system for victims of deepfake-related harm is a complex undertaking, requiring careful consideration of various factors and the exploration of different models. This section will examine potential approaches, highlighting the challenges involved in assessing damages and proposing a framework for a robust compensation system. The goal is to provide a pathway to redress for individuals whose lives have been negatively impacted by this emerging technology.

Government Funding as a Compensation Source

Government funding represents a potential avenue for compensating victims of deepfakes. This approach could involve the creation of a dedicated fund, similar to those used for victims of other crimes or disasters. The fund could be financed through general taxation or specific levies on technology companies involved in the development or deployment of AI technologies. However, securing sufficient funding and establishing clear eligibility criteria would be significant challenges.

Political considerations, budgetary constraints, and the potential for differing interpretations of eligibility could significantly impact the feasibility and effectiveness of this model. Furthermore, the quantification of damages resulting from deepfake content, and ensuring equitable distribution of funds among victims, would require careful consideration. For example, a victim whose reputation has been severely damaged might require significantly more compensation than someone who experienced minor emotional distress.

Civil Lawsuits and Legal Recourse

Civil lawsuits offer another avenue for victims to seek compensation. Individuals harmed by deepfakes could sue the creators, distributors, or platforms that hosted the malicious content. This approach, however, presents several hurdles. Identifying the perpetrators of deepfakes can be challenging, particularly in cases involving sophisticated techniques or anonymous actors. Furthermore, proving causation—demonstrating a direct link between the deepfake and the harm suffered—can be complex and expensive.

The legal landscape surrounding deepfakes is still evolving, and the success of lawsuits would depend on the jurisdiction and on how courts interpret existing laws. For instance, a successful lawsuit might require demonstrating the creator’s intent to cause harm. Claims against the platform hosting the deepfake face an additional hurdle, because Section 230 of the Communications Decency Act generally shields online platforms from liability for content posted by their users. The cost and time involved in pursuing such litigation could also deter many victims.

Insurance Programs to Mitigate Deepfake Risks

The development of specialized insurance programs designed to cover deepfake-related damages offers a potentially proactive approach. These programs could cover a range of harms, including reputational damage, financial losses, and emotional distress. Insurance companies could assess risks based on factors such as the individual’s public profile, the nature of their profession, and the potential for them to become targets of deepfake attacks.

This model would require careful actuarial analysis to determine appropriate premiums and coverage limits. However, the relative novelty of deepfake technology and the difficulty in predicting the frequency and severity of related harms present challenges to the development of accurate risk assessment models. Moreover, ensuring fair and equitable access to such insurance programs would be crucial.

A Potential Compensation Framework

A comprehensive compensation framework should include clear eligibility criteria, a streamlined application process, and a robust dispute resolution mechanism. Eligibility could be determined based on verified evidence of harm, such as police reports, medical documentation, or expert witness testimony. The application process should be user-friendly and accessible to individuals with varying levels of technological literacy. A dedicated independent body, perhaps a government agency or a specialized tribunal, could be responsible for adjudicating disputes and determining the appropriate level of compensation.

This body would need expertise in assessing the impact of deepfake content and in handling complex legal and ethical issues.

Criteria for Determining Severity of Harm

Determining the severity of harm caused by deepfake content requires a multi-faceted approach. The following criteria could be considered:

- Extent of reputational damage: This includes loss of employment, social standing, and credibility.

- Financial losses: This encompasses direct losses such as lost income or business opportunities, and indirect losses such as legal fees or therapy costs.

- Emotional distress: This includes anxiety, depression, PTSD, and other psychological impacts.

- Physical harm: This encompasses any physical injuries directly resulting from the deepfake, though this is less common.

- Extent of dissemination: The wider the spread of the deepfake, the more significant the potential harm.

- Intent of the perpetrator: Malicious intent generally warrants higher compensation than accidental or unintentional creation and dissemination.
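To make these criteria concrete, here is a minimal Python sketch of how an adjudicating body might combine them into a single severity score. Everything in it is a hypothetical assumption for illustration: the 0 to 5 rating scale, the weights, the intent multipliers, and the names `HarmAssessment` and `severity_score` do not come from any actual US proposal.

```python
from dataclasses import dataclass

# Hypothetical weights for each criterion (they sum to 1.0);
# a real scheme would set these through policy, not code.
WEIGHTS = {
    "reputational": 0.30,
    "financial": 0.25,
    "emotional": 0.25,
    "physical": 0.10,
    "dissemination": 0.10,
}

# Hypothetical multipliers reflecting the perpetrator's intent.
INTENT_MULTIPLIER = {
    "malicious": 1.5,
    "reckless": 1.2,
    "unintentional": 1.0,
}


@dataclass
class HarmAssessment:
    """Each harm is rated on an assumed scale from 0 (none) to 5 (severe)."""
    reputational: int
    financial: int
    emotional: int
    physical: int
    dissemination: int
    intent: str  # one of the INTENT_MULTIPLIER keys


def severity_score(a: HarmAssessment) -> float:
    """Return a weighted severity score between 0 and 7.5 (5 x the largest multiplier)."""
    for name in WEIGHTS:
        rating = getattr(a, name)
        if not 0 <= rating <= 5:
            raise ValueError(f"{name} rating must be between 0 and 5, got {rating}")
    if a.intent not in INTENT_MULTIPLIER:
        raise ValueError(f"unknown intent: {a.intent}")
    # Weighted average of the harm ratings, scaled up for more culpable intent.
    base = sum(weight * getattr(a, name) for name, weight in WEIGHTS.items())
    return base * INTENT_MULTIPLIER[a.intent]


case = HarmAssessment(reputational=4, financial=2, emotional=5,
                      physical=0, dissemination=3, intent="malicious")
print(severity_score(case))  # approximately 4.875
```

A weighted sum keeps each criterion visible and adjustable, which matters if the scoring has to be explained to claimants or defended in a dispute; the intent multiplier mirrors the last criterion in the list.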

The Role of Technology Companies

The proliferation of deepfake content presents a significant challenge, and technology companies, particularly social media platforms, bear a substantial responsibility in addressing this issue. Their role extends beyond simply being conduits for information; they are active participants in shaping the online environment and have the resources and influence to mitigate the harms caused by deepfakes. This requires a multifaceted approach involving proactive detection, effective removal mechanisms, and the development of more robust AI-powered solutions.

Technology companies facilitate the spread of deepfake content through their vast networks and algorithms.

The ease with which videos and images can be shared across platforms like Facebook, X (formerly Twitter), and YouTube, coupled with the often-viral nature of shocking or sensational content, creates fertile ground for deepfakes to proliferate rapidly. Furthermore, sophisticated recommendation algorithms can inadvertently amplify the reach of deepfakes by suggesting them to users based on their viewing history or other factors.

This unintentional amplification significantly contributes to the problem’s scale.

Strategies for Deepfake Detection and Removal

Effective strategies for combating deepfakes require a combination of technological and human-driven approaches. Technology companies should invest in advanced AI-powered detection tools that go beyond simple visual analysis. These tools need to incorporate contextual analysis, considering factors such as the source of the content, the user’s history, and any accompanying metadata. Furthermore, they should implement robust reporting mechanisms that allow users to flag suspicious content easily and efficiently.

Human review teams are also crucial for validating flagged content and ensuring that legitimate content is not mistakenly removed. A layered approach combining technological and human oversight is essential.
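The layered approach can be sketched as a simple routing rule: an automated detector assigns each flagged item a probability of being synthetic, only the highest-confidence cases are removed automatically, and the ambiguous middle band (or anything users report repeatedly) goes to human reviewers. This is a hypothetical Python sketch; the `detector_score` input, the threshold values, and the route names are assumptions, not any platform’s actual policy.

```python
from enum import Enum


class Route(Enum):
    REMOVE = "remove"              # high-confidence deepfake: take down, allow appeal
    HUMAN_REVIEW = "human_review"  # uncertain: a human moderator decides
    KEEP = "keep"                  # low likelihood: leave the content up


def route_flagged_content(detector_score: float,
                          user_reports: int,
                          remove_threshold: float = 0.95,
                          review_threshold: float = 0.60,
                          report_escalation: int = 3) -> Route:
    """Decide what happens to a flagged item.

    detector_score: assumed probability (0 to 1) from an automated detector
    that the item is synthetic. user_reports: number of user flags.
    All thresholds are illustrative placeholders.
    """
    if not 0.0 <= detector_score <= 1.0:
        raise ValueError("detector_score must be between 0 and 1")
    if detector_score >= remove_threshold:
        return Route.REMOVE
    # A borderline detector score or repeated user reports sends the item
    # to a human, so legitimate content is not removed automatically.
    if detector_score >= review_threshold or user_reports >= report_escalation:
        return Route.HUMAN_REVIEW
    return Route.KEEP


print(route_flagged_content(0.70, user_reports=0))  # Route.HUMAN_REVIEW
```

Reserving automatic removal for the top band reflects the point above: human oversight is what protects legitimate content from being taken down by mistake.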

Improving AI-Powered Detection Tools

Current AI-powered deepfake detection tools are constantly evolving, but they still face significant challenges in keeping pace with the increasing sophistication of deepfake creation techniques. One area of improvement lies in the development of more robust algorithms that can identify subtle inconsistencies in facial expressions, body language, and other visual cues that may be missed by current methods. Another crucial area is the integration of multimodal analysis, which combines visual analysis with audio analysis to provide a more comprehensive assessment of the authenticity of a video or image.

Finally, continuous training of these AI models with a diverse and constantly updated dataset of both real and deepfake content is essential to maintain their effectiveness. For example, advancements in generative adversarial networks (GANs) used to create deepfakes necessitate equally advanced detection methods.

Best Practices for Minimizing Deepfake Creation and Dissemination

Technology companies should adopt a set of best practices to minimize the creation and dissemination of deepfakes. This includes:

- Investing in research and development of advanced detection technologies.

- Implementing stricter content moderation policies that prioritize the removal of deepfakes.

- Developing educational resources for users to help them identify and report deepfakes.

- Collaborating with researchers, law enforcement, and other stakeholders to share information and best practices.

- Promoting media literacy initiatives to enhance public awareness of deepfakes and their potential impact.

- Working with content creators to develop methods for authenticating their content and protecting it from being misused to create deepfakes.

Implementing these best practices will require significant investment and effort from technology companies, but the risks of unchecked deepfake proliferation far outweigh the costs of prevention. Collaboration across industries and governments is crucial in the fight against misinformation and the harm it causes.

Public Awareness and Education

The proliferation of deepfake technology necessitates a robust public awareness campaign to mitigate its harmful effects. Educating the public about the technology’s capabilities and potential for misuse is crucial in empowering individuals to critically evaluate online information and protect themselves from manipulation. This involves a multi-pronged approach encompassing media literacy training, readily accessible resources, and collaborative efforts between governments, technology companies, and educational institutions.

Effective strategies for educating the public must be multifaceted and easily accessible.

We need to move beyond simple warnings and delve into practical skills that equip individuals to identify and counter deepfakes. This involves understanding the technology’s mechanics and recognizing common telltale signs.

Deepfake Identification Techniques

Understanding how deepfakes are created is the first step toward identifying them. Deepfakes manipulate existing videos and images, often using artificial intelligence to seamlessly replace faces or create entirely fabricated scenes. However, these manipulations often leave subtle clues: inconsistent lighting, unnatural blinking patterns, or irregular facial expressions and skin texture can all indicate a deepfake.

Additionally, analyzing the audio for inconsistencies with lip movements or unusual background noise can help to spot fakes. A visual representation of this process could show a flowchart, starting with a video being examined, then branching into checks for inconsistencies in lighting, blinking, facial expressions, and audio synchronization. The flowchart would end with a conclusion indicating whether the video is likely a deepfake or not.
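The flowchart amounts to a checklist that tallies warning signs. Below is a minimal Python sketch of that logic; the check names mirror the cues listed in this section, while the `VideoObservations` structure and the two-sign threshold are hypothetical choices for illustration. It models a viewer’s manual review, not an automated detector.

```python
from dataclasses import dataclass, fields


@dataclass
class VideoObservations:
    """Answers a viewer records while examining a clip (True = warning sign seen)."""
    inconsistent_lighting: bool = False
    unnatural_blinking: bool = False
    odd_facial_expressions_or_skin: bool = False
    audio_out_of_sync_with_lips: bool = False
    unusual_background_noise: bool = False


def assess(obs: VideoObservations, threshold: int = 2) -> tuple[str, list[str]]:
    """Walk every check in the flowchart and return a verdict plus the signs found.

    The threshold (how many warning signs before calling a clip a likely
    deepfake) is an arbitrary illustrative value.
    """
    # fields() yields the checks in the order they are declared above.
    signs = [f.name for f in fields(obs) if getattr(obs, f.name)]
    if len(signs) >= threshold:
        verdict = "likely deepfake"
    else:
        verdict = "no strong evidence of manipulation"
    return verdict, signs


verdict, signs = assess(VideoObservations(unnatural_blinking=True,
                                          audio_out_of_sync_with_lips=True))
print(verdict, signs)
```

Returning the list of signs alongside the verdict makes the reasoning visible, which suits an educational tool: the point is to teach people which cues to look for, not to hand them an opaque yes or no.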

Public Awareness Campaigns

Successful public awareness campaigns should employ diverse media channels to reach a broad audience. These campaigns could utilize short, easily digestible videos demonstrating deepfake identification techniques, distributed through social media platforms. They could also incorporate interactive online modules and workshops teaching critical thinking skills and media literacy. Partnerships with social media companies to flag and remove deepfake content, along with the development of easy-to-use detection tools, would also be vital.

One example of an effective campaign could be a series of short, animated videos on YouTube, each focusing on a specific telltale sign of a deepfake. Another could be an interactive online quiz that tests users’ ability to spot deepfakes in various scenarios.

The Importance of Media Literacy

Media literacy is paramount in the age of deepfakes. It equips individuals with the critical thinking skills necessary to evaluate the credibility of online information. This involves understanding the context of information, verifying sources, and recognizing biases. Media literacy programs should be integrated into school curricula from an early age, teaching children how to identify misinformation and disinformation, and how to think critically about what they see and hear online.

Such programs could include practical exercises and case studies illustrating how deepfakes are used to spread misinformation and manipulate public opinion. Furthermore, the development of national media literacy initiatives, supported by government funding and collaboration with educational institutions and media organizations, is essential. This ensures a consistent and comprehensive approach to media literacy education across the nation.

Epilogue: America to Offer Compensation to Deepfake Victims

The fight against deepfakes is a battle on multiple fronts. While offering compensation to victims is a crucial step towards justice and healing, it’s only one piece of the puzzle. We need stronger laws, more effective AI detection tools, and a much more informed public to truly combat this threat. It’s a complex issue, but one we absolutely must tackle.

The future of trust and online safety depends on it. Let’s hope that America’s commitment to compensating victims marks a turning point in the fight against deepfake-related harm.

Answers to Common Questions

What types of deepfakes are causing the most harm?

Currently, deepfakes involving revenge porn, defamation, and political manipulation seem to be causing the most significant harm.

How will the government determine who is eligible for compensation?

That’s still being worked out, but it will likely involve a rigorous process of verifying that the content is in fact a deepfake and proving the harm it caused the victim.

What if the creator of the deepfake is unknown?

This presents a major challenge. The government might need to explore alternative avenues for compensation, potentially focusing on the platforms where the deepfakes were shared.

Will insurance companies cover deepfake-related damages?

Potentially, but it’s still an evolving area. Insurance policies might need to be updated to specifically address deepfake-related harm.