Architecture Matters When It Comes to SSE

Architecture matters when it comes to SSE – it’s not just about choosing the right tools, it’s about building a system that’s robust, scalable, and secure. From microservices to monolithic architectures, the choices we make fundamentally impact performance, security, and maintainability. This post dives deep into the architectural considerations crucial for successful Server-Sent Events (SSE) implementation, exploring best practices and potential pitfalls along the way.

We’ll examine how different architectural patterns influence everything from real-time chat applications to high-throughput data streaming systems.

We’ll cover crucial aspects like choosing between various architectural styles, implementing security measures, optimizing for scalability, and handling the unique challenges of SSE, such as managing disconnections and reconnections. We’ll also look at practical examples and explore how different technologies can be leveraged to build efficient and reliable SSE systems. Get ready to level up your understanding of SSE architecture!

The Impact of Architectural Styles on SSE Performance

Server-Sent Events (SSE) rely heavily on efficient, persistent connections between the server and the client. The architecture chosen for implementing an SSE system significantly impacts its performance, scalability, and overall reliability. Different architectural styles present distinct trade-offs, and understanding these is crucial for building robust and high-performing SSE applications. Microservices and monolithic architectures represent two contrasting approaches, each with its own implications for SSE implementation.

Let’s delve into how architectural choices influence the performance characteristics of SSE systems.

Microservices Architecture and SSE Performance

In a microservices architecture, the SSE functionality might be distributed across multiple independent services. This approach offers benefits in terms of scalability and maintainability, allowing individual services to be scaled independently based on demand. However, managing multiple persistent connections across different services introduces complexity. A bottleneck could arise from the overhead of inter-service communication, particularly if the SSE data requires aggregation from multiple services.

For instance, imagine an e-commerce application where product updates (SSE) are handled by one service, and order updates by another. The client might need to maintain separate connections to each service, increasing latency and complexity. Optimizations could involve using a message broker (discussed later) to consolidate SSE updates from different services before delivering them to the client, thus reducing the number of connections.
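One way to picture the consolidation step is through the SSE wire format itself, which lets a single stream carry several named event types. The sketch below is only an illustration: the `format_sse` helper, the `product` and `order` event names, and the payloads are all assumptions made for this example.

```python
import json
from typing import Optional


def format_sse(data: dict, event: Optional[str] = None,
               event_id: Optional[str] = None) -> str:
    """Serialize one message in the text/event-stream wire format."""
    lines = []
    if event_id is not None:
        lines.append(f"id: {event_id}")
    if event is not None:
        lines.append(f"event: {event}")
    # json.dumps emits no raw newlines by default, so one data line suffices
    lines.append(f"data: {json.dumps(data)}")
    # A blank line terminates the message
    return "\n".join(lines) + "\n\n"


# Hypothetical updates from two services, multiplexed onto one connection
stream = (
    format_sse({"sku": "A1", "price": 9.99}, event="product")
    + format_sse({"order": 42, "status": "shipped"}, event="order")
)
```

In the browser, a client can then register a separate listener per event name on one `EventSource` instead of opening one connection per service.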

Monolithic Architecture and SSE Performance

A monolithic architecture, in contrast, houses all SSE functionality within a single application. This simplifies the management of connections and data aggregation, potentially leading to better performance in simpler applications. However, scaling becomes a challenge as the entire application needs to be scaled up, even if only one part (e.g., the SSE component) experiences high demand. A further risk is that the monolithic application is itself a single point of failure.

If the application crashes, all SSE connections are disrupted. Optimizations might involve techniques like load balancing and horizontal scaling, but these are often less efficient than scaling individual microservices. For example, a simple news website with a single SSE stream for updates could easily use a monolithic architecture without performance issues. However, if it expands to include many streams and features, a monolithic design could easily become a bottleneck.

Hypothetical SSE-Optimized System Architecture

For a hypothetical system designed for optimal SSE performance, a hybrid approach might be most effective. A central message broker (e.g., Kafka or RabbitMQ) would act as a hub for all SSE updates. Individual microservices would publish updates to the broker, which would then fan out the updates to clients subscribed to specific SSE streams. This architecture combines the scalability of microservices with the streamlined data delivery of a centralized hub.

We could utilize technologies like Node.js for its non-blocking I/O capabilities to handle a large number of concurrent SSE connections efficiently. With a durable broker configuration, events can still be delivered even if individual services temporarily fail. In-memory data stores like Redis could be used for caching frequently accessed data, reducing database load and improving response times.
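The fan-out step in this design can be sketched in a few lines. The example below is an in-process stand-in rather than a real broker client: the `Hub` class, the stream names, and the policy of dropping slow subscribers are assumptions chosen for illustration. In a real deployment the `publish` call would be driven by messages consumed from Kafka or RabbitMQ.

```python
import asyncio
from collections import defaultdict


class Hub:
    """In-process stand-in for the broker-to-connection fan-out step."""

    def __init__(self, max_pending: int = 100) -> None:
        self._subscribers = defaultdict(set)
        self._max_pending = max_pending

    def subscribe(self, stream: str) -> asyncio.Queue:
        # One bounded queue per open SSE connection
        queue = asyncio.Queue(maxsize=self._max_pending)
        self._subscribers[stream].add(queue)
        return queue

    def unsubscribe(self, stream: str, queue: asyncio.Queue) -> None:
        self._subscribers[stream].discard(queue)

    def publish(self, stream: str, message: str) -> int:
        """Deliver to every subscriber of the stream; return how many got it."""
        delivered = 0
        for queue in list(self._subscribers[stream]):
            try:
                queue.put_nowait(message)
                delivered += 1
            except asyncio.QueueFull:
                # Assumed policy: drop a slow client rather than stall the rest
                self._subscribers[stream].discard(queue)
        return delivered


async def demo() -> list:
    hub = Hub()
    a = hub.subscribe("prices")
    b = hub.subscribe("prices")
    c = hub.subscribe("orders")
    hub.publish("prices", "AAPL 190.1")
    return [a.get_nowait(), b.get_nowait(), c.qsize()]


result = asyncio.run(demo())
```

Each SSE request handler would read from its own queue and write the messages to the response. The bounded queue is a design choice: it keeps one stalled client from consuming unbounded memory.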

The Role of Message Queues and Pub/Sub Systems in SSE Architecture

Message queues and pub/sub systems are essential for building scalable and reliable SSE architectures. They decouple event producers (microservices) from event consumers (clients), allowing independent scaling and fault tolerance. A pub/sub system allows services to publish updates to a topic, and clients subscribe to specific topics to receive only the relevant updates. This significantly reduces the load on individual services and improves the overall system’s resilience.

For example, if a consuming service temporarily fails, a broker with durable queues or a retained log can buffer the updates until the service recovers, reducing the risk of data loss. Message queues provide similar benefits but typically involve point-to-point communication rather than the publish-subscribe model. The choice between them depends on the specific requirements of the SSE system. In scenarios with many subscribers, a pub/sub system offers superior scalability.
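The difference between the two delivery models is easy to see in a toy example. The two functions below are illustrative only and do not mirror any particular broker's API. The round-robin assignment in the queue case is an assumption, since real brokers choose a consumer in various ways.

```python
from itertools import cycle


def deliver_queue(messages, consumers):
    """Point-to-point: each message goes to exactly one consumer."""
    inbox = {name: [] for name in consumers}
    # Round-robin is assumed here; only the "exactly one" property matters
    for message, name in zip(messages, cycle(consumers)):
        inbox[name].append(message)
    return inbox


def deliver_pubsub(messages, subscribers):
    """Publish-subscribe: every subscriber receives every message."""
    return {name: list(messages) for name in subscribers}


events = ["m1", "m2", "m3"]
queue_result = deliver_queue(events, ["a", "b"])
pubsub_result = deliver_pubsub(events, ["a", "b"])
```

For SSE, where every connected client watching a stream should see every event, the second shape is usually the one that matches.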

Security Considerations in SSE Architecture

Server-Sent Events (SSE) offer a simple and efficient way for servers to push updates to clients, but this simplicity can mask some significant security vulnerabilities. A robust SSE architecture requires careful consideration of authentication, authorization, and data protection to prevent unauthorized access and data breaches. This section will explore these vulnerabilities and mitigation strategies, along with best practices for secure implementation.

Potential Security Vulnerabilities in SSE Architectures

Several architectural patterns inherent to SSE can introduce security risks. For instance, a lack of proper authentication can allow unauthorized clients to connect and receive sensitive data. Similarly, insufficient authorization controls could permit clients access to data beyond their privileges. Furthermore, the persistent nature of SSE connections, if not managed correctly, can lead to denial-of-service (DoS) attacks.

Finally, failure to encrypt the SSE stream leaves the data vulnerable to eavesdropping and manipulation.

Mitigation Strategies for SSE Vulnerabilities

Addressing these vulnerabilities requires a multi-layered approach. Robust authentication mechanisms, such as OAuth 2.0 or JWT (JSON Web Tokens), should be implemented to verify client identity before granting access to the SSE stream. Fine-grained authorization controls, based on roles or permissions, ensure that clients only receive data they are entitled to. Rate limiting and connection timeouts can help mitigate DoS attacks.

Finally, always encrypt the SSE stream using HTTPS to protect data in transit from eavesdropping and tampering. Implementing these measures significantly reduces the risk of various attacks.
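As a rough illustration of the rate-limiting idea, the sketch below applies a token bucket to connection attempts. The `TokenBucket` class and its parameters are assumptions made for this example. A production system would more likely rely on the limiter built into its gateway or load balancer.

```python
import time


class TokenBucket:
    """Allows bursts up to `capacity`, refilling at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: int, clock=time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self._clock = clock  # injectable so the behaviour can be tested
        self._tokens = float(capacity)
        self._last = clock()

    def allow(self) -> bool:
        """Return True if one more connection attempt may proceed."""
        now = self._clock()
        # Refill in proportion to elapsed time, never above capacity
        elapsed = now - self._last
        self._tokens = min(self.capacity, self._tokens + elapsed * self.rate)
        self._last = now
        if self._tokens >= 1:
            self._tokens -= 1
            return True
        return False
```

One bucket per client identifier (for example, per authenticated user or per source address) would let the server reject reconnect storms with an HTTP 429 before opening a stream.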

Authentication and Authorization Mechanisms in SSE

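Implementing secure authentication and authorization is crucial. One common approach involves using JWTs. The server generates a JWT containing user information and permissions upon successful authentication. This token is then included in the SSE connection request. Because the browser's EventSource API cannot set custom request headers, the token typically travels in a cookie or a query parameter, or in an Authorization header when a fetch-based client is used. The server verifies the token when the connection is opened and again on every reconnect, ensuring only authenticated users can access the SSE stream.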

Authorization can be implemented by embedding permissions within the JWT or by consulting a separate authorization service based on the user’s identity and the requested data. This allows for granular control over data access, ensuring data privacy and integrity. For example, a financial application might use JWTs to grant different levels of access to trading data based on user roles.
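To make the token check concrete, here is a minimal HS256 sketch built only on the standard library. It is meant to show the verification steps, not to replace a vetted JWT library. The `streams` claim, the `may_subscribe` helper, and the shared-secret setup are assumptions for this example.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def _b64url(raw: bytes) -> str:
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def sign_token(claims: dict, secret: bytes) -> str:
    header = {"alg": "HS256", "typ": "JWT"}
    signing_input = ".".join(
        _b64url(json.dumps(part, separators=(",", ":")).encode())
        for part in (header, claims)
    )
    digest = hmac.new(secret, signing_input.encode(), hashlib.sha256).digest()
    return f"{signing_input}.{_b64url(digest)}"


def verify_token(token: str, secret: bytes,
                 now: Optional[float] = None) -> Optional[dict]:
    """Return the claims if signature and expiry check out, else None."""
    try:
        header_b64, payload_b64, signature_b64 = token.split(".")
        header = json.loads(_b64url_decode(header_b64))
        claims = json.loads(_b64url_decode(payload_b64))
        signature = _b64url_decode(signature_b64)
    except (ValueError, TypeError):
        return None
    if not isinstance(header, dict) or not isinstance(claims, dict):
        return None
    if header.get("alg") != "HS256":  # never trust the token's own alg choice
        return None
    expected = hmac.new(secret, f"{header_b64}.{payload_b64}".encode(),
                        hashlib.sha256).digest()
    if not hmac.compare_digest(signature, expected):
        return None
    exp = claims.get("exp")
    current = time.time() if now is None else now
    if not isinstance(exp, (int, float)) or exp <= current:
        return None
    return claims


def may_subscribe(claims: dict, stream: str) -> bool:
    # "streams" is a hypothetical claim listing the streams a user may read
    return stream in claims.get("streams", [])
```

An SSE handler would call `verify_token` before sending any headers for the stream, then `may_subscribe` for the requested stream, and close the request with 401 or 403 if either check fails.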

Secure Design Patterns for SSE

Several secure design patterns can enhance SSE security. A common pattern involves using a dedicated authentication and authorization service separate from the SSE server. This separation improves maintainability and security by centralizing security logic. Another pattern is to incorporate data validation and sanitization at both the server and client sides. This prevents malicious data from disrupting the SSE stream or causing vulnerabilities.

Finally, implementing logging and monitoring mechanisms provides crucial insights into connection attempts, data access, and potential security breaches. This allows for proactive threat detection and response.

Security Checklist for Evaluating SSE Architecture Robustness

A comprehensive security checklist is essential for evaluating the robustness of an SSE architecture. This checklist should encompass various aspects of security, from authentication and authorization to data protection and logging.

| Category | Vulnerability | Control |
|---|---|---|
| Authentication | Unauthorized access | Implement robust authentication (e.g., OAuth 2.0, JWT) |
| Authorization | Data leakage | Implement fine-grained authorization based on roles/permissions |
| Data Protection | Eavesdropping/Tampering | Encrypt the SSE stream using HTTPS |
| DoS Protection | Denial of service | Implement rate limiting and connection timeouts |
| Logging and Monitoring | Security breaches | Implement comprehensive logging and monitoring |
| Input Validation | Injection attacks | Validate and sanitize all client inputs |

Scalability and Maintainability of SSE Architectures

Building scalable and maintainable Server-Sent Events (SSE) architectures is crucial for applications requiring real-time updates to numerous clients. Ignoring these aspects can lead to performance bottlenecks, increased downtime, and ultimately, a poor user experience. This section explores strategies for achieving both scalability and maintainability in SSE systems.

Horizontal Scaling in SSE Architectures

Horizontal scaling involves adding more servers to handle increased load, rather than increasing the capacity of a single server. In the context of SSE, this means distributing the load of managing client connections across multiple servers. Load balancers play a vital role here, distributing incoming connections evenly across available servers. For instance, a load balancer like HAProxy or Nginx can receive SSE connection requests and forward them to different backend servers based on a chosen algorithm (e.g., round-robin, least connections).

This prevents any single server from becoming overloaded. Further enhancing scalability, distributed caching mechanisms like Redis or Memcached can store frequently accessed data, reducing the load on backend databases and improving response times. For example, if your SSE application involves sending stock prices, caching the most recent prices can significantly reduce database queries and improve the speed of updates to connected clients.
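Because SSE connections stay open for a long time, the balancing algorithm matters more than it does for short requests. The sketch below shows the least-connections idea in isolation. The `LeastConnectionsBalancer` class and the server names are hypothetical, and real load balancers such as HAProxy or Nginx implement this internally.

```python
class LeastConnectionsBalancer:
    """Routes each new connection to the server with the fewest open streams."""

    def __init__(self, servers) -> None:
        self.active = {name: 0 for name in servers}

    def acquire(self) -> str:
        # min() returns the first minimum, so ties go to the earliest-listed server
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        # Called when a client disconnects
        self.active[server] = max(0, self.active[server] - 1)


lb = LeastConnectionsBalancer(["s1", "s2", "s3"])
first_four = [lb.acquire() for _ in range(4)]
lb.release("s2")
next_server = lb.acquire()
```

Round-robin only counts arrivals, so it can leave one server holding many more long-lived streams than its peers after uneven disconnects. Tracking open connections avoids that drift.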

Challenges of Maintaining and Updating SSE Systems

Maintaining and updating an SSE system presents unique challenges. The continuous nature of the connections means that any downtime during upgrades can disrupt the real-time data stream for all connected clients. Strategies for minimizing downtime include employing blue-green deployments or canary deployments. Blue-green deployments involve running two identical environments (blue and green). Updates are deployed to the green environment, which is then switched over to become the live environment once testing is complete.

The old blue environment remains as a backup. Canary deployments involve gradually rolling out updates to a small subset of users before deploying them to the entire user base. This allows for early detection and mitigation of any unforeseen issues. Furthermore, robust monitoring and logging are essential for proactively identifying and addressing potential problems before they escalate into major outages.

Regular automated testing, encompassing both functional and performance tests, is vital for ensuring the stability and reliability of the system after updates.

Data Persistence Strategies in SSE Architectures

Choosing the right data persistence strategy is crucial for the performance and scalability of an SSE architecture. Several options exist, each with its own trade-offs. Relational databases like PostgreSQL or MySQL offer strong data consistency and ACID properties but can become bottlenecks under high write loads. NoSQL databases like MongoDB or Cassandra, on the other hand, are better suited for handling high volumes of data and high write throughput but may sacrifice some data consistency.

Message queues like Kafka or RabbitMQ can be used to decouple the SSE application from the data persistence layer, allowing for better scalability and resilience. For instance, new events can be written to a message queue, and the SSE application can consume these events independently, allowing the system to scale horizontally by adding more consumers. The choice depends on factors such as the volume of data, required consistency levels, and the specific requirements of the application.

Comparison of SSE Architectures

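The following table compares three approaches that come up when designing SSE systems: Event Sourcing and Reactive Streams as server-side patterns for producing the stream, and Long Polling as the older alternative to a persistent SSE connection.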

| Architecture | Scalability | Maintainability | Complexity |
|---|---|---|---|
| Event Sourcing | High; easily scales horizontally by adding event processors. | Moderate; requires careful event handling and replay mechanisms. | High; complex to implement and manage. |
| Reactive Streams | High; leverages asynchronous, non-blocking operations. | Moderate; requires careful management of backpressure. | Medium; relatively easier to implement than Event Sourcing. |
| Long Polling | Low; prone to connection timeouts and inefficient resource utilization. | Low; simpler to implement but less scalable. | Low; easiest to implement but least scalable. |

Choosing the Right Architecture for Specific SSE Use Cases

Selecting the appropriate architecture for Server-Sent Events (SSE) is crucial for optimal performance, scalability, and security. The ideal design depends heavily on the specific application requirements, particularly the nature of the data being streamed and the expected number of concurrent users. Different use cases demand different architectural considerations.

SSE Architecture for Real-time Chat Applications

Real-time chat applications require low-latency, bi-directional communication. SSE excels at unidirectional updates from server to client, so a chat system built on it needs a second channel for client-to-server messages. The server would use SSE to push new messages to connected clients. A separate mechanism, typically ordinary HTTP requests such as POST, would handle the client sending messages to the server; if a single bidirectional channel is preferred, WebSockets can replace both.

The architecture could employ a message broker (e.g., Redis, RabbitMQ) to manage message distribution efficiently, especially with a large number of users. The server would use a persistent connection pool to manage client connections and efficiently push updates. A robust error handling mechanism is vital to ensure message delivery and reconnection capabilities.

Architectural Differences Between Stock Market Data and Collaborative Document Editing

Stock market data updates demand extremely high throughput and low latency. The architecture needs to prioritize speed and efficiency above all else. A highly optimized and scalable solution might involve a dedicated high-performance message queue, specialized hardware, and load balancing across multiple servers. In contrast, collaborative document editing applications prioritize consistency and conflict resolution. While speed is important, data integrity is paramount.

An architecture might incorporate techniques like operational transformation (OT) to ensure all clients see a consistent view of the document, even with concurrent edits. This often requires more complex server-side logic compared to the simpler data streaming in a stock ticker application.
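To give a feel for what operational transformation involves, the sketch below handles only the simplest case: two concurrent text insertions. The `Insert` type, the `site` tie-breaker, and the function names are assumptions for this example. Real OT systems also handle deletions and longer operation histories.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Insert:
    pos: int
    text: str
    site: int  # hypothetical client id, used only to break position ties


def apply(doc: str, op: Insert) -> str:
    return doc[:op.pos] + op.text + doc[op.pos:]


def transform(op: Insert, against: Insert) -> Insert:
    """Rewrite `op` so it can be applied after `against` has been applied."""
    if op.pos < against.pos or (op.pos == against.pos and op.site < against.site):
        return op  # `against` landed at or after op's position: no shift
    # `against` inserted text before op's position: shift right by its length
    return Insert(op.pos + len(against.text), op.text, op.site)


doc = "abc"
a = Insert(1, "X", site=0)
b = Insert(1, "Y", site=1)

# Two replicas apply the same edits in opposite orders
replica_1 = apply(apply(doc, a), transform(b, a))
replica_2 = apply(apply(doc, b), transform(a, b))
```

Both replicas end up with the same text even though they saw the edits in different orders, which is the consistency property the server has to maintain before streaming changes out over SSE.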

SSE Architecture for High-Concurrency Systems

Designing an SSE architecture for a large number of concurrent users requires careful consideration of several factors. A key component is a robust load balancer to distribute the connection load across multiple servers. This prevents any single server from becoming overloaded. Efficient connection management is essential; techniques like connection pooling and keep-alive mechanisms minimize overhead. A scalable message queue (e.g., Kafka) can handle a high volume of messages efficiently, decoupling the server from the clients and improving resilience.

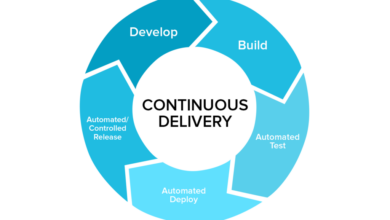

Furthermore, employing caching mechanisms (e.g., Redis) can significantly reduce the load on the database by storing frequently accessed data. The system should also incorporate features for handling disconnections and reconnections gracefully, ensuring minimal data loss and user disruption.

The diagram above illustrates a high-concurrency SSE architecture. A load balancer distributes incoming client connections across multiple servers, preventing any single server from becoming overloaded. Each server manages a subset of connections and uses a message queue (e.g., Kafka) for efficient message delivery, which provides scalability and resilience.

Ultimately, getting your SSE architecture right from the start pays off big time in the long run.

Architectural Patterns for Efficient SSE Implementation

Efficiently implementing Server-Sent Events (SSE) requires careful consideration of architectural patterns. Choosing the right pattern significantly impacts performance, scalability, and maintainability. This section explores key architectural patterns and their implications for SSE applications.

Publish-Subscribe Pattern in SSE

The publish-subscribe pattern is ideally suited for SSE, enabling efficient one-to-many communication. In this model, a server acts as a publisher, broadcasting updates to subscribed clients. Clients, acting as subscribers, passively receive these updates without needing to actively poll the server. This eliminates the overhead associated with frequent requests, resulting in a more efficient and responsive system. Several messaging systems facilitate this pattern’s implementation.

- Redis Pub/Sub: Redis provides a robust pub/sub mechanism, where the server publishes events to a channel, and clients subscribe to that channel to receive updates. Its in-memory design keeps event delivery fast. Delivery is fire-and-forget, however: a subscriber that is disconnected when a message is published never receives it. Another potential drawback is the need for a separate Redis instance, adding complexity to the architecture.

- RabbitMQ: RabbitMQ, a powerful message broker, offers flexible routing and durable messaging, making it suitable for handling large volumes of SSE events. Its features, however, might be overkill for simpler SSE applications.

- Kafka: For high-throughput, distributed SSE systems, Kafka is a compelling choice. Its distributed architecture ensures scalability and fault tolerance. However, the added complexity of managing a Kafka cluster might outweigh the benefits for smaller applications.

Handling Disconnections and Reconnections in SSE

Efficiently managing disconnections and reconnections is crucial for a seamless user experience in SSE applications. Several approaches exist, each with its own advantages and disadvantages.

- Client-Side Retries: The simplest approach involves the client automatically reconnecting after a disconnection. The browser's EventSource does this by default, waiting a delay the server can adjust with the retry field and sending the ID of the last event it received in the Last-Event-ID header. Custom clients usually add exponential backoff to avoid overwhelming the server. While straightforward, this method can be inefficient if the server is down for an extended period, leading to unnecessary retry attempts.

- Server-Side Keep-Alive: The server periodically sends keep-alive messages to clients; in SSE these are typically comment lines beginning with a colon. A failed write reveals a dead connection sooner, and the regular traffic keeps intermediaries from closing an idle stream. However, this adds overhead to the server and requires careful management to avoid unnecessary network traffic.

- Heartbeat Mechanism: A heartbeat mechanism involves periodic exchange of short messages between client and server to verify connectivity. Because an SSE stream is one-way, the client's half of the exchange has to travel over a separate HTTP request, or the client can simply watch for the server's heartbeats and reconnect when they stop. This combines the advantages of both client-side retries and server-side keep-alives, offering a robust solution for managing disconnections and reconnections. It is more complex to implement than the simpler approaches.
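The pieces above can be sketched together on the server side. In the example below, the `ReplayBuffer` class, its capacity, and the `backoff_delays` helper are assumptions for illustration, and event data is assumed to be a single line. The `id:` field, the `Last-Event-ID` request header, and colon-prefixed comment lines are part of the SSE format itself.

```python
from collections import deque
from typing import Optional


class ReplayBuffer:
    """Keeps the most recent events so a reconnecting client can catch up."""

    def __init__(self, capacity: int = 1000) -> None:
        self._events = deque(maxlen=capacity)  # oldest events fall off
        self._next_id = 1

    def append(self, data: str) -> str:
        """Store an event and return it framed for the wire, with an id."""
        event_id = self._next_id
        self._next_id += 1
        self._events.append((event_id, data))
        return f"id: {event_id}\ndata: {data}\n\n"

    def since(self, last_event_id: Optional[str]) -> list:
        """Events newer than the id sent in the Last-Event-ID header."""
        try:
            last = int(last_event_id) if last_event_id else 0
        except ValueError:
            last = 0  # unparseable id: replay everything still buffered
        return [data for event_id, data in self._events if event_id > last]


# A comment line: clients ignore it, but it keeps the connection active
KEEP_ALIVE = ": keep-alive\n\n"


def backoff_delays(base: float = 1.0, cap: float = 30.0, attempts: int = 6) -> list:
    """Exponential backoff schedule (in seconds) for a custom client."""
    return [min(cap, base * 2 ** n) for n in range(attempts)]
```

On reconnect, the handler would read the `Last-Event-ID` header, send everything returned by `since`, and then continue with live events. If the requested ID has already fallen out of the buffer, the client gets only what is still held, so the capacity should reflect how long an outage the application wants to cover.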

Load Balancing and Caching in SSE Architectures

Load balancing and caching are critical for optimizing the performance and scalability of SSE architectures. Load balancing distributes incoming requests across multiple servers, preventing any single server from becoming overloaded. Caching reduces the load on the backend by storing frequently accessed data closer to the clients.

For instance, a CDN (Content Delivery Network) or edge proxy can terminate SSE connections closer to clients that are geographically distant from the origin server, though the event stream itself is a long-lived response that should be served with caching and proxy buffering disabled. Load balancers like Nginx or HAProxy can distribute incoming connections across multiple application servers, ensuring high availability and responsiveness even under heavy load. Implementing a caching strategy for the data behind the stream, such as using Redis or Memcached, can significantly improve response times, especially for frequently requested data.
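As a small illustration of the caching side, the sketch below is an in-process cache with a time-to-live, standing in for what Redis or Memcached would provide across servers. The `TTLCache` class and the example key are assumptions for this example.

```python
import time


class TTLCache:
    """Tiny in-process cache whose entries expire after `ttl_seconds`."""

    def __init__(self, ttl_seconds: float, clock=time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested
        self._entries = {}

    def put(self, key, value) -> None:
        self._entries[key] = (value, self._clock() + self._ttl)

    def get(self, key):
        """Return the cached value, or None if it is missing or expired."""
        entry = self._entries.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._entries[key]
            return None
        return value
```

A server could consult such a cache when a client first connects, sending the latest known value immediately instead of querying the database for every new subscriber.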

Final Thoughts

Building a successful SSE system isn’t just about coding; it’s about strategic architectural design. We’ve explored the critical role architecture plays in performance, security, and scalability. By carefully considering the trade-offs between different approaches and selecting the right tools for the job, you can create a robust and efficient SSE system that meets the specific demands of your application.

Remember, the right architecture isn’t one-size-fits-all – it’s about understanding your needs and building a solution tailored to them. Happy building!

Frequently Asked Questions

What are the main differences between using WebSockets and SSE?

SSE is unidirectional (server to client), runs over ordinary HTTP, and reconnects automatically in the browser, which makes it simpler to implement for server-push updates. WebSockets are bidirectional, offering more flexibility but with added complexity.

How do I handle client disconnections in an SSE application?

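Implement reconnect logic on the client side; the browser's EventSource does this automatically. The server cannot re-establish a connection itself, so it should detect closed connections, release their resources, and, where event IDs are used, resume the stream from the Last-Event-ID header the client sends on reconnect.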

What are some common security concerns with SSE?

Cross-site scripting (XSS) vulnerabilities, unauthorized access to data streams, and denial-of-service (DoS) attacks are common concerns. Proper authentication, authorization, and input validation are crucial.

Can I use SSE with multiple clients?

Yes, SSE can handle multiple concurrent clients. However, scaling effectively may require load balancing and efficient resource management on the server-side.