AI Voice App Detects Coronavirus

An artificial intelligence based app that uses the human voice to test for coronavirus sounds like science fiction, but the technology is closer to reality than you might think. Imagine a world where a simple voice recording could flag a possible COVID-19 infection, offering a quick, non-invasive screening method. Such an app uses AI and acoustic voice analysis to pick up subtle changes in a person’s voice, potentially identifying characteristics associated with the virus.

While still under development and facing challenges in accuracy and ethical considerations, the potential impact of this technology on global health is immense.

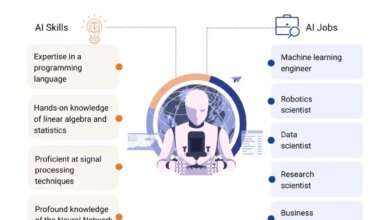

The core technology relies on advanced machine learning models trained on vast datasets of voice samples from both COVID-19 positive and negative individuals. These models learn to identify patterns in vocal characteristics like pitch, tone, and breathing patterns that might indicate infection. The process involves recording a short voice sample, processing it to remove background noise, extracting relevant acoustic features, and finally, running the data through the AI model for analysis.

The result is a preliminary screening assessment rather than a confirmed diagnosis, and further testing would likely be needed for confirmation.

Technological Feasibility

Developing a voice-based AI app for preliminary COVID-19 screening presents significant technological hurdles, primarily revolving around the accuracy and reliability of voice recognition in diverse settings and the nuanced nature of differentiating COVID-19 symptoms from other respiratory illnesses. While advancements in AI have made significant strides, achieving high accuracy and clinical-grade reliability requires careful consideration of several factors. The success of such an application hinges on the robust performance of its core components, including accurate voice recognition, effective symptom analysis, and a reliable diagnostic engine.

The complexity of human vocalizations, coupled with the variability of acoustic environments, poses considerable challenges.

Voice Recognition Technology and Accuracy

Current state-of-the-art Automatic Speech Recognition (ASR) systems leverage deep learning models, achieving impressive accuracy in controlled environments. However, real-world conditions introduce significant noise and variability. Factors like background noise, accent variations, microphone quality, and the speaker’s health (e.g., a congested nose affecting pronunciation) can significantly impact the accuracy of transcription. While advancements in noise reduction and speaker adaptation techniques are ongoing, achieving consistently high accuracy across diverse populations and acoustic environments remains a challenge.

For example, a system trained primarily on clear speech from native English speakers might perform poorly on individuals with strong accents or those experiencing respiratory distress. The accuracy of the ASR system directly impacts the downstream analysis of vocal symptoms, making robustness a critical design consideration.

Differentiating COVID-19 Vocal Symptoms from Other Illnesses

A major challenge lies in the subtle and often overlapping nature of vocal symptoms associated with COVID-19 and other respiratory illnesses like the common cold, influenza, or bronchitis. Cough characteristics (frequency, intensity, duration), voice quality (hoarseness, breathiness), and the presence of other symptoms like shortness of breath are often similar across various respiratory infections. Developing an algorithm capable of reliably distinguishing COVID-19 based solely on vocal characteristics requires a large, high-quality dataset of labeled voice samples from individuals with confirmed diagnoses of various respiratory conditions.

Furthermore, the algorithm must account for individual variability in symptom presentation and the potential for asymptomatic or minimally symptomatic infections. The reliance on solely vocal symptoms might lead to false positives or negatives, necessitating a clear understanding of the application’s limitations and the need for confirmatory testing.

System Architecture

The system would function in a series of stages, from voice data acquisition to a preliminary diagnostic assessment.

| Component | Function | Technology Used | Potential Challenges |
|---|---|---|---|
| Voice Data Acquisition | Capture user’s voice sample using a smartphone or other device microphone. | Smartphone microphone, potentially with noise reduction pre-processing. | Background noise, microphone quality variability, inconsistent recording conditions. |
| Speech Recognition (ASR) | Convert the voice sample into a text transcription. | Deep learning-based ASR model (e.g., recurrent neural networks, transformers). | Accuracy degradation in noisy environments, accent variations, speech impairments. |
| Feature Extraction | Extract relevant acoustic features from the speech signal (e.g., pitch, jitter, shimmer, spectral characteristics of cough sounds). | Signal processing algorithms, machine learning techniques. | Identifying the most discriminative features for COVID-19 differentiation. |
| Symptom Analysis | Analyze extracted features to identify potential COVID-19 related vocal symptoms. | Machine learning classification models (e.g., support vector machines, random forests, deep neural networks). | Overlapping symptoms with other respiratory illnesses, limited data availability for training. |
| Diagnostic Engine | Provide a preliminary assessment of the likelihood of COVID-19 based on the analysis. | Probabilistic model, risk scoring system. | Balancing sensitivity and specificity to minimize false positives and negatives. |
| Output Presentation | Present the results to the user in a clear and understandable manner. | User interface design, clear instructions. | Communicating uncertainty and the need for further testing. |

Data Acquisition and Preprocessing

Building a reliable AI-powered coronavirus detection system based on voice analysis requires meticulous data acquisition and preprocessing. The quality of the recordings used to train the AI model directly impacts its accuracy and performance, so careful consideration of microphone specifications and robust noise reduction techniques are crucial steps in this process.

Therefore, a rigorous approach to data acquisition and preprocessing is essential. This involves selecting appropriate recording equipment, implementing effective noise reduction strategies, and establishing a clear data cleaning protocol.

Microphone Specifications

Optimal voice recording for this application requires high-fidelity microphones capable of capturing a wide frequency range, crucial for distinguishing subtle vocal nuances indicative of respiratory illness. A minimum sampling rate of 44.1 kHz is recommended, with a bit depth of at least 16 bits to ensure sufficient dynamic range. The microphone should possess a flat frequency response across the human voice range (typically 100 Hz to 8 kHz), minimizing distortion and preserving the integrity of the acoustic signal.

A cardioid polar pattern is desirable to minimize background noise pickup. Microphones with built-in pre-amplifiers can be beneficial in low-noise environments, but care must be taken to avoid clipping or saturation of the signal. Examples of suitable microphones include professional-grade USB condenser microphones or high-quality electret condenser microphones commonly found in smartphones and laptops, provided they meet the specified sampling rate and bit depth.
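
As a minimal sketch of how the recommended sampling rate and bit depth could be checked automatically, the following uses Python's standard `wave` module to inspect an uncompressed WAV file. The function name `meets_recording_spec` and the constant names are hypothetical, and the sketch assumes recordings are stored as WAV; other formats would need a different reader.

```python
import wave

# Thresholds taken from the recommendation above (44.1 kHz, 16 bits).
MIN_SAMPLE_RATE_HZ = 44_100
MIN_SAMPLE_WIDTH_BYTES = 2  # 2 bytes per sample = 16 bits


def meets_recording_spec(path):
    """Return True if the WAV file at `path` meets the recommended spec."""
    with wave.open(path, "rb") as wav_file:
        rate_ok = wav_file.getframerate() >= MIN_SAMPLE_RATE_HZ
        depth_ok = wav_file.getsampwidth() >= MIN_SAMPLE_WIDTH_BYTES
        return rate_ok and depth_ok
```

A check like this only verifies the file header; it says nothing about the frequency response or noise floor of the microphone that produced the recording.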

Noise Reduction and Signal Enhancement

Recorded audio inevitably contains background noise that can interfere with the analysis. Various techniques can be employed to reduce this noise and enhance the voice signal. Spectral subtraction is a common method that estimates the noise spectrum and subtracts it from the speech signal. Wiener filtering is another technique that uses statistical methods to separate the noise from the signal.

More sophisticated methods include wavelet denoising and deep learning-based approaches, which can effectively remove complex noise patterns. Furthermore, techniques like adaptive noise cancellation can be used to actively reduce persistent background noise sources. The choice of method depends on the nature and level of background noise present in the recordings. For instance, if the primary noise source is consistent humming, adaptive noise cancellation might be most effective.

Conversely, for random background noise, spectral subtraction or Wiener filtering may be more suitable.
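
To make spectral subtraction concrete, here is a deliberately simplified sketch that processes a single frame. It assumes a noise magnitude spectrum has already been estimated (for example, from a stretch of the recording with no speech) and uses a naive DFT so that the example needs only the standard library; a practical implementation would use an FFT library, overlapping windowed frames, and a more careful noise estimate. All function names are hypothetical.

```python
import cmath
import math


def dft(frame):
    """Naive discrete Fourier transform (O(n^2)); fine for short demo frames."""
    n = len(frame)
    return [
        sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def idft(spectrum):
    """Inverse of dft(); returns the real part of each reconstructed sample."""
    n = len(spectrum)
    return [
        (sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n).real
        for t in range(n)
    ]


def spectral_subtract(frame, noise_magnitude):
    """Subtract an estimated noise magnitude from each frequency bin.

    The phase of the noisy signal is kept unchanged, and magnitudes are
    floored at zero so that over-subtraction cannot produce negative values.
    """
    cleaned = []
    for value, noise in zip(dft(frame), noise_magnitude):
        magnitude = max(abs(value) - noise, 0.0)
        cleaned.append(cmath.rect(magnitude, cmath.phase(value)))
    return idft(cleaned)
```

Flooring at zero is the simplest choice; it is known to leave isolated spectral peaks ("musical noise"), which is one reason the more sophisticated methods mentioned above are often preferred.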

Data Cleaning and Preparation

A standardized procedure for data cleaning and preparation is essential to ensure data consistency and quality. This involves several steps. First, each recording should be reviewed for clipping, which occurs when the signal amplitude exceeds the maximum recording level. Clipped segments should be either removed or replaced with interpolated data. Second, any silent periods at the beginning and end of the recordings should be trimmed.

Third, recordings with excessive background noise that cannot be effectively removed should be discarded. Fourth, the data should be segmented into smaller, manageable chunks, for example, 1-3 second segments, to reduce computational complexity and improve the model’s performance. Finally, the data should be standardized to ensure consistent volume levels across all recordings. This involves adjusting the amplitude of each recording to a target level, using techniques such as normalization.
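
The cleaning steps above could be sketched roughly as follows. This is an illustration under stated assumptions, not a production pipeline: samples are assumed to be floats in the range -1.0 to 1.0, and the function names, silence threshold, and target peak level are hypothetical choices.

```python
def has_clipping(samples, full_scale=1.0, tolerance=1e-3):
    """Flag a recording whose samples reach (or nearly reach) full scale."""
    return any(abs(s) >= full_scale - tolerance for s in samples)


def trim_silence(samples, threshold=0.01):
    """Drop leading and trailing samples quieter than `threshold`."""
    start = 0
    while start < len(samples) and abs(samples[start]) < threshold:
        start += 1
    end = len(samples)
    while end > start and abs(samples[end - 1]) < threshold:
        end -= 1
    return samples[start:end]


def peak_normalize(samples, target_peak=0.9):
    """Scale the recording so its loudest sample sits at `target_peak`."""
    peak = max((abs(s) for s in samples), default=0.0)
    if peak == 0.0:
        return list(samples)  # all-silent input: nothing to scale
    gain = target_peak / peak
    return [s * gain for s in samples]


def segment(samples, sample_rate, seconds=2.0):
    """Split into fixed-length chunks, discarding a trailing partial chunk."""
    size = int(sample_rate * seconds)
    return [samples[i:i + size] for i in range(0, len(samples) - size + 1, size)]
```

Peak normalization is only one way to standardize volume; loudness-based normalization is a common alternative when recordings differ widely in dynamics.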

For handling missing or corrupted data, imputation techniques can be employed. This might involve replacing missing values with the mean, median, or mode of the surrounding data points, or using more sophisticated methods such as k-nearest neighbors imputation. Alternatively, corrupted data segments can be removed entirely if they represent a small portion of the total dataset.

Feature Extraction and Model Training

Building a reliable COVID-19 detection system using voice data requires careful consideration of feature extraction and model selection. The goal is to identify acoustic characteristics that reliably distinguish between COVID-19 positive and negative individuals, and then train a machine learning model to accurately classify new, unseen voice samples. This process involves extracting meaningful features from the raw audio data and then using these features to train a model capable of making accurate predictions.

Acoustic Features for COVID-19 Detection

Several acoustic features can potentially differentiate between the voices of individuals with and without COVID-19. These features reflect changes in the vocal tract caused by inflammation or other physiological effects of the virus. The selection of appropriate features is crucial for the performance of the machine learning model.

- Jitter and Shimmer: Jitter measures the variation in the time intervals between successive periods of a vocal fold vibration, while shimmer quantifies the variation in the amplitude of these vibrations. Increased jitter and shimmer are often associated with vocal fold irregularities, which can be a symptom of respiratory illnesses like COVID-19. For example, a higher jitter value might indicate a more irregular vocal fold vibration, potentially linked to inflammation caused by the virus.

- Harmonics-to-Noise Ratio (HNR): This feature reflects the ratio of harmonic energy to noise energy in the voice signal. A lower HNR suggests a higher proportion of noise, potentially indicating vocal tract irregularities or breathing difficulties, common in COVID-19 patients. A person with COVID-19 might exhibit a lower HNR compared to a healthy individual due to increased breathiness or hoarseness.

- Mel-Frequency Cepstral Coefficients (MFCCs): MFCCs are a widely used set of features in speech recognition that capture the spectral envelope of the voice signal. Changes in the MFCCs can reflect alterations in the vocal tract resonance, which might be indicative of COVID-19. For instance, a change in the formant frequencies (peaks in the spectral envelope) could be a subtle yet significant indicator of the disease.
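
As an illustration of the first item, local jitter and local shimmer can both be computed as the mean absolute difference between consecutive cycles divided by the mean value. The sketch below assumes the glottal cycle durations and per-cycle peak amplitudes have already been measured by a pitch tracker, which is the hard part and is not shown; the function names are hypothetical.

```python
def local_perturbation(values):
    """Mean absolute cycle-to-cycle difference, relative to the mean value."""
    if len(values) < 2:
        raise ValueError("need at least two cycles")
    diffs = [abs(b - a) for a, b in zip(values, values[1:])]
    return (sum(diffs) / len(diffs)) / (sum(values) / len(values))


def local_jitter(periods_s):
    """Relative variation in the duration of consecutive vocal fold cycles."""
    return local_perturbation(periods_s)


def local_shimmer(peak_amplitudes):
    """Relative variation in the peak amplitude of consecutive cycles."""
    return local_perturbation(peak_amplitudes)
```

A perfectly regular voice would give zero for both measures; larger values indicate more cycle-to-cycle irregularity.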

Machine Learning Models for COVID-19 Classification

Several machine learning models can be applied to classify COVID-19 based on voice data. The choice depends on factors like the size of the dataset, computational resources, and desired performance characteristics.

- Support Vector Machines (SVM): SVMs are effective in high-dimensional spaces and can handle non-linear relationships between features. Strengths include relatively simple implementation and good generalization performance. Weaknesses include difficulty in handling large datasets and sensitivity to the choice of kernel function.

- Random Forest: Random Forests are ensemble methods that combine multiple decision trees to improve accuracy and robustness. Strengths include high accuracy, ability to handle large datasets, and inherent feature importance estimation. Weaknesses include potential overfitting if not properly tuned and high computational cost for very large datasets.

- Deep Neural Networks (DNNs): DNNs, particularly Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs), are powerful models capable of learning complex patterns from raw audio data. Strengths include high accuracy potential and ability to automatically learn relevant features. Weaknesses include high computational cost, requirement for large datasets, and potential for overfitting if not carefully regularized. For example, a CNN could be used to learn local time-frequency patterns from the audio spectrogram, while an RNN could capture sequential dependencies in the speech signal.

Experimental Design and Performance Evaluation

To evaluate the performance of the chosen model (e.g., a Random Forest), a rigorous experiment is necessary. The dataset should be split into training, validation, and testing sets (e.g., 70%, 15%, 15% respectively). The model will be trained on the training set, hyperparameters tuned on the validation set, and finally evaluated on the unseen testing set. The performance will be assessed using standard classification metrics:

- Accuracy: The percentage of correctly classified samples.

- Precision: The proportion of correctly predicted positive cases among all predicted positive cases.

- Recall (Sensitivity): The proportion of correctly predicted positive cases among all actual positive cases.

- F1-score: The harmonic mean of precision and recall, providing a balanced measure of performance.
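
The four metrics above follow directly from the counts of true positives (tp), false positives (fp), false negatives (fn), and true negatives (tn). A minimal sketch, with a hypothetical function name and with zero returned when a ratio is undefined:

```python
def classification_metrics(tp, fp, fn, tn):
    """Compute accuracy, precision, recall, and F1 from confusion counts."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }
```

For a screening tool, recall deserves particular attention, because a false negative means an infected person is told their risk is low.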

A confusion matrix will be generated to visualize the model’s performance across different classes (COVID-19 positive and negative). Furthermore, Receiver Operating Characteristic (ROC) curves and Area Under the Curve (AUC) will be computed to assess the model’s ability to discriminate between the classes. The experiment will be repeated using k-fold cross-validation to ensure robustness and reduce the impact of dataset randomness.

For example, a 5-fold cross-validation would involve splitting the data into 5 folds, training the model on 4 folds and testing on the remaining fold, and repeating this process 5 times. The final performance metrics would be the average across all 5 folds.
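
The fold bookkeeping can be sketched in a few lines. The generator below only produces train and test index lists; training and scoring a model on each fold is left out, and the function name and fixed seed are hypothetical choices.

```python
import random


def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_indices, test_indices) pairs for k-fold cross-validation."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # seeded so the split is reproducible
    folds = [indices[i::k] for i in range(k)]  # k roughly equal folds
    for i in range(k):
        test = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test
```

With voice data, several recordings often come from the same person. In that case the split should be made per speaker rather than per recording, otherwise the same voice can appear in both training and test folds and inflate the scores.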

Ethical and Societal Implications

Developing an AI-powered voice-based COVID-19 diagnostic tool presents significant ethical and societal challenges that must be carefully considered. The accuracy and trustworthiness of the system are directly tied to the data used to train it, and the collection and use of sensitive voice data raise crucial privacy concerns. Addressing these issues is vital to ensure responsible innovation and public trust. The potential for bias in the data used to train the AI model is a major concern.

If the training data does not accurately reflect the diversity of the population, the resulting model may be less accurate for certain demographic groups. For instance, if the training data predominantly includes individuals with specific accents or speaking styles, the system might misdiagnose individuals with different vocal characteristics. This could lead to disparities in healthcare access and outcomes, potentially exacerbating existing health inequalities.

Similarly, if the data is skewed towards a particular age group or health status, the diagnostic accuracy could vary significantly across different populations. This highlights the critical need for diverse and representative datasets in the development of such technologies.

Data Bias and Diagnostic Accuracy

Bias in the training data can manifest in various ways, leading to inaccurate diagnoses. For example, a model trained primarily on data from individuals with clear pronunciation might misinterpret the coughs or breathing patterns of individuals with speech impediments or those speaking in dialects not represented in the training set. This could result in false negatives (missing cases) or false positives (incorrect diagnoses), both with potentially serious consequences.

Furthermore, the presence of background noise in the training data could also impact the model’s performance, leading to inaccurate classifications. Addressing this requires careful data curation and the use of techniques to mitigate bias, such as data augmentation and algorithmic fairness methods.

Privacy Concerns Related to Voice Data

The collection and use of voice data for medical diagnosis raise significant privacy concerns. Voice recordings contain sensitive information about an individual’s health status, potentially revealing details about their respiratory condition, speech patterns, and even their emotional state. This data could be vulnerable to breaches, misuse, or unauthorized access, leading to serious consequences for individuals. Furthermore, the ongoing storage and use of this data require robust security measures and clear data governance policies to protect individual privacy.

The potential for the data to be used for purposes beyond diagnosis, such as profiling or targeted advertising, must also be carefully considered and explicitly prohibited.

Strategies for Mitigating Ethical Concerns

Addressing the ethical concerns associated with this technology requires a multi-faceted approach. The responsible development and deployment of this technology require careful consideration of privacy, fairness, and transparency.

- Employ rigorous data curation and preprocessing techniques to minimize bias. This includes ensuring the training data is diverse and representative of the target population, and using techniques to identify and mitigate bias in the data.

- Implement robust data anonymization and security measures to protect individual privacy. This includes encrypting voice data, using secure storage solutions, and adhering to strict data governance policies.

- Develop transparent and explainable AI models. This allows for better understanding of the model’s decision-making process and facilitates the identification of potential biases.

- Obtain informed consent from users before collecting and using their voice data. This should clearly explain the purpose of data collection, how the data will be used, and the associated privacy risks.

- Establish clear data governance policies that comply with relevant regulations and ethical guidelines. This includes defining the permissible uses of voice data, establishing procedures for data access and control, and specifying mechanisms for data deletion.

- Engage in ongoing monitoring and evaluation of the system’s performance and fairness. This allows for the identification and mitigation of biases and ensures the system remains accurate and equitable over time.

User Interface and Experience

Designing a user-friendly and effective interface is crucial for a successful COVID-19 voice-testing app. The app needs to guide users through the testing process seamlessly, provide clear instructions, and deliver results in an easily understandable format. This section details the proposed user interface and user experience (UI/UX) design, aiming for simplicity and accessibility for a broad range of users. The app’s design prioritizes clarity and ease of use, recognizing that users may be experiencing stress or anxiety related to their health.

The visual design will be clean and uncluttered, using a calming color palette and intuitive navigation. We aim to minimize the number of steps and screens required to complete a test, reducing potential user frustration.

App Home Screen

The home screen will feature a prominent “Start Test” button, centrally located and easily visible. Below this, a brief, reassuring message will appear, such as “Take a quick voice test to help assess your risk.” A smaller section might display relevant information, such as links to public health resources or FAQs. The overall aesthetic will be calming and reassuring, avoiding overly clinical or alarming imagery.

Voice Recording Screen

Upon pressing “Start Test,” the user will be directed to the voice recording screen. Clear instructions will appear, guiding the user on how to record their voice sample. These instructions might include: “Speak clearly and steadily into your device’s microphone for [duration] seconds. Avoid background noise.” A visual progress bar will indicate the recording duration. A “re-record” button will allow users to retry if needed.

A visual representation of the audio levels, such as a dynamic meter, will provide feedback on recording quality.

Results Screen

After the recording is complete, the app will process the audio and display the results on the results screen. The results will be presented in a clear and concise manner, avoiding medical jargon. A simple traffic light system (green for low risk, yellow for moderate risk, red for high risk) will be used to indicate the initial assessment. Below this, a more detailed explanation of the results will be provided, including potential next steps, such as contacting a healthcare professional or self-isolating.

This section will also clearly state the limitations of the test, emphasizing that it is not a definitive diagnosis and should not replace medical advice. For example, the app might display a message like: “This test provides an initial assessment only. It is not a substitute for professional medical advice. Consult your doctor for diagnosis and treatment.”
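
One simple way to drive the traffic light display is to map the model's estimated probability onto three bands. In the sketch below the function name and both thresholds (0.3 and 0.7) are hypothetical placeholders; real cut-offs would have to come from clinical validation, not from this example.

```python
def risk_band(probability, moderate_threshold=0.3, high_threshold=0.7):
    """Map an estimated probability (0.0 to 1.0) to a traffic light colour."""
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    if probability >= high_threshold:
        return "red"     # high risk
    if probability >= moderate_threshold:
        return "yellow"  # moderate risk
    return "green"       # low risk
```

Lowering the thresholds makes the app more cautious (fewer missed cases, more false alarms), which is the sensitivity versus specificity trade-off in user-facing form.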

Feedback Mechanism

The app will include a feedback mechanism, allowing users to report any issues or provide suggestions for improvement. This could be a simple form accessible through the settings menu, where users can provide details about their experience. This feedback will be crucial for improving the app’s accuracy, usability, and overall user experience. The feedback form will request information on user experience, any technical issues encountered, and suggestions for improvement.

Mockup Descriptions

Imagine the home screen as a simple, clean design with a large, round “Start Test” button in the center, vibrant green. Below it, in a smaller, calmer font, the message “Take a quick voice test to help assess your risk” is displayed. The background is a soft, light blue.

The voice recording screen displays a large, circular microphone icon with an animated progress bar circling around it, indicating recording time. Below this, clear, concise instructions are displayed, such as “Speak clearly and steadily for 10 seconds.” A simple audio level meter is displayed to the side, providing visual feedback on recording quality.

The results screen uses a simple traffic light system. A large green circle indicates low risk, a yellow circle moderate risk, and a red circle high risk. Below the circle, a detailed explanation of the result is given, along with recommended next steps and a clear statement regarding the test’s limitations. The overall design maintains a calm and reassuring tone, avoiding alarmist imagery.

Validation and Deployment

Getting our AI-powered voice-based COVID-19 testing app ready for the public requires rigorous validation and careful navigation of regulatory hurdles. This process is crucial to ensure the app’s accuracy, reliability, and safety for users. We need to demonstrate its effectiveness and gain the necessary approvals before widespread deployment. The path to deployment involves several key stages, beginning with validating the app’s performance and concluding with a well-planned rollout strategy.

This includes addressing regulatory requirements and considering the practical aspects of making the app widely available and accessible to those who need it most.

Clinical Trials for Validation

Validating the app’s accuracy requires a robust clinical trial process. This will involve recruiting a diverse group of participants, representing various demographics and health conditions, to undergo both the app’s voice test and a gold-standard COVID-19 test (like a PCR test). Data from both tests will be compared to determine the app’s sensitivity (ability to correctly identify positive cases) and specificity (ability to correctly identify negative cases).

The sample size will be determined using power analysis to ensure statistically significant results. We will also meticulously document any adverse events or unexpected results. For example, we might compare the app results of 1000 participants against the results of their PCR tests. If the app agrees with the PCR test in 950 out of 1000 cases, its overall accuracy is 95%. Overall accuracy alone can be misleading when positive cases are rare, so sensitivity and specificity must be reported separately.

This data will be analyzed statistically to determine the confidence intervals for sensitivity and specificity. This rigorous testing will help establish the app’s clinical validity and reliability.
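
As a rough sketch of that analysis, the code below computes sensitivity, specificity, and a 95% Wilson score interval for a proportion. The Wilson interval is one common choice, not necessarily the method a trial statistician would select. In the usage note that follows, the split of the 1000 participants into 200 PCR-positive and 800 PCR-negative, and the individual counts, are hypothetical numbers chosen only so that 950 results agree with PCR.

```python
import math


def sensitivity_specificity(tp, fn, tn, fp):
    """Sensitivity = tp / (tp + fn); specificity = tn / (tn + fp)."""
    return tp / (tp + fn), tn / (tn + fp)


def wilson_interval(successes, trials, z=1.96):
    """Wilson score confidence interval for a proportion (z=1.96 for ~95%)."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    p = successes / trials
    denominator = 1 + z * z / trials
    centre = (p + z * z / (2 * trials)) / denominator
    half_width = (
        z * math.sqrt(p * (1 - p) / trials + z * z / (4 * trials * trials))
        / denominator
    )
    return centre - half_width, centre + half_width
```

With the hypothetical counts `tp=180, fn=20, tn=770, fp=30`, `sensitivity_specificity` gives a sensitivity of 0.9 and a specificity of 0.9625, and `wilson_interval(180, 200)` gives the interval around the sensitivity estimate. Because only 200 of the participants are positive in this made-up split, the sensitivity interval is noticeably wider than the specificity interval, which is why the number of confirmed positive cases matters as much as the total sample size.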

Regulatory Requirements for Medical App Deployment

Deploying a medical app like ours requires navigating a complex regulatory landscape. This will vary depending on the specific region or country. In the US, for instance, we will likely need to comply with regulations set by the Food and Drug Administration (FDA), potentially seeking clearance under the 510(k) pathway or, if no comparable predicate device exists, authorization through another route such as De Novo classification. This process involves submitting comprehensive documentation detailing the app’s design, functionality, validation results, and risk mitigation strategies.

Similar regulatory bodies exist in other countries, each with its own specific requirements. Meeting these requirements is paramount to ensure the app’s safety and efficacy are thoroughly reviewed and approved before public release. We will need to proactively engage with regulatory agencies throughout the development and validation process to ensure compliance and a smooth approval process.

Deployment Plan: Scalability and Accessibility

Our deployment plan will prioritize scalability and accessibility. Scalability means the app must be able to handle a large number of users simultaneously without performance degradation. This involves using robust cloud infrastructure and efficient database management. Accessibility means ensuring the app is usable by people with varying levels of technological literacy and those with disabilities. This will require careful design considerations, such as providing clear instructions, multilingual support, and compatibility with various devices.

We will also need to consider data security and privacy, complying with relevant regulations like HIPAA (in the US) or GDPR (in Europe). A phased rollout, starting with a limited beta test in a specific region, will allow us to identify and address any unforeseen issues before a wider release. This phased approach will also enable us to monitor app performance and user feedback, allowing for iterative improvements and optimization.

Final Thoughts

The development of an AI-powered voice app for coronavirus detection represents a significant leap forward in medical technology. While challenges remain in achieving high accuracy and addressing ethical concerns, the potential benefits are undeniable. Imagine rapid, accessible screening, especially in resource-limited settings. This technology could revolutionize early detection and help curb the spread of future pandemics. However, responsible development and deployment, prioritizing accuracy, privacy, and ethical considerations, are paramount to ensuring its success and widespread adoption.

The future of disease detection may well be in the sound of our voices.

FAQ Corner

How accurate is this technology?

Accuracy is still being improved and depends heavily on the quality of the training data and the design of the AI model. Current accuracy rates are not yet at a level suitable for standalone diagnosis.

What if I have a cold or the flu? Will it confuse it with COVID-19?

Differentiating between COVID-19 and other respiratory illnesses is a significant challenge. The app’s accuracy in distinguishing these conditions needs further refinement.

Is my voice data safe and private?

Data privacy and security are critical concerns. Robust anonymization techniques and secure data storage are essential to protect user information.

Will this app replace traditional testing methods?

No, this technology is intended as a supplementary screening tool, not a replacement for established diagnostic methods like PCR tests.