Artificial Intelligence Now Lets Us Speak to the Dead

Artificial intelligence now lets us speak to the dead: a statement that feels both fantastical and deeply unsettling. This technological leap opens a Pandora’s box of ethical dilemmas, societal shifts, and intensely personal experiences. Can we truly recreate the essence of a loved one through algorithms and data? What are the emotional and psychological consequences of interacting with a digital ghost?

This post delves into the fascinating, and sometimes frightening, implications of this groundbreaking technology.

We’ll explore the technological hurdles and triumphs involved in building AI models capable of simulating realistic conversations with the deceased. We’ll examine the ethical considerations – from data privacy to the potential for emotional manipulation – and consider the varying cultural perspectives on death and remembrance. Ultimately, we’ll attempt to answer the crucial question: is this a powerful tool for healing, or a dangerous path towards blurring the lines between life and death?

Ethical Implications of Communicating with Deceased Individuals

The advent of AI capable of simulating conversations with deceased individuals presents a profound ethical dilemma. While offering a potential source of comfort for the grieving, it also raises significant concerns about the psychological well-being of users, the exploitation of personal data, and the blurring of lines between genuine memory and artificial reconstruction. This technology demands careful consideration of its potential impacts and the development of robust ethical guidelines for its responsible use.

Psychological Impact on Grieving Individuals

Using AI to simulate conversations with the deceased could have both positive and negative consequences for the grieving process. While some might find solace in reconnecting with a loved one, even in a simulated form, others may experience intensified grief or a prolonged inability to accept the loss. Emotional dependence on the AI simulation, which could hinder healthy grieving and acceptance, is a significant concern.

For example, an individual might become overly reliant on the AI for emotional support, delaying their progress towards healing and integration of their loss into their life. The realism of the interaction could also lead to a distorted perception of the deceased, potentially creating an idealized or unrealistic image that further complicates the grieving process.

Ethical Considerations Surrounding the Use of Personal Data

The creation of AI models capable of simulating conversations with the deceased necessitates the use of extensive personal data. This raises crucial ethical questions regarding consent, privacy, and the potential for misuse. Did the deceased consent to having their data used in this manner posthumously? What safeguards are in place to protect this sensitive information from unauthorized access or exploitation?

The potential for the manipulation or alteration of this data to create a false representation of the deceased is also a significant concern. Consider the scenario where a company profits from selling access to a deceased celebrity’s AI persona without the consent of their estate, potentially violating the estate’s intellectual property or publicity rights.

Comparison of Emotional Responses

Interacting with an AI simulation of a deceased loved one is fundamentally different from naturally remembering and cherishing their memory. Natural remembrance involves a complex interplay of emotions, memories, and personal experiences. It is a fluid and evolving process shaped by time and personal reflection. An AI interaction, however, is a controlled and potentially repetitive experience. While it might offer temporary comfort, it lacks the richness and spontaneity of genuine memory.

The emotional response to the AI might be a mixture of comfort and unease, potentially creating a disjointed and confusing emotional landscape for the user. The AI might offer a comforting illusion, but it cannot replace the original relationship.

Hypothetical Ethical Guideline for AI Communication Tools for the Deceased

A comprehensive ethical guideline for the development and deployment of such AI tools should prioritize user well-being and data protection. This guideline should mandate explicit consent, given either by the person before their death or by their legal representatives, before any personal data can be used for AI model creation. Transparency regarding the limitations of the technology and the potential for emotional impact should be paramount.

Independent oversight and rigorous data security measures are crucial to mitigate the risks of misuse and data breaches. Finally, access to these technologies should be carefully managed, possibly requiring professional psychological guidance to ensure responsible and beneficial usage, particularly for vulnerable individuals.
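
One way to read the guideline above is as a checklist that a provider would run before building a simulation. The sketch below is purely illustrative: the `SimulationRequest` fields, the `ConsentSource` categories, and the `guideline_violations` function are hypothetical names invented here, and encryption is used only as a stand-in for the broader idea of data security.

```python
from dataclasses import dataclass
from enum import Enum


class ConsentSource(Enum):
    """Who authorised use of the data (hypothetical categories)."""
    NONE = "none"
    DECEASED_BEFORE_DEATH = "deceased_before_death"
    LEGAL_REPRESENTATIVE = "legal_representative"


@dataclass
class SimulationRequest:
    """Hypothetical record a provider might keep for each requested simulation."""
    consent: ConsentSource
    limitations_disclosed: bool   # user was told what the simulation can and cannot do
    data_encrypted_at_rest: bool  # stand-in for "rigorous data security measures"
    user_is_vulnerable: bool      # e.g. flagged during an intake conversation
    counselor_assigned: bool      # professional psychological guidance is available


def guideline_violations(req: SimulationRequest) -> list[str]:
    """Return the guideline points a request fails; an empty list means it passes."""
    problems = []
    if req.consent is ConsentSource.NONE:
        problems.append("no consent from the deceased or their legal representatives")
    if not req.limitations_disclosed:
        problems.append("limitations and emotional risks not disclosed")
    if not req.data_encrypted_at_rest:
        problems.append("personal data not secured")
    if req.user_is_vulnerable and not req.counselor_assigned:
        problems.append("vulnerable user without professional guidance")
    return problems


request = SimulationRequest(
    consent=ConsentSource.LEGAL_REPRESENTATIVE,
    limitations_disclosed=True,
    data_encrypted_at_rest=True,
    user_is_vulnerable=True,
    counselor_assigned=False,
)
print(guideline_violations(request))
```

Returning a list of failed points, rather than a single yes or no, would let an independent reviewer see exactly which part of the guideline a request falls short of.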

Technological Feasibility and Limitations

The prospect of conversing with deceased loved ones through AI is captivating, but the technological reality presents significant hurdles. While impressive strides have been made in natural language processing and AI, creating a truly believable and accurate simulation of a deceased individual remains a complex challenge. The technology is far from perfect, and several limitations currently hinder the development of such systems.

Current AI models, particularly large language models (LLMs), excel at generating human-like text based on vast datasets. However, translating this ability into a convincing simulation of a specific deceased person requires a very different approach and a significant amount of very specific data. The challenge lies not just in generating grammatically correct sentences, but in capturing the nuances of personality, communication style, and life experiences that make an individual unique.

Data Requirements for AI Model Training

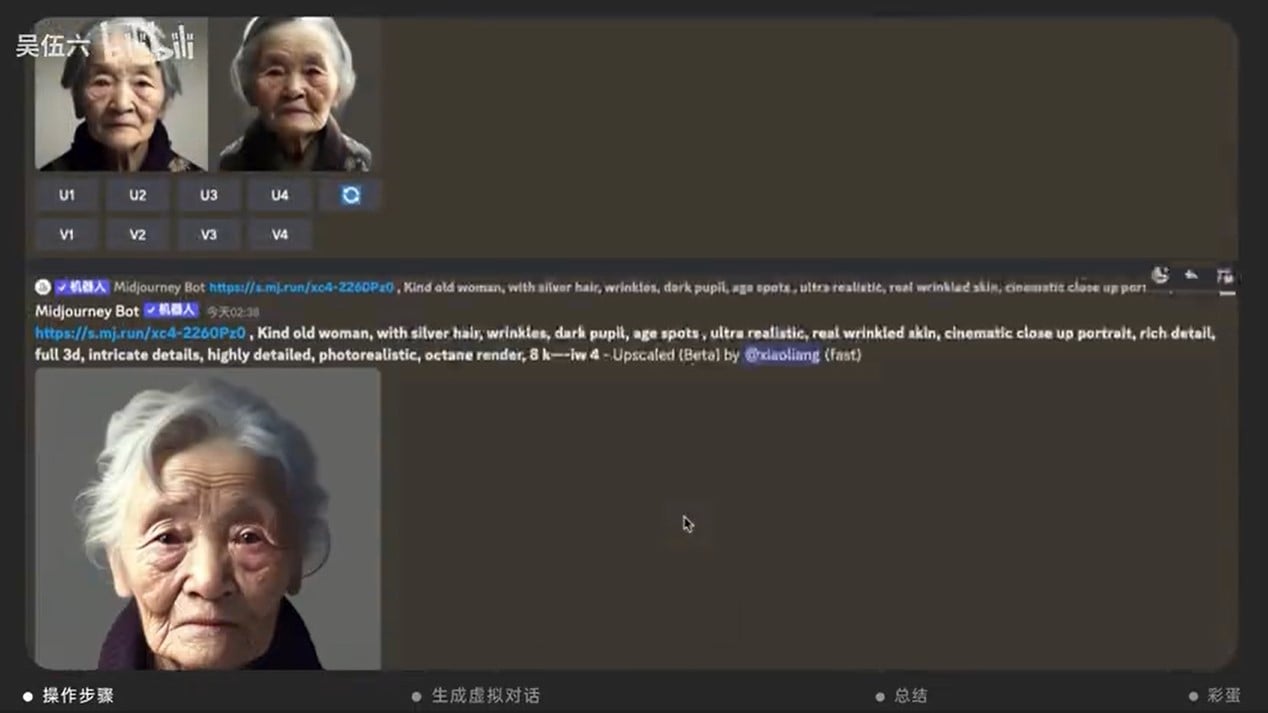

Training an AI model capable of generating believable conversations with a deceased individual demands a substantial amount of personal data. This data must be diverse and representative of the person’s communication style across different contexts. Ideally, this would include a wide range of text and audio data, such as personal letters, emails, social media posts, recorded conversations, and even video footage.

The more data available, and the more varied its sources, the better the AI can learn to mimic the deceased person’s communication patterns. However, gathering this much personal data presents considerable practical and ethical challenges, particularly concerning privacy and data access. The availability of this kind of data varies widely from person to person, making this approach far from universally applicable.
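
To make "how much data, and how varied" concrete, here is a minimal sketch that inventories whatever material a family has gathered. The `Sample` schema, the source labels, and the `corpus_summary` function are assumptions made for illustration, and simple word counts are only a rough proxy for how useful a corpus would be.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Sample:
    """One piece of material left behind by the person (hypothetical schema)."""
    source: str  # e.g. "email", "letter", "social_post", "audio_transcript"
    text: str


def corpus_summary(samples: list[Sample]) -> dict:
    """Summarise how much material exists and how evenly it is spread across sources."""
    words_per_source = Counter()
    for s in samples:
        words_per_source[s.source] += len(s.text.split())
    total = sum(words_per_source.values())
    # Share of all words contributed by the single largest source;
    # a value near 1.0 means the corpus reflects only one context.
    dominant_share = max(words_per_source.values()) / total if total else 0.0
    return {
        "total_words": total,
        "sources": dict(words_per_source),
        "dominant_share": round(dominant_share, 2),
    }


samples = [
    Sample("email", "See you on Sunday, love Dad"),
    Sample("email", "Running late, start without me"),
    Sample("social_post", "Best fishing trip in years"),
]
print(corpus_summary(samples))
```

A summary like this would show at a glance when nearly everything comes from a single source, which is the situation in which a model is likely to learn only one side of how the person communicated.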

Challenges in Accurately Reflecting Personality

Even with access to a large dataset, accurately reflecting the personality and communication style of a deceased individual is incredibly difficult. Personality is multifaceted and often subtle, encompassing not only the words used but also the tone, rhythm, and emotional context of speech. Current AI models struggle to capture these nuances consistently. For example, sarcasm, humor, and emotional subtleties are often misinterpreted or missed entirely, leading to an unconvincing and potentially jarring interaction.

The AI might generate grammatically correct sentences, but they may lack the authentic “voice” of the deceased person, resulting in an unsatisfactory experience for the user.

Comparison of AI Approaches

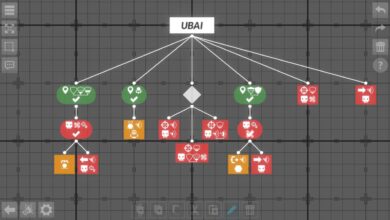

Several AI approaches are being explored to simulate conversations with the deceased. Large language models (LLMs) offer a powerful foundation, capable of generating coherent and contextually relevant responses. However, LLMs are trained on massive general-purpose datasets and, on their own, lack the personalization needed to accurately represent a specific individual. Personalized chatbots, on the other hand, are trained on smaller, more focused datasets related to a specific person.

While this allows for greater personalization, it demands far more material from that one person than most people leave behind. Hybrid approaches, combining the strengths of both LLMs and personalized chatbots, are also being explored, aiming to balance general conversational ability with personalized nuances. The success of each approach depends heavily on the quality and quantity of available data and the sophistication of the training algorithms.
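
One possible shape for a hybrid approach is to leave the general model untouched and hand it a few of the person's own messages alongside each question. The sketch below shows only that selection step, under loud assumptions: `build_prompt` is a hypothetical helper, word overlap is a crude stand-in for a real similarity measure, and no actual language model is called.

```python
import re
from collections import Counter


def tokens(text: str) -> Counter:
    """Lower-cased word counts; a crude stand-in for a real text embedding."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def overlap(a: Counter, b: Counter) -> int:
    """Number of word occurrences the two texts share."""
    return sum((a & b).values())


def build_prompt(question: str, personal_messages: list[str], k: int = 2) -> str:
    """Pick the k past messages most similar to the question and wrap them
    in an instruction intended for a general-purpose language model."""
    q = tokens(question)
    ranked = sorted(personal_messages, key=lambda m: overlap(q, tokens(m)), reverse=True)
    examples = "\n".join(f"- {m}" for m in ranked[:k])
    return (
        "Reply in the style of the messages below.\n"
        f"Past messages:\n{examples}\n"
        f"Question: {question}\n"
    )


messages = [
    "The garden is doing well, tomatoes everywhere this year.",
    "Don't forget to call your sister.",
    "Tomatoes need more sun than you think.",
]
print(build_prompt("How are the tomatoes in the garden?", messages))
```

The general model supplies conversational fluency while the selected messages supply the personal voice, which is the balance the hybrid approach aims for. How well this works still depends on how much genuine material exists to select from.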

Societal Impact and Cultural Perspectives

The advent of AI capable of simulating conversations with the deceased presents a profound societal shift, impacting our understanding of grief, memory, and the very nature of relationships. The potential for widespread adoption of this technology necessitates a careful examination of its impact across diverse cultures and societal structures. Acceptance and integration will be far from uniform, shaped by deeply ingrained beliefs and practices surrounding death and the afterlife.

The potential societal impact is multifaceted and complex. On one hand, the technology offers solace to the bereaved, allowing them to process grief in a potentially healthier way by engaging with a simulated version of their loved one. On the other hand, concerns exist about the potential for emotional manipulation, the creation of unrealistic expectations about the nature of death, and the potential erosion of traditional grieving rituals.

The commercialization of such a technology also raises ethical concerns regarding access, cost, and potential exploitation of vulnerable individuals.

Cultural Perspectives on Death and Grief

Cultural perspectives on death, grief, and the afterlife vary drastically across the globe. In some cultures, the emphasis is on celebrating the life of the deceased and honoring their memory through rituals and traditions. Others focus on the spiritual journey of the soul after death, with elaborate ceremonies and beliefs about the afterlife. Still others may prioritize practical matters, such as the inheritance and legacy of the deceased.

These diverse beliefs and practices will significantly influence the acceptance or rejection of AI communication tools for the deceased. For example, cultures with strong beliefs in an afterlife might view the AI simulation as a pale imitation, even disrespectful, while cultures that prioritize remembering the deceased through storytelling might find it a valuable tool for preserving memories. Conversely, cultures that view death as a taboo subject might find the technology unsettling or even offensive.

Societal Responses Across Cultures

A comparison of societal responses reveals a potential spectrum of reactions. Some societies might readily embrace the technology, integrating it into their grieving processes and seeing it as a tool for healing. Others might be more hesitant, concerned about the ethical implications and the potential for misuse. Still others might outright reject the technology, viewing it as an affront to their cultural or religious beliefs.

For example, a society with a strong emphasis on ancestor veneration might find the AI simulation a useful tool for maintaining connections with their ancestors, while a society with a strong taboo against contacting the dead might find it deeply disturbing. The legal and regulatory frameworks surrounding this technology will also vary widely across different jurisdictions, reflecting the diverse cultural values and legal traditions.

Potential Societal Conflicts

Consider a scenario where AI communication with the deceased becomes widespread. Disputes could arise regarding the authenticity of the simulated interactions, leading to legal challenges over the use of personal data and the potential for emotional distress. Furthermore, differing societal values and cultural norms regarding death and grief could lead to conflicts over the use of this technology in public spaces or within specific communities.

For instance, a family might use the technology to “communicate” with a deceased relative, but other family members or community members might object, leading to intense emotional and social conflict. The potential for manipulation, particularly in cases of inheritance disputes or contested wills, further complicates the matter. The equitable distribution of access to this technology, especially given its likely high cost, will also create societal divisions.

Those who can afford it might have a significant advantage over those who cannot, exacerbating existing inequalities.

Potential Applications and Misapplications

The ability to communicate with the deceased, albeit through artificial intelligence, presents a double-edged sword. While offering immense potential for healing and remembrance, it also opens doors to exploitation and misuse. Understanding both the beneficial and harmful applications is crucial for responsible development and deployment of this technology.

The ethical considerations surrounding this technology are complex and require careful navigation. The potential for both profound positive impacts and significant negative consequences necessitates a proactive approach to regulation and responsible innovation.

Beneficial Applications of AI Communication Tools

AI-driven communication tools could offer significant therapeutic benefits, particularly for those grieving the loss of a loved one. Imagine a system that allows individuals to “converse” with a digital representation of their deceased relative, providing a space to process grief, say goodbye, or simply reminisce. This could be particularly helpful in cases of sudden death where closure may be lacking, or for individuals struggling with unresolved issues.

Such tools could supplement, not replace, traditional grief counseling, providing a unique avenue for emotional processing and healing. Furthermore, families could preserve cherished memories and personality traits, creating a lasting legacy for future generations.

Potential Misapplications of AI Communication Technology

The potential for misuse is substantial. Fraudulent schemes could easily exploit this technology. Imagine sophisticated deepfakes used to impersonate a deceased individual to gain access to financial accounts or manipulate inheritances. Identity theft could become far more insidious, with AI-generated communications used to deceive family and friends. Furthermore, malicious actors could utilize these tools for manipulation, creating false narratives or exploiting emotional vulnerabilities for personal gain.

The potential for psychological harm is also significant; individuals might become overly reliant on these digital interactions, hindering their ability to process grief healthily and move forward with their lives.


Realistic Holograms or Avatars of Deceased Individuals

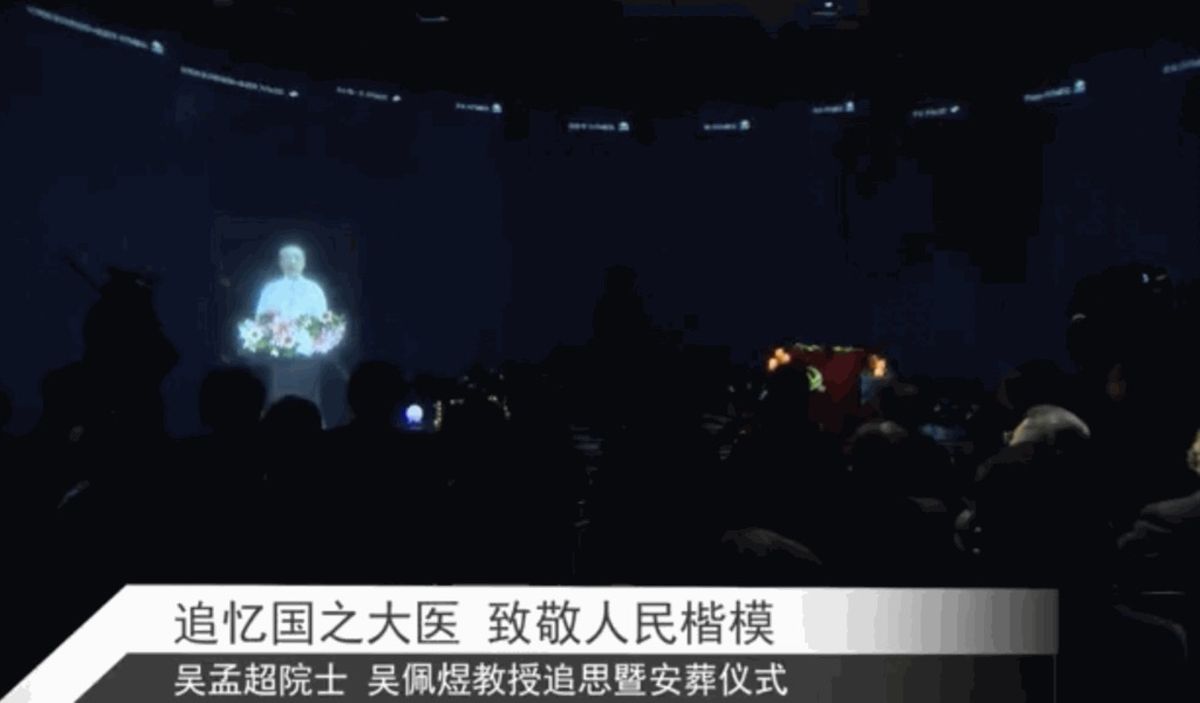

Creating realistic holograms or avatars requires a multi-faceted technological approach. The process involves collecting and processing vast amounts of data about the deceased individual. This includes visual data (photos, videos), audio recordings of their voice, and textual data reflecting their personality and communication style. This data then feeds into sophisticated AI algorithms to create a digital representation.

| Technology | Function | Challenges | Potential Solutions |
|---|---|---|---|
| Deep Learning Models (e.g., GANs) | Generate realistic images and videos of the deceased. | Achieving photorealism and consistency across different images and videos; avoiding uncanny valley effect. | Advanced training datasets, improved GAN architectures, and real-time feedback mechanisms. |
| Natural Language Processing (NLP) | Enable realistic and coherent conversations. | Maintaining consistency in personality and avoiding nonsensical or inappropriate responses. | Fine-tuning NLP models with extensive personal data, incorporating context-aware dialogue systems. |
| Voice Cloning Technology | Replicate the deceased’s voice accurately. | Dealing with limited audio data; ensuring naturalness and emotional inflection. | Advanced speech synthesis techniques, data augmentation, and speaker adaptation. |
| Holographic Projection Systems | Create three-dimensional representations of the deceased. | Cost and complexity of implementation; achieving high-resolution and smooth movement. | Miniaturization of holographic projectors, development of more efficient and affordable systems. |

Fictional Scenario: The Legacy of Elias

Elias, a renowned artist, passed away unexpectedly. His family, devastated by his loss, decided to utilize AI to create a digital avatar of him. Initially, the experience was profoundly healing. His daughter, Sarah, found solace in “talking” to her father, reliving cherished memories and gaining a sense of closure. However, as time went on, Sarah became increasingly reliant on the AI, neglecting her support network and struggling to move forward with her life.

Meanwhile, Elias’s unscrupulous business partner, seeking to exploit his legacy, used the AI to forge his signature on contracts, defrauding Elias’s estate and tarnishing his reputation. This scenario highlights the dual potential of the technology: the capacity for profound healing juxtaposed with the risk of significant harm and exploitation.

The Future of AI and Post-Mortem Communication

The ability to communicate with deceased loved ones through AI is still in its nascent stages, but the potential for advancement is staggering. Current limitations in realism and accuracy are significant hurdles, but ongoing breakthroughs in natural language processing, machine learning, and data synthesis promise to bridge this gap. The ethical considerations remain paramount, but the technological trajectory suggests a future where such interactions become increasingly sophisticated and commonplace.

The rapid evolution of AI technologies will undoubtedly refine the realism and accuracy of AI simulations of deceased individuals.

Advancements in AI for Improved Realism and Accuracy

Future improvements will likely focus on several key areas. More sophisticated natural language processing models will allow for more nuanced and contextually appropriate responses, moving beyond simple pattern matching toward genuine conversation. Advances in personalized AI models, trained on vast datasets of an individual’s writing, speech, and digital footprint, will enable more accurate representation of their personality, beliefs, and communication style.

Furthermore, the integration of advanced emotion recognition and synthesis will make the interactions feel more human and empathetic. Imagine AI that not only mimics the deceased’s voice but also subtly reflects their typical emotional responses in tone and inflection, creating a far more convincing and emotionally resonant experience. This will require breakthroughs in multimodal learning, combining text, audio, and potentially even video data to create a holistic and believable representation.

The use of generative AI models will also play a crucial role, allowing for the creation of new content that aligns with the deceased’s known personality and style, filling gaps in the available data.

Future Applications Beyond Personal Communication

The potential applications extend far beyond personal grief management. Imagine AI simulations of historical figures used in education, letting students question them directly and gain a deeper understanding of their time. Therapists could use AI simulations of deceased loved ones to help patients process grief and trauma, providing a safe and controlled environment for exploring difficult emotions. The creative arts could also be revolutionized, with AI-powered collaborations between living artists and deceased masters.

The possibilities are vast and potentially transformative across multiple sectors.

Long-Term Implications on Understanding Death, Grief, and Memory

The widespread adoption of this technology will undoubtedly reshape our understanding of death, grief, and memory. While some may find comfort and closure in connecting with deceased loved ones, others may grapple with the blurring of boundaries between life and death. The potential for emotional manipulation and the creation of idealized versions of the deceased are significant ethical concerns.

Furthermore, reliance on AI simulations could affect the way we process grief naturally, delaying or hindering the healing process for some. The long-term societal impact on our cultural understanding of death and mourning rituals requires careful consideration and proactive ethical frameworks.

A Hypothetical Future Scenario

Let’s imagine a future, say 2050, where AI-driven post-mortem communication is commonplace.

- Personalized Digital Afterlives: Upon death, individuals have the option to create a personalized digital afterlife, a persistent AI simulation based on their digital footprint and the memories of loved ones. These simulations can be accessed by family and friends for ongoing communication and remembrance.

- AI-Assisted Grief Counseling: Therapists utilize AI simulations of deceased loved ones to guide patients through the grieving process, providing a safe space to explore complex emotions and memories.

- Interactive Historical Experiences: Museums and educational institutions offer interactive exhibits featuring AI simulations of historical figures, allowing visitors to engage in conversations and learn from the past in a deeply immersive way.

- Ethical Debates and Regulations: Stringent regulations govern the creation and use of AI simulations, addressing issues of consent, data privacy, and the potential for emotional manipulation. Ethical review boards oversee the development and deployment of this technology.

- Societal Acceptance and Integration: The technology is widely accepted and integrated into society, with clear societal norms and expectations surrounding its use and limitations. The technology is seen as a tool for remembrance and healing, but not a replacement for living relationships.

Final Thoughts

The ability to speak to the deceased through AI presents a profound challenge to our understanding of grief, memory, and the very nature of human existence. While the technology offers potential solace and healing, it also carries the risk of exploitation and emotional distress. The ethical stakes are high and call for robust guidelines. As AI continues to advance, the conversation surrounding post-mortem communication will undoubtedly become even more complex and crucial, forcing us to confront fundamental questions about life, death, and what it truly means to remember.

Frequently Asked Questions

How accurate are these AI simulations?

Current AI technology can create convincing simulations, but they are not perfect. Accuracy depends heavily on the amount and quality of data used to train the model. The AI may mimic speech patterns but might not perfectly capture the nuances of personality and emotion.

Is this technology widely available?

No, this technology is still in its early stages. While some experimental projects exist, widespread commercial availability is not yet a reality. The ethical and technical challenges are significant.

Could this technology be used for malicious purposes?

Absolutely. The potential for fraud, identity theft, and emotional manipulation is a serious concern. Strict regulations and ethical guidelines are necessary to mitigate these risks.

What about the cost?

The cost of developing and deploying such AI systems is currently high, putting them out of reach for most individuals. However, costs are likely to decrease as the technology develops.