Artificial Intelligence to Combat Cyber Attacks

Artificial intelligence to combat cyber attacks is no longer a futuristic fantasy; it’s the present-day reality reshaping how we defend against increasingly sophisticated online threats. This isn’t just about faster firewalls or more robust antivirus software; it’s about leveraging the power of machine learning and AI to anticipate, detect, and respond to attacks in ways previously unimaginable. We’re talking about systems that can learn from past attacks, identify subtle anomalies in network traffic that would slip past human eyes, and even predict future vulnerabilities before they’re exploited.

This blog post dives into the fascinating world of AI’s role in cybersecurity, exploring its capabilities and the ethical considerations that come with such powerful technology.

From proactively identifying zero-day exploits to automating incident response and even personalizing cybersecurity training, AI is revolutionizing the landscape of digital defense. We’ll explore how AI algorithms analyze massive datasets to pinpoint threats, how it helps manage vulnerabilities more effectively, and how it’s improving network and data security. We’ll also discuss the crucial ethical considerations involved, such as algorithmic bias and the potential for misuse.

Get ready to explore the cutting edge of cybersecurity!

AI-Powered Threat Detection

The digital landscape is constantly evolving, and so are the cyber threats targeting our systems. Traditional security measures often struggle to keep pace with the sophistication and velocity of modern attacks. This is where Artificial Intelligence (AI) steps in, offering a powerful new approach to threat detection and prevention. AI, specifically machine learning, allows us to analyze vast amounts of data, identify subtle patterns, and react to threats in real-time with unprecedented accuracy.

Machine learning algorithms excel at identifying anomalous network traffic indicative of cyberattacks by learning from historical data. These algorithms are trained on massive datasets of normal network activity, allowing them to establish a baseline of expected behavior. Any deviation from this baseline, such as unusual traffic patterns, data transfers to suspicious IP addresses, or unexpected spikes in network activity, triggers an alert, signaling a potential attack.

In general, the more representative data the algorithm is trained on, the more accurate and nuanced its detection becomes. For example, a machine learning model could learn to distinguish between a legitimate surge in traffic during a popular online event and a distributed denial-of-service (DDoS) attack attempting to overwhelm a system.
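As a minimal sketch of the baseline idea described above, the following Python summarizes historical request counts as a mean and standard deviation, then flags new observations whose z-score exceeds a threshold. The function names, the sample numbers, and the threshold of 3 are illustrative assumptions, and a single statistic stands in here for the richer learned models a real system would use.

```python
from statistics import mean, stdev

def build_baseline(history):
    """Summarize normal activity as (mean, standard deviation)."""
    return mean(history), stdev(history)

def is_anomalous(value, baseline, threshold=3.0):
    """Flag a value whose z-score against the baseline exceeds the threshold."""
    mu, sigma = baseline
    if sigma == 0:
        return value != mu
    return abs(value - mu) / sigma > threshold

# Hypothetical requests-per-minute samples from a quiet period.
normal_traffic = [120, 130, 125, 118, 122, 127, 131, 119, 124, 126]
baseline = build_baseline(normal_traffic)

print(is_anomalous(128, baseline))  # close to the usual range
print(is_anomalous(900, baseline))  # far outside the baseline
```

A threshold like this cannot by itself tell a popular event from a DDoS attack; making that distinction would need additional features such as source diversity, session behavior, or time-of-day context.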

Advantages of AI-Driven Intrusion Detection Systems

AI-driven intrusion detection systems (IDS) offer several key advantages over traditional signature-based approaches. Signature-based systems rely on predefined patterns of malicious code or network activity. This means they are only effective against known threats, leaving them vulnerable to zero-day exploits and novel attack techniques. In contrast, AI-based systems can detect anomalies and deviations from established norms, even if they don’t match any known signatures.

This proactive approach allows for the detection of previously unseen threats, providing a critical layer of defense against sophisticated attacks. Furthermore, AI systems can automatically adapt and learn from new data, constantly improving their detection capabilities and reducing the need for manual intervention.

Hypothetical AI System for Zero-Day Exploit Prediction and Prevention

Imagine an AI system that continuously monitors log data from various sources, including network traffic, system events, and application logs. This system utilizes a combination of machine learning techniques, such as anomaly detection and time series analysis, to identify patterns and predict potential zero-day exploits. The system could, for instance, detect unusual system calls, unexpected file access patterns, or unusual user behavior that deviate from established baselines.

Upon detecting a potential threat, the system could automatically trigger mitigation actions, such as isolating affected systems, blocking malicious traffic, or initiating automated incident response procedures. The system would continuously learn and adapt, improving its predictive capabilities over time and becoming increasingly effective at identifying and preventing zero-day attacks. For example, if the system observes a sequence of events that is statistically improbable but leads to a successful exploit in a simulated environment, it can flag similar sequences in a live system as high-risk, even before a formal vulnerability is identified.
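One simple way to illustrate "statistically improbable sequences" is to count which pairs of consecutive events appear in normal logs and then measure how many pairs in a new sequence were never seen. The sketch below does this in Python; the event names and function names are hypothetical, and this n-gram check is a stand-in for the anomaly detection and time series models the hypothetical system would combine.

```python
from collections import Counter

def train_bigrams(sequences):
    """Count consecutive event pairs observed in normal activity."""
    counts = Counter()
    for seq in sequences:
        counts.update(zip(seq, seq[1:]))
    return counts

def unseen_ratio(seq, counts):
    """Fraction of consecutive pairs in seq that never occurred in training."""
    pairs = list(zip(seq, seq[1:]))
    if not pairs:
        return 0.0
    unseen = sum(1 for pair in pairs if counts[pair] == 0)
    return unseen / len(pairs)

# Hypothetical system-call traces from normal operation.
normal_traces = [
    ["open", "read", "close"],
    ["open", "read", "write", "close"],
    ["open", "write", "close"],
]
model = train_bigrams(normal_traces)

suspicious = ["open", "read", "exec", "connect", "write", "close"]
print(unseen_ratio(["open", "read", "write", "close"], model))
print(unseen_ratio(suspicious, model))
```

A sequence with a high unseen ratio would be a candidate for closer inspection rather than proof of an exploit.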

Comparison of AI Algorithms for Threat Detection

The effectiveness of an AI-based threat detection system depends heavily on the choice of machine learning algorithm. Different algorithms have different strengths and weaknesses, making the selection process crucial.

| Algorithm | Strengths | Weaknesses | Example Use Case |
|---|---|---|---|
| Support Vector Machines (SVM) | Effective in high-dimensional spaces, relatively simple to implement | Can be computationally expensive for very large datasets, sensitive to the choice of kernel function | Classifying network traffic as malicious or benign |
| Random Forest | Robust to noise, handles high dimensionality well, provides feature importance estimates | Can be computationally intensive, may overfit if not properly tuned | Detecting phishing emails based on email headers, content, and sender information |
| Neural Networks (Deep Learning) | Can learn complex patterns and relationships, highly adaptable | Requires large amounts of training data, computationally expensive, can be difficult to interpret | Analyzing network traffic patterns to detect advanced persistent threats (APTs) |
| Long Short-Term Memory (LSTM) networks | Excellent for time-series data, capable of capturing long-range dependencies | Computationally expensive, requires significant training data, difficult to interpret | Predicting future network traffic based on historical patterns to anticipate DDoS attacks |

AI in Vulnerability Management

The integration of Artificial Intelligence (AI) into vulnerability management is revolutionizing how organizations identify, prioritize, and remediate security weaknesses. AI’s ability to process vast amounts of data far surpasses human capabilities, enabling faster and more accurate vulnerability assessments and ultimately leading to stronger cybersecurity postures. This enhanced speed and accuracy allows for proactive security measures, reducing the window of opportunity for attackers.

AI algorithms can analyze codebases, network traffic, and system configurations to pinpoint vulnerabilities that might otherwise go undetected. This proactive approach shifts the paradigm from reactive patching to preventative security, minimizing the risk of exploitation.

Automated Vulnerability Assessment Process

A step-by-step process for using AI to automatically assess software vulnerabilities typically involves several key stages. First, AI tools gather data from various sources, including static code analysis (examining code without execution), dynamic code analysis (examining code during execution), and vulnerability databases. This data is then pre-processed and cleaned to ensure accuracy and consistency. Next, machine learning models are employed to identify patterns and anomalies indicative of vulnerabilities.

These models, often trained on massive datasets of known vulnerabilities, can recognize subtle indicators that might escape human analysts. Following the identification, the AI system prioritizes vulnerabilities based on severity and exploitability, providing a ranked list for remediation efforts. Finally, the system generates detailed reports outlining the identified vulnerabilities, their locations, and potential remediation strategies.

Ethical Implications of AI-Driven Vulnerability Identification

The use of AI to identify vulnerabilities in systems without explicit permission raises significant ethical concerns. Unauthorized scanning of systems, even for security purposes, could be considered a violation of privacy or even illegal depending on jurisdiction and the specific context. It is crucial that any AI-powered vulnerability scanning adheres strictly to legal and ethical guidelines, respecting the boundaries of ownership and privacy.

Transparency and informed consent are paramount, ensuring that system owners are aware of the scanning process and its implications. The potential for misuse, such as targeting specific organizations for malicious purposes, highlights the importance of responsible AI development and deployment in this domain.

Prioritization of Vulnerability Remediation Efforts

AI significantly enhances the prioritization of vulnerability remediation efforts by employing sophisticated risk assessment models. These models consider various factors, including the severity of the vulnerability (e.g., CVSS score), the likelihood of exploitation, the potential impact on the organization, and the availability of remediation patches. For example, a high-severity vulnerability in a critical system with a readily available patch will receive higher priority than a low-severity vulnerability in a less critical system with no immediate patch.

This allows security teams to focus their resources on the most pressing threats, maximizing the effectiveness of their remediation efforts. This prioritization also reduces the overall time and resources spent on less impactful vulnerabilities.
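The factors listed above can be combined into a single ranking score. The Python sketch below is one hypothetical way to do so: the multiplicative formula, the 1.2 boost for patchable findings, and all field names and sample values are assumptions for illustration, not a standard scoring method.

```python
from dataclasses import dataclass

@dataclass
class Finding:
    name: str
    cvss: float                # CVSS base score, 0.0 to 10.0
    exploit_likelihood: float  # assumed estimate in [0, 1]
    asset_criticality: float   # assumed weight in [0, 1]
    patch_available: bool

def risk_score(finding):
    """Combine severity, likelihood, and impact into one number."""
    score = (finding.cvss / 10.0) * finding.exploit_likelihood * finding.asset_criticality
    if finding.patch_available:
        score *= 1.2  # assumed boost: a fix exists, so acting now is cheap
    return score

def prioritize(findings):
    """Return findings ordered from most to least urgent."""
    return sorted(findings, key=risk_score, reverse=True)

findings = [
    Finding("printer-info-leak", 3.1, 0.2, 0.2, False),
    Finding("db-rce", 9.8, 0.9, 1.0, True),
    Finding("intranet-xss", 6.1, 0.5, 0.4, True),
]
for finding in prioritize(findings):
    print(finding.name, round(risk_score(finding), 4))
```

In practice the likelihood and criticality inputs would come from a trained model or asset inventory rather than hand-entered numbers.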

AI-Assisted Secure Software Development

AI can play a vital role in the development of more secure software by identifying potential vulnerabilities during the coding phase. Static and dynamic application security testing (SAST and DAST) tools, enhanced with AI capabilities, can analyze code in real-time, flagging potential vulnerabilities as they are introduced. This allows developers to address security flaws early in the development lifecycle, reducing the cost and complexity of fixing them later.

AI can also learn from past vulnerabilities, identifying patterns and predicting potential weaknesses before they are even exploited. This proactive approach fosters a security-first mindset throughout the software development process. For instance, AI can detect common coding errors that often lead to vulnerabilities, such as SQL injection or cross-site scripting (XSS) flaws.
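To make the SQL injection example concrete, here is a small Python sketch using the standard `sqlite3` module that contrasts the kind of string-concatenated query a scanner would flag with its parameterized equivalent. The table, the sample rows, and the function names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
conn.executemany("INSERT INTO users VALUES (?, ?)",
                 [("alice", "admin"), ("bob", "user")])

def find_user_unsafe(name):
    # Vulnerable pattern: user input is concatenated into the SQL string.
    query = "SELECT name FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()

def find_user_safe(name):
    # Parameterized query: the input is bound as data, never parsed as SQL.
    return conn.execute("SELECT name FROM users WHERE name = ?", (name,)).fetchall()

payload = "x' OR '1'='1"
print(len(find_user_unsafe(payload)))  # the injected condition matches every row
print(len(find_user_safe(payload)))    # no user has this literal name
```

The first function is the pattern a SAST tool would be expected to report; the second is the usual remediation.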

AI-Driven Incident Response

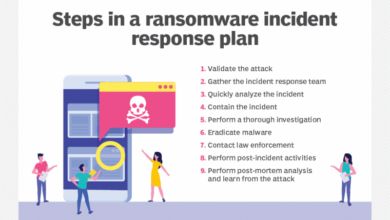

The speed and complexity of modern cyberattacks often overwhelm even the most skilled human security teams. This is where AI steps in, offering the potential to revolutionize incident response by automating tasks, accelerating analysis, and ultimately improving the overall effectiveness of security operations. AI’s ability to process vast amounts of data far surpasses human capabilities, allowing for quicker identification of threats and faster mitigation strategies.

AI can significantly automate various incident response procedures. This automation leads to faster containment and eradication of malware, reducing the overall impact of an attack. For example, AI-powered systems can automatically quarantine infected systems, block malicious network traffic, and even initiate automated remediation processes such as removing malware or patching vulnerabilities, all within minutes of detection. This speed is critical in minimizing the damage caused by a breach.
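The automated actions described above are often organized as a playbook that maps a type of alert to a list of containment steps. The following Python sketch shows the idea; the alert types, action labels, and the 0.8 confidence cutoff are hypothetical, and the actions are plain strings rather than calls into any real security product.

```python
# Hypothetical playbook: alert type -> ordered containment actions.
PLAYBOOK = {
    "malware": ["quarantine_host", "remove_malware"],
    "c2_traffic": ["block_ip", "quarantine_host"],
    "unpatched_exploit": ["block_ip", "schedule_patch"],
}

def plan_response(alert, min_confidence=0.8):
    """Pick automated actions, or hand off to a human when unsure."""
    actions = PLAYBOOK.get(alert["type"], [])
    if not actions or alert["confidence"] < min_confidence:
        return ["escalate_to_analyst"]
    return list(actions)

print(plan_response({"type": "malware", "confidence": 0.95}))
print(plan_response({"type": "malware", "confidence": 0.40}))
print(plan_response({"type": "unknown_behavior", "confidence": 0.99}))
```

Routing low-confidence or unrecognized alerts to an analyst keeps automation fast for clear-cut cases while leaving ambiguous ones to human judgment.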

AI vs. Human-Led Incident Response

AI and human-led incident response teams are not mutually exclusive; rather, they are complementary. AI excels at rapid data processing, pattern recognition, and automation of repetitive tasks, while human analysts bring critical thinking, contextual understanding, and the ability to handle nuanced situations that require human judgment. A human-led team might spend hours analyzing log files to pinpoint the source of an attack, while an AI system could perform the same task in minutes, identifying anomalies and suspicious activities that might be missed by human eyes.

However, the AI’s findings would still require human validation and interpretation to ensure accuracy and avoid false positives. Ultimately, the most effective approach combines the strengths of both AI and human expertise.

AI-Driven Threat Intelligence Analysis and Improved Incident Response Strategies

A comprehensive plan leveraging AI for threat intelligence analysis and improved incident response strategies would involve several key steps. First, AI algorithms can analyze vast quantities of threat intelligence feeds from various sources (e.g., security information and event management (SIEM) systems, threat intelligence platforms, open-source intelligence) to identify emerging threats and predict potential attack vectors. This proactive approach allows security teams to prepare defenses and implement preventative measures before an attack occurs.

Second, AI can create detailed profiles of known attackers, their tactics, techniques, and procedures (TTPs), and their preferred targets. This allows for faster identification of attacks and more effective mitigation strategies. Third, AI can simulate various attack scenarios to test the effectiveness of current security measures and identify weaknesses. Finally, AI can continuously learn and adapt, refining its threat detection and response capabilities over time based on the analysis of past incidents and new threat information.

This continuous improvement cycle is crucial for maintaining a strong security posture in the ever-evolving threat landscape.

AI’s Role in Identifying Root Cause and Mitigating Future Occurrences

AI’s ability to analyze massive datasets allows it to identify subtle patterns and correlations that might be missed by human analysts. This capability is crucial for pinpointing the root cause of a cyberattack. For example, AI can analyze network traffic, log files, and endpoint data to trace the attack path, identify the initial entry point, and determine how the attackers moved laterally within the network.

By understanding the root cause, organizations can implement targeted mitigation strategies to prevent similar attacks in the future. This might involve patching vulnerabilities, strengthening access controls, improving security awareness training, or implementing new security technologies. For instance, if an AI system identifies a vulnerability in a specific software application as the root cause of a data breach, the organization can prioritize patching that vulnerability across all affected systems, thereby reducing the risk of future attacks exploiting the same weakness.

This proactive approach, powered by AI’s analytical capabilities, is key to building a more resilient security posture.

AI for Cybersecurity Training and Education

The cybersecurity landscape is constantly evolving, with new threats emerging daily. Traditional training methods struggle to keep pace, making AI-powered solutions crucial for equipping professionals with the skills needed to effectively combat these challenges. AI can personalize learning, create dynamic simulations, and automate assessment, leading to more effective and efficient cybersecurity training programs.

AI-Focused Cybersecurity Training Curriculum

A comprehensive AI-focused cybersecurity training program should incorporate a multi-faceted curriculum. This curriculum should not only cover theoretical concepts but also provide hands-on experience with AI-powered tools and techniques. The program could be structured around several key modules: Introduction to AI and Machine Learning in Cybersecurity; AI-Powered Threat Detection and Analysis; AI in Vulnerability Management; AI-Driven Incident Response; Ethical Considerations of AI in Cybersecurity; and Advanced Topics in AI for Cybersecurity.

Each module would include lectures, practical exercises, and case studies showcasing real-world applications of AI in cybersecurity.

Interactive AI-Powered Cybersecurity Simulations

Interactive simulations offer a safe and controlled environment for cybersecurity professionals to practice their skills. AI can significantly enhance these simulations by creating dynamic and unpredictable scenarios. For example, an AI-powered simulation could mimic a sophisticated phishing attack, adapting its tactics based on the trainee’s responses. The AI could also simulate the actions of malicious actors, introducing unexpected vulnerabilities and challenges.

This adaptive approach allows trainees to develop critical thinking and problem-solving skills in a realistic, yet safe, environment. The system could provide immediate feedback, highlighting areas for improvement and reinforcing successful strategies. Think of it as a virtual war game, but focused on cybersecurity skills development.

Personalized Cybersecurity Training via AI

AI can personalize the learning experience based on individual learning styles and skill levels. By analyzing a trainee’s performance on assessments and simulations, the AI can tailor the curriculum to focus on areas where improvement is needed. For instance, a trainee struggling with incident response might receive more focused training in that area, while a trainee proficient in threat detection could move on to more advanced topics.

This personalized approach ensures that training is efficient and effective, maximizing the trainee’s learning outcomes. This could involve adaptive learning platforms that adjust the difficulty and content based on real-time performance feedback. For example, if a trainee consistently misses questions on a particular type of malware, the platform could automatically provide additional resources and exercises focused on that specific malware family.
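A minimal sketch of that adaptive step, assuming the platform stores each trainee's answers per topic as a list of correct/incorrect results: it computes accuracy per topic and recommends the weakest one below a mastery threshold. The topic names, the 0.8 threshold, and the function name are illustrative assumptions.

```python
def next_topic(results, mastery=0.8):
    """Return the weakest topic below the mastery threshold, or None.

    results maps a topic name to a list of booleans (True = correct answer).
    """
    accuracy = {topic: sum(answers) / len(answers)
                for topic, answers in results.items() if answers}
    weak = {topic: acc for topic, acc in accuracy.items() if acc < mastery}
    if not weak:
        return None
    return min(weak, key=weak.get)

trainee = {
    "phishing": [True, True, True, True],
    "ransomware": [True, False, False, True],
    "incident_response": [False, False, True, False],
}
print(next_topic(trainee))
```

A real adaptive platform would likely weight recent answers more heavily and account for question difficulty, which this sketch ignores.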

AI-Powered Cybersecurity Knowledge and Skills Assessment Platform

An AI-powered platform can automate the assessment of cybersecurity knowledge and skills. This platform could use various methods, including multiple-choice questions, coding challenges, and interactive simulations. The AI could analyze the trainee’s responses to identify strengths and weaknesses, providing detailed feedback and personalized recommendations for improvement. Furthermore, the platform could continuously adapt its assessment methods based on the trainee’s performance, ensuring that the assessment remains challenging and relevant.

The platform could also track the trainee’s progress over time, providing a comprehensive overview of their skill development. A robust reporting system would allow trainers to monitor individual and group performance, enabling data-driven adjustments to the training program. Imagine a system that not only grades the answers but also analyzes the approach taken by the trainee, providing feedback on their problem-solving methodology and identifying potential blind spots.

Ethical Considerations of AI in Cybersecurity

The increasing reliance on artificial intelligence (AI) in cybersecurity presents a complex ethical landscape. While AI offers powerful tools to combat evolving cyber threats, its deployment raises significant concerns regarding bias, privacy, misuse, and responsible development. Understanding and addressing these ethical dilemmas is crucial for ensuring the safe and beneficial integration of AI into our digital defenses.

AI Algorithm Bias in Cybersecurity

AI algorithms learn from the data they are trained on. If this data reflects existing societal biases, the resulting AI system will likely perpetuate and even amplify those biases. For example, an AI system trained primarily on data from one geographic region or demographic group might be less effective at detecting threats targeting other populations. This can lead to unequal protection and increased vulnerability for certain groups.

Mitigation strategies involve careful data curation to ensure representation from diverse sources, rigorous testing for bias, and ongoing monitoring of the AI system’s performance across different demographics. Transparency in the algorithms used and the data sets employed is also critical to identifying and addressing potential biases.

Privacy Implications of AI-Driven Cybersecurity Analysis

AI-powered cybersecurity systems often analyze vast datasets of user activity to identify threats. This analysis can inadvertently expose sensitive personal information, raising serious privacy concerns. For instance, analyzing browsing history or communication patterns could reveal private details about an individual’s political affiliations, religious beliefs, or health status. To mitigate these risks, strong data anonymization and aggregation techniques are necessary.

Implementing robust privacy-preserving technologies, such as differential privacy or federated learning, can allow for valuable threat detection without compromising individual privacy. Furthermore, clear and transparent privacy policies outlining how user data is collected, used, and protected are essential.
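To give a feel for differential privacy, the sketch below applies the Laplace mechanism to a simple counting query, such as "how many users triggered this alert today". The function names and the example count are hypothetical; the noise scale of 1/epsilon follows from a count changing by at most 1 when one person is added or removed.

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(true_count, epsilon, rng=None):
    """Release a count with epsilon-differential privacy.

    A counting query has sensitivity 1, so the noise scale is 1 / epsilon.
    """
    if rng is None:
        rng = random.Random()
    return true_count + laplace_noise(1.0 / epsilon, rng)

# Smaller epsilon means stronger privacy and noisier answers.
print(private_count(100, epsilon=1.0))
print(private_count(100, epsilon=0.1))
```

Each released value is noisy, but averages over many users stay useful for threat detection while any single individual's contribution is masked.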

Risks of AI Misuse in Cyber Warfare and Cybercrime

The same AI technologies used to defend against cyberattacks can be weaponized for malicious purposes. AI can automate the creation of sophisticated phishing campaigns, accelerate the development of malware, and enhance the effectiveness of denial-of-service attacks. The potential for AI-powered autonomous weapons systems raises even more profound ethical concerns, potentially leading to unpredictable and devastating consequences in cyber warfare.

International cooperation and the development of ethical guidelines for the development and deployment of AI in cybersecurity are vital to preventing its misuse. Robust cybersecurity defenses, including AI-powered threat intelligence systems, are necessary to counteract these threats.

Strategies for Responsible AI Development in Cybersecurity

Ensuring the responsible development and deployment of AI in cybersecurity requires a multi-faceted approach. This includes establishing clear ethical guidelines and standards for AI development, fostering collaboration between researchers, policymakers, and industry stakeholders, and prioritizing transparency and accountability in AI systems. Regular audits and evaluations of AI systems are needed to identify and address potential biases and vulnerabilities.

Investing in education and training programs to build a workforce equipped to understand and manage the ethical implications of AI in cybersecurity is also crucial. Furthermore, robust regulatory frameworks are needed to ensure compliance with ethical standards and protect user rights.

AI and Network Security

AI is revolutionizing network security, offering powerful tools to detect and respond to threats far more effectively than traditional methods. Its ability to analyze massive datasets in real time allows for proactive security measures, significantly improving the overall network security posture. This enhanced capability is crucial in today’s complex and ever-evolving threat landscape.

AI enhances network segmentation by analyzing network traffic patterns and user behavior to identify optimal segmentation strategies. This goes beyond simple rule-based segmentation, dynamically adapting to changing network conditions and user activities. Instead of static segmentation, AI can create micro-segmentation, isolating sensitive data and applications more effectively. This limits the impact of a breach, preventing attackers from moving laterally across the network.

AI-Enhanced Network Segmentation

AI algorithms analyze network traffic, user behavior, and application dependencies to identify logical groupings of devices and users. This allows for the creation of highly granular network segments, isolating critical assets and minimizing the blast radius of a security breach. For example, AI can identify all devices accessing a specific database and automatically segment them, regardless of their physical location or department.
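The database example can be sketched as grouping devices by the set of services they are observed talking to. The Python below does exactly that with hypothetical device and service names; it is a deterministic grouping meant to show the shape of the output, where a real system would more likely use a clustering model that tolerates noisy or partially overlapping behavior.

```python
from collections import defaultdict

def propose_segments(flows):
    """Group devices that access exactly the same set of services.

    flows is an iterable of (device, service) pairs observed on the network.
    """
    services_by_device = defaultdict(set)
    for device, service in flows:
        services_by_device[device].add(service)

    segments = defaultdict(list)
    for device, services in services_by_device.items():
        segments[frozenset(services)].append(device)
    return {key: sorted(devices) for key, devices in segments.items()}

observed = [
    ("laptop-1", "crm-db"),
    ("laptop-2", "crm-db"),
    ("kiosk-1", "print-server"),
    ("laptop-1", "crm-db"),
]
for services, devices in propose_segments(observed).items():
    print(sorted(services), devices)
```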

This dynamic approach contrasts sharply with traditional, static segmentation methods which often lag behind actual network usage and vulnerabilities. This improved granularity minimizes the attack surface and enhances overall security.

AI Optimization of Firewall Rules and Intrusion Prevention Systems

AI can significantly improve the effectiveness of firewalls and intrusion prevention systems (IPS) by automating rule creation and optimization. Traditional methods rely heavily on manual rule creation, which is often slow, error-prone, and unable to keep pace with the ever-growing number of sophisticated threats. AI, however, can analyze vast amounts of network traffic to identify patterns indicative of malicious activity and automatically generate or adjust firewall rules to block these threats in real-time.

This automation reduces the workload on security teams and improves the accuracy and speed of threat response. For instance, AI can detect anomalies in network traffic that indicate a potential intrusion attempt, and automatically create a firewall rule to block the malicious traffic source, preventing further damage.

Visual Representation of AI-Improved Network Monitoring

Imagine a dynamic dashboard displaying a network map. Nodes represent individual devices and servers, colored according to their security status (green for secure, yellow for potential risk, red for compromised). Thick lines connecting nodes represent high-bandwidth communication channels, while thin lines indicate low-bandwidth connections. The thickness and color of these lines change in real-time based on AI analysis of network traffic.

Anomalies, such as unusual traffic spikes or communication patterns, are highlighted with flashing alerts and visual cues. A separate panel displays a real-time threat score, calculated by the AI, which summarizes the overall network security posture. The dashboard also features a heatmap showing areas of the network with the highest concentration of vulnerabilities or suspicious activity, allowing security teams to quickly identify and address critical issues.

This visual representation provides a clear and concise overview of the network’s security status, enabling proactive threat detection and response.

AI in Denial-of-Service Attack Detection and Prevention

AI algorithms can effectively detect and mitigate denial-of-service (DoS) attacks by analyzing network traffic patterns and identifying anomalies that indicate a DoS attack in progress. Traditional methods often struggle to differentiate between legitimate traffic spikes and malicious DoS attacks. AI, however, can identify subtle patterns in traffic volume, source IP addresses, and packet characteristics that are indicative of a coordinated DoS attack.

This allows for rapid detection and response, minimizing the impact of the attack. Furthermore, AI can automate mitigation strategies, such as rerouting traffic or blocking malicious sources, to protect network resources and maintain service availability. For example, an AI system could detect a sudden surge in traffic from a large number of seemingly unrelated IP addresses, all targeting a specific server, indicating a distributed denial-of-service (DDoS) attack.

It would then automatically implement mitigation strategies such as rate limiting or blacklisting the malicious IP addresses, preventing the server from being overwhelmed.
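The detection step in that example, many unrelated sources converging on one server, can be approximated by counting requests and distinct source addresses per target within a time window. The sketch below uses fixed thresholds and synthetic addresses that are purely illustrative; a learned model would replace the hard-coded cutoffs.

```python
from collections import Counter, defaultdict

def detect_ddos(packets, min_sources=100, min_requests=1000):
    """Return targets hit by many requests from many distinct sources.

    packets is an iterable of (source_ip, target) pairs from one time window.
    """
    requests = Counter()
    sources = defaultdict(set)
    for source_ip, target in packets:
        requests[target] += 1
        sources[target].add(source_ip)
    return [target for target in requests
            if requests[target] >= min_requests
            and len(sources[target]) >= min_sources]

# Synthetic window: 200 sources flood web-1, 5 sources use web-2 normally.
window = [(f"10.0.{i // 256}.{i % 256}", "web-1") for i in range(200) for _ in range(10)]
window += [(f"192.168.1.{i}", "web-2") for i in range(5) for _ in range(20)]

print(detect_ddos(window))
```

Targets returned by this check would then be candidates for the rate limiting or source blocking described above.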

AI and Data Security

The integration of artificial intelligence (AI) into data security practices is no longer a futuristic concept; it’s a necessity. The sheer volume and complexity of modern data necessitate solutions that go beyond traditional security measures. AI offers a powerful toolkit to enhance data protection, offering speed, adaptability, and analytical capabilities far surpassing human capacity. This allows for proactive defense strategies, a critical element in today’s rapidly evolving threat landscape.

AI significantly improves data security by automating and optimizing several key processes. Its ability to analyze massive datasets allows for the identification of subtle patterns and anomalies that might indicate impending threats, which human analysts could not spot efficiently at that scale. This proactive approach allows for quicker responses and minimizes potential damage.

AI-Enhanced Encryption and Decryption

AI does not make the underlying cryptographic algorithms stronger, but it can inform how encryption is managed. For example, real-time threat analysis can trigger more frequent key rotation or stricter key-access policies when risk is elevated, which limits how much data any single compromised key exposes. AI can also help optimize how decryption requests are handled, for instance by analyzing user behavior to prioritize urgent requests from authorized users while applying more stringent security checks to unusual or less time-sensitive access.

AI-Driven Real-Time Breach Detection and Prevention

AI-powered security systems continuously monitor network traffic and user activity, looking for deviations from established baselines. This real-time monitoring enables the immediate detection of suspicious activities, such as unauthorized access attempts or data exfiltration. The AI can then automatically trigger preventative measures, such as blocking malicious connections or isolating compromised systems, significantly reducing the impact of a potential breach.

A practical example is an AI system detecting unusual login attempts from an unfamiliar geographic location and immediately locking the account, preventing unauthorized access.
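One common rule behind "unfamiliar location" checks is impossible travel: two consecutive logins that are too far apart to be reached in the elapsed time. The sketch below implements that rule with the haversine distance formula; the 900 km/h speed limit, the tuple layout, and the sample coordinates are assumptions, and a production system would combine this with other signals before locking an account.

```python
from math import radians, sin, cos, asin, sqrt

def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points in kilometers."""
    lat1, lon1, lat2, lon2 = map(radians, (lat1, lon1, lat2, lon2))
    a = sin((lat2 - lat1) / 2) ** 2 + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2
    return 2 * 6371.0 * asin(sqrt(a))

def is_impossible_travel(prev, curr, max_speed_kmh=900.0):
    """prev and curr are (lat, lon, unix_seconds) for consecutive logins."""
    distance = haversine_km(prev[0], prev[1], curr[0], curr[1])
    hours = (curr[2] - prev[2]) / 3600.0
    if hours <= 0:
        return distance > 0
    return distance / hours > max_speed_kmh

london = (51.5074, -0.1278, 0)
sydney_one_hour_later = (-33.8688, 151.2093, 3600)
paris_three_hours_later = (48.8566, 2.3522, 3 * 3600)

print(is_impossible_travel(london, sydney_one_hour_later))
print(is_impossible_travel(london, paris_three_hours_later))
```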

AI in Data Loss Prevention (DLP) Systems

AI significantly boosts the effectiveness of DLP systems. By analyzing data content, context, and user behavior, AI can identify sensitive information that might be at risk of being leaked or lost. This includes identifying confidential documents, personally identifiable information (PII), and intellectual property. AI-powered DLP systems can then automatically prevent the unauthorized transfer or deletion of this sensitive data, either through blocking actions or alerting security personnel.

For instance, an AI system might detect an employee attempting to email a large file containing customer PII to a personal email address and immediately flag it for review, preventing a potential data breach.
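The email scenario can be sketched as two checks: does the message contain something that looks like PII, and is the recipient outside the organization? The Python below uses two simple regular expressions as a stand-in for the content classifiers an AI-powered DLP system would use; the patterns, the `example.com` internal domain, and the function names are illustrative assumptions.

```python
import re

# Simplified, illustrative patterns; real DLP rules are far more thorough.
PII_PATTERNS = {
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}

def find_pii(text):
    """Count matches for each PII pattern that appears in the text."""
    counts = {}
    for name, pattern in PII_PATTERNS.items():
        matches = pattern.findall(text)
        if matches:
            counts[name] = len(matches)
    return counts

def should_flag(recipient, body, internal_domain="example.com"):
    """Flag messages that send PII to an address outside the organization."""
    external = not recipient.lower().endswith("@" + internal_domain)
    return external and bool(find_pii(body))

body = "Customer list: Jane Doe 123-45-6789, jane@client.org"
print(find_pii(body))
print(should_flag("me@gmail.com", body))
print(should_flag("colleague@example.com", body))
```

Flagged messages would go to review rather than being silently dropped, matching the alerting behavior described above.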

Best Practices for Securing AI Systems

Securing AI systems themselves is crucial, as a compromised AI could become a potent weapon for attackers. The following best practices are essential:

- Regular security audits and penetration testing to identify vulnerabilities.

- Implementing robust access control measures, limiting access to sensitive AI components and data.

- Employing strong encryption techniques to protect both AI models and the data they process.

- Using anomaly detection systems to monitor AI system behavior and detect any unusual activity that could indicate a compromise.

- Regularly updating AI system software and libraries to patch known vulnerabilities.

- Implementing robust data sanitization procedures to remove sensitive information from AI training data.

- Training personnel on AI security best practices and threat awareness.

Final Review: Artificial Intelligence To Combat Cyber Attacks

The integration of artificial intelligence into cybersecurity is not just an incremental improvement; it’s a paradigm shift. As cyber threats grow more complex and frequent, AI offers a powerful arsenal of tools to combat them. While ethical considerations remain paramount, the potential benefits – from proactive threat detection to automated incident response – are undeniable. The future of cybersecurity is intelligent, and this intelligent future is here.

By understanding and responsibly implementing AI-powered solutions, we can create a safer and more secure digital world. Let’s continue to explore and innovate in this crucial field, pushing the boundaries of what’s possible in the ongoing battle for online safety.

Common Queries

What are the limitations of AI in cybersecurity?

AI systems are only as good as the data they’re trained on. Biased data can lead to inaccurate results, and AI can be vulnerable to adversarial attacks designed to fool its algorithms. Furthermore, AI can’t replace human expertise entirely; human judgment and oversight remain crucial.

How expensive is implementing AI-powered cybersecurity solutions?

The cost varies greatly depending on the scale and complexity of the solution. Smaller businesses might opt for cloud-based AI security services, while larger organizations may invest in custom-built systems. The initial investment can be significant, but the potential cost savings from preventing breaches often outweigh the expense.

Can AI prevent all cyberattacks?

No, AI is not a silver bullet. While it significantly enhances cybersecurity capabilities, it’s not foolproof. Sophisticated, well-resourced attackers can still find ways to circumvent AI-based defenses. A layered security approach combining AI with other methods is essential.