Beware of OpenAI and ChatGPT-4 Turbo in Finance: The Growing API Attack Surface

Beware of OpenAI and ChatGPT-4 Turbo in financial services organizations’ growing API attack surface: that’s the chilling reality we face. The rapid adoption of APIs in finance, while offering incredible efficiency, has inadvertently created a massive, vulnerable landscape ripe for exploitation. This isn’t just about a few rogue hackers; the potential for sophisticated, AI-powered attacks is growing rapidly, threatening the very foundations of our financial systems.

We’ll delve into the specifics of these threats, exploring how large language models could be weaponized against financial institutions and examining the crucial steps needed to protect ourselves.

Think about it: AI can now generate incredibly realistic phishing emails, craft convincing social engineering schemes, and even write malicious code to exploit API vulnerabilities. This isn’t science fiction; it’s a present-day danger. We’ll examine real-world examples, discuss the latest security measures, and explore how AI itself can be used to combat this growing threat. We’ll also cover the regulatory landscape and the serious legal consequences of failing to secure these vital systems.

The Expanding API Attack Surface in Financial Services

The financial services industry’s increasing reliance on Application Programming Interfaces (APIs) to power digital services and streamline internal operations has inadvertently created a significantly larger attack surface for cybercriminals. This shift towards API-driven architectures, while offering benefits like improved efficiency and customer experience, exposes organizations to new and evolving threats. Understanding this expanding attack surface is crucial for mitigating potential risks and ensuring the security of sensitive financial data.

Current State of API Usage in Financial Services

APIs are now fundamental to the functioning of modern financial institutions. They facilitate interactions between internal systems, enable the development of third-party applications (like mobile banking apps), and power crucial services such as payment processing, account management, and fraud detection. This widespread adoption, however, creates a complex network of interconnected systems, each presenting a potential entry point for malicious actors.

The sheer number of APIs deployed, coupled with their often-complex interdependencies, significantly increases the difficulty of maintaining robust security across the entire ecosystem.

Vulnerabilities Inherent in Widely Adopted APIs

Many commonly used APIs suffer from inherent vulnerabilities that can be exploited by attackers. These vulnerabilities often stem from poor design, inadequate testing, or insufficient security controls. Examples include insecure authentication mechanisms, lack of input validation, insufficient authorization checks, and the exposure of sensitive data through improperly configured APIs. The use of outdated or unsupported API versions further exacerbates these risks, as security patches and updates may not be applied consistently.

Many APIs also lack proper logging and monitoring capabilities, hindering the detection of malicious activity.

How Increasing Reliance on APIs Expands the Attack Surface

The expanding use of APIs directly correlates with an expanding attack surface. Each API represents a potential point of entry for attackers. As financial institutions adopt more APIs to support new services and integrations, the number of potential attack vectors increases proportionally. Furthermore, the interconnected nature of APIs means that a successful attack on a single API can potentially compromise the entire system, leading to widespread data breaches and financial losses.

The complexity of these interconnected systems also makes identifying and addressing vulnerabilities more challenging.

Specific Types of Attacks Targeting Financial APIs

Financial APIs are targeted by a range of sophisticated attacks. Injection attacks, such as SQL injection and cross-site scripting (XSS), allow attackers to manipulate API requests to gain unauthorized access to data or execute malicious code. Data breaches, often facilitated by exploiting vulnerabilities in API authentication or authorization, can lead to the theft of sensitive customer information, including account details, personal data, and financial transactions.


Denial-of-service (DoS) attacks can disrupt API functionality, rendering crucial financial services unavailable to legitimate users. Furthermore, API manipulation can be used to facilitate fraudulent transactions or to manipulate financial markets.
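To make the injection risk described above concrete, here is a minimal sketch using Python’s built-in `sqlite3` module. The `accounts` table, the sample rows, and the function names are hypothetical and exist only for illustration. The sketch contrasts a query built by string concatenation with a parameterized query.

```python
import sqlite3


def build_demo_db() -> sqlite3.Connection:
    """Create an in-memory database with two hypothetical accounts."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE accounts (owner TEXT, balance REAL)")
    conn.executemany(
        "INSERT INTO accounts VALUES (?, ?)",
        [("alice", 1200.0), ("bob", 87.5)],
    )
    return conn


def lookup_unsafe(conn: sqlite3.Connection, owner: str) -> list:
    # Vulnerable: the caller's input is spliced directly into the SQL text.
    query = f"SELECT owner, balance FROM accounts WHERE owner = '{owner}'"
    return conn.execute(query).fetchall()


def lookup_safe(conn: sqlite3.Connection, owner: str) -> list:
    # Safer: the driver binds the value, so it is never parsed as SQL.
    query = "SELECT owner, balance FROM accounts WHERE owner = ?"
    return conn.execute(query, (owner,)).fetchall()


conn = build_demo_db()
payload = "nobody' OR '1'='1"          # classic injection string
leaked = lookup_unsafe(conn, payload)  # the OR clause matches every row
blocked = lookup_safe(conn, payload)   # no owner has this literal name
print(len(leaked), len(blocked))
```

With the concatenated query, the injected `OR` clause returns every account; with the bound parameter, the same input is treated as an ordinary (non-matching) name.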

Comparison of Traditional and API-Specific Security Measures

| Security Measure | Traditional Approach | API-Specific Approach | Effectiveness |
|---|---|---|---|
| Authentication | Username/password, multi-factor authentication | OAuth 2.0, OpenID Connect, API keys with rate limiting | Improved with API-specific approaches due to better granularity and control. |
| Authorization | Role-based access control (RBAC) | Fine-grained access control based on API resources and actions, using JSON Web Tokens (JWT) | API-specific approaches offer more precise control over access. |
| Input Validation | Basic input sanitization | Robust input validation, data type checking, and protection against injection attacks | API-specific approaches are essential to prevent injection attacks. |
| Data Protection | Encryption at rest and in transit | Encryption of API requests and responses, data masking, tokenization | Stronger encryption and data masking crucial for API security. |
| Monitoring and Logging | System logs, security information and event management (SIEM) | API-specific monitoring tools, detailed request and response logging, anomaly detection | Dedicated API monitoring is vital for detecting and responding to attacks. |

OpenAI and Large Language Models

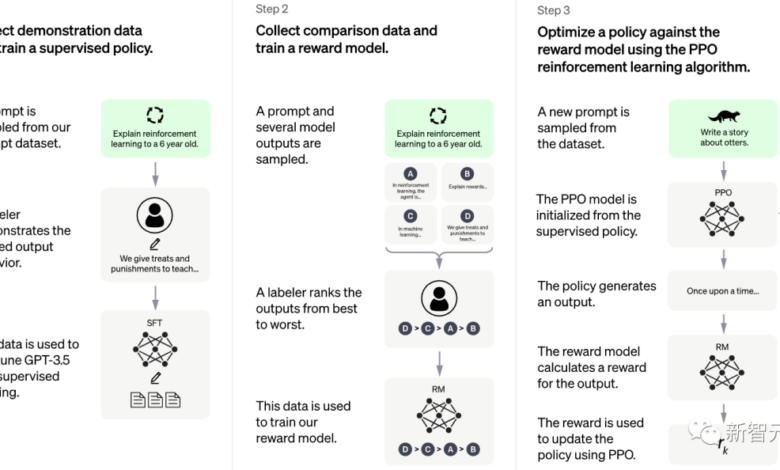

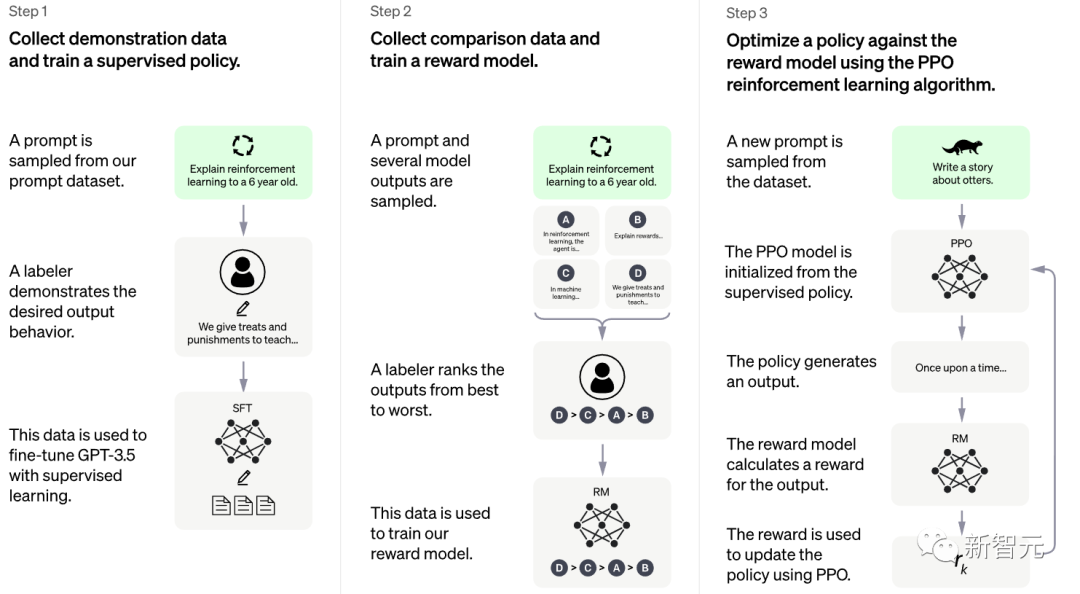

The rise of powerful large language models (LLMs) like those offered by OpenAI presents a double-edged sword for the financial services industry. While these models offer potential benefits in areas like customer service and fraud detection, their capabilities also significantly expand the attack surface, creating new and sophisticated avenues for malicious actors. This post explores the security risks associated with LLMs in the financial sector, focusing on their potential for misuse in targeting financial APIs.

LLMs can generate human-quality text, translate languages, produce many kinds of creative content, and answer questions informatively. This versatility, however, makes them incredibly potent tools for cybercriminals. The ease with which these models can generate convincing phishing emails, craft realistic social engineering scenarios, and even produce malicious code capable of exploiting API vulnerabilities poses a significant threat.

Phishing and Social Engineering Attacks Leveraging LLMs

LLMs can be used to create highly personalized and targeted phishing campaigns. Instead of generic emails, attackers can leverage LLMs to generate emails tailored to specific individuals, incorporating details gleaned from publicly available information or data breaches. These highly targeted attacks are significantly more effective than generic phishing attempts. For example, an LLM could craft an email seemingly from a legitimate financial institution, referencing a specific account detail to build trust and increase the likelihood of a successful attack.

Similarly, LLMs can generate convincing social engineering scenarios, guiding victims through complex steps to compromise their accounts or reveal sensitive information. The ability to dynamically adapt the attack based on the victim’s responses makes these attacks extremely difficult to detect.

Exploiting API Vulnerabilities with LLM-Generated Code

The ability of LLMs to generate code presents another significant risk. Attackers can use LLMs to generate code snippets designed to exploit known vulnerabilities in financial APIs. This eliminates the need for extensive coding expertise, lowering the barrier to entry for malicious actors. For instance, an attacker could provide an LLM with information about a specific API vulnerability and request code to exploit it.

The LLM could then generate the necessary code, potentially including obfuscation techniques to evade detection. This automated code generation significantly accelerates the attack process and allows for rapid exploitation of newly discovered vulnerabilities.

Hypothetical Attack Scenario: Compromising a Financial API

Imagine a scenario where an attacker uses an LLM to target a financial institution’s API responsible for processing wire transfers. First, the attacker uses the LLM to generate a sophisticated phishing email targeting a high-level employee within the finance department. The email contains seemingly legitimate information and a link to a fake login page, also generated by the LLM.

Once the employee’s credentials are compromised, the attacker uses the LLM to generate code that exploits a known vulnerability in the wire transfer API. This code allows the attacker to initiate unauthorized wire transfers, potentially siphoning off significant funds. The attacker might even use the LLM to generate plausible explanations for the transactions, further obscuring their malicious activity.

The entire attack, from initial phishing to successful funds transfer, could be orchestrated with minimal technical expertise, relying heavily on the capabilities of the LLM.

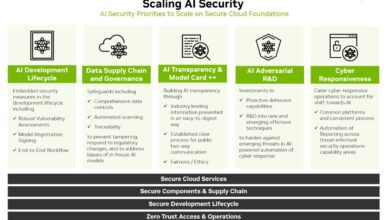

The Role of Generative AI in Threat Detection and Prevention

The increasing sophistication of API attacks necessitates a similarly advanced defense. Generative AI, with its ability to learn from vast datasets and identify complex patterns, offers a powerful new tool in the arsenal of financial services security. By analyzing massive volumes of API traffic data, generative AI can detect anomalies and predict potential threats far more efficiently than traditional methods. This proactive approach allows for faster response times and minimizes the impact of successful attacks.

Generative AI offers several methods for detecting anomalous API activity. It can establish baselines of normal API behavior based on historical data, identifying deviations that might indicate malicious activity. These deviations can range from unusual traffic volumes to unexpected API call sequences or data patterns.

Furthermore, generative AI can learn to recognize subtle patterns indicative of sophisticated attacks, such as those that use polymorphic malware or evade traditional signature-based detection systems.
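The baseline idea can be illustrated without any AI model at all. The sketch below swaps in a plain statistical baseline (mean and standard deviation of historical request counts) in place of a learned one, and flags intervals that deviate by more than a chosen number of standard deviations. The function name, the threshold of 3, and the traffic figures are all assumptions for illustration.

```python
from statistics import mean, stdev


def find_volume_anomalies(baseline, observed, threshold=3.0):
    """Return indexes of observed counts that deviate strongly from the baseline.

    baseline: historical requests-per-minute values considered normal.
    observed: new requests-per-minute values to check.
    threshold: how many standard deviations count as anomalous (assumed value).
    """
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: anything different is a deviation.
        return [i for i, value in enumerate(observed) if value != mu]
    return [
        i for i, value in enumerate(observed)
        if abs(value - mu) / sigma > threshold
    ]


# Hypothetical traffic: a steady baseline, then one minute with a burst.
baseline_counts = [100, 104, 98, 101, 97, 103, 99, 102]
new_counts = [101, 99, 640, 102]
anomalies = find_volume_anomalies(baseline_counts, new_counts)
print(anomalies)
```

A real deployment would track many more signals than volume (call sequences, payload shapes, client identity), but the principle of comparing new behavior against a learned notion of normal is the same.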

AI-Powered Security Solutions for API Protection: A Comparison

Several AI-powered security solutions are emerging to address the growing threat to APIs. These solutions often leverage different machine learning techniques, such as anomaly detection, supervised learning, and reinforcement learning. For example, some solutions focus on real-time anomaly detection, flagging suspicious API activity as it occurs. Others utilize supervised learning models trained on known attack patterns to classify incoming API requests as malicious or benign.

A key differentiator lies in the specific algorithms employed, the breadth of data they analyze, and the level of customization offered to adapt to the unique needs of different financial institutions. A robust solution would incorporate multiple techniques for a layered approach to security.

Predicting and Preventing Future API Attacks with AI

AI’s predictive capabilities are crucial in preventing future attacks. By analyzing historical data on successful and unsuccessful attacks, generative AI can identify common attack vectors and predict future targets. This predictive analysis allows organizations to proactively strengthen their security posture by addressing vulnerabilities before they can be exploited. For example, if an AI model identifies a recurring pattern of attacks targeting a specific API endpoint, security teams can implement additional authentication measures or strengthen access controls to mitigate the risk.

Furthermore, AI can be used to simulate attacks, allowing security teams to test their defenses and identify weaknesses in their security infrastructure. This proactive approach significantly reduces the likelihood of successful attacks.

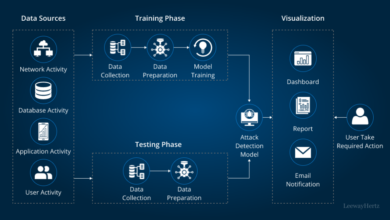

Analyzing API Logs to Identify Potential Threats

AI can efficiently analyze massive API logs, identifying patterns and anomalies that would be impossible for human analysts to detect within a reasonable timeframe. The process involves several steps: data preprocessing (cleaning and formatting log data), feature extraction (identifying relevant features like request frequency, source IP, and response codes), and model training (building an AI model to identify anomalies based on extracted features).

Once trained, the model can continuously monitor API logs, alerting security personnel to potential threats in real-time. For instance, a sudden surge in requests from an unusual geographic location, coupled with unusually high error rates, might indicate a Distributed Denial-of-Service (DDoS) attack. The AI system can automatically flag such events, allowing for immediate investigation and mitigation.
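The preprocessing and feature-extraction steps can be sketched in a few lines. The example below assumes a hypothetical five-field log format (`timestamp ip method path status`) and uses fixed thresholds in place of a trained model, so it is a simplification of the pipeline described above rather than an AI system.

```python
from collections import defaultdict


def extract_features(lines):
    """Aggregate per-IP request counts and error rates from raw log lines."""
    stats = defaultdict(lambda: {"requests": 0, "errors": 0})
    for line in lines:
        parts = line.split()
        if len(parts) != 5:
            continue  # preprocessing: skip malformed lines
        _timestamp, ip, _method, _path, status = parts
        stats[ip]["requests"] += 1
        if status.startswith(("4", "5")):
            stats[ip]["errors"] += 1
    return {
        ip: {
            "requests": s["requests"],
            "error_rate": s["errors"] / s["requests"],
        }
        for ip, s in stats.items()
    }


def flag_suspicious(features, max_requests=5, max_error_rate=0.5):
    """Flag IPs that are both unusually busy and unusually error-prone.

    The thresholds are illustrative assumptions; a trained model would
    replace this rule in the pipeline described in the text.
    """
    return sorted(
        ip for ip, f in features.items()
        if f["requests"] > max_requests and f["error_rate"] > max_error_rate
    )


# Hypothetical sample: one normal client, one client hammering the login API.
sample_log = (
    [f"2024-01-01T10:00:0{i}Z 10.0.0.5 GET /v1/accounts 200" for i in range(3)]
    + [f"2024-01-01T10:01:0{i}Z 203.0.113.9 POST /v1/login 401" for i in range(6)]
    + [f"2024-01-01T10:02:0{i}Z 203.0.113.9 POST /v1/login 200" for i in range(2)]
)
features = extract_features(sample_log)
flagged = flag_suspicious(features)
print(flagged)
```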

Mitigation Strategies and Best Practices

The integration of OpenAI’s technologies, while offering significant potential, introduces considerable security risks for financial services organizations. These risks are amplified by the expanding API attack surface and the sensitive nature of financial data. Robust mitigation strategies are crucial to ensure the secure and responsible deployment of these powerful tools. Failing to address these risks can lead to significant financial losses, reputational damage, and regulatory penalties.

Effective mitigation hinges on a multi-layered approach encompassing robust API security, comprehensive security controls tailored to OpenAI technologies, and a commitment to ongoing security assessments. This involves a proactive and preventative stance, rather than a reactive one, to effectively manage the evolving threat landscape.

API Security Best Practices in Financial Services

Securing APIs is paramount in the financial sector, given their role in connecting various systems and handling sensitive data. A comprehensive approach requires a combination of technical and procedural measures.

- Input Validation and Sanitization: Thoroughly validate and sanitize all API inputs to prevent injection attacks (SQL injection, cross-site scripting, etc.). This includes checking data types, lengths, and formats, and escaping special characters.

- Authentication and Authorization: Implement strong authentication mechanisms (e.g., OAuth 2.0, OpenID Connect) and granular authorization controls to restrict access to sensitive resources based on user roles and permissions. Multi-factor authentication (MFA) should be mandatory for all privileged users.

- Rate Limiting and Throttling: Implement rate limiting and throttling mechanisms to mitigate denial-of-service (DoS) attacks by limiting the number of requests from a single source within a specific timeframe.

- API Gateway Security: Utilize an API gateway to act as a central point of control, enforcing security policies, managing traffic, and providing additional layers of protection such as request filtering and bot detection.

- Regular Security Updates and Patching: Keep all API components and related infrastructure up-to-date with the latest security patches to address known vulnerabilities.

- Robust Logging and Monitoring: Implement comprehensive logging and monitoring of API traffic to detect and respond to suspicious activities. This includes real-time monitoring of API performance and security alerts.
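Of the practices above, rate limiting is the easiest to show in code. The following is a minimal token-bucket sketch, one common way to implement it. The class names, the capacity, and the refill rate are assumptions for illustration; in practice this logic usually lives in the API gateway rather than in application code.

```python
import time


class TokenBucket:
    """Allow short bursts up to `capacity`, refilled at a steady rate."""

    def __init__(self, capacity, refill_per_second, clock=time.monotonic):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self._clock = clock
        self._tokens = float(capacity)
        self._last = clock()

    def allow(self):
        now = self._clock()
        elapsed = now - self._last
        self._last = now
        # Add the tokens earned since the last call, capped at capacity.
        self._tokens = min(
            self.capacity, self._tokens + elapsed * self.refill_per_second
        )
        if self._tokens >= 1:
            self._tokens -= 1
            return True
        return False


class RateLimiter:
    """Keep one bucket per client identifier (for example, an API key)."""

    def __init__(self, capacity, refill_per_second, clock=time.monotonic):
        self._capacity = capacity
        self._refill = refill_per_second
        self._clock = clock
        self._buckets = {}

    def allow(self, client_id):
        if client_id not in self._buckets:
            self._buckets[client_id] = TokenBucket(
                self._capacity, self._refill, self._clock
            )
        return self._buckets[client_id].allow()


# A fake clock makes the behavior easy to follow without sleeping.
fake_now = [0.0]
limiter = RateLimiter(capacity=3, refill_per_second=1.0, clock=lambda: fake_now[0])
burst = [limiter.allow("client-a") for _ in range(5)]
fake_now[0] = 2.0  # two seconds later, two tokens have been refilled
after_wait = limiter.allow("client-a")
print(burst, after_wait)
```

A request that is refused would typically receive an HTTP 429 response, and the per-client buckets keep one noisy caller from exhausting capacity for everyone else.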

Key Security Controls for OpenAI Technologies

The unique characteristics of OpenAI’s large language models necessitate specific security controls to mitigate the inherent risks.

- Data Leakage Prevention: Implement strict data loss prevention (DLP) measures to prevent sensitive financial data from being inadvertently disclosed through prompts or responses generated by OpenAI models. This includes data masking and redaction techniques.

- Prompt Engineering and Input Validation: Carefully design prompts to avoid inadvertently eliciting sensitive information or triggering unintended behavior from the model. Validate and sanitize all inputs to prevent malicious code injection or prompt injection attacks.

- Model Monitoring and Auditing: Continuously monitor the model’s outputs for unexpected or inappropriate behavior. Regularly audit the model’s training data and parameters to ensure compliance with security and privacy regulations.

- Access Control and User Management: Restrict access to OpenAI APIs and models to authorized personnel only. Implement strong access controls and user management practices to prevent unauthorized access or modifications.

- Sandboxing and Isolation: Run OpenAI models in isolated sandboxes to prevent them from accessing or modifying sensitive systems or data outside their designated environment.
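The data leakage control above can be sketched as a redaction step that runs before any text leaves the organization for an external model. The patterns below are deliberately simple, illustrative assumptions (they are not a complete DLP rule set), and the labels and function name are hypothetical.

```python
import re

# Illustrative patterns only; real DLP rules are broader and validated
# (for example, with checksum tests on card numbers).
_PATTERNS = [
    ("EMAIL", re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b")),
    ("IBAN", re.compile(r"\b[A-Z]{2}\d{2}[A-Z0-9]{11,30}\b")),
    ("CARD", re.compile(r"\b\d(?:[ -]?\d){12,15}\b")),
]


def redact(text):
    """Replace sensitive-looking values with placeholders.

    Returns the redacted text and a count of replacements per label,
    which can be logged for auditing without logging the data itself.
    """
    counts = {}
    for label, pattern in _PATTERNS:
        text, replaced = pattern.subn(f"[{label}_REDACTED]", text)
        if replaced:
            counts[label] = replaced
    return text, counts


prompt = (
    "Customer jane.doe@example.com disputes a charge on card "
    "4111 1111 1111 1111 from IBAN DE89370400440532013000."
)
safe_prompt, redaction_counts = redact(prompt)
print(safe_prompt)
print(redaction_counts)
```

Only `safe_prompt` would then be passed to the model, so the original identifiers never appear in prompts, responses, or provider-side logs.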

Regular Security Audits and Penetration Testing

Regular security assessments are crucial for identifying and addressing vulnerabilities before they can be exploited. These assessments should be conducted by independent security experts.

Penetration testing simulates real-world attacks to identify weaknesses in the API security infrastructure. Regular audits ensure compliance with security standards and best practices. This proactive approach helps to maintain a strong security posture.

Implementing Robust API Security Measures: A Step-by-Step Procedure

Implementing robust API security requires a structured approach. This procedure outlines the key steps.

- Risk Assessment: Conduct a thorough risk assessment to identify potential vulnerabilities and prioritize security controls.

- API Design and Development: Design APIs with security in mind, incorporating security best practices from the outset. Follow secure coding practices and use secure libraries and frameworks.

- Security Testing: Conduct thorough security testing, including static and dynamic application security testing (SAST/DAST), and penetration testing.

- Deployment and Monitoring: Deploy APIs to a secure environment and implement robust monitoring and logging to detect and respond to security incidents.

- Incident Response Plan: Develop and regularly test an incident response plan to effectively handle security breaches.

- Continuous Improvement: Regularly review and update security policies and procedures based on emerging threats and vulnerabilities.

Regulatory and Compliance Considerations

The rapid adoption of AI, particularly large language models like ChatGPT, within financial services presents significant regulatory and compliance challenges. The increasing reliance on APIs to facilitate these technologies expands the attack surface, making robust security measures paramount to avoid hefty fines and reputational damage. Failure to adequately address these concerns can lead to severe consequences for financial institutions.

The implications of API vulnerabilities and data breaches in finance are far-reaching and potentially catastrophic. Beyond direct financial losses from fraud or theft, reputational damage can severely impact a firm’s ability to attract and retain clients, investors, and talent. Regulatory scrutiny and subsequent penalties further exacerbate these negative outcomes.

Relevant Regulatory Frameworks and Compliance Standards

Financial institutions are subject to a complex web of regulations designed to protect customer data and maintain the stability of the financial system. These regulations often mandate specific security controls, including those related to API security. Key frameworks include the General Data Protection Regulation (GDPR) in the European Union, the California Consumer Privacy Act (CCPA) in California, and various industry-specific regulations such as those from the Financial Conduct Authority (FCA) in the UK and the Securities and Exchange Commission (SEC) in the US.

These regulations frequently mandate data encryption, access control mechanisms, and robust audit trails for all data processing, including API interactions. Compliance requires a multi-faceted approach encompassing technical, procedural, and managerial controls.

Implications of Data Breaches and API Vulnerabilities

Data breaches resulting from API vulnerabilities can lead to significant financial losses, legal liabilities, and reputational damage. The theft of sensitive customer data, such as personal information, financial records, and transaction details, can result in substantial fines under regulations like GDPR, which can impose penalties up to €20 million or 4% of annual global turnover, whichever is greater. Beyond direct financial penalties, institutions face the cost of remediation, including notifying affected customers, credit monitoring services, and legal fees associated with potential lawsuits.
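The “whichever is greater” rule quoted above is simple arithmetic, shown here as a small sketch. The function name and the turnover figures are hypothetical, and the result is only the upper bound described in the text; the actual fine in any case is set by the regulator.

```python
def gdpr_max_fine_eur(annual_global_turnover_eur: int) -> int:
    """Upper bound quoted in the text: EUR 20 million or 4% of turnover."""
    four_percent = annual_global_turnover_eur * 4 // 100
    return max(20_000_000, four_percent)


# Hypothetical firms: for the smaller one the flat EUR 20 million applies,
# for the larger one the 4% figure is higher.
small_firm_cap = gdpr_max_fine_eur(100_000_000)    # 4% would be EUR 4 million
large_firm_cap = gdpr_max_fine_eur(2_000_000_000)  # 4% is EUR 80 million
print(small_firm_cap, large_firm_cap)
```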

The reputational damage from a breach can also significantly impact customer trust and lead to a decline in business. For example, a major data breach at a large financial institution could lead to a mass exodus of customers and a significant drop in stock value.

Potential Penalties and Legal Ramifications

Failure to adequately secure APIs can result in a range of penalties and legal ramifications. These can include substantial fines levied by regulatory bodies, class-action lawsuits from affected customers, and potential criminal charges against individuals or the organization itself. The severity of the penalties depends on factors such as the extent of the breach, the sensitivity of the compromised data, and the institution’s demonstrable efforts to mitigate risks.

For instance, a failure to implement appropriate security controls leading to a large-scale data breach could result in millions of dollars in fines and significant legal battles. Furthermore, reputational damage can have long-term consequences, impacting the institution’s ability to operate effectively.

Industry Best Practices for Meeting Regulatory Requirements

Meeting regulatory requirements related to API security demands a proactive and comprehensive approach. This includes implementing robust authentication and authorization mechanisms, such as OAuth 2.0 and OpenID Connect, to verify API requests and control access to sensitive data. Regular security testing, including penetration testing and vulnerability assessments, is crucial to identify and address potential weaknesses. Data encryption, both in transit and at rest, is essential to protect sensitive information.

Maintaining comprehensive audit trails of API activity allows for effective monitoring and incident response. Finally, a strong security awareness training program for employees is vital to ensure they understand the importance of API security and best practices. Continuous monitoring and threat intelligence analysis enable proactive identification and mitigation of emerging risks.
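One way to make an audit trail of API activity tamper-evident is to chain entries together with hashes, so that altering any past entry breaks every hash after it. The sketch below is a minimal illustration of that idea using the standard library; the entry layout and function names are assumptions, and no regulation cited here prescribes this particular design.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(event: dict, prev_hash: str) -> str:
    body = json.dumps({"event": event, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_entry(log: list, event: dict) -> dict:
    """Append an event, linking it to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    entry = {
        "event": event,
        "prev": prev_hash,
        "hash": _entry_hash(event, prev_hash),
    }
    log.append(entry)
    return entry


def verify_chain(log: list) -> bool:
    """Recompute every hash; any edited or reordered entry fails the check."""
    prev_hash = GENESIS_HASH
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != _entry_hash(entry["event"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical API events.
audit_log = []
append_entry(audit_log, {"actor": "svc-payments", "action": "GET /v1/accounts/42"})
append_entry(audit_log, {"actor": "svc-payments", "action": "POST /v1/transfers", "amount": 250})
append_entry(audit_log, {"actor": "admin-7", "action": "DELETE /v1/api-keys/9"})
intact = verify_chain(audit_log)

audit_log[1]["event"]["amount"] = 25  # simulate after-the-fact tampering
tampered_ok = verify_chain(audit_log)
print(intact, tampered_ok)
```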

Concluding Thoughts

The integration of powerful AI models like OpenAI’s into our financial infrastructure presents both incredible opportunities and significant risks. While the potential for increased efficiency and enhanced security through AI-powered threat detection is undeniable, the threat of malicious use is equally potent. Ultimately, securing our financial APIs requires a multi-pronged approach, combining robust security measures with a proactive, AI-driven defense.

Ignoring this threat is simply not an option; the future of financial security depends on our ability to adapt and innovate in the face of this evolving challenge.

Frequently Asked Questions

What specific types of data are most at risk in a financial API breach?

Sensitive data like customer PII (Personally Identifiable Information), account numbers, transaction details, and financial balances are prime targets.

How can I tell if my organization is vulnerable to these kinds of attacks?

Regular security audits, penetration testing, and monitoring for anomalous API activity are crucial. Consider employing AI-powered security solutions to detect suspicious patterns.

What are the potential legal and regulatory repercussions of an API data breach?

Penalties can be substantial, including hefty fines, legal action from affected customers, and reputational damage. Compliance with regulations like GDPR and CCPA is paramount.

Are there any open-source tools or resources available to help improve API security?

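Yes, many open-source security tools and frameworks exist. For example, OWASP ZAP can scan running APIs for common weaknesses, and the OWASP API Security Top 10 is a freely available list of the most common API risks. Researching and implementing these can significantly enhance your security posture.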