Breaking the Cycle of Traditional Vulnerability Management

Breaking the cycle of traditional vulnerability management isn’t just about patching holes; it’s about fundamentally changing how we think about security. For too long, we’ve reacted to vulnerabilities instead of preventing them. This post dives into the limitations of the old ways, explores modern, proactive approaches, and shows you how to build a truly resilient security posture. We’ll uncover the hidden inefficiencies, the surprising human element, and the exciting possibilities of automation and integration.

We’ll journey through the typical vulnerability lifecycle, from discovery to remediation, highlighting where traditional methods falter. We’ll then explore emerging paradigms like DevSecOps and threat modeling, examining how automation and orchestration can revolutionize your approach. Finally, we’ll discuss the crucial role of communication, continuous improvement, and addressing the ever-evolving threat landscape—including the challenges posed by cloud computing, IoT, and AI.

Defining Traditional Vulnerability Management

Traditional vulnerability management (TVM) represents a largely reactive approach to cybersecurity, focusing primarily on identifying and remediating known vulnerabilities after they’ve been discovered. It’s a process built upon established security practices, but often falls short in addressing the rapidly evolving threat landscape. Understanding its core components, limitations, and comparison to more proactive strategies is crucial for moving beyond its inherent weaknesses.

The core components of TVM typically involve vulnerability scanning, vulnerability assessment, and remediation. Vulnerability scanning uses automated tools to identify potential weaknesses in systems and applications. This process often involves port scanning, operating system fingerprinting, and checking systems against a database of known vulnerabilities, such as the National Vulnerability Database (NVD). Vulnerability assessment takes the raw data from scans and analyzes it to prioritize risks, determining which vulnerabilities pose the greatest threat to the organization. Finally, remediation involves the actual patching, configuration changes, or other actions taken to fix the identified vulnerabilities.

Reactive Versus Proactive Vulnerability Management Strategies

Reactive vulnerability management, the hallmark of traditional approaches, prioritizes addressing vulnerabilities *after* they are discovered, often triggered by an incident or security audit. This approach is often characterized by a high volume of alerts, a struggle to prioritize critical vulnerabilities amidst the noise, and a reliance on manual processes. In contrast, proactive vulnerability management shifts the focus to *preventing* vulnerabilities before they can be exploited. This approach utilizes techniques such as secure coding practices, robust configuration management, and continuous security monitoring to identify and address vulnerabilities early in the software development lifecycle (SDLC). A proactive approach aims to minimize the attack surface and reduce the likelihood of successful breaches. For example, a reactive approach might involve patching a web server after a successful exploit, while a proactive approach would involve implementing web application firewalls (WAFs) and regular security audits during development to prevent such an exploit in the first place.

Limitations and Inherent Weaknesses of Traditional Methods

Traditional vulnerability management methods suffer from several key limitations. Firstly, the reliance on periodic scans creates a window of vulnerability. Between scans, new vulnerabilities may emerge and be exploited before they are detected. Secondly, the sheer volume of alerts generated often leads to alert fatigue and prioritization challenges. Security teams may struggle to differentiate between critical and less significant vulnerabilities, leading to delayed or incomplete remediation.

Thirdly, TVM often struggles to address vulnerabilities in complex, dynamic environments like cloud-based systems or microservices architectures. The traditional approaches, designed for static on-premise environments, may not be adequately equipped to cope with the rapid changes and complexities of modern infrastructure. Finally, traditional methods often fail to address non-technical vulnerabilities such as social engineering or weak security policies, leaving organizations exposed to a wider range of threats.

Consider a scenario where a company relies solely on vulnerability scans but neglects employee security training. A phishing attack could easily bypass all the technical safeguards put in place, highlighting the limitations of a purely technical approach.

Identifying the Cycle of Vulnerability

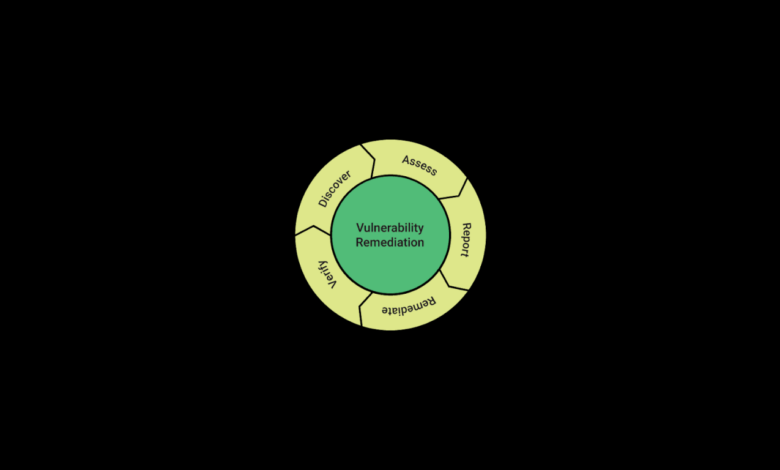

The lifecycle of a vulnerability, from its initial discovery to its eventual remediation, is a crucial aspect of understanding why traditional vulnerability management often fails. Understanding this cycle helps pinpoint weaknesses in traditional approaches and highlights opportunities for improvement in securing systems. This cycle, unfortunately, often becomes a frustratingly repetitive loop.

The typical lifecycle begins with the discovery of a vulnerability, often through automated scans, penetration testing, or even accidental exposure. This discovery is followed by a process of verification and analysis to confirm the vulnerability’s existence and potential impact. Next comes prioritization, where vulnerabilities are ranked based on severity and likelihood of exploitation. Remediation involves patching, configuration changes, or other corrective actions. Finally, validation ensures the vulnerability has been successfully addressed.

Stages Where Traditional Methods Fall Short

Traditional vulnerability management often struggles in several key areas of this lifecycle. The sheer volume of vulnerabilities discovered by automated scanners frequently overwhelms security teams, leading to prioritization difficulties and delayed remediation. Furthermore, the reliance on static vulnerability databases can lead to inaccuracies and missed vulnerabilities, particularly zero-day exploits that aren’t yet cataloged. Another critical weakness is the lack of effective integration between vulnerability scanning, risk assessment, and remediation processes. This disjointed approach often results in vulnerabilities slipping through the cracks.

Examples of Persistent Vulnerabilities Due to Traditional Limitations

Consider a scenario where a company relies solely on quarterly vulnerability scans. A critical vulnerability is discovered in the scan, but due to competing priorities and resource constraints, remediation is delayed for several weeks. In this time, a sophisticated attacker could have already exploited the vulnerability, gaining unauthorized access to sensitive data. This illustrates a common failure point: prioritizing remediation based on severity scores alone without considering the likelihood of exploitation and potential impact.
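To make the point about severity scores concrete, here is a minimal sketch of risk-based ranking. The `Finding` fields and the multiplicative formula are assumptions made for illustration; they are not a standard scoring model, and the likelihood and criticality values would have to come from your own threat intelligence and asset inventory.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    """One scanner finding; the fields are illustrative, not a real scanner schema."""
    identifier: str
    severity: float            # base severity on a 0-10 scale (CVSS-like)
    exploit_likelihood: float  # estimated chance of exploitation, 0.0-1.0
    asset_criticality: float   # business weight of the affected asset, 0.0-1.0


def risk_score(finding: Finding) -> float:
    """Scale severity by likelihood and asset weight (a hypothetical formula)."""
    return finding.severity * finding.exploit_likelihood * finding.asset_criticality


def prioritize(findings: list[Finding]) -> list[Finding]:
    """Return findings ordered from highest to lowest risk score."""
    return sorted(findings, key=risk_score, reverse=True)


findings = [
    Finding("VULN-A", severity=9.8, exploit_likelihood=0.05, asset_criticality=0.3),
    Finding("VULN-B", severity=7.5, exploit_likelihood=0.90, asset_criticality=1.0),
    Finding("VULN-C", severity=5.0, exploit_likelihood=0.50, asset_criticality=0.6),
]

for f in prioritize(findings):
    print(f"{f.identifier}: {risk_score(f):.2f}")
```

In this made-up data set, the finding with the highest raw severity (VULN-A) ends up last, because it sits on a low-value asset and is unlikely to be exploited. That is exactly the distinction a severity-only queue misses.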

Another example is the reliance on signature-based detection. New and evolving attack techniques, especially those exploiting zero-day vulnerabilities, often bypass signature-based defenses. The traditional approach simply isn’t equipped to handle this dynamic threat landscape. Finally, the lack of integration between vulnerability management and change management processes can create conflicts and further delay remediation. Changes to systems may inadvertently introduce new vulnerabilities or reintroduce previously patched ones, highlighting the need for a more holistic approach.

Emerging Approaches

The traditional reactive approach to vulnerability management is increasingly inadequate in today’s fast-paced, interconnected digital landscape. The sheer volume of vulnerabilities discovered, coupled with the sophistication of modern cyberattacks, necessitates a fundamental shift towards proactive and preventative strategies. This means moving beyond simply patching known vulnerabilities after they’re discovered to actively preventing them from ever appearing in the first place.

This paradigm shift involves embedding security throughout the entire software development lifecycle (SDLC) and operational processes, rather than treating it as an afterthought. This integrated approach requires a cultural change within organizations, demanding collaboration between security teams and development teams.

DevSecOps and Threat Modeling

Modern vulnerability management methodologies like DevSecOps and threat modeling represent this proactive shift. DevSecOps integrates security practices into every stage of the software development process, from planning and design to deployment and operation. This contrasts sharply with traditional methods where security is often addressed only after development is complete. Threat modeling, on the other hand, involves systematically identifying potential security vulnerabilities in a system before it’s built or deployed.

By proactively identifying and mitigating risks, these methods significantly reduce the attack surface and the likelihood of successful exploits. For example, a company using DevSecOps might incorporate automated security testing into their CI/CD pipeline, automatically flagging vulnerabilities before code reaches production. A company utilizing threat modeling might create a visual representation of their system, identifying potential attack vectors and designing mitigations beforehand.
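As a rough illustration of the CI/CD example, the sketch below shows a pipeline gate that stops a build when a scan report contains findings at or above a chosen severity. The report structure, the rule names, and the `fail_at` threshold are all hypothetical; a real pipeline would parse whatever format its own scanner emits.

```python
# Hypothetical severity ranking; real scanners define their own levels.
SEVERITY_RANK = {"low": 1, "medium": 2, "high": 3, "critical": 4}


def blocking_findings(findings, fail_at="high"):
    """Return the findings at or above the severity that should stop a build."""
    threshold = SEVERITY_RANK[fail_at]
    return [f for f in findings if SEVERITY_RANK[f["severity"]] >= threshold]


def gate(findings, fail_at="high"):
    """Return an exit code: 0 lets the pipeline continue, 1 stops it."""
    blockers = blocking_findings(findings, fail_at)
    for f in blockers:
        print(f"BLOCKING [{f['severity']}] {f['rule']} in {f['file']}")
    return 1 if blockers else 0


# Made-up scan output standing in for a real tool's report.
sample_report = [
    {"rule": "sql-injection", "severity": "critical", "file": "orders.py"},
    {"rule": "verbose-error", "severity": "low", "file": "views.py"},
]

exit_code = gate(sample_report)
print("exit code:", exit_code)
```

In a pipeline, the script would pass this value to the CI runner as its process exit status, so a non-zero result fails the job before the code reaches production.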

Resource Requirements Comparison

The resource requirements for traditional and modern vulnerability management approaches differ significantly. Traditional methods often rely heavily on manual processes, leading to higher costs and longer remediation times. Modern approaches, while requiring upfront investment, often result in long-term cost savings by preventing vulnerabilities from ever reaching production.

| Method | Cost | Time | Personnel |
|---|---|---|---|
| Traditional Vulnerability Management | High (manual patching, incident response) | Long (reactive patching, investigation) | Large security team, potentially external consultants |
| DevSecOps | Moderate to High (initial investment in tools and training) | Shorter (proactive identification and mitigation) | Smaller, cross-functional team (developers, security engineers, operations) |
| Threat Modeling | Low to Moderate (training and potentially specialized tools) | Moderate (design phase integration) | Security architects, developers, potentially business stakeholders |

Automation and Orchestration

The traditional, manual approach to vulnerability management is slow, error-prone, and ultimately unsustainable in today’s dynamic IT landscape. Automation and orchestration offer a powerful solution, dramatically improving efficiency and effectiveness across the entire vulnerability lifecycle. By automating repetitive tasks and integrating various security tools, organizations can significantly reduce their attack surface and strengthen their overall security posture.

Automation significantly enhances vulnerability detection and remediation by accelerating the process and minimizing human error. Instead of relying on manual scans and analysis, automated systems can continuously monitor for vulnerabilities, providing immediate alerts and prioritizing remediation efforts based on risk level. This proactive approach allows for faster response times, reducing the window of opportunity for attackers to exploit weaknesses. Furthermore, automated tools can perform tasks such as patching systems, configuring firewalls, and implementing other security controls with far greater speed and precision than manual processes.

Automated Vulnerability Detection

Automated vulnerability detection leverages various technologies, including vulnerability scanners, configuration management databases (CMDBs), and security information and event management (SIEM) systems. These tools continuously scan systems and applications for known vulnerabilities, comparing their findings against constantly updated vulnerability databases. The results are then analyzed to identify critical vulnerabilities that require immediate attention, allowing security teams to prioritize their efforts based on the potential impact and likelihood of exploitation.

For example, a system automatically detecting a critical vulnerability in a web server would trigger an immediate alert and initiate a workflow to remediate the issue, potentially including patching the server and validating the patch.
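The matching step at the heart of automated detection can be sketched in a few lines. Everything here is assumed for illustration: the inventory layout, the advisory fields, the package name `examplehttpd`, and the simple dotted-number version comparison (real version schemes are often messier).

```python
def parse_version(text):
    """Turn '2.4.1' into (2, 4, 1); assumes purely numeric dotted versions."""
    return tuple(int(part) for part in text.split("."))


def find_vulnerable(inventory, advisories):
    """Return (host, advisory id, installed version) for every affected install.

    inventory:  {host: {package: installed_version}}
    advisories: [{"id": ..., "package": ..., "fixed_in": ...}]
    """
    hits = []
    for host, packages in inventory.items():
        for advisory in advisories:
            installed = packages.get(advisory["package"])
            if installed is None:
                continue  # package not present on this host
            if parse_version(installed) < parse_version(advisory["fixed_in"]):
                hits.append((host, advisory["id"], installed))
    return hits


# Made-up inventory and advisory data.
inventory = {
    "web-01": {"examplehttpd": "2.4.1"},
    "web-02": {"examplehttpd": "2.4.9"},
}
advisories = [{"id": "ADV-0001", "package": "examplehttpd", "fixed_in": "2.4.5"}]

for host, advisory_id, version in find_vulnerable(inventory, advisories):
    print(f"{host}: {advisory_id} affects installed version {version}")
```

Each hit is the kind of event that would raise the alert and start the remediation workflow described above.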

Orchestration in Vulnerability Management

Orchestration plays a vital role in streamlining the vulnerability management process by integrating and automating various security tools and workflows. This allows for a coordinated response to vulnerabilities, eliminating manual handoffs and reducing the risk of errors. An orchestration platform can automatically trigger actions based on predefined rules and policies, such as initiating a patch deployment upon detecting a critical vulnerability or escalating an issue to the appropriate team for investigation.

This automated coordination ensures that vulnerabilities are addressed efficiently and consistently, reducing the overall time-to-remediation. For example, if a vulnerability scan identifies a critical flaw in a database server, the orchestration system might automatically initiate a patch deployment, update the CMDB, and send notifications to the database administrator.
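One way to picture rule-driven orchestration is as a table that maps conditions to ordered actions. The sketch below is a toy model under that assumption: the action functions only record what they would do, and the names (`ScanEvent`, `deploy_patch`, and so on) are invented for the example rather than taken from any orchestration product.

```python
from dataclasses import dataclass, field


@dataclass
class ScanEvent:
    """A finding handed to the orchestrator; fields are illustrative."""
    asset: str
    severity: str
    actions_taken: list = field(default_factory=list)


# Placeholder actions: each one only records what a real integration would do.
def deploy_patch(event):
    event.actions_taken.append(f"patch deployment started on {event.asset}")


def update_cmdb(event):
    event.actions_taken.append(f"CMDB record updated for {event.asset}")


def notify_owner(event):
    event.actions_taken.append(f"owner notified about {event.asset}")


def open_ticket(event):
    event.actions_taken.append(f"ticket opened for {event.asset}")


# Predefined rules: (condition, ordered list of actions to run when it matches).
RULES = [
    (lambda e: e.severity == "critical", [deploy_patch, update_cmdb, notify_owner]),
    (lambda e: e.severity != "critical", [open_ticket]),
]


def orchestrate(event):
    """Run every action whose rule matches the event and return the action log."""
    for condition, actions in RULES:
        if condition(event):
            for action in actions:
                action(event)
    return event.actions_taken


for line in orchestrate(ScanEvent("db-01", "critical")):
    print(line)
```

Keeping the rules as data rather than burying them in code makes the policy easy to review and change, which matters when several teams share the same workflow.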

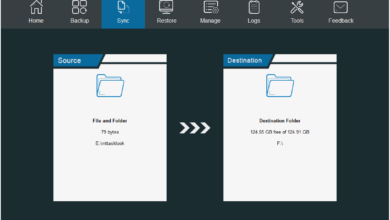

Workflow Diagram of an Automated Vulnerability Management System

Imagine a diagram depicting a system with several interconnected blocks. The first block, “Vulnerability Scanning,” represents the automated scanning process, constantly monitoring systems and applications. Arrows flow from this block to “Vulnerability Analysis,” where the results are processed and prioritized based on risk levels (e.g., CVSS scores). From there, arrows lead to “Remediation Workflow,” which shows automated patching, configuration changes, and other remediation actions.

Another arrow branches from “Vulnerability Analysis” to “Security Information and Event Management (SIEM),” where the data is integrated for comprehensive security monitoring and reporting. Finally, arrows from “Remediation Workflow” and “SIEM” flow to “Reporting and Monitoring,” providing a consolidated view of the vulnerability management process, including metrics on remediation time and overall security posture. The entire system is represented as a closed loop, with “Reporting and Monitoring” feeding back into “Vulnerability Scanning” to refine the process and improve its effectiveness.

This continuous feedback loop ensures that the system adapts to evolving threats and maintains optimal security.

Integrating Security into the Development Lifecycle

Shifting from reactive vulnerability management to a proactive approach necessitates a fundamental change: integrating security into the very fabric of software development. This isn’t simply about adding a security check at the end; it’s about embedding security considerations throughout the entire Software Development Lifecycle (SDLC). This proactive strategy significantly reduces vulnerabilities, minimizes remediation costs, and ultimately delivers more secure software.

The benefits of incorporating security testing at each stage are substantial.

By identifying and addressing security flaws early in the process, organizations can avoid costly and time-consuming fixes later on. Early detection also prevents vulnerabilities from reaching production environments, thus protecting sensitive data and maintaining user trust. This shift from a “bolt-on” security approach to a deeply integrated one dramatically improves the overall security posture of the software.

Security Testing at Each SDLC Stage

Implementing security testing at each stage of the SDLC is crucial for effective vulnerability management. This involves tailoring security assessments to the specific activities and artifacts produced at each phase. Failing to do so leaves gaps that attackers can exploit.

Best Practices for Embedding Security into the SDLC

Several best practices can facilitate the integration of security into the SDLC. These practices ensure that security is not an afterthought but a core component of the development process.

- Security Requirements Gathering: Incorporating security requirements early in the planning phase, alongside functional requirements, ensures that security is considered from the outset. This might involve threat modeling exercises to identify potential vulnerabilities and design security controls to mitigate them.

- Secure Coding Practices: Training developers in secure coding techniques is paramount. This includes understanding common vulnerabilities like SQL injection, cross-site scripting (XSS), and cross-site request forgery (CSRF), and implementing secure coding practices to prevent them. Regular code reviews can also help identify and address security flaws.

- Static and Dynamic Application Security Testing (SAST/DAST): Employing SAST tools to analyze source code for vulnerabilities before runtime and DAST tools to test the running application for vulnerabilities is crucial. These tools automate the detection of many common security flaws.

- Penetration Testing: Regular penetration testing simulates real-world attacks to identify vulnerabilities that automated tools might miss. This provides a valuable assessment of the application’s overall security posture.

- Security Awareness Training: Educating developers about security best practices and the importance of secure coding is essential. Regular training helps to cultivate a security-conscious culture within the development team.

Example: Shift-Left Security in a Microservices Architecture

Consider a company developing a new application using a microservices architecture. By implementing shift-left security, they integrate security testing into each microservice’s development lifecycle. SAST is used during development to identify vulnerabilities in the code. DAST is then used to test the deployed microservices. Penetration testing is conducted regularly to simulate real-world attacks and identify any remaining vulnerabilities.

This layered approach ensures that security is addressed at every stage, significantly reducing the risk of vulnerabilities in the final application. Furthermore, the modular nature of microservices allows for quicker identification and patching of vulnerabilities, minimizing the overall impact.

Improving Communication and Collaboration

Effective communication and collaboration are the cornerstones of a successful vulnerability management program. Traditional approaches often silo information, leading to delays, inefficiencies, and ultimately, increased risk. Breaking the cycle requires a fundamental shift towards open, transparent, and proactive communication across all relevant teams.

Traditional vulnerability management often suffers from communication breakdowns between security, development, and operations teams. Security teams might discover vulnerabilities but struggle to effectively convey the risk and remediation priorities to developers who are often overwhelmed with other tasks. Developers, in turn, might not fully understand the security implications of their code changes, leading to unintentional vulnerabilities being introduced. Operations teams, responsible for deploying and maintaining systems, may lack the context to prioritize patching efforts effectively. This fragmented communication creates a bottleneck, delaying remediation and increasing the window of vulnerability exposure.

Cross-Functional Collaboration: A Necessary Shift

Cross-functional collaboration is crucial for bridging the communication gaps inherent in traditional vulnerability management. By fostering a collaborative environment where security, development, and operations teams work together seamlessly, organizations can significantly improve their response times and reduce their overall risk profile. This collaborative approach involves shared responsibility for security, ensuring that security considerations are integrated into every stage of the software development lifecycle (SDLC), from design and development to deployment and maintenance.

For example, regular joint meetings, shared dashboards, and collaborative tools can facilitate efficient communication and coordinated action. A successful collaboration relies on shared goals, mutual respect, and a commitment to a unified security posture.

Communication Plan: Key Stakeholders and Strategies

A well-defined communication plan is essential for ensuring that the right information reaches the right people at the right time. This plan should clearly outline key stakeholders, their communication needs, and the channels through which information will be shared.

The following list outlines the key stakeholders and their specific communication requirements:

- Security Team: Requires regular updates on vulnerability discoveries, remediation progress, and overall security posture. Needs access to vulnerability scanning results, risk assessments, and remediation prioritization information. Communication channels should include dashboards, reports, and regular meetings.

- Development Team: Needs clear and concise vulnerability reports, including detailed descriptions, severity levels, and remediation guidance. Requires timely communication about vulnerabilities found in their code, enabling quick and effective patching. Communication channels should include ticketing systems, code review tools, and direct communication from the security team.

- Operations Team: Needs to be informed about vulnerabilities impacting production systems, patching schedules, and potential service disruptions. Requires clear instructions on how to implement patches and mitigate risks. Communication channels should include incident management systems, change management processes, and regular operational updates.

- Management: Requires high-level summaries of the organization’s security posture, major vulnerabilities, and remediation efforts. Needs to be informed of any significant security incidents or breaches. Communication channels should include executive dashboards, regular reports, and presentations.

Effective information sharing strategies include:

- Regular meetings: Cross-functional meetings facilitate open communication and problem-solving.

- Shared dashboards: Real-time visibility into vulnerability status and remediation progress.

- Automated alerts: Timely notifications for critical vulnerabilities and security incidents.

- Centralized vulnerability database: A single source of truth for all vulnerability information.

- Knowledge base and documentation: Provides easily accessible information on security best practices and remediation procedures.

Measuring Effectiveness and Continuous Improvement

So, you’ve implemented new vulnerability management strategies. Fantastic! But how do you know if they’re actually working? Measuring the effectiveness of your program is crucial, not just for proving ROI, but for continuously refining your processes and achieving optimal security posture. This isn’t a one-time task; it’s an ongoing cycle of assessment, analysis, and improvement.

Effective vulnerability management isn’t just about patching; it’s about reducing risk. Therefore, your metrics should reflect this broader goal. Simply counting patched vulnerabilities, while useful, doesn’t tell the whole story. We need to look at the bigger picture, analyzing trends and identifying weaknesses in our approach.

Key Metrics for Evaluating Vulnerability Management Programs

To accurately gauge the effectiveness of your vulnerability management program, several key metrics are essential. These metrics provide a comprehensive view of your security posture and highlight areas needing attention. Focusing solely on a single metric can be misleading; a holistic approach is key.

- Mean Time To Remediation (MTTR): This metric measures the average time taken to remediate a vulnerability from discovery to resolution. A lower MTTR indicates a more efficient and responsive vulnerability management process. For example, an MTTR of 7 days may suggest room for improvement, whereas an MTTR of 2 days points to a highly responsive process; sensible targets depend on the severity of the vulnerabilities being measured.

- Vulnerability Remediation Rate: This metric tracks the percentage of identified vulnerabilities that have been successfully remediated within a specified timeframe. A high remediation rate signifies a proactive approach to risk mitigation. For instance, a remediation rate of 95% indicates a highly effective program, whereas a rate below 80% suggests the need for process optimization.

- Number of Critical and High-Severity Vulnerabilities: Monitoring the number of critical and high-severity vulnerabilities helps prioritize remediation efforts and understand the overall risk exposure. A significant increase in these vulnerabilities suggests a potential weakness in the vulnerability management process.

- False Positive Rate: This metric indicates the percentage of alerts that are not actual vulnerabilities. A high false positive rate can lead to alert fatigue and missed genuine threats. Ideally, this rate should be minimized through refined scanning and vulnerability identification processes.

- Percentage of Assets Scanned: This metric tracks the percentage of assets within your infrastructure that are regularly scanned for vulnerabilities. A high percentage indicates comprehensive coverage, while a low percentage highlights gaps in your vulnerability management program.
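The metrics above are simple to compute once findings are tracked consistently. The sketch below works on a handful of made-up records; the field names (`found`, `fixed`, `false_positive`) are assumptions, and for brevity the remediation rate ignores the "specified timeframe" a real report would apply.

```python
from datetime import date

# Made-up tracking records; "fixed" is None while a finding is still open.
records = [
    {"id": "V1", "found": date(2024, 1, 1), "fixed": date(2024, 1, 3), "false_positive": False},
    {"id": "V2", "found": date(2024, 1, 2), "fixed": date(2024, 1, 8), "false_positive": False},
    {"id": "V3", "found": date(2024, 1, 5), "fixed": None, "false_positive": False},
    {"id": "V4", "found": date(2024, 1, 6), "fixed": None, "false_positive": True},
]


def mean_time_to_remediation(records):
    """Average days from discovery to fix, over genuine, remediated findings."""
    durations = [
        (r["fixed"] - r["found"]).days
        for r in records
        if not r["false_positive"] and r["fixed"] is not None
    ]
    return sum(durations) / len(durations) if durations else None


def remediation_rate(records):
    """Percentage of genuine findings that have been fixed."""
    genuine = [r for r in records if not r["false_positive"]]
    if not genuine:
        return None
    fixed = sum(1 for r in genuine if r["fixed"] is not None)
    return 100.0 * fixed / len(genuine)


def false_positive_rate(records):
    """Percentage of all reported findings that turned out not to be real."""
    if not records:
        return None
    return 100.0 * sum(1 for r in records if r["false_positive"]) / len(records)


def scan_coverage(scanned_assets, total_assets):
    """Percentage of known assets that are regularly scanned."""
    return 100.0 * scanned_assets / total_assets


print(f"MTTR: {mean_time_to_remediation(records):.1f} days")
print(f"Remediation rate: {remediation_rate(records):.1f}%")
print(f"False positive rate: {false_positive_rate(records):.1f}%")
print(f"Scan coverage: {scan_coverage(45, 50):.1f}%")
```

Note that false positives are excluded before MTTR and remediation rate are calculated; otherwise noisy scanners would distort both numbers.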

Using Data to Identify Areas for Improvement

The data collected from these metrics provides invaluable insights into your vulnerability management program’s performance. Analyzing trends over time allows you to identify areas requiring improvement and optimize your processes. For instance, a consistently high MTTR for a specific type of vulnerability might indicate a need for improved training or automated remediation scripts. Similarly, a low remediation rate for certain asset types could point to a lack of access or insufficient resources allocated to those areas.
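Breaking a metric down by category is often where the useful signal appears. As a small sketch, assuming each remediated finding is recorded as a (category, days to fix) pair with invented values, the slowest category can be found like this:

```python
from collections import defaultdict


def mttr_by_category(records):
    """Average days-to-fix per category from (category, days) pairs."""
    grouped = defaultdict(list)
    for category, days in records:
        grouped[category].append(days)
    return {category: sum(days) / len(days) for category, days in grouped.items()}


# Illustrative data: (vulnerability category, days from discovery to fix).
remediated = [
    ("web", 2),
    ("web", 4),
    ("database", 10),
    ("database", 14),
    ("endpoint", 3),
]

averages = mttr_by_category(remediated)
slowest = max(averages, key=averages.get)

for category, days in sorted(averages.items()):
    print(f"{category}: {days:.1f} days")
print("slowest category:", slowest)
```

A category that stays at the top of this list across several reporting periods is a reasonable candidate for extra training or automated remediation.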

Developing a Plan for Continuous Monitoring and Improvement

Continuous improvement is the cornerstone of a robust vulnerability management program. This requires a structured approach to monitoring, analysis, and adaptation.

- Regular Reporting and Analysis: Establish a regular reporting schedule (e.g., monthly or quarterly) to review the key metrics discussed earlier. This allows for timely identification of trends and potential problems.

- Root Cause Analysis: When issues arise, conduct a thorough root cause analysis to understand the underlying causes and prevent recurrence. This might involve reviewing processes, tools, or training programs.

- Process Optimization: Based on the analysis, implement changes to optimize your vulnerability management processes. This could include automating tasks, improving communication, or enhancing training programs.

- Regular Tool Evaluation: Regularly assess the effectiveness of your vulnerability scanning and management tools. New tools and technologies constantly emerge, and staying up-to-date is crucial for maintaining a strong security posture.

- Feedback Loops: Establish feedback loops to gather input from security teams, developers, and other stakeholders. This collaborative approach ensures that the vulnerability management program is aligned with the organization’s overall security objectives.

Addressing Human Factors

Let’s face it: even the most robust vulnerability management program can be undone by human error. People are the weakest link in any security chain, and ignoring this reality is a recipe for disaster. Understanding the human element is crucial for truly effective vulnerability management. This isn’t about blaming individuals; it’s about recognizing predictable human behaviors and designing systems and processes to mitigate their impact.

Human error contributes significantly to vulnerability management failures in various ways. For example, employees might inadvertently click on phishing links, fail to update software, or reuse passwords across multiple accounts. These seemingly small actions can have catastrophic consequences, creating entry points for malicious actors. Furthermore, inadequate training or a lack of awareness can lead to employees overlooking security protocols or failing to report suspicious activities promptly. A lack of clear communication about security policies and procedures further exacerbates these issues.

The Role of Human Error in Vulnerability Management Failures

Human error is a major cause of security breaches. Negligence, such as failing to change default passwords or neglecting software updates, often opens the door to attackers. Social engineering attacks, which exploit human psychology, are also highly effective. For example, a convincing phishing email can trick even well-trained employees into revealing sensitive information or downloading malware. Even seemingly minor mistakes, like leaving a laptop unattended in a public place, can have significant security implications.

The cumulative effect of these errors can severely compromise an organization’s security posture.

Strategies for Improving Security Awareness and Training

Effective security awareness training is not a one-time event; it’s an ongoing process. Regular training sessions should cover topics such as phishing recognition, password management best practices, and safe internet browsing habits. Simulations, such as mock phishing attacks, can effectively demonstrate the real-world consequences of human error. Gamification can make training more engaging and memorable, improving knowledge retention.

Training should be tailored to the specific roles and responsibilities of employees, focusing on the threats they are most likely to encounter. Regular refresher courses are essential to reinforce learning and address emerging threats. For instance, training materials should regularly be updated to reflect new phishing techniques and social engineering tactics.

Fostering a Security-Conscious Culture

Creating a security-conscious culture requires a multifaceted approach. Leadership buy-in is paramount; security should be viewed as a shared responsibility, not solely the domain of the IT department. Open communication is key; employees should feel comfortable reporting security incidents without fear of reprisal. Regular security awareness campaigns, incorporating various communication channels, can reinforce the importance of security practices.

Recognizing and rewarding employees who demonstrate strong security practices can further encourage a culture of security. Finally, integrating security into the organization’s overall risk management framework ensures that security is considered at all levels of decision-making. This might involve creating a security champion program, where designated employees act as security advocates within their teams.

Addressing Emerging Threats and Technologies

The rapid evolution of technology poses challenges that traditional vulnerability management strategies were not designed to handle. New attack vectors emerge constantly, exploiting weaknesses in novel systems and architectures. Navigating this landscape requires a proactive, adaptable approach that anticipates emerging threats and keeps pace with the changing technological environment. Failing to do so leaves organizations exposed to sophisticated attacks that can compromise both security and operational efficiency.

Adapting to these challenges involves a fundamental shift from reactive patching to a more predictive and preventative posture. This requires a deeper understanding of the vulnerabilities inherent in new technologies, coupled with security measures applied throughout the entire system lifecycle. It’s no longer sufficient to react to discovered vulnerabilities; organizations must actively seek them out and mitigate potential risks before they can be exploited.

Cloud Computing Vulnerabilities

Cloud computing, while offering significant advantages in scalability and cost-effectiveness, introduces unique security challenges. Misconfigurations of cloud infrastructure, such as improperly secured storage buckets or exposed APIs, are common vulnerabilities. Furthermore, the shared responsibility model of cloud security requires a clear understanding of where responsibility lies between the cloud provider and the organization itself. Effective management involves rigorous configuration management, regular security audits, and the implementation of robust access control mechanisms.

For example, pairing tools that automatically scan cloud environments for misconfigurations and vulnerabilities with strong Identity and Access Management (IAM) practices is crucial.
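To make the idea of an automated misconfiguration check concrete, here is a minimal sketch in Python. It does not call any real cloud provider API; the BucketConfig class and its fields are hypothetical stand-ins for whatever settings your provider actually exposes, and the three checks are illustrative rather than a complete audit.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class BucketConfig:
    # Hypothetical, simplified view of a storage bucket's settings.
    # Real providers expose many more options under different names.
    name: str
    public_read: bool
    encryption_enabled: bool
    logging_enabled: bool


def find_misconfigurations(bucket: BucketConfig) -> list[str]:
    """Return human-readable findings for a single bucket."""
    findings = []
    if bucket.public_read:
        findings.append(f"{bucket.name}: publicly readable")
    if not bucket.encryption_enabled:
        findings.append(f"{bucket.name}: encryption at rest disabled")
    if not bucket.logging_enabled:
        findings.append(f"{bucket.name}: access logging disabled")
    return findings


def audit(buckets: list[BucketConfig]) -> list[str]:
    """Collect findings across all buckets, preserving input order."""
    return [finding for b in buckets for finding in find_misconfigurations(b)]


if __name__ == "__main__":
    inventory = [
        BucketConfig("logs", public_read=False, encryption_enabled=True, logging_enabled=True),
        BucketConfig("backups", public_read=True, encryption_enabled=False, logging_enabled=True),
    ]
    for finding in audit(inventory):
        print(finding)
```

In practice the inventory would be populated from your provider's configuration export, and a check like this would run on a schedule or in a deployment pipeline so that drift is caught early.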

Internet of Things (IoT) Device Vulnerabilities

The proliferation of IoT devices presents a significant challenge due to the sheer number and diversity of devices, many of which lack robust security features. These devices often have limited processing power and memory, making them difficult to patch and update. Furthermore, many IoT devices are deployed in environments with limited network visibility, making it difficult to monitor their security posture.

Proactive vulnerability management in this context involves careful device selection, focusing on devices with strong security features and regular firmware updates. Implementing network segmentation to isolate IoT devices from critical systems can also mitigate the risk of a compromise spreading throughout the network. Consider a scenario where a compromised smart home device is used as a launching point for an attack on a company network; strong network segmentation would limit the damage.
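One way to verify that segmentation is actually holding is to check observed network flows against the intended boundaries. The sketch below uses Python's standard ipaddress module; the two subnets and the flow list are made-up examples, and a real deployment would read flows from firewall or flow logs instead.

```python
import ipaddress

# Hypothetical address plan: adjust to match your own network.
IOT_NET = ipaddress.ip_network("10.20.0.0/24")
CRITICAL_NET = ipaddress.ip_network("10.10.0.0/24")


def violates_segmentation(src: str, dst: str) -> bool:
    """True if a flow originates in the IoT segment and targets the critical segment."""
    return (
        ipaddress.ip_address(src) in IOT_NET
        and ipaddress.ip_address(dst) in CRITICAL_NET
    )


def flag_flows(flows):
    """Return the (src, dst) pairs that cross from IoT into the critical segment."""
    return [(src, dst) for src, dst in flows if violates_segmentation(src, dst)]


if __name__ == "__main__":
    observed = [
        ("10.20.0.5", "10.10.0.8"),   # IoT device reaching a critical host
        ("10.20.0.5", "10.20.0.9"),   # IoT to IoT, stays inside its segment
        ("10.10.0.3", "10.20.0.5"),   # critical host polling an IoT device
    ]
    for src, dst in flag_flows(observed):
        print(f"segmentation violation: {src} -> {dst}")
```

Any flagged flow suggests either a firewall rule that is too permissive or a device placed in the wrong segment.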

Artificial Intelligence (AI) Vulnerabilities

AI systems, while offering immense potential, also introduce new vulnerabilities. Adversarial attacks, where malicious inputs are designed to manipulate AI models, are a growing concern. Data poisoning, where training data is compromised to affect the model’s behavior, is another significant threat. Managing these vulnerabilities requires robust data security measures, including data validation and anomaly detection. Regular model testing and validation are also crucial to identify and mitigate vulnerabilities before they can be exploited.

For instance, rigorous testing of an AI-powered fraud detection system can reveal vulnerabilities to adversarial attacks that might otherwise lead to significant financial losses.
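As a small illustration of anomaly detection on training data, the sketch below flags values that sit far from the rest of a numeric feature using the modified z-score, which is based on the median absolute deviation. The 0.6745 constant and the 3.5 threshold are common conventions for this score, not values taken from this post, and a simple per-feature check like this is only one layer of defense against data poisoning.

```python
import statistics


def filter_outliers(values, threshold=3.5):
    """Split values into (kept, flagged) using the modified z-score.

    The score is 0.6745 * |x - median| / MAD, where MAD is the median
    absolute deviation. Values scoring above the threshold are flagged.
    """
    values = list(values)
    med = statistics.median(values)
    mad = statistics.median(abs(v - med) for v in values)
    if mad == 0:
        # No spread around the median, so the score is undefined; flag nothing.
        return values, []
    kept, flagged = [], []
    for v in values:
        score = 0.6745 * abs(v - med) / mad
        (flagged if score > threshold else kept).append(v)
    return kept, flagged


if __name__ == "__main__":
    # Hypothetical transaction amounts with one suspicious record.
    amounts = [10, 12, 11, 13, 12, 11, 500]
    kept, flagged = filter_outliers(amounts)
    print("kept:", kept)
    print("flagged for review:", flagged)
```

Flagged records should go to a human for review rather than being silently dropped, since a legitimate but unusual data point looks the same to this check as a poisoned one.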

Concluding Remarks: Breaking The Cycle Of Traditional Vulnerability Management

Ultimately, breaking the cycle of traditional vulnerability management requires a holistic shift in mindset. It’s about integrating security into every stage of the software development lifecycle, fostering a security-conscious culture, and embracing automation and continuous improvement. By proactively addressing vulnerabilities and building a resilient system, you can significantly reduce your risk and protect your organization from costly breaches. The journey might seem daunting, but the rewards of a more secure and efficient system are well worth the effort.

Let’s start building a better future for security, together.

Quick FAQs

What’s the biggest misconception about vulnerability management?

Many believe vulnerability management is solely about patching. It’s actually a much broader process encompassing prevention, detection, response, and continuous improvement.

How can I get buy-in from my team for a new vulnerability management approach?

Start by showcasing the costs associated with current methods (breaches, downtime). Then, demonstrate the ROI of a proactive approach through clear metrics and case studies. Involve the team in the planning process to foster ownership.

What are some low-cost ways to improve vulnerability management?

Prioritize critical vulnerabilities, implement basic security awareness training, and utilize free or open-source vulnerability scanning tools. Focus on improving communication and collaboration first.

How do I measure the effectiveness of my vulnerability management program?

Track key metrics like mean time to remediation (MTTR), the number of open critical vulnerabilities, and the cost of security incidents. Regularly analyze this data to identify areas for improvement.
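MTTR is straightforward to compute once you record when each vulnerability was detected and when it was fixed. Here is a minimal sketch in Python; the record layout (pairs of detection and remediation timestamps, with None for items still open) is an assumption for illustration, not the format of any particular tool.

```python
from datetime import datetime
from statistics import mean

SECONDS_PER_DAY = 86400


def mean_time_to_remediation_days(records):
    """Average days from detection to fix, ignoring vulnerabilities still open.

    records: iterable of (detected, remediated) datetime pairs, where
    remediated is None for items that have not been fixed yet.
    Returns None if nothing has been remediated.
    """
    durations = [
        (fixed - found).total_seconds() / SECONDS_PER_DAY
        for found, fixed in records
        if fixed is not None
    ]
    return mean(durations) if durations else None


if __name__ == "__main__":
    # Hypothetical tracking data.
    tracked = [
        (datetime(2024, 1, 1), datetime(2024, 1, 4)),    # fixed in 3 days
        (datetime(2024, 1, 10), datetime(2024, 1, 15)),  # fixed in 5 days
        (datetime(2024, 2, 1), None),                    # still open
    ]
    print("MTTR (days):", mean_time_to_remediation_days(tracked))
```

Because open items are excluded, it is worth reporting the count of open vulnerabilities alongside MTTR so that a long-standing backlog does not hide behind a good average.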