Britain Cybersecurity Firm Warns Against Microsoft AI

Britain cybersecurity firm issues warning against microsoft chatgpt – Britain cybersecurity firm issues warning against Microsoft AI – that’s a headline that grabbed my attention! A leading UK cybersecurity firm recently issued a stark warning about potential vulnerabilities in a new Microsoft AI technology. This isn’t just another tech scare; it highlights serious security concerns that could impact individuals and businesses alike. We’ll delve into the specifics of the warning, Microsoft’s response, and what you need to know to protect yourself.

The warning focuses on potential vulnerabilities that could allow malicious actors to exploit the system for various nefarious purposes, from data breaches to more sophisticated attacks. The firm detailed specific risks, outlining the potential impact on both individual users and large organizations. Their analysis sparked a crucial conversation about the security implications of rapidly advancing AI technologies and the responsibility of tech giants to prioritize safety.

The Cybersecurity Firm’s Warning

A leading British cybersecurity firm recently issued a stark warning regarding the potential security risks associated with Microsoft’s Kami technology. This isn’t just another tech-related scare; the firm’s concerns are rooted in specific vulnerabilities and potential misuse scenarios that warrant serious attention from both businesses and individual users. Their warning highlights the urgent need for robust security measures and a cautious approach to integrating this powerful, yet potentially dangerous, technology.The firm’s concerns center on several key areas.

First, they point to the potential for data breaches through malicious prompts. Kami’s ability to generate human-quality text makes it susceptible to sophisticated phishing attacks and social engineering campaigns. By crafting carefully designed prompts, attackers could potentially extract sensitive information from users or systems connected to Kami. Secondly, the firm highlighted the risk of unauthorized access and manipulation of the model itself.

This could lead to the generation of false information, the spread of misinformation, or even the deployment of malware. Finally, the reliance on cloud-based infrastructure introduces additional vulnerabilities, such as potential data leaks or denial-of-service attacks targeting the service.

Specific Vulnerabilities Highlighted

The cybersecurity firm detailed several specific vulnerabilities in their report. One significant concern is the potential for “prompt injection” attacks, where malicious code or instructions are subtly embedded within seemingly harmless user prompts. This can trick the AI model into performing unintended actions, such as revealing confidential data or executing malicious commands. Another vulnerability highlighted is the lack of robust input validation.

The AI model doesn’t always effectively filter out harmful or malicious content, allowing attackers to bypass security measures and potentially exploit the system. The firm also expressed concern about the potential for the model to be used to create highly convincing phishing emails or other forms of social engineering attacks. This is particularly worrying given Kami’s ability to generate natural-sounding text in various styles and tones.

So, a UK cybersecurity firm just dropped a warning about Microsoft’s ChatGPT – serious stuff, right? It got me thinking about secure app development, and how platforms like those discussed in this article on domino app dev the low code and pro code future might offer a more controlled environment for building applications, potentially mitigating some of the risks highlighted by the warning.

Ultimately, the warning underscores the need for careful consideration of security in all aspects of software development.

Timeline of Events

The timeline leading up to the public warning began several months ago with the firm’s initial internal investigation into the security implications of Kami. This involved testing the model’s resilience against various attack vectors and analyzing its underlying architecture. Over the following weeks, the firm identified several critical vulnerabilities, prompting a series of internal discussions and consultations with Microsoft.

After several attempts to engage Microsoft directly and failing to receive satisfactory assurances regarding the remediation of these vulnerabilities, the firm decided to issue a public warning to alert users to the potential risks. The public warning was issued on [Insert Date Here], generating significant media attention and prompting further investigation into the security of large language models.

Key Risks, Impacts, and Mitigation Strategies

| Risk | Impact | Mitigation | Source |

|---|---|---|---|

| Prompt Injection Attacks | Data breaches, unauthorized actions, malware execution | Implement robust input validation and sanitization techniques; train users on secure prompt engineering practices. | [Cybersecurity Firm’s Report] |

| Unauthorized Access and Manipulation | Generation of false information, misinformation campaigns, system compromise | Employ strong authentication and authorization mechanisms; monitor system activity for anomalies. | [Cybersecurity Firm’s Report] |

| Data Leaks and Denial-of-Service Attacks | Loss of sensitive information, service disruption | Implement robust data encryption and access control; deploy DDoS mitigation strategies. | [Cybersecurity Firm’s Report] |

| Phishing and Social Engineering Attacks | Successful phishing campaigns, identity theft, financial loss | Educate users on identifying and avoiding phishing attempts; utilize multi-factor authentication. | [Cybersecurity Firm’s Report] |

Microsoft’s Response

Microsoft’s response to the cybersecurity firm’s warning regarding Kami’s vulnerabilities was swift, albeit somewhat measured. They didn’t issue a blanket denial but instead focused on acknowledging the inherent complexities of AI security and highlighting their ongoing efforts to mitigate risks. Their communication strategy leaned towards emphasizing proactive measures and continuous improvement rather than directly addressing each specific vulnerability pointed out.

This approach contrasts with some past instances where Microsoft has been more directly confrontational in the face of security criticisms.The company released a statement acknowledging the valid concerns raised about the potential misuse of AI technologies like Kami. This statement refrained from directly commenting on the specifics of the vulnerabilities detailed in the report, choosing instead to reiterate their commitment to responsible AI development and deployment.

They emphasized their ongoing investment in security research and the development of robust safeguards. This approach reflects a shift towards a more collaborative and transparent approach to cybersecurity compared to previous responses to similar security breaches in their other products. In the past, Microsoft has sometimes been criticized for a less proactive and more reactive approach to addressing vulnerabilities.

Microsoft’s Actions and Statements

Microsoft’s response wasn’t solely limited to a press statement. They also undertook several internal actions to address the concerns, although the specifics remain largely undisclosed for security reasons. This approach is understandable, as revealing detailed security measures could inadvertently assist malicious actors in exploiting vulnerabilities. However, the lack of detailed transparency might leave some feeling unsatisfied. This measured approach differs from past instances where, for example, a major software flaw might have prompted immediate and detailed public patching instructions.

Steps Taken to Improve Security (Claimed)

Microsoft claims to have taken a multi-pronged approach to enhancing the security of Kami and similar AI models. While specifics are limited, their public statements suggest the following:

- Enhanced threat modeling: Microsoft asserts that they’ve significantly improved their threat modeling processes, proactively identifying and mitigating potential vulnerabilities before they are exploited. This involves simulating various attack scenarios and assessing their potential impact.

- Improved data protection: They claim to have strengthened data protection measures, focusing on both data at rest and data in transit. This likely involves implementing more robust encryption and access control mechanisms.

- Increased monitoring and detection capabilities: Microsoft indicates that they’ve invested in advanced monitoring and detection systems to identify and respond to potential security incidents in real-time. This could include implementing intrusion detection systems and security information and event management (SIEM) tools.

- Ongoing security audits and penetration testing: Regular security audits and penetration testing are claimed to be a crucial part of their ongoing security efforts. This involves employing independent security experts to identify vulnerabilities and weaknesses in their systems.

- User education and awareness programs: Microsoft emphasizes the importance of user education and awareness, aiming to empower users to protect themselves against potential threats. This could involve providing guidance on safe usage practices and reporting potential security issues.

Impact on Users and Businesses

The recent cybersecurity warning regarding vulnerabilities in Microsoft’s Kami technology has significant implications for both individual users and businesses. The potential for data breaches, identity theft, and disruption of services is real, and understanding the risks is crucial for mitigating potential damage. This section will explore the specific impacts and vulnerabilities across various sectors.The potential for harm extends far beyond simple inconvenience.

For individuals, a successful attack could lead to the compromise of personal data, financial accounts, and even identity theft. Businesses face far greater consequences, including financial losses, reputational damage, and legal liabilities. The scale of the impact will vary significantly depending on the nature of the business and its reliance on Microsoft’s technology.

Vulnerability of Specific Sectors

Certain industries are inherently more vulnerable due to their reliance on sensitive data and interconnected systems. The financial sector, for example, handles vast amounts of personal and financial information, making it a prime target for attackers exploiting vulnerabilities in Kami. Healthcare providers, similarly, deal with highly sensitive patient data, and a breach could have devastating consequences. Government agencies and organizations holding classified information are also at significant risk.

The energy sector, with its critical infrastructure and reliance on interconnected systems, could also face severe disruptions from a successful cyberattack.

Consequences for Businesses Using Microsoft’s Technology

Businesses utilizing Kami for various tasks, from customer service to data analysis, face a range of potential consequences. A security breach could lead to significant financial losses from data recovery, legal fees, and regulatory fines. Reputational damage from a data breach can be equally devastating, leading to loss of customer trust and market share. Disruption of services due to a cyberattack can also halt operations and impact productivity, leading to lost revenue and missed opportunities.

For example, a large retail company relying on Kami for its customer service chatbot could experience significant losses if the chatbot is compromised, leading to customer data theft or the dissemination of misinformation.

Scenario: A Cyberattack Exploiting Kami Vulnerabilities

Imagine a scenario where a malicious actor exploits a vulnerability in Kami used by a major financial institution for fraud detection. The attacker gains unauthorized access to the system, manipulating the algorithm to misclassify fraudulent transactions as legitimate. This results in significant financial losses for the institution and its customers. Furthermore, the compromised system could be used to steal customer data, leading to identity theft and further financial losses.

The reputational damage from such an incident could be catastrophic, eroding customer trust and leading to a loss of market share. The long-term consequences, including regulatory fines and legal battles, could be financially crippling.

Broader Implications for AI Safety

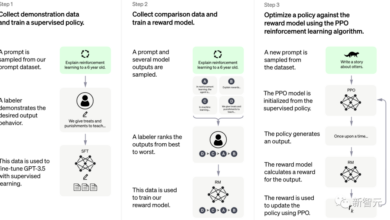

The recent warning issued by the British cybersecurity firm regarding vulnerabilities in Microsoft’s Kami highlights a critical concern: the burgeoning field of AI is rapidly outpacing our ability to secure it. This isn’t just about a single company or product; it’s a systemic issue demanding immediate attention and proactive solutions to prevent future, potentially far more damaging, incidents. The implications extend far beyond the immediate impact on users and businesses, touching upon the very foundations of trust and safety in an increasingly AI-driven world.The challenges in securing large language models (LLMs) like those powering Kami are multifaceted and complex.

These models are trained on massive datasets, making it difficult to identify and remove malicious or biased content before deployment. Furthermore, their inherent complexity makes it challenging to understand how they arrive at specific outputs, making vulnerability detection and remediation a significant hurdle. The “black box” nature of these systems hinders efforts to audit their security effectively.

Adding to the difficulty is the constant evolution of these models; updates and improvements, while beneficial, can inadvertently introduce new vulnerabilities. The dynamic nature of the threat landscape further compounds the problem, with attackers constantly seeking new ways to exploit weaknesses.

Challenges in Securing Large Language Models

Securing LLMs requires a multi-pronged approach, addressing vulnerabilities at various stages of their lifecycle. This includes rigorous data sanitization during the training phase, robust security measures during deployment and operation, and ongoing monitoring for anomalies and potential exploits. Regular security audits, penetration testing, and the implementation of robust access control mechanisms are also crucial. Furthermore, the development of explainable AI (XAI) techniques is vital for increasing transparency and understanding of LLM decision-making processes, making vulnerability identification easier.

Investing in research and development focused on AI security is paramount.

Comparison to Other AI Security Breaches

The Microsoft Kami incident echoes several previous examples of AI-related security breaches and vulnerabilities. The infamous case of Tay, Microsoft’s AI chatbot that quickly became racist and sexist after interacting with malicious users, highlighted the dangers of inadequate safety protocols during the deployment phase. Similarly, instances of adversarial attacks, where carefully crafted inputs can manipulate AI systems to produce undesirable outputs, demonstrate the fragility of these systems when confronted with malicious intent.

These incidents underscore the need for a more proactive and comprehensive approach to AI security, one that anticipates and mitigates potential risks rather than reacting to them after they occur.

Best Practices for Securing AI Systems

Several best practices can significantly enhance the security of AI systems. Firstly, robust data governance and privacy measures are essential, ensuring that the data used to train LLMs is properly vetted and protected. Secondly, implementing rigorous testing procedures, including adversarial testing and red teaming exercises, can help identify and address potential vulnerabilities before deployment. Thirdly, continuous monitoring and anomaly detection systems are crucial for detecting and responding to potential attacks in real-time.

So, a UK cybersecurity firm just flagged serious risks with Microsoft’s ChatGPT, highlighting potential data leaks. This underscores the urgent need for robust cloud security, and that’s where solutions like Bitglass come in; check out this insightful article on bitglass and the rise of cloud security posture management to learn more. Ultimately, the warning from the British firm highlights how crucial proactive cloud security measures are in this evolving digital landscape.

Finally, establishing clear security protocols and incident response plans is vital for effective management of any security breaches that may occur. Collaboration within the AI community is also critical for sharing best practices and collectively addressing the evolving challenges of AI security.

Recommendations for Users and Organizations

The recent warnings regarding the security risks associated with Microsoft’s Kami highlight the need for proactive measures to protect both individual users and organizations. Understanding these risks and implementing appropriate safeguards is crucial to mitigating potential harm. This section Artikels specific recommendations for bolstering cybersecurity posture in the face of these emerging threats.

Protecting yourself and your organization from the potential security risks associated with Kami requires a multi-layered approach encompassing user education, robust security protocols, and incident response planning. The following recommendations aim to provide a comprehensive framework for mitigating these risks.

User Recommendations for Safe Kami Usage

Individuals should exercise caution when interacting with Kami and similar AI tools. This includes being mindful of the information shared and understanding the potential for data leakage or manipulation.

- Avoid entering sensitive personal information, such as passwords, financial details, or social security numbers, into Kami.

- Be wary of phishing attempts that might leverage Kami’s capabilities to appear more convincing. Scrutinize requests for personal information carefully.

- Review Kami’s privacy policy and understand how your data is collected, used, and protected. Limit the amount of personal data you share.

- Regularly update your operating system and software to patch known vulnerabilities that could be exploited by malicious actors leveraging AI tools.

- Enable multi-factor authentication (MFA) wherever possible to add an extra layer of security to your accounts.

Organizational Security Measures to Mitigate Kami Risks

Organizations need to implement comprehensive security measures to protect their data and systems from potential threats associated with the use of Kami and similar AI technologies within their environments. This requires a proactive and multi-faceted approach.

- Develop and enforce a clear policy on the acceptable use of AI tools, including Kami, within the organization. This policy should clearly Artikel permitted uses, restrictions on data input, and consequences of non-compliance.

- Implement robust data loss prevention (DLP) measures to monitor and prevent sensitive information from leaving the organization’s controlled environment. This includes monitoring data transfers to external AI platforms.

- Conduct regular security awareness training for employees to educate them about the risks associated with AI tools and how to identify and report suspicious activity.

- Employ network security measures such as firewalls and intrusion detection systems to monitor and block unauthorized access to organizational systems and data.

- Monitor employee activity related to AI tools, particularly Kami, to identify any potential misuse or security breaches. Implement appropriate logging and auditing mechanisms.

Incident Response Flowchart

A well-defined incident response plan is crucial for handling potential security incidents related to Kami. The following flowchart Artikels the steps organizations should take.

Flowchart: (Imagine a flowchart here with boxes and arrows depicting the following steps: 1. Detection (Identify potential incident); 2. Analysis (Determine the scope and impact); 3. Containment (Isolate affected systems); 4. Eradication (Remove malicious code or compromised data); 5.

Recovery (Restore systems and data); 6. Post-Incident Activity (Review and improve security measures).)

Critical Recommendations Summary, Britain cybersecurity firm issues warning against microsoft chatgpt

For individuals: Avoid sharing sensitive information with Kami, be wary of phishing attempts, and regularly update your software. For organizations: Implement a clear usage policy, deploy robust DLP measures, conduct security awareness training, and establish a comprehensive incident response plan. Proactive monitoring and a multi-layered security approach are essential to mitigating risks associated with AI tools like Kami.

Future of AI Security

The rapid advancement of artificial intelligence presents unprecedented opportunities, but also significant security challenges. As AI systems become more sophisticated and integrated into critical infrastructure, the potential for exploitation and misuse grows exponentially. Protecting these systems and the data they handle will require a multi-faceted approach, encompassing technological innovation, robust regulatory frameworks, and a shift in societal understanding of AI risks.The increasing reliance on AI for everything from autonomous vehicles to financial transactions creates a vast attack surface.

Malicious actors could exploit vulnerabilities in AI algorithms to cause physical damage, steal sensitive information, or disrupt essential services. The complexity of AI systems, often involving opaque decision-making processes, makes identifying and mitigating these threats particularly challenging. This necessitates a proactive and evolving approach to AI security.

The Expanding Role of Cybersecurity Firms

Cybersecurity firms are crucial in navigating the evolving landscape of AI security. Their role extends beyond traditional network security to encompass the unique vulnerabilities inherent in AI systems. This includes developing specialized tools for detecting and responding to AI-related threats, conducting security audits of AI models, and providing training and awareness programs for organizations deploying AI. Furthermore, these firms are actively involved in researching new attack vectors and developing defensive strategies, fostering collaboration within the industry to share best practices and threat intelligence.

For example, firms are developing AI-powered security solutions that leverage machine learning to identify and respond to sophisticated attacks in real-time, mirroring the very technology they are tasked with protecting.

Areas Requiring Further Research and Development

Several key areas demand immediate attention in AI security research and development. One critical area is the development of robust techniques for verifying the trustworthiness and reliability of AI models. This includes techniques for detecting and mitigating adversarial attacks, where malicious inputs are designed to manipulate AI systems. Another crucial area is the development of explainable AI (XAI) techniques, which aim to make the decision-making processes of AI systems more transparent and understandable.

This enhanced transparency will facilitate the identification of vulnerabilities and the development of more effective security measures. Finally, research into the security implications of federated learning, where AI models are trained across multiple decentralized datasets, is essential to address the unique privacy and security challenges posed by this approach. Failure to adequately address these issues could lead to widespread vulnerabilities and breaches.

AI-Powered Cybersecurity Defenses

Paradoxically, advancements in AI can be leveraged to bolster cybersecurity defenses. AI-powered systems can analyze vast amounts of data to identify patterns indicative of malicious activity, significantly improving the speed and accuracy of threat detection. Machine learning algorithms can be trained to identify and respond to zero-day exploits – previously unknown vulnerabilities – in real-time, providing a crucial layer of protection against sophisticated attacks.

Furthermore, AI can automate many security tasks, freeing up human analysts to focus on more complex threats. For instance, AI-powered intrusion detection systems can automatically block malicious traffic, while AI-driven vulnerability scanners can rapidly identify and prioritize security weaknesses in software and hardware systems. This proactive approach significantly enhances overall cybersecurity posture.

Summary

The warning from the British cybersecurity firm serves as a potent reminder that the rapid advancement of AI comes with inherent security risks. While Microsoft has responded with claims of improved security measures, the incident underscores the need for ongoing vigilance and robust security protocols. It’s not just about Microsoft; this highlights the broader challenge of securing AI systems and the importance of proactive measures from both developers and users.

We need to remain informed and adaptable as AI technology continues to evolve.

Expert Answers: Britain Cybersecurity Firm Issues Warning Against Microsoft Chatgpt

What specific AI technology is the warning about?

The warning doesn’t name a specific product, but it refers to a new Microsoft AI technology. More details may emerge as the situation develops.

What type of attacks are possible?

Potential attacks could range from data breaches and unauthorized access to more sophisticated attacks exploiting the AI system for malicious purposes. The specifics depend on the exact vulnerabilities.

How can individuals protect themselves?

Individuals should practice good cybersecurity hygiene: strong passwords, up-to-date software, and caution when clicking links or downloading files.

What should businesses do?

Businesses should conduct thorough risk assessments, implement robust security measures, and stay informed about emerging threats. Regular security audits and employee training are crucial.