HWA Snow Integration Container Orchestration Case Study

Case study: HWA Snow integration application DB and app server container provisioning orchestration. It sounds complex, but imagine a seamless, scalable system where databases, applications, and containers work together like a well-oiled machine. This deep dive explores the architecture, deployment, and optimization of such a system, revealing the intricacies and benefits of a robust, containerized environment. We’ll unravel HWA Snow integration from data flow to security measures, providing a practical understanding of this technology.

This case study walks you through the entire process: the initial design considerations and containerization strategy using Docker and Kubernetes, database management (PostgreSQL in this case study, though MySQL or another relational database could fill the same role), and the role of the application server (Tomcat here, with JBoss and similar servers as alternatives). We’ll also cover security best practices, scalability strategies, and the importance of robust monitoring and logging.

Think of it as a blueprint for building a high-performance, secure, and scalable application landscape.

HWA Snow Integration Overview

This case study examines the architecture and functionality of the HWA Snow integration system, focusing on its containerized deployment and orchestration. The system streamlines data transfer and processing between HWA (presumably a proprietary system) and Snow (likely a data management or analytics platform), improving efficiency and scalability. The integration uses a microservices architecture, which promotes modularity and maintainability: individual components can be scaled and updated independently, improving overall system resilience and agility.

System Architecture

The HWA Snow integration system employs a three-tier architecture. The first tier comprises the data ingestion layer, responsible for extracting data from HWA using APIs or other suitable methods. This data is then transformed and validated before being sent to the second tier. The second tier houses the application server, which processes the data, performs any necessary transformations, and prepares it for storage or analysis.

This layer interacts with the database (third tier) to persist and retrieve data. The entire system runs within a container orchestration environment, enabling automated deployment, scaling, and management.

Key Components

The core components include: a data ingestion module, a data transformation and validation module, an application server module (handling business logic), a database module (for persistent storage), and a container orchestration system (e.g., Kubernetes). Each module is deployed as a separate container, allowing for independent scaling and updates. The data ingestion module uses secure communication protocols to interact with the HWA system.

The application server uses a framework designed to handle concurrent requests efficiently. The database is tuned for performance and scalability according to the anticipated data volume and query patterns. The container orchestration system ensures efficient resource utilization and high availability.

Data Flow

Data flows from the HWA system into the data ingestion module. This module performs initial data cleaning and validation. Validated data is then passed to the data transformation and validation module, where more complex transformations and data quality checks are applied. The transformed data is subsequently sent to the application server for further processing and business logic execution.

Finally, the processed data is written to the database for persistent storage and retrieval by downstream applications or analytical tools. Error handling and logging mechanisms are implemented at each stage to ensure data integrity and system stability.
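The stage-by-stage flow above can be sketched as a chain of small functions, each of which either passes a record on or logs and rejects it. This is a minimal illustration under stated assumptions, not the system’s actual code: the record fields (`station_id`, `depth_cm`), the stage functions, the "deep snow" rule, and the in-memory list standing in for the database are all hypothetical.

```python
import logging
from typing import Optional

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("hwa_snow_pipeline")

def ingest(raw: dict) -> Optional[dict]:
    """Initial cleaning: trim strings and drop records missing required fields."""
    record = {k.strip(): (v.strip() if isinstance(v, str) else v) for k, v in raw.items()}
    if "station_id" not in record or "depth_cm" not in record:
        log.warning("ingest: rejected record missing required fields: %r", raw)
        return None
    return record

def transform_and_validate(record: dict) -> Optional[dict]:
    """Type coercion plus a simple data-quality rule (depth must be non-negative)."""
    try:
        depth = float(record["depth_cm"])
    except (TypeError, ValueError):
        log.warning("transform: non-numeric depth in %r", record)
        return None
    if depth < 0:
        log.warning("transform: negative depth in %r", record)
        return None
    return {"station_id": str(record["station_id"]), "depth_cm": depth}

def apply_business_logic(record: dict) -> dict:
    """Placeholder for application-server logic (hypothetical rule: flag deep snow)."""
    return {**record, "deep_snow": record["depth_cm"] >= 50.0}

def run_pipeline(raw_records, store: list) -> int:
    """Push each raw record through every stage; return how many were persisted."""
    persisted = 0
    for raw in raw_records:
        record = ingest(raw)
        if record is None:
            continue
        record = transform_and_validate(record)
        if record is None:
            continue
        store.append(apply_business_logic(record))  # stand-in for the database write
        persisted += 1
    return persisted
```

Rejected records are logged at the stage that rejected them, which matches the per-stage error handling the text describes.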

Component Interaction

The following table illustrates the interaction between the database, application server, and container orchestration:

| Component | Database Interaction | Application Server Interaction | Container Orchestration Interaction |
|---|---|---|---|
| Database | Stores and retrieves data. | Receives data from the application server for storage and provides data to the application server upon request. | Managed as a container within the orchestration system. Scaling and resource allocation are managed by the orchestrator. |
| Application Server | Reads and writes data to the database. | Processes data received from the data ingestion and transformation modules. Executes business logic. | Deployed and managed as a container within the orchestration system. Scaling is automated based on load. |
| Container Orchestration | Monitors the database container’s health and resource utilization. | Monitors the application server container’s health and resource utilization. Manages scaling and deployment. | Provides overall system management, including resource allocation, scaling, and deployment of all containers. |

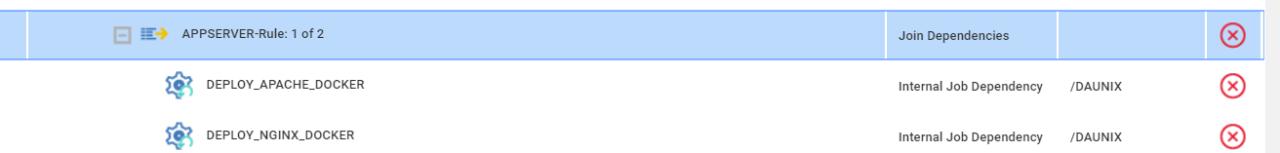

Container Provisioning and Orchestration

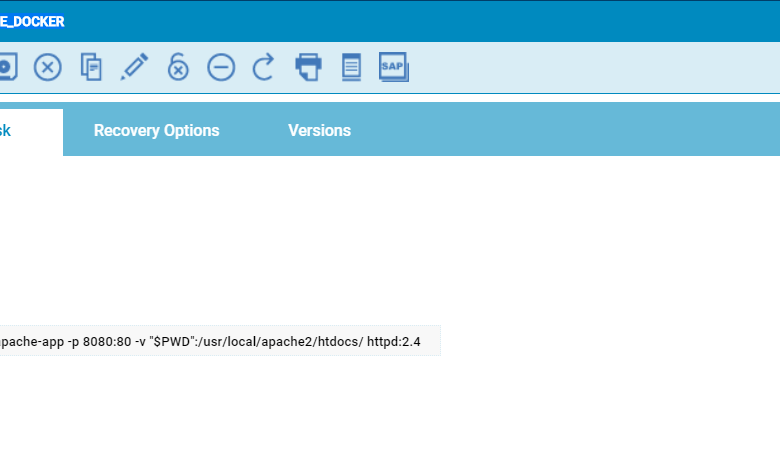

This section describes how we containerized the HWA Snow Integration application and managed its deployment with container orchestration. The approach combines Docker for containerization with Kubernetes for orchestration. Docker packages the application and its dependencies into isolated, portable containers.

Kubernetes handles the deployment, scaling, and management of these containers across a cluster of machines, which provides efficient resource utilization and high availability.

Container Image Creation

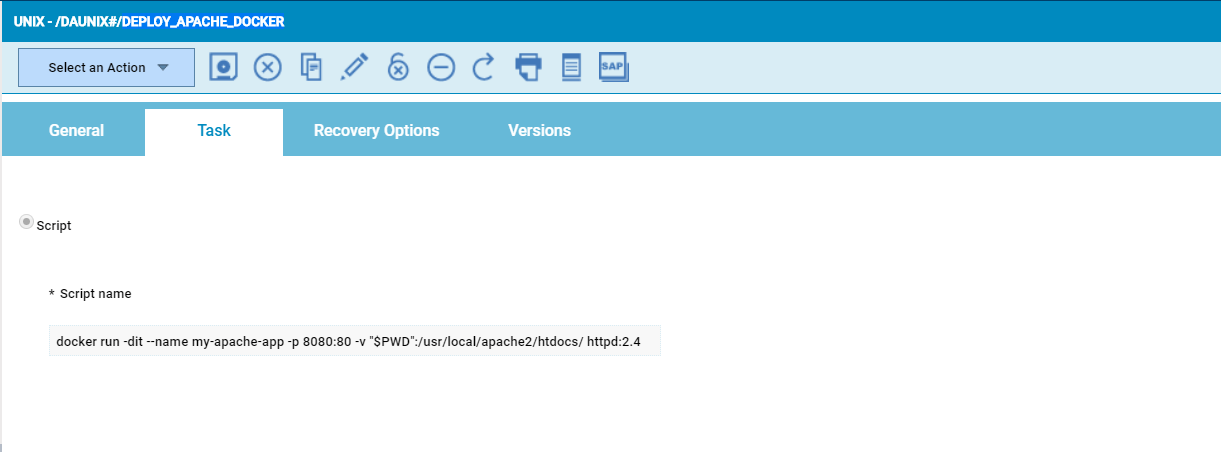

The application code, along with all necessary libraries and dependencies, is built into a Docker image. This image is then pushed to a container registry (e.g., Docker Hub, Google Container Registry, Amazon Elastic Container Registry), making it readily available for deployment across our infrastructure. The Dockerfile specifies the base image, dependencies, and build instructions. A well-defined Dockerfile ensures consistent and reproducible builds across different environments.

For example, our Dockerfile for the application server includes instructions to install the necessary Java runtime environment (JRE), copy the application WAR file, and configure the application server to run within the container. A similar approach is taken for the database container.

Kubernetes Deployment Strategy

Kubernetes orchestrates the deployment and management of the application containers. We utilize Kubernetes deployments to manage the desired state of our application, ensuring a specified number of replicas are always running. Rolling updates are implemented to minimize downtime during upgrades. A deployment specification defines the number of desired replicas, the container image to use, and resource requests/limits. This ensures a controlled and manageable process for updating the application.

For instance, updating the application involves simply updating the image tag in the deployment specification and letting Kubernetes handle the rolling update process.
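Kubernetes accepts Deployment manifests in JSON as well as YAML, so a deployment specification can be generated programmatically. The sketch below builds an `apps/v1` Deployment as a Python dict with a replica count, a rolling-update strategy, and resource requests/limits, plus a helper that changes only the image tag. The deployment name, registry path, port 8080, and resource figures are hypothetical placeholders, not values from the case study.

```python
import copy
import json

def build_deployment(name: str, image: str, tag: str, replicas: int = 3) -> dict:
    """Return an apps/v1 Deployment manifest as a plain dict."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "strategy": {
                "type": "RollingUpdate",
                # Add one new pod at a time and never drop below the desired count.
                "rollingUpdate": {"maxSurge": 1, "maxUnavailable": 0},
            },
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": f"{image}:{tag}",
                        "ports": [{"containerPort": 8080}],
                        "resources": {
                            "requests": {"cpu": "500m", "memory": "1Gi"},
                            "limits": {"cpu": "1", "memory": "2Gi"},
                        },
                    }],
                },
            },
        },
    }

def with_image_tag(deployment: dict, tag: str) -> dict:
    """Return a copy whose first container points at a new tag.

    Assumes the image reference already carries a tag (repo:tag).
    """
    updated = copy.deepcopy(deployment)
    container = updated["spec"]["template"]["spec"]["containers"][0]
    repo = container["image"].rsplit(":", 1)[0]
    container["image"] = f"{repo}:{tag}"
    return updated

if __name__ == "__main__":
    d = build_deployment("hwa-snow-appserver", "registry.example.com/hwa-snow/appserver", "1.4.0")
    print(json.dumps(with_image_tag(d, "1.4.1"), indent=2))
```

Writing the printed JSON to a file and running `kubectl apply -f` on it triggers the rolling update; `maxUnavailable: 0` is one way to keep full capacity during the rollout, at the cost of briefly running an extra pod.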

Scaling and Monitoring

Kubernetes provides built-in mechanisms for scaling the application based on demand. Horizontal Pod Autoscaling (HPA) adjusts the number of pod replicas based on metrics such as CPU utilization or, through custom metrics, request rate. This lets the application handle varying levels of traffic without performance degradation. We use Kubernetes’ built-in monitoring capabilities and integrate with Prometheus and Grafana to monitor resource usage, application health, and performance metrics.

This provides real-time insights into the application’s performance and helps identify potential issues proactively. For example, if CPU utilization consistently exceeds a defined threshold, the HPA will automatically increase the number of application pods.
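The core of the HPA decision is a proportional rule documented by Kubernetes: `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, skipped when the ratio is within a tolerance (0.1 by default) and clamped to the configured minimum and maximum. The sketch below reproduces only that calculation; it deliberately ignores stabilization windows, pod readiness, and missing-metric handling, and the min/max defaults are hypothetical.

```python
import math

def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA calculation: scale in proportion to the metric/target ratio."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to target: keep the current replica count.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

For example, three pods averaging 90% CPU against a 60% target give a ratio of 1.5, so the sketch asks for `ceil(4.5) = 5` pods.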

Deploying a New Container Instance

Deploying a new container instance involves the following steps:

- Build the Docker image using the Dockerfile and push it to the container registry.

- Update the Kubernetes deployment specification to point to the new image tag.

- Apply the updated deployment specification using the `kubectl apply` command.

- Kubernetes automatically handles the rollout of the new container instances, replacing old instances with new ones.

This process is automated through CI/CD pipelines, ensuring that new code is deployed quickly and reliably.
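A CI/CD job for the steps above might reduce to a fixed sequence of CLI calls. The sketch below builds that sequence and, by default, only prints it (a dry run); setting `dry_run=False` executes each command and stops at the first failure. It assumes the manifest at `manifest_path` has already been updated to the new tag, and the image, file, and deployment names are hypothetical.

```python
import subprocess
from typing import List

def rollout_commands(image: str, tag: str, manifest_path: str, deployment: str) -> List[List[str]]:
    """Build, push, apply, then wait for the rollout, as argument lists."""
    ref = f"{image}:{tag}"
    return [
        ["docker", "build", "-t", ref, "."],
        ["docker", "push", ref],
        ["kubectl", "apply", "-f", manifest_path],
        # Blocks until the rolling update completes or fails.
        ["kubectl", "rollout", "status", f"deployment/{deployment}"],
    ]

def run(commands: List[List[str]], dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False."""
    for cmd in commands:
        print("+", " ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)  # raises CalledProcessError on failure
```

If the rollout check fails, `kubectl rollout undo deployment/<name>` reverts to the previous revision.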

Database Management and Interaction

The HWA Snow Integration application relies on a robust and secure database system to store and manage critical data related to snow conditions, weather forecasts, and operational information. Efficient database management is crucial for the application’s performance and the accuracy of its predictions. The choice of database system, schema design, and security measures all play a vital role in ensuring the application’s success. The application utilizes PostgreSQL as its database system.

PostgreSQL was selected for its scalability, reliability, and robust features, including support for complex data types and spatial extensions which are particularly relevant for handling geographical data related to snow accumulation and location.

Database Schema Design

The database schema is designed using a relational model, organized into several interconnected tables. Key tables include a `weather_stations` table storing location data and sensor information for each weather station, a `snow_reports` table recording snow depth, density, and other relevant metrics, and a `forecasts` table containing predicted snow conditions based on weather models. Relationships between tables are established using foreign keys to ensure data integrity and efficient querying.

For example, the `snow_reports` table would contain a foreign key referencing the `weather_stations` table to link snow reports to their corresponding location. This relational structure allows for efficient querying and data analysis, providing a solid foundation for the application’s functionality.
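The three tables and their foreign keys can be illustrated with Python’s built-in `sqlite3` module standing in for PostgreSQL, so the example runs without a database server. Only the table names come from the text; every column name and the index are hypothetical, and a PostgreSQL version would likely use types such as `TIMESTAMPTZ` and PostGIS geometry for station locations. Note that SQLite enforces foreign keys only after `PRAGMA foreign_keys = ON`.

```python
import sqlite3

SCHEMA = """
CREATE TABLE weather_stations (
    station_id    INTEGER PRIMARY KEY,
    name          TEXT NOT NULL,
    latitude      REAL NOT NULL,
    longitude     REAL NOT NULL
);
CREATE TABLE snow_reports (
    report_id     INTEGER PRIMARY KEY,
    station_id    INTEGER NOT NULL REFERENCES weather_stations(station_id),
    observed_at   TEXT NOT NULL,
    depth_cm      REAL NOT NULL CHECK (depth_cm >= 0),
    density_kg_m3 REAL
);
CREATE TABLE forecasts (
    forecast_id        INTEGER PRIMARY KEY,
    station_id         INTEGER NOT NULL REFERENCES weather_stations(station_id),
    valid_for          TEXT NOT NULL,
    predicted_depth_cm REAL NOT NULL
);
-- Supports the common "reports for one station over time" lookup.
CREATE INDEX idx_snow_reports_station_time ON snow_reports (station_id, observed_at);
"""

def create_schema() -> sqlite3.Connection:
    """Create the illustrative schema in an in-memory database."""
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces FKs only when enabled
    conn.executescript(SCHEMA)
    return conn
```

With the pragma on, inserting a snow report for a station that does not exist raises `sqlite3.IntegrityError`, which is the data-integrity guarantee the foreign key is meant to provide.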

Data Access and Manipulation

The application uses a combination of SQL queries and an Object-Relational Mapper (ORM) to interact with the database. The ORM provides an abstraction layer, simplifying database interactions and reducing the amount of boilerplate SQL code. This approach improves developer productivity and makes the code more maintainable. Specific SQL queries are employed for complex operations or performance optimization when needed, such as generating reports or performing spatial queries based on geographic location.

The ORM handles basic CRUD (Create, Read, Update, Delete) operations efficiently. For example, adding a new snow report would involve using the ORM to create a new `snow_reports` record, automatically handling the database insertion.
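The case study does not name its ORM, so the sketch below shows what an ORM’s create and read calls boil down to: parameterized `INSERT` and `SELECT` statements mapped to and from a small dataclass. It uses `sqlite3` as a runnable stand-in for PostgreSQL; with a PostgreSQL driver such as psycopg2 the placeholder is `%s` rather than `?`. The `SnowReport` class and the function names are hypothetical.

```python
import sqlite3
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class SnowReport:
    station_id: int
    observed_at: str
    depth_cm: float
    report_id: Optional[int] = None

def open_db() -> sqlite3.Connection:
    """In-memory stand-in for the database, trimmed to the columns used here."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE snow_reports (report_id INTEGER PRIMARY KEY, station_id INTEGER NOT NULL, "
        "observed_at TEXT NOT NULL, depth_cm REAL NOT NULL)"
    )
    return conn

def create_report(conn: sqlite3.Connection, report: SnowReport) -> SnowReport:
    """Create: bound parameters keep user input out of the SQL text."""
    cur = conn.execute(
        "INSERT INTO snow_reports (station_id, observed_at, depth_cm) VALUES (?, ?, ?)",
        (report.station_id, report.observed_at, report.depth_cm),
    )
    conn.commit()
    report.report_id = cur.lastrowid
    return report

def reports_for_station(conn: sqlite3.Connection, station_id: int) -> List[SnowReport]:
    """Read: map result rows back into dataclass instances."""
    rows = conn.execute(
        "SELECT station_id, observed_at, depth_cm, report_id FROM snow_reports "
        "WHERE station_id = ? ORDER BY observed_at",
        (station_id,),
    ).fetchall()
    return [SnowReport(*row) for row in rows]
```

Because values are bound rather than concatenated, an input such as `"1 OR 1=1"` is compared as a literal value and matches nothing, which is also how parameterization defends against SQL injection.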

Database Security Measures

Database security is paramount to protect sensitive data. Several measures are implemented to ensure data integrity and confidentiality. These include: strong password policies for database users, access control lists restricting access based on user roles and privileges, regular database backups and disaster recovery plans to mitigate data loss, and encryption of sensitive data both in transit and at rest.

Regular security audits are also conducted to identify and address potential vulnerabilities. The application also incorporates input validation and sanitization to prevent SQL injection attacks. Furthermore, database connection parameters are stored securely outside the application code, minimizing the risk of exposure.
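One way to keep connection parameters out of the code is to read them from environment variables, which Kubernetes can populate from a Secret. The sketch below uses the standard libpq variable names (`PGHOST`, `PGPORT`, `PGDATABASE`, `PGUSER`, `PGPASSWORD`, `PGSSLMODE`); defaulting `sslmode` to `require` is this sketch’s choice (libpq itself defaults to `prefer`), and whether the case study uses exactly these variables is an assumption. The resulting keys match the keyword arguments accepted by drivers such as psycopg2.

```python
import os
from typing import Mapping, Optional

REQUIRED = ("PGHOST", "PGDATABASE", "PGUSER", "PGPASSWORD")

def load_db_settings(env: Optional[Mapping[str, str]] = None) -> dict:
    """Collect connection settings from the environment; fail fast if any are missing."""
    env = os.environ if env is None else env
    missing = [name for name in REQUIRED if not env.get(name)]
    if missing:
        raise RuntimeError(f"missing database settings: {', '.join(missing)}")
    return {
        "host": env["PGHOST"],
        "port": int(env.get("PGPORT", "5432")),
        "dbname": env["PGDATABASE"],
        "user": env["PGUSER"],
        "password": env["PGPASSWORD"],
        # Require TLS unless explicitly overridden (libpq's own default is "prefer").
        "sslmode": env.get("PGSSLMODE", "require"),
    }

def redacted(settings: dict) -> dict:
    """Copy that is safe to log: the password never reaches log output."""
    return {k: ("***" if k == "password" else v) for k, v in settings.items()}
```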

Application Server Configuration

This section covers the configuration of the application server within the HWA Snow Integration application. Correct setup of this component is vital for the smooth and efficient operation of the entire system, so understanding its role and optimal configuration is key to maximizing performance and reliability. The application server acts as the central hub for processing requests and delivering responses within our architecture.

It receives requests from the client-side applications, interacts with the database (as previously discussed), and manages the application logic before returning the processed data. Its performance directly impacts the user experience and the overall system throughput.

Application Server Technology

For this HWA Snow Integration application, we’ve chosen Tomcat as our application server. Tomcat is an open-source implementation of the Java Servlet, JavaServer Pages, Java Expression Language, and WebSocket technologies. Its popularity stems from its reliability, ease of use, and extensive community support. This choice allows for flexibility, scalability, and cost-effectiveness.

Application Deployment Process

Deploying the HWA Snow Integration application to Tomcat involves several key steps. First, we package the application into a WAR (Web Application Archive) file. This file contains all the necessary components, including Java classes, JSPs, and static resources. Next, we copy the WAR file into Tomcat’s webapps directory. Tomcat automatically deploys the application upon detecting the new file.

Finally, we verify the deployment by accessing the application through a web browser. Application-specific configuration, such as database connection details, is supplied through environment variables or externalized configuration files rather than hard-coded into the WAR file. This ensures separation of concerns and lets the same WAR be deployed across different environments.

Application Server Performance Optimization

Optimizing Tomcat’s performance is crucial for ensuring a responsive and efficient system. Several best practices can significantly improve its efficiency. These include adjusting the Tomcat connector settings (such as maxThreads and acceptCount) to handle concurrent requests effectively. Additionally, enabling connection pooling minimizes the overhead associated with database connections. Proper JVM (Java Virtual Machine) tuning, including heap size and garbage collection settings, is also essential.
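A common starting point for sizing `maxThreads`, before load testing refines it, is Little’s Law: the average number of requests in flight equals the arrival rate multiplied by the average time each request spends in the system. The sketch below applies it with a safety margin for bursts; this is a general rule of thumb rather than a Tomcat formula, and the 1.5 headroom factor is a hypothetical default.

```python
import math

def concurrency_estimate(requests_per_second: float, avg_latency_s: float,
                         headroom: float = 1.5) -> int:
    """Little's Law (L = lambda * W) plus a burst margin, rounded up."""
    if requests_per_second < 0 or avg_latency_s < 0:
        raise ValueError("rates and latencies must be non-negative")
    in_flight = requests_per_second * avg_latency_s
    return max(1, math.ceil(in_flight * headroom))
```

For example, 200 requests per second at 0.25 s average latency means about 50 requests in flight, or 75 threads with headroom. Applying the same calculation to the time a request actually holds a database connection gives a first estimate for the connection pool size.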

Regular monitoring of Tomcat’s performance metrics, such as CPU utilization, memory usage, and request processing time, allows for proactive identification and resolution of potential bottlenecks. For example, analyzing slow request logs can pinpoint specific areas requiring further optimization within the application code itself. In a high-traffic scenario, load balancing across multiple Tomcat instances would be a crucial strategy to distribute the workload and prevent performance degradation.

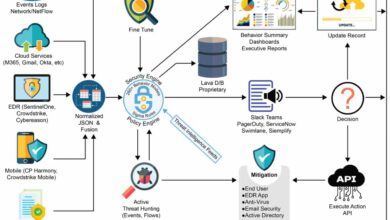

Security Considerations

Integrating HWA Snow with containerized applications introduces several security challenges. A robust security strategy is crucial to protect sensitive data and ensure the system’s integrity and availability. This section details potential vulnerabilities and outlines mitigation strategies, focusing on authentication, authorization, data encryption, and overall system hardening.

Potential Vulnerabilities

The interconnected nature of the HWA Snow integration, encompassing databases, application servers, and container orchestration, creates several attack vectors. These vulnerabilities range from insecure configurations and network exposures to weaknesses in the application code itself. For example, a misconfigured database server could allow unauthorized access to sensitive data. Similarly, unpatched application servers could be exploited through known vulnerabilities.

Furthermore, insecure container images or improperly configured orchestration systems could lead to container breakouts or denial-of-service attacks. The lack of proper input validation within the application could expose it to SQL injection or cross-site scripting (XSS) attacks.

Security Strategy

Our security strategy employs a layered approach, incorporating multiple defense mechanisms to minimize the impact of potential breaches. This includes implementing robust authentication and authorization, encrypting data both in transit and at rest, regularly patching systems, and conducting penetration testing. We will also utilize network segmentation to isolate critical components and implement intrusion detection and prevention systems. A zero-trust security model will be adopted, verifying every access request regardless of its origin.

Regular security audits and vulnerability scans will be performed to proactively identify and address potential weaknesses.

Authentication and Authorization Mechanisms

Authentication will be handled using multi-factor authentication (MFA), requiring users to provide at least two forms of verification before gaining access. This could include a password, a one-time code from an authenticator app, and potentially biometric verification. Authorization will be implemented using role-based access control (RBAC), granting users access only to the resources and functionalities necessary for their roles.

This will be enforced at multiple layers, including the application server, database server, and container orchestration platform. Access logs will be meticulously monitored and analyzed for suspicious activity. Detailed audit trails will be maintained to track all user actions.
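The role-based access control described above reduces to a mapping from roles to permissions and a deny-by-default check. The roles and permission strings below are hypothetical examples, not the system’s actual role model.

```python
from typing import Dict, FrozenSet, Iterable

# Hypothetical roles and permissions, for illustration only.
ROLE_PERMISSIONS: Dict[str, FrozenSet[str]] = {
    "viewer": frozenset({"reports:read"}),
    "operator": frozenset({"reports:read", "reports:write"}),
    "admin": frozenset({"reports:read", "reports:write", "users:manage"}),
}

def permissions_for(roles: Iterable[str]) -> FrozenSet[str]:
    """Union of the permissions granted by each assigned role."""
    granted = set()
    for role in roles:
        granted |= ROLE_PERMISSIONS.get(role, frozenset())  # unknown roles grant nothing
    return frozenset(granted)

def is_authorized(roles: Iterable[str], permission: str) -> bool:
    """Deny by default: allow only if some assigned role grants the permission."""
    return permission in permissions_for(roles)
```

The same check would be repeated at each enforcement layer (application server, database grants, orchestration platform), each with its own role definitions.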

Data Encryption and Protection

Data encryption is paramount. Data at rest within the database will be encrypted using strong encryption algorithms like AES-256. Data in transit between the application server, database, and HWA Snow will be secured using TLS/SSL encryption. Sensitive data, such as personally identifiable information (PII), will be further protected through tokenization or data masking techniques. Regular backups will be performed and stored securely in a geographically separate location to ensure business continuity and data recovery in case of a disaster.

Data loss prevention (DLP) tools will be used to monitor and prevent sensitive data from leaving the controlled environment.
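AES-256 encryption at rest is normally handled by the database or storage layer and would need a third-party cryptography library, so this sketch covers only the other two techniques named above: tokenization and masking. The tokenization here uses a keyed HMAC, which is deterministic (equal inputs give equal tokens, so joins still work) but one-way; a vault-based tokenization service would be needed if the original values must be recoverable. The key handling is hypothetical; in practice the key would come from a secrets manager.

```python
import hashlib
import hmac

def tokenize(value: str, key: bytes) -> str:
    """Deterministic, one-way token derived with HMAC-SHA256."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return "tok_" + digest[:32]

def mask(value: str, visible: int = 4, fill: str = "*") -> str:
    """Keep only the last `visible` characters; fully mask short values."""
    if visible <= 0 or len(value) <= visible:
        return fill * len(value)
    return fill * (len(value) - visible) + value[-visible:]
```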

Scalability and Performance

The HWA Snow Integration application, built upon a containerized architecture, requires careful consideration of scalability and performance to ensure its reliability and responsiveness under varying loads. This section details the strategies implemented to achieve this, analyzes potential bottlenecks, and offers recommendations for ongoing optimization.

Our approach to scalability focuses on leveraging the inherent flexibility of containerization and cloud-native technologies. We’ve designed the system to handle increased user traffic and data volume efficiently, minimizing latency and maximizing resource utilization.

Horizontal Scaling Strategies

Horizontal scaling, adding more instances of application servers and database replicas, is the primary strategy employed. This approach allows us to distribute the workload across multiple containers, preventing any single point of failure and enhancing overall system responsiveness. We utilize Kubernetes for orchestration, enabling automated scaling based on predefined metrics such as CPU utilization and request latency. For example, if CPU usage consistently exceeds 80% for a period of 5 minutes, Kubernetes automatically spins up additional application server pods.

Similarly, database read replicas are dynamically provisioned to alleviate load on the primary database server.
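Read replicas only relieve the primary if the application sends reads to them. The sketch below shows one simple routing policy: statements that modify data go to the primary, and other statements rotate round-robin across replicas. The host names are hypothetical, and the prefix check is deliberately naive; a real router would also send locking reads (`SELECT ... FOR UPDATE`) and read-your-own-write queries to the primary, because replicas can lag behind it.

```python
import itertools
from typing import List

class ReplicaRouter:
    """Send writes to the primary and spread reads across replicas round-robin."""

    WRITE_PREFIXES = ("INSERT", "UPDATE", "DELETE", "CREATE", "ALTER", "DROP")

    def __init__(self, primary: str, replicas: List[str]):
        self.primary = primary
        self._replicas = itertools.cycle(replicas) if replicas else None

    def target_for(self, sql: str) -> str:
        is_write = sql.lstrip().upper().startswith(self.WRITE_PREFIXES)
        if is_write or self._replicas is None:
            return self.primary
        return next(self._replicas)
```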

Potential Performance Bottlenecks

Several potential bottlenecks need ongoing monitoring and mitigation. Network latency between containers and databases can significantly impact performance. Inefficient database queries, especially those involving large datasets, can also create slowdowns. Insufficient memory allocation for containers could lead to performance degradation and instability. Finally, the application code itself may contain performance inefficiencies that need optimization.

Regular load testing and performance profiling are crucial to identify and address these bottlenecks proactively.
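Load-test results are usually summarized by error rate and latency percentiles rather than averages, because averages hide slow outliers. The sketch below computes nearest-rank percentiles over a list of `(latency_ms, ok)` results; the result format is a hypothetical choice, not the output of any particular load-testing tool.

```python
import math
from typing import List, Sequence, Tuple

def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile, with pct in (0, 100]."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]

def summarize(results: List[Tuple[float, bool]]) -> dict:
    """Summarize (latency_ms, succeeded) pairs from a load-test run."""
    if not results:
        raise ValueError("no results")
    latencies = [ms for ms, _ in results]
    failures = sum(1 for _, ok in results if not ok)
    return {
        "requests": len(results),
        "error_rate": failures / len(results),
        "p50_ms": percentile(latencies, 50),
        "p95_ms": percentile(latencies, 95),
        "max_ms": max(latencies),
    }
```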

Performance Optimization Recommendations

To ensure optimal performance and scalability, several recommendations are in place. These include continuous monitoring of key performance indicators (KPIs) such as response times, error rates, and resource utilization. Regular code profiling will identify performance hotspots in the application code. Database query optimization is critical, involving techniques like indexing, query rewriting, and caching. Furthermore, implementing caching mechanisms at various layers (e.g., CDN for static assets, application-level caching) can significantly reduce server load.

Finally, rigorous load testing under simulated peak conditions will help anticipate and address potential issues before they impact users.
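Application-level caching trades freshness for load, as the comparison table also notes. A time-to-live (TTL) cache makes that trade-off explicit: an entry is served until it expires and then reloaded from the database. This is a minimal sketch with a hypothetical interface; it is not thread-safe and never evicts by size, both of which a production cache would need to address.

```python
import time
from typing import Any, Callable, Dict, Hashable, Tuple

class TTLCache:
    """Application-level cache whose entries expire after `ttl_seconds`."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self._store: Dict[Hashable, Tuple[float, Any]] = {}

    def get_or_load(self, key: Hashable, loader: Callable[[], Any]) -> Any:
        now = self.clock()
        hit = self._store.get(key)
        if hit is not None and hit[0] > now:
            return hit[1]  # fresh entry: no database round trip
        value = loader()  # miss or expired: reload from the source of truth
        self._store[key] = (now + self.ttl, value)
        return value
```

The TTL caps how stale a cached snow report can be; shorter values reduce staleness but send more queries to the database.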

Scaling Strategy Comparison

| Scaling Strategy | Pros | Cons | Trade-offs |
|---|---|---|---|
| Horizontal Scaling | Increased capacity, high availability, easy implementation with container orchestration | Increased infrastructure costs, potential network latency | Cost vs. availability and performance |
| Vertical Scaling | Simpler management, potentially lower latency | Limited scalability, single point of failure, difficult to upgrade | Simplicity vs. scalability and resilience |
| Database Sharding | Improved database scalability, reduced query latency | Increased complexity, data consistency challenges | Scalability vs. complexity and data integrity |
| Caching | Reduced server load, improved response times | Requires additional infrastructure and management, potential for data staleness | Performance improvement vs. complexity and data freshness |

Monitoring and Logging

Effective monitoring and logging are crucial for the successful operation of the HWA Snow Integration application. A robust system allows for proactive identification of performance bottlenecks, rapid troubleshooting of issues, and ensures the overall health and stability of the application. This section details the chosen tools and strategies for achieving this.

Our monitoring and logging strategy is built around a multi-layered approach, combining real-time performance monitoring with comprehensive log aggregation and analysis. This allows us to gain a holistic view of the system’s behavior, from individual container performance to overall application health.

Monitoring Tools and Techniques

We leverage a combination of tools to monitor various aspects of the application. Prometheus, a popular open-source monitoring and alerting toolkit, is used for collecting metrics from our application containers and infrastructure. These metrics include CPU usage, memory consumption, network traffic, and request latency. Grafana, a powerful data visualization and dashboarding platform, is integrated with Prometheus to provide interactive dashboards that display these metrics in a user-friendly manner.

These dashboards allow us to quickly identify performance anomalies and potential issues.

Logging Mechanisms

Centralized logging is achieved using the Elastic Stack (Elasticsearch, Logstash, and Kibana). Each application container is configured to send its logs to a central Logstash server, which then indexes them into Elasticsearch. Kibana provides a powerful search and visualization interface for analyzing these logs. This allows us to easily search for specific error messages, track down the root cause of issues, and analyze trends in application behavior.

The logging system is designed to capture both application logs and system logs, providing a complete picture of the system’s activity.
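Log aggregation works best when each line is machine-parseable. The sketch below emits one JSON object per log record on stdout, where the container runtime captures it; it assumes a log collector then forwards those lines to Logstash, which is a common pattern but not a detail the case study specifies. The field names and the `context` convention for extra fields are hypothetical.

```python
import json
import logging
import sys
from datetime import datetime, timezone

class JsonFormatter(logging.Formatter):
    """One JSON object per line, parseable by the log pipeline without regexes."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "timestamp": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        if record.exc_info:
            entry["exception"] = self.formatException(record.exc_info)
        # Extra fields passed as logger.info(..., extra={"context": {...}}).
        context = getattr(record, "context", None)
        if isinstance(context, dict):
            entry.update(context)
        return json.dumps(entry)

def configure_logging(service: str) -> logging.Logger:
    """Return a logger that writes JSON lines to stdout."""
    handler = logging.StreamHandler(sys.stdout)
    handler.setFormatter(JsonFormatter())
    logger = logging.getLogger(service)
    logger.setLevel(logging.INFO)
    logger.handlers = [handler]
    logger.propagate = False
    return logger
```

Usage: `configure_logging("hwa-snow-appserver").error("query failed for %s", "DEN-01", extra={"context": {"request_id": "abc123"}})` produces a single searchable JSON line containing the request ID.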

Monitoring and Logging Strategy

Our strategy focuses on proactive monitoring and reactive troubleshooting. Real-time monitoring dashboards provide immediate visibility into the system’s performance, allowing us to identify and address issues before they impact users. Comprehensive logging enables detailed post-mortem analysis of incidents, helping us to understand the root cause of problems and prevent recurrence. The integration of Prometheus, Grafana, and the Elastic Stack provides a robust and scalable solution for managing the monitoring and logging needs of the application.

Alert Generation and Handling

Prometheus is configured to generate alerts based on predefined thresholds for key metrics. For example, if CPU usage exceeds 90% for a sustained period, an alert is triggered. These alerts are sent to PagerDuty, an incident management platform, which notifies the on-call team via email, SMS, and push notifications. The alert includes details about the issue, such as the affected container, the metric that triggered the alert, and the severity level.

The on-call team uses the monitoring dashboards and log analysis tools to diagnose the problem and take corrective action. A post-incident review process ensures that lessons learned are incorporated into our monitoring and logging strategy to prevent similar incidents in the future. For example, if a particular error message consistently appears, we may adjust our logging configuration to capture more detailed information about that specific error.
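The "sustained period" condition in the CPU example is what the `for:` clause of a Prometheus alerting rule provides: the alert stays pending while the condition holds and fires only once it has held for the configured duration. The sketch below loosely mirrors that pending-to-firing behaviour over a stream of timestamped samples; the 90% threshold comes from the text, while the 300-second hold time is a hypothetical value. In a typical setup, Prometheus hands firing alerts to Alertmanager, which can route them to PagerDuty.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class SustainedThresholdAlert:
    """Fire only after the metric stays above `threshold` for `hold_seconds`."""

    threshold: float
    hold_seconds: float
    _breach_started: Optional[float] = field(default=None, init=False)

    def evaluate(self, timestamp: float, value: float) -> str:
        if value <= self.threshold:
            self._breach_started = None  # any dip below the threshold resets the timer
            return "inactive"
        if self._breach_started is None:
            self._breach_started = timestamp
        if timestamp - self._breach_started >= self.hold_seconds:
            return "firing"
        return "pending"
```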

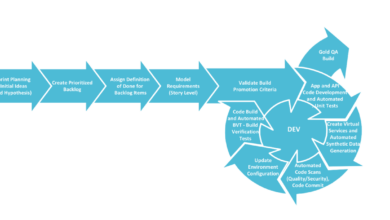

Deployment and Maintenance

Deploying and maintaining the HWA Snow Integration application requires a structured approach to ensure stability, scalability, and security. The process involves careful planning, execution, and ongoing monitoring to minimize downtime and maximize performance. This section details the deployment process, outlines maintenance procedures, and provides best practices for managing updates and patches. The deployment process uses a blue-green deployment strategy.

This minimizes disruption to the live system. First, the updated application and database are deployed to a staging environment mirroring the production environment. Thorough testing is conducted in this staging environment to verify functionality and performance. Once testing is complete and sign-off is received, the staging environment is then switched to become the new production environment, while the old production environment is retained as a backup for a short period (typically 7 days).

This rollback capability ensures business continuity in case unforeseen issues arise. Automated scripts are used throughout the process to streamline deployment and reduce manual intervention, thus minimizing the risk of human error.
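On Kubernetes, one common way to implement the blue-green switch is to run both environments as separately labelled Deployments and repoint a single Service by changing its label selector. The sketch below builds the corresponding `kubectl patch` command; the `color` label, the service and app names, and the use of this mechanism in the case study are all assumptions. Rolling back during the retention period is the same command with the previous color.

```python
import json
from typing import List

def selector_patch(app: str, color: str) -> dict:
    """Patch body that points a Service at the pods labelled with the given color."""
    if color not in ("blue", "green"):
        raise ValueError("color must be 'blue' or 'green'")
    return {"spec": {"selector": {"app": app, "color": color}}}

def switch_command(service: str, app: str, color: str) -> List[str]:
    """kubectl invocation that moves live traffic to the chosen environment."""
    return ["kubectl", "patch", "service", service, "-p", json.dumps(selector_patch(app, color))]

def other(color: str) -> str:
    """The idle environment, i.e. the rollback target after a switch."""
    return "green" if color == "blue" else "blue"
```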

Deployment Process

The application is deployed using a containerized approach, leveraging Docker and Kubernetes for orchestration. This ensures consistent deployment across different environments. The deployment process involves several steps: building Docker images for the application server and database, pushing these images to a container registry, deploying the containers to the Kubernetes cluster, and finally verifying the application functionality. Rollback capabilities are built-in, allowing for swift reversion to a previous stable version if necessary.

Maintenance Procedures

Regular maintenance is crucial for the long-term health and performance of the HWA Snow Integration application. This includes proactive monitoring, scheduled backups, and timely application of updates and patches. Our approach emphasizes automation wherever possible, reducing manual intervention and the associated risk of human error. We also maintain detailed documentation of all procedures and configurations to facilitate troubleshooting and future maintenance efforts.

Update and Patch Management

Managing updates and patches is a critical aspect of maintaining a secure and stable system. We follow a strict change management process, including rigorous testing in a staging environment before applying updates to the production system. Automated patch management tools are utilized to streamline the process and ensure timely application of security updates. A clear communication plan ensures all stakeholders are informed about planned maintenance windows and any potential service disruptions.

Routine Maintenance Checklist

Effective maintenance hinges on a well-defined schedule and consistent execution. The following checklist outlines key tasks:

- Daily: Monitor application logs for errors and performance issues; check system resource utilization (CPU, memory, disk space); review security logs for suspicious activity.

- Weekly: Run database backups; perform application performance testing; review system alerts and address any outstanding issues.

- Monthly: Apply security patches and updates; conduct a full system backup; review and update system documentation.

- Quarterly: Perform a comprehensive system audit; review and update disaster recovery plan; conduct capacity planning exercises.

- Annually: Conduct a full security assessment; review and update the system architecture; plan for upgrades and future enhancements.

Illustrative Example: A Typical User Workflow

Let’s walk through a typical scenario illustrating how a user interacts with our HWA Snow Integration application, highlighting the interplay between the database, application server, and containerized components. This example focuses on a user requesting snow-related data for a specific geographic region.

Imagine a meteorologist needing real-time snow accumulation data for Denver, Colorado. They access the application via a web browser, initiating a series of interactions that showcase the application’s functionality.

User Input and Initial Request

The user navigates to the application’s web interface and enters “Denver, Colorado” into the designated search field. Upon clicking “Submit,” a request is sent to the application server, hosted within a Docker container. This request is formatted as a JSON payload containing the search query.

Application Server Processing

The application server receives the request and prepares a structured query to retrieve the relevant snow data (accumulation, timestamps, and associated geographic coordinates) from the database, which also runs in a container. The application server draws database connections from a connection pool that it manages itself (for example, a JDBC pool configured in Tomcat); the container orchestration system (e.g., Kubernetes) keeps the database container running and reachable but does not manage the pool.

Database Interaction and Data Retrieval

The application server sends the structured query to the database. The database, a PostgreSQL instance running in its own container, processes the query and returns the matching rows to the application server.

Data Transformation and Response

The retrieved data is passed back to the application server. The application server performs any necessary transformations, such as unit conversions or data aggregation, before formatting the results into a user-friendly JSON response suitable for the web interface.
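The request-to-response steps can be sketched as a single handler: parse the JSON payload, take the rows the database returned, convert units, aggregate, and serialize the result. The `query` field name, the `(observed_at, depth_cm)` row shape, and the choice of inches as the display unit are hypothetical; the database call itself is replaced by the `rows` argument so the sketch runs on its own.

```python
import json
from statistics import mean
from typing import List, Tuple

CM_PER_INCH = 2.54

def handle_snow_request(body: str, rows: List[Tuple[str, float]]) -> str:
    """Turn a JSON request plus (observed_at, depth_cm) rows into a JSON response."""
    request = json.loads(body)
    location = str(request.get("query", "")).strip()
    if not location:
        return json.dumps({"error": "query is required"})
    # Unit conversion per reading, rounded for display.
    readings = [{"observed_at": ts, "depth_in": round(cm / CM_PER_INCH, 1)} for ts, cm in rows]
    # Aggregate from the raw centimetre values to avoid compounding rounding error.
    mean_in = round(mean([cm for _, cm in rows]) / CM_PER_INCH, 1) if rows else None
    return json.dumps({
        "location": location,
        "count": len(readings),
        "mean_depth_in": mean_in,
        "readings": readings,
    })
```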

Response to the User

The application server sends the JSON response back to the user’s web browser. The browser interprets this JSON data and dynamically renders a map visualization displaying snow accumulation in and around Denver, Colorado, along with a tabular representation of the key data points. The entire process, from user input to data visualization, is typically completed within a few seconds.

Component Interaction Summary

This workflow showcases the coordinated effort of several components: the user’s web browser initiates the request; the application server, within its container, manages the request, interacts with the database, and processes the results; and the database, also containerized, provides the core snow data. The container orchestration system ensures the availability and scalability of all these components. The entire process is underpinned by robust security measures and monitoring mechanisms, as detailed in previous sections.

Conclusion

Successfully integrating HWA Snow with containerized application and database systems requires a multifaceted approach. This case study highlights the importance of careful planning, efficient orchestration, robust security measures, and a proactive monitoring strategy. By understanding the interplay between containerization, database management, application servers, and security protocols, we can build systems that are not only efficient and scalable but also resilient and secure.

The key takeaway? A well-architected, containerized system can significantly enhance application performance, scalability, and maintainability – making it a worthwhile investment for any organization looking to modernize its infrastructure.

FAQ Summary

What are the biggest challenges in implementing HWA Snow integration?

Common challenges include ensuring data consistency across systems, managing complex dependencies between components, and maintaining security throughout the integration process.

How does HWA Snow integration impact application performance?

Properly implemented HWA Snow integration can significantly improve application performance through efficient resource allocation and streamlined data access. However, poor design can lead to performance bottlenecks.

What are the long-term maintenance implications of this system?

Long-term maintenance involves regular updates, security patching, performance monitoring, and proactive troubleshooting. A well-defined maintenance plan is crucial for system longevity.

What are some alternative container orchestration tools besides Kubernetes?

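Alternatives include Docker Swarm and HashiCorp Nomad, each with its own strengths and weaknesses. Rancher is often mentioned alongside them, but it is primarily a platform for managing Kubernetes clusters rather than a separate orchestrator.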