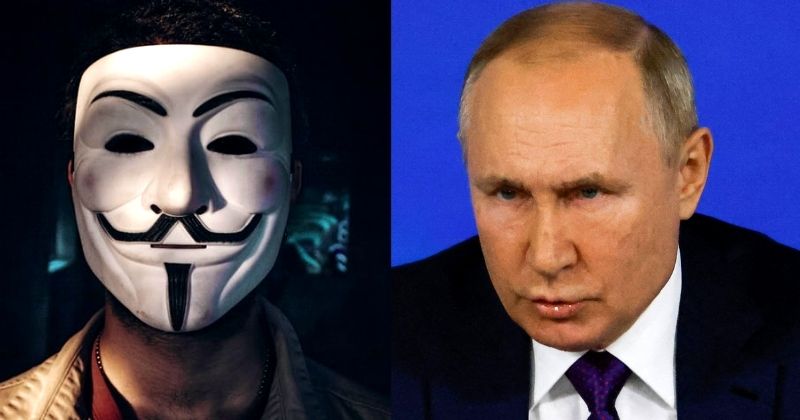

Anonymous Declares Cyber War on Russia

The hacking group Anonymous has declared cyber war on Russia, opening a digital front at a time of heightened geopolitical tension. What are the motivations behind this bold move, and what potential consequences could ripple across the global landscape?

The group’s history is replete with acts of digital defiance, from targeted campaigns against perceived injustices to demonstrations of their technical prowess. This new declaration suggests a strategic shift, potentially aiming to disrupt Russian infrastructure and systems on a scale not seen before.

Background of Anonymous

Anonymous, a decentralized, loosely affiliated group of activists, has been a significant force in the digital landscape since its emergence in the 2000s. Their actions have ranged from online protests to large-scale cyberattacks, often drawing attention to perceived injustices and societal issues. Their methods and motivations have evolved over time, making them a complex and dynamic phenomenon.

Historical Overview

Anonymous emerged from online imageboards and forums, notably 4chan, initially focusing on pranks and highlighting issues through digital disruption. Their early actions involved coordinated online protests and campaigns against various targets, often using creative methods and symbolic actions. This early phase showcased their ability to organize and mobilize online communities.

Tactics and Methods

Anonymous utilizes a diverse range of tactics, including DDoS attacks, website defacements, data leaks, and online activism. Their methods have evolved alongside technological advancements, incorporating new tools and techniques to achieve their objectives.

- DDoS attacks: Distributed Denial-of-Service attacks overwhelm targeted servers with traffic, rendering them unavailable. This tactic has been used to disrupt services and express dissent against organizations and governments, and it can cause significant economic and reputational damage to targets. For instance, Anonymous has previously targeted financial institutions and government websites, causing temporary outages and demonstrating its ability to disrupt essential services.

- Website defacements: Modifying or replacing the content of websites with messages or graphics, often expressing their views or grievances. This method serves as a visual and symbolic form of protest and disruption, potentially drawing attention to specific issues.

- Data leaks: Accessing and releasing sensitive information from organizations or individuals. The motivations behind data leaks are diverse, from exposing corruption or wrongdoing to advocating for transparency and accountability.

- Online activism: Using social media platforms and online forums to organize protests, campaigns, and spread awareness about specific causes. This approach is integral to their ability to mobilize and engage with a wider audience.

Motivations and Ideologies

Anonymous’s motivations are multifaceted and often difficult to categorize. Although the group is not a unified entity, some common threads include a sense of social justice, a desire to expose corruption, and a belief in freedom of information. Their actions are not always tied to a specific ideology, but rather to the perceived injustice of a given situation.

Examples of Past Cyber Actions

Anonymous has carried out numerous cyber actions throughout their history, targeting a variety of organizations and individuals. These actions have been driven by a wide range of grievances and causes.

- Targeting of governments and corporations: Anonymous has targeted governments and corporations perceived as oppressive or corrupt. These actions often involve website defacements and DDoS attacks, serving as a form of protest and disruption.

- Advocating for transparency and freedom of information: In some cases, Anonymous has sought to expose corruption or wrongdoing by releasing leaked documents or data. This action has contributed to public awareness and potential changes in policies or practices.

- Supporting social and political movements: Anonymous has often supported various social and political movements by organizing online campaigns and protests, demonstrating their ability to mobilize online communities and amplify voices.

Context of the Cyber War Declaration

Anonymous’s declaration of cyber war against Russia is a dramatic escalation amid the ongoing geopolitical tensions between Russia and much of the international community. This declaration, coming on the heels of a series of events, highlights the potential for digital conflict in the modern world. The actions and motivations behind this declaration are complex and require careful consideration of the current global climate.

Geopolitical Climate Between Russia and Other Nations

The current geopolitical climate between Russia and many nations is characterized by mistrust and escalating tensions. Russia’s actions in Ukraine and its broader international posture have led to significant global concern and sanctions. This heightened tension creates an environment where digital conflict becomes a potential tool in the arsenal of nations.

Recent Events Leading to the Declaration

A series of recent events, including the ongoing war in Ukraine, have likely contributed to Anonymous’s decision to declare cyber war. These events could include specific instances of Russian aggression, cyberattacks attributed to Russian actors, or perceived violations of international norms. Furthermore, the global response to these actions, including sanctions and international condemnation, has likely fueled the anger and frustration that motivated Anonymous.

Potential Motivations Behind Anonymous’s Declaration

Anonymous’s motivations are likely multifaceted. The group’s history of activism, often targeting perceived injustices, suggests a desire to challenge Russian actions. The potential for symbolic damage to Russian infrastructure, alongside potential disruption of critical services, could be another key motivator. Additionally, Anonymous may seek to demonstrate the vulnerability of Russian digital systems and the power of decentralized digital activism.

The group may also be attempting to rally support for the Ukrainian cause and international efforts to counter Russian aggression.

Potential Targets of Anonymous’s Actions

Anonymous’s targets could span a wide range of digital infrastructure. Potential targets include government websites, financial institutions, and critical infrastructure. The choice of targets will likely depend on the specific objectives of the attack, which may include disruption, data theft, or damage to the Russian state’s image. Past Anonymous campaigns have involved various methods, ranging from distributed denial-of-service (DDoS) attacks to data leaks, demonstrating the group’s adaptability and variety of tactics.

For example, the targeting of government websites can cause widespread disruption and potentially undermine public confidence.

Implications of the Cyber War

Anonymous’s declaration of cyber war on Russia carries significant potential consequences, extending far beyond digital skirmishes. The group’s actions, while often shrouded in mystery and operating outside traditional geopolitical structures, can have real-world impacts on infrastructure, international relations, and the Russian government’s response mechanisms. Understanding these implications is crucial to assess the potential escalation of the conflict. The declaration signals a deliberate escalation of digital warfare, potentially disrupting critical Russian infrastructure and services.

The group’s past actions and stated goals suggest a willingness to employ sophisticated tactics, aiming for maximum impact. This presents a complex challenge for Russia, requiring a multifaceted approach to mitigate potential damage and respond effectively.

Potential Consequences for Russia

Russian infrastructure, both public and private, is vulnerable to disruption. The potential impact is wide-ranging, affecting everything from communication networks to financial systems and essential services. These disruptions can have severe economic and societal repercussions.

- Disruption of Critical Infrastructure: Targeted attacks on power grids, water supply systems, and transportation networks could lead to widespread outages and disruptions in essential services. Historical incidents, such as the 2015 Ukrainian power grid attacks, demonstrate the potential for large-scale disruption. This type of attack could create significant instability within Russia.

- Economic Impact: Disruptions to financial systems and online commerce could cause significant economic damage. Financial institutions and e-commerce platforms could be targeted, potentially leading to financial losses and market instability. The impact on the Russian economy could be substantial.

- Damage to Reputation: Cyberattacks can harm a nation’s reputation on the global stage. The scale and nature of Anonymous’s actions could damage Russia’s image, potentially affecting international partnerships and cooperation efforts. A negative perception can have lasting repercussions.

Potential Disruptions to Russian Infrastructure

A cyber war of this kind could involve a range of attacks against Russian infrastructure. Their impact would vary significantly depending on the specific targets and the sophistication of the methods used. Likely targets include government websites, financial institutions, and critical infrastructure.

- Government Websites: Disruption of government websites could hamper communication, hinder administrative functions, and undermine public trust in the government’s ability to respond effectively. This disruption could also create chaos and instability in the country.

- Financial Institutions: Attacks on financial institutions could cause widespread financial instability and disrupt economic activity. Crippling access to online banking and payment systems could have severe consequences for individuals and businesses.

- Critical Infrastructure: Attacks on critical infrastructure, such as power grids and water systems, could cause widespread disruptions in essential services, leading to significant humanitarian consequences. Such attacks could cripple daily life.

Impact on International Relations

Anonymous’s actions could have far-reaching implications for international relations. The group’s actions, particularly if they escalate, could lead to increased tensions between nations. This escalation could affect international cooperation and diplomatic efforts.

- Increased Tensions: A cyberwar declaration can increase tensions between nations. Russia may respond to Anonymous’s actions in ways that further escalate the situation, leading to a wider conflict. This could negatively affect international relations.

- Geopolitical Implications: Cyberattacks can have geopolitical implications, potentially affecting alliances and partnerships. Russia may view Anonymous’s actions as a threat to its national security, leading to a more aggressive stance in the digital realm. This could further divide countries.

- Shift in Global Perception: The actions of Anonymous can affect how other nations perceive Russia’s cybersecurity capabilities and its commitment to protecting critical infrastructure. This shift in perception could influence international partnerships and alliances.

Potential Responses from the Russian Government

The Russian government has several possible responses to Anonymous’s declaration, ranging from diplomatic statements to cyber countermeasures. Which it chooses will likely depend on the extent and impact of the attacks.

- Cyber Countermeasures: Russia may launch cyber counterattacks targeting Anonymous or its infrastructure. This response could escalate the conflict and lead to a broader digital confrontation.

- Legal Action: The Russian government might pursue legal action against individuals or groups involved in the attacks. This approach aims to deter future attacks and hold perpetrators accountable.

- Increased Surveillance: Russia may increase surveillance and monitoring of online activities to identify and prevent future attacks. This heightened surveillance could infringe on citizens’ privacy.

Impact on Global Cybersecurity

Anonymous’s declaration of cyber war on Russia has profound implications for global cybersecurity. It’s a stark reminder of the escalating digital threat landscape and the growing role of cyber activism in shaping international relations. This declaration, while likely to be met with varying responses, will undeniably influence future cyber conflicts and strategies. The impact is likely to be felt across governments, private organizations, and individual citizens alike. This declaration isn’t just about targeting Russia; it’s a test case for how groups like Anonymous will respond to future conflicts and a potential indicator of future cyber warfare strategies.

It signals a shift in the way digital activism is employed, highlighting the need for enhanced cybersecurity measures and a more proactive approach to mitigating online threats.

Influence on Global Cybersecurity Practices

The declaration will likely push nations to bolster their cybersecurity infrastructure and response mechanisms. Increased investment in cybersecurity measures, improved incident response protocols, and development of advanced threat detection tools will become priorities for many countries. Furthermore, international cooperation and information sharing will be critical in countering future cyberattacks, as seen in recent incidents involving ransomware and data breaches.

Comparison to Other Cyber Activism Groups

Anonymous’s methods, while often disruptive, are frequently contrasted with other cyber activism groups. Some groups focus on specific issues like human rights or environmental concerns, employing targeted actions against institutions they deem harmful. Others prioritize broader digital activism, promoting freedom of information and online dissent. Anonymous’s approach, in contrast, is often characterized by more generalized attacks and symbolic actions, making a broader statement about their stance on geopolitical events.

This variation in tactics and objectives influences the overall impact on global cybersecurity practices.

Examples of Future Cyber Conflicts

The declaration of cyber war could serve as a template for future actions. If other nations or groups feel similarly aggrieved, they might resort to similar tactics. This could lead to escalating digital conflicts, with potentially severe repercussions for critical infrastructure, economic systems, and social stability. For example, if a country retaliates against Anonymous’s actions, it could result in a wider cyber conflict involving a chain reaction of attacks and counterattacks.

Table of Perspectives on Impact on Global Cybersecurity

| Perspective | Description | Potential Impact |
|---|---|---|
| Government | National security agencies and policymakers. | Increased investment in cybersecurity, development of offensive and defensive strategies, enhanced international cooperation. |
| Private Sector | Businesses, corporations, and financial institutions. | Increased cybersecurity measures, development of robust incident response plans, potential for financial losses from attacks. |
| Citizen | Individuals and members of the public. | Increased awareness of online threats, potential for disruption of daily life, greater reliance on secure online practices. |
| Anonymous | The group itself. | Further legitimization of their cyber activism tactics, potential for escalation of conflicts, possible reputational damage from perceived illegalities. |

Anonymous’s Capabilities and Tactics

Anonymous, a decentralized and often loosely structured group, has a history of launching disruptive cyberattacks. Their capabilities stem from a combination of skilled individuals, readily available tools, and a potent network effect. While not a monolithic entity, they often operate with shared goals and coordinated actions, leveraging their collective strength to achieve impactful results. Understanding their tactics and capabilities is crucial for assessing the potential impact of their declared cyber war on Russia. Their actions are often driven by political or social motivations, with the aim of disruption and awareness-raising.

Their past actions have ranged from website defacements to large-scale DDoS attacks, highlighting their capacity to inflict significant disruption.

Anonymous’s Proven Cyberattack Capabilities

Anonymous’s history demonstrates a range of cyberattack techniques, though the skill on display varies widely from one operation to the next. Their tactics often rely on open-source tools and known vulnerabilities, which lets them adapt quickly to evolving defensive strategies.

| Attack Type | Description | Example |
|---|---|---|
| DDoS (Distributed Denial-of-Service) | Overwhelming a target system with a flood of traffic, effectively rendering it unavailable. | Operation Payback (2010), in which Anonymous participants flooded the websites of PayPal, Mastercard, and Visa. The 2016 attack on the DNS provider Dyn, which was driven by the Mirai botnet and never conclusively attributed to Anonymous, shows the scale such attacks can reach: it took down major websites like Twitter and Netflix. |
| Data Breach | Unauthorized access and extraction of sensitive data from a target system. | Past breaches of government websites or corporations have showcased their potential for gaining access to sensitive information. |
| Malware Injection | Inserting malicious code into a target system to compromise its functionality or gain control. | Ransomware, which encrypts data and demands payment, is a prominent example of this attack type in general. |
| Website Defacement | Modifying a website’s content to display messages or images that are politically or socially motivated. | Anonymous has historically used this method to express dissent and disrupt the operations of targeted organizations. |

Potential Anonymous Tactics in the Cyber War

Given their past actions, Anonymous could employ a variety of tactics in a cyber war against Russia. These tactics are likely to be multifaceted, aiming for maximum disruption and awareness. Their actions may not be solely focused on direct attacks, but could also encompass the spreading of misinformation or propaganda through digital channels.

- Disrupting Russian Government and Military Websites: Targeting websites with DDoS attacks, defacements, or other disruptive actions to cause operational difficulties. This mirrors their past tactics, and given the sensitivity of Russian infrastructure, could cause significant damage.

- Targeting Critical Infrastructure: This would involve attempting to compromise Russian infrastructure, such as power grids or transportation networks. Success could cause widespread disruption, although such attacks demand far more capability than the DDoS and defacement campaigns Anonymous is best known for.

- Leakage of Sensitive Data: Anonymous may attempt to breach Russian government or military systems to expose sensitive data. This would align with their past practice of exposing information and could have severe diplomatic consequences.

- Propaganda and Misinformation Campaigns: Using social media and other digital platforms to spread misinformation or propaganda aimed at undermining Russian public opinion or morale. This is a common tactic, and it could have a significant impact on the public perception of the conflict.

Potential Countermeasures

Given the decentralized nature of Anonymous, a single point of attack is difficult to identify and address. Countermeasures should focus on enhancing security measures, improving information sharing, and preparing for a wide range of attack vectors. Monitoring for early warning signs and actively defending against threats is crucial.

- Strengthening Cybersecurity Measures: Improving network security, patching vulnerabilities, and implementing robust intrusion detection systems are essential for preventing attacks. Advanced threat detection tools could also be employed to identify and respond to unusual activities.

- Improving Information Sharing: Sharing threat intelligence among organizations and government agencies is critical for understanding and reacting to attacks. This includes collaboration with international partners to share best practices and intelligence.

- Developing a Comprehensive Response Plan: Having a clear and comprehensive plan for responding to various cyberattacks is crucial. This should include procedures for containing attacks, mitigating damage, and restoring services.

- Proactive Measures: Investigating and neutralizing potential vulnerabilities before they are exploited. This includes regular security audits, penetration testing, and vulnerability assessments.
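To make the idea of detecting "unusual activities" more concrete, here is a minimal Python sketch of one common building block: a sliding-window counter that flags a client sending too many requests in a short period. The class name `RateMonitor`, the thresholds, and the sample address are all hypothetical, chosen only for illustration. Real intrusion detection systems combine many more signals than request rate.

```python
from collections import defaultdict, deque


class RateMonitor:
    """Flags clients whose request count in a sliding window exceeds a limit."""

    def __init__(self, window_seconds: float, max_requests: int):
        self.window_seconds = window_seconds
        self.max_requests = max_requests
        # Per-client queue of recent request timestamps (oldest first).
        self._events = defaultdict(deque)

    def record(self, client_id: str, timestamp: float) -> bool:
        """Record one request; return True if the client is over the limit."""
        events = self._events[client_id]
        events.append(timestamp)
        # Drop timestamps that have fallen out of the window.
        cutoff = timestamp - self.window_seconds
        while events and events[0] <= cutoff:
            events.popleft()
        return len(events) > self.max_requests


# Hypothetical traffic: one client sends 8 requests in under a second.
monitor = RateMonitor(window_seconds=10.0, max_requests=5)
flags = [monitor.record("203.0.113.7", t * 0.1) for t in range(8)]
print(flags)
```

In this sketch the first five requests pass and the rest are flagged. A per-client counter like this helps against a single noisy source, but a distributed attack spreads traffic across many addresses, which is why the information sharing and response planning described above still matter.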

Visual Representation of the Conflict

Anonymous’s declaration of cyber war against Russia is a complex event with potential for wide-ranging consequences. Visual representations are crucial for understanding the multifaceted nature of such a declaration and the potential escalation paths. These representations help to contextualize the conflict and allow for a deeper comprehension of the implications.

Infographic Depicting the Cyber War Declaration

A visual infographic, ideally incorporating a timeline, would effectively illustrate the key events leading to the declaration. The infographic should showcase the escalating tensions, highlighting specific actions and counteractions. Key elements could include a timeline of cyberattacks, statements made by both sides, and reactions from governments and international organizations. Color-coding can be used to differentiate actions, entities, and impacts.

For example, different colors could be used to represent various types of cyberattacks, such as denial-of-service attacks, data breaches, or disinformation campaigns.

Flow Chart of Potential Escalation

A flow chart outlining potential escalation paths is essential for visualizing the dynamic nature of a cyber conflict. The chart should illustrate different scenarios, from isolated cyberattacks to broader-scale cyber warfare. It could include conditional branches, representing decisions and choices that can lead to further escalation. Each step could be accompanied by a brief description of the potential actions or consequences.

For example, a cyberattack on critical infrastructure might trigger a retaliatory response, leading to further attacks or even a broader conflict.
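The escalation flow chart described in this section can also be sketched as a small directed graph, where each stage lists the stages it might lead to. The stage names and branches below are hypothetical and purely illustrative. They are not a prediction of how this conflict will develop.

```python
# Hypothetical escalation stages and the transitions between them.
ESCALATION = {
    "isolated attack": ["no response", "retaliation"],
    "retaliation": ["de-escalation", "further attacks"],
    "further attacks": ["de-escalation", "broader conflict"],
    "no response": [],
    "de-escalation": [],
    "broader conflict": [],
}


def escalation_paths(graph, start):
    """Return every path from start to a terminal stage (depth-first)."""
    next_stages = graph[start]
    if not next_stages:
        return [[start]]
    paths = []
    for stage in next_stages:
        for tail in escalation_paths(graph, stage):
            paths.append([start] + tail)
    return paths


paths = escalation_paths(ESCALATION, "isolated attack")
for path in paths:
    print(" -> ".join(path))
```

Listing the paths this way makes the conditional branches explicit: each printed line is one scenario a flow chart would need to show, from an attack that draws no response to one that spirals into a broader conflict.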

Historical Context of Similar Cyber Conflicts

Drawing parallels to past cyber conflicts is critical for understanding potential outcomes. Historical cases like the Stuxnet attack or the NotPetya incident provide valuable insight into the destructive potential of cyber warfare. Analyzing the escalation patterns and responses in those events can offer clues about how the current conflict might unfold. A table outlining key features of past conflicts, including actors, targets, and consequences, can aid in this analysis.

| Conflict | Key Actors | Targets | Consequences |
|---|---|---|---|
| Stuxnet | Widely attributed to the US and Israel (never officially confirmed) | Iranian nuclear facilities | Significant damage to Iranian centrifuges, disruption of nuclear enrichment program. |
| NotPetya | Attributed by the US, UK, and other governments to the Russian military | Ukrainian organizations initially, then companies worldwide | Massive disruption of supply chains and business operations worldwide. |

Potential Media Coverage and Public Response

The media’s role in shaping public perception is significant. Extensive media coverage is likely, including news reports, social media discussions, and analyses by experts. The public response will depend on several factors, including the severity of the attacks, the perceived legitimacy of the actions, and the effectiveness of countermeasures. Public sentiment can shift rapidly in response to cyber events, and a sustained campaign of disinformation or misinformation can significantly impact the narrative.

It’s important to consider that the public’s understanding of cyberattacks is often limited, making it susceptible to misinterpretations and emotional reactions.

Legal and Ethical Considerations

Anonymous’s declaration of cyber war against Russia raises significant legal and ethical questions. The group’s actions, while intended to express dissent and potentially disrupt Russian operations, carry serious implications for international law, national sovereignty, and individual liberties. Understanding these implications is crucial for assessing the broader impact of such actions on global cybersecurity and international relations.

Legal Ramifications of Anonymous’s Actions

Anonymous’s actions, depending on their specifics, could potentially violate various national and international laws. Cyberattacks, especially those targeting critical infrastructure, can lead to significant criminal penalties, including fines and imprisonment. Furthermore, if the attacks result in damage or disruption, civil lawsuits could follow, seeking compensation for losses incurred. The specific legal framework will vary depending on the jurisdiction and the nature of the damage caused.

Ethical Dilemmas in Cyber Warfare

The declaration of cyber war by Anonymous raises several ethical dilemmas. One significant concern is the potential for unintended consequences, such as collateral damage to innocent civilians or the escalation of conflict. The use of anonymity itself raises ethical questions about accountability and responsibility for actions. Moreover, the line between legitimate protest and illegal activity in cyberspace is often blurry and susceptible to interpretation.

International Laws Regarding Cyberattacks

International law concerning cyberattacks is still evolving. There’s no single, universally recognized treaty or convention specifically addressing cyber warfare. Existing international humanitarian law, while not explicitly designed for cyberattacks, can potentially be applied to certain situations. The principles of proportionality and distinction, requiring attacks to be limited to legitimate military objectives and avoiding harm to civilians, could be invoked in specific circumstances.

Comparison of Legal Frameworks on Cyberattacks

Different countries have varying legal frameworks for addressing cyberattacks. Some countries have robust legislation specifically targeting cybercrime, while others may rely on existing criminal codes to prosecute cyberattacks. This disparity can create difficulties in prosecuting perpetrators or establishing international cooperation in addressing cyber incidents. The absence of a universal framework also makes it difficult to hold actors accountable for actions that cross international borders.

For instance, a cyberattack originating in one country may cause damage in another, complicating the application of national laws.

Illustrative Examples of Existing Cases

While direct parallels to Anonymous’s actions in real-world legal cases are limited due to the evolving nature of cyber warfare and the inherent difficulties in attributing cyberattacks, various cases involving cyber espionage, sabotage, and distributed denial-of-service (DDoS) attacks offer insights. These cases highlight the challenges in defining and prosecuting cybercrimes, especially in a context where attribution remains a key issue.

The difficulty in determining the exact perpetrators and the origin of the attack, coupled with the often-ambiguous nature of the targets, contributes to the legal complexities. Further, the lack of standardized international protocols makes cross-border investigations and prosecutions even more problematic.

Outcome Summary

Anonymous’s declaration of cyber war on Russia has thrust the digital battlefield into the spotlight, highlighting the potential for online activism to escalate into geopolitical conflicts. The implications for global cybersecurity and international relations are significant, and the long-term effects are yet to be fully understood. The actions of Anonymous, and potential responses from Russia, will undoubtedly shape the future of cyber warfare.

FAQ Insights

What are Anonymous’s typical tactics?

Anonymous employs a range of methods, including Distributed Denial of Service (DDoS) attacks, data breaches, and malware injections. Their tactics often target websites, servers, and online infrastructure.

What are the potential targets of Anonymous’s attacks?

Targets could range from government websites and critical infrastructure to financial institutions and media outlets. The specific targets will likely depend on Anonymous’s objectives.

How might this declaration impact global cybersecurity standards?

The declaration could lead to increased investment in cybersecurity measures globally. It may also prompt further discussions and debates on the ethical and legal boundaries of cyber activism.

What are the potential responses from the Russian government?

Responses could vary, from retaliatory cyberattacks to increased surveillance and censorship. The response will depend on the severity and scope of Anonymous’s actions.