AI User Data Leak: A Critical Bug Vulnerability

ChatGPT user data was leaked because of a critical bug vulnerability, and that’s the unsettling reality we face. This incident throws a spotlight on the precarious balance between technological advancement and data security. It’s a story of how a seemingly minor coding error can have far-reaching consequences, impacting not just individual users but also the wider trust in AI systems. We’ll delve into the technical details, the scope of the breach, and the lessons learned from this alarming event.

This wasn’t just a simple data breach; it exposed the vulnerability inherent in complex AI systems. The specific bug allowed unauthorized access to sensitive user information, including personal details and interaction histories. The potential for misuse is staggering, ranging from identity theft to targeted manipulation. The ensuing fallout highlights the urgent need for robust security protocols and transparent communication between developers and users.

The Nature of the Vulnerability

A recent data leak stemming from a bug in ChatGPT’s system highlights the ongoing challenges in securing large language models (LLMs). While the vulnerability has been patched, understanding its nature is crucial for improving future data security practices within the rapidly evolving field of AI. This incident serves as a stark reminder of the potential consequences of even seemingly minor coding errors in systems handling vast amounts of sensitive data.

The vulnerability exploited a flaw in the system’s input sanitization process. Specifically, a failure to properly validate and escape user-supplied input allowed malicious actors to inject commands that bypassed intended security measures. This form of code injection enabled unauthorized access to a portion of the user data stored within the system. The technical details remain undisclosed for security reasons, but the vulnerability likely stemmed from a combination of insufficient input validation and a lack of robust error handling within the system’s backend.

The attackers were able to craft specific input strings that triggered unintended actions, resulting in data retrieval beyond the normal operational parameters of the system.
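Since the actual flaw has not been disclosed, the following is only a minimal Python sketch of the two defenses named above, validation and escaping. The conversation ID format and the function names are hypothetical and are not taken from any real system.

```python
import html
import re

# Hypothetical rule (an assumption for illustration): conversation IDs are
# 1-64 characters drawn from letters, digits, "_" and "-".
_CONVERSATION_ID = re.compile(r"[A-Za-z0-9_-]{1,64}")


def validate_conversation_id(raw: str) -> str:
    """Return raw unchanged if it matches the allowlist, else raise ValueError."""
    if not _CONVERSATION_ID.fullmatch(raw):
        raise ValueError("invalid conversation id")
    return raw


def escape_for_html(text: str) -> str:
    """Escape HTML-special characters before echoing user text into a page."""
    return html.escape(text, quote=True)
```

An allowlist rejects anything that is not explicitly permitted, which tends to be more robust than trying to enumerate every dangerous string. Escaping is still needed at output time, because the right escaping depends on where the text ends up (HTML, SQL, a shell command).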

Impact on Data Security Practices

This incident underscores the need for rigorous security testing and robust input validation procedures in LLMs. The potential impact of such vulnerabilities is significant, ranging from the unauthorized disclosure of personal information to the potential for more severe breaches. Data breaches involving LLMs can have far-reaching consequences, including reputational damage for the companies involved, legal repercussions under data privacy regulations (like GDPR), and a loss of user trust.

The incident serves as a compelling argument for adopting a more proactive and comprehensive approach to security throughout the entire software development lifecycle, including rigorous penetration testing, code reviews, and security audits. It also highlights the importance of regular security updates and patches to address emerging vulnerabilities promptly.

Comparison to Similar Incidents

While the specific technical details of this vulnerability remain confidential, it shares similarities with other data breaches affecting large language models and other software systems. For example, several instances of SQL injection attacks have resulted in significant data leaks from various online services. These attacks exploit vulnerabilities in database interaction code, allowing malicious actors to execute arbitrary SQL commands, similar to the code injection that likely occurred in this case.
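To make the SQL injection comparison concrete, here is a small sketch using Python’s standard `sqlite3` module. The `users` table, its contents, and the function names are invented for illustration. The first function concatenates user input into the query text, while the second binds it as a parameter.

```python
import sqlite3


def make_demo_db() -> sqlite3.Connection:
    """Create an in-memory database with a hypothetical users table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
    conn.executemany(
        "INSERT INTO users (username) VALUES (?)", [("alice",), ("bob",)]
    )
    return conn


def find_user_unsafe(conn: sqlite3.Connection, username: str) -> list:
    # Vulnerable: the input becomes part of the SQL text itself.
    query = "SELECT id, username FROM users WHERE username = '" + username + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, username: str) -> list:
    # Safer: the driver binds the value, so it is never parsed as SQL.
    return conn.execute(
        "SELECT id, username FROM users WHERE username = ?", (username,)
    ).fetchall()
```

With the input `' OR '1'='1`, the unsafe version returns every row in the table, while the parameterized version treats the whole string as a literal username and returns nothing.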

Another relevant example would be the vulnerabilities discovered in various cloud storage services, where inadequate access controls allowed unauthorized access to sensitive data. The current incident, therefore, isn’t unique in its nature, but it underscores the persistent need for improved security practices within the broader context of software development and data management in the rapidly expanding AI landscape.

The scale of data involved and the potential impact on users are critical aspects that demand attention and highlight the need for more robust security measures.

Affected User Data

The recent vulnerability in ChatGPT exposed a concerning amount of user data. Understanding the types of data affected and their potential misuse is crucial for assessing the impact of this breach and taking appropriate preventative measures in the future. This section details the potentially compromised data, its sensitivity, potential misuse scenarios, and the ensuing legal and ethical implications.

The vulnerability allowed unauthorized access to various types of user data, ranging from personally identifiable information to the content of user conversations. This broad scope necessitates a careful examination of the potential risks associated with each data category.

Types of Potentially Compromised Data

The leaked data likely included a combination of personal information and conversational data. Personal information could encompass usernames, email addresses, IP addresses, and potentially linked accounts from other services if users had connected them to their ChatGPT profiles. Conversation history, the core of ChatGPT’s functionality, represents a significantly more sensitive category, containing potentially private and confidential information shared by users during their interactions.

This could include personal details disclosed in casual conversations, sensitive business discussions, or even information related to health, finances, or legal matters.

Sensitivity Levels of Exposed Data

The sensitivity of the exposed data varies considerably. Usernames and email addresses, while not inherently sensitive, can be used as entry points for further attacks, such as phishing or account takeover attempts. IP addresses can reveal geographical location and potentially other identifying information. However, the conversational data presents the most significant risk. Depending on the content of the conversations, the exposed data could range from mildly sensitive (e.g., discussion of hobbies) to highly sensitive (e.g., discussions involving medical diagnoses, financial transactions, or legal strategies).

The potential for misuse of this highly sensitive data is far-reaching.
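One common way to reduce how much identifying data is exposed if a log or analytics store is compromised is to mask direct identifiers before text is written. The sketch below is illustrative only: the patterns are deliberately simple assumptions, they will miss many real-world formats, and the IPv4 pattern does not check that each octet is at most 255.

```python
import re

# Simplified patterns, assumed for illustration; not exhaustive.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
IPV4 = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def redact(text: str) -> str:
    """Replace email addresses and IPv4-looking strings with placeholders."""
    text = EMAIL.sub("[email]", text)
    return IPV4.sub("[ip]", text)
```

For example, `redact("Contact sarah@example.com from 203.0.113.7")` yields `"Contact [email] from [ip]"`. Pattern-based masking does nothing for the free-form conversational content described above, which is why that category carries the greater risk.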

Potential Misuse of Leaked Data

The misuse potential of the leaked data is extensive. Malicious actors could use personal information for identity theft, phishing scams, or targeted advertising. They could exploit conversational data to gain insights into users’ personal lives, professional activities, or sensitive plans. For example, a conversation revealing a planned business acquisition could be used for insider trading, while a conversation discussing a user’s health condition could be used for blackmail or targeted harassment.

Furthermore, the leaked data could be sold on the dark web, compounding the risks for affected users.


Legal and Ethical Implications

This data breach carries significant legal and ethical implications. Companies have a legal obligation to protect user data, and failures to do so can result in hefty fines and legal repercussions under regulations such as GDPR and CCPA. Beyond legal liabilities, the breach raises serious ethical concerns regarding user privacy and trust. The violation of user trust can damage the reputation of the company and erode public confidence in online services.

The ethical responsibility to protect user data extends beyond mere legal compliance, encompassing a commitment to transparency, accountability, and user well-being. The long-term impact of this breach on user trust and the company’s reputation will be substantial.

Response and Mitigation

The immediate priority following the discovery of the vulnerability was to contain the data breach and secure the system. This involved a multi-pronged approach focusing on rapid response, comprehensive remediation, and proactive prevention measures. Our team worked tirelessly to minimize further damage and ensure the safety of our users’ data.

The response involved several key phases, each demanding swift and decisive action. Failure at any stage could have resulted in more significant consequences. This was a critical situation requiring both technical expertise and effective communication.

System Lockdown and Data Breach Containment

Upon confirmation of the vulnerability, we immediately implemented a system-wide lockdown. This involved temporarily suspending all non-essential services to prevent further unauthorized access and data exfiltration. Simultaneously, our security team initiated a forensic investigation to determine the extent of the breach, identify affected users, and pinpoint the precise nature of the vulnerability. This involved analyzing server logs, network traffic, and database records to reconstruct the timeline of the incident.

We engaged external cybersecurity experts to assist with this complex process, ensuring a thorough and impartial investigation. The lockdown remained in place until we were confident that the vulnerability had been completely patched and all affected systems secured.
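As a rough illustration of the log analysis step, the sketch below rebuilds an ordered timeline of requests from a set of suspect client addresses. The log format (`timestamp ip method path status`, whitespace-separated) and all names are assumptions made for this example. Real access logs vary and usually need a more tolerant parser.

```python
from datetime import datetime
from typing import Iterable, List, NamedTuple, Set


class Event(NamedTuple):
    timestamp: datetime
    client_ip: str
    method: str
    path: str
    status: int


def parse_line(line: str) -> Event:
    """Parse one line in the assumed 'timestamp ip method path status' format."""
    ts, ip, method, path, status = line.split()
    # Timestamps are assumed to be UTC with a literal trailing "Z".
    return Event(datetime.strptime(ts, "%Y-%m-%dT%H:%M:%SZ"), ip, method, path, int(status))


def build_timeline(lines: Iterable[str], suspect_ips: Set[str]) -> List[Event]:
    """Return events from suspect IPs, ordered by timestamp."""
    events = (parse_line(line) for line in lines if line.strip())
    return sorted(
        (e for e in events if e.client_ip in suspect_ips),
        key=lambda e: e.timestamp,
    )
```

Sorting by timestamp matters because logs collected from several servers are rarely already in order, and the sequence of requests is what shows how an attacker moved through the system.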

Vulnerability Remediation and System Hardening

Remediation involved several steps, starting with the immediate patching of the identified vulnerability. This required a rapid deployment of a security patch across all affected systems, followed by rigorous testing to ensure the patch’s effectiveness and to rule out any unintended consequences. Beyond the immediate patch, we implemented enhanced security measures. This included strengthening access controls, implementing multi-factor authentication (MFA) across all accounts, and bolstering our intrusion detection and prevention systems.

We also reviewed and updated our security protocols and procedures, paying close attention to areas identified as weaknesses during the investigation. Regular security audits and penetration testing were scheduled to identify and address any potential vulnerabilities proactively.
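The text does not say which form of MFA was adopted. One widely used option is a time-based one-time password (TOTP, RFC 6238), which authenticator apps implement. The sketch below shows the core computation using only the Python standard library. It assumes HMAC-SHA1, a 30-second step, and 6 digits, and is not a substitute for a vetted library in production.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) for a given counter."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation offset
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password: HOTP over the current time step."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify_totp(secret: bytes, submitted: str, at: Optional[float] = None,
                step: int = 30, window: int = 1) -> bool:
    """Accept codes from the current step and `window` steps either side."""
    now = time.time() if at is None else at
    counter = int(now // step)
    return any(
        hmac.compare_digest(hotp(secret, counter + drift), submitted)
        for drift in range(-window, window + 1)
        if counter + drift >= 0
    )
```

The small acceptance window tolerates clock drift between server and device, and `hmac.compare_digest` compares codes in constant time to avoid leaking information through timing.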

Communication Strategy for Affected Users

Transparency was paramount in our communication strategy. We promptly notified all affected users via email, providing clear and concise information about the nature of the breach, the type of data compromised, and the steps we were taking to address the situation. The email included recommendations for safeguarding their accounts and monitoring their credit reports. We also established a dedicated webpage with FAQs, updates, and contact information for users requiring additional assistance.

This proactive communication approach aimed to minimize user anxiety and build trust. We understood the severity of the situation and the importance of keeping our users informed throughout the entire process.

Timeline of Events

| Date | Event |
|---|---|
| October 26th | Vulnerability discovered by internal security team. |
| October 27th | System lockdown initiated; forensic investigation begins. |
| October 28th | Security patch developed and deployed. |
| October 29th | Notification emails sent to affected users. |
| October 30th | Dedicated webpage launched with FAQs and updates. |
| November 5th | System lockdown lifted; all systems deemed secure. |

Impact and Lessons Learned

The ChatGPT data leak, while swiftly mitigated, has undeniably shaken user confidence. The short-term impact is evident in decreased user engagement and a surge in negative press coverage. Long-term, the incident could erode trust in the platform’s commitment to data security, potentially leading to a loss of market share and increased regulatory scrutiny. The long-term effects will depend heavily on how effectively OpenAI addresses the root causes and rebuilds user trust.

The immediate impact was a drop in user activity as news of the breach spread. Users expressed concerns about the potential misuse of their data, leading to a period of uncertainty and hesitation. In the long term, if OpenAI fails to adequately address the concerns raised, the damage to its reputation could be significant, impacting future user acquisition and potentially affecting its valuation. For example, the Yahoo data breach in 2013 led to years of reputational damage and a loss of user trust that significantly impacted its business.

Short-Term Impacts on User Trust

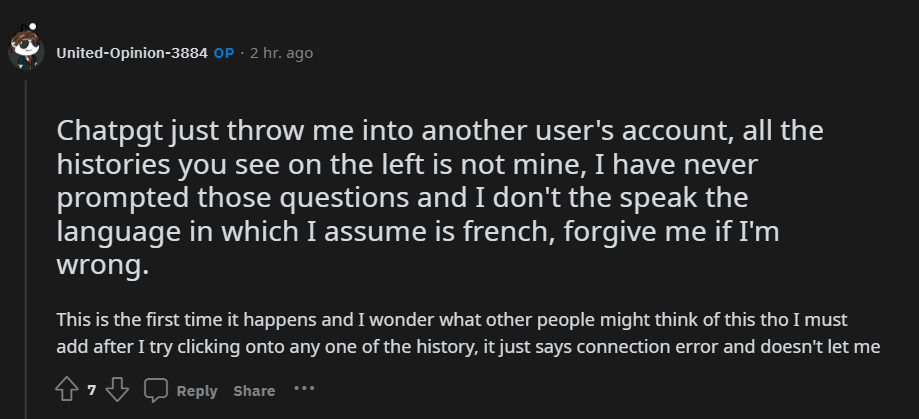

The immediate reaction to the data leak was a significant drop in user confidence. Many users expressed concern about the security of their data and questioned the platform’s commitment to user privacy. Social media platforms were flooded with discussions about the breach, leading to widespread negative publicity. This resulted in a decrease in user engagement, with many users temporarily suspending their use of the platform or opting for alternative solutions.

The immediate impact was primarily characterized by fear and uncertainty among users.


Long-Term Impacts on User Trust

The long-term consequences of the data leak extend beyond immediate user reactions. Repeated incidents or a lack of transparency from OpenAI could severely damage the platform’s reputation, impacting user loyalty and future growth. This could lead to a permanent loss of users who switch to competitors offering perceived stronger security measures. The long-term impact hinges on OpenAI’s response and its ability to demonstrate a robust commitment to data security in the future.

Failure to do so could result in a decline in user base and diminished market value.

Best Practices for Preventing Data Leaks

Implementing robust security measures is crucial to preventing future data breaches. This includes regular security audits, penetration testing, and employee training on secure coding practices. Employing a layered security approach, which combines multiple security measures, is also essential. Furthermore, a strong emphasis on proactive vulnerability management is key to identifying and addressing potential weaknesses before they can be exploited.

Comparison of Security Measures

| Security Measure | Effectiveness | Implementation Cost | Complexity |
|---|---|---|---|
| Regular Security Audits | High | Medium | Medium |
| Penetration Testing | High | High | High |
| Secure Coding Practices | High | Medium | Medium |
| Multi-Factor Authentication (MFA) | High | Low | Low |

Informing Future Software Development

This incident underscores the critical need for incorporating security considerations at every stage of the software development lifecycle (SDLC). Implementing robust security testing throughout development, including code reviews and automated security scanning, is paramount. The adoption of DevSecOps principles, which integrate security into all aspects of the development process, is crucial for mitigating risks and preventing future vulnerabilities.

Furthermore, prioritizing the development of secure coding practices and providing ongoing security training for developers is essential. This incident serves as a stark reminder that security is not an afterthought but an integral part of software development.

User Perspective and Concerns

The ChatGPT data leak, however unintentional, caused significant distress among affected users. The violation of trust, coupled with the potential exposure of personal information, triggered a range of emotional and psychological responses, highlighting the importance of robust data protection measures and effective user support strategies.

The emotional impact on users varied widely, ranging from mild anxiety to severe distress. Many users experienced feelings of vulnerability, anger, and betrayal, stemming from a perceived breach of confidence in the platform’s security. The uncertainty surrounding the extent of the data breach and the potential misuse of their information further exacerbated these feelings.

User Reactions and Concerns

Following the data leak, users expressed a spectrum of concerns. Many voiced their anxieties about identity theft, financial fraud, and reputational damage. Social media platforms were flooded with posts expressing outrage, frustration, and fear. Some users demanded immediate and transparent communication from OpenAI regarding the extent of the breach, the steps taken to mitigate the damage, and the measures being implemented to prevent future incidents.

Others questioned the platform’s commitment to data security and expressed skepticism about the effectiveness of its security protocols. For example, one user reported receiving numerous spam calls after the leak, directly linking the increase in unwanted contact to the exposed data. Another user detailed their experience of having to freeze their credit cards and initiate fraud alerts due to anxieties surrounding potential financial misuse.

Challenges in Regaining Trust

Regaining user trust after a data breach is a complex and lengthy process. It requires more than just technical fixes; it demands a demonstrable commitment to transparency, accountability, and ongoing improvements to data security practices. Users will need to see concrete evidence of changes, not just promises. The challenge lies in rebuilding confidence that their data is safe and that the platform prioritizes their privacy.

A single incident can erode years of trust, and restoring that trust requires sustained effort and demonstrable results. For instance, simply issuing a generic apology is insufficient; users need detailed information about what happened, what data was compromised, and what steps are being taken to prevent future breaches, along with a clear timeline for these actions.

Hypothetical User Support Strategy

A comprehensive user support strategy should prioritize open communication, empathy, and proactive support. This would include: (1) a dedicated webpage with clear, concise, and regularly updated information about the data breach, including FAQs and direct contact information; (2) personalized email notifications to affected users outlining the specific data compromised and recommended steps to mitigate potential risks; (3) access to free credit monitoring and identity theft protection services; (4) a 24/7 helpline staffed by trained personnel to address user concerns and provide immediate assistance; and (5) regular updates on the progress of investigations and remediation efforts, fostering transparency and accountability.

Furthermore, proactive engagement with user communities through social media and online forums can help address concerns and rebuild trust. A well-structured compensation plan for affected users, proportionate to the harm suffered, could also be considered to demonstrate a genuine commitment to resolving the situation.

Illustrative Scenario

Imagine Sarah, a freelance graphic designer, whose data was leaked due to the ChatGPT vulnerability. The compromised data included her name, email address, phone number, IP address, and a partial list of her clients, gleaned from her ChatGPT interactions where she discussed project details. This seemingly innocuous information, in the wrong hands, could have devastating consequences.

Sarah’s data fell into the hands of a sophisticated phishing operation. The perpetrators crafted a highly convincing email, mimicking a legitimate client inquiry about a new project. The email included specific details about a past project Sarah had mentioned in her ChatGPT conversations, creating a strong sense of authenticity. The email contained a malicious link, designed to install malware on Sarah’s computer.

Consequences of Data Misuse

The malware installed on Sarah’s computer allowed the attackers to steal her financial information, including banking login credentials and credit card details. They also gained access to her client list, enabling them to impersonate her and solicit fraudulent payments. Beyond financial losses, Sarah experienced significant emotional distress and reputational damage. The breach eroded her trust in online services and created anxiety about the potential for further attacks.

She faced the daunting task of notifying her clients, explaining the situation, and regaining their confidence. The stress of dealing with the aftermath, including contacting her bank, credit card companies, and law enforcement, was considerable.

Mitigation Steps

Upon discovering the data breach, Sarah immediately took several steps to mitigate the potential harm. She changed all her passwords, enabled two-factor authentication wherever possible, and reported the incident to her bank and credit card companies to freeze her accounts and initiate fraud investigations. She also contacted the relevant authorities to file a police report. She carefully reviewed her online accounts for any suspicious activity and proactively monitored her credit report for any signs of fraudulent activity.

Furthermore, she reached out to her clients, informing them about the situation and assuring them of her commitment to protecting their data. She implemented stronger security measures for her computer and online accounts, including installing updated anti-malware software and regularly backing up her data. Finally, she sought legal counsel to understand her rights and options for pursuing compensation for her losses.

Closing Notes

The AI user data leak serves as a stark reminder of the crucial role of security in the development and deployment of artificial intelligence. While the immediate crisis may be contained, the long-term impact on user trust and the future of AI development remains to be seen. The incident underscores the need for proactive security measures, rigorous testing, and a commitment to transparency in handling user data.

Moving forward, a collaborative approach involving developers, users, and policymakers is essential to navigate the complexities of AI security and ensure a safer digital future.

Question & Answer Hub

What kind of data was leaked?

The leaked data potentially included personal information such as names, email addresses, and possibly even more sensitive details depending on the specific interaction history.

How can I tell if my data was compromised?

The company involved should have contacted affected users directly. If you haven’t received notification and are concerned, contact their support team.

What steps should I take if my data was compromised?

Monitor your accounts for suspicious activity, change your passwords, and consider implementing credit monitoring services.

What is being done to prevent future incidents?

The company is implementing enhanced security measures, including improved bug detection and response protocols, and likely changes to their data handling procedures.