Connect to Google Batch with Workload Automation

Connecting to Google Batch for workload automation sounds complex, right? But trust me, automating your workloads with Google Batch is more achievable than you think. This post covers the practical side of connecting your systems to Google Batch, walking you through the process step by step, from setting up API access to optimizing job performance.

We’ll cover everything from securing your credentials to handling errors and scaling your batch jobs to handle massive datasets. Get ready to streamline your workflows and boost your productivity!

We’ll explore the core functionalities of Google Batch, comparing it to other GCP workload automation services. I’ll share practical tips and tricks I’ve learned from personal experience, including common pitfalls to avoid and effective strategies for maximizing efficiency. Think of this as your personal guide to mastering Google Batch – no prior experience needed!

Understanding Google Batch and Workload Automation

Google Batch and Google Cloud’s workload automation services are powerful tools for managing and scaling your data processing tasks. They offer significant advantages over manually scheduling and executing jobs, providing efficiency, scalability, and robust error handling. This exploration will delve into their core functionalities, compare different approaches, and illustrate their practical applications.

Core Functionalities of Google Batch

Google Batch is a fully managed batch processing service on Google Cloud Platform (GCP). Its core function is to efficiently run large-scale batch jobs. It handles job scheduling, resource allocation, and monitoring, abstracting away much of the complexity involved in managing these tasks. Key features include automatic scaling to handle varying workloads, fault tolerance to ensure job completion even with failures, and detailed logging and monitoring to facilitate debugging and performance analysis.

The service integrates seamlessly with other GCP services, allowing for easy data ingestion and output. This integration simplifies the entire data pipeline, from data acquisition to final result processing.

Key Features of Google Cloud’s Workload Automation Services

Beyond Google Batch, GCP offers a suite of services for workload automation. These services cater to diverse needs, from simple scheduled tasks to complex, orchestrated workflows. Key features across these services include: centralized scheduling and orchestration, robust monitoring and alerting, integration with various GCP services, and support for different programming languages and execution environments. Many offer features like auto-scaling, enabling them to dynamically adjust resources based on demand.

Furthermore, features such as retry mechanisms and error handling are crucial for ensuring reliability and data integrity.

Comparing Workload Automation Approaches within GCP

GCP offers several approaches to workload automation, each with its strengths and weaknesses. Google Cloud Functions excels for event-driven, short-lived tasks. Cloud Composer (Apache Airflow) is ideal for complex, DAG-based workflows requiring intricate task dependencies and orchestration. Google Kubernetes Engine (GKE) provides maximum flexibility and control for containerized workloads, but requires more operational overhead. Google Batch sits comfortably in the middle, providing a managed service for large-scale batch jobs without the complexities of container orchestration.

The choice depends heavily on the specific needs of the workload. For example, a simple daily data backup might be best suited for Cloud Scheduler, while a large-scale machine learning training job would be better handled by Google Batch or GKE.

Common Use Cases for Integrating Google Batch with Workload Automation

Integrating Google Batch with other workload automation services unlocks significant power. For instance, Cloud Composer can orchestrate a complex workflow that includes multiple Google Batch jobs, ensuring sequential execution or parallel processing as needed. A typical use case might involve using Cloud Composer to trigger a Google Batch job for data preprocessing, followed by another Batch job for model training, and finally a third job for result analysis.

This approach enables modularity and maintainability of large data processing pipelines. Another common use case involves using Google Batch to process large datasets in parallel, leveraging its auto-scaling capabilities to efficiently manage resources. This is frequently used in scenarios like large-scale data transformations, ETL processes, and scientific computing.

Comparison of Google Cloud Services for Workload Automation

| Service Name | Primary Function | Integration with Google Batch | Cost Considerations |
|---|---|---|---|
| Google Batch | Managed batch processing | N/A (It is the batch processing service) | Pay-as-you-go based on resource usage |
| Cloud Composer (Apache Airflow) | Workflow orchestration | Yes, can trigger and manage Batch jobs | Pay-as-you-go based on resource usage and Airflow environment size |
| Cloud Functions | Serverless event-driven functions | Yes, can trigger Batch jobs based on events | Pay-per-invocation, plus execution time and memory usage |
| Cloud Scheduler | Scheduled task execution | Yes, can trigger Batch jobs on a schedule | Billed per scheduled job; resources used by the triggered Batch jobs are billed separately |

Methods for Connecting to Google Batch

Connecting your workload automation system to Google Batch requires establishing secure API access. This involves understanding authentication methods, configuring service accounts, and implementing best practices for credential management. Properly setting up this connection ensures efficient and secure execution of your batch jobs within the Google Cloud Platform.

API Access Setup

Setting up API access to Google Batch involves several key steps. First, you’ll need to enable the Google Batch API in your Google Cloud project. This is typically done through the Google Cloud Console. Next, you’ll create service account credentials. These credentials provide your workload automation system with the necessary permissions to interact with the Google Batch API.

Finally, you’ll configure your workload automation system to use these credentials when submitting jobs to Google Batch. This process ensures that your system can authenticate itself and authorize requests to the Google Batch service. Detailed instructions can be found in the Google Cloud documentation.

Securing API Credentials

Protecting your API credentials is paramount. Never hardcode them directly into your application code. Instead, utilize secure methods such as environment variables or dedicated secret management systems like Google Cloud Secret Manager. Regularly rotate your credentials to minimize the risk of compromise. Restrict access to these credentials only to the necessary accounts and systems.

Implementing robust access control measures is crucial for maintaining the security and integrity of your Google Batch operations. Consider using least privilege principles, granting only the necessary permissions to your service accounts.
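As a minimal sketch of keeping credentials out of application code, the helper below reads the key-file location from the `GOOGLE_APPLICATION_CREDENTIALS` environment variable (the variable Google's Application Default Credentials lookup checks) and refuses to continue if it is unset or points at a missing file. The function name `resolve_credentials_path` is hypothetical and only for illustration.

```python
import os
from pathlib import Path

ENV_VAR = "GOOGLE_APPLICATION_CREDENTIALS"


def resolve_credentials_path(environ=None):
    """Return the key-file path named in the environment, or raise if unusable."""
    environ = os.environ if environ is None else environ
    raw = environ.get(ENV_VAR)
    if not raw:
        # Fail loudly instead of falling back to a path baked into the code.
        raise RuntimeError(f"{ENV_VAR} is not set")
    path = Path(raw)
    if not path.is_file():
        raise FileNotFoundError(f"{ENV_VAR} points at a missing file: {path}")
    return path
```

Failing fast here means a misconfigured deployment is caught at startup rather than when the first job submission is rejected.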

Authentication Methods

Google Batch, like other Google Cloud APIs, uses OAuth 2.0 for authentication. This industry-standard protocol allows your workload automation system to securely obtain an access token for the Google Batch API. The access token is short-lived, which limits the damage if it leaks. For interactive users, the typical flow involves obtaining an authorization code, exchanging it for an access token, and using this token in subsequent requests to the Google Batch API.

Service accounts, as discussed below, simplify this process by providing pre-configured credentials for automated access.

Configuring Service Accounts

Service accounts are specifically designed for applications and systems to access Google Cloud resources. Creating a service account for Google Batch involves specifying the project, giving it a descriptive name, and granting it the necessary IAM (Identity and Access Management) roles. The “Batch Admin” role provides comprehensive access, but it’s recommended to follow the principle of least privilege and grant only the necessary permissions. For a system that only submits jobs, a narrower role such as Batch Job Editor (roles/batch.jobsEditor) is usually enough.

After creation, download the service account key file (JSON), which contains the credentials required for authentication. Keep this file secure and follow the best practices outlined above to protect it.

Workload Automation to Google Batch Connection Workflow

The following diagram illustrates the connection workflow:

[Diagram Description: The diagram shows a workload automation system (WAS) on one side and Google Cloud Platform (GCP), including Google Batch, on the other. A secure connection is established between the WAS and GCP. The WAS initiates a job submission request, including the job specification. This request is authenticated using a service account key (JSON).

GCP receives the request, verifies the authentication using the key, and then the Google Batch service processes the job according to the specification. The results are then returned to the WAS via the secure connection. This entire process happens securely, with all communication encrypted.]

Implementing Workload Automation with Google Batch

Google Batch offers a powerful and scalable solution for automating your workloads. This involves creating and managing batch jobs, monitoring their execution, and handling potential errors effectively. By leveraging Google Batch’s capabilities, you can significantly improve efficiency and reduce manual intervention in your data processing pipelines.

Creating and Submitting Batch Jobs

Creating a batch job in Google Batch involves defining the job’s specifications, including the task’s image, resources required, and any necessary arguments. You then submit this specification to the Google Batch service. This is typically done through the Google Cloud Client Libraries (available for various programming languages like Python, Java, and Go) or via the gcloud command-line tool. A typical job specification might include details such as the Docker image containing your application, the number of CPU cores and memory required per task, the input data location (e.g., a Cloud Storage bucket), and the output location.

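The submission process involves sending this structured data to the Batch API, which then orchestrates the execution across Google’s infrastructure. For example, a Python script using the Google Cloud client library might look something like this (simplified for illustration; `project_id`, `region`, `job_id`, and `job_spec` are assumed to be defined elsewhere):

```python
from google.cloud import batch_v1  # pip install google-cloud-batch

batch_client = batch_v1.BatchServiceClient()
# job_spec is a batch_v1.Job whose task groups are defined elsewhere
job = batch_client.create_job(
    parent=f"projects/{project_id}/locations/{region}", job=job_spec, job_id=job_id
)
```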
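To make the job specification described above more concrete, here is a sketch that builds one as a plain dictionary using the JSON field names of the Batch `Job` resource (`taskGroups`, `taskSpec`, `runnables`, `computeResource`, `logsPolicy`). The helper name `build_job_spec`, the image URI, and the bucket paths are placeholders I made up for illustration; check the field names against the current API reference before relying on them.

```python
import json


def build_job_spec(image_uri, commands, cpu_milli=2000, memory_mib=4096, task_count=1):
    """Build a dict shaped like the Batch Job resource (JSON field names)."""
    return {
        "taskGroups": [
            {
                "taskCount": task_count,
                "taskSpec": {
                    # One container runnable per task.
                    "runnables": [
                        {"container": {"imageUri": image_uri, "commands": list(commands)}}
                    ],
                    # cpuMilli is in thousandths of a vCPU; memoryMib is in MiB.
                    "computeResource": {"cpuMilli": cpu_milli, "memoryMib": memory_mib},
                },
            }
        ],
        "logsPolicy": {"destination": "CLOUD_LOGGING"},
    }


spec = build_job_spec(
    "gcr.io/my-project/my-app:latest",  # placeholder image
    ["--input", "gs://my-bucket/in/", "--output", "gs://my-bucket/out/"],  # placeholder paths
    task_count=4,
)
print(json.dumps(spec, indent=2))
```

Saved to a file such as job.json, a spec like this can be submitted with `gcloud batch jobs submit my-batch-job --location us-central1 --config job.json`.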

Monitoring Batch Job Progress and Status

Google Batch provides robust monitoring capabilities. You can track the status of your jobs and individual tasks through the Google Cloud Console, command-line tools (gcloud), or programmatically via the API. The API allows you to retrieve detailed information on the progress, including the start time, end time, status (e.g., RUNNING, SUCCEEDED, FAILED), and any logs generated during execution.

This real-time visibility is crucial for identifying issues early and for overall job management. For instance, you might regularly poll the API to check the status of your jobs and trigger alerts if a job fails or takes longer than expected.
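The polling idea can be sketched without tying it to a particular client. In the example below, `get_state` is a hypothetical callable that returns the job's current state as a string; with the Python client library it could wrap something like `client.get_job(name=job_name).status.state.name`, though treat that exact expression as an assumption to verify.

```python
import time

TERMINAL_STATES = {"SUCCEEDED", "FAILED"}


def wait_for_job(get_state, timeout_s=3600.0, poll_interval_s=30.0,
                 sleep=time.sleep, clock=time.monotonic):
    """Poll get_state() until the job reaches a terminal state.

    Returns the terminal state; raises TimeoutError if the deadline passes first.
    """
    deadline = clock() + timeout_s
    while True:
        state = get_state()
        if state in TERMINAL_STATES:
            return state
        if clock() >= deadline:
            # The job is taking longer than expected: surface it to the caller.
            raise TimeoutError(f"job still {state} after {timeout_s}s")
        sleep(poll_interval_s)
```

The caller can then raise an alert when the returned state is FAILED or when the TimeoutError fires. Passing `sleep` and `clock` as parameters keeps the loop easy to test without real waiting.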

Handling Errors and Exceptions

Robust error handling is crucial for reliable workload automation. Google Batch lets you configure retries per task: a maximum retry count, plus lifecycle policies that decide whether particular exit codes should trigger a retry or fail the task outright. If you need a particular backoff strategy between attempts, you may have to implement it in your orchestration layer or task code. You can also implement custom error handling within your task code to gracefully handle specific exceptions. Furthermore, Google Batch provides detailed logs for each task, which are essential for debugging and identifying the root cause of failures.

These logs can be accessed via the Google Cloud Console or the API. By combining retry policies with proper exception handling and log analysis, you can significantly increase the resilience of your batch jobs.
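As a rough sketch of what retry settings look like in a job specification, the helper below adds them to a task-spec dictionary using the JSON field names `maxRetryCount` and `lifecyclePolicies`. The helper name `with_retries` is hypothetical, and the default exit code 50001 is assumed here to be the code Batch reports for Spot VM preemption; confirm both the code and the 0 to 10 retry range against the current documentation.

```python
def with_retries(task_spec, max_retry_count=3, retry_exit_codes=(50001,)):
    """Return a copy of a task-spec dict with retry settings applied."""
    if not 0 <= max_retry_count <= 10:
        raise ValueError("max_retry_count must be between 0 and 10")
    updated = dict(task_spec)  # shallow copy so the caller's dict is untouched
    updated["maxRetryCount"] = max_retry_count
    # Ask Batch to retry when a task exits with one of the listed codes.
    updated["lifecyclePolicies"] = [
        {"action": "RETRY_TASK", "actionCondition": {"exitCodes": list(retry_exit_codes)}}
    ]
    return updated
```

Having task code exit with distinct codes for transient and permanent errors makes a policy like this much more useful.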

Examples of Different Job Types

Google Batch supports a wide variety of job types. Examples include data processing tasks (e.g., using Apache Spark or Hadoop), machine learning model training, large-scale image processing, and scientific simulations. The flexibility of using Docker containers allows you to run virtually any application within the Google Batch environment. For example, you could submit a job to process a large dataset using Apache Beam, train a TensorFlow model on a massive dataset, or run a computationally intensive simulation using a specialized scientific software package.

Common Challenges and Solutions

Successfully automating workloads with Google Batch requires careful planning and consideration. Here are some common challenges and their solutions:

- Challenge: Insufficient resources allocated to tasks leading to slow execution or failures. Solution: Carefully estimate resource requirements (CPU, memory, disk) for your tasks and allocate sufficient resources during job creation. Monitor resource utilization during execution and adjust accordingly.

- Challenge: Difficulty in debugging failed tasks. Solution: Utilize the detailed logs provided by Google Batch. Implement robust logging within your task code to capture relevant information during execution. Use the Cloud Logging service for centralized log management and analysis.

- Challenge: Managing large numbers of jobs and tasks. Solution: Employ a well-structured job naming convention and utilize the Google Cloud Console or command-line tools to filter and manage jobs effectively. Consider using a job scheduling system to automate the submission of jobs.

- Challenge: Inefficient data transfer between tasks or to/from Cloud Storage. Solution: Optimize data transfer by using appropriate data formats (e.g., Parquet for large datasets) and minimizing data movement. Keep buckets in the same region as your jobs, and consider parallel composite uploads for large objects to improve transfer speeds.
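For the job naming convention mentioned in the list above, a small helper can enforce the pattern in one place. This is only a sketch: the `pipeline-stage-date` scheme and the name `make_job_id` are my own choices, and the constraints it assumes (lowercase letters, digits, and hyphens, starting with a letter, at most 63 characters) should be checked against the Batch documentation.

```python
import re
from datetime import date

MAX_LEN = 63  # assumed upper bound on job ID length


def make_job_id(pipeline, stage, run_date):
    """Build an ID such as 'sales-etl-transform-20240131' from its parts."""
    raw = f"{pipeline}-{stage}-{run_date:%Y%m%d}".lower()
    cleaned = re.sub(r"[^a-z0-9-]+", "-", raw)          # replace disallowed characters
    cleaned = re.sub(r"-{2,}", "-", cleaned).strip("-")  # collapse repeated hyphens
    if not cleaned or not cleaned[0].isalpha():
        cleaned = f"job-{cleaned}"                       # IDs are assumed to start with a letter
    return cleaned[:MAX_LEN].rstrip("-")


print(make_job_id("Sales_ETL", "transform", date(2024, 1, 31)))
```

Consistent prefixes like this make it straightforward to filter related jobs in the console or with command-line tools.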

Advanced Techniques and Best Practices

Optimizing Google Batch jobs for performance and scalability is crucial for efficient workload automation. This section delves into advanced techniques and best practices to ensure your batch jobs run smoothly, handle large datasets effectively, and maintain robust error handling. We’ll explore strategies for optimizing resource utilization, scaling your jobs, managing dependencies, and implementing comprehensive logging and monitoring.

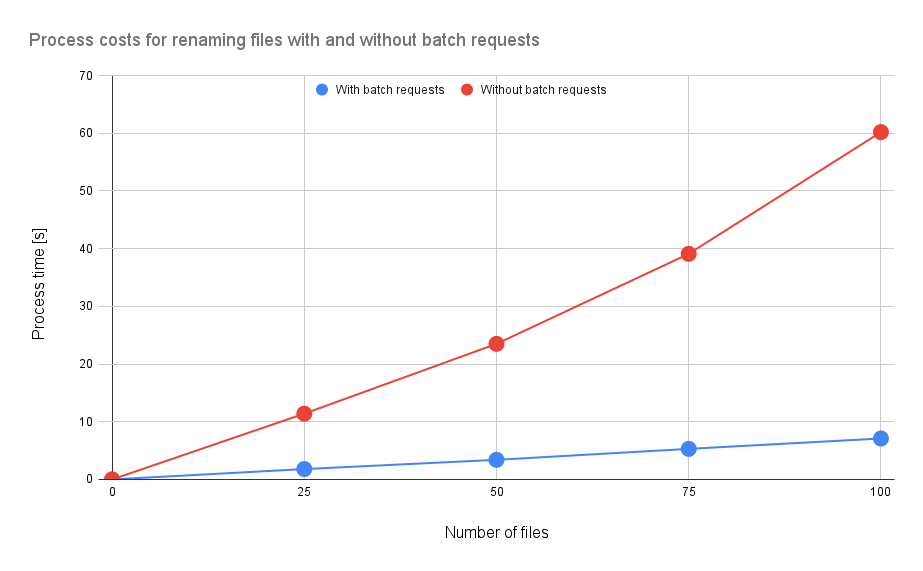

Optimizing Batch Job Performance and Resource Utilization

Efficient resource allocation is paramount for cost-effective and high-performing batch jobs. Understanding your job’s resource requirements (CPU, memory, disk) is the first step. Over-provisioning leads to wasted resources, while under-provisioning can cause delays or failures. Google Cloud’s autoscaling features can dynamically adjust resources based on demand, preventing bottlenecks. For instance, you can set the number of tasks in a job, and how many of them run in parallel, based on the size of the input data.

Careful selection of machine types also plays a critical role; using specialized VMs optimized for specific tasks (e.g., memory-intensive tasks) significantly improves performance. Furthermore, consider techniques like parallelization and vectorization within your job’s code to maximize throughput.

Scaling Batch Jobs to Handle Large Datasets

Processing massive datasets requires strategic scaling. Google Batch supports this by letting a single job run many tasks in parallel across multiple virtual machines, which significantly reduces processing time. Sharding, or dividing the dataset into smaller, manageable chunks, lets each task process its own chunk. For example, a terabyte-sized dataset can be divided into ten 100-gigabyte chunks, each assigned to a separate worker, which can dramatically decrease overall processing time.

Efficient data partitioning and optimized data transfer between workers are also crucial for scalability. Consider using optimized data storage solutions like Google Cloud Storage for efficient data access.
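One simple way to shard work inside task code is to let each task pick its own slice of the input list. The sketch below assumes the task can read its position from the `BATCH_TASK_INDEX` and `BATCH_TASK_COUNT` environment variables that Batch sets for each task; the function names and the round-robin assignment are illustrative choices, not the only option.

```python
import os


def shard_for_task(items, task_index, task_count):
    """Return the items owned by one task, assigned round-robin."""
    if not 0 <= task_index < task_count:
        raise ValueError("task_index must be in [0, task_count)")
    return items[task_index::task_count]


def shard_from_env(items, environ=None):
    """Pick this task's shard using the task index and count from the environment."""
    environ = os.environ if environ is None else environ
    index = int(environ.get("BATCH_TASK_INDEX", "0"))
    count = int(environ.get("BATCH_TASK_COUNT", "1"))
    return shard_for_task(items, index, count)
```

With ten input files and four tasks, the task with index 1 would process the files at positions 1, 5, and 9. Round-robin keeps shard sizes within one item of each other, which helps when files are of similar size.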

Managing Dependencies Between Batch Jobs

In complex workflows, managing dependencies between batch jobs is essential to ensure correct execution order. Google Cloud Composer (or similar workflow orchestration tools) can effectively manage these dependencies. You can define dependencies using directed acyclic graphs (DAGs), specifying the order in which jobs must run. For example, a job processing raw data must complete before a job analyzing the processed data can begin.

Proper dependency management prevents errors and ensures data integrity throughout the workflow. Using well-defined job IDs and status checks within the orchestration tool allows for monitoring and automated handling of job failures.
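The DAG idea itself fits in a few lines of standard-library Python. The sketch below is not Cloud Composer code; it only shows how declaring each job's prerequisites yields a valid execution order. The job names are illustrative.

```python
from graphlib import TopologicalSorter  # Python 3.9+

# Each key maps to the set of jobs that must finish before it may start.
dependencies = {
    "preprocess": set(),
    "train": {"preprocess"},
    "analyze": {"train"},
}


def execution_order(deps):
    """Return job names in an order that respects every dependency."""
    return list(TopologicalSorter(deps).static_order())


print(execution_order(dependencies))
```

If two jobs depended on each other, `static_order()` would raise `graphlib.CycleError`, which is the kind of mistake an orchestration tool also rejects when it loads a DAG.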

Implementing Logging and Monitoring for Batch Jobs

Comprehensive logging and monitoring are vital for troubleshooting and performance analysis. Google Cloud’s logging and monitoring services integrate seamlessly with Google Batch. Detailed logs provide insights into job execution, including start and end times, resource usage, and any errors encountered. Monitoring dashboards provide real-time visibility into job status and performance metrics. This allows for proactive identification and resolution of issues.

For instance, if a job consistently fails due to memory constraints, monitoring data would reveal this, enabling adjustments to resource allocation or code optimization. Custom metrics can be added to provide deeper insights into specific aspects of your job’s performance.

Handling Failures and Retries in a Batch Job

The process of handling failures and retries involves several key steps. A flowchart can effectively visualize this process.

Flowchart: Handling Failures and Retries in a Batch Job

The flowchart would start with a “Job Execution” block. If the job completes successfully, it proceeds to a “Job Completed” terminal block. If the job fails, it enters a “Failure Detection” block. This block checks if the maximum retry attempts have been reached. If not, the job proceeds to a “Retry Mechanism” block.

This involves potentially waiting a specified period (backoff strategy) before restarting the job. If the maximum retry attempts are reached, the job moves to a “Failure Notification” block, sending alerts to designated personnel or systems. From the “Failure Notification” block, it proceeds to a “Job Failed” terminal block. The “Retry Mechanism” block loops back to the “Job Execution” block.

Each retry attempt is logged for analysis. A backoff strategy, such as exponential backoff, is employed to avoid overwhelming the system during repeated failures. The flowchart clearly depicts the sequential steps involved in handling job failures and implementing retries with clear exit points for successful completion or final failure.
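The flowchart translates fairly directly into code. The following is a minimal sketch of that loop, where `run_job` is a hypothetical callable that raises an exception on failure and `notify` stands in for whatever alerting channel you use; the defaults are arbitrary.

```python
import time


def run_with_retries(run_job, max_attempts=4, base_delay_s=2.0,
                     notify=print, sleep=time.sleep):
    """Run run_job() until it succeeds or the attempts are exhausted.

    Returns True on success; after the final failure, calls notify and returns False.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            run_job()                                  # "Job Execution"
            return True                                # "Job Completed"
        except Exception as exc:                       # "Failure Detection"
            print(f"attempt {attempt} failed: {exc}")  # log every attempt
            if attempt == max_attempts:
                notify(f"job failed after {attempt} attempts: {exc}")  # "Failure Notification"
                return False                           # "Job Failed"
            # "Retry Mechanism": exponential backoff (2s, 4s, 8s, ...) before looping.
            sleep(base_delay_s * 2 ** (attempt - 1))
```

Doubling the delay on each attempt gives a struggling dependency progressively more time to recover instead of hitting it at a fixed rate.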

Case Studies and Examples

Google Batch, with its robust capabilities for scheduling and managing large-scale batch jobs, offers significant advantages for workload automation. Its integration with other Google Cloud Platform (GCP) services further enhances its utility, making it a powerful tool for organizations of all sizes. Let’s explore some real-world applications and implementation strategies.

Real-world examples showcase Google Batch’s versatility across diverse industries. From media companies processing terabytes of video data to financial institutions running nightly risk assessments, the platform’s scalability and flexibility adapt to various needs. These scenarios highlight the benefits of a managed batch processing service, reducing infrastructure management overhead and enabling organizations to focus on their core business logic.

Media Company Video Processing

A major media company uses Google Batch to process and transcode large volumes of video content. Their workflow involves uploading raw video files to Google Cloud Storage, triggering a Google Batch job to transcode the videos into various formats (MP4, WebM, etc.) using a custom containerized application. The transcoded videos are then stored back in Google Cloud Storage, ready for distribution.

This process, previously hampered by on-premise infrastructure limitations, now scales effortlessly with Google Batch, handling peak demands without performance degradation. The company reports significant improvements in processing speed and reduced operational costs.

Financial Institution Risk Assessment

A large financial institution leverages Google Batch for its nightly risk assessment process. This involves processing vast amounts of transactional data stored in BigQuery, running complex risk models using Apache Spark on Dataproc, and storing the results in a separate database. Google Batch orchestrates this entire pipeline, ensuring reliable and timely execution. The institution benefits from improved accuracy and reduced latency in its risk assessment, allowing for proactive risk management.

Furthermore, the automated nature of the process frees up data engineers to focus on other critical tasks.

Example Code Snippet (Python with the Google Cloud Batch API)

The following Python snippet demonstrates a simple job submission using the Google Cloud Batch API. This example showcases the basic structure for creating and submitting a batch job, highlighting the flexibility and ease of integration.

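```python
from google.cloud import batch_v1

# Authentication: the client picks up Application Default Credentials.
client = batch_v1.BatchServiceClient()

# A job holds task groups; each task runs the runnables in its task spec.
runnable = batch_v1.Runnable(script=batch_v1.Runnable.Script(text="my-command arg1 arg2"))
task_group = batch_v1.TaskGroup(task_count=1, task_spec=batch_v1.TaskSpec(runnables=[runnable]))
job = batch_v1.Job(task_groups=[task_group])

# The job ID is passed to create_job rather than set on the Job object.
client.create_job(parent="projects/my-project/locations/us-central1", job=job, job_id="my-batch-job")
```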

Comparison of Workload Automation Approaches

Implementing workload automation with Google Batch can be approached in various ways. One common method involves using Cloud Composer (Apache Airflow) to orchestrate the workflow and trigger Google Batch jobs. Another approach involves directly interacting with the Google Batch API from custom applications. The choice depends on factors like existing infrastructure, team expertise, and complexity of the workflow.

Using Cloud Composer provides a more managed and visually intuitive approach, while direct API interaction offers greater flexibility and control.

Benefits and Limitations of Google Batch for Workload Automation

Google Batch offers several advantages, including scalability, cost-effectiveness, and seamless integration with other GCP services. However, it’s crucial to acknowledge its limitations. For instance, Google Batch is primarily designed for batch processing and may not be ideal for real-time or interactive workloads. Moreover, understanding the intricacies of the Google Batch API and job configuration is essential for effective implementation.

Despite these limitations, the benefits of scalability, cost-efficiency, and integration with other GCP services often outweigh the drawbacks for many organizations.

Conclusion

So, there you have it – a comprehensive look at connecting to Google Batch for workload automation. From initial setup to advanced optimization techniques, we’ve covered the essentials to get you started. Remember, mastering Google Batch is a journey, not a sprint. Start small, experiment with different approaches, and don’t be afraid to ask for help! The possibilities are endless when it comes to automating your workloads, and Google Batch is a powerful tool to help you achieve your goals.

Happy automating!

Answers to Common Questions

What are the cost implications of using Google Batch?

Google Batch pricing is based on the resources consumed by your jobs, such as the Compute Engine VMs, disks, and storage they use; the Batch service itself adds no separate charge. It’s pay-as-you-go, so you only pay for what you use. Check Google Cloud’s pricing page for the most up-to-date information.

How do I troubleshoot common errors when submitting jobs to Google Batch?

Google Batch provides detailed error messages. Carefully examine these messages, paying attention to error codes and logs. Common issues include incorrect permissions, insufficient resources, and problems with your job configuration. Google Cloud’s documentation offers troubleshooting guidance.

Can I use Google Batch with other cloud platforms?

No, Google Batch is a Google Cloud Platform (GCP) service and is tightly integrated with other GCP services. It’s not designed for interoperability with other cloud providers.

What kind of security measures should I implement when using Google Batch?

Use service accounts with restricted permissions, implement IAM roles for fine-grained access control, and regularly review and update your security policies. Employ strong encryption for sensitive data both in transit and at rest.