Creating a File Dependency for a Job Stream Using Start Condition

Creating a file dependency for a job stream using a start condition is a powerful technique that lets you orchestrate complex workflows efficiently. Imagine a scenario where a data processing job needs to wait for a specific input file to be generated before it begins. This is where start conditions come in, ensuring that your job stream only kicks off when all its prerequisites are met.

We’ll explore how to set up this dependency, handle potential errors, and even boost the security of your file handling process. Get ready to level up your job stream management game!

This post will guide you through the entire process, from defining job streams and dependencies to implementing robust error handling and security measures. We’ll delve into practical examples and offer best practices for optimizing your workflows. Whether you’re a seasoned developer or just starting out, you’ll find valuable insights and practical advice to enhance your understanding and implementation of file dependencies in job streams.

Defining Job Streams and Dependencies

Job streams are the backbone of many data processing and ETL (Extract, Transform, Load) pipelines. They represent a sequence of jobs, each performing a specific task, working together to achieve a larger goal. Understanding how these jobs relate to each other, particularly through dependencies, is crucial for building robust and reliable systems. This post delves into the core concepts of job streams and the vital role of dependencies, focusing on file dependencies and the advantages of using start conditions.

Job streams consist of individual jobs, each with defined inputs and outputs. These jobs are executed in a predetermined order, often based on dependencies between them. A simple stream might involve extracting data from a database, transforming it, and then loading it into a data warehouse. More complex streams can involve dozens or even hundreds of interconnected jobs. The efficiency and reliability of the entire process hinge on the proper definition and management of these dependencies.

Job Stream Dependencies

Dependencies in job streams define the order in which jobs are executed. A job cannot start until its dependencies are met. There are several types of dependencies, including sequential dependencies (job B runs after job A completes), parallel dependencies (jobs B and C run concurrently after job A completes), and conditional dependencies (job B runs only if job A completes successfully).

File dependencies are a specific type of conditional dependency where a job’s execution is contingent on the existence or availability of a specific file.

File Dependencies in Job Streams

File dependencies are critical in scenarios where a job requires an input file generated by a previous job. For example, a job that processes sales data might depend on a file containing the raw sales figures generated by a data extraction job. Another example would be a reporting job that requires a pre-processed data file created by a data cleaning and transformation job.

Without the proper file dependency management, the downstream jobs would fail or produce incorrect results. Consider a scenario where a report generation job is scheduled to run at 8:00 AM, but the data processing job creating the input file completes at 8:15 AM. Without a file dependency, the report generation job would run prematurely using potentially incomplete or outdated data.

Advantages of Using Start Conditions for File-Based Dependencies

Start conditions provide a robust and efficient mechanism for managing file-based dependencies. Instead of relying on manual checks or time-based triggers, start conditions allow a job to automatically start only when a specified file exists and meets certain criteria (e.g., file size, modification time, specific content). This eliminates the risk of jobs running with incomplete or incorrect data, improving data quality and overall system reliability.

Start conditions also offer better control over job execution, allowing for more sophisticated workflows and automated error handling. For example, a start condition could be set to monitor for a file and only trigger the next job if the file size exceeds a certain threshold, indicating a complete data set. Another example could be setting a condition that only triggers the job if a specific pattern exists within the file, indicating the file has been properly processed.
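The two examples above (a size threshold and a content pattern) can be sketched in Bash as follows. This is a minimal sketch rather than the syntax of any particular scheduler: the function name `file_is_ready`, the minimum size, and the trailer pattern are assumptions chosen for illustration.

```shell
#!/bin/bash
# Hypothetical helper: succeeds only when the file exists, is at least
# min_bytes long, and contains a line matching the given pattern.
# Usage: file_is_ready FILE MIN_BYTES PATTERN
file_is_ready() {
    local file=$1
    local min_bytes=$2
    local pattern=$3
    local size

    [ -f "$file" ] || return 1

    # wc -c reports the size in bytes; the arithmetic expansion strips
    # any whitespace padding some implementations add
    size=$(( $(wc -c < "$file") ))
    [ "$size" -ge "$min_bytes" ] || return 1

    # -q: no output, exit status only; -e protects patterns starting with '-'
    grep -q -e "$pattern" "$file"
}
```

A start condition could then call something like `file_is_ready /path/to/my/file.txt 1024 '^END_OF_FILE$'`, where the 1024-byte threshold and the `END_OF_FILE` trailer line are placeholders for whatever your producing job actually writes.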

Implementing File Dependency using Start Conditions

Implementing file dependencies within job streams using start conditions offers a powerful way to orchestrate tasks, ensuring that data processing steps occur only after necessary input files are available and valid. This approach enhances the reliability and robustness of your data pipelines by preventing jobs from starting prematurely and potentially failing due to missing or corrupted data. This post will delve into the practical aspects of implementing this technique.

Creating File Dependencies with Start Conditions

This section details the process of creating a file dependency using a start condition, focusing on practical examples and robust error handling. We’ll explore different methods for checking file existence and validity, and discuss effective error handling and logging strategies.

| Step | Description | Code Snippet (Bash) | Notes |
|---|---|---|---|
| 1. Check File Existence | Verify that the dependent file exists before starting the job. | `if [ -f "/path/to/my/file.txt" ]; then echo "File exists"; else echo "File does not exist"; fi` | The `-f` test checks for a regular file. Replace `/path/to/my/file.txt` with the actual path. |
| 2. Check File Size | Ensure the file is not empty or unexpectedly small. | `filesize=$(stat -c%s "/path/to/my/file.txt"); if [ "$filesize" -gt 0 ]; then echo "File size is greater than 0"; else echo "File is empty or too small"; fi` | `stat -c%s` (GNU coreutils syntax) gets the file size in bytes. Adjust the threshold (0 in this case) as needed. |
| 3. Check File Integrity (Checksum) | Use a checksum (e.g., MD5, SHA) to verify file integrity. | `expected_checksum="a1b2c3d4e5f6..."; actual_checksum=$(md5sum "/path/to/my/file.txt" \| awk '{print $1}'); if [ "$expected_checksum" = "$actual_checksum" ]; then echo "Checksum matches"; else echo "Checksum mismatch"; fi` | Requires knowing the expected checksum beforehand. This is crucial for detecting silent data corruption. |
| 4. Combine Checks in Start Condition | Integrate the checks into a single start condition script. | `if [ -f "/path/to/my/file.txt" ] && [[ $(stat -c%s "/path/to/my/file.txt") -gt 0 ]] && [[ $(md5sum "/path/to/my/file.txt" \| awk '{print $1}') == "a1b2c3d4e5f6..." ]]; then echo "File is valid, proceeding..."; else echo "File dependency check failed!"; exit 1; fi` | This script combines all three checks. Start it with a `#!/bin/bash` line and put your job command in the success branch. Exit code 1 signals failure. |
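The same three checks can also be written as a reusable function, which is easier to read and to call from several jobs. The following is a sketch under assumptions: the function name `check_file_dependency` and its argument order are illustrative, and `-s` (file exists and is non-empty) is used in place of the `stat` size comparison.

```shell
#!/bin/bash
# Sketch of a combined start-condition check.
# Usage: check_file_dependency FILE EXPECTED_MD5
check_file_dependency() {
    local file=$1
    local expected_checksum=$2
    local actual_checksum

    # 1. The file must exist and be a regular file
    [ -f "$file" ] || { echo "Missing: $file" >&2; return 1; }

    # 2. The file must not be empty (-s is true when size > 0)
    [ -s "$file" ] || { echo "Empty: $file" >&2; return 1; }

    # 3. The MD5 checksum must match the expected value
    actual_checksum=$(md5sum "$file" | awk '{print $1}')
    if [ "$actual_checksum" != "$expected_checksum" ]; then
        echo "Checksum mismatch: $file" >&2
        return 1
    fi

    echo "File is valid, proceeding..."
}
```

A job script would call `check_file_dependency "/path/to/my/file.txt" "$expected_checksum" || exit 1` before its real work, so a failed dependency still surfaces as exit code 1.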

Handling File Dependency Errors

Robust error handling is crucial when dealing with file dependencies. A simple `if` statement checking for file existence isn’t always sufficient. A more comprehensive approach involves:

- Detailed Logging: Record all file checks, including results (success/failure) and timestamps. This aids in debugging and monitoring.

- Retry Mechanism: Implement retry logic with exponential backoff. This helps handle temporary file unavailability.

- Alerting: Send notifications (email, SMS) upon critical errors (e.g., persistent file absence or corruption).

- Graceful Degradation: Design the system to handle missing dependencies gracefully, perhaps by using default values or skipping the dependent job.
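The logging and retry points above might look like this in Bash. It is a minimal sketch: `wait_for_file`, its parameters, and the log line layout are assumptions, not part of any scheduler's API.

```shell
#!/bin/bash
# Hypothetical helper: poll for a file, doubling the wait after each miss.
# Usage: wait_for_file FILE MAX_ATTEMPTS INITIAL_DELAY_SECONDS
wait_for_file() {
    local file=$1
    local max_attempts=$2
    local delay=$3
    local attempt=1

    while [ "$attempt" -le "$max_attempts" ]; do
        if [ -f "$file" ]; then
            echo "$(date '+%Y-%m-%dT%H:%M:%S') INFO found $file on attempt $attempt" >&2
            return 0
        fi
        echo "$(date '+%Y-%m-%dT%H:%M:%S') WARNING $file missing (attempt $attempt of $max_attempts)" >&2

        # Sleep only if another attempt follows
        if [ "$attempt" -lt "$max_attempts" ]; then
            sleep "$delay"
            delay=$((delay * 2))   # exponential backoff
        fi
        attempt=$((attempt + 1))
    done

    # This is the point where an alert (email, SMS) would be raised
    echo "$(date '+%Y-%m-%dT%H:%M:%S') ERROR giving up on $file" >&2
    return 1
}
```

For example, `wait_for_file /path/to/my/file.txt 5 30` would check up to five times, waiting 30, 60, 120, and 240 seconds between attempts. Whether to exit or fall back to a default when it returns 1 is the graceful degradation decision.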

Comparing File Existence and Validity Checks

Several methods exist for checking file existence and validity within a start condition. The `-f` flag in Bash is a basic existence check. More advanced methods include using checksums (MD5, SHA) for integrity verification and comparing file sizes to detect unexpected changes. The choice of method depends on the criticality of data integrity and the acceptable level of overhead.

Checksums provide strong integrity guarantees but add computational cost. File size checks are faster but less reliable.

Error Handling and Logging Best Practices

Effective error handling and logging are essential for maintaining a reliable job stream. Logging should include timestamps, severity levels (e.g., DEBUG, INFO, WARNING, ERROR), detailed error messages, and potentially relevant file information (path, size, checksum). Structured logging formats (e.g., JSON) facilitate easier parsing and analysis of log data. Implementing a centralized logging system allows for aggregation and monitoring of errors across multiple job streams.
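As an illustration of structured logging, here is a small Bash sketch that writes one JSON object per log line. The function name `log_json` and the field names are assumptions, and the values are not JSON-escaped, so it only suits messages and paths without quotes or backslashes.

```shell
#!/bin/bash
# Hypothetical helper: emit one JSON object per log line.
# Usage: log_json LEVEL MESSAGE FILE_PATH
log_json() {
    local level=$1
    local message=$2
    local path=$3
    local size=0

    # Record the size only when the file is actually there
    if [ -f "$path" ]; then
        size=$(( $(wc -c < "$path") ))
    fi

    # Note: values are not JSON-escaped; this assumes they contain no
    # double quotes, backslashes, or control characters.
    printf '{"timestamp":"%s","level":"%s","message":"%s","path":"%s","size_bytes":%d}\n' \
        "$(date -u '+%Y-%m-%dT%H:%M:%SZ')" "$level" "$message" "$path" "$size"
}
```

Calling `log_json ERROR "dependency missing" /path/to/my/file.txt >> job_stream.log` appends a line that a log aggregator can parse without custom patterns; the log file name is a placeholder.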

Advanced File Dependency Management

Managing file dependencies in simple job streams is relatively straightforward. However, as job streams grow in complexity, involving numerous files and intricate conditions, the need for robust and efficient dependency management becomes paramount. This section delves into strategies for navigating this complexity, optimizing performance, and integrating dependency checks with monitoring systems.

Strategies for Managing Dependencies in Complex Job Streams

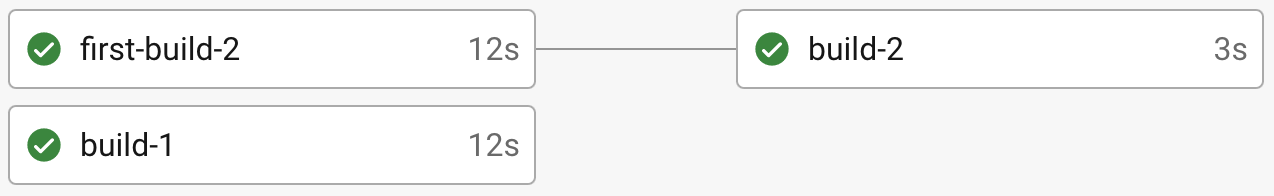

Complex job streams often involve multiple files, each with its own dependencies and conditions. A well-defined approach is crucial to prevent bottlenecks and ensure reliable execution. One effective strategy is to employ a directed acyclic graph (DAG) to visually represent the dependencies between jobs and files. This allows for clear identification of potential conflicts and simplifies the process of scheduling and monitoring the stream.

Another key aspect is the implementation of conditional logic. Jobs should only execute when all necessary files are present and meet specific criteria, such as file size, modification date, or content validation. This prevents premature execution and potential errors. Finally, employing modular design principles helps in breaking down large, complex streams into smaller, manageable units, each with its own set of dependencies. This improves maintainability and allows for easier troubleshooting.

Flowchart Illustrating Multiple File Dependencies and Start Conditions

Imagine a job stream processing customer data. The flowchart would start with a “Start” node. Branching from this would be three separate nodes representing the arrival of three files: “Customer_List.csv”, “Transaction_Data.csv”, and “Address_Data.csv”. Each of these files would have a “File Exists and Valid” decision diamond. If the file is valid, the path continues to the next processing stage. If invalid, an error path would be followed, potentially triggering an alert.

After the validation of all three files, a “Merge Data” job node would combine the data. Following this, “Data Cleaning” and “Data Analysis” jobs would run sequentially, each dependent on the output of the previous job. Finally, an “End” node signals the completion of the stream.

The entire flow can be visualized as a DAG where nodes represent jobs and edges represent dependencies. The decision diamonds represent the conditional checks on the input files.
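The “all three files are valid” gate in front of the merge step could be sketched like this. The function name `all_inputs_valid` is an assumption, and “valid” is reduced to “exists and is non-empty” to keep the example short.

```shell
#!/bin/bash
# Sketch: succeed only when every listed input file exists and is non-empty.
# Reports each failing file instead of stopping at the first one.
# Usage: all_inputs_valid FILE...
all_inputs_valid() {
    local status=0
    local file

    for file in "$@"; do
        if [ ! -s "$file" ]; then
            echo "invalid or missing: $file" >&2
            status=1
        fi
    done
    return "$status"
}
```

The merge job would then be guarded with `all_inputs_valid Customer_List.csv Transaction_Data.csv Address_Data.csv && merge_data`, where `merge_data` stands in for your own merge command. Checking every file before returning means a single run reports all missing inputs at once.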

Best Practices for Optimizing Performance and Resource Utilization

Optimizing performance and resource utilization in complex job streams is vital for efficient processing. One key strategy is to parallelize independent jobs wherever possible. For instance, in the customer data example, the validation of the three input files could occur concurrently, significantly reducing processing time. Another approach is to employ efficient file processing techniques. Using optimized libraries and minimizing I/O operations can drastically improve performance.

Furthermore, proper resource allocation is crucial. Setting appropriate memory limits for each job and utilizing load balancing techniques across multiple processing nodes can prevent resource exhaustion and ensure smooth operation. Regular monitoring of resource usage and identification of bottlenecks is also essential for proactive optimization.
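Concurrent validation of independent input files can be sketched in Bash with background jobs. Both function names are assumptions, and `validate_one` is a deliberately trivial stand-in for a real per-file check.

```shell
#!/bin/bash
# Stand-in for a real per-file check: file exists and is non-empty.
validate_one() {
    [ -s "$1" ]
}

# Sketch: validate several files concurrently, one background job per file.
# Usage: validate_in_parallel FILE...
validate_in_parallel() {
    local pids=""
    local file pid status=0

    for file in "$@"; do
        validate_one "$file" &
        pids="$pids $!"          # remember each background job's PID
    done

    # 'wait PID' returns that job's exit status, so no failure is lost
    for pid in $pids; do
        wait "$pid" || status=1
    done
    return "$status"
}
```

With a cheap check like this one the gain is negligible; the pattern pays off when each validation is slow, for example a checksum over a large file.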

Integrating File Dependency Checks with Monitoring and Alerting Systems

Integrating file dependency checks with monitoring and alerting systems is crucial for proactive issue management. The system should monitor the status of files and trigger alerts if dependencies are not met or if files fail validation. For instance, if “Customer_List.csv” is missing or corrupted, an immediate alert should be sent to the relevant team. This ensures timely intervention and minimizes the impact of potential issues.

This integration can be achieved through various monitoring tools and scripting techniques, allowing for real-time tracking of the job stream’s progress and immediate notification of any anomalies. Real-time dashboards visualizing the status of each job and file, coupled with automated alerts, are highly beneficial for proactive problem resolution.

Security Considerations for File Dependencies

File dependencies, while crucial for orchestrating complex job streams, introduce significant security risks if not handled carefully. Unauthorized access, modification, or deletion of these files can disrupt operations, compromise data integrity, and even create vulnerabilities for malicious attacks. This section explores potential vulnerabilities and outlines strategies for securing file dependencies within your job stream architecture.

Potential Security Vulnerabilities

Several vulnerabilities can arise from insecure file dependency management. Unsecured file storage, for instance, could allow unauthorized users to read sensitive data contained within dependent files, such as configuration parameters, credentials, or proprietary algorithms. Similarly, a lack of access control can permit unauthorized modification of these files, potentially leading to corrupted job executions or even malicious code injection.

Furthermore, insufficient auditing mechanisms can hinder the ability to track file access and modifications, making it difficult to identify and respond to security incidents. Finally, poorly implemented file transfer mechanisms could expose files to interception or manipulation during transit.

Secure File Access Control Mechanisms

Implementing robust access control is paramount. This involves using operating system features like permissions and access control lists (ACLs) to restrict file access based on user roles and responsibilities. For example, only authorized users should have read and write access to files containing sensitive information, while others might only have read-only access. Employing role-based access control (RBAC) further enhances security by assigning permissions based on user roles rather than individual identities, simplifying management and improving security posture.

In addition to operating system level controls, consider leveraging encryption for files at rest and in transit, using strong encryption algorithms and key management systems to ensure confidentiality and integrity.
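One small, concrete piece of this is to have the start condition refuse a dependency file that any user on the system could have modified. The sketch below assumes a Unix-like system; the function name `is_safely_permissioned` is illustrative.

```shell
#!/bin/bash
# Sketch: refuse to trust a dependency file that any user can modify.
# Returns 0 when the file exists and is NOT world-writable.
is_safely_permissioned() {
    local file=$1

    [ -f "$file" ] || return 1

    # find prints the path only if the "other: write" bit (002) is set
    if [ -n "$(find "$file" -prune -perm -002 -print)" ]; then
        echo "world-writable, refusing: $file" >&2
        return 1
    fi
    return 0
}
```

The producing job can tighten permissions when it writes the file, for example `chmod 640 /path/to/my/file.txt` (owner read/write, group read-only, no access for others).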

Secure File Handling Practices

Secure file handling practices are critical for mitigating risks. All files should be validated before processing to prevent malicious code execution. This includes checking file signatures and employing anti-virus scanning. Regularly backing up dependent files is crucial to ensure business continuity in case of accidental deletion or corruption. Furthermore, implementing version control helps track changes and revert to previous versions if needed.

A well-defined file naming convention can also enhance organization and simplify security management. Finally, rigorous testing of file handling processes before deployment into production is essential to identify and address potential vulnerabilities early on.

Best Practices for Securing File Dependencies in Production

A robust security posture requires a multi-layered approach. Here are some best practices:

- Implement least privilege access control: Grant only the necessary permissions to users and processes.

- Encrypt sensitive data both at rest and in transit: Use strong encryption algorithms and key management systems.

- Regularly audit file access and modifications: Track who accessed and modified which files, when, and for what purpose.

- Validate all files before processing: Check file signatures and use anti-virus scanning to prevent malicious code execution.

- Implement robust version control: Track changes and allow rollback to previous versions if necessary.

- Use secure file transfer protocols: Employ protocols like SFTP or FTPS instead of insecure options like FTP.

- Regularly back up dependent files: Maintain backups to ensure business continuity in case of data loss.

- Employ a secure file storage solution: Consider using cloud storage services with robust security features or dedicated secure file servers.

- Integrate security scanning into the CI/CD pipeline: Automate security checks during the software development lifecycle.

- Follow a strong password policy: Enforce strong passwords for all users with access to dependent files.

Real-world Examples and Case Studies

File dependencies in job streams are far from theoretical; they’re the backbone of many critical data processing pipelines. Understanding how they function and how to manage them effectively is crucial for building robust and reliable systems. This section will explore real-world scenarios where file dependencies are paramount, and demonstrate the advantages of using start conditions for managing them.

Real-world Scenario: Nightly Financial Reporting

Imagine a large financial institution generating nightly reports. The process involves several steps: extracting data from various databases (Step 1), performing complex calculations and aggregations (Step 2), generating various reports in PDF and CSV formats (Step 3), and finally, archiving the reports and data (Step 4). Each step depends on the successful completion of the previous one, and the output files from one step serve as the input for the next.

The main challenge here is ensuring data integrity and timely report generation. A failure in any step could cascade, leading to missed deadlines and inaccurate reports. Solutions involve robust error handling, logging, and the implementation of file dependency checks using start conditions. This ensures that each job only starts when the necessary input files are available and the preceding jobs have completed successfully.

Hypothetical Case Study: E-commerce Order Processing

Let’s consider an e-commerce company processing customer orders. The job stream involves: receiving order data in JSON format (orders.json), validating the order data (validation_report.txt), processing payments (payment_confirmation.csv), updating inventory levels (inventory_update.log), and finally, generating shipping labels (shipping_labels.pdf). Each step generates an output file which acts as input for the subsequent step. Start conditions are implemented to ensure that:

- The payment processing job only starts after the order data validation is complete and the validation report (validation_report.txt) exists.

- The inventory update job only begins after successful payment processing, indicated by the presence of payment_confirmation.csv.

- The shipping label generation job only runs once the inventory has been updated, as indicated by the existence of inventory_update.log.

This system utilizes start conditions to trigger each job only when the necessary input files are available. If a problem occurs during validation, for instance, the payment processing and subsequent steps are prevented from starting, preventing errors from propagating through the entire system.
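The chain in this case study can be sketched with a single wrapper that runs a command only when the file from the previous step is present. The wrapper name `run_when_present` is an assumption; only the file names come from the scenario above.

```shell
#!/bin/bash
# Sketch: run a job only if the file produced by the previous step exists.
# Usage: run_when_present REQUIRED_FILE COMMAND [ARGS...]
run_when_present() {
    local required=$1
    shift

    if [ ! -f "$required" ]; then
        echo "skipping '$*': $required not found" >&2
        return 1
    fi

    # Run the job; its exit status becomes the wrapper's exit status
    "$@"
}
```

The stream then reads as `run_when_present validation_report.txt process_payments && run_when_present payment_confirmation.csv update_inventory && run_when_present inventory_update.log generate_shipping_labels`. The three job commands are hypothetical names. Because the steps are joined with `&&`, a missing file or a failed job stops everything after it.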

Performance and Reliability Improvements

Using start conditions for file dependencies improves both the performance and reliability of the job stream. Performance improves because each job starts as soon as its input is ready, rather than at a fixed time padded for the worst case, and no resources are spent on runs that would fail for lack of input. Reliability improves because the system proactively prevents errors from cascading down the processing pipeline. The system becomes more fault-tolerant, reducing the risk of data loss or incomplete processing.

Early detection of failures in one stage prevents downstream issues, minimizing downtime and ensuring consistent, accurate results. The system’s overall efficiency is improved as resources are utilized more effectively, focusing only on tasks with readily available input.

Summary

Mastering the art of creating file dependencies using start conditions significantly improves the reliability and efficiency of your job streams. By implementing the strategies and best practices discussed here, you can avoid common pitfalls, enhance security, and create robust, scalable workflows. Remember to always prioritize error handling and security, and consider the specific needs of your application when choosing your implementation strategy.

Happy coding!

Popular Questions

What happens if the dependent file is modified after the job stream starts?

The behavior depends on your implementation. If the job stream doesn’t continuously check for file changes, it will continue using the initial version. For dynamic updates, you’ll need to incorporate mechanisms for monitoring file changes and triggering actions accordingly.
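One simple way to notice such a change is to take a fingerprint of the file when the start condition fires and compare it again before the file is used. This is a sketch with assumed function names; it uses the POSIX `cksum` utility, which detects accidental changes but is not a defense against deliberate tampering.

```shell
#!/bin/bash
# Sketch: detect whether a file changed between two points in a job.

# Print a fingerprint made of the file's CRC and byte count.
snapshot() {
    # Reading from stdin makes cksum print just "<crc> <bytes>"
    cksum < "$1" | awk '{print $1 ":" $2}'
}

# Usage: unchanged_since FILE EARLIER_SNAPSHOT
unchanged_since() {
    local file=$1
    local earlier=$2
    [ "$(snapshot "$file")" = "$earlier" ]
}
```

A job would store `snap=$(snapshot /path/to/my/file.txt)` at start and later run `unchanged_since /path/to/my/file.txt "$snap" || exit 1` before relying on the contents.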

Can I use start conditions with multiple dependent files?

Yes, you can define start conditions that check for the existence or validity of multiple files. The specific logic will depend on your chosen programming language and workflow management system.

How do I handle very large dependent files?

For large files, verify a checksum rather than comparing the entire contents against a reference copy. Checksum tools such as `md5sum` read the file as a stream, so memory use stays small even though every byte is still read once. You could also use streaming techniques in the job itself to avoid loading the entire file into memory.

What are some common security vulnerabilities related to file dependencies?

Common vulnerabilities include unauthorized file access, modification of dependent files, and injection attacks. Robust access control mechanisms and input validation are crucial for mitigating these risks.