The Future of Cloud E-commerce: An Integrated and Composable Approach

An integrated and composable approach to cloud e-commerce is no longer a futuristic fantasy; it’s the present reality, rapidly reshaping online retail. Imagine a system where every element, from payment processing to inventory management, works seamlessly together, yet remains flexible enough to adapt to evolving customer needs and emerging technologies. This is the power of a composable architecture, and it’s changing how businesses build, scale, and manage their online stores.

This shift away from monolithic systems allows for unprecedented agility and customization. Businesses can pick and choose the best-in-class components to create a unique e-commerce experience tailored to their specific needs, fostering innovation and rapid response to market changes. We’ll explore the key components of this integrated and composable approach, the security considerations, and the exciting role of emerging technologies like AI and blockchain in driving this evolution.

Defining the Integrated and Composable Cloud E-commerce Landscape

The modern e-commerce landscape is rapidly evolving, driven by the need for greater agility, scalability, and personalization. This shift is leading businesses towards integrated and composable cloud e-commerce architectures, offering significant advantages over traditional monolithic systems. Understanding the key characteristics and benefits of these approaches is crucial for businesses aiming to thrive in the competitive digital marketplace.

Integrated Cloud E-commerce Platforms: Key Characteristics

An integrated cloud e-commerce platform seamlessly connects various business functions and systems into a unified whole. This integration typically includes inventory management, order processing, customer relationship management (CRM), marketing automation, and payment gateways. Key characteristics include a centralized data repository, standardized processes, and a single, unified user interface. This approach simplifies operations, improves data visibility, and reduces the risk of data silos.

A well-integrated platform enables a more streamlined customer experience and efficient internal operations.

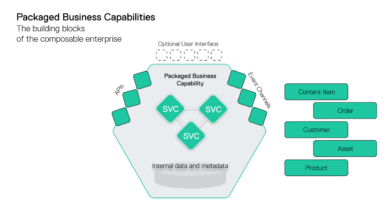

Composable Cloud E-commerce Architecture: Benefits

The composable approach emphasizes modularity and flexibility. Instead of a single, monolithic platform, a composable architecture utilizes independent, best-of-breed components that can be selected, integrated, and replaced as needed. This modularity offers several key benefits: increased agility, allowing for faster adaptation to changing market demands; improved scalability, enabling businesses to easily scale individual components to meet specific needs; enhanced customization, allowing for tailored solutions that precisely meet business requirements; and reduced vendor lock-in, giving businesses greater control over their technology stack.

Platforms such as Shopify Plus, with its app ecosystem, illustrate part of this approach: merchants extend a core platform with independently chosen components.
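To make "selected, integrated, and replaced as needed" concrete, here is a minimal sketch in Python. It assumes a hypothetical `PaymentProvider` contract; `FakeCardGateway`, `FakeWalletGateway`, and `Checkout` are illustrative names, not any real vendor's API.

```python
from dataclasses import dataclass
from typing import Protocol


class PaymentProvider(Protocol):
    """Hypothetical contract that every payment component must satisfy."""

    def charge(self, order_id: str, amount_cents: int) -> str:
        """Charge the order and return a provider-specific reference."""
        ...


class FakeCardGateway:
    """Illustrative stand-in for a card payment vendor."""

    def charge(self, order_id: str, amount_cents: int) -> str:
        return f"card:{order_id}:{amount_cents}"


class FakeWalletGateway:
    """Illustrative stand-in for a mobile wallet vendor."""

    def charge(self, order_id: str, amount_cents: int) -> str:
        return f"wallet:{order_id}:{amount_cents}"


@dataclass
class Checkout:
    # The checkout depends only on the contract, not on a concrete vendor.
    payments: PaymentProvider

    def place_order(self, order_id: str, amount_cents: int) -> str:
        if amount_cents <= 0:
            raise ValueError("amount must be positive")
        return self.payments.charge(order_id, amount_cents)


checkout = Checkout(payments=FakeCardGateway())
card_ref = checkout.place_order("A100", 2599)

# Swapping the component requires no change to the checkout logic.
checkout.payments = FakeWalletGateway()
wallet_ref = checkout.place_order("A101", 1200)
```

Because `Checkout` knows only the contract, replacing one payment component with another is a one-line change, which is the practical meaning of reduced vendor lock-in.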

Monolithic versus Microservices-Based E-commerce Solutions

Monolithic e-commerce solutions consist of a single, tightly coupled application. While simpler to deploy initially, they are difficult to scale and update. Microservices, on the other hand, break down the application into smaller, independent services that communicate with each other. This allows for independent scaling, updates, and deployment of individual services, leading to greater agility and resilience.

Amazon, with its vast microservices architecture powering its e-commerce platform, is a prime example of the success of this approach. The key difference lies in flexibility and scalability; microservices offer far greater adaptability to changing needs and growth.

Technological Components of a Modern, Integrated, and Composable E-commerce System

A modern e-commerce system requires a sophisticated interplay of various technological components. The following table outlines key components, their descriptions, benefits, and potential challenges:

| Component | Description | Benefits | Potential Challenges |
|---|---|---|---|
| Headless CMS | Decouples the content management system from the front-end presentation layer. | Increased flexibility in content delivery across multiple channels; enhanced content personalization. | Increased complexity in development and integration; potential for inconsistencies across channels if not managed carefully. |
| API Gateway | Acts as a central point of access for all APIs within the e-commerce system. | Improved security; simplified integration with third-party services; enhanced scalability. | Increased complexity in managing the API gateway itself; potential performance bottlenecks if not properly configured. |
| Microservices Architecture | Breaks down the application into smaller, independent services. | Increased agility; improved scalability; easier maintenance and updates. | Increased complexity in managing and coordinating multiple services; potential for inter-service communication issues. |
| Cloud Infrastructure (e.g., AWS, Azure, GCP) | Provides the underlying infrastructure for hosting the e-commerce system. | Scalability, reliability, and cost-effectiveness. | Vendor lock-in; potential security concerns if not properly managed. |
| Customer Data Platform (CDP) | Unifies customer data from various sources to create a single, unified view of the customer. | Improved customer segmentation; enhanced personalization; increased marketing effectiveness. | Data privacy concerns; complexity in integrating data from various sources. |
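As an illustration of the API gateway row, the sketch below shows the "central point of access" idea: authenticate once at the edge, then forward by path prefix. The service functions, the route table, and the `demo-key` check are all hypothetical placeholders; a production gateway would be a dedicated product or managed service rather than a few functions.

```python
from typing import Callable, Dict, Optional, Tuple

Handler = Callable[[str], str]


# Hypothetical backend services; in practice these are separate deployments.
def catalog_service(path: str) -> str:
    return f"catalog handled {path}"


def cart_service(path: str) -> str:
    return f"cart handled {path}"


ROUTES: Dict[str, Handler] = {
    "/catalog": catalog_service,
    "/cart": cart_service,
}


def resolve(path: str) -> Optional[Handler]:
    """Return the handler registered for the longest matching path prefix."""
    matches = [p for p in ROUTES if path == p or path.startswith(p + "/")]
    if not matches:
        return None
    return ROUTES[max(matches, key=len)]


def gateway(path: str, api_key: Optional[str]) -> Tuple[int, str]:
    """Check credentials once at the edge, then forward to a service."""
    if api_key != "demo-key":  # placeholder check, not real authentication
        return 401, "unauthorized"
    handler = resolve(path)
    if handler is None:
        return 404, "no route"
    return 200, handler(path)
```

Calling `gateway("/cart/items", "demo-key")` forwards to the cart service, while a missing key is rejected before any backend is reached. Centralizing that check is where the security benefit in the table comes from, and it is also why a misconfigured gateway can become a bottleneck.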

Security and Scalability in the Future of Cloud E-commerce

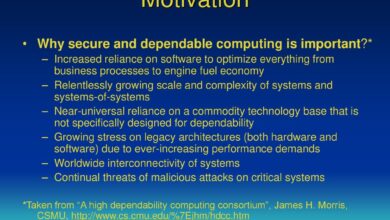

The rapid growth of cloud-based e-commerce presents both incredible opportunities and significant challenges. Successfully navigating this landscape requires a robust strategy that prioritizes both security and scalability. Failing to address these critical aspects can lead to devastating financial losses, reputational damage, and legal repercussions. This section explores the evolving threats, mitigation strategies, and architectural designs crucial for a thriving future in cloud e-commerce.

Evolving Security Threats in Cloud E-commerce

Cloud-based e-commerce faces a constantly shifting threat landscape. Traditional threats like SQL injection and cross-site scripting (XSS) remain relevant, but new challenges emerge with the increasing sophistication of cyberattacks. These include sophisticated phishing campaigns targeting employees and customers, API vulnerabilities exploited for data breaches, and the rise of ransomware attacks that can cripple operations. The distributed nature of composable architectures, while offering flexibility, also introduces complexities in securing the interconnected components.

Furthermore, the increasing reliance on third-party services expands the attack surface, demanding meticulous vetting and continuous monitoring of vendor security practices. The sheer volume of data handled by e-commerce platforms makes them attractive targets for data theft and misuse, necessitating robust data loss prevention (DLP) measures.

Data Security and Privacy in a Composable Architecture

Securing data in a composable architecture requires a layered approach. Each microservice or component should implement its own security measures, such as input validation, output encoding, and access control lists (ACLs). Data encryption, both in transit and at rest, is paramount. Employing a zero-trust security model, where every request is verified regardless of origin, is essential. Regular security audits and penetration testing are crucial for identifying vulnerabilities before attackers can exploit them.

Compliance with relevant data privacy regulations, such as GDPR and CCPA, is not just a legal requirement but a fundamental aspect of building trust with customers. Implementing robust logging and monitoring systems enables swift detection and response to security incidents. Furthermore, the use of strong authentication mechanisms, such as multi-factor authentication (MFA), is critical to prevent unauthorized access.
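One way to picture "every request is verified regardless of origin" is message signing between services. The sketch below uses Python's standard `hmac` module; the shared secret, the message layout, and the function names `sign` and `verify` are assumptions made for illustration. Real deployments more often rely on mutual TLS or signed tokens, and would add a timestamp or nonce to resist replay.

```python
import hashlib
import hmac


def sign(secret: bytes, method: str, path: str, body: bytes) -> str:
    """Compute an HMAC-SHA256 signature over the request's key fields."""
    message = method.encode("utf-8") + b"\n" + path.encode("utf-8") + b"\n" + body
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def verify(secret: bytes, method: str, path: str, body: bytes, signature: str) -> bool:
    """Recompute the signature and compare it in constant time."""
    expected = sign(secret, method, path, body)
    return hmac.compare_digest(expected, signature)


# Hypothetical secret shared by the order and inventory services.
SECRET = b"example-shared-secret"

signature = sign(SECRET, "POST", "/inventory/reserve", b'{"sku": "BOOT-1", "qty": 2}')
accepted = verify(SECRET, "POST", "/inventory/reserve", b'{"sku": "BOOT-1", "qty": 2}', signature)
tampered = verify(SECRET, "POST", "/inventory/reserve", b'{"sku": "BOOT-1", "qty": 200}', signature)
```

Here `accepted` is `True` and `tampered` is `False`: the receiving service rejects a request whose body was altered in transit, even if it arrives from inside the network.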

Scalable Cloud Infrastructure for Peak Demand

Designing a scalable cloud infrastructure capable of handling peak demand requires careful planning and the use of appropriate technologies. A key component is the use of auto-scaling features offered by cloud providers. This allows the system to automatically adjust its capacity based on real-time demand, ensuring optimal performance even during promotional events or sudden traffic spikes. Employing a content delivery network (CDN) to distribute content closer to users significantly reduces latency and improves performance.
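The core of auto-scaling can be sketched as a proportional rule: scale the instance count by the ratio of observed load to target load, then clamp the result. This is the same shape as the formula documented for the Kubernetes Horizontal Pod Autoscaler; the function name, parameters, and limits below are illustrative assumptions, and cloud providers layer cooldowns and smoothing on top.

```python
import math


def desired_instances(
    current: int,
    observed_load: float,
    target_load: float,
    min_instances: int = 1,
    max_instances: int = 20,
) -> int:
    """Return the instance count that would bring load per instance to target.

    observed_load and target_load use the same unit, for example average
    CPU percent or requests per second per instance.
    """
    if current < 1 or target_load <= 0:
        raise ValueError("current must be >= 1 and target_load must be > 0")
    raw = math.ceil(current * observed_load / target_load)
    return max(min_instances, min(max_instances, raw))


# Four instances at 90% average CPU with a 60% target: scale out.
scale_out = desired_instances(4, 90.0, 60.0)

# Traffic drops to 15% average CPU: scale in, but never below the floor.
scale_in = desired_instances(4, 15.0, 60.0, min_instances=2)
```

With these inputs `scale_out` is 6 and `scale_in` is 2. The floor and ceiling matter in practice: the floor keeps headroom for sudden spikes, and the ceiling caps spend during a runaway event.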

Database optimization, including techniques like sharding and caching, is essential for handling large volumes of data efficiently. Load balancing distributes traffic across multiple servers, preventing any single server from becoming overloaded. Microservices architecture allows for independent scaling of individual components, optimizing resource utilization.
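Sharding and caching are easier to reason about with a small example. The sketch below assumes simple modulo sharding on a hashed key and an in-memory read-through cache; `shard_for` and `ReadThroughCache` are hypothetical names, and a real system would use a managed cache and handle eviction and expiry.

```python
import hashlib
from typing import Callable, Dict


def shard_for(key: str, shard_count: int) -> int:
    """Map a key to a shard using a stable hash.

    hashlib is used instead of the built-in hash(), which is randomized
    per process for strings and so cannot be used for routing.
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


class ReadThroughCache:
    """Serve repeated reads from memory and hit the loader only on a miss."""

    def __init__(self, loader: Callable[[str], str]) -> None:
        self._loader = loader
        self._store: Dict[str, str] = {}
        self.misses = 0

    def get(self, key: str) -> str:
        if key not in self._store:
            self.misses += 1
            self._store[key] = self._loader(key)
        return self._store[key]


# Hypothetical loader standing in for a database query.
cache = ReadThroughCache(loader=lambda sku: f"details for {sku}")
first = cache.get("BOOT-1")
second = cache.get("BOOT-1")  # served from memory, no second load
```

One design caveat: with modulo sharding, changing `shard_count` remaps most keys, which is why larger systems often move to consistent hashing.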

Scalable Cloud Infrastructure Diagram

The following is a text-based representation of a scalable cloud infrastructure:

```
+-----------------+
|  Load Balancer  |
+--------+--------+
         |
         |
+-----------------+     +-----------------+     +-----------------+
|   Web Servers   |-----|   Application   |-----|    Database     |
+--------+--------+     |     Servers     |     +--------+--------+
         |              +--------+--------+              |
         |                       |                       |
+-----------------+     +-----------------+     +-----------------+
|  Caching Layer  |-----|  Message Queue  |-----|  Data Storage   | (e.g., S3)
+--------+--------+     +--------+--------+     +--------+--------+
         |
         |
+-----------------+
|       CDN       |
+-----------------+
```

This diagram shows a load balancer distributing traffic across multiple web servers.

The application servers handle business logic, interacting with a message queue for asynchronous processing. A caching layer improves response times, and a database handles persistent data. A CDN distributes static content globally, and a data storage service like Amazon S3 provides scalable storage.

Cloud Deployment Models: Security and Scalability Comparison

Public clouds (e.g., AWS, Azure, GCP) offer high scalability and cost-effectiveness but require careful consideration of security responsibilities. Private clouds offer greater control over security but may be less scalable and more expensive. Hybrid clouds combine the benefits of both, allowing organizations to tailor their infrastructure to specific needs. For e-commerce, a hybrid approach often proves optimal, using a public cloud for scalable, elastic components and a private cloud for sensitive data and applications requiring tighter security controls.

The choice depends on the specific security requirements, budget, and technical expertise of the organization. For example, a company with highly sensitive customer financial data might opt for a hybrid approach, utilizing a private cloud for payment processing and a public cloud for less sensitive aspects like product catalogs.

The Role of Emerging Technologies

The future of cloud e-commerce isn’t just about better infrastructure; it’s about leveraging emerging technologies to create truly transformative shopping experiences. AI, blockchain, AR/VR, and serverless computing are no longer futuristic concepts – they’re actively shaping the landscape, offering unparalleled opportunities for growth and innovation for online retailers. Let’s delve into how these technologies are impacting the industry.

Artificial Intelligence and Personalized Shopping Experiences

AI is revolutionizing personalization in cloud e-commerce. By analyzing vast amounts of customer data – browsing history, purchase patterns, demographics, and even social media activity – AI algorithms can create highly targeted product recommendations, personalized offers, and customized marketing campaigns. This leads to increased customer engagement, higher conversion rates, and ultimately, improved sales. For example, Amazon’s recommendation engine, powered by sophisticated AI, is a prime example of this in action, consistently suggesting products relevant to individual users’ past purchases and browsing behavior.

This level of personalization fosters customer loyalty and drives repeat business. Furthermore, AI-powered chatbots provide instant customer support, answering questions and resolving issues efficiently, improving the overall shopping experience.

Blockchain Technology and Supply Chain Transparency

Blockchain’s inherent transparency and security features are proving invaluable in enhancing supply chain management for online retailers. By recording every step of a product’s journey – from origin to delivery – on a decentralized, immutable ledger, blockchain provides complete traceability. This increased transparency allows businesses to combat counterfeiting, improve product quality control, and build stronger trust with consumers. For instance, a luxury fashion brand could use blockchain to verify the authenticity of its products, ensuring customers are buying genuine items.

This added level of security not only protects the brand’s reputation but also enhances consumer confidence. Furthermore, improved traceability can streamline logistics and reduce delays, optimizing the entire supply chain.
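The "immutable ledger" property comes from chaining hashes: each record stores the hash of the one before it, so editing an earlier step invalidates everything after it. The sketch below shows only that tamper-evidence mechanism. It is not a blockchain (there is no decentralization or consensus), and the `Block` layout and event strings are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import List

GENESIS_HASH = "0" * 64


@dataclass(frozen=True)
class Block:
    index: int
    event: str
    prev_hash: str
    hash: str


def _digest(index: int, event: str, prev_hash: str) -> str:
    """Hash the block contents together with the previous block's hash."""
    payload = json.dumps(
        {"index": index, "event": event, "prev_hash": prev_hash}, sort_keys=True
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append(chain: List[Block], event: str) -> None:
    """Add a supply chain event that is linked to the previous block."""
    prev_hash = chain[-1].hash if chain else GENESIS_HASH
    index = len(chain)
    chain.append(Block(index, event, prev_hash, _digest(index, event, prev_hash)))


def is_valid(chain: List[Block]) -> bool:
    """Recompute every hash and link; any edited block breaks the chain."""
    prev_hash = GENESIS_HASH
    for position, block in enumerate(chain):
        if block.index != position or block.prev_hash != prev_hash:
            return False
        if block.hash != _digest(block.index, block.event, block.prev_hash):
            return False
        prev_hash = block.hash
    return True


ledger: List[Block] = []
for step in ["handbag produced", "shipped to warehouse", "delivered to customer"]:
    append(ledger, step)
valid_before = is_valid(ledger)

# Rewriting history without recomputing the hashes is detected.
ledger[1] = Block(1, "shipped from unknown source", ledger[1].prev_hash, ledger[1].hash)
valid_after = is_valid(ledger)
```

Here `valid_before` is `True` and `valid_after` is `False`. In a real blockchain, the additional guarantee is that no single party can quietly recompute the whole chain, because many independent nodes hold copies.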

Augmented Reality and Virtual Reality in the Customer Journey

AR and VR are transforming how customers interact with products and brands online. AR allows customers to “try before they buy” through virtual try-on features for clothing, makeup, and even furniture. Imagine trying on glasses virtually using your smartphone camera, or visualizing a new sofa in your living room before purchasing it. This significantly reduces the risk associated with online purchases and increases customer satisfaction.

VR, on the other hand, offers immersive shopping experiences, allowing customers to explore virtual stores and interact with products in a three-dimensional environment. This is particularly beneficial for businesses selling complex or experiential products. For example, a travel agency could use VR to showcase destinations to potential customers, creating a compelling and engaging experience.

Serverless Computing and Cloud E-commerce Agility

Serverless computing offers significant advantages for cloud e-commerce platforms. By abstracting away server management, it allows businesses to focus on building and deploying applications quickly and efficiently. This improved agility enables businesses to respond rapidly to changing market demands and customer needs. Moreover, serverless computing can be highly cost-effective for variable workloads, as businesses only pay for the compute time they consume.

This pay-as-you-go model is particularly attractive for businesses experiencing fluctuating traffic volumes, ensuring they only pay for the resources they actually need. For example, a rapidly growing e-commerce startup could leverage serverless functions to handle peak demand during sales events without the overhead of managing dedicated servers. This scalability and cost efficiency are crucial for maintaining competitiveness in the dynamic e-commerce market.
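A serverless function is typically a small, stateless handler that the platform invokes once per event. The sketch below follows the `handler(event, context)` shape used for Python functions on platforms such as AWS Lambda; the event fields (`body`, `items`, `unit_price_cents`, `quantity`) and the response layout are assumptions chosen for illustration.

```python
import json
from typing import Any, Dict


def handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    """Price a cart. Holds no state, so the platform can run many copies."""
    try:
        items = json.loads(event["body"])["items"]
        total = sum(
            int(item["unit_price_cents"]) * int(item["quantity"]) for item in items
        )
    except (KeyError, TypeError, ValueError):
        # Covers missing fields, wrong types, and malformed JSON.
        return {"statusCode": 400, "body": json.dumps({"error": "invalid cart"})}
    return {"statusCode": 200, "body": json.dumps({"total_cents": total})}


# Local invocation with a hypothetical event; no server process is involved.
sample_event = {
    "body": json.dumps(
        {
            "items": [
                {"unit_price_cents": 1000, "quantity": 2},
                {"unit_price_cents": 250, "quantity": 1},
            ]
        }
    )
}
response = handler(sample_event, None)
```

Because the function keeps nothing between calls, the platform is free to run zero copies when traffic is idle and many during a sales event, which is what makes the pay-per-use model work.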

Customer Experience and Personalization

In today’s competitive e-commerce landscape, delivering exceptional customer experiences is paramount. A composable cloud architecture offers unparalleled flexibility to personalize interactions and optimize every touchpoint, fostering loyalty and driving sales. This approach allows businesses to tailor their digital storefronts to individual customer needs and preferences, creating a truly unique and engaging shopping journey.

The ability to personalize the customer experience is no longer a luxury but a necessity. Customers expect seamless interactions across all channels, relevant product recommendations, and a shopping experience that feels tailored just for them. Cloud-based e-commerce platforms, particularly those built on a composable architecture, provide the scalability and flexibility to meet these rising expectations.

Customer Journey Map for a Composable Cloud E-commerce Platform

A customer journey map for a composable cloud e-commerce platform would illustrate a highly personalized and flexible experience. The key touchpoints would include: discovery (through targeted ads or organic search), browsing (personalized product recommendations and category navigation), product detail pages (high-quality images, detailed descriptions, and customer reviews), checkout (seamless and secure payment options), order tracking (real-time updates), post-purchase engagement (personalized follow-up emails and loyalty programs), and customer service (easy access to support channels).

The beauty of the composable approach lies in the ability to easily adjust and improve each of these touchpoints based on customer data and feedback. For instance, if a customer abandons their cart, a targeted email with a discount code could be automatically triggered. This level of personalization is only achievable with the agility and scalability provided by a composable cloud architecture.
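The abandoned-cart trigger described above boils down to a simple rule over cart state. This is a minimal sketch under stated assumptions: the `Cart` fields and the one-hour idle threshold are hypothetical, and sending the email is left to whichever messaging component the platform uses.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Iterable, List


@dataclass
class Cart:
    customer_email: str
    item_count: int
    last_activity: datetime
    checked_out: bool = False
    reminder_sent: bool = False


def carts_to_remind(
    carts: Iterable[Cart],
    now: datetime,
    idle_for: timedelta = timedelta(hours=1),
) -> List[Cart]:
    """Select non-empty, unpurchased carts that have been idle long enough."""
    return [
        cart
        for cart in carts
        if cart.item_count > 0
        and not cart.checked_out
        and not cart.reminder_sent
        and now - cart.last_activity >= idle_for
    ]


now = datetime(2024, 1, 1, 12, 0)
carts = [
    Cart("idle@example.com", 2, now - timedelta(hours=2)),
    Cart("active@example.com", 1, now - timedelta(minutes=10)),
    Cart("bought@example.com", 3, now - timedelta(hours=3), checked_out=True),
    Cart("empty@example.com", 0, now - timedelta(hours=3)),
]
due = [cart.customer_email for cart in carts_to_remind(carts, now)]
```

Only `idle@example.com` qualifies. Tracking `reminder_sent` prevents the same customer from being emailed on every run of the job.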

Examples of Personalized Recommendations and Targeted Marketing Strategies

Cloud-based systems allow for sophisticated personalization through data analysis and machine learning. For example, Amazon is known for item-based collaborative filtering, which recommends products that customers with similar purchases bought together. Netflix suggests shows based on a user’s viewing history and preferences. These systems analyze large volumes of behavioral data to predict what a customer is likely to want next. Beyond product recommendations, targeted marketing campaigns can be orchestrated based on customer segmentation, behavioral data, and real-time interactions.

A customer browsing hiking gear might receive targeted ads for hiking boots and backpacks on other websites through retargeting campaigns, while a customer who frequently purchases organic food might receive personalized offers and promotions for new organic products.
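The collaborative filtering idea mentioned above can be approximated with co-purchase counts: recommend the items that most often appear in the same baskets as the one being viewed. This is a deliberately simplified sketch, not the method any named retailer uses, and the basket data is made up for illustration.

```python
from collections import Counter, defaultdict
from itertools import permutations
from typing import Dict, Iterable, List, Set


def build_cooccurrence(baskets: Iterable[Set[str]]) -> Dict[str, Counter]:
    """Count how often each ordered pair of items shares a basket."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for basket in baskets:
        for item, other in permutations(basket, 2):
            counts[item][other] += 1
    return counts


def recommend(counts: Dict[str, Counter], item: str, k: int = 2) -> List[str]:
    """Return up to k items most often bought with the given item."""
    neighbours = counts.get(item, {})
    # Sort by count descending, then by name so ties are deterministic.
    ranked = sorted(neighbours.items(), key=lambda pair: (-pair[1], pair[0]))
    return [name for name, _ in ranked[:k]]


# Hypothetical purchase history.
baskets = [
    {"boots", "backpack"},
    {"boots", "backpack", "socks"},
    {"boots", "backpack"},
    {"tent", "backpack"},
]
counts = build_cooccurrence(baskets)
for_boots = recommend(counts, "boots")
```

For this data, `for_boots` is `["backpack", "socks"]`, since backpacks appear with boots three times and socks once. Production systems normalize for item popularity and work at far larger scale, but the underlying signal is the same.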

Omnichannel Integration for Seamless Customer Experience

Omnichannel integration is crucial for providing a cohesive customer experience. A composable architecture facilitates seamless transitions between different channels, such as a website, mobile app, social media, and physical stores. For example, a customer might start browsing products on a website, add items to their cart on their mobile app, and then complete the purchase in-store using a mobile wallet.

The entire process should feel seamless and integrated, regardless of the channel used. Data synchronization across all channels is critical for maintaining a consistent view of the customer and providing personalized experiences. A unified view of customer data allows for personalized offers and recommendations, regardless of where the customer interacts with the brand.
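Data synchronization across channels needs a rule for conflicting updates. The sketch below assumes a last-write-wins policy per SKU, with each channel emitting timestamped cart events; `CartEvent`, its fields, and the policy itself are illustrative assumptions, and other policies (such as merging quantities) are equally valid.

```python
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass(frozen=True)
class CartEvent:
    channel: str    # for example "web", "app", or "store"
    sku: str
    quantity: int   # 0 means the item was removed
    timestamp: int  # seconds on a shared clock (assumed to be comparable)


def unified_cart(events: Iterable[CartEvent]) -> Dict[str, int]:
    """Build one cart from all channels, keeping the newest update per SKU."""
    latest: Dict[str, CartEvent] = {}
    for event in events:
        current = latest.get(event.sku)
        if current is None or event.timestamp >= current.timestamp:
            latest[event.sku] = event
    return {sku: e.quantity for sku, e in latest.items() if e.quantity > 0}


events = [
    CartEvent("web", "boots", 1, 100),
    CartEvent("web", "tent", 1, 105),
    CartEvent("app", "socks", 2, 110),
    CartEvent("app", "boots", 2, 120),  # quantity changed later in the app
    CartEvent("app", "tent", 0, 125),   # removed later in the app
]
cart = unified_cart(events)
```

The resulting cart holds two pairs of boots and two pairs of socks, regardless of which channel the customer opens next. Because the function works from timestamps rather than arrival order, events can be processed out of sequence without changing the result.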

Best Practices for Optimizing Website Performance and User Experience

Optimizing website performance and user experience is vital for customer satisfaction and conversion rates. A well-optimized site offers fast loading times, intuitive navigation, and a visually appealing design, which leads to increased engagement and sales. The following best practices should be implemented:

- Optimize website speed: Utilize content delivery networks (CDNs) to serve content from servers closer to users, minimizing latency. Compress images and optimize code to reduce page load times.

- Ensure mobile responsiveness: Design a website that adapts seamlessly to different screen sizes and devices. This ensures a consistent and enjoyable experience across all platforms.

- Implement intuitive navigation: Organize website content logically and provide clear navigation menus and search functionality. Make it easy for customers to find what they are looking for.

- Provide high-quality product images and videos: Showcase products with professional, high-resolution images and videos to enhance the shopping experience.

- Offer personalized recommendations: Use data-driven insights to suggest relevant products based on customer browsing history and preferences.

- Integrate customer reviews and ratings: Build trust and credibility by displaying customer reviews and ratings prominently on product pages.

- Provide excellent customer service: Offer multiple channels for customer support, including live chat, email, and phone. Respond promptly and efficiently to customer inquiries.

- Regularly monitor website performance and user behavior: Use analytics tools to track website performance and identify areas for improvement. Gather customer feedback to understand their needs and expectations.

The Future of Cloud E-commerce Operations and Management

Managing a successful cloud-based e-commerce platform requires a sophisticated approach to operations and management. Gone are the days of static infrastructure; today’s e-commerce demands agility, scalability, and resilience. This necessitates a shift towards automated processes, robust monitoring, and proactive strategies for maintaining business continuity. The following sections delve into the key aspects of future-proof e-commerce operations.

DevOps and CI/CD Pipelines in Cloud E-commerce

DevOps and Continuous Integration/Continuous Delivery (CI/CD) pipelines are essential for streamlining the development, testing, and deployment of cloud-based e-commerce applications. DevOps fosters collaboration between development and operations teams, enabling faster release cycles and improved application quality. CI/CD automates the build, test, and deployment process, minimizing manual intervention and reducing the risk of errors. For example, a company like Amazon utilizes a highly sophisticated CI/CD pipeline to continuously update and improve its e-commerce platform, releasing new features and bug fixes rapidly and reliably.

This approach allows for quicker responses to market changes and customer demands. Implementing a robust CI/CD pipeline ensures that new features and bug fixes are deployed quickly and efficiently, minimizing downtime and maximizing customer satisfaction.

Monitoring and Analytics for Performance Optimization

Real-time monitoring and comprehensive analytics are crucial for identifying performance bottlenecks, security vulnerabilities, and other potential issues before they impact customers. Effective monitoring systems provide insights into various aspects of the e-commerce platform, including server load, network performance, application response times, and user behavior. Analytics tools help to analyze this data to identify trends, predict future performance, and optimize resource allocation.

For instance, a spike in error rates during a promotional campaign might indicate a need for additional server capacity or code optimization. By proactively addressing such issues, businesses can prevent service disruptions and ensure a smooth customer experience. Sophisticated analytics dashboards can provide a clear picture of key metrics, enabling data-driven decision-making and proactive problem-solving.
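Detecting a spike in error rates usually means tracking the rate over a recent window rather than over all time. Here is a minimal sketch using a fixed-size sliding window; the class name, window size, and threshold are hypothetical, and a real setup would delegate this to a monitoring service.

```python
from collections import deque
from typing import Deque


class ErrorRateMonitor:
    """Track the error rate over the most recent requests."""

    def __init__(self, window_size: int = 100, threshold: float = 0.05) -> None:
        self._window_size = window_size
        self._threshold = threshold
        # deque with maxlen drops the oldest outcome automatically.
        self._outcomes: Deque[bool] = deque(maxlen=window_size)

    def record(self, ok: bool) -> None:
        self._outcomes.append(ok)

    @property
    def error_rate(self) -> float:
        if not self._outcomes:
            return 0.0
        return self._outcomes.count(False) / len(self._outcomes)

    def should_alert(self) -> bool:
        """Alert only once the window is full and the rate exceeds the threshold."""
        window_full = len(self._outcomes) == self._window_size
        return window_full and self.error_rate > self._threshold


monitor = ErrorRateMonitor(window_size=10, threshold=0.2)
for ok in [True] * 8 + [False] * 2:
    monitor.record(ok)
alert_at_threshold = monitor.should_alert()  # rate is exactly 0.2, not above it

monitor.record(False)  # the oldest success falls out of the window
alert_after_spike = monitor.should_alert()
```

Waiting for a full window avoids alerting on the first failed request after a deploy, at the cost of a short detection delay.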

Ensuring Business Continuity and Disaster Recovery

Business continuity and disaster recovery (BCDR) are paramount for maintaining the availability and resilience of a cloud e-commerce platform. A robust BCDR strategy should include measures to prevent service disruptions caused by various events, such as natural disasters, cyberattacks, or hardware failures. Cloud providers offer various BCDR solutions, including automated failover mechanisms, data replication, and geographically distributed infrastructure.

A well-defined BCDR plan should outline procedures for restoring services quickly in the event of a disaster, minimizing downtime and data loss. For example, a multi-region deployment strategy, where data is replicated across multiple geographic locations, can ensure business continuity even if one region experiences an outage. This approach ensures high availability and reduces the risk of complete service disruption.
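The failover decision in a multi-region deployment can be reduced to "use the most preferred region that is currently healthy". The sketch below assumes health status comes from an external health check; the region names and the `pick_region` function are hypothetical, and managed DNS or load-balancing services normally make this decision in practice.

```python
from typing import Dict, List, Optional


def pick_region(preference: List[str], healthy: Dict[str, bool]) -> Optional[str]:
    """Return the first healthy region in preference order, or None."""
    for region in preference:
        # Regions with no health report are treated as unhealthy.
        if healthy.get(region, False):
            return region
    return None


# Hypothetical regions, ordered by preference.
preference = ["eu-west", "us-east", "ap-south"]

normal = pick_region(preference, {"eu-west": True, "us-east": True, "ap-south": True})
during_outage = pick_region(
    preference, {"eu-west": False, "us-east": True, "ap-south": True}
)
total_outage = pick_region(preference, {})
```

In normal operation traffic stays in `eu-west`; during the outage it moves to `us-east`. Failover is only useful if the fallback region already holds replicated data, which is why replication and failover are planned together.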

Key Performance Indicators (KPIs) for Cloud E-commerce Success

Regularly tracking key performance indicators (KPIs) is essential for evaluating the success and effectiveness of a cloud e-commerce platform. These metrics provide valuable insights into various aspects of the platform’s performance and allow for data-driven optimization.

- Website Load Time: Measures the time it takes for the website to load completely. A faster load time leads to improved user experience and higher conversion rates.

- Conversion Rate: Represents the percentage of website visitors who complete a desired action, such as making a purchase. A higher conversion rate indicates a more effective e-commerce platform.

- Average Order Value (AOV): Indicates the average amount spent per order. Increasing AOV can significantly boost revenue.

- Customer Acquisition Cost (CAC): Measures the cost of acquiring a new customer. Lowering CAC improves profitability.

- Customer Lifetime Value (CLTV): Represents the total revenue generated by a customer throughout their relationship with the business. Higher CLTV indicates customer loyalty and profitability.

- Uptime: Measures the percentage of time the e-commerce platform is available and operational. High uptime is crucial for ensuring customer satisfaction and minimizing revenue loss.

- Error Rate: Represents the percentage of transactions or requests that result in errors. A lower error rate indicates better system stability and reliability.
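Several of these KPIs are simple ratios, and writing them down removes ambiguity about what is being measured. The sketch below uses the definitions given in the list above; the function names and the sample figures are made up for illustration.

```python
def conversion_rate(orders: int, visitors: int) -> float:
    """Share of visitors who completed a purchase (0.0 to 1.0)."""
    return orders / visitors if visitors else 0.0


def average_order_value(revenue: float, orders: int) -> float:
    """Average amount spent per order."""
    return revenue / orders if orders else 0.0


def customer_acquisition_cost(marketing_spend: float, new_customers: int) -> float:
    """Cost of acquiring one new customer."""
    return marketing_spend / new_customers if new_customers else 0.0


def uptime_percent(total_minutes: int, downtime_minutes: int) -> float:
    """Percentage of the period in which the platform was available."""
    if total_minutes <= 0:
        raise ValueError("total_minutes must be positive")
    return 100.0 * (total_minutes - downtime_minutes) / total_minutes


def error_rate(failed_requests: int, total_requests: int) -> float:
    """Share of requests that ended in an error (0.0 to 1.0)."""
    return failed_requests / total_requests if total_requests else 0.0


# Hypothetical month: 2,000 visitors, 50 orders, 4,000 in revenue,
# 1,500 in marketing spend for 30 new customers, 432 minutes of downtime
# out of 43,200 (30 days), and 12 failed requests out of 6,000.
monthly = {
    "conversion_rate": conversion_rate(50, 2000),
    "aov": average_order_value(4000.0, 50),
    "cac": customer_acquisition_cost(1500.0, 30),
    "uptime_percent": uptime_percent(43200, 432),
    "error_rate": error_rate(12, 6000),
}
```

For these figures, the conversion rate is 2.5%, AOV is 80, CAC is 50, uptime is 99%, and the error rate is 0.2%. Computing them from the same raw counts each period keeps trends comparable over time.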

Outcome Summary

In conclusion, the future of cloud e-commerce hinges on embracing an integrated and composable approach. By strategically combining best-of-breed technologies, prioritizing security and scalability, and focusing on an exceptional customer experience, businesses can unlock a new level of agility, efficiency, and growth. This isn’t just about technology; it’s about creating a future where online shopping is personalized, secure, and truly delightful.

The journey towards this future is ongoing, but the rewards for those who embrace change are immense.

FAQ Guide

What are the biggest challenges in adopting a composable e-commerce architecture?

The biggest challenges include the complexity of integrating multiple systems, the need for skilled developers, and potentially higher initial costs.

How can I ensure my composable e-commerce platform remains secure?

Robust security requires a multi-layered approach, including strong authentication, encryption, regular security audits, and reputable vendors for each component.

What is the return on investment (ROI) for a composable approach?

ROI varies depending on the business, but potential benefits include increased agility, improved scalability, reduced costs in the long run, and enhanced customer experiences leading to higher conversion rates.

How does a composable approach differ from a monolithic approach?

A monolithic approach uses a single, integrated system. A composable approach uses independent, interchangeable modules, offering greater flexibility and scalability.