Data Security Concerns Drive Ban on China AI Training

Data security concerns have led US cloud companies to impose a ban on China AI training. This isn’t just another tech story; it’s a geopolitical earthquake rumbling beneath the surface of the digital world. The implications are vast, touching everything from US-China relations to the future of artificial intelligence itself. We’re talking about a significant shift in the global tech landscape, one driven by legitimate fears but fraught with complex consequences.

The ban, while seemingly focused on data security, highlights a deeper struggle for technological dominance and control over crucial data streams. It forces us to confront the thorny ethical questions surrounding AI development and the potential for misuse. Is this a necessary precaution, or a dangerous escalation of existing tensions? Let’s dive in.

Geopolitical Implications of the Ban

The recent ban imposed by cloud companies on the use of their services for AI training in China carries significant geopolitical weight, adding another layer to the already complex US-China relationship. This action isn’t simply a business decision; it’s a move with far-reaching consequences for technological competition, international trade, and the future trajectory of artificial intelligence development globally. The impact of this ban reverberates across multiple sectors and international relations.

It’s crucial to analyze its implications not just for the immediate players involved, but also for the broader geopolitical landscape.

US-China Technological Competition

The ban intensifies the existing technological rivalry between the US and China. The US views China’s rapid advancements in AI as a potential threat, both economically and strategically. Restricting access to powerful cloud computing resources, essential for training advanced AI models, aims to slow China’s progress and maintain a technological edge for American companies and the US military.

This move, however, risks escalating the existing tensions and prompting retaliatory measures from China, potentially leading to a further fragmentation of the global technology ecosystem.

Escalation of Trade Tensions

This ban has the potential to significantly escalate existing trade tensions between the US and China. China may view the ban as an unfair trade practice and could retaliate with tariffs, restrictions on American technology companies operating within China, or other punitive measures. This could further destabilize the global economy, particularly given the interconnectedness of the technology sector.

The situation mirrors past trade disputes, but with the added complexity of national security concerns woven into the fabric of the conflict. For example, the ongoing trade war between the US and China has already disrupted global supply chains and affected numerous industries. This new ban could exacerbate these existing disruptions.

Economic Consequences

The economic consequences are multifaceted. For cloud companies, the ban might represent a loss of revenue from the Chinese market, a significant loss considering the size and growth potential of the Chinese AI sector. Conversely, Chinese AI development will likely face significant setbacks. Access to advanced computing resources is crucial for training large language models and other sophisticated AI systems.

The ban could force Chinese companies to rely on less powerful domestic infrastructure, potentially hindering their progress and competitiveness in the global AI market. This could lead to a brain drain, as Chinese AI talent might seek opportunities elsewhere.

Comparison to Previous Technological Restrictions

This ban echoes previous instances of governments imposing technological restrictions, such as export controls on semiconductors or restrictions on the sale of certain software to specific countries. However, this ban is unique in its focus on a specific technology (AI) and its reliance on the cloud computing infrastructure, highlighting the increasing importance of cloud services in the development of cutting-edge technologies.

The COCOM (Coordinating Committee for Multilateral Export Controls) during the Cold War is a relevant historical example of a coordinated effort by Western nations to restrict the export of sensitive technologies to the Soviet bloc. This demonstrates a pattern of governments using technological restrictions as a tool in geopolitical competition.

Impact on Stakeholders

| Stakeholder | Short-Term Effects | Long-Term Effects | Potential Mitigation Strategies |
|---|---|---|---|
| Cloud Providers (e.g., AWS, Google, Microsoft) | Loss of revenue from Chinese market; potential reputational damage in China. | Potential shift in global market share; need to adapt to a more fragmented market. | Diversification of markets; investment in alternative technologies. |
| Chinese AI Companies | Reduced access to advanced computing resources; slower AI development. | Increased reliance on domestic infrastructure; potential loss of global competitiveness. | Investment in domestic cloud infrastructure; focus on niche AI applications. |
| Global AI Development | Slower overall progress in AI; potential for a more fragmented AI ecosystem. | Increased competition between different AI ecosystems; potential for technological divergence. | International collaboration on AI safety and ethics; promoting open-source AI development. |

Data Security Concerns Specific to Chinese AI Training

The recent ban on Chinese AI training by several cloud companies highlights significant data security risks. These concerns stem from a confluence of factors, including the structure of China’s data infrastructure, government regulations, and the history of data breaches involving Chinese technology firms. Understanding these vulnerabilities is crucial for mitigating the potential damage from future incidents.

Training sophisticated AI models requires massive datasets, often encompassing sensitive personal information, intellectual property, and national security data. The potential for this data to be accessed, misused, or stolen is exponentially amplified when training occurs within a geopolitical landscape where government oversight and data protection standards differ significantly from those in Western nations. This difference creates a heightened risk for both the companies providing the cloud services and the wider global community.

Vulnerabilities in Chinese Data Infrastructure

China’s data infrastructure, while rapidly advancing, presents unique vulnerabilities. Data privacy rules that are less stringent than the EU’s GDPR or the California Consumer Privacy Act (CCPA) create an environment where data protection may be weaker. Furthermore, the potential for government access to data stored within China, even within seemingly private cloud environments, presents a significant concern.

This access might not always be for malicious purposes but could still lead to unintentional data leaks or unauthorized use, especially considering the close relationship between the Chinese government and domestic technology companies. The lack of independent oversight and robust auditing mechanisms further exacerbates these vulnerabilities.

Examples of Past Data Breaches in Chinese Technology

Several high-profile data breaches have underscored the potential for significant data loss. While pinpointing the exact causes and attributing responsibility is often complex, these incidents illustrate the existing vulnerabilities. For example, the Yahoo breaches disclosed in 2016, which together affected billions of user accounts, show how state-linked attackers target large datasets, although Yahoo is a US company and US prosecutors attributed the 2014 intrusion to hackers working with Russian intelligence officers, not to China.

While not directly related to AI training, this illustrates the capability and intent of certain actors. Other less publicized incidents involving the compromise of sensitive data held by Chinese companies, although lacking public detail, further support the notion of existing vulnerabilities. These incidents underscore the need for heightened security protocols when dealing with data within the Chinese ecosystem.

Impact of Chinese Government Regulations on Data Handling

The Chinese government’s regulations on data handling, while aiming to improve data security and control, also contribute to the security concerns surrounding AI training in China. Regulations like the Cybersecurity Law and the Data Security Law, while intending to protect data, also grant the government broad access to data under certain circumstances. This potential for government access, combined with a lack of transparency in how this access is exercised, creates uncertainty and potential risks for companies storing and processing data for AI training within China.

The opacity of these regulations and their enforcement makes it difficult for foreign companies to assess and mitigate the associated risks effectively.

Hypothetical Data Breach Scenario

Imagine a multinational company training a large language model (LLM) on a dataset containing sensitive customer information, including personally identifiable information (PII), financial details, and medical records, hosted on a Chinese cloud provider. Due to a combination of insufficient security measures within the cloud infrastructure and potential government access, a sophisticated state-sponsored actor gains access to the dataset. This actor could use the data to create highly targeted phishing campaigns, conduct identity theft, or even develop sophisticated deepfakes for disinformation purposes.

The resulting damage could be catastrophic, affecting not only the company but also its customers and potentially causing significant geopolitical instability. The lack of transparent legal recourse and potential limitations on reporting the breach further complicate the situation.

Impact on Cloud Computing Industry Strategies

The ban on Chinese AI training by major cloud providers will fundamentally alter their global expansion strategies. The immediate impact is a significant shift in resource allocation and a reevaluation of market opportunities. This necessitates a more nuanced approach to geopolitical risk assessment and a deeper understanding of the regulatory landscapes in alternative locations. The ban forces cloud companies to re-strategize, moving beyond simply chasing the largest markets and instead focusing on regions with robust data privacy regulations and supportive governmental policies regarding AI development.

This requires a significant investment in infrastructure and personnel in these new target areas.

Reshaped Global Expansion Strategies

The ban will likely accelerate the diversification of cloud providers’ global footprint. Instead of focusing heavily on the Chinese market, we can expect to see increased investment in regions like Southeast Asia, India, and parts of Europe and Africa. This shift involves not just setting up data centers but also cultivating partnerships with local businesses and governments to navigate complex regulatory frameworks.

For example, Google might prioritize expansion in India given its large and growing tech sector, while AWS could focus on strengthening its presence in Europe, leveraging existing data center infrastructure and compliance expertise. This strategic realignment will involve detailed market analysis, identifying areas with high growth potential and favorable regulatory environments for AI development.

Comparative Approaches of Cloud Companies

Different cloud providers will adopt varying strategies. Some, like Microsoft, may choose a more cautious approach, prioritizing compliance and focusing on regions with well-established data privacy regulations. Others, such as Amazon, might take a more aggressive approach, investing heavily in infrastructure in alternative high-growth markets and accepting higher initial risk in return for potentially larger rewards. This divergence in strategy will likely lead to a more fragmented cloud computing landscape, with companies specializing in specific geographical regions and regulatory environments.

Increased Investment in Alternative AI Training Locations

The ban will likely lead to a surge in investment in AI training infrastructure outside of China. We can anticipate increased capital expenditure in data centers, high-performance computing resources, and skilled personnel in regions like Canada, Singapore, and the European Union. This investment will not only support the needs of cloud providers but also stimulate local AI ecosystems, fostering innovation and competition.

For example, the increased demand could trigger the construction of new hyperscale data centers in regions previously underserved, boosting local economies and creating new job opportunities.

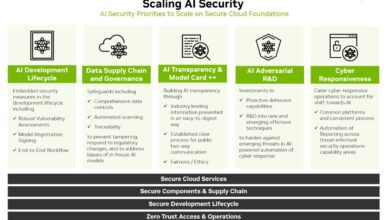

Innovation in Data Security Technologies

This situation will act as a powerful catalyst for innovation in data security technologies. Cloud providers will be compelled to develop more sophisticated tools and techniques to protect sensitive data, particularly those involved in AI training. This might involve advancements in encryption, data anonymization, and federated learning – techniques that allow for collaborative AI training without sharing raw data.

The increased scrutiny on data security will also lead to the development of more robust auditing and compliance mechanisms, ensuring adherence to evolving regulations across different jurisdictions.

Potential Adjustments to Data Governance Policies

Cloud companies will need to make several adjustments to their data governance policies to address the new reality.

- Strengthened data encryption protocols, particularly for data in transit and at rest.

- More rigorous access control mechanisms, limiting data access to authorized personnel and systems.

- Enhanced data anonymization and pseudonymization techniques to minimize the risk of data breaches.

- Implementation of more robust data loss prevention (DLP) measures.

- Development of clearer data residency policies, specifying where data can be stored and processed.

- Increased investment in security audits and compliance certifications.

- Establishment of clearer processes for handling data subject access requests.

- More transparent data governance frameworks, providing greater visibility into data handling practices.
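
Two of the adjustments above, pseudonymization and data residency rules, are simple enough to sketch in code. The following is a minimal illustration, not any provider's actual policy engine: the `RESIDENCY_POLICY` table, the region names, and both function names are hypothetical, and the demo key stands in for one that would normally come from a key management service.

```python
import hashlib
import hmac

# Hypothetical residency policy: data class -> regions where it may be stored or processed.
RESIDENCY_POLICY = {
    "customer_pii": {"eu-west", "eu-central"},
    "public_web_text": {"eu-west", "us-east", "ap-southeast"},
}


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed HMAC-SHA256 digest.

    The same input and key always give the same token, so records can still
    be joined. The digest is not directly reversible, but anyone holding the
    key can confirm guesses, so the key itself must be protected.
    """
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def residency_allows(data_class: str, region: str) -> bool:
    """Return True only if the policy explicitly lists the region for this data class."""
    return region in RESIDENCY_POLICY.get(data_class, set())


if __name__ == "__main__":
    key = b"demo-key-rotate-me"  # placeholder; a real key would come from a KMS
    token = pseudonymize("alice@example.com", key)
    print(token[:16], residency_allows("customer_pii", "us-east"))
```

The residency check denies by default: an unknown data class or an unlisted region is rejected, which matches the goal of specifying exactly where data can be stored and processed.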

Ethical and Societal Considerations

Restricting access to AI training resources based on geographical location raises complex ethical questions about fairness, equity, and the global advancement of knowledge. The potential ramifications extend far beyond the immediate impact on specific companies and industries, touching upon fundamental principles of scientific collaboration and global societal progress.

The decision to limit Chinese access to cloud-based AI training resources raises concerns about the fairness and equity of access to technological resources that are vital for scientific advancement and economic development. Such restrictions risk creating a two-tiered system, where certain nations enjoy privileged access to cutting-edge technologies while others are left behind, widening the existing technological and economic disparity between nations.

Exacerbation of Global AI Development Inequalities

The ban on Chinese AI training in certain cloud environments could significantly worsen existing inequalities in global AI development. Countries with less developed AI infrastructure and fewer resources might find themselves further disadvantaged, hindering their ability to compete in the rapidly evolving field of artificial intelligence. This disparity could lead to a concentration of AI expertise and innovation in a select few countries, potentially reinforcing existing power imbalances.

For example, consider the disparity between the advanced AI research capabilities of the US and the EU versus those in many African nations. This ban could widen this gap further. Access to large datasets and powerful computational resources is crucial for AI development, and restricting access to these resources disproportionately impacts nations with less developed technological infrastructure.

Consequences for Scientific Collaboration and Knowledge Sharing

Restricting access to AI training resources based on geography inhibits the free flow of information and the collaborative spirit essential to scientific progress. AI research is inherently global; breakthroughs often build upon previous work from researchers across the world. Limiting access to resources prevents the sharing of knowledge and the synergistic collaboration that fuels innovation. The lack of cross-border collaboration will likely slow the pace of AI advancement globally, as potential breakthroughs might be missed due to the isolated development of AI technology.

This echoes historical examples where scientific progress was hampered by limitations on information sharing, such as during the Cold War.

Comparison to Other Instances of Technological Restrictions Based on Ethical Concerns

The current situation shares similarities with past instances where technological restrictions were imposed due to ethical concerns. The control of nuclear technology and the debates surrounding the development and deployment of autonomous weapons systems offer relevant parallels. In these cases, the potential for misuse and catastrophic consequences led to international agreements and regulations aimed at limiting access and promoting responsible development.

However, unlike these examples which often involved weapons of mass destruction, the current situation focuses on the development of a potentially dual-use technology, AI. The ethical considerations are thus subtly different, focusing more on economic and societal disparities rather than immediate existential threats.

Impact on Global AI Research and Development

The potential impact on global AI research and development is substantial. Restricting access to powerful cloud computing resources limits the ability of researchers in affected regions to conduct large-scale experiments and develop advanced AI models. This could lead to a loss of potential advancements in various fields, from medicine and climate science to materials engineering and transportation. Imagine, for instance, the delay in developing a crucial medical diagnostic AI because a team of researchers in China lacked access to the necessary computing power.

The loss of potential advancements, caused by limiting access to AI training resources, will likely hinder the progress of solving complex global challenges. This could have far-reaching and potentially negative implications for society as a whole.

Alternative Approaches to Mitigating Risks

The complete ban on Chinese AI training by cloud companies, while seemingly a drastic solution to data security concerns, isn’t the only approach. A more nuanced strategy involves implementing robust security measures and fostering international collaboration to address the risks without sacrificing innovation and global participation in the AI field. This allows for a more measured response, balancing security with the benefits of technological advancement.

More effective mitigation strategies focus on strengthening existing security protocols and promoting transparency rather than outright prohibition. This approach recognizes the complexity of the issue and the need for a multi-faceted solution that addresses the root causes of data security vulnerabilities, rather than simply reacting to the symptoms.

Enhanced Data Encryption and Access Control Mechanisms

Implementing strong encryption protocols, both at rest and in transit, is paramount. This involves using the Advanced Encryption Standard (AES) with strong key management practices. Access control should be granular, employing role-based access control (RBAC) and least privilege principles to limit who can access sensitive data and AI models. Multi-factor authentication (MFA) should be mandatory for all users, further enhancing security.

For example, the adoption of homomorphic encryption, which allows computations on encrypted data without decryption, could significantly reduce the risk of data breaches during AI training, although it remains computationally expensive for large-scale workloads.
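
To make the access-control idea concrete, here is a minimal sketch of role-based access control with least privilege and a mandatory MFA check. The role names, permission strings, and the `User` and `is_allowed` names are all hypothetical; a real deployment would rely on the cloud provider's identity and access management service rather than an in-memory table.

```python
from dataclasses import dataclass

# Hypothetical role -> permission mapping; each role gets only what it needs.
ROLE_PERMISSIONS = {
    "data_engineer": {"dataset:read", "dataset:write"},
    "ml_researcher": {"dataset:read", "model:train"},
    "auditor": {"audit_log:read"},
}


@dataclass(frozen=True)
class User:
    name: str
    roles: frozenset
    mfa_verified: bool = False


def is_allowed(user: User, permission: str) -> bool:
    """Deny by default: require MFA and an explicit grant from one of the user's roles."""
    if not user.mfa_verified:
        return False
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in user.roles)


if __name__ == "__main__":
    researcher = User("dana", frozenset({"ml_researcher"}), mfa_verified=True)
    print(is_allowed(researcher, "model:train"))    # granted by the ml_researcher role
    print(is_allowed(researcher, "dataset:write"))  # not granted to this role
```

The design choice worth noting is the default: anything not explicitly granted is refused, and a user without a verified second factor is refused regardless of role.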

Best Practices for Secure Data Handling and AI Model Training

Secure data handling practices should include rigorous data sanitization before training, minimizing the risk of sensitive information leaking into the models. Regular security audits and penetration testing are crucial to identify vulnerabilities. Model versioning and rollback capabilities allow for quick responses to security incidents. Employing federated learning techniques, where models are trained on decentralized data without direct data sharing, can significantly reduce security risks.

For instance, a financial institution could use federated learning to train a fraud detection model across multiple branches without centralizing sensitive customer data.
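
The federated learning idea can be sketched with a toy example. The code below is an illustrative simplification, assuming a one-parameter linear model and three hypothetical branches; the function names and data are invented for the example. It shows the core of federated averaging: each client trains on its own data, and the server only ever sees the resulting weights.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (feature x, label y)


def local_update(weight: float, data: List[Sample], lr: float = 0.01, epochs: int = 5) -> float:
    """Run a few gradient-descent steps for the model y = weight * x on one client's data."""
    for _ in range(epochs):
        grad = sum(2 * (weight * x - y) * x for x, y in data) / len(data)
        weight -= lr * grad
    return weight


def federated_average(global_weight: float, clients: List[List[Sample]]) -> float:
    """One round of federated averaging: clients train locally, and the server
    combines only the returned weights, weighted by each client's sample count."""
    total = sum(len(data) for data in clients)
    return sum(local_update(global_weight, data) * len(data) for data in clients) / total


if __name__ == "__main__":
    # Three hypothetical branches, each holding private samples of y = 3x.
    branches = [
        [(1.0, 3.0), (2.0, 6.0)],
        [(3.0, 9.0)],
        [(0.5, 1.5), (1.5, 4.5), (2.5, 7.5)],
    ]
    w = 0.0
    for _ in range(50):
        w = federated_average(w, branches)
    print(round(w, 3))
```

Over the rounds, the shared weight converges toward the true slope of 3 even though no branch's raw samples leave the `local_update` call. Production systems typically add protections this sketch omits, such as secure aggregation or differential privacy, because model updates themselves can leak information.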

International Collaborations on Data Security Standards

International collaboration is essential to establishing globally recognized data security standards for AI. This requires coordinated efforts between governments, industry stakeholders, and research institutions to develop common guidelines and best practices. The creation of a global framework for data security certification could provide a benchmark for trustworthy AI development and deployment. An example of such a collaboration could involve the development of a shared international standard for AI model security audits, similar to existing standards in other industries.

Framework for a Secure and Transparent AI Training Environment

A robust framework should incorporate several key elements:

- A clear and concise data governance policy defining data access, usage, and disposal procedures.

- A comprehensive risk assessment and management plan to identify and mitigate potential threats proactively.

- A transparent and auditable training process, allowing for independent verification of the security measures employed.

- A robust incident response plan to handle data breaches or security incidents effectively.

- Continuous monitoring and improvement of security measures, adapting to evolving threats and vulnerabilities.

This framework should be implemented and regularly reviewed by an independent security oversight committee to ensure accountability and transparency.
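
One way to make a training process auditable is a tamper-evident log, where each entry's hash covers the previous entry. The sketch below is a minimal illustration under that assumption; the event fields and function names are hypothetical and not drawn from any specific compliance standard.

```python
import hashlib
import json
from typing import Dict, List

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, event: Dict) -> str:
    """Hash the event together with the previous entry's hash."""
    payload = json.dumps({"prev": prev_hash, "event": event}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(log: List[Dict], event: Dict) -> None:
    """Append an event whose hash covers both the event and the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    log.append({"event": event, "prev": prev_hash, "hash": _digest(prev_hash, event)})


def verify_log(log: List[Dict]) -> bool:
    """Recompute every hash in order; an edited or reordered entry breaks the chain."""
    prev_hash = GENESIS_HASH
    for entry in log:
        if entry["prev"] != prev_hash or entry["hash"] != _digest(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True


if __name__ == "__main__":
    log: List[Dict] = []
    append_entry(log, {"action": "dataset_loaded", "dataset": "demo"})
    append_entry(log, {"action": "training_started", "run": 1})
    print(verify_log(log))
```

An independent reviewer who holds the most recent hash can rerun `verify_log` to confirm that earlier entries were not altered. Note that the chain alone does not detect entries trimmed from the end, which is why the latest hash would need to be recorded somewhere outside the operator's control.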

Concluding Remarks

The ban on Chinese AI training by cloud companies, driven by legitimate data security concerns, is a watershed moment. It underscores the growing tension between technological advancement and geopolitical strategy. While the immediate impact is felt by cloud providers and Chinese AI developers, the long-term consequences will shape the global AI landscape, influencing everything from research collaborations to the very structure of the digital world.

The need for transparent, globally-accepted data security standards is clearer than ever. This isn’t just about security; it’s about the future of innovation.

FAQ

What specific data vulnerabilities are at play in China?

Concerns center around potential government access to data, lack of robust independent oversight, and the possibility of data being used for purposes beyond AI training, such as surveillance.

Will this ban stifle AI innovation globally?

Potentially. China is a major player in AI, and restricting access to resources could slow down overall progress. However, it might also spur innovation in alternative data security measures and training locations.

What alternatives exist to an outright ban?

Enhanced data encryption, stricter access controls, international collaboration on data security standards, and independent audits of Chinese data centers are all possibilities.

How might this impact smaller AI startups in China?

Smaller companies will likely be disproportionately affected, lacking the resources to easily relocate training or implement stringent security measures.