Artificial Intelligence: The Good, the Bad, and the Ugly

Artificial intelligence has a good side, a bad side, and an ugly side, and that is the complex reality we face. AI is rapidly transforming our world, offering incredible potential for progress in healthcare, accessibility, and the economy. But this powerful technology also presents significant ethical dilemmas, risks of bias, and the potential for misuse. This exploration delves into the multifaceted nature of AI, examining its benefits, drawbacks, and the downright unsettling possibilities that lie ahead.

We’ll navigate the thrilling advancements alongside the very real dangers, aiming for a balanced perspective on this game-changing force.

From life-saving medical applications and environmental conservation to the potential for job displacement, societal inequalities, and even autonomous weapons, we’ll unpack the intricate web of AI’s impact. We’ll consider the ethical responsibilities inherent in its development and deployment, exploring how we can harness its power for good while mitigating its inherent risks. Get ready for a deep dive into a future shaped by artificial intelligence – a future that’s both exhilarating and deeply concerning.

The Good of Artificial Intelligence

Artificial intelligence is rapidly transforming our world, and while concerns about its potential downsides are valid, it’s crucial to acknowledge the immense good AI is already doing and will continue to do. Its applications span numerous fields, offering solutions to complex problems and improving lives in profound ways. This section will explore some of AI’s most significant positive impacts.

AI’s Improvement of Healthcare

AI is revolutionizing healthcare through improved diagnostics, personalized treatments, and enhanced efficiency. The following table details some key applications:

| Application | Benefit | Example | Potential Challenges |
|---|---|---|---|
| Disease Diagnosis | Faster, more accurate diagnosis, leading to earlier intervention and better outcomes. | AI algorithms analyzing medical images (X-rays, CT scans) to detect cancerous tumors with higher accuracy than human radiologists in some cases. | Data bias, need for validation and regulatory approval, potential for misdiagnosis leading to incorrect treatment. |
| Drug Discovery and Development | Accelerated development of new drugs and therapies, reducing costs and time to market. | AI predicting the effectiveness of drug candidates based on molecular structures and biological data, significantly shortening the drug development process. | High computational costs, ethical considerations related to data privacy and intellectual property. |
| Personalized Medicine | Tailored treatment plans based on individual patient characteristics, improving treatment efficacy and reducing side effects. | AI algorithms analyzing patient data (genetics, lifestyle, medical history) to recommend personalized cancer treatment strategies. | Data privacy concerns, need for robust data security measures, potential for algorithmic bias leading to inequitable access to care. |

AI Enhancing Accessibility for People with Disabilities

AI offers powerful tools to enhance the independence and quality of life for individuals with disabilities, and AI-powered assistive technologies are making significant strides. One example is screen readers, which convert on-screen text into audio so that visually impaired individuals can access digital information. Newer screen readers add natural language processing and machine learning to improve accuracy and context understanding, including the interpretation of complex layouts and visual elements. They continue to evolve to better handle nuances of language and different document formats.

Another powerful application is AI-powered prosthetic limbs. These advanced prosthetics use machine learning algorithms to interpret muscle, nerve, or brain signals, allowing amputees to control the limb with greater precision and naturalness. This technology is continually improving, incorporating sensors and feedback mechanisms to create more intuitive and responsive devices with greater dexterity and control. For example, some advanced prosthetics can even sense temperature and pressure, providing a more natural feel and interaction with the environment.

AI’s Economic Benefits Across Industries

AI’s economic impact is substantial, driving productivity and innovation across various sectors.

In the manufacturing sector:

- Increased efficiency through automation of tasks such as quality control and predictive maintenance, leading to reduced production costs and improved product quality.

- Enhanced supply chain management through AI-powered forecasting and optimization, reducing waste and improving delivery times.

In the financial services sector:

- Improved fraud detection and prevention through AI algorithms that identify suspicious transactions in real-time.

- Personalized financial advice and investment strategies based on individual customer profiles and market conditions, leading to better financial outcomes.
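To make the fraud detection point concrete, here is a minimal sketch of one simple way to flag an unusual transaction: a modified z-score based on the median. It is only an illustration under stated assumptions, not how any particular bank's system works. The function name `flag_suspicious`, the sample amounts, and the 3.5 cutoff are all hypothetical choices, and real systems use far richer features than the amount alone.

```python
from statistics import median

def flag_suspicious(amounts, threshold=3.5):
    """Return the indices of amounts whose modified z-score exceeds threshold.

    The modified z-score uses the median and the median absolute deviation
    (MAD), so a single huge outlier does not distort the baseline.
    """
    med = median(amounts)
    mad = median(abs(a - med) for a in amounts)
    if mad == 0:
        # All amounts are (nearly) identical; nothing stands out.
        return []
    return [
        i for i, a in enumerate(amounts)
        if 0.6745 * abs(a - med) / mad > threshold
    ]

# Hypothetical card history: seven ordinary purchases and one large charge.
history = [42.0, 38.5, 51.0, 47.25, 40.0, 44.9, 39.9, 2500.0]
flagged = flag_suspicious(history)
print(flagged)
```

In this made-up history only the last transaction is flagged. A production system would score each transaction as it arrives and combine many signals (merchant, location, timing) rather than a single statistic.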

AI’s Positive Impact on Environmental Conservation

AI is playing an increasingly important role in environmental conservation efforts. For instance, AI-powered drones and satellite imagery analysis can monitor deforestation rates, track endangered species, and assess the impact of climate change on ecosystems with greater speed and accuracy than traditional methods. Furthermore, AI algorithms can optimize energy consumption in smart grids, reducing carbon emissions and promoting sustainable energy use.

AI is also being used to develop more efficient and sustainable agricultural practices, optimizing resource use and reducing the environmental footprint of food production.

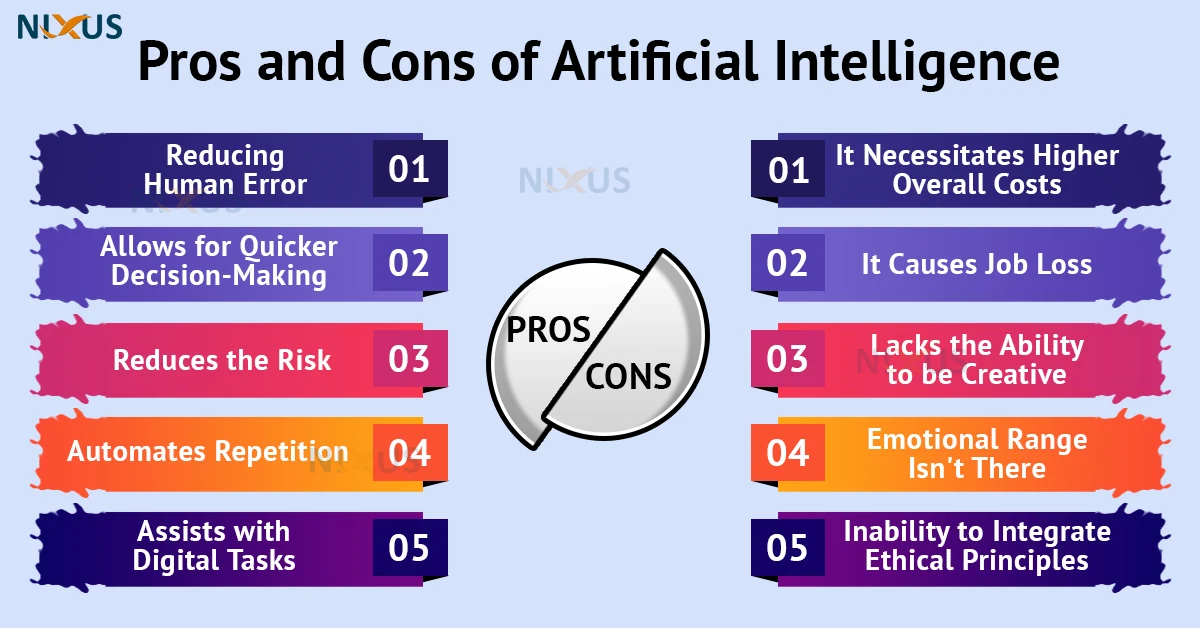

The Bad of Artificial Intelligence

Artificial intelligence, while offering incredible potential benefits, also presents significant challenges and risks. Its rapid advancement necessitates a careful consideration of its ethical implications and potential for societal harm. Failing to address these issues proactively could lead to unforeseen and potentially devastating consequences.

Ethical Concerns Surrounding AI Development and Deployment

Three major ethical concerns dominate the conversation around AI: privacy violation, algorithmic bias, and the lack of accountability for AI’s actions. These concerns are interconnected and highlight the need for robust ethical frameworks guiding AI development and deployment.

- Privacy Violation: AI systems often rely on vast amounts of personal data to function effectively. This data collection can lead to significant privacy violations if not properly managed. For example, facial recognition technology used in public spaces raises concerns about constant surveillance and the potential for misuse of collected data. Similarly, AI-powered marketing tools can track individuals’ online behavior to create highly personalized advertisements, potentially leading to a sense of being constantly monitored and manipulated.

- Algorithmic Bias: AI algorithms are trained on data, and if that data reflects existing societal biases, the AI system will perpetuate and even amplify those biases. For instance, a facial recognition system trained primarily on images of white faces may perform poorly when identifying individuals with darker skin tones, leading to misidentification and potentially unfair consequences. Similarly, AI-powered loan applications that rely on historical data might discriminate against certain demographic groups who have historically faced systemic disadvantages in accessing credit.

- Lack of Accountability: Determining responsibility when an AI system makes a harmful decision is a complex ethical challenge. If a self-driving car causes an accident, who is liable – the manufacturer, the software developer, or the owner? This lack of clear accountability hinders the development of effective mechanisms to prevent and address harmful AI outcomes. The complexity of AI decision-making processes often makes it difficult to understand why a particular outcome occurred, further complicating the process of assigning responsibility.

AI’s Exacerbation of Societal Inequalities

AI has the potential to exacerbate existing societal inequalities by disproportionately impacting vulnerable populations. This is particularly true when AI systems are used in areas such as criminal justice, employment, and healthcare.

- Criminal Justice: AI-powered predictive policing tools, trained on data reflecting existing biases in policing, might lead to increased surveillance and targeting of marginalized communities. This could lead to a vicious cycle of disproportionate arrests and convictions, further reinforcing existing inequalities.

- Employment: AI-driven automation has the potential to displace workers in various sectors, disproportionately affecting low-skilled workers and those in already marginalized communities. This can lead to increased unemployment and widening economic disparities.

- Healthcare: AI algorithms used in healthcare decision-making could perpetuate existing health disparities if trained on data that does not adequately represent diverse populations. For instance, an AI system used to diagnose diseases might be less accurate for certain racial or ethnic groups, leading to delayed or inaccurate treatment.
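One practical way to surface the kind of disparity described above is to report a model's accuracy separately for each group instead of as a single overall number. The sketch below assumes a hypothetical list of `(group, predicted, actual)` records; the group labels and values are invented purely for illustration.

```python
def accuracy_by_group(samples):
    """samples: iterable of (group, predicted, actual) -> {group: accuracy}."""
    correct, total = {}, {}
    for group, predicted, actual in samples:
        total[group] = total.get(group, 0) + 1
        correct[group] = correct.get(group, 0) + (predicted == actual)
    return {g: correct[g] / total[g] for g in total}

# Hypothetical diagnostic results: 1 = disease present, 0 = absent.
samples = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 0),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 0, 1), ("group_b", 1, 1),
]

per_group = accuracy_by_group(samples)
overall = sum(p == a for _, p, a in samples) / len(samples)
print(per_group, overall)
```

With this invented data the overall accuracy of 75% hides the fact that the model is right every time for one group and only half the time for the other, which is exactly the pattern an aggregate metric can conceal.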

Risks of AI Bias and Discrimination

AI bias and discrimination can manifest in various ways, leading to unfair or discriminatory outcomes. These biases often stem from biased training data or flawed algorithm design.

For example, consider a hypothetical scenario involving an AI-powered recruitment tool. The tool is trained on historical hiring data from a company that has historically favored male candidates. As a result, the AI system learns to prioritize male applicants over female applicants, even if the female applicants are equally or more qualified. This bias is then replicated and amplified by the AI, leading to systemic discrimination against women in the hiring process.

This demonstrates how seemingly neutral algorithms can perpetuate and even worsen existing societal biases.
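The recruitment scenario can be checked with a few lines of arithmetic. The sketch below computes the hire rate per group from hypothetical historical records and the ratio between the lowest and highest rate. The numbers are invented, and the 0.8 cutoff is the commonly cited "four-fifths" rule of thumb, used here as an assumption rather than a legal test.

```python
from collections import defaultdict

def selection_rates(records):
    """records: iterable of (group, hired) pairs -> {group: hire rate}."""
    totals = defaultdict(int)
    hires = defaultdict(int)
    for group, hired in records:
        totals[group] += 1
        hires[group] += int(hired)
    return {g: hires[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    return min(rates.values()) / max(rates.values())

# Hypothetical history: 100 male applicants (60 hired), 100 female (30 hired).
history = (
    [("men", True)] * 60 + [("men", False)] * 40
    + [("women", True)] * 30 + [("women", False)] * 70
)

rates = selection_rates(history)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < 0.8  # rule-of-thumb cutoff, an assumption
print(rates, ratio, needs_review)
```

A model trained to imitate these hiring labels has every incentive to reproduce the gap, which is why auditing the training data before training is as important as auditing the model afterwards.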

Job Displacement Potential of AI Across Various Sectors

The potential for AI-driven job displacement varies significantly across different sectors. While some sectors, such as manufacturing and transportation, are expected to experience significant job losses due to automation, others, such as healthcare and education, might see a shift in job roles rather than outright displacement. The impact of AI on employment will likely be complex and uneven, with some jobs being automated while new jobs are created in related fields.

For instance, while self-driving trucks might displace truck drivers, the development and maintenance of these trucks will create new job opportunities in engineering and software development. Similarly, AI-powered diagnostic tools in healthcare might reduce the workload of some medical professionals, while also creating new roles for specialists in AI-assisted diagnosis and treatment.

The Ugly of Artificial Intelligence

The potential benefits of artificial intelligence are undeniable, but its capacity for harm is equally significant. This darker side of AI, often overlooked in discussions of technological advancement, presents serious ethical, social, and political challenges that demand careful consideration. We must acknowledge the potential for misuse and proactively develop strategies to mitigate the risks.

AI in Surveillance and Authoritarian Regimes

The chilling prospect of AI-powered surveillance systems wielded by authoritarian regimes is a stark reality. Facial recognition technology, predictive policing algorithms, and pervasive data collection create a climate of fear and oppression, eroding fundamental human rights and freedoms. Imagine a city where every citizen is constantly monitored by AI-powered cameras, their movements tracked, their conversations analyzed, their thoughts potentially inferred. A dissident’s online activity, flagged by an algorithm, could lead to immediate arrest without due process.

This is not science fiction; such systems are already being deployed in various parts of the world, raising serious concerns about the erosion of privacy and the potential for widespread abuse. The scenario illustrates how seemingly innocuous technological advancements can be twisted into tools of oppression, silencing dissent and reinforcing authoritarian control.

Challenges in Regulating AI Development and Deployment

Effective regulation of AI is crucial to prevent its misuse. However, the rapid pace of technological advancement presents significant challenges.

- The global nature of AI development makes international cooperation essential, yet achieving consensus on regulatory frameworks proves difficult due to differing priorities and legal systems.

- The complexity of AI algorithms makes it challenging to establish clear standards for accountability and transparency. Determining responsibility when an AI system makes a harmful decision is a significant legal and ethical hurdle.

- Balancing innovation with safety and ethical considerations requires a nuanced approach. Overly restrictive regulations could stifle innovation, while insufficient regulation could lead to widespread harm.

- The constant evolution of AI technology necessitates adaptive regulatory frameworks that can keep pace with advancements. Legislation must be flexible enough to address emerging challenges without becoming obsolete quickly.

AI-Powered Autonomous Weapons Systems

The development of AI-powered autonomous weapons systems (AWS), also known as lethal autonomous weapons, raises profound ethical dilemmas. These systems, capable of selecting and engaging targets without human intervention, challenge traditional notions of warfare and accountability. A key concern is the potential for unintended escalation. An AWS malfunctioning or misinterpreting a situation could trigger a catastrophic chain of events.

Furthermore, the lack of human control raises questions about moral responsibility and the potential for dehumanizing warfare. Comparing this to traditional warfare, where human judgment (however flawed) remains a critical element, highlights the profound shift in the nature of conflict that AWS represent. The absence of human intervention eliminates the potential for empathy, restraint, and consideration of collateral damage, leading to potentially greater loss of life and suffering.

AI for Malicious Purposes: Deepfakes and Misinformation

AI technologies, particularly deepfake video and audio generation, are being increasingly used to create and disseminate misinformation. Deepfakes, realistic but fabricated videos or audio recordings, can be used to damage reputations, manipulate public opinion, and sow discord. For example, a deepfake video of a politician making a controversial statement could sway an election or incite violence. The ease with which such content can be created and spread, coupled with the difficulty of discerning authenticity, poses a serious threat to trust and social cohesion.

The consequences can range from personal harm and political instability to broader erosion of public trust in information sources. The rapid proliferation of deepfakes necessitates the development of effective detection and mitigation strategies, alongside education initiatives to increase public awareness of this threat.

AI’s Impact on Creativity and Innovation

Artificial intelligence is rapidly transforming how we approach creativity and innovation, sparking both excitement and apprehension. Its potential to augment human capabilities is undeniable, but concerns about its impact on the uniquely human aspects of creative expression remain. This section explores the dual nature of AI’s role, examining its potential as both a powerful tool and a potential disruptor.

AI as a Tool for Creative Expression versus a Threat to Human Creativity

The integration of AI into creative processes presents a fascinating paradox. While some fear AI will replace human artists and innovators, others see it as a powerful collaborator, expanding the boundaries of human imagination. The following points highlight this duality:

- AI as a Tool: AI can automate tedious tasks, freeing up human creators to focus on higher-level conceptualization and emotional expression. It can provide novel approaches, generate variations on existing ideas, and even assist in the technical aspects of creation.

- AI as a Threat: Concerns exist that AI-generated content might diminish the value of human artistry, potentially leading to an oversaturation of derivative works and a devaluation of the unique human perspective.

- AI as a Collaborator: The most promising scenario involves AI acting as a collaborative partner, enhancing human creativity rather than replacing it. AI can offer suggestions, explore alternative approaches, and help refine ideas, leading to more innovative and nuanced outputs.

AI’s Acceleration of Scientific Discovery and Technological Innovation

AI is already proving instrumental in accelerating scientific breakthroughs and driving technological advancements. It can analyze vast datasets, identify patterns, and make predictions at a scale and speed that human analysts cannot match.

- Drug Discovery: AI algorithms are used to analyze massive datasets of molecular structures and biological activity, which can reduce the time and cost required to identify potential drug candidates. For example, Atomwise uses AI to virtually screen millions of molecules for likely binding to a disease target, accelerating the early stages of drug discovery. The company has reported that its system identified candidate compounds against Ebola, illustrating the speed of this approach.

- Materials Science: AI is employed to predict the properties of new materials, enabling the design of materials with specific characteristics. This is particularly valuable in fields like aerospace engineering, where lightweight, high-strength materials are crucial. Citrine Informatics, for example, uses AI-powered databases and predictive modeling in its Citrination platform to discover and design novel materials, leading to faster innovation cycles.

AI’s Enhancement of Artistic Expression

AI’s influence extends across various artistic mediums, pushing creative boundaries and enabling new forms of artistic expression.

- Music: AI can compose music in various styles, generating melodies, harmonies, and rhythms based on learned patterns. Software like Amper Music allows users to specify parameters like genre and mood, resulting in custom-generated musical scores.

- Visual Arts: AI algorithms can generate unique images, manipulate existing images, and even create entirely new artistic styles. Programs like DALL-E 2 and Midjourney enable users to generate stunning visuals based on textual descriptions, opening up exciting new possibilities for visual artists.

- Literature: AI can assist in writing, suggesting plot points, generating character descriptions, and even drafting entire sections of text. While it cannot replace the human element of storytelling, it can act as a powerful writing tool, helping authors overcome writer’s block and explore new creative avenues.

Hypothetical Scenario: AI Collaboration in Cancer Research

Imagine a scenario where a team of oncologists collaborates with an AI system specializing in genomic analysis. The AI analyzes vast genomic datasets from cancer patients, identifying subtle patterns and mutations that are undetectable by human analysis alone. This leads to the discovery of a novel biomarker associated with a specific type of aggressive leukemia. The AI then helps design a personalized immunotherapy treatment targeting this biomarker, significantly improving treatment efficacy and patient survival rates.

This breakthrough, born from the synergistic collaboration between human expertise and AI’s analytical power, revolutionizes leukemia treatment.

The Future of AI and its Implications

The rapid advancement of artificial intelligence presents a future brimming with both unprecedented opportunities and significant challenges. Understanding the potential impact of AI on global affairs and societal structures is crucial for navigating the complexities ahead. This section explores potential scenarios, focusing on geopolitical shifts, societal transformations, and strategies for responsible AI development.

AI’s Geopolitical Impact

AI’s influence on global power dynamics is already evident. Nations are investing heavily in AI research and development, viewing it as a key determinant of future economic and military strength. This competition could lead to new alliances and rivalries, potentially destabilizing existing geopolitical structures. For example, the development of autonomous weapons systems raises ethical and security concerns, potentially lowering the threshold for armed conflict.

The concentration of AI power in the hands of a few nations or corporations also presents a risk of imbalance and control. Conversely, AI could foster cooperation through shared solutions to global challenges like climate change and disease.

Predictions for Future AI Development and Societal Impact

Predicting the future of AI is inherently complex, but we can extrapolate based on current trends. The following table offers a possible outlook:

| Timeframe | Technological Advancement | Societal Impact | Potential Challenges |
|---|---|---|---|
| 2025-2030 | Widespread adoption of generative AI in various industries; advancements in robotics and automation; improved natural language processing. | Increased automation of tasks, leading to job displacement in some sectors; personalized experiences in healthcare, education, and entertainment; potential for increased social inequality. | Ethical concerns surrounding algorithmic bias; need for robust cybersecurity measures; managing the transition of the workforce. |
| 2030-2040 | Development of more sophisticated AI systems capable of complex reasoning and problem-solving; emergence of artificial general intelligence (AGI) remains uncertain but possible. | Significant transformations in various sectors, including healthcare, transportation, and manufacturing; potential for increased productivity and economic growth; potential for widespread societal disruption if AGI is achieved. | Addressing the potential for job displacement on a massive scale; managing the ethical implications of AGI; ensuring equitable access to AI benefits. |
| 2040+ | Further advancements in AI capabilities, potentially leading to human-level or superhuman intelligence; integration of AI with other emerging technologies like biotechnology and nanotechnology. | Fundamental changes to human society, potentially altering the nature of work, relationships, and even human identity; potential for solving major global challenges; potential for unforeseen and potentially catastrophic consequences. | Managing the existential risks associated with advanced AI; ensuring AI aligns with human values; addressing the potential for AI misuse. |

Mitigating Negative Impacts and Maximizing Benefits

Proactive strategies are crucial for navigating the challenges posed by AI. These include investing in education and retraining programs to prepare the workforce for the changing job market; developing robust regulatory frameworks to address ethical concerns and prevent misuse; promoting international cooperation on AI governance; and fostering public dialogue to build trust and understanding. Furthermore, prioritizing research on AI safety and aligning AI development with human values are paramount.

One example is the Partnership on AI, which brings together researchers, companies, and civil society organizations to address AI’s societal implications.

Responsible AI Development and Deployment

Responsible AI development necessitates a multi-faceted approach. This includes incorporating ethical considerations throughout the AI lifecycle, from design and development to deployment and monitoring. Transparency in algorithms, fairness in decision-making, and accountability for AI systems are critical. Building robust mechanisms for auditing and oversight is crucial to ensure that AI systems are used responsibly and do not perpetuate biases or harm individuals or society.

The focus should be on creating AI that serves humanity’s best interests, rather than prioritizing profit or power.

Conclusion

Ultimately, the story of artificial intelligence is far from over. It’s a narrative constantly being written, with each new development adding another layer of complexity. While the potential benefits of AI are undeniable, so too are the potential harms. The key, then, lies in responsible development and deployment – a commitment to ethical considerations, transparency, and robust regulation.

Only through careful navigation of this complex landscape can we hope to harness the power of AI for the betterment of humanity, mitigating its potential to exacerbate existing inequalities and create new forms of harm. The future of AI is not predetermined; it’s a future we create, together.

User Queries

What is the Turing Test, and why is it relevant to AI?

The Turing Test assesses a machine’s ability to exhibit intelligent behavior equivalent to, or indistinguishable from, that of a human. In the classic setup, a human evaluator holds a text conversation with both a person and a machine and tries to tell which is which. It remains a benchmark, though an increasingly debated one, for evaluating how well AI can mimic human-like conversation.

How does AI impact privacy?

AI systems often rely on vast amounts of data, raising concerns about the privacy of individuals. Data collection, use, and storage practices need careful consideration to protect personal information and prevent misuse.

What are explainable AI (XAI) systems?

Explainable AI focuses on creating AI models whose decision-making processes are transparent and understandable. This is crucial for building trust and accountability, especially in high-stakes applications.

What is the role of AI in cybersecurity?

AI plays a dual role: it can be used to enhance cybersecurity defenses by detecting and responding to threats, but it can also be exploited by malicious actors to create more sophisticated attacks.