Developing Stateful Event-Driven Real-Time Applications

Developing stateful event-driven and real-time applications is a fascinating challenge. It is about building systems that react instantly to changing information while remembering past events to inform future actions. Think of a stock trading platform: every price tick is an event, and the system needs to maintain a consistent, up-to-the-second view of the market to execute trades effectively.

This journey delves into the intricacies of designing, building, and deploying these complex yet powerful systems, exploring everything from choosing the right technology stack to ensuring security and scalability.

We’ll explore the core concepts of stateful event-driven architectures, contrasting them with their stateless counterparts. We’ll dive deep into real-time data processing, examining strategies for handling high-velocity data streams and maintaining data integrity. Event sourcing and CQRS (Command Query Responsibility Segregation) will be dissected, showcasing their power in managing complex data flows. Finally, we’ll tackle crucial aspects like scalability, fault tolerance, security, and testing, equipping you with the knowledge to build robust and reliable real-time applications.

Defining Stateful Event-Driven Architecture

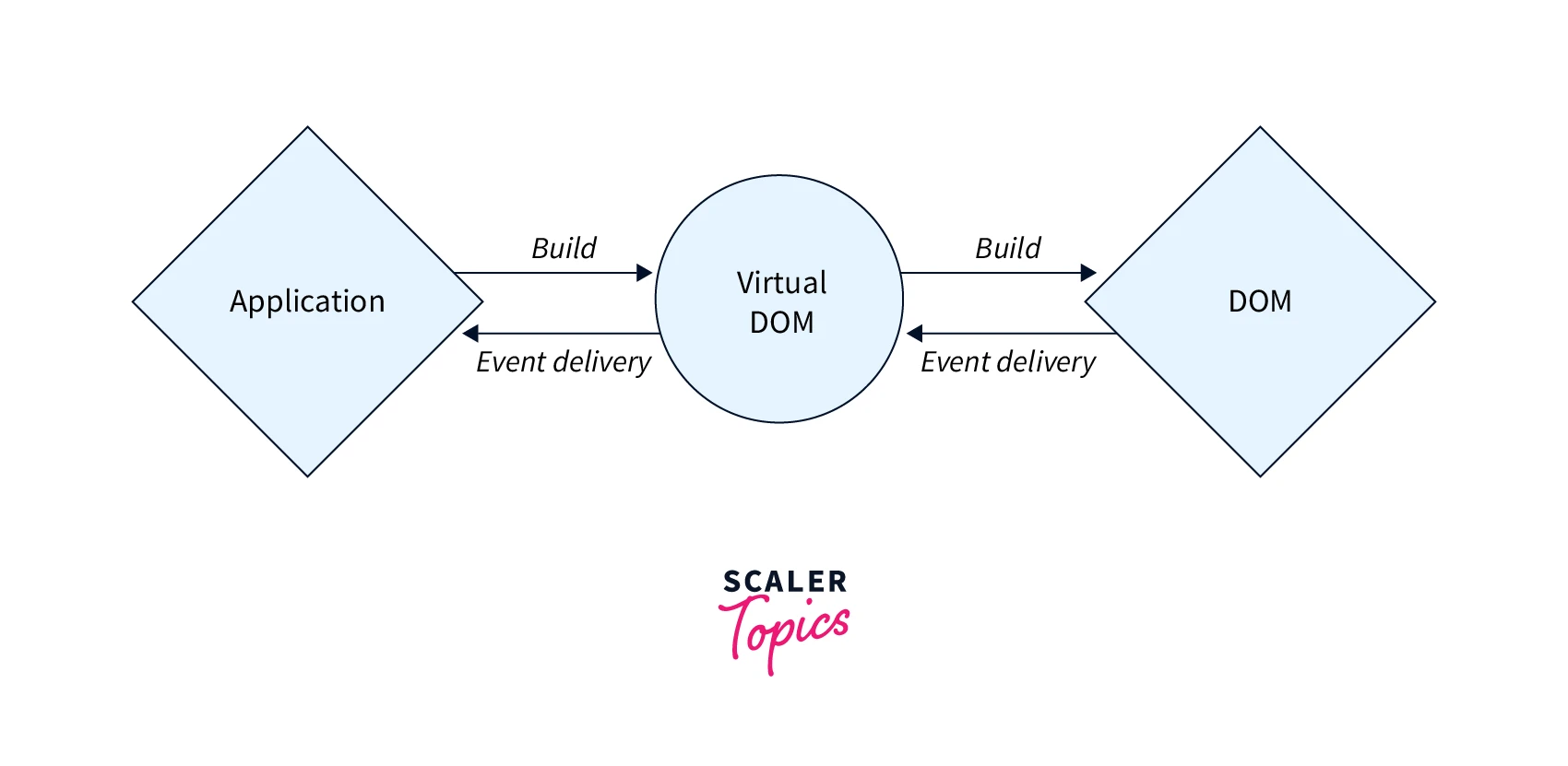

Stateful event-driven architectures represent a powerful paradigm shift in application design, moving beyond the limitations of stateless systems to handle complex, real-time interactions. This approach centers on events as the primary mechanism for communication and leverages the persistence of application state to provide context and continuity across interactions. Understanding the core principles and differences between stateless and stateful approaches is crucial for building robust and scalable applications.

The core principle of a stateful event-driven architecture lies in its ability to maintain and utilize application state throughout the lifecycle of an event. Unlike stateless systems, where each request is treated independently without memory of past interactions, a stateful system retains information about previous events and uses this information to process subsequent events. This context allows for more intelligent and efficient processing, enabling features like personalized experiences, real-time tracking, and sophisticated business logic.

Stateless versus Stateful Event-Driven Systems

The key difference between stateless and stateful event-driven systems lies in how they handle state. Stateless systems treat each event in isolation; the system’s response depends solely on the current event’s data. Stateful systems, conversely, maintain a persistent representation of the application’s state, using this state to influence the processing and outcome of each event. This means a stateful system remembers past events and their impact on the overall application state.

This persistent state allows for more complex interactions and improved user experience.

For instance, a stateless e-commerce system would process each order independently, without considering the user’s past purchase history or shopping cart. A stateful system, however, would maintain the user’s shopping cart, order history, and preferences, allowing for personalized recommendations and faster checkout.
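The contrast can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the event fields (`user_id`, `sku`, `quantity`, `price`), the event type names, and the `CartService` class are all assumptions made for the example.

```python
from dataclasses import dataclass, field


def handle_stateless(event: dict) -> float:
    """Stateless: the result depends only on the current event."""
    return event["price"] * event["quantity"]


@dataclass
class CartService:
    """Stateful: each event updates a per-user cart that later events can see."""
    carts: dict = field(default_factory=dict)  # user_id -> {sku: quantity}

    def handle(self, event: dict) -> dict:
        cart = self.carts.setdefault(event["user_id"], {})
        sku = event["sku"]
        if event["type"] == "ItemAdded":
            cart[sku] = cart.get(sku, 0) + event["quantity"]
        elif event["type"] == "ItemRemoved":
            cart.pop(sku, None)
        return dict(cart)  # return a copy so callers cannot mutate internal state


service = CartService()
service.handle({"type": "ItemAdded", "user_id": "u1", "sku": "book", "quantity": 1})
cart = service.handle({"type": "ItemAdded", "user_id": "u1", "sku": "book", "quantity": 2})
print(cart)  # {'book': 3}
```

The second `ItemAdded` event produces a quantity of 3 only because the service remembered the first one. The stateless function would give the same answer for the same event regardless of history.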

Real-World Applications of Stateful Event-Driven Architectures

Many modern applications benefit significantly from a stateful event-driven approach. Examples include:

- Online gaming: Maintaining player state (health, score, inventory) across multiple interactions and game sessions requires a stateful system.

- Financial trading platforms: Tracking real-time market data, order books, and account balances necessitate a highly responsive and stateful architecture.

- IoT device management: Managing and monitoring the state of numerous interconnected devices requires a system capable of handling and persisting the state of each device.

- Supply chain management systems: Tracking the location and status of goods in real-time necessitates a stateful system to maintain inventory levels and delivery information.

State Management Strategies

Several strategies exist for managing state in a stateful event-driven architecture, each with its own trade-offs in terms of performance, scalability, and complexity. The choice depends on the specific requirements of the application.

| Strategy | Description | Pros | Cons |
|---|---|---|---|
| In-Memory | State is stored in the application's memory. | Fast access, low latency. | Limited scalability, data loss on application restart. |
| Database (e.g., Relational, NoSQL) | State is persisted in a database. | High scalability, data persistence. | Higher latency compared to in-memory, database management overhead. |
| Distributed Cache (e.g., Redis, Memcached) | State is distributed across multiple cache servers. | High scalability, low latency; optional persistence with Redis when configured (Memcached does not persist data). | Complexity in managing the distributed cache, potential for data inconsistency. |
| Event Sourcing | State is reconstructed from a sequence of events. | High data integrity, auditability, replayability. | Increased complexity in querying the state. |

Real-Time Data Processing and Handling

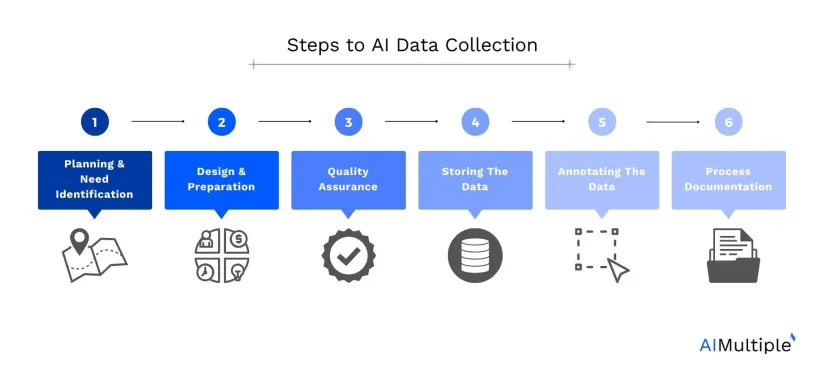

Building stateful, event-driven, real-time applications requires robust mechanisms for handling the continuous flow of incoming data. The sheer volume and velocity of this data present unique challenges that must be addressed strategically to ensure system performance, data integrity, and ultimately, the success of the application. This section delves into the complexities of real-time data processing and offers practical solutions for building resilient and efficient systems.

Challenges of Processing Real-Time Data Streams

Real-time data processing differs significantly from batch processing. The inherent constraints of time sensitivity introduce several key challenges. High-volume, high-velocity data streams necessitate efficient ingestion and processing pipelines to avoid data loss or unacceptable latency. Data streams are often unstructured or semi-structured, demanding flexible and adaptable processing techniques. Maintaining data consistency and accuracy across distributed systems in a real-time environment also requires careful consideration of fault tolerance and data recovery mechanisms.

Furthermore, the need for low latency processing frequently clashes with the need for complex data transformations or aggregations.

Designing a System for High-Volume, High-Velocity Data Ingestion

A system designed to handle high-volume, high-velocity data ingestion typically employs a distributed architecture. This architecture often involves a message queue (e.g., Kafka, RabbitMQ) to buffer incoming data and distribute it to multiple processing units. These units could be individual servers or a cluster of servers, each responsible for a subset of the data stream. The message queue acts as a decoupling layer, ensuring that the ingestion process doesn’t block the application’s core functionality.

For instance, imagine a system processing sensor data from thousands of connected devices. Kafka would efficiently buffer and distribute this data to multiple processors, each handling a specific type of sensor data or a geographical region. Load balancing mechanisms are crucial to ensure even distribution of the workload across processing units, preventing bottlenecks and maximizing throughput. The system should also incorporate robust monitoring and alerting capabilities to detect and respond to anomalies in data flow or processing performance.
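One way to picture the distribution step is key-based partitioning: every event for the same device is routed to the same processor, which preserves per-device ordering. The sketch below is an illustration under assumptions. The `device_id` field and both function names are hypothetical, and the hashing scheme is not the partitioner any particular broker uses.

```python
import hashlib


def partition_for(key: str, num_partitions: int) -> int:
    """Map a key to a partition with a stable hash.

    Python's built-in hash() is salted per process, so a cryptographic
    digest is used here to get the same answer on every worker.
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions


def route(events: list[dict], num_partitions: int) -> dict[int, list[dict]]:
    """Group events by partition, keeping arrival order within each partition."""
    partitions: dict[int, list[dict]] = {p: [] for p in range(num_partitions)}
    for event in events:
        partitions[partition_for(event["device_id"], num_partitions)].append(event)
    return partitions


readings = [{"device_id": f"sensor-{i % 5}", "value": i} for i in range(20)]
routed = route(readings, 4)
```

Plain modulo partitioning reshuffles most keys when the partition count changes, which is one reason partition counts are usually chosen generously up front.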

Data Stream Processing Approaches

Several approaches exist for processing data streams effectively. Windowing techniques group data into time-based or count-based intervals, enabling calculations like averages or sums over specific periods. For example, a system monitoring website traffic might use a one-minute window to calculate the average number of requests per minute. Aggregation techniques summarize data from multiple events, reducing the volume of data that needs to be processed downstream.

This could involve summing values, calculating averages, or counting occurrences. Imagine an e-commerce application tracking sales data. Aggregation could combine sales events for the same product within an hour to generate hourly sales reports. These techniques are often used in combination to efficiently process large volumes of real-time data while maintaining meaningful insights.
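Both techniques can be sketched with plain dictionaries. The example assumes timestamps are Unix seconds and that sale events carry hypothetical `ts`, `product`, and `amount` fields. A real stream processor would also have to deal with late and out-of-order events, which this sketch ignores.

```python
from collections import defaultdict


def tumbling_window_counts(timestamps: list[float], window_seconds: int = 60) -> dict[int, int]:
    """Count events per fixed, non-overlapping window, keyed by window start."""
    counts: dict[int, int] = defaultdict(int)
    for ts in timestamps:
        window_start = int(ts // window_seconds) * window_seconds
        counts[window_start] += 1
    return dict(counts)


def hourly_sales(events: list[dict]) -> dict[tuple[str, int], float]:
    """Sum sale amounts per (product, hour start) bucket."""
    totals: dict[tuple[str, int], float] = defaultdict(float)
    for event in events:
        hour_start = int(event["ts"] // 3600) * 3600
        totals[(event["product"], hour_start)] += event["amount"]
    return dict(totals)


print(tumbling_window_counts([0, 10, 59.9, 60, 125]))  # {0: 3, 60: 1, 120: 1}
```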

Best Practices for Ensuring Data Consistency and Accuracy in Real-Time

Maintaining data consistency and accuracy in real time is paramount. Implementing idempotent operations, where multiple executions have the same effect as a single execution, is critical for handling message reprocessing after failures. Distributed consensus algorithms (e.g., Raft, Paxos) let multiple nodes agree on a single order of updates, which helps keep replicated state consistent. Data validation and error handling mechanisms are essential to identify and correct erroneous data before it propagates through the system.

Regular data quality checks and audits help maintain the accuracy and reliability of the processed data. Implementing robust logging and tracing mechanisms aids in debugging and troubleshooting issues related to data inconsistencies. Finally, incorporating version control for data schemas allows for seamless evolution of the system without disrupting data processing.
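A common way to make a handler idempotent is to remember which event IDs have already been applied. The sketch below assumes every event carries a unique `event_id`. The `IdempotentAccount` class is hypothetical, and it keeps the seen-ID set in memory, whereas a real system would persist it together with the state it protects.

```python
class IdempotentAccount:
    """Applies each deposit event at most once, keyed by a unique event ID."""

    def __init__(self) -> None:
        self.balance = 0
        self._seen: set[str] = set()  # assumption: persisted atomically with balance in practice

    def apply(self, event: dict) -> bool:
        """Return True if the event changed state, False if it was a duplicate."""
        if event["event_id"] in self._seen:
            return False
        self.balance += event["amount"]
        self._seen.add(event["event_id"])
        return True


account = IdempotentAccount()
deposit = {"event_id": "evt-1", "amount": 50}
first = account.apply(deposit)
second = account.apply(deposit)  # simulated redelivery of the same message
print(account.balance)  # 50
```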

Event Sourcing and CQRS

Event sourcing and CQRS (Command Query Responsibility Segregation) are powerful architectural patterns that can significantly improve the scalability, maintainability, and auditability of stateful event-driven applications. They are particularly well-suited for systems requiring a high degree of data consistency and a detailed history of changes. Event sourcing represents a fundamental shift from traditional database approaches: instead of storing only the current state of an application, it stores the sequence of events that produced that state. This approach offers several key advantages, explored below.

Event Sourcing Explained

Event sourcing is a pattern where instead of storing the current state of an application, we store a sequence of events that describe how the application’s state evolved. Each event is an immutable record representing a change. The current state is derived by replaying these events. This provides a complete and auditable history of all changes, enabling easy debugging, rollback capabilities, and a more robust system overall.

For example, if a user updates their profile, instead of directly modifying the database record, we record an event like “UserProfileUpdated” containing the new data.
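Deriving state by replay amounts to folding a transition function over the event log. The following is a minimal sketch. `UserProfileUpdated` comes from the text, while the `Event` class, the `UserCreated` and `UserDeactivated` event types, and the field names are assumptions for illustration.

```python
from dataclasses import dataclass
from functools import reduce


@dataclass(frozen=True)
class Event:
    """An immutable record of something that happened."""
    type: str
    data: dict


def apply(state: dict, event: Event) -> dict:
    """Pure transition function: returns a new state and never mutates the old one."""
    if event.type == "UserCreated":
        return {**event.data, "active": True}
    if event.type == "UserProfileUpdated":
        return {**state, **event.data}
    if event.type == "UserDeactivated":
        return {**state, "active": False}
    return state  # unknown event types are ignored


def replay(events: list[Event]) -> dict:
    """Rebuild the current state from the full event history."""
    return reduce(apply, events, {})


log = [
    Event("UserCreated", {"id": "u1", "name": "Ada"}),
    Event("UserProfileUpdated", {"name": "Ada L."}),
]
print(replay(log))  # {'id': 'u1', 'name': 'Ada L.', 'active': True}
```

Replaying only a prefix of the log, such as `replay(log[:1])`, yields the state as it was at that point in history, which is what makes audits and debugging straightforward.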

Comparison of Event Sourcing and Traditional Database Approaches

Traditional databases, like relational databases, store the current state of the data. Changes are made directly to the data, often without a detailed record of the previous state. This makes it difficult to track changes over time, perform audits, or easily recover from errors. Event sourcing, in contrast, provides a complete audit trail and simplifies rollback operations. Imagine trying to reconstruct a user’s profile history in a traditional database versus easily replaying events in an event-sourced system.

The difference is clear in terms of auditability and maintainability. Because events are immutable and only ever appended, past records cannot be silently overwritten, which also helps protect data integrity.

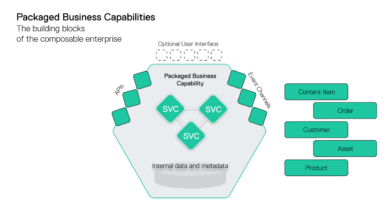

CQRS Implementation with Event Sourcing

CQRS (Command Query Responsibility Segregation) separates the read and write operations of the system. The write side focuses on creating and persisting events, using the event sourcing approach. The read side, on the other hand, focuses on efficiently querying and displaying the data. This separation allows for optimization of both read and write paths independently. The read side can use a separate database (like a denormalized database or a NoSQL database) optimized for querying, providing fast response times for user interfaces.

This contrasts with a traditional approach where the same database handles both reads and writes, potentially leading to performance bottlenecks. The write side maintains the event stream, and the read side keeps a materialized view of the data, updated asynchronously through event processing.

User Profile Management System Design

Let’s design a user profile management system using event sourcing and CQRS. The write side would handle commands like “CreateUser,” “UpdateUserProfile,” and “DeactivateUser.” Each command would result in the creation of an event (e.g., “UserCreated,” “UserProfileUpdated,” “UserDeactivated”). These events are appended to the event store (a database designed to efficiently handle append-only operations). The read side would maintain a separate database, perhaps a NoSQL document database, containing denormalized user profile data.

A separate process would consume the events from the event store and update the read database, creating a materialized view optimized for querying. When a user requests their profile, the read side quickly retrieves the data from its optimized database. If a user updates their profile, the write side processes the command, appends the “UserProfileUpdated” event to the event store, and the event processor updates the read database accordingly.

This ensures data consistency while optimizing read performance. The event store serves as the single source of truth, providing a complete audit trail of all changes to user profiles.
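The design described above can be sketched with in-memory stand-ins for the event store and the read database. All class and function names here (`EventStore`, `ProfileProjection`, `handle_update_profile`) are hypothetical, and the event fields are assumptions. In a real deployment the projection would run as a separate process.

```python
class EventStore:
    """Append-only log: the write side's single source of truth."""

    def __init__(self) -> None:
        self._events: list[dict] = []

    def append(self, event: dict) -> None:
        self._events.append(event)

    def read_from(self, offset: int) -> list[dict]:
        return self._events[offset:]


class ProfileProjection:
    """Read side: a denormalized view kept up to date by consuming events."""

    def __init__(self) -> None:
        self.profiles: dict[str, dict] = {}
        self.offset = 0  # position of the next unread event

    def catch_up(self, store: EventStore) -> None:
        for event in store.read_from(self.offset):
            uid = event["user_id"]
            if event["type"] == "UserCreated":
                self.profiles[uid] = {"name": event["name"], "active": True}
            elif event["type"] == "UserProfileUpdated":
                self.profiles[uid]["name"] = event["name"]
            elif event["type"] == "UserDeactivated":
                self.profiles[uid]["active"] = False
            self.offset += 1


def handle_update_profile(store: EventStore, user_id: str, name: str) -> None:
    """Command handler: validate, then record an event instead of updating a row."""
    if not name.strip():
        raise ValueError("name must not be empty")
    store.append({"type": "UserProfileUpdated", "user_id": user_id, "name": name})


store = EventStore()
view = ProfileProjection()
store.append({"type": "UserCreated", "user_id": "u1", "name": "Ada"})
view.catch_up(store)

handle_update_profile(store, "u1", "Ada L.")
stale_name = view.profiles["u1"]["name"]  # read side has not caught up yet
view.catch_up(store)
fresh_name = view.profiles["u1"]["name"]
```

The gap between `stale_name` and `fresh_name` shows the trade-off of asynchronous projections: the read model is eventually consistent with the event store, not instantly consistent.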

Choosing the Right Technology Stack

Building a robust and scalable stateful event-driven, real-time application requires careful consideration of the technology stack. The choices you make here will significantly impact performance, maintainability, and overall system architecture. This section explores key components and offers guidance on making informed decisions.

Messaging Systems: Kafka, RabbitMQ, and Pulsar

Selecting the right messaging system is crucial for handling the high-volume, real-time data streams inherent in these applications. Kafka, RabbitMQ, and Pulsar each offer unique strengths and weaknesses. Kafka excels at high-throughput, distributed streaming, making it ideal for scenarios with massive data ingestion and processing. Its durability and fault tolerance are also significant advantages. RabbitMQ, known for its reliability and ease of use, provides a more lightweight and flexible solution, well-suited for applications requiring more sophisticated routing and message handling.

Pulsar, a newer entrant, offers a compelling blend of scalability, performance, and features like geo-replication and serverless functions, making it a strong contender for modern, distributed architectures. The optimal choice depends on the specific needs of your application, balancing throughput requirements with operational complexity.

State Management Technologies: Redis, Cassandra, and Hazelcast

Efficient state management is paramount in stateful event-driven systems. The choice of technology depends on factors such as data size, access patterns, and consistency requirements. Redis, an in-memory data store, offers exceptional speed for read/write operations, making it suitable for applications demanding low latency. However, its in-memory nature limits scalability for very large datasets. Cassandra, a distributed NoSQL database, provides high availability and scalability, handling massive amounts of data with ease.

Its eventual consistency model, however, might not be suitable for all applications requiring strong consistency guarantees. Hazelcast, an in-memory data grid, combines the speed of in-memory storage with the scalability and fault tolerance of a distributed system, offering a good balance between performance and reliability.

Programming Languages for Stateful Event-Driven Applications

The choice of programming language significantly impacts development speed, maintainability, and performance. Java, with its mature ecosystem of libraries and frameworks, remains a popular choice for enterprise-grade applications, offering robust concurrency features and a large talent pool. However, it can be more verbose than some other languages. Go, known for its efficiency and concurrency features, is increasingly popular for building high-performance, distributed systems.

Its simplicity and ease of deployment are attractive advantages. Node.js, with its asynchronous, event-driven nature, aligns well with the characteristics of event-driven architectures. However, its single-threaded nature can pose challenges in handling computationally intensive tasks. The best choice often depends on team expertise, project requirements, and performance trade-offs.

Open-Source Libraries and Frameworks

A rich ecosystem of open-source tools supports the development of stateful event-driven architectures. Leveraging these tools can significantly accelerate development and improve code quality.

- Akka: A toolkit and runtime for building highly concurrent, distributed, and fault-tolerant applications on the JVM.

- Spring Cloud Stream: A framework for building event-driven microservices on Spring Boot.

- Apache Camel: A versatile integration framework supporting various messaging protocols and data formats.

- Vert.x: A polyglot reactive toolkit for building scalable and responsive applications.

- Lagom: A framework for building microservices based on the principles of reactive architectures and event sourcing.

Scalability and Fault Tolerance

Building scalable and fault-tolerant stateful event-driven applications requires careful consideration of architectural choices and implementation strategies. The inherent complexity of managing state while handling real-time events necessitates a robust design that can gracefully handle increased load and unexpected failures. This section explores key strategies for achieving both scalability and resilience in such systems.

Scalable Architecture Design

A scalable architecture for a stateful event-driven application typically employs a distributed, microservices-based approach. This involves breaking down the application into smaller, independent services that communicate asynchronously through an event bus. Each microservice manages a specific part of the application’s state, allowing for horizontal scaling by adding more instances of each service as needed. Load balancing distributes incoming events across these instances, ensuring that no single service becomes a bottleneck.

Data partitioning strategies, such as sharding or consistent hashing, distribute the state across multiple databases or data stores, further enhancing scalability. The event bus itself should be highly available and scalable, capable of handling a high volume of events with low latency. Examples of such buses include Kafka or RabbitMQ, which can be clustered for high availability and scalability.
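Consistent hashing can be illustrated with a small hash ring. This is a sketch under assumptions: the `HashRing` class, the node names, and the choice of 100 virtual nodes per server are all made up for the example and are not taken from any specific data store.

```python
import bisect
import hashlib


def _hash(value: str) -> int:
    """Stable 64-bit hash derived from SHA-256."""
    return int.from_bytes(hashlib.sha256(value.encode("utf-8")).digest()[:8], "big")


class HashRing:
    """Consistent hash ring with virtual nodes to smooth the key distribution."""

    def __init__(self, nodes: list[str], vnodes: int = 100) -> None:
        self._ring: list[tuple[int, str]] = sorted(
            (_hash(f"{node}#{i}"), node) for node in nodes for i in range(vnodes)
        )
        self._points = [point for point, _ in self._ring]

    def node_for(self, key: str) -> str:
        # Pick the first ring point clockwise from the key's hash, wrapping around.
        index = bisect.bisect_right(self._points, _hash(key)) % len(self._ring)
        return self._ring[index][1]


keys = [f"user-{i}" for i in range(1000)]
before = HashRing(["node-a", "node-b", "node-c"])
after = HashRing(["node-a", "node-b", "node-c", "node-d"])
moved = [k for k in keys if before.node_for(k) != after.node_for(k)]
```

When `node-d` joins, the only keys that change owner are the ones that now belong to `node-d`. Every other key stays where it was, which is the property that makes scaling out cheaper than with plain modulo sharding.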

Fault Tolerance and High Availability Strategies

Ensuring fault tolerance and high availability is crucial for maintaining the reliability of a stateful event-driven application. Key strategies include:

- Redundancy: Employing redundant components across multiple availability zones or data centers. This includes replicating databases, message queues, and application services. In case of failure in one zone, the system can seamlessly switch to the redundant components in another zone.

- Automatic Failover: Implementing automatic failover mechanisms that detect and respond to failures quickly. This might involve using health checks and monitoring tools to detect failing components and automatically redirect traffic to healthy instances.

- Circuit Breakers: Utilizing circuit breakers to prevent cascading failures. If a service is unavailable, the circuit breaker prevents repeated attempts to access it, preventing overload on other services.

- Idempotency: Designing services to handle duplicate events without causing unintended side effects. This is critical in distributed systems with at-least-once delivery, where the same message may arrive more than once.

These strategies work together to ensure that the system can continue operating even in the face of hardware or software failures. For example, if a database instance fails, the system can automatically switch to a replica, minimizing downtime.
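Of the strategies listed above, the circuit breaker is the easiest to show in code. The sketch below is a simplified illustration: the `CircuitBreaker` class, its thresholds, and the injectable `clock` parameter are assumptions for the example, and production libraries offer more states and configuration.

```python
import time
from typing import Callable, Optional


class CircuitOpenError(RuntimeError):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, max_failures: int = 3, reset_after: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_failures = max_failures
        self.reset_after = reset_after
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    def call(self, func, *args, **kwargs):
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_after:
                raise CircuitOpenError("circuit open; failing fast")
            # Half-open: allow one trial call; a single failure reopens the circuit.
            self._opened_at = None
            self._failures = self.max_failures - 1
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._failures += 1
            if self._failures >= self.max_failures:
                self._opened_at = self._clock()
            raise
        self._failures = 0  # any success closes the circuit fully
        return result


clock = [0.0]  # fake clock so the example does not need to sleep
breaker = CircuitBreaker(max_failures=2, reset_after=10.0, clock=lambda: clock[0])


def flaky():
    raise ConnectionError("downstream unavailable")


outcomes = []
for _ in range(3):
    try:
        breaker.call(flaky)
    except CircuitOpenError:
        outcomes.append("rejected")
    except ConnectionError:
        outcomes.append("failed")
clock[0] = 11.0  # pretend the reset window has passed
outcomes.append(breaker.call(lambda: "ok"))
print(outcomes)  # ['failed', 'failed', 'rejected', 'ok']
```

The third call never reaches the failing service, which is the point: an unhealthy dependency gets breathing room, and callers fail fast without tying up resources.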

Handling Failures in Message Processing and State Management

Handling failures in message processing and state management requires a combination of techniques. Message queues often employ acknowledgment mechanisms to ensure that messages are processed successfully. If a message fails to process, it can be moved to a dead-letter queue for investigation and potential reprocessing. State management strategies, such as event sourcing, provide mechanisms for recovering from failures by replaying events from a persistent log.

Implementing transactions or two-phase commit protocols can ensure data consistency across multiple services during state updates. Regular backups and disaster recovery plans are essential to mitigate the impact of major outages.
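The acknowledgment and dead-letter flow can be sketched with an in-memory queue. Here `process_with_dlq` and the message fields are hypothetical, a handler returning normally stands in for an acknowledgment, and real brokers expose retries and dead-lettering through their own configuration.

```python
from collections import deque


def process_with_dlq(messages, handler, max_attempts: int = 3):
    """Deliver each message up to max_attempts times; park persistent failures."""
    queue = deque((message, 1) for message in messages)
    dead_letters = []
    while queue:
        message, attempt = queue.popleft()
        try:
            handler(message)  # returning normally stands in for an ack
        except Exception as exc:
            if attempt >= max_attempts:
                dead_letters.append(
                    {"message": message, "error": str(exc), "attempts": attempt}
                )
            else:
                queue.append((message, attempt + 1))  # redeliver later
    return dead_letters


processed = []


def handler(message: dict) -> None:
    if message["amount"] < 0:
        raise ValueError("negative amount")
    processed.append(message["id"])


dead = process_with_dlq([{"id": 1, "amount": 10}, {"id": 2, "amount": -5}], handler)
print(processed)  # [1]
print(dead[0]["attempts"])  # 3
```

Keeping the error text and attempt count alongside the parked message makes later investigation and manual reprocessing much easier.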

Fault-Tolerant System Diagram

Imagine a diagram showing multiple instances of each microservice (e.g., Order Service, Payment Service, Inventory Service) distributed across multiple availability zones. Each service communicates asynchronously via a distributed message queue (e.g., Kafka cluster) acting as the event bus. Each service instance maintains its own local state, potentially backed by a distributed database (e.g., Cassandra or a replicated relational database) sharded across multiple nodes.

A load balancer distributes incoming requests to the service instances. Monitoring tools track the health of all components, and an automated failover mechanism switches traffic to healthy instances in case of failures. The message queue ensures at-least-once message delivery, and idempotent service designs handle potential message duplicates. A dead-letter queue captures messages that fail processing for later analysis and potential retry.

This architecture ensures high availability and scalability by distributing the workload and providing redundancy. The diagram would visually represent this interconnectedness, showcasing the flow of events and the failover mechanisms in place.

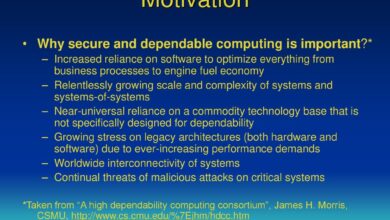

Security Considerations

Stateful event-driven architectures, while offering significant advantages in terms of scalability and responsiveness, introduce unique security challenges. The persistence of state and the continuous flow of events create numerous potential attack vectors that require careful consideration and proactive mitigation strategies. Failing to address these vulnerabilities can lead to data breaches, service disruptions, and significant reputational damage.

Securing these systems requires a multi-layered approach encompassing data protection, robust authentication and authorization mechanisms, and secure coding practices. This approach must account for both data at rest and data in transit, ensuring confidentiality, integrity, and availability throughout the application lifecycle.

Data Security at Rest and in Transit

Protecting data at rest and in transit is paramount. Data at rest, stored in databases or message queues, should be encrypted using strong, industry-standard encryption algorithms like AES-256. Regular key rotation and secure key management practices are essential to minimize the impact of potential compromises. For data in transit, HTTPS should be mandatory for all communication between components.

Furthermore, message queues should utilize secure protocols like TLS to encrypt messages during transmission. Consider implementing intrusion detection and prevention systems to monitor network traffic for suspicious activity and block malicious attempts.

Authentication and Authorization Mechanisms

Authentication verifies the identity of users or services accessing the system, while authorization determines what actions they are permitted to perform. For event-driven systems, robust authentication mechanisms are crucial. This often involves using tokens (JWT, OAuth 2.0) or certificates for mutual authentication between microservices. Authorization can be implemented using role-based access control (RBAC) or attribute-based access control (ABAC), allowing fine-grained control over access to specific events or data.

Centralized authorization services can simplify management and ensure consistency across the system. Consider using strong password policies and multi-factor authentication (MFA) where appropriate.
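A role-based check on event types can be as small as a lookup table. The roles, event types, and the `can_publish` function below are invented for illustration. A real system would load the policy from a central service and authenticate the caller first.

```python
# Hypothetical policy: which roles may publish which event types.
ROLE_PERMISSIONS = {
    "trader": {"OrderPlaced", "OrderCancelled"},
    "auditor": set(),  # read-only role: may not publish anything
    "admin": {"OrderPlaced", "OrderCancelled", "AccountSuspended"},
}


def can_publish(roles: set[str], event_type: str) -> bool:
    """Allow the event if any of the caller's roles grants that event type."""
    return any(event_type in ROLE_PERMISSIONS.get(role, set()) for role in roles)


print(can_publish({"trader"}, "OrderPlaced"))       # True
print(can_publish({"trader"}, "AccountSuspended"))  # False
```

Unknown roles fall through to an empty permission set, so the check denies by default.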

Secure Coding Practices

Secure coding practices are vital for preventing vulnerabilities from being introduced into the application code. This includes input validation and output encoding to prevent injection attacks (SQL injection, cross-site scripting), proper error handling to avoid information leakage, and parameterized queries for all database access. Regular security audits and penetration testing are necessary to identify and address potential vulnerabilities.

Following secure coding guidelines (OWASP, SANS) and utilizing static and dynamic application security testing (SAST/DAST) tools can help automate the process of identifying and mitigating security risks. For example, always validate user input before using it in database queries or event processing logic. Never directly embed user-supplied data into SQL queries; instead, use parameterized queries.
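The parameterized-query advice can be demonstrated with Python's built-in `sqlite3` module. The `users` table and the `find_user` helper are assumptions for the example. The same placeholder idea applies to other database drivers, although the placeholder syntax varies.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])


def find_user(conn: sqlite3.Connection, name: str) -> list[tuple]:
    # The ? placeholder passes the value separately from the SQL text,
    # so it is always treated as data and never parsed as SQL.
    return conn.execute("SELECT id, name FROM users WHERE name = ?", (name,)).fetchall()


malicious = "alice' OR '1'='1"
print(find_user(conn, "alice"))    # [(1, 'alice')]
print(find_user(conn, malicious))  # []
```

Had the query been built with string formatting, the malicious input would have matched every row. With a placeholder it is just an unusual name that matches nothing.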

Testing and Debugging

Testing and debugging stateful event-driven, real-time applications presents unique challenges compared to traditional applications. The asynchronous nature of events, the persistence of state, and the high volume of data necessitate specialized strategies to ensure correctness, reliability, and performance. This section explores effective techniques for testing, debugging, and monitoring these complex systems.

Strategies for Testing Stateful Event-Driven Applications

Testing these applications requires a multifaceted approach. We can’t simply rely on unit tests; integration and end-to-end tests are crucial. Unit tests can verify individual components, such as event handlers or state transition functions, in isolation. However, the real complexities emerge from the interactions between these components and the cumulative effect of numerous events. Therefore, integration tests simulating event sequences are essential.

These tests should cover various scenarios, including normal operation, edge cases, and error handling. End-to-end tests, involving the entire system, validate the overall functionality and data consistency across all components. Consider using tools like Cucumber or similar Behavior Driven Development (BDD) frameworks to streamline the specification and execution of these tests. These frameworks help bridge the communication gap between developers and business stakeholders, ensuring everyone is on the same page regarding system behavior.

Techniques for Debugging Complex Event Streams and State Transitions

Debugging event streams and state transitions requires specialized tools and techniques. Traditional debugging methods often fall short because these systems are asynchronous and distributed. Event stream replay is a powerful technique: it lets you replay a recorded event stream to reproduce a bug in a controlled environment. This replay functionality is often built into event sourcing platforms.

Tools that visualize the event stream and state transitions are invaluable for understanding the flow of data and identifying anomalies. These tools typically display the sequence of events, the resulting state changes, and any errors that occurred. Logging is also critical, with detailed logging of events, state changes, and any relevant context. The logs should be structured and searchable to facilitate efficient debugging.

Consider using a centralized logging system to aggregate logs from different components.

Best Practices for Monitoring and Logging in Real-Time Applications

Real-time applications require continuous monitoring to detect and respond to issues promptly. Metrics such as event processing latency, queue lengths, and error rates should be monitored closely. Alerting mechanisms should be set up to notify operations teams of critical issues. Effective logging is crucial for debugging and troubleshooting. Logs should include timestamps, event IDs, relevant context, and error messages.

Structured logging, using formats like JSON, enables efficient searching and analysis of logs. A centralized logging system, such as the ELK stack (Elasticsearch, Logstash, Kibana), provides a scalable and searchable solution for managing large volumes of logs. Consider using distributed tracing to track requests across multiple services. This helps to pinpoint performance bottlenecks and identify the root cause of errors.

Real-time dashboards, providing visualizations of key metrics, aid in quick identification of problems.
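Structured JSON logging needs only the standard library. In the sketch below, the `JsonFormatter` class, the `orders` logger name, and the `event_id` and `latency_ms` fields are assumptions chosen to match the fields mentioned above.

```python
import json
import logging
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "timestamp": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname,
            "message": record.getMessage(),
            # Hypothetical context fields, supplied through the `extra` argument.
            "event_id": getattr(record, "event_id", None),
            "latency_ms": getattr(record, "latency_ms", None),
        }
        return json.dumps(payload)


logger = logging.getLogger("orders")
handler = logging.StreamHandler()
handler.setFormatter(JsonFormatter())
logger.addHandler(handler)
logger.setLevel(logging.INFO)

logger.info("event processed", extra={"event_id": "evt-42", "latency_ms": 12.5})
```

Because every line is a self-contained JSON object, a log aggregator can index `event_id` and `latency_ms` directly instead of parsing free text.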

Testing Approach for Ensuring Data Consistency and Accuracy

Data consistency and accuracy are paramount in stateful event-driven applications. Testing should focus on verifying that the system’s state accurately reflects the sequence of events processed. This often involves comparing the system’s state with an expected state calculated independently. Techniques like snapshot testing can capture the system’s state at specific points and compare it against expected snapshots.

Another approach is to employ checksums or hash functions to verify data integrity. These techniques can detect data corruption or inconsistencies. Regular data audits and reconciliation processes are also important to ensure data accuracy and consistency over time. These audits can involve comparing the system’s state with external data sources or conducting manual checks. Furthermore, the use of idempotent event handlers is crucial.

Idempotent handlers guarantee that reprocessing an event multiple times will not alter the system’s state beyond the effect of a single processing.
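Comparing the system's state with an independently computed expectation can be combined with hashing, as sketched below. The inventory reducer `apply_events` and the event fields are hypothetical and serve only to give the check something to verify.

```python
import hashlib
import json


def state_digest(state: dict) -> str:
    """Hash a canonical JSON encoding so that equal states give equal digests."""
    canonical = json.dumps(state, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def apply_events(events: list[dict]) -> dict:
    """Hypothetical inventory reducer used only to illustrate the check."""
    stock: dict[str, int] = {}
    for event in events:
        stock[event["sku"]] = stock.get(event["sku"], 0) + event["delta"]
    return stock


events = [{"sku": "a", "delta": 5}, {"sku": "b", "delta": 2}, {"sku": "a", "delta": -1}]
expected = {"b": 2, "a": 4}  # worked out by hand, independently of apply_events

matches = state_digest(apply_events(events)) == state_digest(expected)
print(matches)  # True
```

Sorting keys before hashing matters: without a canonical encoding, two equal states built in a different order could produce different digests and trigger false alarms.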

Final Conclusion

Building stateful event-driven real-time applications is a rewarding but demanding endeavor. It requires a deep understanding of distributed systems, data processing techniques, and security best practices. While the initial complexity might seem daunting, the resulting systems are incredibly powerful and scalable, capable of handling massive data volumes and reacting to events in real-time. By mastering the concepts and techniques discussed here, you’ll be well-equipped to create applications that are not only responsive but also resilient and secure, opening up a world of possibilities for innovative and dynamic solutions.

Frequently Asked Questions

What are the biggest challenges in developing stateful event-driven applications?

Maintaining data consistency across distributed systems, handling failures gracefully, and ensuring scalability are major hurdles. Debugging can also be significantly more complex than in traditional applications.

How do I choose the right messaging system for my application?

The best messaging system depends on your specific needs. Consider factors like throughput, message ordering requirements, fault tolerance, and the overall complexity of your application. Kafka is popular for high-throughput scenarios, while RabbitMQ is often preferred for its ease of use.

What are some common security vulnerabilities in these systems?

Common vulnerabilities include unauthorized access to event streams, data breaches due to insecure storage, and denial-of-service attacks targeting message brokers. Robust authentication, authorization, and data encryption are crucial.

How do I test a stateful event-driven application effectively?

Testing involves simulating event streams and verifying the application’s state transitions. Techniques like event replay and mocking external services are essential. Comprehensive monitoring and logging are also critical for debugging and identifying issues.