Developing the Data You Need for On-Demand Testing

Developing the data you need for on-demand testing is more than just pulling numbers from a database; it’s about crafting the perfect digital replica of your system’s reality. Think of it as building a miniature, perfectly functioning version of your entire operation, ready to be stressed, tested, and analyzed at a moment’s notice. This allows for rapid iteration and feedback, saving time and resources in the long run.

This post dives deep into the strategies and considerations involved in creating this essential digital twin.

We’ll explore everything from identifying your testing needs and choosing appropriate data sources to mastering data transformation, implementing robust storage solutions, and ensuring the utmost security and compliance. We’ll even walk through a real-world example, using a financial transaction system to illustrate the practical application of these concepts. Get ready to level up your testing game!

Defining “On-Demand Testing” Needs

On-demand testing requires a paradigm shift in how we approach data management for software testing. Instead of relying on pre-prepared, static datasets, it necessitates the ability to generate or access the specific data needed at the precise moment a test is executed. This approach is crucial for maximizing test efficiency and ensuring relevant test coverage, especially in dynamic environments. On-demand data generation is not merely about speed; it’s about the ability to tailor data characteristics to match specific test scenarios, mimicking real-world conditions and edge cases.

This contrasts sharply with traditional testing methods that often rely on generic or limited datasets, leading to potential gaps in test coverage and reduced confidence in the system’s overall reliability.

Characteristics of Systems Requiring On-Demand Data

Systems that benefit most from on-demand testing are those characterized by high data volatility, complex data dependencies, and a need for highly customized test scenarios. For instance, a real-time financial trading platform needs to simulate a massive influx of transactions with varying parameters (e.g., trade volume, asset types, market conditions) to thoroughly test its performance and stability under pressure.

Similarly, a personalized recommendation engine requires on-demand data to test the accuracy and effectiveness of its algorithms based on various user profiles and preferences. The key is the system’s need for data that reflects the dynamic nature of its operational environment.

Types of On-Demand Testing Scenarios and Their Data Requirements

Several testing scenarios demand on-demand data generation capabilities. Performance testing, for example, requires generating massive datasets to stress the system under realistic load conditions. This involves creating synthetic data that accurately reflects the expected volume, velocity, and variety of real-world data. Functional testing, on the other hand, might require smaller, precisely crafted datasets to verify specific functionalities or edge cases.

Security testing needs data that can simulate malicious attacks or exploit vulnerabilities, requiring data with carefully crafted patterns and anomalies. Finally, integration testing needs data to verify the smooth interaction between different system components, demanding data that accurately represents the interface between them.

Key Performance Indicators (KPIs) for Successful On-Demand Testing

The success of on-demand testing hinges on several KPIs. Data generation speed is paramount, as delays can significantly impact testing cycles. Cost-effectiveness is crucial, as on-demand data generation can be resource-intensive. Data quality is non-negotiable; inaccurate or inconsistent data can lead to unreliable test results. Finally, test coverage, reflecting the extent to which the on-demand data effectively exercises different aspects of the system, is a critical indicator of comprehensive testing.

Comparison of Data Generation Methods

The choice of data generation method depends on the specific needs of the testing scenario. Below is a comparison of common methods, considering speed, cost, and data quality:

| Method | Speed | Cost | Data Quality |
|---|---|---|---|
| Manual Data Entry | Very Slow | High | High (if done carefully) |
| Data Copying from Production | Moderate | Low (if permitted) | High (but potentially sensitive) |
| Using Test Data Management Tools | Fast | Moderate to High | High |
| Automated Data Generation Scripts | Fast | Low | Moderate to High (depending on script complexity) |

Data Sources and Acquisition Strategies

On-demand testing hinges on having the right data readily available. The efficiency and accuracy of your testing directly correlate with the quality and accessibility of your data sources and the strategies employed to acquire them. This section dives into the various options available and the best practices for data acquisition and validation. Data sources for on-demand testing are diverse, reflecting the complexity of modern software systems.

Efficient data acquisition requires a well-defined strategy that considers the nature of the data, its volume, and the speed at which it needs to be accessed. Validation is crucial to ensure the reliability of test results.

Database Integration

Databases, whether relational (like MySQL or PostgreSQL) or NoSQL (like MongoDB or Cassandra), are frequently used data sources for on-demand testing. They provide structured data, allowing for precise querying and retrieval of specific data subsets needed for targeted tests. Efficient extraction involves using optimized SQL queries or database-specific APIs to retrieve only the necessary data, minimizing latency and improving performance.

Data validation involves checking for data integrity, consistency, and completeness against pre-defined rules or schema definitions. For example, a database holding customer order information could be queried to retrieve orders placed within a specific timeframe, with validation ensuring that order IDs are unique and that associated customer data is consistent.
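The customer order example can be sketched with Python's built-in `sqlite3` module. This is a minimal illustration, not a prescribed implementation: the `orders` and `customers` tables, their columns, and the sample rows are all assumptions made up for the demo. The query pulls only the orders in the requested window, and the validator reports duplicate order IDs and orders that point at a customer that does not exist.

```python
import sqlite3

def fetch_orders(conn, start, end):
    """Return orders placed in [start, end), joined with their customer rows."""
    query = """
        SELECT o.order_id, o.customer_id, o.placed_on, c.customer_id
        FROM orders AS o
        LEFT JOIN customers AS c ON c.customer_id = o.customer_id
        WHERE o.placed_on >= ? AND o.placed_on < ?
        ORDER BY o.placed_on
    """
    return conn.execute(query, (start, end)).fetchall()

def validate_orders(rows):
    """Collect problems instead of raising, so one run reports all of them."""
    problems = []
    seen = set()
    for order_id, customer_id, _placed_on, known_customer in rows:
        if order_id in seen:
            problems.append(f"duplicate order_id {order_id}")
        seen.add(order_id)
        if known_customer is None:
            problems.append(
                f"order {order_id} references unknown customer {customer_id}"
            )
    return problems

# Hypothetical schema and rows, created in memory purely for illustration.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (order_id INTEGER, customer_id INTEGER, placed_on TEXT);
    INSERT INTO customers VALUES (1, 'Test Customer A'), (2, 'Test Customer B');
    INSERT INTO orders VALUES
        (100, 1, '2024-10-01'),
        (101, 2, '2024-10-15'),
        (101, 2, '2024-10-16'),
        (102, 9, '2024-10-20'),
        (103, 1, '2024-11-02');
""")

rows = fetch_orders(conn, "2024-10-01", "2024-11-01")
problems = validate_orders(rows)
for problem in problems:
    print(problem)
```

Filtering in SQL keeps the November order out of the result entirely, so only the data the test needs crosses the connection.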

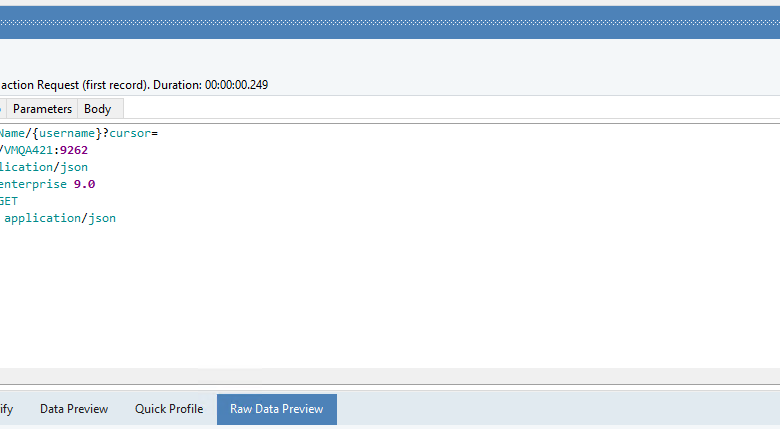

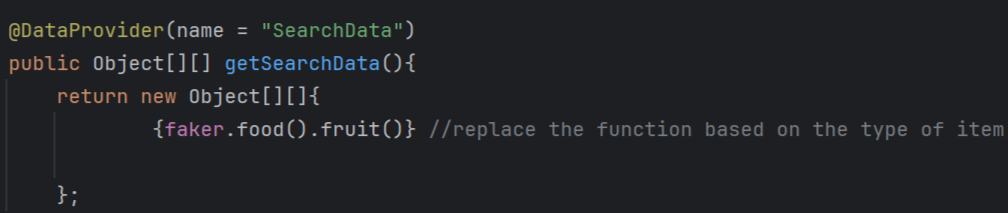

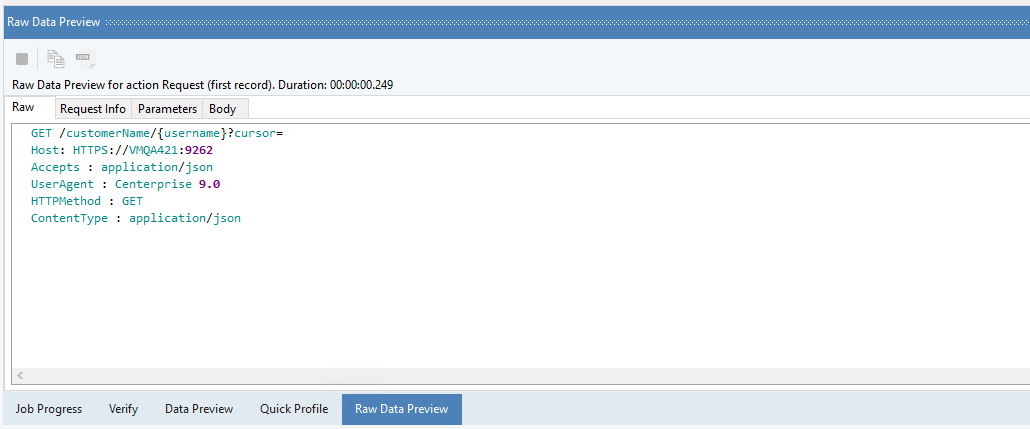

API Data Acquisition

Many modern applications expose their data through APIs (Application Programming Interfaces). These APIs offer a standardized way to access and manipulate data, making them ideal for on-demand testing. Efficient extraction techniques involve utilizing API clients and libraries to automate data retrieval. Error handling and rate limiting are crucial considerations. Data validation in this context might involve verifying the API response structure, checking for expected data types and ranges, and comparing retrieved data against known values or expected patterns.

For example, an e-commerce platform’s API could be used to retrieve product details, with validation confirming that prices are positive and product descriptions are within the expected length.
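A rough sketch of the two concerns above, retrying on rate limits and validating the response, might look like the following. Everything named here is hypothetical: `RateLimited` stands in for whatever your HTTP client raises on a 429 response, `fetch` is any zero-argument callable that performs the request, and the 500-character description limit is an arbitrary assumption.

```python
import time

class RateLimited(Exception):
    """Hypothetical error raised by the client when the API rate-limits us."""

def fetch_with_retry(fetch, max_attempts=4, base_delay=0.5, sleep=time.sleep):
    """Call fetch(), backing off exponentially when the API rate-limits."""
    for attempt in range(max_attempts):
        try:
            return fetch()
        except RateLimited:
            if attempt == max_attempts - 1:
                raise  # give up after the final attempt
            sleep(base_delay * 2 ** attempt)

def validate_product(product, max_description_length=500):
    """Check response structure, field types, and value ranges."""
    errors = []
    expected = (("id", int), ("price", (int, float)), ("description", str))
    for field, expected_type in expected:
        if field not in product:
            errors.append(f"missing field: {field}")
        elif isinstance(product[field], bool) or not isinstance(
            product[field], expected_type
        ):
            errors.append(f"wrong type for {field}")
    if not errors:
        if product["price"] <= 0:
            errors.append("price must be positive")
        if len(product["description"]) > max_description_length:
            errors.append("description too long")
    return errors

# Demo with a fake fetcher that is rate-limited twice before succeeding.
calls = {"count": 0}

def fake_fetch():
    calls["count"] += 1
    if calls["count"] < 3:
        raise RateLimited()
    return {"id": 7, "price": 19.99, "description": "Sample widget"}

delays = []  # record the back-off delays instead of actually sleeping
product = fetch_with_retry(fake_fetch, sleep=delays.append)
print(delays, validate_product(product))
```

Passing `sleep` as a parameter keeps the retry logic testable without real waiting.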

Simulation and Synthetic Data Generation

When real data is unavailable or insufficient, simulation and synthetic data generation become vital. Simulations can model complex real-world scenarios, providing data representative of typical system behavior. Synthetic data generation tools create realistic but artificial data, useful for testing edge cases or privacy-sensitive scenarios. The efficiency of this method depends on the sophistication of the simulation or data generation algorithm.

Validation involves verifying that the generated data conforms to predefined statistical distributions and reflects the characteristics of real-world data, often through statistical analysis. For instance, a simulation could generate network traffic patterns for testing network performance, with validation ensuring that the generated traffic volume and patterns align with realistic network behavior.

Real-Time Data Acquisition vs. Pre-generated Datasets

Real-time data acquisition provides the most up-to-date information, mirroring live system conditions. This is ideal for testing responsiveness and handling of unexpected events. However, it can be more complex to implement and manage, requiring robust data streaming and processing capabilities. Pre-generated datasets, on the other hand, are easier to manage and control, providing consistent data for repeatable tests.

They are less susceptible to fluctuations and external factors affecting real-time data. The choice depends on the testing objectives. For instance, a test focusing on system stability under high load might benefit from pre-generated data representing peak usage, while testing a new feature’s integration with a live data stream would require real-time data acquisition.

Data Validation Process

A robust data validation process is essential to ensure the accuracy and reliability of on-demand testing. This typically involves multiple steps:

- Schema Validation: Verifying that the data conforms to the expected structure and data types.

- Data Completeness Checks: Ensuring that all required fields are populated.

- Data Consistency Checks: Verifying that data relationships are correct and consistent across different sources.

- Range and Validity Checks: Confirming that data values fall within acceptable ranges and meet predefined criteria.

- Cross-referencing and Reconciliation: Comparing data from multiple sources to identify discrepancies.

Implementing automated validation checks, using tools and scripting, is crucial for efficient and reliable data validation within an on-demand testing environment.
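The checks listed above can be automated with a small amount of scripting. The sketch below is one possible shape, assuming a hypothetical order record with `order_id`, `customer_id`, `amount`, and `currency` fields; the schema, the allowed currencies, and the function names are illustrative choices rather than a standard.

```python
SCHEMA = {"order_id": int, "customer_id": int, "amount": float, "currency": str}
REQUIRED = ("order_id", "customer_id", "amount")
ALLOWED_CURRENCIES = {"USD", "EUR"}

def validate_record(record, known_customers):
    """Run completeness, schema, range, and consistency checks on one record."""
    issues = []
    # Completeness: every required field must be populated.
    for field in REQUIRED:
        if record.get(field) is None:
            issues.append(f"completeness: {field} is missing")
    # Schema: populated fields must have the expected type.
    for field, expected in SCHEMA.items():
        value = record.get(field)
        if value is not None and not isinstance(value, expected):
            issues.append(f"schema: {field} should be {expected.__name__}")
    # Range and validity: values must meet predefined criteria.
    amount = record.get("amount")
    if isinstance(amount, float) and amount <= 0:
        issues.append("range: amount must be positive")
    currency = record.get("currency")
    if isinstance(currency, str) and currency not in ALLOWED_CURRENCIES:
        issues.append(f"range: currency {currency} is not allowed")
    # Consistency: the record must reference a customer that exists.
    customer_id = record.get("customer_id")
    if isinstance(customer_id, int) and customer_id not in known_customers:
        issues.append(f"consistency: unknown customer_id {customer_id}")
    return issues

def reconcile(source_a, source_b, key="order_id"):
    """Cross-reference two sources: keys found in only one of them."""
    keys_a = {row[key] for row in source_a}
    keys_b = {row[key] for row in source_b}
    return sorted(keys_a - keys_b), sorted(keys_b - keys_a)

good = {"order_id": 1, "customer_id": 10, "amount": 25.0, "currency": "USD"}
bad = {"order_id": "2", "customer_id": 99, "amount": -5.0, "currency": "XXX"}

print(validate_record(good, known_customers={10}))
print(validate_record(bad, known_customers={10}))
print(reconcile([{"order_id": 1}, {"order_id": 2}],
                [{"order_id": 2}, {"order_id": 3}]))
```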

Data Transformation and Preparation

Getting your data ready for on-demand testing is crucial. Raw data is rarely in a format suitable for immediate use; it often contains inconsistencies, missing values, and irrelevant information. This phase involves cleaning, transforming, and potentially synthesizing your data to create a reliable and efficient testing environment. The goal is to produce a dataset that accurately reflects the characteristics of your real-world data, but is also optimized for your specific testing needs.

Data Cleaning Techniques

Data cleaning is the first step, addressing issues like missing values, outliers, and inconsistencies. Missing values can be handled through imputation (replacing missing values with estimated values based on other data points), removal of rows or columns with excessive missing data, or using advanced techniques like multiple imputation. Outliers, data points significantly different from the rest, can be identified using statistical methods (e.g., box plots, Z-scores) and handled through removal, transformation (e.g., logarithmic transformation), or winsorization (capping outliers at a certain percentile).

Inconsistencies, such as variations in data formats or spelling, require careful review and standardization. For example, inconsistent date formats (e.g., MM/DD/YYYY, DD/MM/YYYY) need to be unified.
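Three of the cleaning techniques described above (median imputation, winsorization, and date unification) fit in a few lines of standard-library Python. This is a simplified sketch: the 5th and 95th percentile caps and the helper names are assumptions, and real pipelines often use a dataframe library instead.

```python
import statistics
from datetime import datetime

def impute_median(values):
    """Replace missing values (None) with the median of the known values."""
    known = [v for v in values if v is not None]
    median = statistics.median(known)
    return [median if v is None else v for v in values]

def winsorize(values, lower_pct=5, upper_pct=95):
    """Cap values below/above the given percentiles instead of dropping them."""
    cuts = statistics.quantiles(values, n=100, method="inclusive")
    low, high = cuts[lower_pct - 1], cuts[upper_pct - 1]
    return [min(max(v, low), high) for v in values]

def to_iso_date(text, source_format):
    """Convert a date string in a known source format to YYYY-MM-DD."""
    return datetime.strptime(text, source_format).date().isoformat()

print(impute_median([10, None, 30, 20]))
print(winsorize([1, 2, 3, 4, 5, 6, 7, 8, 9, 1000]))
print(to_iso_date("10/27/2024", "%m/%d/%Y"))
print(to_iso_date("27/10/2024", "%d/%m/%Y"))
```

Note that `to_iso_date` takes the source format explicitly. A string such as 03/04/2024 is ambiguous between MM/DD/YYYY and DD/MM/YYYY, so the format has to come from knowledge of the source rather than from guessing per value.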

Data Normalization and Standardization

Normalization scales data to a specific range, often between 0 and 1, while standardization transforms data to have a mean of 0 and a standard deviation of 1. These techniques are essential for algorithms sensitive to scale, such as machine learning models. For example, if you have features with vastly different scales (e.g., age in years and income in dollars), normalization or standardization prevents features with larger values from dominating the model.

A common normalization technique is min-max scaling:

$$x_{\text{normalized}} = \frac{x - x_{\min}}{x_{\max} - x_{\min}}$$

where $x$ is the original value, $x_{\min}$ is the minimum value, and $x_{\max}$ is the maximum value. Standardization uses the Z-score:

$$z = \frac{x - \mu}{\sigma}$$

where $x$ is the original value, $\mu$ is the mean, and $\sigma$ is the standard deviation.
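Both formulas translate directly into code. The sketch below uses only the standard library; returning zeros for a constant column is a convention chosen here to avoid dividing by zero, not something the formulas dictate.

```python
import statistics

def min_max_scale(values):
    """Scale values into [0, 1] using (x - min) / (max - min)."""
    low, high = min(values), max(values)
    if high == low:
        return [0.0 for _ in values]  # constant column: avoid division by zero
    return [(v - low) / (high - low) for v in values]

def standardize(values):
    """Transform values to mean 0 and standard deviation 1 via z-scores."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)  # population standard deviation
    if std == 0:
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]

ages = [20, 30, 40]
scaled = min_max_scale(ages)
z_scores = standardize(ages)
print(scaled)
print(z_scores)
```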

Synthetic Data Generation

When real data is scarce, insufficient, or contains sensitive information, creating synthetic data is a viable solution. Several methods exist, including statistical modeling (e.g., generating data based on distributions fitted to the real data), rule-based generation (creating data based on predefined rules and patterns), and generative adversarial networks (GANs), a deep learning technique that generates realistic synthetic data. For example, if you need to test a system’s response to rare events, you can use synthetic data generation to create a larger sample of those events.

The key is to ensure that the synthetic data accurately reflects the statistical properties and relationships present in the real data, while protecting sensitive information.
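As an illustration of the statistical-modeling approach, the sketch below fits a normal distribution to a small sample and draws synthetic values from it. The sample values are invented, and assuming the data is roughly normal is a strong simplification; real data often needs a different distribution or a model that captures relationships between columns.

```python
import random
import statistics

def fit_normal(sample):
    """Estimate the mean and standard deviation of the real sample."""
    return statistics.fmean(sample), statistics.stdev(sample)

def generate_synthetic(mean, std, count, seed=0, minimum=0.0):
    """Draw synthetic values from the fitted distribution.

    Values are clipped at `minimum`, which slightly biases the result when
    the distribution has noticeable mass below that floor.
    """
    rng = random.Random(seed)  # seeded so test runs are repeatable
    return [max(minimum, rng.gauss(mean, std)) for _ in range(count)]

# Invented stand-in for a small sample of real transaction amounts.
real_amounts = [12.5, 40.0, 33.2, 27.9, 51.3, 19.8, 45.1, 30.4]
mean, std = fit_normal(real_amounts)
synthetic = generate_synthetic(mean, std, count=5000)

# Validate: the synthetic data should reproduce the fitted statistics.
print(round(mean, 2), round(statistics.fmean(synthetic), 2))
print(round(std, 2), round(statistics.stdev(synthetic), 2))
```

Comparing summary statistics is only a first check. Distribution tests and checks on correlations between fields give more confidence that the synthetic set behaves like the original.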

Data Anonymization and Security

Protecting sensitive data is paramount. Data anonymization techniques, such as removing identifying information (e.g., names, addresses, social security numbers), generalization (e.g., replacing specific values with broader ranges), and data perturbation (e.g., adding noise to data values), are crucial. Furthermore, access control mechanisms, encryption, and secure storage practices are essential to protect data during the entire lifecycle, from acquisition to disposal.

Implementing robust security protocols is vital to comply with data privacy regulations and maintain the confidentiality, integrity, and availability of your data.
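The three anonymization techniques named above can be sketched as follows. The field names, the ten-year age bands, and the noise scale are assumptions for the example. These steps reduce exposure but do not by themselves guarantee that a record cannot be re-identified.

```python
import random

DIRECT_IDENTIFIERS = {"name", "address", "ssn"}

def drop_identifiers(record):
    """Removal: strip fields that identify a person directly."""
    return {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}

def generalize_age(age, band=10):
    """Generalization: replace an exact age with a range such as '30-39'."""
    low = (age // band) * band
    return f"{low}-{low + band - 1}"

def perturb(value, scale, rng):
    """Perturbation: add bounded random noise to a numeric value."""
    return round(value + rng.uniform(-scale, scale), 2)

def anonymize(record, rng):
    result = drop_identifiers(record)
    result["age"] = generalize_age(result["age"])
    result["income"] = perturb(result["income"], scale=500, rng=rng)
    return result

record = {
    "name": "Jane Example",
    "ssn": "000-00-0000",
    "age": 37,
    "income": 52000.0,
}
anonymized = anonymize(record, random.Random(42))
print(anonymized)
```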

Data Management and Storage

Efficient data management is the backbone of any successful on-demand testing strategy. Without a robust system for storing, retrieving, and managing your test data, your ability to perform rapid, reliable testing will be severely hampered. This section explores various data storage solutions and outlines strategies for building a highly effective data management system specifically designed for on-demand testing needs. Choosing the right data storage solution involves careful consideration of factors like scalability, cost, access speed, and security.

The ideal solution will depend heavily on the volume and type of data you’re working with, as well as your specific testing requirements.

Cloud Storage Solutions

Cloud storage offers several advantages for on-demand testing. Services like AWS S3, Azure Blob Storage, and Google Cloud Storage provide scalable, cost-effective solutions for storing large volumes of test data. Data can be accessed quickly from anywhere with an internet connection, making it ideal for geographically distributed teams. Furthermore, cloud providers often offer robust security features, including encryption and access control lists, to protect your sensitive data.

However, reliance on a third-party provider introduces potential vendor lock-in and concerns about data sovereignty and compliance with specific regulations. Cost can also escalate rapidly with significant data volumes and frequent access. For example, a company using AWS S3 might find that their storage costs increase substantially as their test data grows beyond a certain threshold.

Local Database Solutions

Local databases, such as PostgreSQL, MySQL, or MongoDB, offer a more controlled environment for managing test data. They provide faster access speeds compared to cloud storage for smaller datasets and offer the benefit of greater control over data security and compliance. However, local databases require dedicated server infrastructure, increasing upfront costs and ongoing maintenance responsibilities. Scalability can also be a challenge, requiring significant investment in hardware upgrades as data volumes grow.

For instance, a company using a local MySQL database might need to invest in a more powerful server to handle increased testing data loads. This can lead to significant capital expenditure and potential downtime during upgrades.

Data Retrieval and Management System Design

An efficient data retrieval and management system should prioritize speed and ease of access. A well-designed system might incorporate features like data cataloging, metadata management, and search capabilities. This allows testers to quickly locate and access the specific data they need for their tests, minimizing wait times and improving overall efficiency. Implementing a robust API is crucial for integrating the data management system with the testing framework, allowing for automated data retrieval and updates.

For example, a system might use a REST API to allow test automation scripts to easily fetch relevant datasets based on specific test criteria.
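The cataloging and search idea can be illustrated with an in-memory sketch. The `DatasetCatalog` class, its tag-based lookup, and the storage locations below are all hypothetical; a production system would typically put a REST endpoint in front of a lookup like this.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class DatasetEntry:
    name: str
    location: str      # where the data lives (hypothetical paths below)
    tags: frozenset    # metadata used for searching
    row_count: int

class DatasetCatalog:
    """Minimal catalog: register datasets, then find them by metadata."""

    def __init__(self):
        self._entries = {}

    def register(self, entry):
        self._entries[entry.name] = entry

    def find(self, required_tags, max_rows=None):
        """Return entries carrying all required tags, optionally size-limited."""
        required = frozenset(required_tags)
        matches = [
            entry for entry in self._entries.values()
            if required <= entry.tags
            and (max_rows is None or entry.row_count <= max_rows)
        ]
        return sorted(matches, key=lambda entry: entry.name)

catalog = DatasetCatalog()
catalog.register(DatasetEntry(
    "payments_small", "store://test-data/payments_small.csv",
    frozenset({"payments", "masked"}), 1_000))
catalog.register(DatasetEntry(
    "payments_load", "store://test-data/payments_load.csv",
    frozenset({"payments", "masked", "load-test"}), 5_000_000))
catalog.register(DatasetEntry(
    "users_edge_cases", "store://test-data/users_edge.csv",
    frozenset({"users", "edge-cases"}), 200))

found = catalog.find({"payments", "masked"}, max_rows=10_000)
print([entry.name for entry in found])
```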

Version Control and Data Lineage Tracking

Maintaining data integrity and traceability is crucial for debugging and ensuring test results are reliable. Implementing version control for test data allows for easy rollback to previous versions if needed, minimizing the impact of errors or unintended changes. Data lineage tracking provides a complete history of data transformations and modifications, facilitating auditability and reproducibility of test results. This could involve logging every data modification, including timestamps and user information, creating a comprehensive audit trail.
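One lightweight way to approximate versioning and lineage is to identify each dataset snapshot by a hash of its content and append an audit entry per change. The class below is a sketch under that assumption, kept in memory for brevity; the names are made up, and dedicated data versioning tools offer far more.

```python
import hashlib
import json
from datetime import datetime, timezone

class VersionedDataset:
    """Stores immutable snapshots keyed by content hash, plus an audit trail."""

    def __init__(self):
        self._versions = {}
        self.history = []

    def commit(self, rows, user, note):
        """Snapshot the rows and log who changed what, and when."""
        payload = json.dumps(rows, sort_keys=True).encode("utf-8")
        version = hashlib.sha256(payload).hexdigest()[:12]
        self._versions[version] = payload
        parent = self.history[-1]["version"] if self.history else None
        self.history.append({
            "version": version,
            "parent": parent,
            "user": user,
            "note": note,
            "timestamp": datetime.now(timezone.utc).isoformat(),
        })
        return version

    def checkout(self, version):
        """Return a fresh copy of the rows stored under a version."""
        return json.loads(self._versions[version])

dataset = VersionedDataset()
v1 = dataset.commit([{"id": 1, "amount": 10}], user="ana", note="initial load")
v2 = dataset.commit([{"id": 1, "amount": 12}], user="ben", note="fix amount")

rolled_back = dataset.checkout(v1)  # roll back to the earlier snapshot
print(v1, v2, rolled_back)
```

Because the version ID is derived from the content, committing identical rows twice yields the same ID, which makes it easy to tell whether two test runs used the same data.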

Comparison of Storage Options

The following table summarizes the advantages and disadvantages of cloud and local database solutions for on-demand testing:

| Feature | Cloud Storage | Local Database |
|---|---|---|
| Scalability | High | Moderate to Low |
| Cost | Variable, can be high for large datasets | High upfront costs, lower ongoing costs for smaller datasets |
| Access Speed | Moderate | High |
| Security | High, dependent on provider’s security measures | High, dependent on internal security measures |
| Maintenance | Low (managed by provider) | High (requires dedicated IT resources) |

Data Security and Compliance

On-demand testing, while offering significant advantages in speed and efficiency, introduces new challenges related to data security and compliance. The ease of access to potentially sensitive data necessitates robust security measures and a rigorous approach to regulatory compliance to prevent breaches and maintain data integrity. Failing to address these concerns can lead to severe legal repercussions, reputational damage, and financial losses.

Potential Security Risks Associated with On-Demand Data Access

The accessibility inherent in on-demand testing creates several security vulnerabilities. Unauthorized access, data breaches, and insider threats become more likely when data is readily available. Improperly configured access controls, insufficient data encryption, and a lack of robust authentication mechanisms can all contribute to significant risks. For instance, a poorly secured API endpoint providing on-demand data could be exploited by malicious actors to gain unauthorized access to sensitive customer information.

Furthermore, the increased volume of data accessed during on-demand testing can strain existing security infrastructure, creating potential weaknesses that attackers can exploit.

Security Measures to Protect Sensitive Data During On-Demand Testing

Protecting sensitive data during on-demand testing requires a multi-layered approach. This includes implementing strong authentication and authorization mechanisms, such as multi-factor authentication (MFA) and role-based access control (RBAC). Data encryption, both in transit and at rest, is crucial to protect data from unauthorized access even if a breach occurs. Regular security audits and penetration testing are essential to identify and address vulnerabilities proactively.

Data masking and anonymization techniques can be used to protect sensitive data elements while still allowing for meaningful testing. Finally, robust logging and monitoring systems are necessary to detect and respond to suspicious activity promptly. A well-defined incident response plan is crucial to minimize the impact of any security incidents.
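Role-based access control combined with access logging can be sketched in a few lines. The roles, permission strings, and logger name here are invented for the example; in practice these checks usually live in an identity provider or the data platform itself rather than in test code.

```python
import logging

# Hypothetical role-to-permission mapping for a test data service.
ROLE_PERMISSIONS = {
    "tester": {"read:masked"},
    "data_engineer": {"read:masked", "read:raw", "write"},
}

class AccessDenied(Exception):
    pass

access_log = logging.getLogger("testdata.access")

def authorize(user, role, permission):
    """Allow the action only if the role grants it; log every decision."""
    allowed = permission in ROLE_PERMISSIONS.get(role, set())
    access_log.info(
        "user=%s role=%s permission=%s allowed=%s",
        user, role, permission, allowed,
    )
    if not allowed:
        raise AccessDenied(f"{user} ({role}) may not {permission}")

authorize("ana", "tester", "read:masked")  # permitted, returns quietly

try:
    authorize("ana", "tester", "read:raw")
    denied = False
except AccessDenied as error:
    denied = True
    print(error)
```

Logging denied and granted requests alike gives the monitoring system the trail it needs to spot unusual access patterns.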

Compliance with Relevant Data Privacy Regulations

Adhering to data privacy regulations like GDPR and CCPA is paramount. These regulations mandate specific requirements for data handling, including consent management, data minimization, and the right to be forgotten. Compliance requires a thorough understanding of the applicable regulations and the implementation of appropriate technical and organizational measures. This includes documenting data processing activities, conducting data protection impact assessments (DPIAs) for high-risk processing activities, and establishing clear data retention policies.

For example, ensuring that all data subjects have the ability to exercise their rights under GDPR, such as the right to access, rectify, or erase their personal data, is crucial. Regular audits and assessments should be conducted to verify ongoing compliance.

Checklist for Ensuring Data Security and Compliance Throughout the On-Demand Testing Lifecycle

Prior to commencing on-demand testing, a comprehensive checklist should be implemented to ensure data security and compliance.

- Identify and classify all data used in on-demand testing, categorizing it based on sensitivity levels.

- Implement strong authentication and authorization mechanisms, including MFA and RBAC.

- Encrypt all data both in transit and at rest using industry-standard encryption algorithms.

- Conduct regular security audits and penetration testing to identify and mitigate vulnerabilities.

- Implement data masking and anonymization techniques where appropriate.

- Establish robust logging and monitoring systems to detect and respond to suspicious activity.

- Develop and regularly test an incident response plan.

- Ensure compliance with all relevant data privacy regulations, such as GDPR and CCPA.

- Document all data processing activities and conduct DPIAs where necessary.

- Establish clear data retention policies and procedures.

Illustrative Example: On-Demand Testing for a Financial Transaction System

On-demand testing in a financial transaction system requires a robust and realistic data set to effectively simulate real-world scenarios. This allows testers to identify vulnerabilities and ensure the system’s resilience under pressure, minimizing the risk of costly errors and security breaches. The following example illustrates the data requirements, transformation steps, and crucial security considerations.

Data Requirements for On-Demand Testing

To effectively test a financial transaction system, we need a diverse dataset encompassing various transaction types, account details, and associated metadata. This data should reflect the system’s complexity and volume of real-world transactions. The data should be anonymized to protect sensitive information while maintaining its representative nature.

Sample Transaction Data

The table below shows a sample of the data required. Note that this is a simplified example and a real-world system would require a significantly larger and more comprehensive dataset.

| Transaction ID | Transaction Type | Account Number (Debtor) | Account Number (Creditor) | Amount | Transaction Date | Currency | Status |
|---|---|---|---|---|---|---|---|
| 12345 | Deposit | 1111222233334444 | NULL | 1000.00 | 2024-10-27 | USD | Success |
| 67890 | Withdrawal | 1111222233334444 | NULL | 500.00 | 2024-10-27 | USD | Success |
| 13579 | Transfer | 1111222233334444 | 5555666677778888 | 250.00 | 2024-10-27 | USD | Success |
| 24680 | Payment | 1111222233334444 | 9999000011112222 | 100.00 | 2024-10-27 | EUR | Pending |
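A larger dataset with the same shape as the sample table can be produced by a small generation script. The sketch below is one way to do it. The value ranges, the pool of five accounts, and the one-week date window are arbitrary assumptions, and the account numbers are random digits rather than valid account identifiers.

```python
import random
from datetime import date, timedelta

TRANSACTION_TYPES = ("Deposit", "Withdrawal", "Transfer", "Payment")

def fake_account(rng):
    """Random 16-digit string; not a real or checksum-valid account number."""
    return "".join(rng.choice("0123456789") for _ in range(16))

def generate_transactions(count, seed=0, start=date(2024, 10, 27)):
    """Generate transactions with the same columns as the sample table."""
    rng = random.Random(seed)  # seeded so the same data can be regenerated
    accounts = []
    while len(accounts) < 5:
        candidate = fake_account(rng)
        if candidate not in accounts:
            accounts.append(candidate)
    rows = []
    for index in range(count):
        kind = rng.choice(TRANSACTION_TYPES)
        debtor = rng.choice(accounts)
        creditor = None  # deposits and withdrawals have no counterparty
        if kind in ("Transfer", "Payment"):
            creditor = rng.choice([a for a in accounts if a != debtor])
        rows.append({
            "transaction_id": 10000 + index,
            "type": kind,
            "debtor": debtor,
            "creditor": creditor,
            "amount": round(rng.uniform(1.0, 5000.0), 2),
            "date": (start + timedelta(days=rng.randrange(7))).isoformat(),
            "currency": rng.choice(("USD", "EUR")),
            "status": rng.choice(("Success", "Pending")),
        })
    return rows

transactions = generate_transactions(50, seed=1)
print(transactions[0])
```

Seeding the generator means a failing test can be rerun against exactly the same data by reusing the seed.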

Data Transformation Steps

Before the data can be used for testing, several transformation steps are necessary. These steps ensure data quality, consistency, and security.

Firstly, data cleansing is crucial. This involves identifying and correcting inconsistencies, such as missing values, incorrect data types, and duplicates. For example, ensuring all currency values are formatted correctly and transaction dates are in a consistent format (YYYY-MM-DD).

Secondly, data masking is essential for anonymization. This involves replacing sensitive data elements, such as account numbers, with non-sensitive substitutes while preserving the data’s structural integrity and statistical properties. Techniques like tokenization or data shuffling can be used to achieve this.

Finally, data subsetting might be necessary to create smaller, manageable datasets for specific test scenarios. This helps to focus testing efforts on particular aspects of the system without overwhelming the testing environment.
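The masking and subsetting steps might be sketched as below. The masking uses a keyed hash (HMAC-SHA256) to derive a stable 16-digit substitute, which is one vault-less variant of tokenization and an assumption of this example rather than the only option. The key shown is a placeholder; a real key belongs in a secrets manager. Reducing the hash to 16 digits makes collisions possible in principle, so large datasets may call for a token vault instead.

```python
import hashlib
import hmac

def tokenize_account(account_number, secret_key):
    """Map an account number to a stable 16-digit token using a keyed hash."""
    digest = hmac.new(
        secret_key, account_number.encode("utf-8"), hashlib.sha256
    ).hexdigest()
    return str(int(digest, 16) % 10**16).zfill(16)

def mask_transactions(rows, secret_key):
    """Return copies of the rows with both account fields tokenized."""
    masked = []
    for row in rows:
        copy = dict(row)
        for field in ("debtor", "creditor"):
            if copy[field] is not None:
                copy[field] = tokenize_account(copy[field], secret_key)
        masked.append(copy)
    return masked

def subset(rows, **criteria):
    """Keep only rows whose fields equal all of the given criteria."""
    return [
        row for row in rows
        if all(row.get(key) == value for key, value in criteria.items())
    ]

sample = [
    {"id": 12345, "type": "Deposit", "debtor": "1111222233334444",
     "creditor": None, "amount": 1000.00, "currency": "USD"},
    {"id": 13579, "type": "Transfer", "debtor": "1111222233334444",
     "creditor": "5555666677778888", "amount": 250.00, "currency": "USD"},
    {"id": 24680, "type": "Payment", "debtor": "1111222233334444",
     "creditor": "9999000011112222", "amount": 100.00, "currency": "EUR"},
]

key = b"placeholder-key-for-illustration-only"
masked = mask_transactions(sample, key)
eur_only = subset(masked, currency="EUR")
print(masked[0]["debtor"], len(eur_only))
```

Because the same input always yields the same token, relationships between transactions on the same account survive masking, which keeps the data useful for testing transfers and balances.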

Security Considerations

Security is paramount when handling financial transaction data. The following measures are critical:

Data encryption both in transit and at rest is crucial to protect sensitive data from unauthorized access. Strong encryption algorithms and key management practices should be implemented.

Access control mechanisms must restrict access to the test data to authorized personnel only. Role-based access control (RBAC) can be implemented to ensure only those with the necessary permissions can access the data.

Regular security audits and penetration testing of the testing environment itself are necessary to identify and address any vulnerabilities. This ensures that the test data itself is not compromised.

Compliance with relevant regulations, such as PCI DSS for payment card data, is mandatory. Adherence to these regulations ensures the secure handling of sensitive financial information.

Final Conclusion

Mastering on-demand testing data isn’t just about having the right data; it’s about having the right *process*. By thoughtfully planning your data acquisition, transformation, and storage, you can significantly improve the efficiency and effectiveness of your testing cycles. Remember, the key is a well-defined strategy that balances speed, cost, and data quality. This allows for faster feedback loops, improved software quality, and ultimately, a more robust and reliable product.

So, embrace the power of on-demand testing data – your future self (and your users) will thank you!

User Queries

What if my data contains personally identifiable information (PII)?

Prioritize data anonymization techniques like data masking or pseudonymization to protect sensitive information while maintaining data utility for testing.

How do I choose between real-time data and pre-generated datasets?

Real-time data offers the most current and realistic representation, but can be more complex to manage. Pre-generated datasets are simpler to handle but might not reflect the latest system behavior.

What are some common data quality issues to watch out for?

Inconsistencies, missing values, duplicates, and outliers are common problems. Data validation and cleaning steps are crucial to address these.

How can I ensure my on-demand testing data remains secure?

Implement robust access controls, encryption, and regular security audits. Comply with relevant data privacy regulations (GDPR, CCPA, etc.).