Don’t Shut Off AI: Implement a Managed Allowance Instead (Netskope)

In today’s hyper-connected world, completely blocking access to powerful AI tools feels like slamming the brakes on innovation. But unrestricted access? That’s a security nightmare. So, what’s the sweet spot? The answer lies in a carefully crafted managed allowance system, using tools like Netskope to balance productivity and security.

This post dives into how to build that system, navigate the complexities, and ultimately, unlock the true potential of AI in your organization without sacrificing security.

We’ll explore the potential pitfalls of a complete shutdown – from lost productivity to increased security risks from employees using unauthorized AI tools. Then, we’ll walk you through the practical steps of implementing a managed allowance system with Netskope, covering everything from policy creation to ongoing monitoring. We’ll also examine the cost-benefit analysis, showing how a managed approach can actually save money in the long run by preventing security breaches and boosting employee efficiency.

Get ready to rethink your AI access strategy!

The Risks of Shutting Down Access to AI Tools

The complete shutdown of access to AI tools within a business environment, especially those integrated with security platforms like Netskope, presents a significant risk to overall operational efficiency and security. Blocking access isn’t simply a matter of inconvenience; it can lead to substantial productivity losses, operational disruptions, and even heightened security vulnerabilities. Understanding these risks is crucial before implementing such a drastic measure.

The potential negative impacts on productivity are substantial. AI tools streamline workflows, automate tasks, and provide valuable insights, significantly boosting employee output. Removing this support would force employees to revert to slower, less efficient manual processes, leading to decreased output and potentially missed deadlines. This impact is particularly pronounced in sectors heavily reliant on data analysis, automation, and rapid information processing.

Business Disruption from Cessation of AI-Powered Workflows

A sudden cessation of AI-powered workflows can cause significant business disruption. Imagine a customer service department relying on AI-powered chatbots for initial customer interaction. Suddenly shutting down access would lead to overwhelmed human agents, longer wait times, and frustrated customers, potentially damaging brand reputation and impacting sales. Similarly, in finance, AI-driven fraud detection systems prevent significant losses; their removal exposes the business to increased risk.

The ripple effect of such disruptions can be far-reaching and costly.

Security Risks Associated with Unauthorized AI Tool Use

Restricting access to approved AI tools doesn’t eliminate the use of AI; it merely drives it underground. Employees facing productivity bottlenecks may resort to using unauthorized, and potentially insecure, AI tools. These tools lack the security features and oversight of approved platforms, increasing the risk of data breaches, malware infections, and exposure to malicious actors. This shadow IT presents a significant security challenge, harder to manage and monitor than sanctioned tools.

Critical Scenarios Requiring Immediate Access to AI Tools

There are numerous scenarios where immediate access to AI tools is crucial for business operations. For instance, in a cybersecurity incident, AI-powered threat detection and response systems are critical for containing the damage and minimizing losses. Similarly, in healthcare, AI tools assist in diagnosis, treatment planning, and drug discovery; restricting access could have life-threatening consequences. In financial markets, AI-driven trading algorithms operate on extremely tight timeframes; any disruption can lead to significant financial losses.

These examples highlight the critical role AI plays in ensuring business continuity and even public safety in certain sectors.

Implementing a Managed Allowance System with Netskope

Implementing a managed allowance system for AI tool usage is crucial for balancing the benefits of AI with the need for security and responsible use within an organization. Netskope, with its comprehensive cloud security capabilities, provides a robust platform for achieving this. This approach allows employees access to AI tools while mitigating potential risks associated with uncontrolled usage.

This post outlines a step-by-step process for implementing such a system, comparing different access control methods, establishing a clear acceptable use policy, and detailing best practices for monitoring and auditing.

Step-by-Step Implementation of a Managed Allowance System

Implementing a managed allowance system using Netskope involves several key steps. First, you need to identify the specific AI tools your employees use. Then, you’ll configure Netskope’s policies to control access based on your chosen method (discussed in the next section). Regular monitoring and adjustments are crucial to ensure the system remains effective and adapts to evolving needs and threats.

Finally, employee training on the acceptable use policy is essential for successful implementation.

This phased approach allows for iterative refinement, minimizing disruption and maximizing effectiveness. Continuous monitoring and feedback mechanisms ensure the policy remains relevant and effective.
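As a rough illustration of the first step (identifying which AI tools are in use), here is a minimal Python sketch that tallies requests to known AI domains from a list of visited URLs. The catalogue, the `example-ai-notes.test` domain, and the function name are assumptions made up for this example; they are not part of Netskope’s product or API, and a real inventory would come from your security platform’s own discovery reports.

```python
from collections import Counter
from urllib.parse import urlparse

# Hypothetical catalogue: domain -> whether the tool is sanctioned.
# Illustrative assumptions only, not a vendor-provided list.
AI_TOOL_CATALOGUE = {
    "chat.openai.com": True,
    "api.openai.com": True,
    "example-ai-notes.test": False,  # invented unsanctioned tool
}


def inventory_ai_tools(urls):
    """Count requests per known AI domain, split by sanctioned status."""
    sanctioned, unsanctioned = Counter(), Counter()
    for url in urls:
        host = urlparse(url).hostname
        if host not in AI_TOOL_CATALOGUE:
            continue  # not a known AI tool; ignore for this inventory
        bucket = sanctioned if AI_TOOL_CATALOGUE[host] else unsanctioned
        bucket[host] += 1
    return dict(sanctioned), dict(unsanctioned)
```

Running this over a sample of web traffic gives two tallies: tools you already approve, and shadow tools that may need either a policy or an approval decision.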

Comparison of Access Control Methods

The following table compares different methods for controlling access to AI tools, considering their pros, cons, and implementation complexity within a Netskope environment.

| Method | Pros | Cons | Implementation Complexity |
|---|---|---|---|
| Time-Based Restrictions | Simple to implement; easy for users to understand. | May not be effective for all use cases; can be easily circumvented. | Low |
| Usage Limits (Data Volume, API Calls) | Controls resource consumption; prevents overuse. | Requires careful monitoring and adjustment; may disrupt workflows if limits are too restrictive. | Medium |
| Content Filtering (Keywords, Categories) | Prevents access to inappropriate or sensitive content. | Can lead to false positives; requires regular updates to stay effective against evolving threats. | Medium-High |
| User-Based Access Control (Role-Based Access) | Granular control; aligns access with job responsibilities. | Requires detailed user and role management; can be complex to set up. | High |
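
To make the four methods in the table concrete, the following Python sketch combines them into a single allow/deny check. Everything here (the `AllowancePolicy` record, its field names, the default hours, limits, and keywords) is a hypothetical model for illustration. It does not reflect how Netskope represents or evaluates policies.

```python
from dataclasses import dataclass
from datetime import time


@dataclass
class AllowancePolicy:
    """Hypothetical policy record; field names and defaults are illustrative."""
    allowed_roles: set                       # role-based access
    window_start: time = time(8, 0)          # time-based restriction
    window_end: time = time(18, 0)
    daily_request_limit: int = 100           # usage limit
    blocked_keywords: tuple = ("confidential", "ssn")  # content filter


def evaluate(policy, role, now, requests_today, prompt):
    """Return (allowed, reason), applying each control method in turn."""
    if role not in policy.allowed_roles:
        return False, "role not permitted"
    if not (policy.window_start <= now <= policy.window_end):
        return False, "outside permitted hours"
    if requests_today >= policy.daily_request_limit:
        return False, "daily usage limit reached"
    lowered = prompt.lower()
    for word in policy.blocked_keywords:
        if word in lowered:  # naive substring match; expect false positives
            return False, f"blocked keyword: {word}"
    return True, "ok"
```

The ordering is a design choice: the cheapest and most decisive checks (role, time window) run first, and the content filter runs last. The naive keyword match also shows why the table lists false positives as a drawback of content filtering.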

Acceptable Use Policy for AI Tools

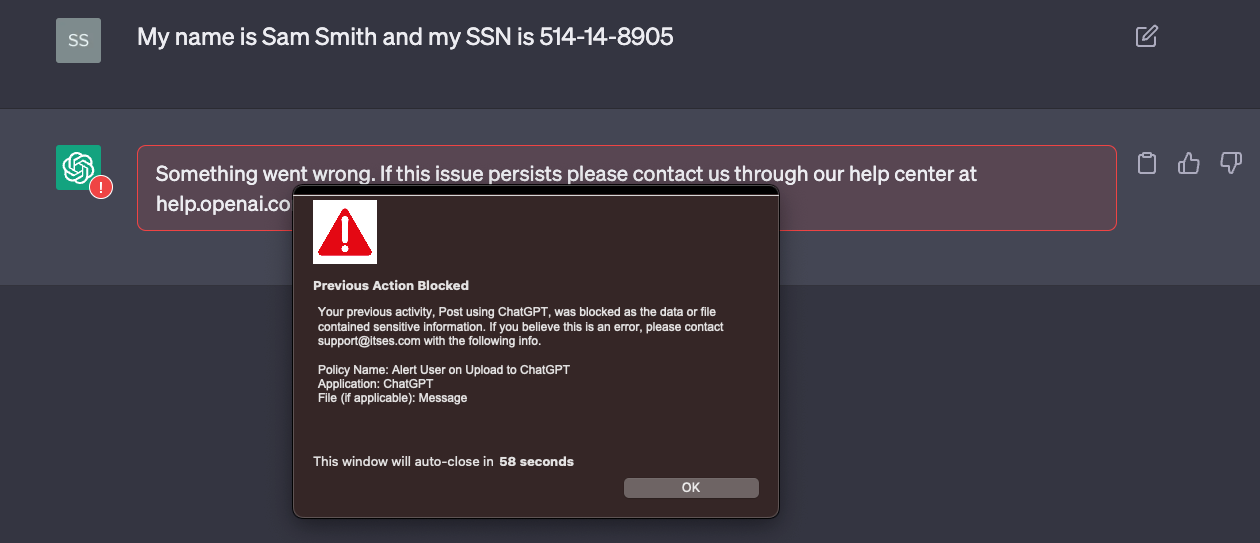

This policy outlines the acceptable use of AI tools within the organization. Employees are expected to use AI tools responsibly, ethically, and in compliance with all applicable laws and regulations. Unauthorized access, misuse, or distribution of confidential information is strictly prohibited. Specific limitations may include restrictions on the types of data processed, the use of AI for illegal activities, and the sharing of AI-generated content externally without authorization.

Violations may result in disciplinary action, up to and including termination of employment.

The policy should be reviewed and updated regularly to reflect changes in technology, regulations, and organizational needs. It should be easily accessible to all employees and include clear examples of acceptable and unacceptable use.

Monitoring and Auditing AI Tool Usage

Effective monitoring and auditing are crucial to ensure compliance with the managed allowance policy. Netskope provides tools for monitoring user activity, data usage, and potential security threats related to AI tool usage. Regular audits should be conducted to identify areas for improvement and to ensure the policy remains effective. This includes reviewing logs, analyzing usage patterns, and investigating any suspicious activity.

Automated alerts and reports can help streamline this process.

The organization should maintain detailed records of all monitoring and auditing activities, including any identified violations and the actions taken to address them. This documentation is vital for compliance and demonstrating responsible AI usage.
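
As a sketch of what reviewing logs and raising automated alerts could look like, the Python snippet below summarises a list of audit events and flags users with repeated blocked requests. The event shape (`user` and `action` keys) and the threshold are assumptions for this example; real log exports from your security platform will have their own schema.

```python
from collections import defaultdict


def summarise_audit(events, alert_threshold=3):
    """Summarise audit events and flag users with many blocked requests.

    `events` is an iterable of dicts with a 'user' key and an 'action' key
    whose value is 'allowed' or 'blocked' (a hypothetical log format).
    """
    total = defaultdict(int)
    blocked = defaultdict(int)
    for event in events:
        total[event["user"]] += 1
        if event["action"] == "blocked":
            blocked[event["user"]] += 1
    # Users at or above the threshold are listed for follow-up.
    alerts = sorted(u for u, n in blocked.items() if n >= alert_threshold)
    return {"requests": dict(total), "blocked": dict(blocked), "alerts": alerts}
```

A report like this can feed the documentation trail described above: who was flagged, when, and what action was taken.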

Balancing Security and Productivity with AI Access

The rise of AI tools presents a compelling opportunity to boost workplace productivity, but it also introduces significant security challenges. Finding the right balance between harnessing the power of AI and mitigating potential risks is crucial for any organization. This requires a strategic approach that goes beyond simply allowing or blocking access, opting instead for a carefully managed system that prioritizes both security and employee efficiency.

The security implications of unrestricted AI access are substantial. Employees might inadvertently expose sensitive data through insecure AI platforms, use unvetted AI tools that introduce malware or phishing risks, or fall victim to AI-powered social engineering attacks. Conversely, a completely blocked approach stifles innovation and productivity, hindering the ability of employees to leverage AI’s capabilities for tasks such as data analysis, content creation, and problem-solving. This can lead to decreased efficiency and a competitive disadvantage.

The Importance of Employee Education on Responsible AI Usage

Educating employees about responsible AI usage and security best practices is paramount. This isn’t a one-time training session but an ongoing process of awareness and reinforcement. Employees need to understand the potential risks associated with using AI tools, including data breaches, malware infections, and phishing attempts. They also need to learn how to identify and report suspicious activity.

Furthermore, training should cover best practices such as using only approved AI tools, verifying the authenticity of AI-generated content, and adhering to company data security policies.

Mitigating Security Risks with a Managed Allowance System

A managed allowance system, such as one implemented with Netskope, offers a practical solution for balancing security and productivity. This approach allows employees to access approved AI tools while simultaneously monitoring their usage and enforcing security policies. For instance, Netskope can prevent access to unapproved AI platforms, monitor data exfiltration attempts, and apply content filtering to prevent sensitive information from being shared with unauthorized parties.

The system can also enforce multi-factor authentication and other security measures to protect against unauthorized access. By carefully controlling access and monitoring usage, organizations can significantly reduce the risks associated with AI while still enabling employees to benefit from its capabilities.

Examples of Training Materials for Secure AI Tool Usage

A comprehensive training program should include a variety of materials to cater to different learning styles. Potential training resources include:

- Interactive online modules covering topics such as identifying phishing attempts involving AI, recognizing and avoiding malicious AI tools, and understanding data security policies related to AI usage.

- Short videos demonstrating best practices for using AI tools securely and responsibly, with real-world examples of security breaches and how to prevent them.

- Regularly updated FAQs and knowledge base articles addressing common questions and concerns about AI security.

- Simulated phishing exercises to help employees practice identifying and reporting suspicious AI-related communications.

- In-person workshops or webinars led by security experts, providing opportunities for interactive learning and Q&A sessions.

Integration of Netskope with AI Tools

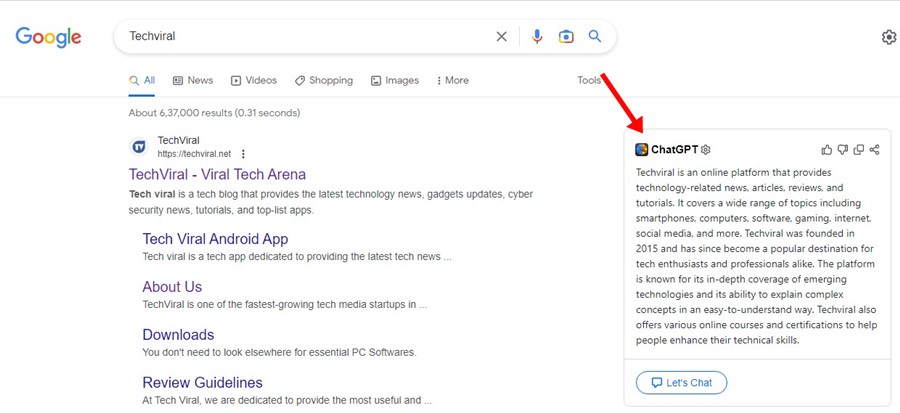

Integrating Netskope with AI tools to manage access effectively requires a multi-faceted approach. This involves understanding the technical capabilities of both Netskope’s security platform and the diverse architectures of various AI platforms, as well as anticipating potential integration hurdles. A well-planned integration ensures a secure yet productive environment for employees utilizing AI-powered applications.

Netskope’s integration with AI tools typically leverages its cloud-native security platform, which can inspect and control traffic to various AI services through its deployment options (e.g., cloud, on-premises, hybrid). This involves configuring Netskope to identify and classify traffic destined for specific AI platforms (such as OpenAI’s API, Google’s Vertex AI, or Azure OpenAI Service). Netskope then applies pre-defined policies based on the managed allowance system, controlling access based on factors like user identity, location, device posture, and the specific AI function being accessed.

Technical Aspects of Netskope’s Integration with AI Tools

Netskope utilizes several techniques for integration. API integration allows for direct communication with the AI platform to enforce policies and gather usage data. For platforms lacking robust APIs, Netskope can use its advanced inspection capabilities to analyze network traffic, identifying and controlling access based on patterns and signatures. This includes the ability to identify and block unauthorized AI tool usage attempts, as well as applying data loss prevention (DLP) policies to prevent sensitive data from being shared with these platforms.

Furthermore, Netskope’s CASB (Cloud Access Security Broker) capabilities enable granular control over access, allowing administrators to define specific permissions for each user or group.

Challenges in Integrating Netskope with Different AI Platforms and Proposed Solutions

Integrating Netskope with various AI platforms can present unique challenges. Different platforms have different security models and API functionalities. For instance, some platforms might have limited API access or utilize proprietary authentication methods. Solutions include employing a combination of API integration and network-based inspection to accommodate various AI platforms. Another challenge is the constant evolution of AI tools and their APIs.

To mitigate this, Netskope’s automated policy updates and machine learning capabilities are crucial to adapting to the evolving threat landscape and new AI platforms. Finally, the sheer volume of data generated by AI tools can impact performance; optimization techniques, such as traffic shaping and caching, can help manage this.

Data Flow Workflow Between Netskope and a Sample AI Tool

Imagine a user attempting to access OpenAI’s API through a web application. The user’s request first passes through Netskope’s security gateway. Netskope inspects the request, verifying the user’s identity and checking the request against pre-defined policies (e.g., allowed API calls, data usage limits). If the request complies with the policy, Netskope allows the traffic to proceed to OpenAI’s API.

The response from OpenAI’s API then travels back through Netskope, where it undergoes further inspection to ensure no sensitive data is being leaked. Netskope logs all activity, providing a comprehensive audit trail of AI tool usage. This entire process occurs transparently to the end-user, ensuring seamless access while maintaining security.
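
The flow described above can be modelled in a few lines of Python. This is a toy stand-in, not Netskope’s implementation: the `gateway` function, the `upstream` callable that plays the role of the AI service, and the single regular expression used as a "sensitive data" check are all assumptions chosen to keep the example self-contained.

```python
import re

# Illustrative pattern for text shaped like a US Social Security number.
# Real DLP rules are far richer; this is only a placeholder.
SENSITIVE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def gateway(user, prompt, allowed_users, upstream, audit_log):
    """Walk one request through identity check, inspection, forwarding, logging.

    Returns (outcome, response); response is None when the request is denied.
    """
    def record(outcome):
        audit_log.append({"user": user, "outcome": outcome})
        return outcome

    if user not in allowed_users:                 # verify identity
        return record("denied: unknown user"), None
    if SENSITIVE.search(prompt):                  # inspect the request
        return record("denied: sensitive data in request"), None
    response = upstream(prompt)                   # forward to the AI service
    if SENSITIVE.search(response):                # inspect the response
        return record("denied: sensitive data in response"), None
    return record("allowed"), response
```

Every path through the function writes to `audit_log`, which mirrors the point that all activity is logged whether the request is allowed or denied.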

Netskope’s Role in Monitoring and Enforcing Managed Allowance Policies

Netskope plays a central role in enforcing the managed allowance policy by acting as a central point of control for all AI tool access. It monitors all traffic to and from AI platforms, enforcing access controls based on pre-defined policies. It generates detailed reports and alerts on policy violations, providing administrators with insights into AI tool usage patterns. Real-time monitoring allows for immediate responses to suspicious activity, preventing unauthorized access and data breaches.

Furthermore, Netskope’s reporting capabilities enable administrators to track AI tool usage, helping to optimize resource allocation and improve productivity.

Cost-Benefit Analysis of a Managed Allowance System

Implementing a managed allowance system for AI tool access presents a compelling alternative to the extremes of complete blockage or unrestricted use. This approach allows organizations to harness the productivity benefits of AI while mitigating associated risks. A thorough cost-benefit analysis is crucial to justify the investment and demonstrate its value proposition.

The decision to implement a managed allowance system hinges on carefully weighing the potential costs against the anticipated benefits. This analysis should consider both tangible financial aspects and intangible benefits impacting overall organizational performance and employee satisfaction.

Instead of completely blocking ChatGPT, a managed allowance approach with Netskope is far more effective. This controlled access mirrors the development choices we face when building apps, such as choosing between low-code and pro-code approaches, as discussed in the article on Domino app dev and the low-code and pro-code future. Ultimately, responsible management, whether of AI tools or application development, leads to better outcomes.

The key is smart control, not outright prohibition.

Cost Savings from a Managed Allowance System

A managed allowance system offers significant cost savings by reducing the financial impact of security breaches and productivity losses associated with unrestricted AI access. For example, a company using generative AI for marketing might experience reduced costs in content creation, but without controls, the risk of data leaks through the AI tool increases. A managed allowance system minimizes this risk, directly impacting costs related to data breach response, legal fees, and reputational damage.

Similarly, improved employee productivity resulting from controlled AI access translates to cost savings in labor and project completion times. Consider a scenario where employees spend significant time on repetitive tasks; AI assistance through a managed system streamlines workflows, reducing overall labor costs.

Increased Costs Associated with a Managed Allowance System

Implementing and maintaining a managed allowance system does introduce additional costs. These include the initial investment in the Netskope platform (or similar solution), the cost of employee training on the new system, and the ongoing expense of system maintenance and updates. Furthermore, dedicated IT personnel might be needed to manage and monitor the system, adding to the personnel costs.

The complexity of integrating the system with various AI tools also contributes to the overall implementation cost. A realistic budget should account for all these factors. For instance, a small company might outsource the management, while a large corporation might dedicate an internal team. This decision itself has cost implications that must be considered.

Intangible Benefits of a Managed Allowance System

Beyond the quantifiable financial benefits, a managed allowance system yields significant intangible advantages.

- Increased Employee Morale: Providing employees with access to powerful tools while ensuring responsible use fosters a sense of trust and empowerment, boosting morale and job satisfaction.

- Improved Innovation: Controlled access to AI tools encourages experimentation and innovation by providing a safe and secure environment for exploring new applications.

- Enhanced Data Security and Compliance: The peace of mind associated with knowing that sensitive data is protected enhances trust within the organization and with external stakeholders, ensuring compliance with relevant regulations.

- Reduced Risk of Data Breaches: A managed system minimizes the risk of accidental or malicious data exposure, thereby protecting sensitive information and preventing costly legal repercussions.

A comprehensive cost-benefit analysis should meticulously weigh these tangible and intangible factors to determine the overall return on investment for implementing a managed allowance system for AI tools. The long-term benefits of improved security, enhanced productivity, and increased employee morale often outweigh the initial implementation and maintenance costs.
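
For the tangible side of that analysis, a simple way to frame the arithmetic is expected benefits minus costs, divided by costs. The Python sketch below does exactly that. The parameter names and the way breach risk is modelled (a single expected cost multiplied by a reduction in probability) are simplifying assumptions, and every input must come from your own estimates; no figures here are drawn from Netskope or from real incident data.

```python
def net_benefit(avoided_breach_cost, breach_probability_reduction,
                productivity_gain, platform_cost, training_cost, staffing_cost):
    """Return (annual net benefit, ROI) from caller-supplied estimates.

    All inputs are annual figures in the same currency, except
    breach_probability_reduction, which is a fraction between 0 and 1.
    """
    costs = platform_cost + training_cost + staffing_cost
    if costs <= 0:
        raise ValueError("total costs must be positive to compute ROI")
    # Expected savings from lower breach risk plus productivity gains.
    benefits = avoided_breach_cost * breach_probability_reduction + productivity_gain
    net = benefits - costs
    return net, net / costs
```

Intangible benefits such as morale and trust do not fit into this formula, which is why they should be weighed alongside the number rather than folded into it.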

Conclusion

Ultimately, the decision to block or allow AI access shouldn’t be a binary choice. A managed allowance system, strategically implemented with a tool like Netskope, provides a powerful alternative. By carefully defining acceptable use, monitoring activity, and educating employees, organizations can harness the transformative power of AI while mitigating the associated risks. It’s about finding the equilibrium between innovation and security – a balance that protects your business while empowering your workforce.

Ready to take control of your AI access? Let’s get started!

Frequently Asked Questions

What if my employees need access to AI tools outside of the approved list?

Establish a clear process for requesting access to new AI tools. This should involve a justification, security assessment, and approval from relevant stakeholders.

How do I measure the success of my managed allowance system?

Track key metrics such as employee productivity, the number of security incidents related to AI tool usage, and overall compliance with the established policy. Regular review and adjustment are crucial.

What training resources are available to help employees understand the policy?

Many vendors offer training materials, and you can also create internal guides, videos, or workshops tailored to your specific policy and AI tools.