Enhancing Test Results via Unified Reports

Enhancing test results via unified reports is a game-changer! Imagine having all your testing data – performance, security, usability – neatly compiled into one, easily digestible report. No more juggling spreadsheets, deciphering conflicting data sources, or struggling to present a cohesive picture to stakeholders. This post dives into the world of unified reporting, exploring how it simplifies complex testing processes and unlocks actionable insights that significantly boost the quality and efficiency of your projects.

We’ll cover everything from data integration and visualization to maximizing actionable insights and future trends.

Unified reporting isn’t just about consolidating data; it’s about transforming how you approach testing. By centralizing information from disparate sources, you gain a holistic view of your application’s health. This allows for faster identification of critical issues, more efficient resource allocation, and ultimately, a higher-quality end product. We’ll explore practical strategies for building and implementing a unified reporting system, addressing the challenges and showcasing the tangible benefits it offers.

Defining Unified Reporting for Enhanced Test Results

Unified reporting for test results represents a significant advancement in software quality assurance. It moves beyond isolated reports from individual testing phases to create a holistic view of the software’s overall health and readiness for deployment. This comprehensive approach allows for faster identification of issues, improved collaboration among teams, and, ultimately, higher-quality software releases.

The core benefit of unified reporting lies in its ability to consolidate information from diverse sources into a single, easily digestible format. This eliminates the need to sift through multiple reports, spreadsheets, and dashboards, saving valuable time and reducing the risk of overlooking critical information. Instead, stakeholders gain a clear, concise summary of the testing process, including successes, failures, and areas needing further attention.

Components of a Unified Test Reporting System

A robust unified test reporting system typically incorporates several key components. These include a centralized data repository to store test results from various sources, a reporting engine capable of generating customized reports, and visualization tools to present the data in a clear and understandable manner. Integration with existing project management and defect tracking systems is also crucial for efficient workflow and issue resolution.

Finally, robust security measures are essential to protect sensitive test data.

Benefits of Consolidating Test Results

Consolidating test results from disparate sources offers numerous advantages. Firstly, it significantly improves efficiency by providing a single source of truth for all testing activities. Secondly, it enhances communication and collaboration among testing teams and other stakeholders, as everyone is working from the same information. Thirdly, it facilitates better decision-making by providing a complete picture of the software’s quality, enabling more informed risk assessments and prioritization of fixes.

Finally, it supports continuous improvement by providing valuable data for identifying trends, bottlenecks, and areas for process optimization.

Examples of Unified Test Results

The power of unified reporting is evident across various testing types. Below is a table illustrating how diverse test results can be brought together for a comprehensive overview.

| Test Type | Data Source | Key Metrics | Challenges in Unification |
|---|---|---|---|
| Performance Testing | LoadRunner, JMeter, custom scripts | Response times, throughput, error rates, resource utilization | Different reporting formats, varying levels of detail, integrating custom metrics |
| Security Testing | OWASP ZAP, Burp Suite, Nessus | Vulnerability counts, severity levels, remediation efforts | Standardizing vulnerability reporting, correlating findings across tools, integrating with vulnerability databases |
| Usability Testing | User feedback surveys, session recordings, heatmaps | Task completion rates, error rates, user satisfaction scores, navigation patterns | Qualitative vs. quantitative data integration, handling unstructured feedback, aligning diverse data sources |
| Unit Testing | JUnit, pytest, NUnit | Code coverage, pass/fail rates, test execution time | Aggregating results from multiple modules and frameworks, mapping test results to specific code changes |

Data Integration and Standardization for Unified Reports

Creating a truly unified test report requires more than just pulling data together; it demands a meticulous approach to data integration and standardization. This ensures consistent, reliable, and actionable insights, regardless of the source or format of the original test data. The process is crucial for achieving a single source of truth for your testing efforts, enabling better decision-making and improved efficiency.

Integrating data from diverse testing tools and platforms involves overcoming significant hurdles. Different tools often employ unique data structures, formats, and naming conventions. For example, one tool might report test results as JSON, another as XML, and yet another as a CSV file. Successfully integrating these disparate sources requires a well-defined strategy.

Methods for Integrating Data from Various Testing Tools and Platforms

A robust data integration strategy typically involves several key components. Firstly, a central repository is needed to collect and store the integrated data. This could be a database (like PostgreSQL or MySQL), a data warehouse, or a cloud-based data lake. Secondly, you’ll need to employ appropriate connectors or APIs to extract data from each individual testing tool. These connectors act as bridges, allowing the data to flow from the source to the central repository.

Thirdly, an ETL (Extract, Transform, Load) process is necessary to handle the complexities of data transformation and loading into the central repository. This might involve using scripting languages like Python with libraries such as Pandas, or specialized ETL tools. For example, a Python script could use the `requests` library to fetch JSON data from an API endpoint, then use `pandas` to clean and transform the data before writing it to a SQL database.
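The extract, transform, and load steps can be sketched in a few lines. This is a minimal, hypothetical example: so that it runs offline, it uses only the standard library (`json` and an in-memory `sqlite3` database) in place of `requests` and `pandas`, and the payload shape (`results`, `id`, `status`, `duration_ms`) and the `test_results` table are invented for illustration.

```python
import json
import sqlite3

# Hypothetical JSON payload standing in for what an HTTP call to a tool's API might return.
RAW_PAYLOAD = """
{"results": [
  {"id": "TC-1", "status": "PASSED", "duration_ms": "120"},
  {"id": "TC-2", "status": "failed", "duration_ms": "340"},
  {"id": "TC-3", "status": "Passed", "duration_ms": null}
]}
"""


def extract(raw: str) -> list[dict]:
    """Extract: parse the tool's JSON output into Python dicts."""
    return json.loads(raw)["results"]


def transform(records: list[dict]) -> list[tuple]:
    """Transform: normalize status casing and cast durations to int (None if missing)."""
    rows = []
    for rec in records:
        duration = rec.get("duration_ms")
        rows.append((
            rec["id"],
            rec["status"].strip().lower(),
            int(duration) if duration is not None else None,
        ))
    return rows


def load(conn: sqlite3.Connection, rows: list[tuple]) -> None:
    """Load: write the cleaned rows into the central repository table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS test_results ("
        "test_case_id TEXT PRIMARY KEY, status TEXT, duration_ms INTEGER)"
    )
    conn.executemany("INSERT OR REPLACE INTO test_results VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(conn, transform(extract(RAW_PAYLOAD)))
print(conn.execute(
    "SELECT status, COUNT(*) FROM test_results GROUP BY status ORDER BY status"
).fetchall())
```

Swapping the JSON string for a real API call, or `sqlite3` for a PostgreSQL or MySQL driver, would leave the extract/transform/load separation unchanged.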

Strategies for Standardizing Diverse Data Formats to Ensure Compatibility Within a Unified Report

Standardization is paramount. Inconsistencies in data formats can lead to errors and difficulties in interpretation. One effective strategy is to define a common data model that all integrated data will conform to. This model should include clearly defined fields, data types, and relationships between different data points. For instance, instead of having different tools use varying field names for “test case ID”, a common field named “testCaseId” should be enforced.
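One way to express a common data model in code is a small dataclass plus a per-tool field map. Only the `testCaseId` field name comes from the text; the tool names (`tool_a`, `tool_b`), their source field names, and the other model fields are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UnifiedResult:
    """Common data model that every integrated record must conform to."""
    testCaseId: str
    status: str        # e.g. "passed", "failed", "skipped"
    durationMs: float


# Hypothetical per-tool mappings from source field names to common-model field names.
FIELD_MAPS = {
    "tool_a": {"case_id": "testCaseId", "result": "status", "elapsed_ms": "durationMs"},
    "tool_b": {"TestID": "testCaseId", "Outcome": "status", "TimeMs": "durationMs"},
}


def to_common_model(tool: str, record: dict) -> UnifiedResult:
    """Rename source fields to the common model and coerce them to its types."""
    mapping = FIELD_MAPS[tool]
    renamed = {target: record[source] for source, target in mapping.items()}
    return UnifiedResult(
        testCaseId=str(renamed["testCaseId"]),   # IDs may arrive as int or str
        status=str(renamed["status"]).lower(),
        durationMs=float(renamed["durationMs"]),
    )


a = to_common_model("tool_a", {"case_id": "TC-7", "result": "PASSED", "elapsed_ms": 12})
b = to_common_model("tool_b", {"TestID": 7, "Outcome": "Failed", "TimeMs": "30.5"})
```

Keeping the mappings as data rather than code means onboarding a new tool is mostly a matter of adding one dictionary entry.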

Schema mapping and data transformation are crucial here, ensuring all data adheres to the common model. This often involves using data transformation tools or writing custom scripts to map data from source formats to the target standardized format. Regular expression matching might be used to extract specific data from unstructured text reports.
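For unstructured text reports, a regular expression can pull out the fields the common model needs. The line format below is invented for illustration; a real tool's output would need its own pattern.

```python
import re

# Hypothetical line format: "[FAIL] TC-1042 checkout_total (1.85s): expected 10, got 12"
LINE_PATTERN = re.compile(
    r"^\[(?P<status>PASS|FAIL|SKIP)\]\s+"
    r"(?P<test_case_id>TC-\d+)\s+"
    r"(?P<name>\S+)\s+"
    r"\((?P<seconds>\d+(?:\.\d+)?)s\)"
)
STATUS_MAP = {"PASS": "passed", "FAIL": "failed", "SKIP": "skipped"}


def parse_text_report(text: str) -> list[dict]:
    """Map each matching line to common-model fields; non-matching lines are skipped."""
    results = []
    for line in text.splitlines():
        match = LINE_PATTERN.match(line.strip())
        if match:
            results.append({
                "testCaseId": match["test_case_id"],
                "status": STATUS_MAP[match["status"]],
                "durationMs": round(float(match["seconds"]) * 1000, 3),
            })
    return results


report = """
Run started 2024-01-01
[PASS] TC-1041 login_ok (0.42s)
[FAIL] TC-1042 checkout_total (1.85s): expected 10, got 12
"""
parsed = parse_text_report(report)
```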

Challenges of Data Cleansing and Transformation in the Context of Unified Reporting and Potential Solutions

Data cleansing and transformation are significant challenges. Raw data from various sources is often incomplete, inconsistent, or contains errors. Missing values, duplicate entries, and incorrect data types are common issues. For example, a test case ID might be recorded as a string in one tool and as an integer in another, leading to integration problems. To address this, data cleansing techniques like outlier detection, imputation of missing values (using mean, median, or mode), and data validation are essential.
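A minimal cleansing pass for the issues just listed might normalize mixed-type IDs, drop duplicates, and impute missing durations. The record shape and the choice of the median (rather than the mean or mode) are assumptions for this sketch, and outlier detection is omitted to keep it short.

```python
from statistics import median


def cleanse(records: list[dict]) -> list[dict]:
    """Normalize IDs, drop duplicate test cases, and median-impute missing durations."""
    # Type normalization: 42 (int) and "42 " (str) refer to the same test case.
    seen = set()
    deduped = []
    for rec in records:
        case_id = str(rec["testCaseId"]).strip()
        if case_id in seen:
            continue  # keep only the first occurrence of each ID
        seen.add(case_id)
        deduped.append({**rec, "testCaseId": case_id})

    # Imputation: fill missing durations with the median of the known values.
    known = [r["durationMs"] for r in deduped if r.get("durationMs") is not None]
    fill = median(known) if known else None
    for rec in deduped:
        if rec.get("durationMs") is None:
            rec["durationMs"] = fill
    return deduped


raw = [
    {"testCaseId": 42, "durationMs": 100},
    {"testCaseId": "42 ", "durationMs": 105},  # same test reported by a second tool
    {"testCaseId": "43", "durationMs": None},
    {"testCaseId": "44", "durationMs": 300},
]
clean = cleanse(raw)
```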

Data transformation involves converting data into the standardized format defined by the common data model. This might include data type conversions, unit conversions, and data normalization. Robust error handling and logging are crucial to ensure data quality and identify potential problems during the ETL process. Implementing automated data quality checks and validation rules can help catch and correct errors early on.
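Transformation rules and automated quality checks can live side by side. In this sketch, durations reported in different units are converted to milliseconds, and records that fail simple validation rules are logged and set aside rather than loaded. The unit names, allowed statuses, and rules are hypothetical.

```python
import logging

logging.basicConfig(level=logging.WARNING)
log = logging.getLogger("etl.validation")

# Conversion factors to milliseconds for the units this sketch assumes tools emit.
TO_MS = {"ms": 1.0, "s": 1000.0, "min": 60_000.0}
VALID_STATUSES = {"passed", "failed", "skipped"}


def transform_and_validate(records: list[dict]) -> tuple[list[dict], list[dict]]:
    """Return (valid, rejected); every rejection is logged with its reasons."""
    valid, rejected = [], []
    for rec in records:
        unit = rec.get("unit", "ms")
        errors = []
        if unit not in TO_MS:
            errors.append(f"unknown unit {unit!r}")
        if rec.get("status") not in VALID_STATUSES:
            errors.append(f"invalid status {rec.get('status')!r}")
        if not isinstance(rec.get("duration"), (int, float)) or rec["duration"] < 0:
            errors.append("duration must be a non-negative number")
        if errors:
            log.warning("rejected %s: %s", rec.get("testCaseId"), "; ".join(errors))
            rejected.append(rec)
            continue
        valid.append({
            "testCaseId": rec["testCaseId"],
            "status": rec["status"],
            "durationMs": rec["duration"] * TO_MS[unit],  # unit conversion
        })
    return valid, rejected


valid, rejected = transform_and_validate([
    {"testCaseId": "TC-1", "status": "passed", "duration": 2, "unit": "s"},
    {"testCaseId": "TC-2", "status": "failed", "duration": 250},
    {"testCaseId": "TC-3", "status": "unknown", "duration": -1, "unit": "hours"},
])
```

Rejecting records into a separate list, instead of silently dropping them, keeps the data-quality problems visible for follow-up.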

Employing version control for data transformation scripts ensures reproducibility and maintainability.

Visualizing Test Results for Improved Understanding

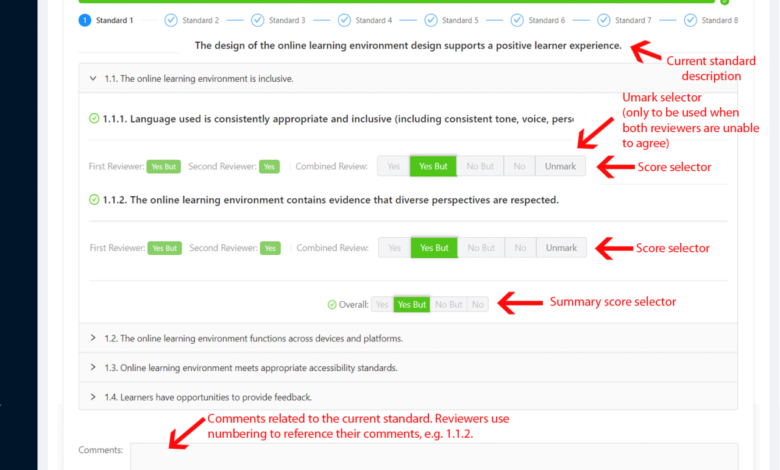

Data visualization is crucial for transforming raw test results into actionable insights. A unified report, by consolidating data from various sources, provides the perfect foundation for creating clear and impactful visualizations. Effective visuals help both technical and non-technical stakeholders quickly grasp the performance of the system under test, identifying areas needing improvement and celebrating successes.

Effective communication of complex test results relies heavily on the choice of visualization techniques. Different stakeholders have different needs and levels of technical understanding. A well-designed report caters to this diversity, providing summaries for executive-level reviews and detailed breakdowns for technical teams.

Visual Representation of Key Performance Indicators (KPIs)

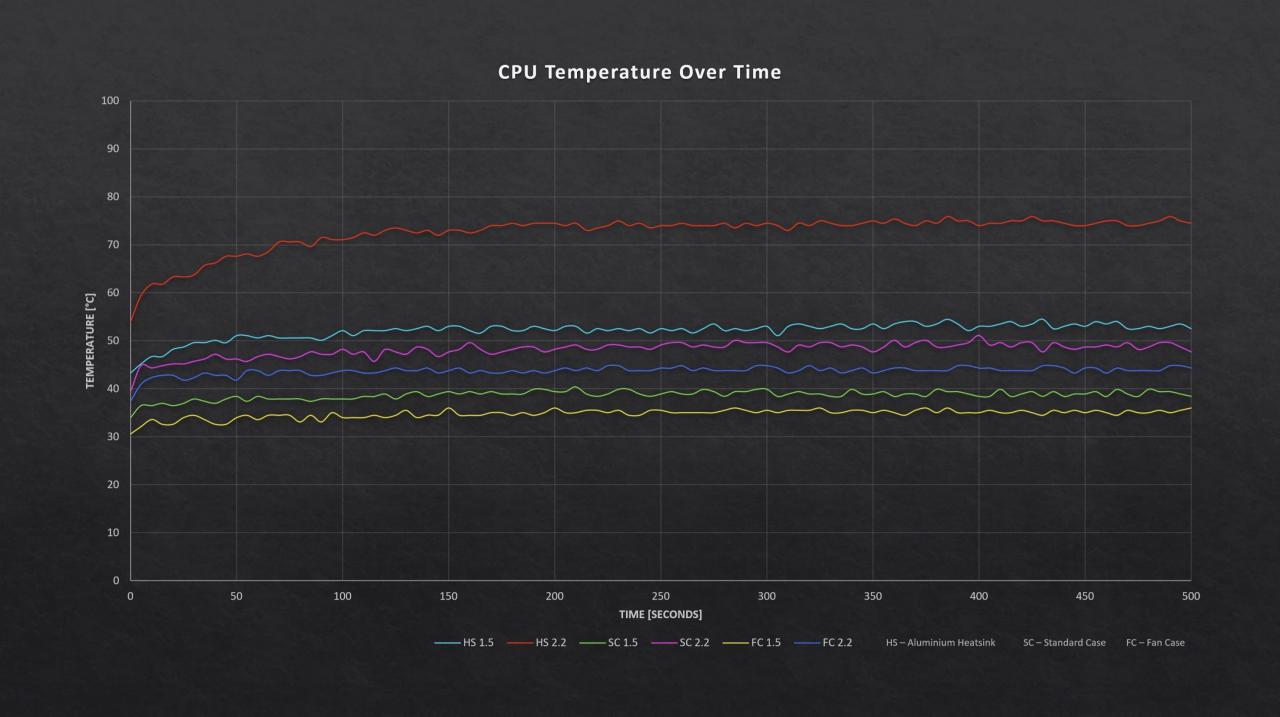

A unified report should present key performance indicators (KPIs) using a combination of charts and graphs. For example, a bar chart could effectively illustrate the pass/fail rates across different test suites. A pie chart could visually represent the breakdown of defects by severity level (critical, major, minor). Line graphs are ideal for tracking performance metrics over time, showing trends and identifying areas of improvement or regression.

Each visual element should have a clear, concise title and descriptive labels for axes and data points. Consider using color-coding to highlight key trends or areas of concern. For instance, a red bar could highlight a test suite with a high failure rate, drawing immediate attention to a problem area.
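Before anything is drawn, the report needs the aggregates behind these charts. The sketch below computes per-suite pass rates for a bar chart, a defect breakdown by severity for a pie chart, and a bar color that turns red above a failure-rate threshold. The 20% threshold and the record fields are assumptions; the output is plain data that any charting library could consume.

```python
from collections import Counter, defaultdict

FAILURE_RATE_ALERT = 0.20  # hypothetical threshold for drawing a bar in red


def suite_pass_rates(results: list[dict]) -> dict[str, float]:
    """Pass rate per test suite: passed / executed."""
    totals, passed = defaultdict(int), defaultdict(int)
    for r in results:
        totals[r["suite"]] += 1
        passed[r["suite"]] += r["status"] == "passed"
    return {suite: passed[suite] / totals[suite] for suite in totals}


def bar_colors(pass_rates: dict[str, float]) -> dict[str, str]:
    """Red for suites whose failure rate exceeds the alert threshold, green otherwise."""
    return {s: "red" if 1 - rate > FAILURE_RATE_ALERT else "green" for s, rate in pass_rates.items()}


def severity_breakdown(defects: list[dict]) -> dict[str, float]:
    """Share of defects per severity level, as used by a pie chart."""
    counts = Counter(d["severity"] for d in defects)
    total = sum(counts.values())
    return {sev: n / total for sev, n in counts.items()}


results = [
    {"suite": "checkout", "status": "passed"},
    {"suite": "checkout", "status": "failed"},
    {"suite": "login", "status": "passed"},
    {"suite": "login", "status": "passed"},
]
rates = suite_pass_rates(results)
colors = bar_colors(rates)
shares = severity_breakdown(
    [{"severity": "critical"}, {"severity": "minor"}, {"severity": "minor"}, {"severity": "major"}]
)
```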

Communicating Complex Test Results to Different Stakeholders

Tailoring the presentation of test results to different audiences is essential for effective communication. Executive summaries should focus on high-level KPIs, such as overall pass/fail rates and key performance bottlenecks, using simple charts and concise language. Technical teams, however, require detailed breakdowns of individual test cases, including logs, error messages, and performance data. This might involve using more complex charts, tables, and detailed reports.

Interactive dashboards, allowing stakeholders to drill down into specific areas of interest, can be highly effective in catering to diverse needs. Consider using a consistent color scheme and visual style across all reports to maintain a professional and easily understandable presentation.

Sample Report Layout Showcasing Different Visualization Techniques

The following table compares different visualization types, highlighting their strengths and weaknesses and suggesting suitable scenarios within a unified test report.

| Visualization Type | Strengths | Weaknesses | Suitable Scenario |
|---|---|---|---|
| Bar Chart | Easy to compare different categories; clearly shows relative magnitudes. | Can become cluttered with many categories; not ideal for showing trends over time. | Comparing pass/fail rates across different test suites. |
| Pie Chart | Excellent for showing proportions of a whole; visually appealing. | Difficult to compare small segments; not suitable for many categories. | Illustrating the breakdown of defects by severity level. |
| Line Graph | Clearly shows trends and patterns over time; ideal for monitoring performance metrics. | Can be difficult to interpret with many data series; requires sufficient data points. | Tracking response times over multiple test runs. |
| Scatter Plot | Shows the relationship between two variables; identifies correlations. | Can be difficult to interpret with large datasets; requires careful axis scaling. | Analyzing the relationship between code complexity and defect density. |

Enhancing Actionability and Insights from Unified Reports

Unified reporting isn’t just about consolidating data; it’s about transforming raw information into actionable insights that drive better decision-making. A well-designed unified report should immediately highlight critical areas needing attention and offer clear guidance on how to address them. This empowers teams to react quickly, allocate resources where they matter most, and improve overall testing efficacy.

The goal is to move beyond simply presenting test results and instead deliver a clear, concise, and actionable plan for improvement. This requires careful consideration of which metrics to emphasize and how to integrate practical, relevant recommendations directly within the report itself.

Key Metrics for Quick Decision-Making

The selection of key metrics is crucial for a report’s effectiveness. Prioritizing metrics that directly impact project goals allows for rapid assessment of progress and identification of bottlenecks. For example, a software development project might focus on metrics like overall pass rate, defect density, and test coverage. A marketing campaign might prioritize metrics such as conversion rates, click-through rates, and customer acquisition cost.

By strategically choosing metrics relevant to the specific context, the report becomes a powerful tool for rapid assessment and informed decision-making. Displaying these key metrics prominently, perhaps using visual cues like color-coding or highlighting, ensures they immediately grab the reader’s attention.
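For the software-project metrics named above, the calculations are simple ratios. This sketch assumes defect density is measured per thousand lines of code (KLOC) and that coverage means line coverage; the targets used to pick a highlight color are hypothetical.

```python
def headline_metrics(passed: int, executed: int, defects: int, lines_of_code: int,
                     covered_lines: int, coverable_lines: int) -> dict[str, float]:
    """Compute the headline KPIs shown at the top of a unified report."""
    return {
        "pass_rate": passed / executed,
        # Defect density expressed as defects per thousand lines of code (KLOC).
        "defect_density_per_kloc": defects / (lines_of_code / 1000),
        "line_coverage": covered_lines / coverable_lines,
    }


# Hypothetical targets: "min" means higher is better, "max" means lower is better.
TARGETS = {
    "pass_rate": (0.95, "min"),
    "defect_density_per_kloc": (1.0, "max"),
    "line_coverage": (0.80, "min"),
}


def highlight(metrics: dict[str, float]) -> dict[str, str]:
    """'green' if a metric meets its target, 'red' otherwise."""
    status = {}
    for name, value in metrics.items():
        target, kind = TARGETS[name]
        ok = value >= target if kind == "min" else value <= target
        status[name] = "green" if ok else "red"
    return status


m = headline_metrics(passed=470, executed=500, defects=30, lines_of_code=20_000,
                     covered_lines=1_700, coverable_lines=2_000)
```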

Incorporating Actionable Insights

Simply presenting data isn’t enough; the report needs to offer concrete guidance on how to address identified issues. This can be achieved by including suggested remediation steps directly within the report. For example, if a specific test case consistently fails, the report could suggest reviewing the test case design, investigating potential environmental issues, or even linking to relevant documentation.

Prioritizing these issues based on severity and impact is equally crucial. A high-severity bug affecting core functionality should be highlighted more prominently than a minor UI issue.
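To attach guidance automatically, a report generator might flag tests that failed in each of their most recent runs and pair them with the remediation steps suggested above. The run-history shape, the three-run window, and the suggestion wording are assumptions for this sketch.

```python
SUGGESTIONS = [
    "Review the test case design for stale assertions or test data.",
    "Investigate the test environment (configuration, dependencies, services).",
    "Link to the relevant documentation in the defect ticket.",
]


def consistently_failing(history: dict[str, list[str]], window: int = 3) -> dict[str, list[str]]:
    """Map each test whose last `window` runs all failed to a list of remediation steps."""
    flagged = {}
    for test_id, statuses in history.items():
        recent = statuses[-window:]
        if len(recent) == window and all(s == "failed" for s in recent):
            flagged[test_id] = list(SUGGESTIONS)
    return flagged


history = {
    "TC-1": ["passed", "failed", "failed", "failed"],
    "TC-2": ["failed", "passed", "failed"],
    "TC-3": ["failed", "failed"],  # too few runs to call it consistent
}
actions = consistently_failing(history)
```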

Prioritizing Issues within the Report

Effective prioritization is key to focusing resources on the most critical issues. Several methods can be employed to achieve this within the unified report:

- Severity and Priority Matrix: A visual matrix combining severity (impact) and priority (urgency) allows for quick identification of critical issues. Issues are categorized based on a combination of their impact on the system and the urgency of their resolution.

- Risk-Based Prioritization: Issues are ranked based on their potential risk to the project. This considers the likelihood of occurrence and the potential impact of the issue if it remains unresolved. For example, a security vulnerability might rank higher than a minor usability issue.

- Cost-Benefit Analysis: Issues are prioritized based on the cost of remediation versus the benefit of resolving them. This approach is particularly useful when resources are limited and decisions need to be made about which issues to address first.

- Weighted Scoring System: A numerical scoring system assigns weights to different criteria (severity, priority, risk, etc.). Issues are then ranked based on their total score. This method allows for a more nuanced and objective prioritization.

By implementing these prioritization methods, the report guides users towards addressing the most impactful issues first, optimizing their efforts and ensuring efficient resource allocation. This structured approach ensures the report is not merely a collection of data, but a dynamic tool for driving improvements.
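The risk-based and weighted-scoring methods can be combined in a few lines. In this sketch, risk is likelihood multiplied by impact, and the total score is a weighted sum of severity, priority, and risk, each on a 1 to 5 scale. The weights, scales, and example issues are hypothetical and would need tuning for a real team.

```python
from dataclasses import dataclass

# Hypothetical weights; they sum to 1 so scores stay on the 1-5 input scale.
WEIGHTS = {"severity": 0.4, "priority": 0.3, "risk": 0.3}


@dataclass
class Issue:
    key: str
    severity: int    # 1 (cosmetic) .. 5 (critical)
    priority: int    # 1 (can wait) .. 5 (fix now)
    likelihood: int  # 1 (rare) .. 5 (almost certain)
    impact: int      # 1 (negligible) .. 5 (severe)

    @property
    def risk(self) -> float:
        """Risk-based view: likelihood x impact, rescaled from 1-25 to 0.2-5."""
        return self.likelihood * self.impact / 5

    @property
    def score(self) -> float:
        """Weighted score combining severity, priority, and risk."""
        return (WEIGHTS["severity"] * self.severity
                + WEIGHTS["priority"] * self.priority
                + WEIGHTS["risk"] * self.risk)


issues = [
    Issue("UI-12 misaligned button", severity=1, priority=2, likelihood=5, impact=1),
    Issue("SEC-3 SQL injection in search", severity=5, priority=5, likelihood=3, impact=5),
    Issue("PERF-7 slow report export", severity=3, priority=3, likelihood=4, impact=3),
]
ranked = sorted(issues, key=lambda i: i.score, reverse=True)
```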

Improving Test Efficiency Through Unified Reporting

The shift from disparate, siloed reporting systems to a unified approach significantly boosts testing efficiency. Imagine juggling multiple spreadsheets, email chains, and individual test management tools: a chaotic scene that wastes valuable time and resources. Unified reporting streamlines this process, offering a single, comprehensive view of all testing activities. This consolidated perspective leads to faster identification of issues, quicker resolution times, and, ultimately, a more efficient testing lifecycle.

Unified reports drastically reduce the time spent on manual data aggregation and analysis. In traditional testing environments, testers often spend hours compiling data from various sources, manually calculating metrics, and creating presentations. This manual process is prone to errors and significantly slows down the feedback loop. A unified system automates this data gathering and analysis, providing real-time insights into test progress and results. This allows teams to focus on strategic decision-making rather than tedious data manipulation.

Time Savings from Automated Data Aggregation

Automated reporting features are the key to unlocking significant time savings. For example, a unified system can automatically generate daily or weekly reports summarizing test execution, defect counts, and test coverage. These reports can be customized to highlight critical information, such as failing tests or areas with low test coverage, allowing for immediate action. Furthermore, automated dashboards provide at-a-glance views of key performance indicators (KPIs), eliminating the need for manual report generation and interpretation.
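Many test frameworks, including JUnit and pytest (via its `--junitxml` option), can emit JUnit-style XML, which makes it a convenient input for automated aggregation. The sketch below parses such reports with the standard library and builds a summary of total, failed, and skipped tests, a pass rate over tests that actually ran, and the list of failing tests. It handles only the common `<testsuite>`/`<testcase>` layout, and the sample reports are made up.

```python
import xml.etree.ElementTree as ET


def summarize_junit(xml_reports: list[str]) -> dict:
    """Aggregate JUnit-style XML reports into one summary for a scheduled report."""
    summary = {"total": 0, "failed": 0, "skipped": 0, "failing_tests": []}
    for xml_text in xml_reports:
        root = ET.fromstring(xml_text)
        # iter() finds <testcase> whether the root is <testsuite> or <testsuites>.
        for case in root.iter("testcase"):
            summary["total"] += 1
            if case.find("skipped") is not None:
                summary["skipped"] += 1
            elif case.find("failure") is not None or case.find("error") is not None:
                summary["failed"] += 1
                summary["failing_tests"].append(f'{case.get("classname")}.{case.get("name")}')
    ran = summary["total"] - summary["skipped"]
    passed = ran - summary["failed"]
    summary["pass_rate"] = passed / ran if ran else 0.0
    return summary


REPORT_A = """<testsuite name="api" tests="2">
  <testcase classname="api.Orders" name="test_create" time="0.12"/>
  <testcase classname="api.Orders" name="test_cancel" time="0.30">
    <failure message="expected 200, got 500"/>
  </testcase>
</testsuite>"""

REPORT_B = """<testsuites><testsuite name="ui" tests="2">
  <testcase classname="ui.Login" name="test_login" time="1.1"/>
  <testcase classname="ui.Login" name="test_sso" time="0.0"><skipped/></testcase>
</testsuite></testsuites>"""

daily = summarize_junit([REPORT_A, REPORT_B])
```

A scheduler (cron, a CI job, or similar) could run this over each day's report files and publish the resulting dictionary to a dashboard.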

Consider a scenario where a team previously spent two days a week compiling test results from multiple tools. With automated reporting, this time could be reduced to a few hours, freeing up valuable time for more strategic testing activities. The resulting increase in efficiency translates directly to cost savings and faster time-to-market.

Security and Access Control in Unified Reporting Systems

Protecting sensitive test data within a unified reporting system is paramount. A breach could expose confidential information, impacting both the organization and its clients. Robust security measures are crucial to ensure data integrity and compliance with relevant regulations.

This requires a layered approach: securing the infrastructure, encrypting data at rest and in transit, controlling user access to sensitive information, and auditing the system regularly. These measures help minimize the risk of unauthorized access, data breaches, and potential legal repercussions.

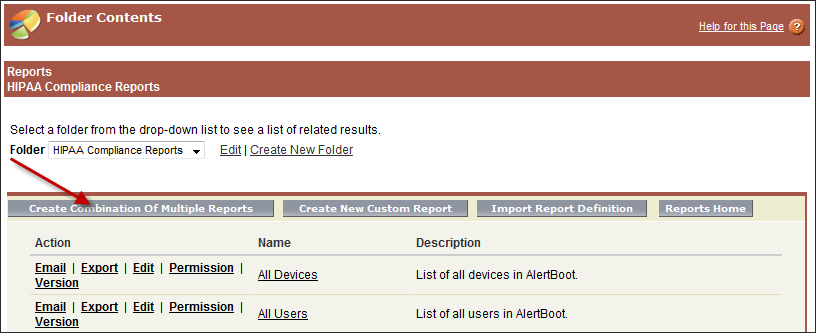

Role-Based Access Control Implementation

Role-based access control (RBAC) is a fundamental security mechanism for unified reporting systems. It allows administrators to define specific roles with varying levels of access to different report sections and data points. For example, a “viewer” role might only have access to summary reports, while a “manager” role could access detailed reports and underlying test data. An “administrator” role would possess complete control over the system, including user management and configuration settings.

This granular control minimizes the risk of data exposure by limiting access to only the information needed to perform specific tasks. Properly implemented RBAC ensures that only authorized personnel can view and interact with sensitive data, significantly enhancing security.
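A minimal sketch of the three roles described above maps each role to a set of permissions and checks access before a report section is served. The permission names and section names are hypothetical; in practice, teams would usually rely on the RBAC features of their reporting platform or identity provider rather than a hand-rolled table.

```python
from enum import Enum


class Permission(Enum):
    VIEW_SUMMARY = "view_summary"
    VIEW_DETAILS = "view_details"        # detailed reports and underlying test data
    MANAGE_USERS = "manage_users"
    CONFIGURE_SYSTEM = "configure_system"


ROLE_PERMISSIONS = {
    "viewer": {Permission.VIEW_SUMMARY},
    "manager": {Permission.VIEW_SUMMARY, Permission.VIEW_DETAILS},
    "administrator": set(Permission),    # complete control over the system
}


def can(role: str, permission: Permission) -> bool:
    """Deny by default: unknown roles get no permissions."""
    return permission in ROLE_PERMISSIONS.get(role, set())


def get_report_section(role: str, section: str) -> str:
    """Serve a section only if the role holds the matching permission."""
    needed = Permission.VIEW_DETAILS if section == "details" else Permission.VIEW_SUMMARY
    if not can(role, needed):
        raise PermissionError(f"role {role!r} may not view {section!r}")
    return f"<{section} report>"
```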

Data Privacy and Compliance Best Practices

Maintaining data privacy and compliance is crucial. This involves adhering to relevant regulations such as GDPR, CCPA, and HIPAA, depending on the nature of the data being handled and the geographical location of users. Best practices include data anonymization or pseudonymization techniques to protect personally identifiable information (PII). Regular security audits and penetration testing help identify vulnerabilities and ensure the system remains secure.
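Pseudonymization can be as simple as replacing an identifier with a keyed hash, so the same person always maps to the same token without the raw value appearing in the report. This sketch uses HMAC-SHA-256 from the standard library; the field names and the hard-coded key are placeholders, and whether this approach satisfies a particular regulation is a question for your compliance team.

```python
import hashlib
import hmac

# Placeholder only: a real key would come from a secrets manager, never from source code.
PSEUDONYM_KEY = b"replace-with-a-secret-key"


def pseudonymize(value: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Deterministic keyed hash: the same input and key always give the same token."""
    normalized = value.strip().lower().encode("utf-8")
    digest = hmac.new(key, normalized, hashlib.sha256).hexdigest()
    return f"user_{digest[:16]}"


def scrub_record(record: dict, pii_fields: tuple[str, ...] = ("tester_email", "customer_name")) -> dict:
    """Return a copy of the record with the listed PII fields replaced by pseudonyms."""
    return {k: pseudonymize(str(v)) if k in pii_fields and v is not None else v
            for k, v in record.items()}


row = {"testCaseId": "TC-9", "tester_email": "Alice@Example.com", "status": "failed"}
safe = scrub_record(row)
```

Because the hash is keyed, someone without the key cannot rebuild the mapping by hashing a list of known email addresses.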

Implementing strong password policies and multi-factor authentication (MFA) further enhances security and helps prevent unauthorized access. Detailed audit logs tracking all user activities provide valuable insights for security monitoring and incident response. Furthermore, establishing clear data retention policies and procedures for secure data disposal is critical for maintaining compliance and minimizing risk. For instance, a company handling healthcare data must strictly adhere to HIPAA guidelines, implementing robust security measures and data encryption to protect patient information.

Similarly, companies operating in the European Union must comply with GDPR, ensuring data subjects’ rights are protected.

Future Trends in Unified Test Reporting

The landscape of software testing is constantly evolving, driven by the increasing complexity of applications and the demand for faster release cycles. Unified test reporting, already a powerful tool, stands to be significantly enhanced by emerging technologies, leading to more efficient testing processes and better-informed decisions. The integration of AI and machine learning, in particular, promises to change how teams analyze test data and derive actionable insights.

AI and machine learning could improve several aspects of unified reporting. These technologies can automate the analysis of large volumes of test data, identifying patterns and anomalies that human analysts might miss. This automation saves time and can also increase the accuracy and depth of analysis, leading to a more comprehensive understanding of software quality.

AI-Powered Anomaly Detection

AI algorithms can be trained to recognize patterns indicative of software defects or performance issues within the unified reports. For example, an AI system could analyze trends in error logs, identifying recurring problems or predicting potential failures before they occur in production. This proactive approach to defect detection can reduce the time and resources spent on debugging and improve overall software quality.

Imagine a scenario where the system flags an unusual spike in database query times, even before manual testers notice any performance degradation in the application. This early warning allows developers to address the issue proactively, preventing a potential production outage.
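Production systems might use trained models, but the core idea can be shown with a simple statistical stand-in: flag a run whose metric sits more than a few standard deviations above the baseline of the preceding runs. This is a z-score check, not machine learning, and the three-sigma threshold, five-run baseline, and query-time series are hypothetical.

```python
from statistics import mean, stdev


def flag_spikes(values: list[float], baseline: int = 5, threshold: float = 3.0) -> list[int]:
    """Return indices whose value exceeds mean + threshold * stdev of the preceding `baseline` values."""
    flagged = []
    for i in range(baseline, len(values)):
        window = values[i - baseline:i]
        mu, sigma = mean(window), stdev(window)
        if sigma > 0 and (values[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Median database query time (ms) per nightly test run; index 7 is a spike.
query_ms = [41, 43, 40, 42, 44, 43, 41, 95, 42, 43]
spikes = flag_spikes(query_ms)
```

A fuller version would exclude already-flagged points from later baselines, since a single spike inflates the standard deviation for the next few windows.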

Predictive Analytics for Test Optimization

Machine learning models can analyze historical test data to predict the effectiveness of different testing strategies. This allows teams to optimize their testing processes by focusing on the most impactful tests, reducing unnecessary testing efforts and accelerating the release cycle. For instance, a model could analyze past test results and predict which test cases are most likely to uncover critical bugs in a new release, allowing the team to prioritize those tests.

This targeted approach improves test efficiency and reduces the overall testing time.
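A full predictive model is beyond a short example, but a common baseline for the same idea is history-based prioritization: rank test cases by how often they have failed in past runs, with Laplace smoothing so tests with very short histories are not ranked on one or two data points alone. This heuristic is a stand-in for the machine learning models described above, and the history data is hypothetical.

```python
def failure_likelihood(failures: int, runs: int) -> float:
    """Laplace-smoothed failure rate: (failures + 1) / (runs + 2)."""
    return (failures + 1) / (runs + 2)


def prioritize(history: dict[str, tuple[int, int]], budget: int) -> list[str]:
    """Return the `budget` test IDs most likely to fail, given (failures, runs) per test."""
    ranked = sorted(history, key=lambda t: failure_likelihood(*history[t]), reverse=True)
    return ranked[:budget]


history = {
    "test_checkout_total": (9, 50),
    "test_login": (0, 200),
    "test_new_payment_flow": (1, 1),   # raw rate 1.0; smoothing tempers it to about 0.67
    "test_search": (2, 100),
}
first_batch = prioritize(history, budget=2)
```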

Improved Test Result Visualization and Reporting

AI can enhance the visualization of test results within unified reports. Instead of simply presenting raw data, AI can generate interactive dashboards and visualizations that highlight key trends and insights. This improved visualization makes it easier for stakeholders to understand the software’s quality and make informed decisions. For example, an AI-powered dashboard could automatically generate a heatmap visualizing the areas of the application with the highest concentration of bugs, immediately pinpointing problem areas for developers.

This kind of at-a-glance clarity is much harder to achieve with static, manually assembled reports.

Evolution of Unified Reporting: Predictions

In the coming years, we can expect unified reporting systems to become increasingly intelligent and automated. AI and machine learning will play a crucial role in this evolution, enabling more proactive, predictive, and insightful testing processes. The integration of these technologies will lead to a shift from reactive debugging to proactive quality assurance, where potential problems are identified and addressed before they impact end-users.

Furthermore, the use of natural language processing (NLP) will allow for more intuitive interaction with unified reports, enabling stakeholders to ask questions and receive relevant insights in plain language, without needing specialized technical expertise. The integration of these advanced technologies will significantly improve the efficiency and effectiveness of software testing and development.

Conclusion

So, there you have it – a journey into the world of unified test reporting. By embracing a unified approach, you’re not just streamlining your testing process; you’re empowering your team to make data-driven decisions, improve product quality, and ultimately deliver exceptional results. The benefits extend far beyond mere data consolidation; they encompass improved efficiency, enhanced communication, and a significant boost in overall project success.

Ready to ditch the data silos and embrace the power of unified reporting?

FAQ Insights

What types of testing tools can be integrated into a unified reporting system?

Most modern testing tools offer APIs or export options that make integration possible. This includes performance testing tools (e.g., JMeter, LoadRunner), security testing tools (e.g., OWASP ZAP, Burp Suite), and UI testing frameworks (e.g., Selenium, Cypress).

How much does implementing a unified reporting system cost?

Costs vary widely depending on the complexity of your testing landscape, the tools you’re using, and whether you build a custom solution or leverage existing platforms. Open-source options can be cost-effective, while commercial platforms offer more features but come with a price tag.

What are the biggest challenges in implementing unified reporting?

Key challenges include data standardization across different tools, ensuring data security and access control, and selecting appropriate visualization techniques to effectively communicate insights to diverse stakeholders.

How can I convince my team of the benefits of unified reporting?

Highlight the time saved through automated reporting, the improved decision-making based on a holistic view of test results, and the enhanced collaboration fostered by a centralized reporting system. Show them a clear ROI by quantifying the time and resources saved.