Facebook Acquires Bloomsbury AI to Curb Fake News

Facebook acquires Bloomsbury AI to curb fake news – that headline alone sparks a whirlwind of questions, doesn’t it? Is this a game-changer in the fight against misinformation? Will it truly impact the tidal wave of fake news flooding our feeds? This acquisition marks a significant move by Facebook, signaling a potential shift in their approach to content moderation and a deeper dive into AI-powered solutions.

We’ll explore the motivations behind this deal, examine Bloomsbury AI’s capabilities, and delve into the ethical implications of using AI to police online content. Buckle up, it’s going to be a fascinating ride!

The acquisition of Bloomsbury AI isn’t just another tech buyout; it’s a statement. Facebook is facing increasing pressure to combat the spread of misinformation on its platform, and this acquisition suggests a significant investment in AI-driven solutions. We’ll examine how Bloomsbury AI’s technology could be integrated into Facebook’s existing systems to detect and remove fake news, and also consider the potential challenges and limitations of such an approach.

The ethical considerations are particularly crucial, as we explore the delicate balance between freedom of speech and the need to protect users from harmful misinformation.

The Acquisition

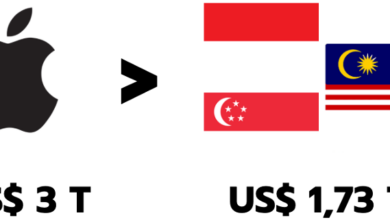

Facebook’s acquisition of Bloomsbury AI, a relatively unknown AI company specializing in natural language processing, sent ripples through the tech world. While the acquisition cost remains undisclosed, the move signifies Facebook’s continued commitment to combating misinformation and enhancing its content moderation capabilities. This isn’t just about cleaning up the platform; it’s a strategic play for the future of online information.

Facebook’s Motivations for Acquiring Bloomsbury AI

The primary motivation behind this acquisition likely lies in Bloomsbury AI’s expertise in natural language processing (NLP), the branch of AI concerned with analyzing and understanding text. NLP is crucial for identifying and mitigating the spread of fake news. Bloomsbury AI’s technology could significantly improve Facebook’s ability to detect subtle forms of text-based misinformation, such as misleading framing and cleverly disguised propaganda, which often evade simpler detection methods. Beyond fake news, this technology could also enhance other aspects of Facebook’s platform, such as improving search functionality and providing more accurate and relevant content recommendations.

Furthermore, acquiring a smaller, innovative company allows Facebook to gain access to cutting-edge talent and technology without the complexities and potential pitfalls of developing these capabilities internally.

Strategic Implications for Facebook’s Business Strategy

The acquisition of Bloomsbury AI has significant implications for Facebook’s overall business strategy. By bolstering its AI capabilities in the fight against misinformation, Facebook aims to improve user trust and engagement. A platform plagued by fake news is a platform users will actively avoid. This acquisition demonstrates Facebook’s commitment to addressing a major criticism levelled against it for years.

Moreover, improved content moderation through advanced AI could reduce the regulatory scrutiny and potential fines Facebook faces globally. This proactive approach to addressing the issue could present a stronger defense against future regulatory challenges. The strategic value also extends to enhancing Facebook’s advertising business. A cleaner, more trustworthy platform attracts higher-quality advertisers willing to pay more for their ads.

Comparison with Other Facebook Acquisitions

The acquisition of Bloomsbury AI is just one piece in Facebook’s larger strategy of acquiring companies to enhance its core services and expand into new markets. Here’s a comparison with three other notable acquisitions:

| Acquisition Year | Target Company | Acquisition Cost (if available) | Strategic Goal |
|---|---|---|---|
| 2012 | Instagram | $1 billion | Expand into photo and video sharing, enhance user engagement. |
| 2014 | WhatsApp | $19 billion | Dominate the mobile messaging market, expand user base globally. |
| 2014 | Oculus VR | $2 billion | Enter the virtual reality market, explore new avenues for social interaction and entertainment. |

Bloomsbury AI’s Technology and Capabilities

Bloomsbury AI, before its acquisition by Facebook, was a promising startup specializing in advanced natural language processing (NLP) and machine learning. Their core strength lay in developing AI systems capable of understanding and reasoning with complex textual information, far beyond simple keyword matching. This sophisticated approach was key to their potential in combating misinformation.

Bloomsbury AI’s technology leveraged a combination of techniques, including deep learning models trained on massive datasets of text and code. This allowed their AI to not only identify patterns indicative of fake news but also to understand the nuances of language, context, and intent. Unlike simpler systems that might flag articles based on keywords alone, Bloomsbury AI’s systems could analyze the entire article, including its structure, sources, and writing style, to assess its credibility.

Bloomsbury AI’s Core Capabilities in Combating Fake News

Bloomsbury AI’s capabilities directly addressed several critical challenges in identifying and mitigating the spread of fake news. Their AI could analyze the textual content of posts, articles, and comments, identifying inconsistencies, logical fallacies, and manipulative language techniques often employed in disinformation campaigns. For instance, their systems could detect discrepancies between claims made in an article and verifiable facts from reputable sources.

Furthermore, they could identify instances of plagiarism or the repurposing of previously debunked content. This multi-faceted approach provided a more robust defense against misinformation than simpler methods.
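To make the idea of spotting repurposed, previously debunked content more concrete, here is a minimal sketch in Python. It is not Bloomsbury AI’s method, which isn’t public; it simply compares overlapping three-word sequences ("shingles") using Jaccard similarity, a standard near-duplicate technique. The function names, the sample texts, and the 0.5 threshold are all assumptions chosen for illustration.

```python
import re


def shingles(text, k=3):
    """Return the set of k-word sequences in a lowercased text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a, b):
    """Jaccard similarity: size of the overlap divided by size of the union."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def matches_debunked(post, debunked_claims, threshold=0.5):
    """True if the post closely resembles any previously debunked claim.

    The threshold is an illustrative assumption, not a tuned value.
    """
    post_shingles = shingles(post)
    return any(
        jaccard(post_shingles, shingles(claim)) >= threshold
        for claim in debunked_claims
    )


# Hypothetical example data.
debunked = ["Candidate X secretly sold state secrets to a foreign power last year"]
recycled = "BREAKING: Candidate X secretly sold state secrets to a foreign power last year!"
unrelated = "The city council approved a new bike lane budget today"

print(matches_debunked(recycled, debunked))
print(matches_debunked(unrelated, debunked))
```

A real system would need to cope with paraphrasing, which word-level overlap cannot catch; that is where the deeper language understanding described above would come in.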

Integration with Facebook’s Infrastructure

Integrating Bloomsbury AI’s technology into Facebook’s existing infrastructure could involve several key steps. Firstly, the AI could be incorporated into Facebook’s content moderation systems. This would involve feeding the AI the text of posts and comments, allowing it to flag potentially false or misleading content for human review. Secondly, the AI could be used to enhance Facebook’s fact-checking partnerships.

By providing fact-checkers with AI-generated summaries and analyses of potentially false claims, the AI could streamline the fact-checking process and improve efficiency. Finally, the AI could power a new feature that provides users with warnings or explanations about potentially misleading content, educating users and empowering them to make informed decisions. Imagine a system that highlights questionable claims in a post with links to credible sources offering counter-evidence, a clear example of Bloomsbury AI’s potential impact.

Examples of Bloomsbury AI’s Technology in Action

While specific details of Bloomsbury AI’s algorithms remain confidential, we can extrapolate potential applications based on the general capabilities of similar NLP systems. For example, their AI could be trained to identify and flag posts containing fabricated quotes, manipulated images, or misleading headlines. It could also analyze the source of a post, assessing its credibility based on factors such as the publisher’s reputation and past accuracy.

Furthermore, the AI could detect patterns of coordinated disinformation campaigns, identifying accounts or groups spreading similar false narratives. This would allow Facebook to take proactive measures to limit the reach of such campaigns. Consider a scenario where the AI detects a network of accounts simultaneously posting a fabricated story about a political candidate; it could immediately alert Facebook’s moderation teams, who could then act to prevent further dissemination.
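The coordinated-campaign scenario can be sketched with a simple heuristic: group posts by their normalized text and look for many distinct accounts posting the same thing within a short time window. This is an illustrative sketch only; the function name, the tuple layout, and the defaults (three accounts, ten minutes) are assumptions, and a production system would rely on far richer signals.

```python
from collections import defaultdict


def find_coordinated_clusters(posts, min_accounts=3, window_seconds=600):
    """Find texts posted by many distinct accounts within a short window.

    posts: iterable of (account_id, timestamp_seconds, text) tuples.
    Returns a list of (normalized_text, sorted_account_ids).
    """
    by_text = defaultdict(list)
    for account, timestamp, text in posts:
        key = " ".join(text.lower().split())  # normalize case and whitespace
        by_text[key].append((timestamp, account))

    clusters = []
    for key, items in by_text.items():
        items.sort()  # order by timestamp
        start = 0
        best = set()
        for end in range(len(items)):
            # Shrink the window until it spans at most window_seconds.
            while items[end][0] - items[start][0] > window_seconds:
                start += 1
            accounts = {acc for _, acc in items[start:end + 1]}
            if len(accounts) > len(best):
                best = accounts
        if len(best) >= min_accounts:
            clusters.append((key, sorted(best)))
    return clusters


# Hypothetical example data.
sample_posts = [
    ("a", 0, "Candidate X exposed!"),
    ("b", 60, "candidate x  exposed!"),
    ("c", 120, "Candidate X Exposed!"),
    ("d", 5000, "Candidate X exposed!"),
    ("e", 10, "Nice weather today"),
]
print(find_coordinated_clusters(sample_posts))
```

Exact-text matching is easy to evade with small edits, so this should be read as the shape of the idea rather than a workable detector.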

Combating Fake News

Facebook’s battle against the spread of misinformation is a constant, evolving challenge. The platform has implemented numerous strategies to identify and remove fake news, but the sophisticated nature of disinformation campaigns necessitates continuous improvement and adaptation. The acquisition of Bloomsbury AI represents a significant step in this ongoing fight, leveraging advanced AI capabilities to bolster existing defenses.

Facebook’s current methods for combating fake news are multifaceted. They involve a combination of automated systems and human review. Automated systems analyze content for indicators of falsity, such as inconsistencies with known facts, use of misleading headlines, and patterns of coordinated sharing from known disinformation sources. Human reviewers then assess flagged content, making final judgments about its veracity and applying appropriate actions, such as removing the post or adding fact-checking labels.

The platform also relies heavily on partnerships with third-party fact-checkers who verify information and provide context. Furthermore, Facebook employs techniques to reduce the visibility of potentially false content in users’ newsfeeds through algorithmic adjustments.
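The division of labor between automated systems and human reviewers can be pictured as a triage step. The sketch below assumes a hypothetical classifier that outputs a misinformation score between 0 and 1; the thresholds and action names are invented for illustration and do not describe Facebook’s actual pipeline. Note that the automated step never removes a post on its own: it only queues content for review or reduces its reach.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    NO_ACTION = "no_action"
    HUMAN_REVIEW = "human_review"
    REDUCE_REACH = "reduce_reach"


@dataclass
class Decision:
    post_id: str
    score: float
    actions: list


def triage(post_id, score, review_threshold=0.6, reduce_reach_threshold=0.85):
    """Map a classifier score to automated actions (thresholds are assumptions)."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    actions = []
    if score >= review_threshold:
        actions.append(Action.HUMAN_REVIEW)  # a person makes the final call
    if score >= reduce_reach_threshold:
        actions.append(Action.REDUCE_REACH)  # limit spread while review is pending
    if not actions:
        actions.append(Action.NO_ACTION)
    return Decision(post_id, score, actions)


for post_id, score in [("p1", 0.2), ("p2", 0.65), ("p3", 0.9)]:
    decision = triage(post_id, score)
    print(decision.post_id, [a.value for a in decision.actions])
```

Where the thresholds sit is a policy decision as much as a technical one: lowering them catches more fake news but sends more legitimate posts to review.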

Bloomsbury AI’s Contribution to Fake News Detection

Bloomsbury AI’s technology, specializing in natural language processing and machine learning, offers significant potential to enhance Facebook’s existing capabilities. Its advanced algorithms can analyze the nuances of language, identifying subtle cues that might indicate deception or manipulation that current systems may miss. For example, Bloomsbury AI’s technology could be used to better detect sophisticated forms of disinformation, such as subtly biased narratives or the strategic use of emotionally charged language to manipulate reader responses.

By integrating Bloomsbury AI’s capabilities, Facebook can improve the accuracy and efficiency of its automated systems, allowing for quicker identification and removal of fake news, and reducing the workload on human reviewers. Furthermore, Bloomsbury AI’s technology can help in better understanding the spread patterns of misinformation, allowing for more proactive interventions.


Challenges in Using AI to Combat Fake News

The use of AI in combating fake news presents several significant challenges. While AI offers powerful tools, it is not a silver bullet solution.

- The Adaptability of Disinformation Tactics: Disinformation campaigns constantly evolve, employing new strategies and techniques to evade detection. AI systems need to be continuously updated and retrained to keep pace with these evolving tactics.

- Contextual Understanding: Determining the truthfulness of information often requires a deep understanding of context, cultural nuances, and even satire or sarcasm. AI systems may struggle with these subtleties, leading to misclassifications.

- Bias and Fairness: AI algorithms are trained on data, and if that data reflects existing biases, the algorithms themselves may perpetuate or even amplify those biases. This can lead to unfair or discriminatory outcomes in the identification and removal of content.

- The Arms Race with Disinformation Creators: As AI-powered detection systems improve, so too will the sophistication of the techniques used to create and spread fake news. This creates an ongoing “arms race” that requires constant innovation and adaptation.

- Over-reliance on AI: While AI is a valuable tool, it should not replace human judgment entirely. Over-reliance on automated systems can lead to errors and a lack of accountability.
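The bias and fairness concern in the list above is something that can be measured rather than just worried about. One common audit is to compare false positive rates across groups, that is, how often legitimate content from each group is wrongly flagged. The sketch below assumes a hypothetical set of audited records where each post has a group label (here, a language code), the system’s flag, and a human-verified verdict; the data is made up.

```python
from collections import defaultdict


def false_positive_rate_by_group(records):
    """Share of legitimate posts that were wrongly flagged, per group.

    records: iterable of (group, was_flagged, is_actually_false) tuples.
    Groups with no legitimate posts are omitted from the result.
    """
    flagged_legit = defaultdict(int)
    total_legit = defaultdict(int)
    for group, was_flagged, is_actually_false in records:
        if not is_actually_false:  # only legitimate posts count here
            total_legit[group] += 1
            if was_flagged:
                flagged_legit[group] += 1
    return {g: flagged_legit[g] / total_legit[g] for g in total_legit}


# Hypothetical audit sample: (language, flagged by the AI, verified as false).
audit = [
    ("en", True, False), ("en", False, False),
    ("en", False, False), ("en", False, False),
    ("sw", True, False), ("sw", True, False),
    ("sw", False, False), ("sw", False, False),
    ("sw", True, True),
]
print(false_positive_rate_by_group(audit))
```

In this made-up sample, legitimate posts in the second language are wrongly flagged twice as often as in the first, which is exactly the kind of gap an audit should surface before a system is rolled out widely.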

Ethical and Societal Implications

The acquisition of Bloomsbury AI by Facebook, while potentially beneficial in curbing the spread of misinformation, raises significant ethical and societal concerns. The power to control the flow of information on such a massive scale necessitates a careful examination of the potential consequences for individual freedoms and the overall health of public discourse. Balancing the need to combat fake news with the protection of free speech is a complex challenge, demanding thoughtful consideration and robust safeguards.

The use of AI in content moderation presents several ethical dilemmas. Algorithms, however sophisticated, are trained on data, and biases present in that data can be amplified and perpetuated by the AI. This can lead to the unfair or discriminatory suppression of certain viewpoints, potentially silencing marginalized communities or those expressing dissenting opinions. Furthermore, the lack of transparency in how these algorithms function makes it difficult to understand their decision-making processes, leading to a lack of accountability and potential for abuse.

Potential Impact on Freedom of Speech and Expression

The concentration of power to control online content in the hands of a single entity, even one with noble intentions, raises serious concerns about freedom of speech. The line between combating misinformation and censorship can become blurred, particularly when algorithms are tasked with making subjective judgments about the truthfulness or acceptability of content. A system that is overly sensitive to even minor inaccuracies or controversial statements could inadvertently stifle legitimate debate and the free exchange of ideas, ultimately harming the democratic process.

Consider the potential for algorithmic bias to disproportionately affect minority groups or those expressing unpopular opinions. For example, an algorithm trained primarily on Western news sources might misinterpret cultural nuances or expressions from other parts of the world, leading to the suppression of legitimate content. This would severely restrict the ability of these communities to share their perspectives and participate in the global conversation.

Hypothetical Ethical Dilemma

Imagine a scenario where Bloomsbury AI’s technology flags a post detailing alleged government corruption. While the post contains some inaccuracies, it also includes verifiable evidence of unethical behavior. Facebook, relying on Bloomsbury AI’s assessment, decides to remove the post entirely, citing the presence of misinformation. This action, while technically adhering to Facebook’s policies on fake news, silences a potentially crucial piece of information that could have triggered a legitimate public inquiry into government misconduct.

The dilemma highlights the tension between preventing the spread of falsehoods and safeguarding the public’s right to access information, even if that information is imperfect or controversial. The algorithm’s decision, driven by its programmed parameters, lacks the nuanced judgment a human might apply, potentially leading to an unjust outcome. This exemplifies the complex challenges inherent in entrusting AI with such significant power over public discourse.

Future Outlook and Predictions

The acquisition of Bloomsbury AI by Facebook represents a significant step in the ongoing battle against misinformation. While the immediate impact is focused on integrating Bloomsbury AI’s technology into Facebook’s existing infrastructure, the long-term implications are far-reaching and will likely reshape the landscape of online content moderation and the development of AI-driven solutions for combating fake news across various platforms. The success of this integration will hinge on several factors, including the adaptability of Bloomsbury AI’s technology to the sheer scale of Facebook’s user base and the ongoing evolution of sophisticated disinformation tactics.

The integration of Bloomsbury AI’s advanced AI capabilities into Facebook’s systems promises a more proactive and effective approach to identifying and mitigating the spread of fake news. This could lead to a measurable decrease in the visibility and reach of false or misleading content, ultimately improving the overall quality of information available to Facebook users. However, the challenge remains in balancing effective content moderation with the preservation of free speech and the avoidance of algorithmic bias. The long-term impact will depend on Facebook’s ability to navigate these complex ethical considerations.

Potential Long-Term Impact on Fake News Spread

The acquisition could significantly reduce the spread of fake news on Facebook. Bloomsbury AI’s technology, specializing in identifying subtle cues indicative of misinformation, could dramatically improve Facebook’s ability to detect and flag fabricated stories, manipulated images, and coordinated disinformation campaigns. We might see a reduction in the viral spread of harmful narratives, particularly those targeting vulnerable populations or influencing political discourse.

However, the constant evolution of disinformation tactics requires ongoing adaptation and improvement of the AI algorithms, necessitating continuous investment in research and development. The success will depend on the ability to stay ahead of the curve, anticipating and adapting to new methods of spreading misinformation. For example, a successful integration could mirror the impact of Google’s advancements in search algorithm technology, which significantly reduced the prominence of low-quality or misleading websites in search results over time.

Timeline for Technology Integration

The integration of Bloomsbury AI’s technology into Facebook’s vast infrastructure will likely unfold in phases.

- Phase 1 (6-12 months): Initial integration and testing. Focus will be on integrating Bloomsbury AI’s core algorithms into existing Facebook systems, conducting rigorous testing to ensure compatibility and accuracy. This phase might involve limited deployment in specific regions or on select user groups.

- Phase 2 (12-18 months): Gradual rollout and refinement. As confidence in the technology increases, a wider rollout will commence, with continuous monitoring and refinement based on real-world performance data. This phase will likely involve feedback loops with users and adjustments to algorithms to mitigate unintended consequences.

- Phase 3 (18-24 months and beyond): Full integration and expansion. Bloomsbury AI’s technology becomes a fully integrated component of Facebook’s content moderation system, continuously learning and adapting to new forms of misinformation. This phase might include the development of new features based on the insights gained from the integration.

Influence on Other Online Platforms

The success of Facebook’s integration of Bloomsbury AI’s technology could serve as a blueprint for other online platforms grappling with the problem of fake news. The acquisition could spur innovation and collaboration in the development of AI-based solutions for misinformation detection and mitigation. We might see other platforms adopting similar technologies or investing more heavily in their own AI research and development efforts.

This could lead to a more coordinated and effective global approach to combating the spread of false information online. For instance, Twitter, YouTube, and other social media companies might accelerate their own investments in AI-driven content moderation systems, potentially collaborating with Facebook or developing their own proprietary solutions based on lessons learned from the Bloomsbury AI integration.

Illustrative Example

The power of AI in identifying and mitigating fake news is best understood through a concrete example. Let’s imagine a realistic scenario where Bloomsbury AI could have made a significant difference: a fabricated story about a prominent politician that spreads rapidly across Facebook. We’ll explore how Bloomsbury AI’s technology could have intervened.

Fake News Scenario: Political Scandal

Imagine a fabricated story alleging a major political scandal involving a well-known presidential candidate, just weeks before a crucial election. The story, published on a seemingly legitimate news website, claims the candidate engaged in illegal activities, providing fabricated evidence and manipulated quotes. This article is then shared widely on Facebook, gaining traction through carefully targeted advertising and boosted posts.

The visual representation on Facebook would show a typical news article share. The post would include a compelling headline designed to grab attention, such as “Candidate X Exposed: Shocking Revelations Before Election!” The accompanying image might be a manipulated photograph or a graphic designed to look official. The post would include links to the original article and possibly to other, seemingly corroborating, sources – all part of a sophisticated disinformation campaign.

Bloomsbury AI’s technology, with its advanced natural language processing and machine learning capabilities, would have likely detected this fake news post through several mechanisms. First, the AI would analyze the text for inconsistencies and stylistic cues often found in fabricated articles. It would compare the writing style to that of known legitimate news sources, identifying deviations. Secondly, it would cross-reference the information with other reliable news outlets and databases, flagging discrepancies. Finally, it would assess the source’s credibility, considering factors such as website age, domain registration information, and social media activity.
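One way to picture how these three mechanisms might come together is as a weighted combination of signals. The sketch below is purely illustrative: the signal names, the assumption that each is already scaled to a 0 to 1 range, and the weights are all hypothetical, and nothing here reflects how Bloomsbury AI or Facebook actually score content.

```python
def misinformation_risk(style_anomaly, claim_mismatch, source_risk,
                        weights=(0.3, 0.4, 0.3)):
    """Combine three hypothetical signals (each 0 to 1) into one risk score.

    style_anomaly:  how far the writing deviates from legitimate news style
    claim_mismatch: how strongly claims conflict with reliable outlets
    source_risk:    how untrustworthy the publishing source appears
    """
    signals = (style_anomaly, claim_mismatch, source_risk)
    for value in signals:
        if not 0.0 <= value <= 1.0:
            raise ValueError("each signal must be between 0 and 1")
    total_weight = sum(weights)
    # Weighted average, so the result also stays between 0 and 1.
    return sum(w * s for w, s in zip(weights, signals)) / total_weight


# A story with odd style, claims contradicted elsewhere, and a brand-new domain.
print(round(misinformation_risk(0.7, 0.9, 0.8), 2))
```

A weighted average is the simplest possible choice; a learned model would normally replace hand-picked weights, but the principle of pooling independent signals stays the same.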


The user interface on Facebook would subtly reflect the AI’s assessment. A small flag or warning icon might appear next to the post, indicating a potential risk of misinformation. Clicking this icon would open a detailed explanation, providing links to fact-checking websites and other credible sources that debunk the claims. This would allow users to make informed decisions about the information they consume, reducing the impact of the fake news campaign. Furthermore, the AI’s detection system would flag the post to Facebook moderators for further review and potential removal. The algorithm could also adjust the post’s reach and visibility, limiting its spread.

Conclusion

Facebook’s acquisition of Bloomsbury AI represents a bold step in the ongoing battle against fake news. While the long-term impact remains to be seen, the potential for AI-powered solutions to significantly improve content moderation is undeniable. However, navigating the ethical complexities and potential unintended consequences will be crucial. This isn’t just about technology; it’s about the future of information, discourse, and the very fabric of our online interactions.

The success of this venture will depend not only on the technical capabilities of Bloomsbury AI but also on Facebook’s commitment to responsible AI development and deployment. It’s a story that will continue to unfold, and we’ll be watching closely.

FAQ Guide

What specific AI technologies does Bloomsbury AI possess?

Bloomsbury AI’s exact technologies aren’t publicly available in detail, but it’s likely they involve natural language processing (NLP) and machine learning algorithms to analyze text and identify patterns indicative of fake news.

How much did Facebook pay for Bloomsbury AI?

The acquisition cost hasn’t been publicly disclosed by either company.

What are the potential downsides of relying on AI to combat fake news?

AI can be biased, leading to the censorship of legitimate content. It can also be easily gamed by sophisticated disinformation campaigns, rendering it ineffective.

Could this lead to increased censorship on Facebook?

This is a major concern. The potential for over-reliance on AI to flag content could lead to the suppression of legitimate viewpoints and voices.