GenAI Regulation: Why It Isn’t One Size Fits All

Why isn’t GenAI regulation one size fits all? That’s the burning question facing us as generative AI explodes onto the scene. From crafting breathtaking art to diagnosing medical conditions, AI’s potential is vast, but so are the challenges. A single regulatory approach simply won’t cut it; the diverse applications and potential pitfalls demand a nuanced and adaptable strategy.

We need to consider everything from data privacy and intellectual property to algorithmic bias and the very real impact on jobs and society.

The rapid advancement of generative AI technologies across various sectors presents unprecedented opportunities and challenges. Healthcare, finance, art, and education are just a few areas where these tools are transforming processes and creating new possibilities. However, the unique risks associated with each application necessitate tailored regulatory frameworks. A “one-size-fits-all” approach risks stifling innovation in some areas while failing to adequately address the risks in others.

This post explores the complexities and argues for a more adaptable regulatory landscape.

The Diverse Landscape of Generative AI Applications

Generative AI, with its capacity to create new content ranging from text and images to code and music, is rapidly transforming various sectors. However, the diverse nature of these applications necessitates a nuanced approach to regulation, as a one-size-fits-all solution risks stifling innovation while failing to adequately address potential harms. Understanding this diverse landscape is crucial for developing effective and responsible regulatory frameworks.

Generative AI Applications Across Sectors

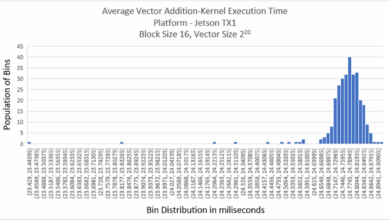

The potential of generative AI is vast, spanning numerous industries and impacting our daily lives in profound ways. The following table illustrates some key applications and their associated benefits and risks:

| Application | Sector | Potential Benefits | Potential Risks |
|---|---|---|---|
| Drug discovery and development using generative models | Healthcare | Accelerated drug discovery, personalized medicine, reduced development costs | Potential for inaccurate predictions, ethical concerns regarding data privacy and algorithmic bias, safety concerns with novel drug compounds. |
| Fraud detection and risk assessment models | Finance | Improved accuracy in identifying fraudulent transactions, enhanced risk management, more efficient allocation of resources | Potential for bias in algorithms leading to discriminatory outcomes, concerns about data privacy and security breaches, over-reliance on AI predictions leading to human error. |
| AI art generation and digital content creation tools | Art | Increased accessibility to creative tools, new forms of artistic expression, democratization of art creation | Concerns about copyright infringement and intellectual property rights, potential displacement of human artists, ethical considerations regarding authenticity and originality. |
| Personalized learning platforms and AI-powered tutoring systems | Education | Tailored learning experiences, improved student engagement, increased accessibility to education | Potential for algorithmic bias leading to inequitable outcomes, concerns about data privacy and security, over-reliance on technology leading to a decline in human interaction. |

Challenges and Opportunities Presented by Diverse Applications

Each application of generative AI presents unique challenges and opportunities. For example, while AI-powered drug discovery offers the potential to revolutionize healthcare, it also raises significant ethical concerns regarding data privacy and algorithmic bias, which must be carefully addressed. Similarly, the use of generative AI in finance requires robust safeguards to mitigate the risk of discriminatory outcomes and security breaches.

In art, the rise of AI art generation tools necessitates a re-evaluation of copyright laws and intellectual property rights. In education, personalized learning platforms must be designed to ensure equitable access and avoid exacerbating existing inequalities.

Illustrative Scenario: A One-Size-Fits-All Approach to Regulation

Imagine a scenario where a single, overly restrictive regulatory framework is applied to all generative AI applications. This could severely hamper the development of AI-powered drug discovery tools. The stringent requirements for data privacy and model validation, while necessary in some contexts, might become overly burdensome for smaller research teams working on novel drug compounds. This could lead to delays in research, hindering the development of life-saving treatments and ultimately harming patients.

The increased regulatory burden could also stifle innovation and push research overseas to regions with less stringent regulations.

Data Privacy and Security Concerns

Generative AI models, while offering incredible potential, present significant challenges to data privacy and security. The vast amounts of data used to train these models, coupled with the nature of their outputs, create a complex landscape of legal and ethical considerations that vary significantly across different jurisdictions. Understanding these differences and the unique challenges posed by generative AI is crucial for responsible development and deployment.

The sheer scale of data used in training generative AI models is unprecedented. These models often learn from massive datasets encompassing personal information, copyrighted material, and sensitive data. This raises concerns about the potential for unintended disclosure or misuse of this information, even if the model itself doesn’t explicitly reveal it. Furthermore, the outputs generated by these models can themselves contain sensitive information, reflecting biases present in the training data or even generating entirely new, potentially harmful content.

Data Privacy Regulations: A Comparison

The regulatory landscape for data privacy is fragmented, with different jurisdictions adopting distinct approaches. The European Union’s General Data Protection Regulation (GDPR) sets a high bar for data protection, granting individuals significant control over their personal data. The California Consumer Privacy Act (CCPA), while less stringent than the GDPR, provides California residents with certain rights regarding their personal information.

These differences create challenges for companies operating globally, requiring them to navigate a complex web of regulations. For instance, a company using a generative AI model trained on a global dataset needs to ensure compliance with GDPR for European users and CCPA for California users, among other potentially applicable laws. The absence of a harmonized global standard significantly complicates compliance efforts.

One-size-fits-all regulation for generative AI is a recipe for disaster; the nuances are vast. Consider data privacy: reports that Facebook asked for users’ bank account information and card transactions highlight the need for targeted, context-specific rules.

The incident underscores why a blanket approach to AI regulation simply won’t work; different applications call for different regulatory treatment.

Data Privacy Challenges Posed by Generative AI

Generative AI models present unique challenges to data privacy. During the training phase, the model ingests massive datasets, potentially including personal information collected without explicit consent. Even if this information is anonymized, techniques like membership inference attacks can reveal whether a specific individual’s record was part of the training dataset. Moreover, the outputs generated by the model can inadvertently reveal information about the training data, leading to unintended disclosure.

For example, a model trained on medical records might generate outputs that reveal patterns or details specific to individual patients, even if the outputs don’t explicitly name them. This “data leakage” poses a significant threat to individual privacy. Furthermore, the potential for generative AI to create deepfakes and other forms of synthetic media adds another layer of complexity to the privacy challenge, as these outputs can be used to spread misinformation or impersonate individuals.
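To make the membership inference risk more concrete, here is a minimal sketch of the simplest form of the attack, a loss threshold test. It assumes the attacker can obtain a per-record loss from the model, relying on the tendency of models to fit records they were trained on more closely. The function names, loss values, and threshold are all hypothetical and chosen only for illustration.

```python
from typing import List, Sequence


def guess_membership(losses: Sequence[float], threshold: float) -> List[bool]:
    """Guess that a record was in the training set when its loss is below the threshold."""
    return [loss < threshold for loss in losses]


def attack_accuracy(guesses: Sequence[bool], truth: Sequence[bool]) -> float:
    """Fraction of records whose membership was guessed correctly."""
    correct = sum(g == t for g, t in zip(guesses, truth))
    return correct / len(truth)


# Made-up losses: training records tend to have lower loss, but not always.
member_losses = [0.05, 0.10, 0.20, 0.90]
non_member_losses = [0.60, 0.80, 1.20, 0.15]

losses = member_losses + non_member_losses
truth = [True] * len(member_losses) + [False] * len(non_member_losses)

guesses = guess_membership(losses, threshold=0.5)
accuracy = attack_accuracy(guesses, truth)
print(f"attack accuracy: {accuracy:.2f}")
```

An accuracy well above 0.5 on a balanced sample like this would suggest the model leaks membership information, which is the kind of evidence a privacy audit might look for.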

Hypothetical Data Breach Case Study

Imagine a company uses a generative AI model to create personalized marketing content. The model is trained on a large dataset containing customer names, addresses, purchase history, and browsing behavior. A data breach occurs, exposing a portion of the training data.

Under GDPR, the company would generally be obligated to notify the relevant supervisory authority within 72 hours of becoming aware of the breach, and to inform affected individuals without undue delay where the breach is likely to pose a high risk to them. It could face substantial fines for non-compliance. The company would also need to demonstrate that it implemented appropriate technical and organizational measures to protect the data.

In California, the company would be required to notify affected residents about the breach and the types of personal information exposed. Under CCPA, residents would also have the right to request that the company delete their personal information.

The differing requirements under GDPR and CCPA highlight the challenges of navigating a fragmented regulatory landscape.

A single data breach could trigger multiple investigations and legal actions, depending on the location of the affected individuals. This underscores the need for a more harmonized approach to data privacy regulation in the age of generative AI.
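As a rough sketch of how compliance tooling might track these overlapping obligations, the snippet below computes a first-notification deadline per regime from the moment a breach is discovered. The rule table is a hypothetical simplification: it models only the 72-hour GDPR window mentioned above and deliberately leaves the California entry without a fixed hour count rather than guessing one.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List, Optional

# Hypothetical rule table: hours allowed for the first notification, or None
# when this sketch does not model a fixed window for that regime.
NOTIFICATION_WINDOW_HOURS: Dict[str, Optional[int]] = {
    "GDPR": 72,
    "CCPA": None,
}


def notification_deadlines(
    discovered_at: datetime, regimes: List[str]
) -> Dict[str, Optional[datetime]]:
    """Return a notification deadline per regime, or None if no fixed window is modeled."""
    deadlines: Dict[str, Optional[datetime]] = {}
    for regime in regimes:
        hours = NOTIFICATION_WINDOW_HOURS[regime]
        if hours is None:
            deadlines[regime] = None
        else:
            deadlines[regime] = discovered_at + timedelta(hours=hours)
    return deadlines


# Example discovery time, chosen arbitrarily.
discovered = datetime(2024, 3, 1, 9, 0, tzinfo=timezone.utc)
deadlines = notification_deadlines(discovered, ["GDPR", "CCPA"])
for regime, deadline in deadlines.items():
    print(regime, deadline.isoformat() if deadline else "no fixed window modeled")
```

Even this toy version shows the core problem: one incident produces a different set of clocks and duties for each jurisdiction whose residents are affected.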

Intellectual Property Rights

The rise of generative AI presents a significant challenge to existing intellectual property (IP) frameworks. These frameworks, designed for human creativity, struggle to adapt to the automated generation of content by algorithms trained on vast datasets. The core questions surrounding ownership, infringement, and the very definition of authorship are thrown into sharp relief. Determining who holds the rights to AI-generated works (the developer of the AI, the user who prompted it, or even the AI itself) is a complex legal and ethical minefield.

The complexities of copyright and patent law in the context of AI-generated content stem from the inherent nature of these systems. Generative AI models don’t simply copy existing works; they learn patterns and styles from training data, then synthesize new outputs based on those patterns. This raises questions about whether the resulting work is sufficiently “original” to warrant copyright protection, a key requirement in most jurisdictions. Furthermore, the very act of training an AI on copyrighted material raises concerns about fair use and potential infringement, even if the AI’s output is distinct from any single work in the training set.

Patent law faces similar challenges, with questions arising around whether AI-generated inventions are patentable, considering the lack of a traditional “inventor.”

Copyright Protection of AI-Generated Works

The question of copyright protection for AI-generated works is highly contested. In the United States, for example, copyright law generally requires human authorship. This presents a clear hurdle for AI-generated works, where the creative process is largely automated. However, some argue that the user who provides the input or prompts to the AI should be considered the author, while others believe that the developers of the AI should hold the copyright.

Different jurisdictions are likely to adopt varying approaches, leading to potential conflicts and inconsistencies in the international landscape. One could imagine a scenario where a work is considered copyrighted in one country but not in another, creating a legal quagmire for creators and distributors. For instance, the EU is currently debating how to best address the issue of AI-generated content within its existing copyright framework.

Patent Protection for AI-Inventions

The patentability of AI-generated inventions is another area of significant uncertainty. Traditional patent law requires an inventor – a human being – to have conceived of and reduced the invention to practice. When an AI system generates an invention without direct human intervention, the question of inventorship becomes problematic. Some jurisdictions might consider the AI’s developer as the inventor, while others might refuse to grant patents for AI-generated inventions altogether.

The outcome will depend on how each legal system interprets the requirement of human inventiveness. Consider the example of an AI designing a novel chemical compound; determining who owns the patent for this invention – the company that developed the AI, the user who prompted it, or no one at all – remains a contentious and open question.

Potential Conflicts Between Existing IP Frameworks and Generative AI

Existing intellectual property frameworks, developed for a world of human creativity, often fail to account for the unique capabilities of generative AI. The ability of AI to learn from and synthesize vast amounts of data leads to potential conflicts in several areas. One major conflict arises from the use of copyrighted material in training datasets. While fair use doctrines exist, whether training on copyrighted works at this scale and scope qualifies as fair use remains unsettled.

This could lead to widespread copyright infringement lawsuits against AI developers and users. Another significant conflict arises from the difficulty in determining ownership of AI-generated works. The lack of a clear “author” creates ambiguity in the assignment of copyright, leading to potential disputes and legal uncertainty. Finally, the potential for AI to generate works that infringe on existing patents presents another challenge, requiring a re-evaluation of patent protection mechanisms in the age of artificial intelligence.

Algorithmic Bias and Fairness

Generative AI models, while incredibly powerful, are not immune to the biases present in the data they are trained on. These biases, often subtle and unintentional, can lead to AI systems that perpetuate and even amplify existing societal inequalities. Understanding how these biases emerge and developing strategies to mitigate them is crucial for ensuring fairness and responsible AI development.

The outputs of generative AI models are directly shaped by the data they’ve learned from. If the training data overrepresents certain demographics or viewpoints while underrepresenting others, the model will inevitably reflect these imbalances. For instance, a language model trained on a dataset predominantly featuring male authors might generate text that consistently portrays women in stereotypical or subordinate roles. Similarly, a facial recognition system trained primarily on images of light-skinned individuals might perform poorly when identifying individuals with darker skin tones, leading to inaccurate and potentially harmful consequences.

This isn’t a matter of the AI being inherently prejudiced; it’s a consequence of biased input data.

Bias Amplification Through Lack of Tailored Regulation

A lack of tailored regulation in the generative AI space risks exacerbating these existing societal biases. Without clear guidelines and oversight, developers may prioritize speed and efficiency over fairness, leading to the widespread deployment of biased AI systems. This could have significant implications across various sectors, from recruitment and loan applications to criminal justice and healthcare. Imagine a recruitment AI trained on historical hiring data that reflects past gender or racial biases; such a system could perpetuate these inequalities by unfairly favoring certain candidates over others.

Without regulatory intervention, such biased systems could become entrenched, making it harder to address the underlying societal issues.

Methods for Mitigating Algorithmic Bias

Addressing algorithmic bias requires a multi-pronged approach. It’s not enough to simply address the symptoms; we need to tackle the root causes within the data and the development process.

Effective mitigation strategies include:

- Data Auditing and Preprocessing: Before training, carefully examine the training data for imbalances and biases. This involves identifying and correcting skewed representations of demographics, perspectives, and other relevant factors. Techniques like data augmentation (adding underrepresented data) and re-weighting (adjusting the influence of overrepresented data) can help.

- Algorithmic Adjustments: Employ algorithmic techniques designed to reduce bias. This might involve using fairness-aware algorithms that explicitly consider and mitigate bias during model training. Examples include techniques like adversarial debiasing and fairness constraints.

- Diversity in Development Teams: Diverse development teams are crucial for identifying and addressing potential biases. Teams with varied backgrounds and perspectives are more likely to recognize and mitigate biases that might be missed by homogenous groups.

- Ongoing Monitoring and Evaluation: Regularly monitor the performance of deployed AI systems to identify and address emerging biases. This includes evaluating the model’s outputs for fairness across different demographic groups and continuously improving the model based on these evaluations. This continuous feedback loop is essential for adapting to evolving biases and ensuring long-term fairness.

- Transparency and Explainability: Develop AI systems that are transparent and explainable. This allows for better understanding of how the model makes decisions, facilitating the identification and correction of biases. Techniques like interpretable machine learning can help in this regard.
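Two of the strategies above, re-weighting and per-group monitoring, can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a complete fairness toolkit: the group labels and predictions are invented, the function names are hypothetical, and the gap in positive prediction rates is only one of several fairness measures an audit might use.

```python
from collections import Counter
from typing import Dict, List, Sequence


def inverse_frequency_weights(groups: Sequence[str]) -> List[float]:
    """Weight each record so that every group contributes equally in total."""
    counts = Counter(groups)
    n_groups = len(counts)
    total = len(groups)
    return [total / (n_groups * counts[g]) for g in groups]


def positive_rate_by_group(
    groups: Sequence[str], predictions: Sequence[int]
) -> Dict[str, float]:
    """Share of positive predictions (1) within each group."""
    totals = Counter(groups)
    positives = Counter(g for g, p in zip(groups, predictions) if p == 1)
    return {g: positives[g] / totals[g] for g in totals}


def parity_gap(rates: Dict[str, float]) -> float:
    """Difference between the highest and lowest group positive rates."""
    return max(rates.values()) - min(rates.values())


# Invented data: group "a" is overrepresented and receives all positive predictions.
groups = ["a", "a", "a", "b"]
predictions = [1, 1, 0, 0]

weights = inverse_frequency_weights(groups)
rates = positive_rate_by_group(groups, predictions)
gap = parity_gap(rates)
print("weights:", weights)
print("positive rate by group:", rates)
print("parity gap:", gap)
```

The weights could be passed to a training routine that accepts per-sample weights, while the gap is the kind of number an ongoing monitoring process would track over time for a deployed system.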

Economic and Societal Impacts

Generative AI’s rapid advancement presents a complex tapestry of economic and societal consequences, varying significantly depending on a nation’s technological infrastructure, economic structure, and social fabric. While offering immense potential for growth and innovation, it also poses challenges that require careful consideration and proactive policy responses. Understanding these multifaceted impacts is crucial for harnessing the technology’s benefits while mitigating its potential downsides.

Generative AI’s economic impact is unevenly distributed globally. Developed nations with robust technological ecosystems and skilled workforces are poised to reap significant benefits, particularly in sectors like healthcare, finance, and manufacturing. These nations are likely to experience increased productivity, new job creation in AI-related fields, and economic growth fueled by AI-driven innovation. However, developing nations might face a wider gap in technological capabilities, potentially leading to increased economic disparity and dependence on developed nations for AI-related services and technologies.

For example, the automation potential of generative AI in manufacturing could lead to job losses in developing countries heavily reliant on low-skill manufacturing jobs, while simultaneously creating high-skill jobs in developed nations capable of developing and deploying these technologies.

Generative AI’s Impact on Employment and the Workforce

The impact of generative AI on employment is a central concern. While some jobs will inevitably be automated, leading to displacement of workers in certain sectors, generative AI is also projected to create new jobs in areas like AI development, maintenance, and data science. The net effect on employment will depend on several factors, including the pace of technological adoption, the availability of retraining programs, and government policies aimed at supporting workforce transitions.

For instance, the legal profession could see a reduction in the need for paralegals performing routine document review tasks, while simultaneously requiring more lawyers skilled in using and interpreting AI-generated legal analyses. The healthcare sector could see increased efficiency through AI-driven diagnostics, but this may also require retraining medical professionals to effectively integrate these technologies into their practice.

Societal Impacts of Generative AI

The societal impacts of generative AI are profound and far-reaching. It’s essential to consider both the positive and negative consequences to ensure responsible development and deployment.

| Positive Consequences | Negative Consequences |
|---|---|
| Increased efficiency and productivity across various sectors | Job displacement and widening income inequality |
| Improved access to information and education through personalized learning tools | Spread of misinformation and deepfakes, eroding public trust |
| Advancements in healthcare through AI-driven diagnostics and drug discovery | Exacerbation of existing biases in algorithms, leading to unfair outcomes |
| Enhanced creativity and artistic expression through AI-powered tools | Ethical concerns surrounding the use of AI in surveillance and decision-making |
| Development of new solutions for environmental challenges | Potential for misuse of AI in autonomous weapons systems |

International Cooperation and Harmonization

The rapid advancement and global proliferation of generative AI necessitates a coordinated international approach to regulation. A “one-size-fits-all” solution is simply not feasible given the diverse technological landscapes, legal frameworks, and societal values across nations. Successfully navigating this complex terrain requires a delicate balance between establishing common ground and respecting national sovereignty.

Establishing international standards for generative AI regulation presents significant challenges. Differing interpretations of data privacy, intellectual property, and acceptable levels of algorithmic bias, among other factors, create friction points in forging a unified regulatory framework. The sheer pace of technological innovation further complicates matters, making it difficult for international bodies to keep up with emerging risks and opportunities. Moreover, the varying levels of technological development and regulatory capacity across countries present a significant hurdle to achieving global harmonization.

Challenges in Establishing International Standards

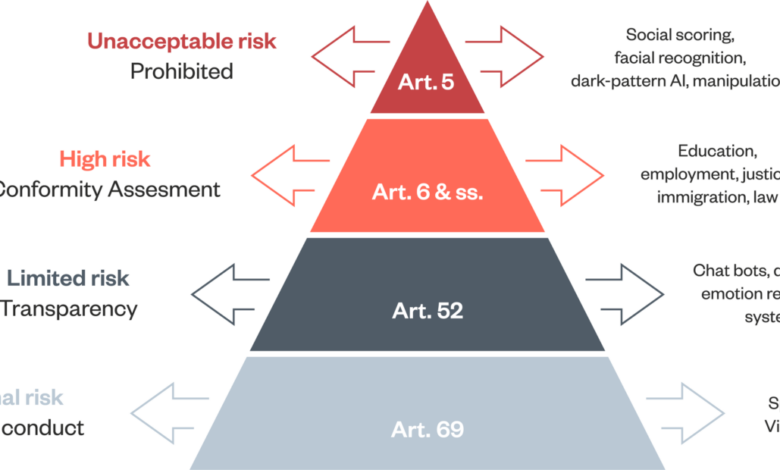

Several key obstacles hinder the creation of universally accepted generative AI regulations. Firstly, there’s a lack of consensus on fundamental definitions and classifications of generative AI systems. What constitutes “high-risk” AI varies considerably across jurisdictions. Secondly, the enforcement of international standards is problematic, as there isn’t a supranational body with the authority to directly enforce global regulations. Finally, the potential for regulatory arbitrage – where companies relocate to jurisdictions with less stringent regulations – poses a significant challenge to the effectiveness of any international agreement.

The EU’s AI Act, for example, while ambitious, could face challenges if companies simply move their operations to regions with less restrictive rules.

Potential Areas of International Cooperation

Despite the challenges, several areas offer promising avenues for international collaboration. One crucial area is the sharing of best practices and research findings on the risks and benefits of generative AI. International collaborations on developing technical standards for AI safety and security could significantly reduce risks and enhance trust. Joint initiatives to address algorithmic bias and ensure fairness in AI systems are also essential.

Finally, cooperation on data governance and cross-border data flows is critical, as generative AI models often rely on massive datasets from multiple countries. The establishment of international data sharing agreements, based on principles of transparency and accountability, would greatly facilitate the development of responsible AI.

Strategies for Promoting Harmonized Regulation

Promoting a harmonized approach requires a multi-pronged strategy. Firstly, fostering dialogue and collaboration between national regulatory bodies is crucial. This includes establishing working groups and task forces to share information and develop common standards. Secondly, the development of flexible, adaptable regulatory frameworks is necessary to accommodate the rapid pace of technological change. Instead of rigid, prescriptive rules, a principles-based approach, focusing on outcomes rather than specific technical requirements, might prove more effective.

Thirdly, recognizing and respecting national differences is vital. Harmonization should not come at the expense of national sovereignty or the ability of individual countries to tailor regulations to their specific contexts and priorities. A phased approach, starting with areas of broad agreement and gradually expanding to more complex issues, could be more effective than attempting a comprehensive, global agreement upfront.

The success of such a strategy hinges on a commitment to transparency, mutual respect, and a shared understanding of the importance of responsible AI development and deployment.

The Role of Government and Industry

The development and deployment of generative AI technologies present a complex challenge, demanding a careful balancing act between fostering innovation and mitigating potential risks. This necessitates a collaborative effort between governments and industry stakeholders, each with distinct yet interconnected roles in shaping the regulatory landscape. Effective governance requires a nuanced approach, recognizing the unique characteristics of different AI applications and their potential societal impacts.

Governments are primarily responsible for establishing a robust legal framework that protects citizens while encouraging technological advancement. Industry, on the other hand, possesses the technical expertise and practical experience necessary for the responsible development and implementation of AI systems. A successful regulatory environment will depend on the effective collaboration and communication between these two key players.

Government Responsibilities in Generative AI Regulation

Governments play a crucial role in setting the overall ethical and legal standards for generative AI. This includes establishing clear guidelines on data privacy, intellectual property rights, algorithmic bias, and the responsible use of AI in various sectors. Legislatures must create laws that are both comprehensive and adaptable to the rapidly evolving nature of AI technology. Furthermore, government agencies are responsible for enforcing these regulations, monitoring compliance, and investigating potential violations.

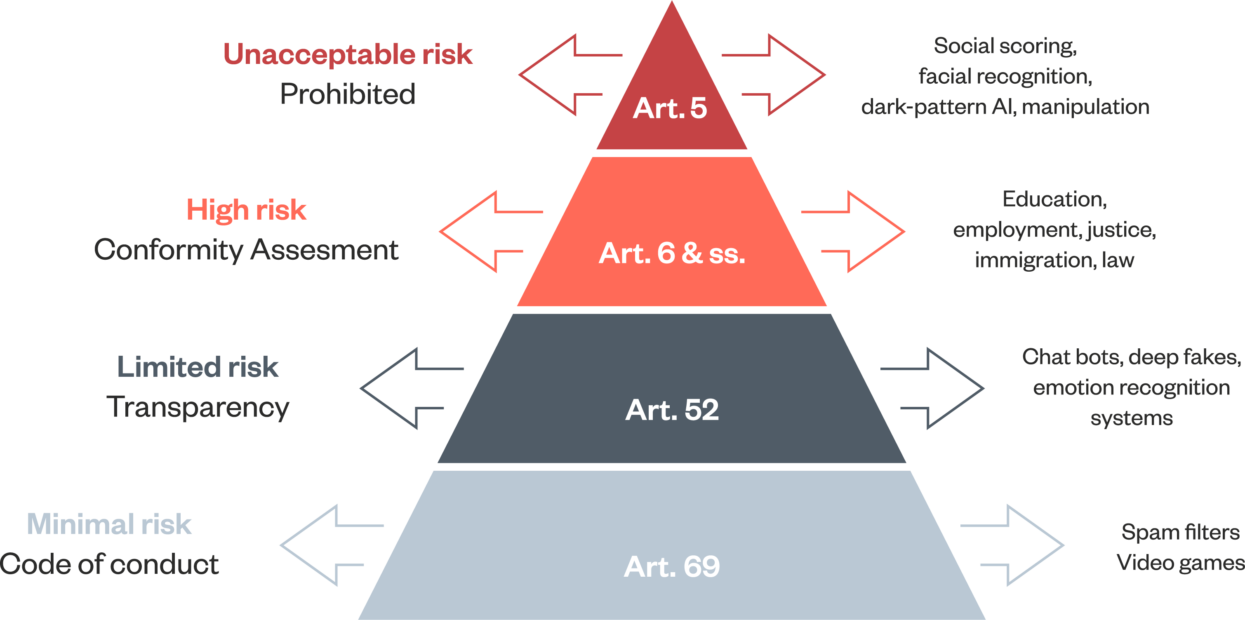

This requires significant investment in technical expertise and resources to effectively oversee the complex technological landscape. Examples include the EU’s AI Act, which aims to classify AI systems based on risk and establish specific requirements for each category, and the various state-level initiatives in the US focusing on algorithmic transparency and accountability.
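The risk-based idea can be sketched as a simple lookup: each use case is assigned a tier, and each tier carries its own obligations. The four tier names below follow the commonly described structure of the EU’s AI Act, but the use cases, their tier assignments, and the obligation lists are hypothetical simplifications for illustration, not a statement of what the law requires.

```python
from enum import Enum
from typing import Dict, List


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical assignments, for illustration only.
EXAMPLE_USE_CASE_TIERS: Dict[str, RiskTier] = {
    "recruitment_screening": RiskTier.HIGH,
    "customer_service_chatbot": RiskTier.LIMITED,
    "spam_filter": RiskTier.MINIMAL,
}

# Hypothetical, heavily simplified obligations per tier.
EXAMPLE_OBLIGATIONS: Dict[RiskTier, List[str]] = {
    RiskTier.UNACCEPTABLE: ["prohibited"],
    RiskTier.HIGH: ["risk management", "human oversight", "documentation"],
    RiskTier.LIMITED: ["transparency notice"],
    RiskTier.MINIMAL: [],
}


def obligations_for(use_case: str) -> List[str]:
    """Look up the example obligations attached to a use case's risk tier."""
    tier = EXAMPLE_USE_CASE_TIERS[use_case]
    return EXAMPLE_OBLIGATIONS[tier]


for use_case in EXAMPLE_USE_CASE_TIERS:
    print(use_case, "->", obligations_for(use_case))
```

The design choice worth noticing is that the rules attach to the tier rather than to the underlying technology, so the same generative model can face very different requirements depending on where it is deployed.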

Industry’s Role in Responsible AI Development

Industry stakeholders, including AI developers, technology companies, and research institutions, have a crucial role to play in ensuring the responsible development and deployment of generative AI. This involves implementing internal ethical guidelines, conducting rigorous testing to identify and mitigate biases, and proactively addressing potential risks associated with their AI systems. Companies must also prioritize data privacy and security, ensuring that user data is handled responsibly and in compliance with relevant regulations.

Transparency in the development and functioning of AI systems is paramount, allowing for public scrutiny and accountability. Examples of industry initiatives include the Partnership on AI, a multi-stakeholder organization working to advance responsible AI development, and various corporate commitments to ethical AI principles.

Models for Collaborative Governance

The most effective approach to generative AI regulation likely involves a combination of self-regulation and public-private partnerships. Self-regulation allows industry to leverage its technical expertise to develop internal standards and best practices, promoting innovation while adhering to ethical principles. However, self-regulation alone is insufficient, requiring government oversight to ensure accountability and prevent potential abuses. Public-private partnerships can facilitate a more effective dialogue and collaboration between government and industry, leveraging the strengths of both sectors to create a comprehensive regulatory framework.

This collaborative approach can also help to foster trust and transparency, promoting public acceptance of AI technologies.

Decision-Making Process for Implementing and Enforcing Generative AI Regulations

The following flowchart illustrates a simplified representation of the decision-making process for implementing and enforcing generative AI regulations:

[Diagram description: A flowchart showing a cyclical process. It begins with “Identification of Risks and Needs,” leading to “Policy Development and Consultation (Government and Industry).” This then flows to “Regulation Drafting and Approval,” followed by “Implementation and Enforcement (Government Agencies).” The next step is “Monitoring and Evaluation,” which feeds back into “Identification of Risks and Needs,” completing the cycle. Each stage includes decision points and feedback loops to ensure adaptability and continuous improvement.]

Conclusion

Navigating the complex world of GenAI regulation requires a delicate balance. We can’t afford a rigid, one-size-fits-all approach that stifles innovation, yet we also need strong safeguards to mitigate the significant risks. The future of GenAI depends on fostering collaboration between governments, industry, and researchers to create adaptable frameworks that promote responsible development and deployment while encouraging the immense potential of this technology.

The conversation is just beginning, and the need for ongoing dialogue and adaptation is clear.

Answers to Common Questions

What are the biggest challenges in regulating GenAI internationally?

Harmonizing regulations across different countries with varying legal systems and priorities is a huge hurdle. Different nations may have different levels of technological advancement, data protection standards, and societal values, making a globally unified approach extremely difficult.

How can we ensure fairness and mitigate bias in GenAI systems?

Careful curation of training data is crucial. This includes actively seeking diverse datasets and employing techniques to detect and mitigate bias during the model development process. Ongoing monitoring and auditing of AI systems are also essential.

What is the role of self-regulation in the GenAI space?

Industry self-regulation can play a significant role, but it needs to be complemented by robust government oversight. Industry bodies can develop best practices and ethical guidelines, but independent audits and enforcement mechanisms are necessary to ensure accountability.

How will GenAI regulation impact small businesses?

The impact will vary greatly depending on the specific industry and the regulations implemented. Some small businesses may find it challenging to comply with complex regulations, while others may benefit from increased transparency and trust. Targeted support and resources for smaller companies navigating the regulatory landscape will be crucial.