Generative AI and Cybersecurity Fear, Uncertainty, Doubt

Generative ai and cybersecurity in a state of fear uncertainty and doubt – Generative AI and cybersecurity in a state of fear, uncertainty, and doubt – it’s a headline that’s grabbing everyone’s attention these days, and for good reason. The rapid advancement of generative AI brings incredible potential, but also introduces a whole new landscape of security risks. We’re facing a situation where the very technology designed to create is also capable of destruction – think deepfakes, sophisticated phishing scams, and AI-powered malware.

This isn’t just a tech problem; it’s a societal one, impacting everything from elections to healthcare. Let’s dive into the complexities and explore how we can navigate this brave new world responsibly.

The core issue lies in the inherent vulnerabilities of generative AI models. Their reliance on massive datasets, complex architectures, and the very nature of their generative capabilities create attack vectors that traditional cybersecurity measures struggle to address. The potential for misuse is immense, leading to a justified – though sometimes exaggerated – sense of fear and uncertainty. Understanding these risks, however, is the first step towards building a more secure future with generative AI.

Generative AI’s Vulnerability Landscape

Generative AI, while offering incredible potential, introduces a new and complex security landscape. Unlike traditional software with relatively well-defined attack surfaces, generative AI’s reliance on vast datasets, intricate training processes, and novel architectures creates unique vulnerabilities that require a different approach to security. Understanding these vulnerabilities is crucial for mitigating the risks associated with deploying and utilizing these powerful technologies.

Data Poisoning and Model Manipulation

The training data used to create generative AI models is a critical point of vulnerability. Malicious actors can attempt to “poison” this data by introducing subtly biased or malicious inputs during the training phase. This can lead to the model generating biased, inaccurate, or even harmful outputs. For example, an image generation model trained on a dataset containing subtly altered images could produce outputs reflecting those alterations, potentially leading to the creation of deepfakes or other malicious content.

Similarly, poisoning a large language model’s training data could cause it to generate biased or discriminatory text. The scale of the data involved makes detection and remediation incredibly challenging.

Prompt Injection Attacks

Generative AI models often interact with users through prompts. A prompt injection attack involves crafting malicious prompts that cause the model to behave unexpectedly or generate undesirable outputs. This could range from revealing sensitive information to generating malicious code. For instance, a carefully crafted prompt could trick a language model into disclosing its training data or internal parameters, potentially compromising its security and revealing confidential information.

The sophistication of these attacks can vary widely, from simple manipulation to complex adversarial prompts designed to exploit weaknesses in the model’s reasoning capabilities.

Model Extraction and Inference Attacks

Model extraction attacks involve attempting to reconstruct or steal a generative AI model’s internal workings. This can be achieved through repeated querying of the model with carefully selected inputs, effectively reverse-engineering its behavior. Once the model’s internal structure or parameters are compromised, attackers can replicate it, use it for malicious purposes, or exploit its weaknesses. Inference attacks are closely related, focusing on extracting sensitive information from the model’s outputs without directly accessing its internal workings.

For example, an attacker might try to deduce the training data used by analyzing the model’s generated outputs.

Architectural Vulnerabilities

The architecture of generative AI models themselves can contain inherent vulnerabilities. These can stem from design flaws, unexpected interactions between different model components, or insufficient security considerations during development. These vulnerabilities can be exploited to manipulate the model’s behavior, extract sensitive information, or cause unexpected failures. Identifying and mitigating these architectural weaknesses requires a deep understanding of the model’s inner workings and careful security analysis.

Comparison of Security Challenges Across Generative AI Model Types

| Model Type | Vulnerability Type | Severity | Mitigation Strategy |

|---|---|---|---|

| Large Language Models (LLMs) | Prompt Injection, Data Poisoning, Model Extraction | High | Robust input sanitization, adversarial training, differential privacy |

| Image Generators | Data Poisoning, Adversarial Examples, Deepfake Generation | High | Data provenance verification, robust watermarking, anomaly detection |

| Audio Generators | Data Poisoning, Voice Cloning, Phishing Attacks | Medium-High | Speaker verification, audio authentication, content analysis |

| Code Generators | Data Poisoning, Backdoor Attacks, Malicious Code Generation | High | Code analysis, sandboxing, secure development practices |

The Fear, Uncertainty, and Doubt (FUD) Factor

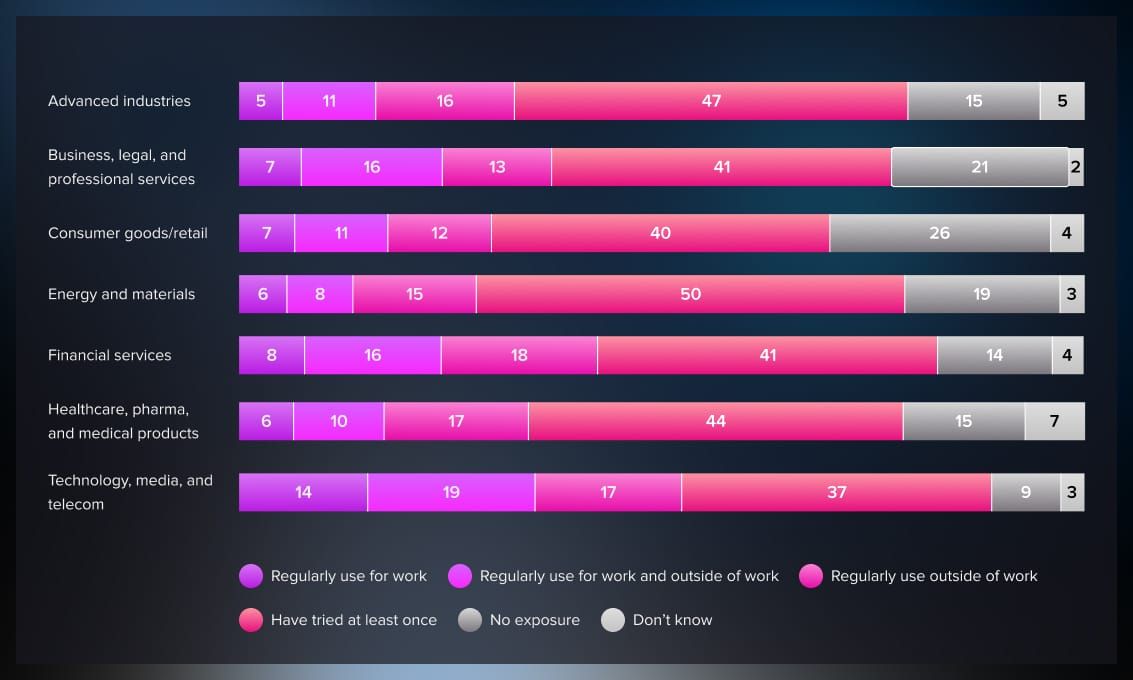

Generative AI, with its potential to revolutionize numerous sectors, is unfortunately battling a significant headwind: widespread Fear, Uncertainty, and Doubt (FUD). This FUD isn’t entirely unfounded; legitimate security concerns exist. However, the level of anxiety surrounding the technology is often disproportionate to the actual risks, significantly hindering its adoption and development.The rapid advancement of generative AI has outpaced the development of robust security protocols and public understanding.

This gap creates fertile ground for fear-mongering, misunderstandings, and ultimately, a hesitancy to embrace this powerful tool. This hesitancy manifests in various ways, from cautious investment strategies to outright rejection of the technology across different industries.

Media Portrayals and Public Perception of Generative AI Risks

Media portrayals, both in traditional and social media, play a crucial role in shaping public perception of generative AI. Sensationalized headlines focusing on potential misuse, like deepfakes and sophisticated phishing scams, contribute significantly to the FUD. Often, these portrayals emphasize worst-case scenarios, neglecting the broader context and the many positive applications of generative AI. For example, a news story about a deepfake video used for political manipulation might overshadow the numerous applications of generative AI in medical diagnosis or creative arts.

This skewed representation fosters a climate of fear, disproportionately emphasizing the negative aspects. Consequently, public perception becomes heavily influenced by these narratives, often leading to an overestimation of risks and an underestimation of benefits.

Comparison of Actual and Perceived Risks of Generative AI

The actual risks associated with generative AI are multifaceted and require a nuanced understanding. These include data breaches due to vulnerabilities in training datasets, the potential for malicious use in creating disinformation campaigns, and the risk of biased outputs reflecting biases in the training data. However, the perceived risks, fueled by FUD, often far exceed these actual threats.

For instance, while the creation of realistic deepfakes is a genuine concern, the widespread belief that deepfakes are indistinguishable from reality and easily created by anyone is an exaggeration. Similarly, while the potential for bias in AI outputs is a serious ethical consideration, the fear that all generative AI systems are inherently biased and untrustworthy is a simplification. The reality is a spectrum, with some systems exhibiting greater risks than others, and many efforts underway to mitigate these biases.

A Communication Strategy to Mitigate FUD Surrounding Generative AI Security

A comprehensive communication strategy is crucial to address and mitigate the FUD surrounding generative AI security. This strategy should involve multiple stakeholders, including researchers, developers, policymakers, and the media. Transparency is paramount; openly acknowledging the existing risks and actively working towards solutions builds trust. This includes clearly communicating the measures being taken to enhance security, such as developing robust detection mechanisms for malicious outputs and implementing ethical guidelines for AI development.

Furthermore, the focus should shift from highlighting only the negative aspects to showcasing the numerous beneficial applications of generative AI, emphasizing its potential to improve various sectors while addressing security concerns proactively. Educational initiatives, targeted at both the general public and professionals, can help demystify the technology and promote a more informed understanding of its capabilities and limitations.

Finally, collaborative efforts between researchers, industry leaders, and policymakers are crucial to establishing industry standards and best practices that foster a secure and responsible development and deployment of generative AI.

Defensive Strategies and Mitigation Techniques

Generative AI models, while offering incredible potential, present a new frontier in cybersecurity. Their vulnerability stems from the vast amounts of data they are trained on, their complex architectures, and their inherent ability to generate novel outputs which can be exploited. Protecting these models requires a multi-layered approach encompassing development, deployment, and ongoing monitoring. Robust security measures are crucial to mitigate the risks and build trust in this transformative technology.

Robust Security Measures During Development and Deployment

Securing generative AI models begins at the development stage. This involves implementing secure coding practices to prevent vulnerabilities from being introduced into the model’s core functionality. Regular security audits and penetration testing are essential to identify and address weaknesses before deployment. Furthermore, employing robust access control mechanisms, limiting access to sensitive data and model parameters to authorized personnel only, is paramount.

Deployment should occur within a secure environment, utilizing containerization technologies like Docker and Kubernetes to isolate the model from other systems and minimize the impact of potential breaches. Continuous monitoring of the model’s performance and behavior for any anomalies is also critical. For example, detecting unusual spikes in resource consumption or unexpected output could indicate an attack. Finally, implementing version control for the model and its associated code allows for rapid rollback in case of a compromise.

Data Sanitization and Anonymization Best Practices

The data used to train generative AI models often contains sensitive information. To mitigate risks, robust data sanitization and anonymization techniques are vital. This includes techniques like differential privacy, which adds noise to the training data to protect individual identities while preserving overall data utility. Data masking, which replaces sensitive data elements with pseudonyms or randomized values, is another effective method.

Furthermore, federated learning allows models to be trained on decentralized data sources without directly sharing sensitive information. For example, a healthcare organization could train a model on patient data without ever centralizing that data, reducing the risk of a large-scale data breach. Careful consideration should also be given to the data lifecycle, ensuring secure storage and deletion of sensitive data after training is complete.

Detecting and Responding to Adversarial Attacks

Generative AI models are susceptible to adversarial attacks, where malicious actors manipulate inputs to elicit undesired outputs. These attacks can range from subtle perturbations of input data to more sophisticated techniques designed to exploit model vulnerabilities. Effective defense requires a multi-pronged approach. This includes implementing robust input validation to detect and reject malicious inputs, employing adversarial training techniques to make the model more resilient to attacks, and developing anomaly detection systems to identify unusual model behavior that might indicate an attack in progress.

Regularly evaluating the model’s robustness against known attack methods is also crucial. For instance, a model trained on images might be tested against adversarial examples designed to misclassify objects. A well-defined incident response plan is essential to quickly contain and remediate any successful attacks.

Security Tools and Technologies for Generative AI

Several security tools and technologies are specifically designed to address the unique challenges of securing generative AI systems. These tools often integrate various security measures to provide comprehensive protection.

- Model Security Platforms: These platforms offer integrated solutions for securing the entire generative AI lifecycle, from development to deployment and monitoring.

- Data Loss Prevention (DLP) Tools: These tools monitor and prevent the unauthorized transfer of sensitive data used in training and model operation.

- Intrusion Detection and Prevention Systems (IDPS): These systems detect and respond to malicious activity targeting the generative AI infrastructure.

- Anomaly Detection Systems: These systems monitor model behavior for deviations from expected patterns, indicating potential attacks.

- Secure Enclaves: These hardware-based security modules provide a trusted execution environment for sensitive operations.

Ethical and Societal Implications

The rapid advancement of generative AI presents a complex tapestry of ethical and societal challenges. Its potential benefits across various sectors are undeniable, yet its misuse poses significant risks, demanding careful consideration of its implications for individuals, communities, and the global landscape. Navigating this requires a proactive approach focusing on responsible development, deployment, and regulation.Generative AI’s application in sensitive areas like healthcare and finance raises serious ethical questions.

Generative AI and cybersecurity are definitely causing a lot of FUD right now – it’s hard to know what’s truly secure. But building robust, secure apps is still crucial, and that’s where platforms like Domino come in; check out this article on domino app dev the low code and pro code future to see how they’re tackling this.

Ultimately, navigating this uncertain landscape requires a blend of innovative tech and secure development practices.

The potential for bias in algorithms, leading to unfair or discriminatory outcomes, is a primary concern. For instance, a generative AI system used for loan applications might inadvertently discriminate against certain demographic groups if the training data reflects existing societal biases. Similarly, in healthcare, an AI system diagnosing illnesses could misinterpret data from diverse populations, resulting in inaccurate diagnoses and potentially harmful treatment decisions.

Ethical Implications in Sensitive Applications

The use of generative AI in healthcare necessitates rigorous testing and validation to minimize bias and ensure accuracy. Transparency in algorithms and data used for training is crucial for building trust and accountability. Furthermore, robust oversight mechanisms are needed to prevent the misuse of these systems and protect patient privacy. In finance, similar safeguards are vital to prevent algorithmic bias in lending, investment strategies, and fraud detection.

Strict adherence to data privacy regulations, such as GDPR and CCPA, is paramount to prevent misuse of sensitive financial data.

Societal Risks Associated with Malicious Use

The potential for misuse of generative AI for malicious purposes is a significant societal risk. Deepfakes, convincingly realistic manipulated videos or audio recordings, can be used to spread misinformation, damage reputations, or even incite violence. Similarly, generative AI can be leveraged to create highly convincing phishing emails or other forms of social engineering attacks, making it harder to distinguish legitimate communication from malicious attempts.

The spread of misinformation through AI-generated text and images poses a threat to democratic processes and social cohesion.

The Regulatory Landscape and Cybersecurity Implications

The regulatory landscape surrounding generative AI is still evolving. Governments worldwide are grappling with how best to regulate the development and deployment of these powerful technologies while fostering innovation. The lack of clear and consistent regulations creates uncertainty and makes it challenging to address cybersecurity risks effectively. A collaborative effort between governments, industry, and researchers is needed to develop effective regulatory frameworks that balance innovation with safety and ethical considerations.

This includes establishing clear guidelines for data privacy, algorithmic transparency, and accountability for the misuse of generative AI.

Scenario: A Generative AI Cyberattack

A sophisticated cyberattack utilizes generative AI to create a highly convincing phishing campaign targeting a major financial institution. The AI generates personalized emails tailored to individual employees, mimicking the writing style and communication patterns of known colleagues. These emails contain malicious links leading to fake login pages, designed by the AI to perfectly replicate the institution’s website. The attackers use the stolen credentials to access sensitive financial data, resulting in significant financial losses and reputational damage for the institution. The sophisticated nature of the attack makes detection and attribution extremely difficult, highlighting the challenges posed by generative AI in the context of cybersecurity.

The Future of Generative AI and Cybersecurity

The rapid advancement of generative AI presents both incredible opportunities and significant cybersecurity challenges. While the technology holds immense potential to revolutionize various sectors, its inherent vulnerabilities demand a proactive and adaptive approach to security. The future hinges on a collaborative effort between AI developers, cybersecurity experts, and policymakers to navigate this complex landscape responsibly.The evolving relationship between generative AI and cybersecurity will be defined by a constant arms race.

As generative AI models become more sophisticated, so too will the attacks leveraging them. This dynamic necessitates a continuous cycle of innovation in both offensive and defensive capabilities.

Advancements in Cybersecurity Mitigating Generative AI Risks

The development of robust cybersecurity measures is crucial to counter the risks posed by generative AI. This includes advancements in anomaly detection systems specifically trained to identify the subtle signatures of AI-driven attacks. Furthermore, techniques like explainable AI (XAI) will play a vital role in understanding the decision-making processes of both malicious and benign AI systems, enabling faster identification and response to threats.

Strengthened data validation and sanitization techniques will become essential in preventing the injection of malicious prompts or data that could compromise generative AI models. Finally, blockchain technology could be leveraged for enhanced security and provenance tracking of AI-generated content, providing a verifiable audit trail. For example, imagine a system that uses blockchain to verify the origin and integrity of an AI-generated medical report, preventing fraudulent alterations.

Future Developments in Generative AI Exacerbating or Alleviating Security Concerns, Generative ai and cybersecurity in a state of fear uncertainty and doubt

Future iterations of generative AI may lead to more sophisticated deepfakes and other forms of AI-generated misinformation. This could severely impact public trust and societal stability. However, advancements in AI safety and security research could mitigate these risks. For instance, the development of more robust watermarking techniques for AI-generated content could help identify and flag deepfakes. Similarly, improvements in AI detection models will enhance the ability to distinguish between authentic and synthetic content.

Consider the potential of a future where AI can not only detect deepfakes but also pinpoint their origin and creator, significantly deterring malicious actors. Conversely, the increased accessibility and ease of use of generative AI tools could democratize cybercrime, lowering the barrier to entry for malicious actors.

The Evolving Relationship Between Generative AI and Cybersecurity

The coming years will witness a continuous evolution in the relationship between generative AI and cybersecurity. We can expect a rapid increase in AI-powered attacks, exploiting the vulnerabilities of generative AI models and leveraging them for malicious purposes. Simultaneously, we will see the development of advanced defensive strategies, incorporating AI itself to enhance security measures. This creates a dynamic equilibrium, where advancements in one area drive innovation in the other, resulting in a continuous cycle of improvement and counter-improvement.

This constant interplay will shape the future of cybersecurity, demanding continuous adaptation and collaboration across industries.

The Ideal Landscape for Responsible Development and Deployment of Generative AI

The ideal landscape for responsible generative AI development prioritizes security from the outset. This requires a multi-faceted approach, encompassing rigorous security testing throughout the development lifecycle, the implementation of robust access control mechanisms, and the adoption of ethical guidelines and regulations. Transparency and explainability in AI models are paramount, allowing for better understanding of potential vulnerabilities and biases.

Furthermore, fostering collaboration between researchers, developers, and policymakers is essential to create a shared understanding of the risks and establish best practices for responsible development and deployment. This collaborative effort should include open-source initiatives, fostering a culture of security awareness and knowledge sharing. Only through such a comprehensive and collaborative approach can we harness the immense potential of generative AI while mitigating its inherent risks.

Wrap-Up

Navigating the intersection of generative AI and cybersecurity requires a multi-pronged approach. We need robust security measures, ethical guidelines, and open communication to address the fear, uncertainty, and doubt surrounding this powerful technology. While the risks are real, they are not insurmountable. By focusing on proactive security strategies, responsible development practices, and a collaborative effort between researchers, developers, and policymakers, we can harness the incredible potential of generative AI while mitigating its inherent risks.

The future of AI security isn’t about fear, but about informed action and proactive mitigation.

Clarifying Questions: Generative Ai And Cybersecurity In A State Of Fear Uncertainty And Doubt

What are some examples of adversarial attacks against generative AI?

Adversarial attacks involve manipulating input data to cause the AI model to produce incorrect or malicious outputs. Examples include crafting specific prompts to elicit biased or harmful responses, or injecting malicious code into training data to compromise the model’s integrity.

How can I protect my organization from generative AI-based threats?

Implement strong data security practices, regularly update security software, train employees on identifying and reporting suspicious activities, and invest in AI-specific security tools that can detect and respond to sophisticated attacks.

What role does regulation play in mitigating generative AI risks?

Regulations can establish standards for responsible AI development and deployment, promote transparency and accountability, and provide legal frameworks for addressing misuse and malicious activities. However, the rapidly evolving nature of AI presents challenges for regulators in keeping pace with technological advancements.