Generative AI: The Unseen Insider Threat

Generative AI as an unseen insider threat sounds like something from a sci-fi thriller, right? But the reality is far more chilling. This powerful technology, capable of creating realistic text, images, and code, is quietly becoming a potent weapon in the hands of malicious insiders. We’re not talking about clumsy hackers; we’re talking about individuals with legitimate access, using AI to bypass security measures and wreak havoc from within.

Imagine a disgruntled employee using generative AI to craft incredibly convincing phishing emails, targeting colleagues and stealing sensitive data. Or perhaps an insider leveraging AI to automate the creation of sophisticated malware, capable of silently infiltrating systems and causing widespread damage. The potential for devastation is immense, and the insidious nature of these attacks makes them particularly dangerous. This post delves into the specifics of this emerging threat, exploring how it works, how to spot it, and most importantly, how to protect your organization.

Defining Generative AI in the Cybersecurity Context

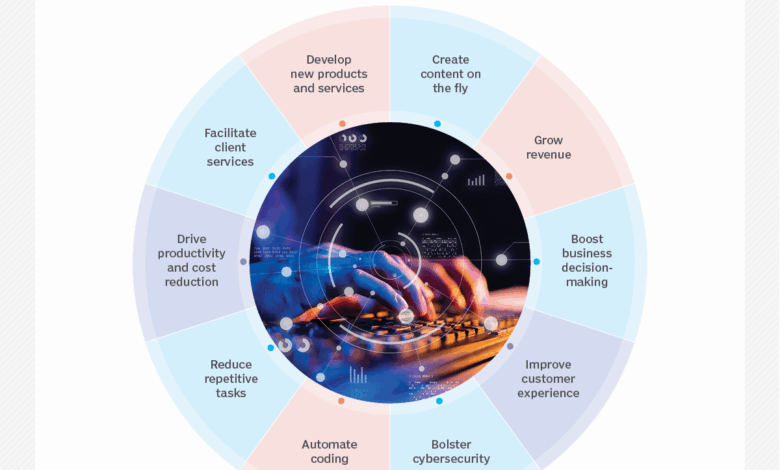

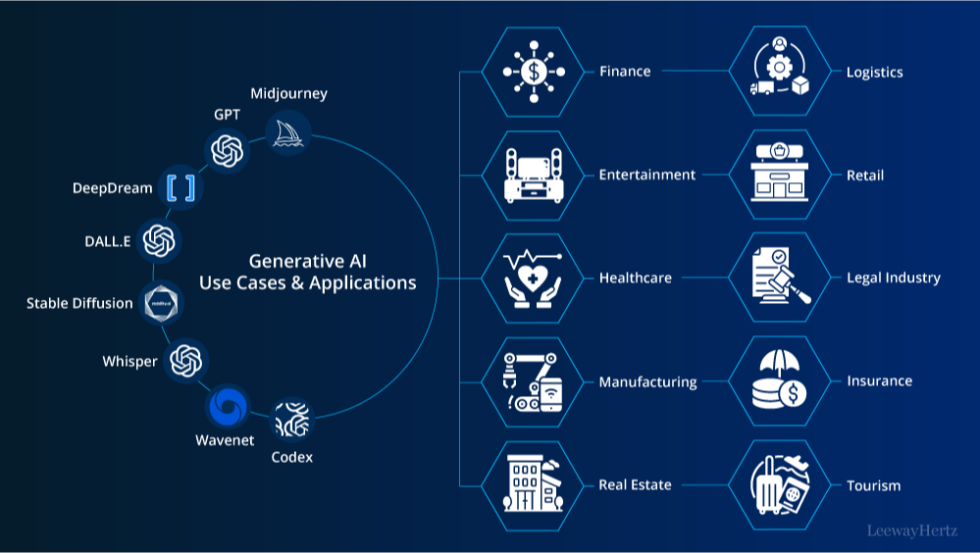

Generative AI, in the context of cybersecurity, refers to artificial intelligence models capable of creating new content, such as text, code, images, and audio. Unlike traditional AI systems that primarily analyze or classify existing data, generative AI models learn patterns from input data and then generate similar but novel outputs. This ability presents both immense opportunities and significant risks within the cybersecurity landscape. Generative AI models leverage advanced techniques like deep learning and large language models (LLMs) to achieve their generative capabilities.

These models are trained on massive datasets, allowing them to understand complex relationships and patterns within the data. This understanding is then used to generate new, realistic content, which can be remarkably convincing, even indistinguishable from human-created content in some cases. This power, however, makes them a potent tool for malicious actors.

Generative AI Capabilities Relevant to Cybersecurity Threats

Generative AI’s ability to produce realistic-looking phishing emails, malware code, and deceptive social media posts significantly amplifies the threat landscape. For example, a generative AI model could create highly personalized phishing emails tailored to individual targets, increasing the likelihood of successful attacks. Similarly, it could generate sophisticated malware code that is difficult to detect by traditional antivirus software, or craft convincing deepfakes to manipulate individuals or organizations.

The speed and scale at which generative AI can produce these threats dwarf the capabilities of human attackers, posing a significant challenge to existing security measures.

Differences Between Generative AI and Other AI Types in Malicious Use

While other AI types can be used for malicious purposes (e.g., machine learning that profiles targets or automates attacks), generative AI possesses a unique capability to create entirely new, convincing content. Traditional AI primarily focuses on analysis and prediction; generative AI goes a step further by actively creating new data that can be directly used in attacks.

This creative capacity significantly increases the sophistication and effectiveness of cyberattacks. A simple example is the difference between a machine learning model identifying vulnerable systems (traditional AI) versus a generative AI model creating a custom exploit for those systems (generative AI).

Ways Generative AI Can Be Weaponized for Insider Attacks

Generative AI can be exploited by malicious insiders to launch devastating attacks. A disgruntled employee, for instance, could use generative AI to fabricate incriminating evidence against colleagues, creating realistic-looking emails or documents that could damage reputations and careers. They could also generate convincing fake system logs to cover their tracks or create malicious code that appears legitimate. Furthermore, generative AI could be used to automate the creation of fake credentials or access tokens, allowing for unauthorized access to sensitive data.

The ability to create convincing disinformation and tailored social engineering attacks further amplifies the threat posed by insider attacks fueled by generative AI. The scale and sophistication of such attacks significantly increase the difficulty of detection and remediation.

Insider Threats Enabled by Generative AI

Generative AI, with its ability to create realistic text, images, audio, and code, presents a significant escalation of the insider threat landscape. Malicious insiders, or even those unintentionally compromised, can leverage these capabilities to significantly amplify the impact of their actions, leading to more sophisticated and harder-to-detect breaches. The ease of access to these powerful tools, combined with a lack of robust security protocols specifically designed for this new threat vector, creates a dangerous combination. Generative AI’s ability to automate and enhance various stages of a cyberattack dramatically increases the speed, scale, and effectiveness of malicious activities.

This section will explore specific scenarios illustrating how generative AI facilitates insider data breaches and other malicious activities.

Generative AI Facilitating Data Breaches

A disgruntled employee, for instance, could use generative AI to create realistic-looking fake documents, replacing sensitive information with fabricated data. This could involve generating fake invoices, contracts, or even internal memos, potentially diverting funds or compromising sensitive business deals. Similarly, an insider could use AI to synthesize realistic-sounding audio recordings of conversations or meetings, altering the context or fabricating evidence to cover their tracks.

The AI could even generate realistic-looking images to create convincing fake ID cards or access badges, further aiding physical access to sensitive areas or equipment. The sophistication and ease of creating these forgeries make detection exceptionally difficult.

Generative AI in Phishing and Social Engineering

Generative AI significantly lowers the barrier to entry for creating highly convincing phishing emails and social engineering attacks. Instead of relying on generic, easily detectable templates, an insider could utilize AI to generate personalized phishing emails tailored to individual recipients. This personalization could include using specific details from the victim’s professional network, creating a sense of urgency or trust, and mimicking the writing style of a known colleague or superior.

The AI could even craft convincing narratives for pretexting attacks, making it incredibly difficult for recipients to identify the scam. For example, an AI could generate an email seemingly from the CEO requesting immediate wire transfer of funds to a fraudulent account, using specific details about a supposed urgent business deal, mimicking the CEO’s writing style learned from previous emails.

Generative AI in Malware and Exploit Creation

The automation capabilities of generative AI extend to the creation of malware and exploit code. An insider with malicious intent could leverage AI to generate variations of existing malware, evading traditional signature-based detection systems. The AI could also be used to automatically generate exploit code targeting specific vulnerabilities within a company’s network. This significantly reduces the technical expertise required to create and deploy effective malware, allowing even less skilled individuals to launch sophisticated attacks.

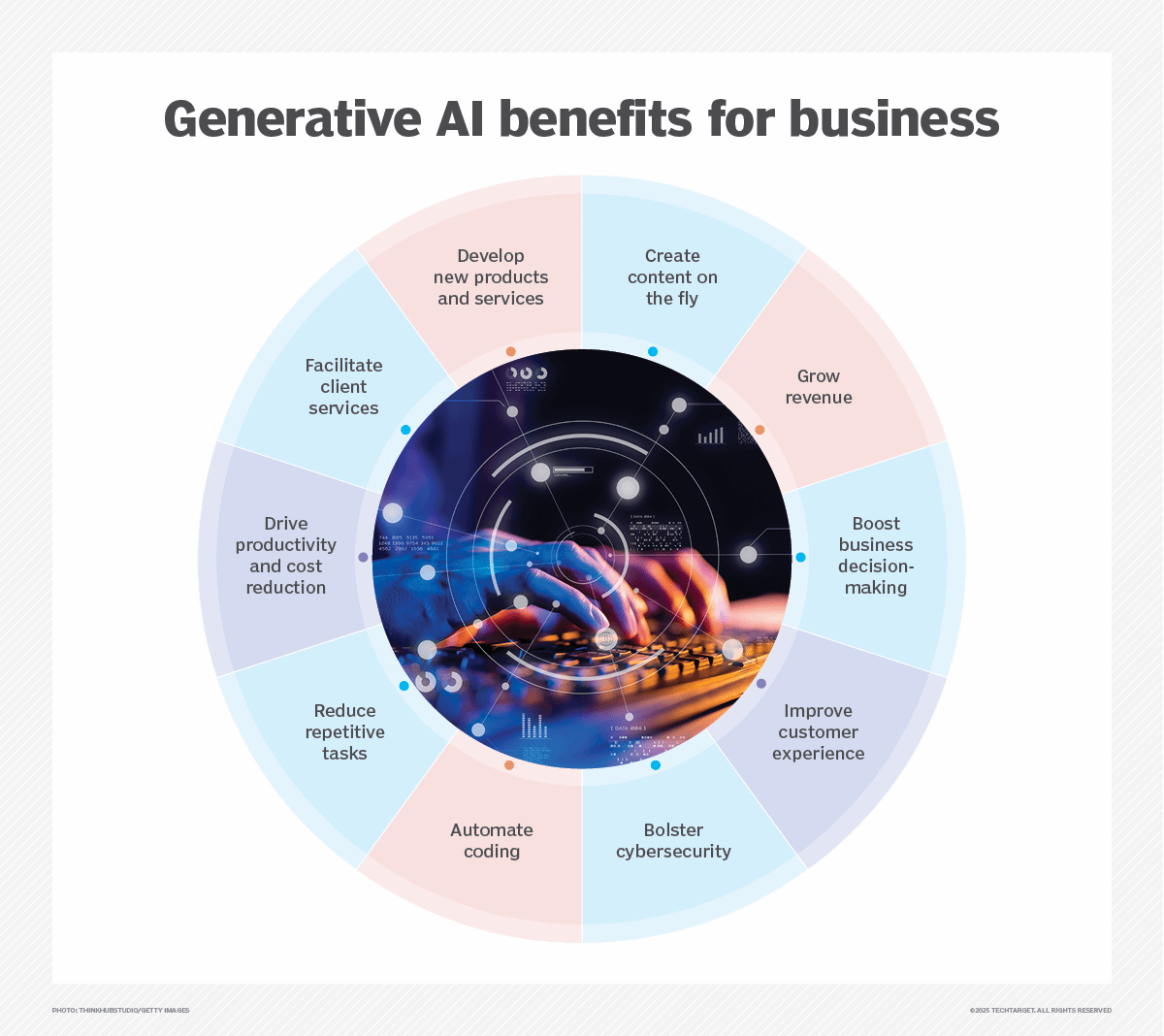


The ability to quickly generate numerous variations of malware and exploits also makes it significantly harder to defend against. Imagine an AI generating thousands of variations of a single piece of ransomware, each subtly different, making traditional antivirus solutions less effective.

Detection and Prevention Strategies

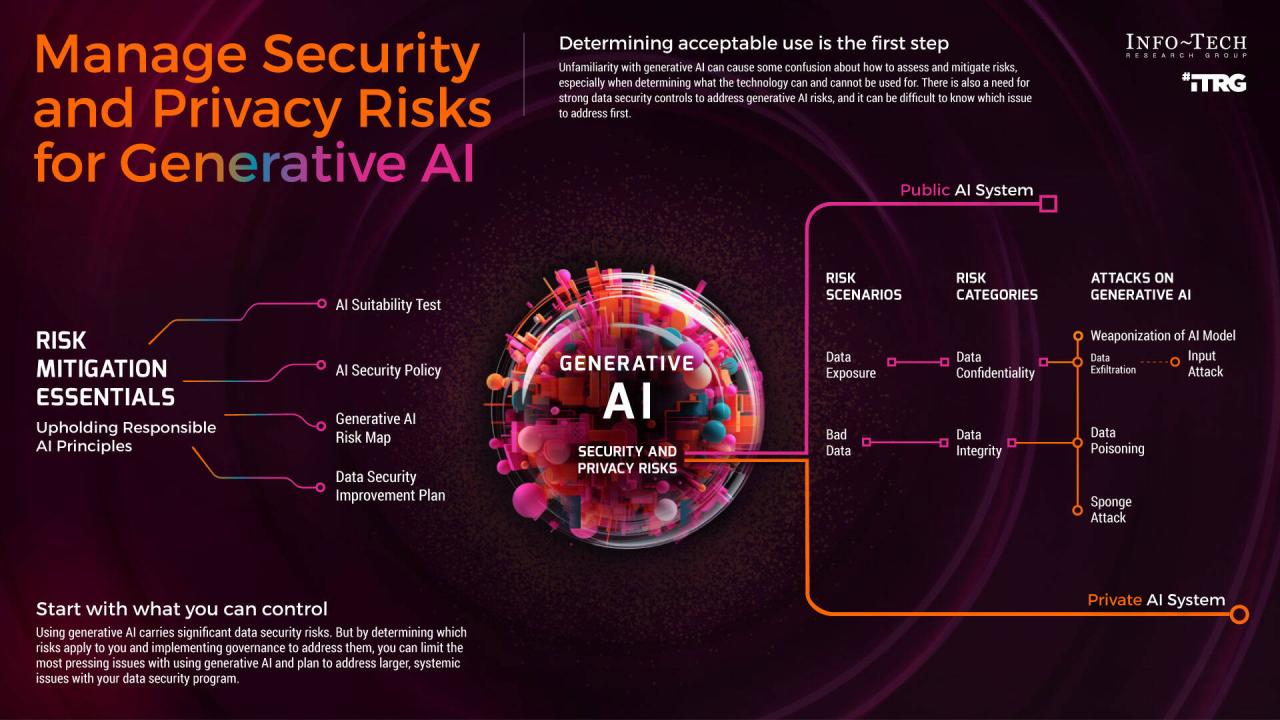

Protecting against insider threats leveraging generative AI requires a multi-layered approach combining advanced detection systems with robust preventative measures. The key is to identify anomalies in user behavior and data flow that deviate from established baselines, indicating potential misuse of generative AI tools for malicious purposes. Early detection is crucial to mitigate damage and prevent data breaches.

Detecting Unusual Activity Indicative of Generative AI Misuse

A system for detecting unusual activity should monitor several key areas. First, unusual access patterns to sensitive data should raise immediate concern. This includes accessing data outside of normal working hours or from unusual locations. Second, the volume and nature of data copied or downloaded should be analyzed. A sudden surge in large data transfers, especially involving sensitive information like customer data or intellectual property, warrants investigation.

Third, the use of generative AI tools themselves should be tracked. Monitoring access logs for these tools and correlating this data with unusual data access patterns can highlight suspicious activity. Finally, analyzing the content created by generative AI tools can reveal attempts at data exfiltration or the generation of malicious content. For example, if an employee uses a generative AI tool to create a seemingly innocuous report while embedding stolen data in the report’s metadata, careful analysis of the output could reveal it.
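The first two checks above (off-hours access to sensitive data and a sudden surge in transfer volume) can be sketched as simple rules. This is a minimal illustration, not a production detector: the `AccessEvent` fields, the 08:00 to 18:00 working window, and the 5x volume multiplier are all assumptions chosen for the example.

```python
from dataclasses import dataclass
from datetime import datetime

# Hypothetical thresholds; tune these to your own baselines.
WORK_START_HOUR = 8
WORK_END_HOUR = 18
VOLUME_MULTIPLIER = 5.0


@dataclass
class AccessEvent:
    """One data-access record (illustrative schema, not a real log format)."""
    user: str
    timestamp: datetime
    bytes_transferred: int
    sensitive: bool


def flag_event(event: AccessEvent, baseline_bytes: int) -> list[str]:
    """Return the list of rule names this event trips (empty if none)."""
    reasons = []
    in_hours = WORK_START_HOUR <= event.timestamp.hour < WORK_END_HOUR
    if event.sensitive and not in_hours:
        reasons.append("off_hours_sensitive_access")
    # Compare against the user's typical transfer size, if one is known.
    if baseline_bytes > 0 and event.bytes_transferred > VOLUME_MULTIPLIER * baseline_bytes:
        reasons.append("volume_surge")
    return reasons
```

In practice these rule hits would feed a review queue rather than trigger automatic blocking, since legitimate work also happens outside normal hours.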

Identifying Patterns in Data Exfiltration Attempts

Identifying patterns in data exfiltration attempts facilitated by generative AI requires sophisticated techniques. Analyzing network traffic for unusual patterns, such as the use of encrypted channels or obfuscated data transfer methods, is crucial. Furthermore, examining the content of files transferred, particularly looking for unusual compression techniques or the presence of steganography (hidden messages within seemingly benign files), can uncover attempts to conceal exfiltrated data.
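One coarse signal for concealed payloads is byte-level entropy: encrypted or compressed data looks close to random, while ordinary text does not. The sketch below computes Shannon entropy in bits per byte. The 7.5 threshold is an assumption for illustration, and this check will not by itself detect well-made steganography, which is designed to leave statistics largely intact.

```python
import math
from collections import Counter


def shannon_entropy(data: bytes) -> float:
    """Shannon entropy of a byte string, in bits per byte (0.0 to 8.0)."""
    if not data:
        return 0.0
    total = len(data)
    counts = Counter(data)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def looks_encrypted_or_compressed(data: bytes, threshold: float = 7.5) -> bool:
    """Heuristic only: high entropy suggests encrypted or compressed content."""
    return shannon_entropy(data) >= threshold
```

A file whose declared type is plain text or a spreadsheet but whose content scores near 8 bits per byte is a reasonable candidate for closer inspection.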

Monitoring the use of cloud storage services and external communication channels is also vital. AI-powered security information and event management (SIEM) systems can be employed to correlate these data points and identify potential threats based on learned patterns of malicious behavior. For instance, a sudden increase in encrypted file transfers to a previously unknown external IP address could indicate an ongoing data exfiltration attempt using generative AI to obfuscate the stolen data.
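The example in the paragraph above, encrypted transfers to a previously unknown external IP address, can be approximated with a small correlation rule. This is a sketch under stated assumptions: the tuple layouts for `history` and `today` are hypothetical, and the 100 MB cutoff is arbitrary. A real SIEM would draw these from flow logs and learned baselines.

```python
from collections import defaultdict


def new_destination_alerts(history, today, min_bytes=100_000_000):
    """Flag destinations that are new today and received a lot of encrypted data.

    history: iterable of (dest_ip, bytes) seen during the baseline period.
    today:   iterable of (dest_ip, bytes, encrypted) for the current day.
    Returns a sorted list of suspicious destination IPs.
    """
    known = {ip for ip, _ in history}
    totals = defaultdict(int)
    for ip, nbytes, encrypted in today:
        # Only count encrypted traffic to destinations never seen before.
        if encrypted and ip not in known:
            totals[ip] += nbytes
    return sorted(ip for ip, total in totals.items() if total >= min_bytes)
```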

Implementing Robust Access Controls and Data Loss Prevention (DLP) Measures

Robust access controls are fundamental to preventing generative AI misuse. This includes implementing the principle of least privilege, granting users only the access they need to perform their job duties. Multi-factor authentication (MFA) should be mandatory for all users, especially those with access to sensitive data. Regular security awareness training should educate employees on the risks associated with generative AI and best practices for its safe use.

Data Loss Prevention (DLP) solutions should monitor data movement across the network, identifying and blocking attempts to exfiltrate sensitive data using generative AI or other means. These DLP solutions can be configured to recognize and block the transfer of specific file types or keywords, preventing the exfiltration of sensitive information embedded within seemingly innocuous files generated by AI.
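A DLP rule of the kind described above can be pictured as a file-type check followed by a content-pattern check. The sketch below is illustrative only: the blocked extensions and the two patterns (the word "confidential" and a US Social Security number shape) are assumptions, and commercial DLP products use far richer classifiers.

```python
import re
from pathlib import PurePath

# Hypothetical policy values chosen for this example.
BLOCKED_EXTENSIONS = {".pst", ".bak", ".sql"}
SENSITIVE_PATTERNS = [
    re.compile(r"\bconfidential\b", re.IGNORECASE),
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),  # shape of a US SSN
]


def dlp_decision(filename: str, text: str) -> str:
    """Return "block" or "allow" for an outbound file."""
    if PurePath(filename).suffix.lower() in BLOCKED_EXTENSIONS:
        return "block"
    if any(pattern.search(text) for pattern in SENSITIVE_PATTERNS):
        return "block"
    return "allow"
```

Checking the extracted text rather than only the file name matters here, because AI-generated documents can carry sensitive content under an innocuous name.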

Regular security audits and penetration testing should be conducted to identify vulnerabilities and ensure the effectiveness of security controls.

Comparison of Security Solutions

| Security Solution | Detection Capabilities | Prevention Capabilities | Integration Complexity |
|---|---|---|---|
| AI-powered SIEM | Anomaly detection, correlation of events, user behavior analysis | Alerting, automated response, blocking malicious activity | Medium |
| DLP solution | Data identification, monitoring data movement, content inspection | Blocking data exfiltration, encryption, data masking | Medium |
| User and Entity Behavior Analytics (UEBA) | Anomaly detection based on user and entity behavior | Alerting, investigation, access control adjustments | High |
| Generative AI security tools | Detection of malicious AI-generated content, identification of AI-based attacks | Blocking malicious AI-generated content, preventing AI-based attacks | High |

The Role of Human Factors

The seemingly technical threat of generative AI in cybersecurity is fundamentally driven by human actions and vulnerabilities. Understanding the psychological motivations and organizational contexts that enable malicious insider use of this technology is crucial for effective mitigation. Ignoring the human element renders even the most sophisticated technical safeguards ineffective. The potential for misuse stems from a complex interplay of individual and organizational factors.

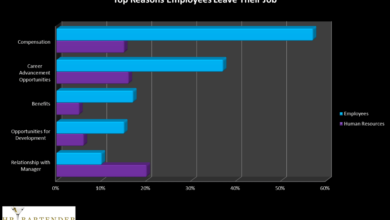

Employees might leverage generative AI for malicious purposes due to a combination of personal grievances, perceived injustices, financial pressures, or simply a desire to test their skills against organizational security measures. The ease of access to powerful tools, coupled with a lack of sufficient oversight, exacerbates these risks.

Psychological Factors Motivating Malicious Use

Several psychological factors contribute to insider threats involving generative AI. These include feelings of resentment or frustration stemming from workplace conflicts or perceived unfair treatment. Individuals might feel overlooked or undervalued, leading them to seek revenge or retribution through actions like data exfiltration or sabotage facilitated by AI tools. Financial difficulties, whether personal debt or external pressures, could also incentivize insider threats.

The sense of anonymity that generative AI tools can provide may embolden individuals to act on impulses that they might otherwise suppress. Finally, some insiders might simply be driven by curiosity or a desire to test their abilities, leading them to explore the boundaries of organizational security using AI-powered tools. For example, an employee facing redundancy might use generative AI to create sophisticated phishing emails targeting colleagues, hoping to cause chaos before their departure.

Organizational Culture and Security Awareness Training

A strong security culture, fostered through comprehensive security awareness training, is paramount in mitigating the risk of insider threats. Effective training programs should go beyond simple compliance-based instruction. They should actively promote a culture of security by emphasizing the importance of ethical conduct, data protection, and responsible technology use. This includes highlighting the potential consequences of malicious actions, both personally and professionally.

Regular security awareness campaigns, interactive simulations, and clear communication channels can reinforce the message and foster a sense of collective responsibility for security. For instance, role-playing scenarios that simulate phishing attacks or data breaches, coupled with post-training feedback and reinforcement, can significantly improve employee awareness and response capabilities.


Strategies for Fostering a Culture of Security and Trust

Building a culture of security and trust requires a multifaceted approach. This includes establishing clear policies and procedures regarding acceptable technology use, data handling, and reporting of security incidents. Open communication and a culture of psychological safety are crucial, enabling employees to report concerns or suspicious activities without fear of reprisal. Regular security audits and vulnerability assessments help identify weaknesses in the system and inform improvements in security protocols.

Furthermore, providing employees with the tools and resources they need to perform their jobs securely, such as secure access controls and data encryption tools, demonstrates a commitment to security and empowers employees to act responsibly. Finally, recognizing and rewarding employees who actively contribute to security strengthens the culture of security and reinforces the message that security is a shared responsibility.

For example, implementing a bug bounty program, where employees are rewarded for reporting security vulnerabilities, can incentivize proactive security behavior.

Future Implications and Mitigation

Generative AI’s rapid advancement presents both exciting opportunities and significant cybersecurity risks. Its capacity to create realistic and convincing content, from emails to code, dramatically increases the sophistication and scale of potential insider threats. Understanding the trajectory of this technology and developing proactive mitigation strategies are crucial for organizations to maintain their security posture. The evolution of generative AI will likely see increasingly sophisticated models capable of autonomously generating more complex and nuanced attacks.

We can expect to see AI-powered malware that adapts and evolves in real-time, evading traditional security measures. Furthermore, the ease of access to these tools will likely lower the barrier to entry for malicious actors, including disgruntled employees or external threat groups. This contrasts sharply with traditional insider threats, which often rely on human error or individual malice and therefore tend to follow more predictable attack vectors.

Generative AI empowers attackers with unprecedented capabilities for scale and automation, blurring the lines between human and machine-driven attacks.

Generative AI’s Evolving Threat Landscape

The challenges posed by generative AI differ significantly from those of traditional insider threats. Traditional threats are often identifiable through established methods like access logs and behavioral analysis. However, generative AI can create highly convincing phishing emails, forge code that exploits vulnerabilities, or generate realistic fake data to cover tracks – making detection considerably more difficult. For example, a malicious insider could use generative AI to create a seemingly legitimate request for sensitive data, bypassing traditional authorization checks.

The scale of potential damage is also amplified; a single malicious actor could automate the creation of thousands of phishing emails, impacting a much larger number of victims compared to a manual effort. This requires a shift in security strategies, moving away from solely reactive measures towards proactive threat hunting and AI-powered defense mechanisms.

Leveraging Emerging Technologies for Enhanced Detection and Prevention

Emerging technologies offer promising solutions for mitigating the risks associated with generative AI-based insider threats. Advanced threat detection systems incorporating AI and machine learning can be trained to identify subtle anomalies in user behavior and data patterns that might indicate malicious activity. For instance, a system could detect unusual spikes in code generation or unusual access patterns to sensitive data, flagging them for further investigation.
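A very simple stand-in for the anomaly detection described above is a z-score test against a user's own history. The sketch assumes you already collect a daily count per user (for example, code-generation requests or sensitive-file reads). The function name and the threshold of 3 standard deviations are illustrative choices, and real systems use richer models than this.

```python
from statistics import mean, stdev


def is_spike(history: list[float], today: float, z_threshold: float = 3.0) -> bool:
    """Return True if today's count is far above the user's own baseline."""
    if len(history) < 2:
        return False  # not enough data to estimate a baseline
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # Perfectly flat history: any increase counts as a deviation.
        return today > mu
    return (today - mu) / sigma > z_threshold
```

A flagged spike is a prompt for investigation, not proof of wrongdoing; a deadline week can look much like an exfiltration attempt in raw counts.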

Blockchain technology can be used to create immutable audit trails, providing increased transparency and accountability. This could help track changes to sensitive data and identify potential unauthorized modifications. Furthermore, the development and implementation of robust watermarking techniques for AI-generated content can help to verify the authenticity of data and identify forged documents or code. Imagine a system that automatically flags a document as potentially forged if its watermark doesn’t match the expected source.

This proactive approach shifts the focus from reacting to breaches to preventing them in the first place.
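The core idea behind an immutable audit trail is a hash chain: each entry commits to the hash of the one before it, so editing an old record breaks every later link. The sketch below shows only that idea with Python's standard `hashlib`. It is not a distributed ledger, and the record fields and function names are hypothetical. On its own it makes tampering evident rather than impossible, since someone who can rewrite the whole log can also recompute the chain.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash: str, record: dict) -> str:
    """Hash the previous link together with a canonical form of the record."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(prev_hash.encode("ascii") + payload).hexdigest()


def append_entry(log: list, record: dict) -> None:
    """Append a record, chaining it to the last entry in the log."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    log.append({
        "record": record,
        "prev_hash": prev_hash,
        "hash": _entry_hash(prev_hash, record),
    })


def verify_log(log: list) -> bool:
    """Recompute every link; return False at the first inconsistency."""
    prev_hash = GENESIS_HASH
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _entry_hash(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True
```

Storing the latest hash somewhere the insider cannot write to (a separate system or a signed checkpoint) is what turns this from a convenience into a meaningful control.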

Enhanced Security Awareness Training

Effective security awareness training programs are essential to mitigate the risks associated with generative AI. Employees need to be educated about the capabilities of generative AI and the potential threats it poses. This training should focus on identifying and reporting suspicious activities, recognizing sophisticated phishing attempts, and understanding the importance of data security. Simulations and realistic scenarios can be used to enhance employee understanding and preparedness.

For example, training could include examples of AI-generated phishing emails that mimic legitimate communications, helping employees develop critical thinking skills to identify these deceptive tactics. This approach fosters a security-conscious culture and empowers employees to act as the first line of defense against these evolving threats.

Illustrative Scenarios

Generative AI’s ability to automate tasks and create convincing content presents a significant risk when combined with malicious insider intent. The following scenarios illustrate how generative AI can be weaponized to inflict substantial damage within an organization.

Phishing Attack Using Generative AI

A disgruntled employee, Alice, leverages a generative AI tool to craft highly personalized phishing emails targeting her colleagues. Alice feeds the AI with data gleaned from the company’s internal systems – names, job titles, project details, and even personal information obtained through casual conversations. The AI generates emails that appear to come from legitimate sources, such as the IT department or a senior manager, requesting password changes, urgent approvals, or access to sensitive files.

The attack vector is email, exploiting social engineering principles amplified by the AI’s ability to tailor each message to the recipient. The impact is widespread credential theft, leading to unauthorized access to sensitive data, financial loss, and reputational damage. Remediation involves immediate password resets across the organization, system-wide security audits, and enhanced employee security awareness training. A detailed forensic investigation traces the attack back to Alice’s workstation, revealing her involvement and leading to disciplinary action.


Automated Malware Deployment with Generative AI

Bob, a skilled programmer with access to the company’s network, uses generative AI to automate the creation and deployment of a novel polymorphic malware. The AI generates the malware code, adapting it to evade existing antivirus signatures. The malware’s core functionality is data exfiltration; it stealthily copies sensitive data from various servers and transmits it to a remote server controlled by Bob.

The AI also generates customized deployment scripts, tailoring the attack to different systems and vulnerabilities. The malware utilizes a combination of techniques, including fileless execution and process injection, to avoid detection. The deployment method involves exploiting a recently discovered vulnerability in the company’s network infrastructure, which Bob identified using AI-powered vulnerability scanning tools. Detection involves monitoring network traffic for unusual patterns and analyzing system logs for suspicious activities.

Remediation includes patching the vulnerability, implementing advanced threat detection systems, and conducting a full system cleanup to remove any traces of the malware.

Lifecycle of a Generative AI-Based Insider Threat

The lifecycle is depicted as a flow chart. First, a rectangle labeled “Initial Compromise” represents the point where the insider gains access to generative AI tools and/or sensitive company data. An arrow leads to a diamond shape labeled “Malicious Intent Formation,” representing the decision to use AI for malicious purposes. Another arrow leads to a rectangle labeled “AI-Assisted Attack Planning,” where the insider uses AI to develop attack strategies and craft malicious content.

An arrow proceeds to a rectangle labeled “Attack Execution,” depicting the actual implementation of the attack using AI-generated tools or content. An arrow leads to a diamond shape labeled “Detection,” representing the point where security systems or human analysts identify suspicious activity. If detection occurs, an arrow leads to a rectangle labeled “Incident Response and Remediation,” involving investigation, containment, and recovery efforts.

If detection fails, an arrow leads to a rectangle labeled “Successful Data Breach or Damage,” representing the successful compromise. The chart emphasizes the importance of proactive security measures and robust detection capabilities in mitigating the risks posed by generative AI-based insider threats.
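The flow chart described above can also be written down as a small state machine, which makes the single branching point (whether detection succeeds) explicit. This is only a restatement of the chart in code. The stage names come from the description, while the `Stage` enum and the function names are illustrative.

```python
from enum import Enum


class Stage(Enum):
    INITIAL_COMPROMISE = "Initial Compromise"
    MALICIOUS_INTENT_FORMATION = "Malicious Intent Formation"
    ATTACK_PLANNING = "AI-Assisted Attack Planning"
    ATTACK_EXECUTION = "Attack Execution"
    DETECTION = "Detection"
    INCIDENT_RESPONSE = "Incident Response and Remediation"
    SUCCESSFUL_BREACH = "Successful Data Breach or Damage"


# Stages with exactly one outgoing arrow in the chart.
LINEAR = {
    Stage.INITIAL_COMPROMISE: Stage.MALICIOUS_INTENT_FORMATION,
    Stage.MALICIOUS_INTENT_FORMATION: Stage.ATTACK_PLANNING,
    Stage.ATTACK_PLANNING: Stage.ATTACK_EXECUTION,
    Stage.ATTACK_EXECUTION: Stage.DETECTION,
}


def next_stage(stage: Stage, detected: bool = False):
    """Return the following stage, or None if the stage is terminal."""
    if stage is Stage.DETECTION:
        return Stage.INCIDENT_RESPONSE if detected else Stage.SUCCESSFUL_BREACH
    return LINEAR.get(stage)


def walk(detected: bool) -> list[str]:
    """List the stage labels visited from start to a terminal stage."""
    path = [Stage.INITIAL_COMPROMISE]
    while (nxt := next_stage(path[-1], detected)) is not None:
        path.append(nxt)
    return [stage.value for stage in path]
```

Calling `walk(True)` and `walk(False)` yields the two paths through the chart, which differ only in their final stage.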

Conclusion

Generative AI’s potential for misuse by insiders is a rapidly evolving threat that demands our immediate attention. While the technology offers incredible possibilities, its capacity for malicious use cannot be ignored. By understanding the mechanics of these attacks, implementing robust security measures, and fostering a strong security culture, we can significantly mitigate the risk. The key lies in proactive vigilance, continuous learning, and a commitment to adapting our security strategies to this ever-changing landscape.

Ignoring this threat is simply not an option.

Detailed FAQs

What are some early warning signs of generative AI misuse by an insider?

Unusual spikes in data access requests, especially outside normal working hours, a sudden increase in the creation of complex documents or code, and attempts to access systems or data outside an employee’s typical responsibilities are all potential red flags.

Can traditional security measures detect generative AI-based attacks?

While traditional measures offer some protection, they may not be sufficient. Generative AI can create highly sophisticated and tailored attacks that can bypass standard security controls. A multi-layered approach is crucial.

How can security awareness training help mitigate this threat?

Training employees to recognize and report suspicious activity, especially phishing attempts crafted with generative AI, is essential. This includes emphasizing the importance of strong password hygiene and cautious handling of sensitive data.

What role does organizational culture play in preventing generative AI-based insider threats?

A culture of trust and open communication, combined with a strong emphasis on security awareness, is crucial. Employees should feel comfortable reporting concerns without fear of reprisal.