Google Admits Its Virtual Assistants Indulge in Eavesdropping

Google admits its virtual assistants indulge in eavesdropping. This bombshell revelation has sent shockwaves through the tech world, raising serious questions about privacy and the ethical boundaries of data collection. We delve into the specifics of Google’s data collection practices, examining the mechanisms used, the types of data collected, and the potential implications for users. The debate surrounding user consent and transparency is central to this discussion, as is the public’s reaction and the potential legal ramifications for Google.

From seemingly innocuous voice commands to more sensitive conversations, the potential for misuse of this collected data is vast. We explore hypothetical scenarios, comparing Google’s practices to those of competitors and examining the technological underpinnings of this controversial data collection. Ultimately, we aim to understand the impact on user behavior, trust, and Google’s long-term reputation.

Google’s Virtual Assistant Privacy Practices

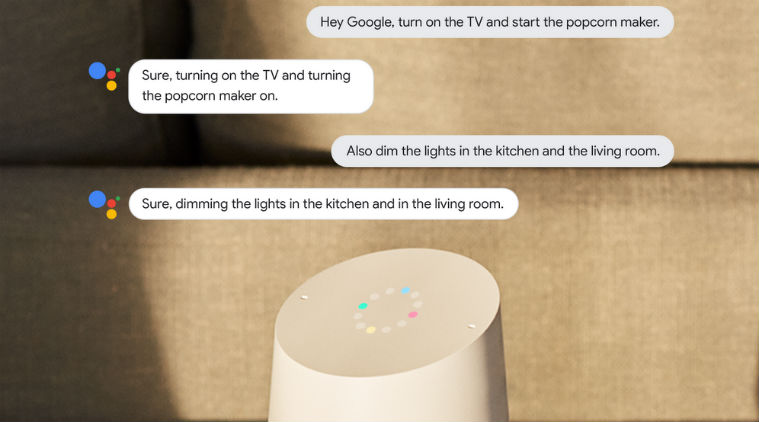

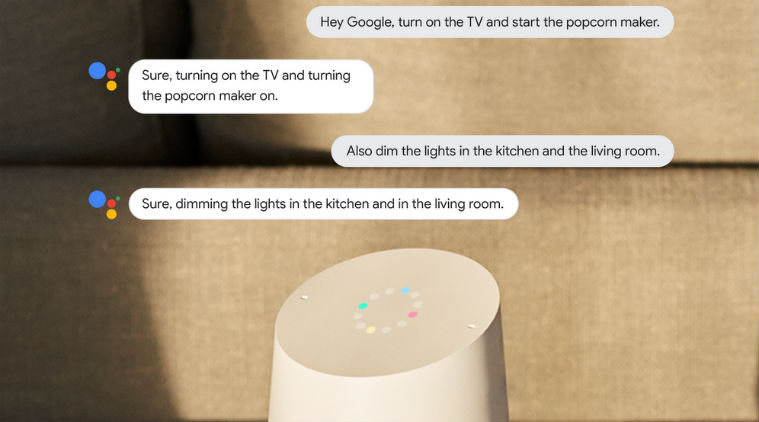

Google’s virtual assistants, like Google Assistant and others integrated into various devices, are powerful tools, but their convenience comes with a trade-off: data collection. Understanding Google’s official stance on this and how the company handles user information is crucial for informed use.

Google publicly states its commitment to user privacy, emphasizing transparency in its data collection practices. The company maintains that data collection is necessary to improve the functionality and personalization of its virtual assistants.

However, the extent of this data collection and its uses have been subjects of scrutiny and debate.

Google’s Data Collection Mechanisms

Google employs several methods to gather user data through its virtual assistants. This includes collecting voice recordings of user interactions, analyzing the content of those interactions to understand user intent and context, and tracking usage patterns such as frequency of use and the types of requests made. Furthermore, location data is often collected to provide relevant information and services.

The data collected can also include information tied to Google accounts, enhancing personalization and creating a more comprehensive user profile. For example, if a user asks for directions to a restaurant, Google will not only collect the voice command but also link that request to their location and potentially their Google Maps activity.

Uses of Collected User Data

The collected data fuels various aspects of Google’s services. Primarily, it’s used to improve the accuracy and efficiency of the virtual assistant itself. Analyzing voice data helps refine speech recognition algorithms, leading to better understanding of user commands. The contextual data helps tailor responses and provide more relevant information. This data also contributes to Google’s broader advertising and personalization efforts, allowing for targeted ads and personalized recommendations within other Google services.

For example, if a user frequently asks about travel destinations, Google might show more travel-related ads and suggestions. Additionally, this data informs product development and future improvements to Google’s AI technologies.

Comparison with Competitors

Google’s data collection practices are comparable to those of other major tech companies offering similar virtual assistant services like Amazon’s Alexa and Apple’s Siri. All these companies collect user data to improve their services and personalize user experiences. However, the specific types of data collected, the extent of data retention, and the transparency of these practices can vary. While Google provides detailed information about its data collection policies in its privacy policy, users should carefully review the policies of all virtual assistant providers to understand the implications of using these services.

A key difference often lies in the extent to which user data is used for targeted advertising; some companies are more transparent about this than others. The ongoing debate surrounding data privacy and the use of AI highlights the need for users to be aware of these practices and to make informed choices about the technologies they utilize.

The “Eavesdropping” Allegation

The claim that Google’s virtual assistants are engaging in “eavesdropping” stems from concerns about the extent and nature of data collection practices, particularly concerning data gathered without explicit and informed user consent. While Google maintains that data collection is necessary for improving its services, critics argue that the line between legitimate data usage and unwarranted surveillance is blurred. This ambiguity has fueled public distrust and raised significant privacy concerns.

The allegation isn’t based on a single incident but rather a confluence of reports, analyses, and user experiences suggesting that Google’s virtual assistants collect far more data than initially disclosed.

This data collection raises questions about the potential for misuse and the long-term implications for individual privacy.

Instances and Reports of Alleged Eavesdropping

Several instances and reports have contributed to the “eavesdropping” narrative. For example, investigative journalism pieces have highlighted the sheer volume of data collected by Google’s virtual assistants, including seemingly innocuous snippets of conversations, even when the “Okay Google” or equivalent activation phrase wasn’t explicitly used. Furthermore, analyses of Google’s privacy policies and user agreements have revealed clauses that allow for data collection beyond what many users might reasonably expect.

There have also been anecdotal reports from users who felt their privacy had been violated based on the apparent access Google’s virtual assistants seemed to have to their personal conversations and routines. These accounts, while not always verifiable independently, add to the overall perception of unchecked data collection.

Types of Data Allegedly Collected Without Explicit Consent

Beyond the obvious recordings triggered by activation phrases, allegations suggest Google’s virtual assistants collect a broader range of data. This includes ambient sounds picked up by microphones even when the assistant isn’t actively listening, contextual information derived from user interactions with other Google services, and location data linked to voice recordings. The potential for this data to be aggregated and analyzed to create detailed profiles of user behavior and preferences is a major concern.

The lack of transparency regarding the extent of this collection and its purpose further exacerbates the privacy issue.

Potential Implications for User Privacy

The potential implications of this data collection are significant. The aggregation of seemingly innocuous snippets of conversations, location data, and other personal information can create a highly detailed and intimate profile of a user’s life. This profile could be vulnerable to misuse, including targeted advertising, identity theft, or even blackmail. Furthermore, the lack of robust mechanisms for data deletion or control over data usage leaves users with limited agency over their own personal information.

The potential for bias in algorithms analyzing this data also presents a serious risk, potentially leading to discriminatory outcomes.

Hypothetical Scenario of Data Misuse

Imagine a scenario where a user frequently discusses health concerns, financial matters, or personal relationships within earshot of a device running a Google virtual assistant. Unknown to the user, the device’s microphone inadvertently picks up these conversations even when the assistant isn’t activated. This data, combined with location data and information gleaned from other Google services, could create a highly detailed profile revealing the user’s vulnerabilities.

A malicious actor gaining access to this data could potentially target the user with phishing scams, exploit their financial information, or even leverage sensitive personal details for blackmail. This scenario highlights the potential for significant harm resulting from seemingly innocuous data collection practices.

User Consent and Transparency

Google’s virtual assistants, like Google Assistant and others integrated into various devices, collect and process vast amounts of user data. Understanding the extent of this data collection and the mechanisms by which users consent to it is crucial for evaluating the privacy implications. The clarity and comprehensiveness of Google’s privacy policies regarding this data collection are therefore subjects of ongoing discussion and scrutiny.

Google’s privacy policies, while extensive, are often criticized for being overly complex and difficult for the average user to understand.

The sheer volume of information presented, coupled with the technical jargon employed, can make it challenging to grasp the specifics of data collection related to virtual assistants. This lack of transparency raises concerns about whether users are truly giving informed consent. Furthermore, the policies often lack specific details about the types of data collected in different contexts and the purposes for which this data is used.

This ambiguity undermines the ability of users to make informed choices about their privacy.

Google Virtual Assistant Consent Processes Across Devices

The user consent process for Google’s virtual assistants varies across different devices and platforms. This lack of consistency raises concerns about equitable and transparent practices. The following table summarizes these variations, highlighting inconsistencies and areas lacking transparency. Note that this summary draws only on publicly available information and may not reflect all nuances or regional variations.

| Device/Platform | Consent Method | Data Collected | Transparency Level |
|---|---|---|---|
| Google Home (Smart Speaker) | Initial setup requires acceptance of terms of service; ongoing data collection is implied through continued use. | Voice recordings, location data, device usage data, app usage data (if linked). | Low; specifics of data collection and usage are buried within lengthy terms of service. |
| Android Smartphone | Acceptance of Google services terms during initial device setup; granular control over data collection is available within device settings, but can be difficult to navigate. | Voice recordings (if Assistant is activated), location data, app usage data, contact information (if permitted). | Medium; some granular controls exist, but overall clarity remains limited. |
| iOS (iPhone/iPad) | Acceptance of Google app permissions during app installation; granular control over microphone access and other permissions within device settings. | Voice recordings (if Assistant is activated), location data (if permitted). | Medium-High; iOS’s permission system provides more user control, but the Google Assistant’s data collection practices still need to be understood separately. |
| Chrome Browser (Desktop) | Implied consent through use of Google Assistant features within the browser; limited control within browser settings. | Voice recordings (if used), search history, browsing data. | Low; data collection is less explicit compared to mobile platforms. |

Ethical Considerations and Public Perception

The allegations of Google’s virtual assistants engaging in “eavesdropping” raise serious ethical concerns that extend beyond simple privacy violations. The potential for misuse of collected data, the erosion of public trust, and the legal ramifications are all significant factors that impact Google’s reputation and the broader technological landscape. This issue highlights the complex relationship between technological advancement, user privacy, and corporate responsibility.

The core ethical concern stems from the lack of transparency and the potential for undisclosed data collection.

While Google may argue that users consent to data collection through their terms of service, the implied consent surrounding voice data, particularly in private settings, is far more problematic. The collection of personal conversations, potentially sensitive information, and even medical details without explicit, informed consent raises significant ethical red flags. This practice undermines the trust users place in Google’s products, potentially leading to a decline in usage and a negative impact on brand loyalty.

Impact on Public Trust

This “eavesdropping” controversy significantly impacts public trust in Google and its products. The revelation erodes the confidence users have in the company’s commitment to protecting their privacy. News reports and social media discussions reflect widespread skepticism and anger, with many users expressing concern over the potential for misuse of their personal data. This loss of trust can manifest in several ways, including reduced usage of Google products, a shift towards competing services prioritizing privacy, and a general decline in Google’s reputation.

The long-term consequences could include reduced market share and difficulty attracting and retaining users. For example, the Cambridge Analytica scandal significantly damaged Facebook’s reputation and led to stricter regulations and increased user scrutiny. This incident serves as a cautionary tale for other tech giants, highlighting the potential for long-lasting negative impacts on public perception.

Comparison with Other Tech Controversies

The public reaction to Google’s alleged “eavesdropping” aligns with the responses to similar controversies in the tech industry. The Cambridge Analytica scandal, involving Facebook’s data misuse, and the various privacy concerns surrounding Apple’s data collection practices, demonstrate a consistent pattern: public outrage follows revelations of data collection practices perceived as intrusive or exploitative. The common thread is a feeling of betrayal of trust, a sense that tech companies prioritize profit over user privacy.

This pattern suggests a growing public awareness of data privacy issues and a demand for greater transparency and accountability from tech companies.

Potential Legal Implications

If the allegations of “eavesdropping” are substantiated, Google could face significant legal implications. Depending on the jurisdiction and the specifics of the data collection practices, Google could be subject to lawsuits for violations of privacy laws, including those relating to data protection and consumer rights. Regulatory bodies may also launch investigations, leading to fines and other penalties. The legal landscape surrounding data privacy is constantly evolving, and the consequences for companies found to be violating these laws can be severe.

For instance, the European Union’s General Data Protection Regulation (GDPR) imposes significant fines for non-compliance, setting a precedent for stricter global regulations. The potential legal costs and reputational damage could be substantial, underscoring the importance of ethical data handling practices.

Technological Aspects of Data Collection

Google’s virtual assistants, like Google Assistant and others integrated into various devices, rely on a sophisticated interplay of technologies to collect and process user data. Understanding these technologies is crucial to evaluating the privacy implications of their operation. This involves examining the methods used for audio capture, processing, and ultimately, data storage and analysis.

At the core of these systems is advanced signal processing. Microphones within devices capture ambient sound, constantly listening for a “wake word” such as “Hey Google.” Once the wake word is detected, the system activates and records audio until a period of silence or a user-initiated stop command. This audio stream is then transmitted to Google’s servers for processing.

This process involves techniques like noise cancellation and speech recognition, which leverage machine learning algorithms trained on vast datasets of speech and audio. These algorithms identify and transcribe the spoken words, transforming them into text that can be interpreted and used to fulfill user requests. This text data, alongside associated metadata such as timestamps and device identifiers, forms the basis of the data Google collects.
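
The capture flow described above (listen for a wake word, record until silence, then hand off the utterance) can be sketched as a small state machine. This is only an illustration under stated assumptions, not Google's implementation: frames are modeled as plain strings, and `WakeWordGate`, `is_wake_word`, `is_silence`, and `max_silent_frames` are hypothetical names invented for this example.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class WakeWordGate:
    """Buffers frames only between a wake-word hit and a run of silence.

    All names here are hypothetical; real assistants operate on audio
    samples and use trained models rather than string comparisons.
    """
    is_wake_word: Callable[[str], bool]
    is_silence: Callable[[str], bool]
    max_silent_frames: int = 2
    recording: bool = False
    silent_run: int = 0
    buffer: List[str] = field(default_factory=list)

    def feed(self, frame: str) -> Optional[List[str]]:
        """Process one frame; return a finished utterance, or None."""
        if not self.recording:
            # Frames heard before the wake word are discarded, not stored.
            if self.is_wake_word(frame):
                self.recording = True
                self.silent_run = 0
                self.buffer = []
            return None
        if self.is_silence(frame):
            self.silent_run += 1
            if self.silent_run >= self.max_silent_frames:
                utterance, self.buffer = self.buffer, []
                self.recording = False
                return utterance  # this is what would be sent for processing
            return None
        self.silent_run = 0
        self.buffer.append(frame)
        return None


def capture_utterances(frames: List[str], gate: WakeWordGate) -> List[List[str]]:
    """Run every frame through the gate and collect completed utterances."""
    finished = []
    for frame in frames:
        utterance = gate.feed(frame)
        if utterance is not None:
            finished.append(utterance)
    return finished


gate = WakeWordGate(
    is_wake_word=lambda f: f == "hey google",
    is_silence=lambda f: f == "",
)
frames = ["tv noise", "hey google", "what", "time", "", "", "private chat"]
print(capture_utterances(frames, gate))  # [['what', 'time']]
```

In this sketch, "tv noise" and "private chat" never reach the buffer because the gate is closed when they arrive. A false wake-word match would open the gate and capture whatever follows, which is the failure mode at the center of the eavesdropping concerns.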

Audio Input Differentiation

Google’s virtual assistants employ complex algorithms to determine which audio input is relevant and which is not. This process involves analyzing the acoustic characteristics of the audio stream, looking for patterns consistent with speech directed at the device. Factors such as volume, pitch, and frequency characteristics are analyzed to distinguish between the user’s voice and background noise. Machine learning models, constantly refined through training on massive datasets, are essential to this process, allowing the system to improve its accuracy over time.

The system is designed to avoid recording and processing irrelevant audio, aiming to only capture and act on commands explicitly directed at the virtual assistant. However, the system is not perfect and can sometimes misinterpret background noise or conversations as commands.
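
To make the idea of separating speech from background noise concrete, here is a minimal sketch of a classic heuristic that uses two acoustic features: loudness (RMS energy) and zero-crossing rate, a rough proxy for frequency content. It is deliberately much simpler than the machine learning models the article describes and is not how Google's assistants work; the function names and the thresholds `min_rms` and `max_zcr` are assumptions chosen for illustration.

```python
import math
from typing import Sequence


def rms(frame: Sequence[float]) -> float:
    """Root-mean-square amplitude, a simple measure of loudness."""
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def zero_crossing_rate(frame: Sequence[float]) -> float:
    """Fraction of adjacent sample pairs whose sign differs."""
    if len(frame) < 2:
        raise ValueError("need at least two samples")
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0))
    return crossings / (len(frame) - 1)


def looks_like_speech(frame: Sequence[float],
                      min_rms: float = 0.05,
                      max_zcr: float = 0.25) -> bool:
    """Heuristic: loud enough, and not dominated by high-frequency noise.

    The thresholds are illustrative assumptions, not tuned values.
    """
    return rms(frame) >= min_rms and zero_crossing_rate(frame) <= max_zcr


# 25 ms frames at 16 kHz: a loud 200 Hz tone, a very quiet tone, and harsh noise.
voiced = [0.5 * math.sin(2 * math.pi * 200 * n / 16000) for n in range(400)]
quiet = [0.001 * math.sin(2 * math.pi * 200 * n / 16000) for n in range(400)]
hiss = [0.5, -0.5] * 200

print(looks_like_speech(voiced))  # True
print(looks_like_speech(quiet))   # False
print(looks_like_speech(hiss))    # False
```

A fixed-threshold rule like this misfires easily, for example on a loud television, which illustrates why such systems can mistake background conversation for a command.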

System Vulnerabilities

Despite the sophisticated technologies employed, vulnerabilities exist within the system that could lead to unauthorized data collection or misuse. One potential vulnerability is the possibility of unintentional activation. The wake word detection system, while advanced, is not infallible and may occasionally trigger falsely, leading to unintended recording. Another concern is the potential for malicious actors to exploit vulnerabilities in the software or hardware to gain unauthorized access to recorded audio data.

Finally, the security of data transmitted between the device and Google’s servers presents a potential risk. While Google employs encryption to protect data in transit, sophisticated attacks could potentially compromise this security.

Addressing these vulnerabilities requires a multi-faceted approach. Strengthening security protocols, improving the accuracy of wake word detection, and enhancing the transparency of data collection practices are all crucial steps.

Potential Improvements to Data Collection Practices

Several improvements could significantly enhance user privacy and trust in Google’s virtual assistants.

- Implement more robust wake word detection to minimize false activations and reduce unintentional data collection.

- Enhance data encryption both in transit and at rest to protect against unauthorized access.

- Provide users with greater control over data retention policies, allowing them to specify how long their data is stored.

- Develop more transparent and user-friendly interfaces for managing data collection settings and reviewing collected data.

- Invest in research and development to improve the accuracy of differentiating between relevant and irrelevant audio inputs.

- Implement on-device processing options to reduce the amount of data transmitted to Google’s servers.
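
As one illustration of the retention-control suggestion in the list above, the sketch below drops stored recordings older than a window chosen by the user. The `Recording` type, its fields, and `purge_expired` are hypothetical names for this example and do not describe any real Google API or storage format.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List


@dataclass(frozen=True)
class Recording:
    """Hypothetical stored voice recording (metadata only)."""
    recording_id: str
    captured_at: datetime


def purge_expired(recordings: List[Recording],
                  retention: timedelta,
                  now: datetime) -> List[Recording]:
    """Return only the recordings still inside the user's retention window."""
    cutoff = now - retention
    return [r for r in recordings if r.captured_at >= cutoff]


now = datetime(2024, 1, 31, tzinfo=timezone.utc)
stored = [
    Recording("old", datetime(2024, 1, 1, tzinfo=timezone.utc)),
    Recording("recent", datetime(2024, 1, 30, tzinfo=timezone.utc)),
]
kept = purge_expired(stored, retention=timedelta(days=7), now=now)
print([r.recording_id for r in kept])  # ['recent']
```

Passing `now` explicitly keeps the function easy to test. A real system would also need to delete the underlying audio and any derived transcripts, not just the metadata records.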

Impact on User Behavior and Trust

The revelation that Google’s virtual assistants may be engaging in “eavesdropping,” even with purported user consent, has the potential to significantly alter user behavior and erode trust in the company. This isn’t simply about a privacy breach; it’s a breach of the implicit contract of trust users have with tech giants who promise convenience in exchange for data. The impact will ripple across various aspects of user interaction and Google’s long-term prospects.

The immediate impact will likely be a decrease in the usage of Google’s virtual assistants.

Users might become more hesitant to use voice commands, opting instead for manual input methods to avoid perceived surveillance. This shift could be particularly pronounced among users who are already privacy-conscious or have experienced data breaches in the past. Furthermore, the controversy could lead to a general decline in trust in Google’s services, potentially impacting the use of other Google products and services.

The perceived lack of transparency and potential for misuse of personal information could cause a significant portion of users to switch to competitors or adopt more privacy-focused alternatives.

Strategies for Regaining User Trust

Google needs a multi-pronged approach to rebuild trust. This involves more than just issuing apologies; it requires demonstrable changes to their data collection practices and a commitment to transparency. Firstly, Google should implement stricter default privacy settings, minimizing data collection unless explicitly authorized by the user. Secondly, a clearer, more accessible privacy policy is crucial. The current policy needs to be rewritten in plain language, avoiding legal jargon, and explaining in detail what data is collected, how it’s used, and who has access to it.

Finally, Google needs to invest heavily in independent audits of its data collection and usage practices, making the results publicly available to demonstrate accountability. Transparency and demonstrable action are key. The company might also consider offering users greater control over their data, including the ability to easily delete their data or opt out of specific data collection practices.

Long-Term Consequences for Google’s Brand Reputation and Market Share

The long-term consequences of this controversy could be severe. A damaged reputation can lead to a loss of market share, impacting Google’s dominance in the virtual assistant market and potentially other sectors. The Cambridge Analytica scandal serves as a cautionary tale. While Facebook survived, its reputation suffered lasting damage, impacting user trust and ultimately affecting its market value.

Similarly, if Google fails to adequately address these concerns, it risks facing a sustained decline in user trust, potentially opening the door for competitors to gain ground. This loss of trust could extend beyond virtual assistants, affecting the overall perception of Google’s entire product ecosystem.

A Hypothetical Public Relations Campaign

A successful PR campaign needs to focus on empathy, transparency, and action. The campaign could begin with a sincere apology from Google’s CEO, acknowledging the concerns and outlining specific steps the company is taking to address them. This should be followed by a series of advertisements and public statements emphasizing the company’s commitment to user privacy. These could showcase the new, improved privacy settings and the independent audits being conducted.

The campaign should also include educational materials explaining data privacy in simple terms, empowering users to make informed choices about their data. Finally, Google could partner with privacy advocacy groups to demonstrate its commitment to ethical data practices and build trust with a broader audience. This multifaceted approach, focusing on demonstrable action rather than just words, is crucial for regaining lost ground.

Ending Remarks

The revelation that Google’s virtual assistants are engaging in what many perceive as eavesdropping has ignited a critical conversation about privacy in the digital age. While Google maintains its data collection is necessary for improving its services, the lack of complete transparency and the potential for misuse remain significant concerns. The long-term impact on user trust and Google’s brand reputation remains to be seen, highlighting the urgent need for greater accountability and more robust privacy protections in the development and deployment of AI-powered virtual assistants.

FAQ Summary

What specific data does Google collect from its virtual assistants?

Google collects voice recordings, location data, and potentially other contextual information depending on the user’s interactions and device settings. The exact data collected varies.

Can I opt out of Google’s data collection for its virtual assistants?

While Google offers some privacy controls, completely opting out of all data collection might limit the functionality of the virtual assistant.

How does Google use the data collected from its virtual assistants?

Google claims to use the data to improve the accuracy and functionality of its virtual assistants, personalize user experiences, and develop new features.

What are the legal consequences for Google if the eavesdropping allegations are proven true?

The legal consequences could range from hefty fines to lawsuits from users and regulatory bodies, depending on the jurisdiction and specifics of the case.