How to Architect Continuous Delivery for the Enterprise

How to architect continuous delivery for the enterprise? It’s a question that keeps many DevOps engineers up at night. Building a robust and scalable CD pipeline isn’t just about stringing together tools; it’s about fundamentally changing how your organization develops, tests, and deploys software. This journey involves navigating organizational hurdles, choosing the right technology, and, critically, embedding security at every stage.

Get ready to dive into the nitty-gritty of enterprise-grade continuous delivery!

We’ll cover everything from defining what continuous delivery means in a large enterprise context to designing the pipeline architecture, selecting the right tools, implementing effective testing strategies, and ensuring robust security. We’ll explore different deployment approaches and show you how to monitor and improve your pipeline over time. This isn’t just theory; we’ll delve into practical examples and real-world scenarios to help you build a CD pipeline that works for your organization.

Defining Enterprise Continuous Delivery

Continuous Delivery (CD) in the enterprise isn’t just about faster releases; it’s a fundamental shift in how software is built, tested, and deployed. It requires a holistic approach, impacting every stage of the software development lifecycle (SDLC) and demanding significant organizational changes. This goes beyond simply automating deployments; it’s about building a culture of trust and collaboration.

Enterprise continuous delivery is characterized by the automation of the entire software release pipeline, from code commit to deployment to production. It emphasizes frequent, small releases, allowing for quicker feedback loops and reduced risk. Crucially, it necessitates a high degree of automation, robust testing strategies, and a strong emphasis on monitoring and feedback. In a large enterprise, this also includes considerations for compliance, security, and governance, all within a complex, often distributed, infrastructure.

Continuous Integration, Delivery, and Deployment: Key Differences

Continuous Integration (CI) focuses on the frequent integration of code changes into a shared repository. This is typically followed by automated builds and tests. Continuous Delivery extends CI by automating the entire release process, making it possible to deploy to production at any time. Continuous Deployment goes a step further, automating the actual deployment to production environments upon successful completion of the CI/CD pipeline.

The key distinction lies in the level of automation: CI automates the build and test phases, continuous delivery automates the release process so that every passing build is ready for production, and continuous deployment also automates the final push to production. In many enterprises, continuous delivery serves as a stepping stone towards continuous deployment, as the latter requires a higher degree of confidence in the automation and testing processes.
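The difference between delivery and deployment comes down to one decision point, which a few lines of Python can make concrete. This is only an illustrative sketch: the `Mode` enum and the `should_deploy_to_production` function are hypothetical names, not part of any CI/CD product.

```python
from enum import Enum


class Mode(Enum):
    CONTINUOUS_DELIVERY = "delivery"      # production deploy needs a human decision
    CONTINUOUS_DEPLOYMENT = "deployment"  # production deploy is automatic


def should_deploy_to_production(mode: Mode, pipeline_green: bool,
                                approved: bool = False) -> bool:
    """Decide whether a build goes to production.

    A failing pipeline never deploys. Under continuous delivery a green
    build is only ready to ship; someone must approve it. Under
    continuous deployment a green build ships without approval.
    """
    if not pipeline_green:
        return False
    if mode is Mode.CONTINUOUS_DEPLOYMENT:
        return True
    return approved
```

In both modes the pipeline does the same work up to the gate; only the final step differs.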

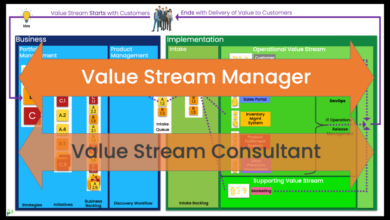

Organizational Challenges of Implementing Continuous Delivery at Scale

Implementing continuous delivery in a large enterprise presents numerous organizational challenges. These include:

- Legacy Systems: Integrating CD into existing legacy systems can be complex and time-consuming, requiring significant refactoring or even replacement.

- Organizational Silos: Traditional organizational structures often create silos between development, operations, and security teams, hindering collaboration and efficient workflow.

- Resistance to Change: Implementing CD requires a cultural shift, and resistance from teams accustomed to traditional development methodologies is common.

- Security and Compliance: Meeting stringent security and compliance requirements can significantly increase the complexity of the CD pipeline.

- Lack of Skills and Expertise: Implementing and maintaining a robust CD pipeline requires specialized skills and expertise, which can be difficult to find and retain.

- Tooling and Integration: Selecting and integrating the right tools and technologies across different teams and systems is crucial but often challenging.

Examples of Successful Enterprise Continuous Delivery Implementations

Many large enterprises have successfully implemented continuous delivery, reaping significant benefits. While specific details are often confidential, success stories commonly highlight the transformation of software delivery from infrequent, large releases to frequent, smaller ones. This often leads to faster time-to-market, improved quality, and increased customer satisfaction.

| Approach | Pros | Cons | Example Companies |
|---|---|---|---|
| Feature Flags/Toggles | Allows for controlled releases, A/B testing, and easy rollback; reduces risk. | Increased complexity in codebase; requires careful management of flags. | Netflix, Amazon |
| Canary Deployments | Reduces risk by deploying to a small subset of users first; allows for early detection of issues. | Requires robust monitoring and rollback mechanisms; may not be suitable for all applications. | Google, Facebook |
| Blue/Green Deployments | Minimizes downtime; allows for quick rollback if issues arise. | Requires twice the infrastructure capacity; can be complex to set up. | Etsy, Target |
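To make the feature flag row more tangible, here is a minimal sketch of a percentage-based rollout. It assumes each user has a stable string ID; the function name `is_enabled` and the bucketing scheme are illustrative choices, not how any of the companies listed implement their flags.

```python
import hashlib


def is_enabled(flag: str, user_id: str, rollout_percent: int) -> bool:
    """Return True if the flag is on for this user.

    Hashing flag + user gives each user a stable bucket in 0..99, so the
    same user always gets the same answer for a given percentage, and
    raising the percentage only ever adds users.
    """
    digest = hashlib.sha256(f"{flag}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100
    return bucket < rollout_percent
```

Setting `rollout_percent` to 0 acts as a kill switch, which is what makes rollback with flags so cheap: no redeploy is needed.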

Architecting the CD Pipeline

Building a robust continuous delivery (CD) pipeline for an enterprise requires careful planning and a well-defined architecture. It’s not just about automating deployments; it’s about creating a system that fosters speed, reliability, and stability across the entire software lifecycle. This involves integrating various tools and processes, managing dependencies effectively, and ensuring security at every step.

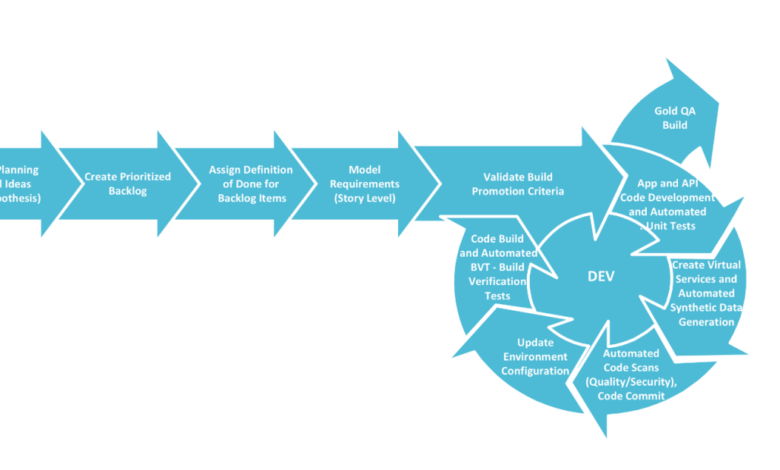

High-Level Architecture of an Enterprise CD Pipeline

A typical enterprise CD pipeline can be visualized as a series of interconnected stages, each with its specific responsibilities. The pipeline begins with code commits and progresses through automated builds, testing, deployment to various environments (development, testing, staging, production), and finally, ongoing monitoring and feedback. This architecture emphasizes automation, allowing for rapid iteration and deployment while maintaining a high level of quality and control.

A key aspect is the feedback loops at each stage, enabling quick identification and resolution of issues. The architecture should be designed to be scalable and adaptable to the evolving needs of the enterprise.

Stages of the Continuous Delivery Pipeline

The CD pipeline typically involves several distinct stages:

- Code Integration: This stage focuses on merging code changes from multiple developers into a shared repository. Tools like Git are crucial here, along with branching strategies like Gitflow to manage concurrent development and minimize merge conflicts. Automated build tools are then triggered upon successful code merges.

- Testing: Automated testing is a cornerstone of a successful CD pipeline. This includes unit tests, integration tests, system tests, and potentially UI tests. The goal is to catch bugs early in the development cycle, minimizing the cost and effort of fixing them later. Test automation frameworks like Selenium, JUnit, or pytest are commonly used.

- Deployment: This stage involves automatically deploying the tested code to various environments. Infrastructure as Code (IaC) tools like Terraform or Ansible are essential for managing and provisioning infrastructure consistently across different environments. Deployment strategies like blue/green deployments or canary releases can minimize downtime and risk during deployments.

- Monitoring: Continuous monitoring is crucial for ensuring the application’s stability and performance in production. Monitoring tools collect metrics on various aspects of the application, such as resource utilization, error rates, and user experience. Alerts are triggered when anomalies are detected, allowing for timely intervention and resolution of issues.
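The stages above share one rule: a failure stops everything downstream. The sketch below models that rule in plain Python. The `run_pipeline` function and the stage names are hypothetical; a real pipeline would express the same idea in the configuration format of your CI/CD tool.

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[Tuple[str, str]]:
    """Run stages in order; skip everything after the first failure.

    Returns (stage name, status) pairs, where status is "passed",
    "failed", or "skipped".
    """
    results = []
    failed = False
    for name, step in stages:
        if failed:
            results.append((name, "skipped"))
            continue
        ok = step()
        results.append((name, "passed" if ok else "failed"))
        failed = not ok
    return results
```

For example, `run_pipeline([("build", lambda: True), ("test", lambda: False), ("deploy", lambda: True)])` reports the build as passed, the tests as failed, and the deployment as skipped.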

Managing Dependencies and Ensuring Environmental Consistency

Effective dependency management is vital for preventing conflicts and ensuring consistent behavior across different environments. A dependency management tool such as Maven or npm, with versions pinned, keeps builds reproducible. Containerization with Docker helps ensure consistency across environments by packaging the application and its dependencies into isolated containers, and an orchestrator such as Kubernetes runs those same images in every environment. This approach minimizes the “works on my machine” problem and simplifies deployment.
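Consistency is easier to enforce when it is checked automatically. As a rough sketch, the function below compares two environment descriptions and reports where they differ. The dictionary keys in the usage note are made-up examples, and `config_drift` is a hypothetical helper, not a feature of Docker or Kubernetes.

```python
def config_drift(reference: dict, candidate: dict) -> dict:
    """Return keys whose values differ between two environment descriptions.

    Each entry maps a key to a (reference value, candidate value) pair.
    Missing keys are treated as None.
    """
    drift = {}
    for key in sorted(set(reference) | set(candidate)):
        ref_value = reference.get(key)
        cand_value = candidate.get(key)
        if ref_value != cand_value:
            drift[key] = (ref_value, cand_value)
    return drift
```

Comparing `{"runtime": "3.12", "image": "app:1.4"}` for staging against `{"runtime": "3.11", "image": "app:1.4"}` for production would flag only `runtime`, which is exactly the kind of difference that causes environment-specific bugs.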

CI/CD Tools and Technologies for Enterprise Use

Several CI/CD tools cater to enterprise needs. Jenkins remains a popular open-source choice, offering extensive customization and plugin support. Azure DevOps and GitLab CI/CD provide comprehensive solutions integrated with their respective platforms. CircleCI and GitHub Actions are cloud-based solutions that offer ease of use and scalability. The choice often depends on existing infrastructure, team expertise, and specific requirements.

Security Considerations in the CD Pipeline

Security should be integrated into every stage of the pipeline. Here’s a breakdown of essential security considerations:

- Code Integration: Secure code repositories with access controls, enforce code reviews, and implement static code analysis to identify vulnerabilities early.

- Testing: Include security testing in the automated test suite, such as dynamic application security testing and vulnerability scanning, and complement it with periodic penetration testing.

- Deployment: Secure infrastructure using appropriate security measures, implement secrets management, and use secure deployment practices to prevent unauthorized access.

- Monitoring: Monitor for security threats and vulnerabilities in production, implement logging and auditing, and establish incident response procedures.

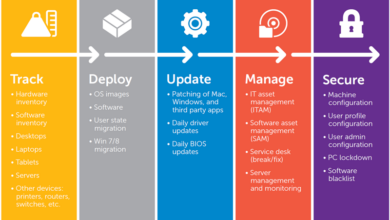

Infrastructure and Tooling

Building a robust and scalable continuous delivery (CD) pipeline requires careful consideration of the underlying infrastructure and the tools that orchestrate the process. The right choices here significantly impact the speed, reliability, and overall success of your CD initiatives. A poorly chosen infrastructure can lead to bottlenecks, downtime, and increased costs, while unsuitable tools can create complexity and hinder collaboration.

Choosing the right infrastructure and tooling isn’t about picking the latest and greatest technology; it’s about selecting the best fit for your specific enterprise needs, current skillsets, and future scalability goals. This involves understanding the trade-offs between various options and prioritizing factors like security, maintainability, and cost-effectiveness.

Cloud Platforms for Continuous Delivery

Cloud platforms like AWS, Azure, and Google Cloud Platform (GCP) offer significant advantages for building CD pipelines. They provide readily available compute resources, storage, and networking capabilities, eliminating the need for significant upfront investment in hardware. Furthermore, their scalable nature allows you to easily adjust resources based on demand, ensuring your pipeline can handle fluctuating workloads. These platforms also often integrate well with other CD tools, streamlining the overall process.

For example, AWS offers services like CodePipeline, CodeBuild, and CodeDeploy, while Azure provides Azure DevOps and GCP offers Cloud Build and Cloud Deploy. The choice depends on existing infrastructure, team expertise, and specific application requirements.

Containerization and Orchestration

Containerization technologies, primarily Docker and its ecosystem, are crucial for creating consistent and portable deployment environments. Containers package applications and their dependencies into isolated units, ensuring that the application behaves the same way regardless of the underlying infrastructure. Orchestration platforms like Kubernetes manage and automate the deployment, scaling, and management of these containers across a cluster of machines.

This allows for efficient resource utilization and high availability, vital for enterprise-grade CD pipelines. Using Kubernetes, for instance, you can easily scale your application based on demand, ensuring a smooth user experience even during peak traffic periods. Without containerization and orchestration, maintaining a consistent and scalable deployment environment across multiple environments would be significantly more challenging.

CI/CD Tool Selection Criteria

Selecting the right CI/CD tools requires careful evaluation of several factors. These include:

- Integration capabilities: How well does the tool integrate with your existing code repositories, testing frameworks, and deployment environments?

- Scalability and performance: Can the tool handle the volume and complexity of your projects and deployments?

- Security features: Does the tool provide robust security features to protect your code and infrastructure?

- Ease of use and maintainability: How easy is it to configure, use, and maintain the tool? Is there sufficient documentation and community support?

- Cost: What are the licensing costs and ongoing maintenance expenses?

Consider tools like Jenkins, GitLab CI, CircleCI, Azure DevOps, and GitHub Actions. Each offers different features and strengths, and the optimal choice depends on your specific context.
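One way to keep the evaluation honest is a weighted scoring matrix built from the criteria above. The sketch below is a generic illustration: the function names, the 1 to 5 scale, and the weights are assumptions you would replace with your own, and it deliberately makes no claim about how any real tool scores.

```python
def weighted_score(scores: dict, weights: dict) -> float:
    """Combine per-criterion scores (e.g. 1-5) into one weighted average."""
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    weighted = sum(scores[name] * weight for name, weight in weights.items())
    return weighted / total_weight


def rank_tools(candidates: dict, weights: dict) -> list:
    """Return (tool, score) pairs sorted from best to worst."""
    scored = [(tool, round(weighted_score(s, weights), 2))
              for tool, s in candidates.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)
```

With weights of 3 for integration, 2 for security, and 1 for cost, a hypothetical "Tool A" scoring 4, 3, and 5 ends up at 3.83, ahead of a "Tool B" scoring 5, 2, and 2 at 3.5. Agreeing on the weights before scoring is the useful part of the exercise.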

Tool Integration within the CD Pipeline

Effective CD relies on seamless integration between various tools. The following table illustrates how different tools can be integrated for a typical CD workflow.

| Tool | Function | Integration Method | Benefits |
|---|---|---|---|
| GitLab | Code Management | Webhooks to trigger CI/CD pipeline | Centralized code management and automated pipeline triggering |
| JUnit/Selenium | Automated Testing | Integration with CI/CD tool (e.g., GitLab CI) via plugins or scripts | Early detection of bugs and improved software quality |
| Docker | Containerization | Integrated into CI/CD pipeline to build and push container images | Consistent deployment environments and improved portability |
| Kubernetes | Orchestration | API calls from CI/CD tool to deploy containers | Automated deployment, scaling, and management of containers |
| Prometheus/Grafana | Monitoring | Integration via APIs or agents | Real-time monitoring of application performance and infrastructure health |

Testing and Quality Assurance

Continuous Delivery (CD) isn’t just about automating deployments; it’s about ensuring the quality of those deployments. A robust testing strategy is the backbone of a successful CD pipeline, preventing defects from reaching production and maintaining user trust. Without a comprehensive testing approach, the speed and automation of CD become meaningless, potentially leading to costly production issues and reputational damage.

Types of Testing in a Continuous Delivery Pipeline

Effective testing in a CD pipeline requires a multi-layered approach, incorporating various testing types to catch defects at different stages of the software development lifecycle. This ensures that issues are identified and resolved early, minimizing the cost and effort required for remediation.

- Unit Testing: Focuses on verifying the functionality of individual components or modules in isolation. These tests are typically written by developers and are crucial for catching bugs early in the development process. For example, a unit test might verify that a specific function correctly calculates a value or that a particular method returns the expected output given specific inputs. High unit test coverage significantly reduces integration issues later on.

- Integration Testing: Tests the interaction between different modules or components to ensure they work together correctly. This stage often reveals integration problems that are not apparent during unit testing. Imagine testing the integration between a user authentication module and a database access module; integration testing would confirm that user credentials are correctly verified and accessed from the database.

- System Testing: Validates the entire system as a whole, ensuring that all components work together as expected to meet the specified requirements. System testing often involves testing user workflows and scenarios, such as verifying the complete checkout process in an e-commerce application. It’s a critical step before user acceptance testing (UAT).

- Performance Testing: Evaluates the system’s performance under various load conditions, including stress testing, load testing, and endurance testing. This ensures the system can handle the expected user traffic and maintain acceptable response times. Performance testing helps identify bottlenecks and areas for optimization. For instance, a performance test might simulate 10,000 concurrent users accessing a web application to assess its responsiveness and stability.
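As a small illustration of the first layer, here is what a unit test can look like using Python's built-in `unittest` module. The `order_total` function is a made-up example chosen to echo the checkout scenario above, not code from any real system.

```python
import unittest


def order_total(prices, tax_rate):
    """Sum item prices and apply a flat tax rate, rounded to cents."""
    if tax_rate < 0:
        raise ValueError("tax_rate must not be negative")
    return round(sum(prices) * (1 + tax_rate), 2)


class OrderTotalTest(unittest.TestCase):
    def test_applies_tax(self):
        self.assertEqual(order_total([10.00, 5.50], 0.10), 17.05)

    def test_empty_order_is_zero(self):
        self.assertEqual(order_total([], 0.10), 0)

    def test_rejects_negative_tax(self):
        with self.assertRaises(ValueError):
            order_total([1.00], -0.5)
```

Tests like these run in milliseconds, which is why they belong at the very start of the pipeline, before the slower integration, system, and performance stages.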

Automated Testing Strategies

Automation is paramount in a CD pipeline. Manual testing is slow, prone to human error, and doesn’t scale well. Automating tests allows for frequent execution, early defect detection, and faster feedback loops.

- Test Automation Frameworks: Utilizing frameworks like Selenium (for UI testing), JUnit (for Java unit testing), or pytest (for Python unit testing) provides structure and facilitates test maintenance. These frameworks offer features like test runners, reporting tools, and assertion libraries.

- Continuous Integration (CI) Integration: Tests should be integrated into the CI process, triggered automatically upon code commits. This allows for immediate feedback on code changes, preventing defects from accumulating.

- Test Data Management: Automated test data generation and management are crucial. This avoids relying on production data (for security reasons) and ensures consistent and reliable test environments. Tools that generate synthetic data or clone production data (while anonymizing sensitive information) are valuable.

Test Data Management and Security

Managing test data is critical. Using real production data poses significant security and privacy risks. Strategies include:

- Synthetic Data Generation: Creating realistic but fake data using tools that generate data conforming to specific patterns and distributions.

- Data Masking and Anonymization: Transforming real data to remove sensitive information while preserving data structure and relationships relevant to testing.

- Data Subsets: Creating smaller, representative subsets of production data for testing, reducing the volume of data handled and minimizing risk.

- Test Data Environments: Maintaining separate test environments isolated from production to prevent accidental data corruption or exposure.
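Data masking is the strategy that benefits most from a concrete example. The sketch below replaces email addresses with stable pseudonyms so that relationships between records survive. It assumes a secret salt kept outside the test environment; `mask_email` and `mask_record` are hypothetical helper names, and a real anonymization effort would cover far more than one field type.

```python
import hashlib


def mask_email(email: str, salt: str) -> str:
    """Replace an email with a stable pseudonym at a reserved test domain.

    The same input and salt always give the same output, so joins between
    masked tables still line up.
    """
    digest = hashlib.sha256((salt + email.lower()).encode("utf-8")).hexdigest()
    return f"user_{digest[:12]}@example.invalid"


def mask_record(record: dict, salt: str, email_fields=("email",)) -> dict:
    """Return a copy of the record with its email fields masked."""
    masked = dict(record)
    for field in email_fields:
        if masked.get(field):
            masked[field] = mask_email(str(masked[field]), salt)
    return masked
```

The `.invalid` top-level domain is reserved and never resolves, so a test run cannot accidentally send mail to a real customer.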

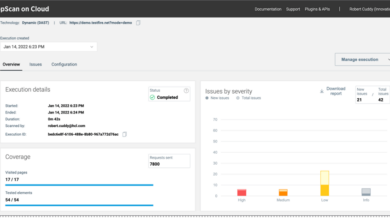

Integrating Test Results into the Pipeline Feedback Loop

Test results should be readily available and integrated into the CD pipeline’s feedback loop. This involves:

- Real-time Reporting: Displaying test results immediately after execution, allowing developers to quickly identify and address issues.

- Automated Reporting and Notifications: Generating reports and sending notifications (e.g., email alerts) based on test outcomes.

- Integration with Monitoring Tools: Connecting testing results with monitoring systems to gain a holistic view of system health and performance.

- Test Result Analysis and Trend Tracking: Analyzing test results over time to identify patterns and trends, proactively addressing potential quality issues.
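Most test runners can emit a JUnit-style XML report, which makes results easy to feed back into the pipeline. The following sketch summarizes such a report with Python's standard library. It assumes the common `testsuite`/`testcase` layout with `failure`, `error`, and `skipped` child elements; `summarize_junit` and `SAMPLE_REPORT` are illustrative names, and real reports may carry more detail than this handles.

```python
import xml.etree.ElementTree as ET

# A tiny hand-written report used only to demonstrate the function.
SAMPLE_REPORT = """
<testsuites>
  <testsuite name="checkout">
    <testcase name="adds_item"/>
    <testcase name="applies_tax"><failure message="expected 17.05"/></testcase>
    <testcase name="slow_path"><skipped/></testcase>
  </testsuite>
</testsuites>
"""


def summarize_junit(xml_text: str) -> dict:
    """Count tests, failures, errors, and skips in a JUnit-style report."""
    root = ET.fromstring(xml_text.strip())
    # The root may be a single <testsuite> or a <testsuites> wrapper.
    suites = [root] if root.tag == "testsuite" else root.findall("testsuite")
    summary = {"tests": 0, "failures": 0, "errors": 0, "skipped": 0}
    for suite in suites:
        for case in suite.iter("testcase"):
            summary["tests"] += 1
            if case.find("failure") is not None:
                summary["failures"] += 1
            elif case.find("error") is not None:
                summary["errors"] += 1
            elif case.find("skipped") is not None:
                summary["skipped"] += 1
    summary["passed"] = summary["failures"] == 0 and summary["errors"] == 0
    return summary
```

A pipeline step could call `summarize_junit` on each report, fail the build when `passed` is false, and store the counts for trend tracking.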

Best Practices for High-Quality Software Releases

Several best practices enhance software quality within a CD environment:

- Shift-Left Testing: Incorporating testing early in the development cycle, ideally starting at the design phase.

- Test-Driven Development (TDD): Writing tests before writing code, ensuring testability and improving code quality.

- Code Reviews: Conducting peer reviews of code to identify potential defects and improve code quality.

- Continuous Monitoring and Feedback: Monitoring application performance and user feedback in production to identify and address issues quickly.

Deployment Strategies

Choosing the right deployment strategy is crucial for successful continuous delivery in an enterprise setting. The ideal approach depends on factors like application complexity, risk tolerance, and the required level of downtime. Let’s explore some popular strategies and their implications.

Blue/Green Deployments

Blue/green deployments involve maintaining two identical environments: a “blue” environment representing the production system and a “green” environment used for staging and deploying new versions. Traffic is directed to the blue environment, while the green environment is updated. Once testing is complete in the green environment, traffic is switched to the green environment, making it the new production. The old blue environment remains available for rollback if necessary. This minimizes downtime and reduces the risk associated with deploying new code.

- Advantages: Minimal downtime, quick rollback capability, reduced risk.

- Disadvantages: Requires twice the infrastructure capacity, increased complexity in managing two environments.
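The cut-over logic itself is simple, as the sketch below shows. It models only the routing decision; in practice the switch would be a load balancer or DNS change. `BlueGreenRouter` and the `health_check` callback are hypothetical names introduced for illustration.

```python
class BlueGreenRouter:
    """Tracks which of two environments receives live traffic."""

    def __init__(self, live: str = "blue"):
        if live not in ("blue", "green"):
            raise ValueError("live must be 'blue' or 'green'")
        self.live = live

    @property
    def idle(self) -> str:
        """The environment that is not currently serving traffic."""
        return "green" if self.live == "blue" else "blue"

    def cut_over(self, health_check) -> bool:
        """Switch traffic to the idle environment if it passes the check."""
        target = self.idle
        if not health_check(target):
            return False  # the live environment keeps serving traffic
        self.live = target
        return True

    def rollback(self) -> None:
        """Send traffic back to the previous environment."""
        self.live = self.idle
```

Because the old environment is left untouched after `cut_over`, `rollback` is just the same switch in reverse, which is the main attraction of this strategy.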

Canary Deployments

Canary deployments involve gradually rolling out a new version of the application to a small subset of users. This allows for monitoring the new version’s performance and stability in a real-world setting before a full rollout. If issues are detected, the rollout can be stopped, minimizing the impact of a faulty deployment. This approach is particularly useful for applications with a large user base or those where a disruption could have significant consequences.

- Advantages: Reduced risk, early detection of issues, gradual rollout allows for controlled exposure.

- Disadvantages: More complex to implement than other strategies, requires robust monitoring and logging.
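The decision to continue or stop a canary is usually a comparison of error rates between the canary and the existing version. Here is a minimal sketch of that comparison. The thresholds (`max_ratio`, `min_requests`, and the 0.1% floor) are arbitrary assumptions for illustration, and `canary_decision` is a hypothetical function; production canary analysis typically looks at latency and other signals too.

```python
def canary_decision(baseline_errors: int, baseline_requests: int,
                    canary_errors: int, canary_requests: int,
                    max_ratio: float = 1.5, min_requests: int = 100) -> str:
    """Return "wait", "promote", or "rollback" for a canary release."""
    if canary_requests < min_requests or baseline_requests == 0:
        return "wait"  # not enough traffic to judge
    baseline_rate = baseline_errors / baseline_requests
    canary_rate = canary_errors / canary_requests
    # Allow a small absolute floor so a zero-error baseline does not
    # force a rollback on the first canary error.
    allowed = max(baseline_rate * max_ratio, 0.001)
    return "promote" if canary_rate <= allowed else "rollback"
```

The "wait" outcome matters: judging a canary on a handful of requests is a common source of false alarms.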

Rolling Deployments

Rolling deployments involve gradually updating instances of the application one at a time. New versions are deployed to a subset of servers, and after successful verification, the update continues to the remaining servers. This approach minimizes downtime and allows for a smooth transition to the new version. It is suitable for applications where downtime needs to be kept to a minimum and where a gradual update is feasible.

- Advantages: Minimal downtime, gradual rollout, easier to manage than blue/green deployments.

- Disadvantages: Requires careful orchestration and monitoring, longer deployment time compared to blue/green.
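A rolling update can be sketched as a loop over batches that halts as soon as a batch fails its health check. The `deploy` and `healthy` callbacks below are placeholders for whatever your platform provides, and `rolling_update` is a hypothetical helper; orchestrators such as Kubernetes implement this behavior for you.

```python
def rolling_update(instances, deploy, healthy, batch_size=2):
    """Update instances batch by batch; stop at the first unhealthy batch.

    Returns (updated, halted): the instances already on the new version
    and whether the rollout stopped early.
    """
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    updated = []
    for start in range(0, len(instances), batch_size):
        batch = instances[start:start + batch_size]
        for instance in batch:
            deploy(instance)
        updated.extend(batch)
        if not all(healthy(instance) for instance in batch):
            return updated, True
    return updated, False
```

When the rollout halts, the `updated` list tells you exactly which instances need to be reverted, while the rest are still on the previous version.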

Implementing a Deployment Strategy within the CD Pipeline

Implementing a chosen deployment strategy requires integrating it into the continuous delivery pipeline. This involves automating the steps involved in the chosen strategy, such as building, testing, deploying, and monitoring. Tools like Jenkins, GitLab CI, and Spinnaker can automate these processes, providing the flexibility to implement any of the strategies mentioned above. For example, a blue/green deployment would involve automating the steps of deploying to the green environment, running tests, and then switching traffic.

Rollback Procedures

Effective rollback procedures are critical for minimizing the impact of failed deployments. In a blue/green deployment, rollback simply involves switching traffic back to the blue environment. In a canary deployment, rolling back involves stopping the deployment to the canary group and reverting to the previous version. In a rolling deployment, a rollback might involve reverting the updates to the already updated instances.

These procedures should be automated as part of the deployment pipeline to ensure quick and efficient recovery. For example, a script can be used to automatically switch traffic or revert the deployment. Regular testing of rollback procedures is essential to ensure their effectiveness.

Blue/Green Deployment Diagram

Imagine a diagram showing two identical environments, labeled “Blue (Production)” and “Green (Staging)”. Arrows indicate the flow of traffic.

- Step 1: Traffic flows to the “Blue (Production)” environment.

- Step 2: A new version of the application is deployed to the “Green (Staging)” environment.

- Step 3: Thorough testing is conducted on the “Green (Staging)” environment.

- Step 4: Once testing is successful, traffic is switched from “Blue (Production)” to “Green (Staging)”. The “Green (Staging)” environment becomes the new production environment.

- Step 5: The old “Blue (Production)” environment is available for rollback in case of issues. If everything works fine, it can be used for the next deployment cycle.

Monitoring and Feedback

Building a robust Continuous Delivery (CD) pipeline isn’t just about automating deployments; it’s about ensuring consistent quality and rapid response to issues. Effective monitoring and a well-structured feedback loop are crucial for achieving this. Without them, your pipeline might run smoothly, but you’ll lack the visibility to identify bottlenecks, predict failures, or adapt to evolving user needs.

Monitoring and feedback form a closed loop, continuously improving your CD process. By tracking key metrics, you gain insights into pipeline performance, identify areas for optimization, and proactively address potential problems. Feedback from users provides invaluable context, helping you prioritize improvements and ensure your releases align with business goals.

Key Metrics for CD Pipeline Monitoring

Effective monitoring starts with identifying the right metrics. These metrics should provide a comprehensive view of the pipeline’s health, performance, and efficiency. Focusing on a few key indicators gives the best return on your monitoring effort, and leaving out less important ones prevents alert fatigue. For example, deployment frequency, lead time for changes, and change failure rate show the speed and reliability of your delivery process, while Mean Time To Recovery (MTTR) shows how quickly you resolve issues.
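These four metrics are straightforward to compute once deployments are recorded consistently. The sketch below assumes each deployment is a dictionary with `committed_at`, `deployed_at`, `failed`, and (for failures) `restored_at`; that record shape and the `delivery_metrics` name are assumptions made for this example, not a standard format.

```python
from datetime import datetime, timedelta
from statistics import median


def delivery_metrics(deployments, period_days):
    """Summarize delivery performance from a list of deployment records.

    Each record is a dict with "committed_at" and "deployed_at" datetimes,
    a boolean "failed", and, for failed deployments, "restored_at".
    """
    if not deployments:
        raise ValueError("need at least one deployment")
    lead_times = [d["deployed_at"] - d["committed_at"] for d in deployments]
    failures = [d for d in deployments if d["failed"]]
    recoveries = [d["restored_at"] - d["deployed_at"] for d in failures]
    mttr = sum(recoveries, timedelta()) / len(recoveries) if recoveries else None
    return {
        "deployments_per_day": len(deployments) / period_days,
        "median_lead_time": median(lead_times),
        "change_failure_rate": len(failures) / len(deployments),
        "mean_time_to_recovery": mttr,
    }
```

The median is used for lead time because a single unusually slow change would otherwise distort the picture.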

Using Monitoring Data for Issue Resolution

Real-time monitoring dashboards provide a centralized view of your CD pipeline’s status. These dashboards should visually represent key metrics, making it easy to spot anomalies. Automated alerts, triggered by predefined thresholds, immediately notify relevant teams of potential problems. For example, if deployment failure rate exceeds a set threshold (say, 5%), an alert is sent to the development and operations teams. This allows for rapid investigation and resolution, minimizing downtime and ensuring the pipeline remains operational.

Automated Alerting and Notification Systems

Implementing automated alerting is essential for rapid issue resolution. This involves integrating your monitoring tools with communication platforms like Slack, PagerDuty, or email. Alerts should be specific, providing clear information about the issue, its severity, and the affected components. For instance, an alert might state: “Deployment of version 1.2.3 to production environment failed due to database connection error. See logs for details.” Different alert levels (critical, warning, informational) can be used to prioritize notifications and prevent alert fatigue.
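Threshold-based alerting with severity levels can be sketched in a few lines. The 5% warning threshold follows the example earlier in this section; the 20% critical threshold, the message format, and the `classify_alert` name are assumptions added for illustration. Sending the result to Slack, PagerDuty, or email is left out on purpose.

```python
def classify_alert(failed: int, total: int,
                   warning_at: float = 0.05, critical_at: float = 0.20):
    """Map a deployment failure rate to an alert level, or None if healthy."""
    if total == 0:
        return None  # nothing deployed, nothing to alert on
    rate = failed / total
    if rate >= critical_at:
        level = "critical"
    elif rate > warning_at:
        level = "warning"
    else:
        return None
    return {
        "level": level,
        "message": f"Deployment failure rate {rate:.1%} ({failed}/{total}) "
                   f"exceeds threshold",
    }
```

Returning `None` for healthy states keeps the caller simple: it only notifies when there is something to say, which is the first defense against alert fatigue.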

Gathering and Incorporating User Feedback

User feedback is crucial for ensuring your releases meet user needs and expectations. Gathering feedback can be done through various channels, such as user surveys, in-app feedback forms, social media monitoring, and user support interactions. This feedback should be analyzed to identify areas for improvement in your product and your CD process. For example, consistently negative feedback on a specific feature might indicate a need for improved testing or a different development approach. This feedback loop ensures continuous improvement and alignment with user expectations.

Actionable Steps to Improve the CD Pipeline

Analyzing monitoring data reveals opportunities for improvement. Here are some actionable steps based on common issues:

- High Deployment Failure Rate: Investigate root causes through log analysis, automated tests, and code reviews. Improve testing strategies and implement more robust error handling.

- Long Lead Time for Changes: Optimize the pipeline by automating manual steps, improving code quality, and streamlining the approval process.

- High MTTR: Implement better incident management procedures, improve monitoring and alerting, and provide better documentation for troubleshooting.

- Low Deployment Frequency: Identify bottlenecks in the pipeline and address them through automation and process improvements. Encourage smaller, more frequent releases.

- Negative User Feedback on a Specific Feature: Prioritize addressing the issue based on its severity and impact. This might involve bug fixes, feature enhancements, or a complete redesign.

Security in Continuous Delivery

Continuous Delivery (CD) pipelines, while accelerating software releases, introduce new security challenges. The automated nature of CD means that vulnerabilities can be propagated rapidly throughout the entire system if not properly addressed. A robust security strategy is paramount to prevent breaches and maintain the integrity of the software and the organization.

Common Security Vulnerabilities in CD Pipelines

Several security vulnerabilities are common in CD pipelines. They can arise at various stages, from code development to deployment, and failing to address them can lead to significant security risks. For example, insecure configurations in cloud environments and insufficient access control to the pipeline itself are frequent culprits. Compromised credentials, unpatched dependencies, and inadequate code scanning are further examples of common threats. Outdated or insecure tools within the pipeline introduce vulnerabilities of their own that can be easily exploited.

Securing the CD Pipeline: Best Practices

Implementing robust security practices is crucial throughout the entire CD pipeline. This includes implementing strict access control measures, such as role-based access control (RBAC), to limit who can access and modify different parts of the pipeline. Code signing ensures the authenticity and integrity of the code deployed, preventing unauthorized modifications. Regular vulnerability scanning, both at the code level and at the infrastructure level, helps identify and address security weaknesses proactively.

These practices help mitigate risks and ensure that only authorized personnel and verified code are integrated into the pipeline.
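The idea behind verifying code before deployment can be shown with a simplified integrity check. Note the assumption: this sketch uses an HMAC with a shared secret key, whereas real code signing relies on asymmetric key pairs and dedicated signing tools. `sign_artifact` and `verify_artifact` are hypothetical helpers that only demonstrate the "verify before deploy" step.

```python
import hashlib
import hmac


def sign_artifact(artifact: bytes, key: bytes) -> str:
    """Return a hex HMAC-SHA256 tag for the artifact."""
    return hmac.new(key, artifact, hashlib.sha256).hexdigest()


def verify_artifact(artifact: bytes, key: bytes, expected_tag: str) -> bool:
    """Check the tag in constant time before allowing a deploy."""
    return hmac.compare_digest(sign_artifact(artifact, key), expected_tag)
```

The build stage would compute the tag when the artifact is produced, and the deploy stage would refuse to proceed if `verify_artifact` returns false, so an artifact modified in between is caught.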

Security Automation in the CD Pipeline

Automation is key to effective security in a CD environment. Security checks should be integrated directly into the pipeline itself. This includes automated security testing, such as static and dynamic application security testing (SAST and DAST), as well as automated vulnerability scanning of dependencies and infrastructure. These automated checks should be triggered at various stages of the pipeline, such as after code commits, before deployments, and during runtime monitoring.

This proactive approach helps to identify and remediate security issues early in the development lifecycle, reducing the risk of deploying vulnerable software.
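As a taste of what an automated check looks like, here is a deliberately small secret scanner that could run after each commit. The three patterns are illustrative only, and `scan_for_secrets` is a hypothetical function; dedicated scanners ship far larger rule sets and handle many more file types.

```python
import re

# Illustrative patterns only; real scanners ship far larger rule sets.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_block": re.compile(r"-----BEGIN (?:RSA |EC )?PRIVATE KEY-----"),
    "hardcoded_password": re.compile(r"(?i)\bpassword\s*=\s*['\"][^'\"]{4,}['\"]"),
}


def scan_for_secrets(text: str):
    """Return (line number, rule name) for every suspicious line."""
    findings = []
    for number, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((number, rule))
    return findings
```

A pipeline step would fail the build whenever the returned list is non-empty, stopping a leaked credential before it reaches a shared branch.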

Responding to Security Incidents

A well-defined incident response plan is critical for handling security incidents effectively within a CD environment. This plan should include clear procedures for identifying, containing, eradicating, recovering from, and learning from security breaches. The plan should outline roles and responsibilities, communication protocols, and escalation paths. Regular security drills and simulations can help prepare the team for responding to real-world incidents.

Rapid rollback capabilities, built into the CD pipeline, are crucial for quickly mitigating the impact of a compromised release.

Security Tools and Technologies

Numerous security tools and technologies can be integrated into a CD pipeline to enhance security. Examples include SAST and DAST tools like SonarQube and OWASP ZAP, respectively, for identifying vulnerabilities in code. Dependency scanning tools, such as OWASP Dependency-Check, can analyze project dependencies for known vulnerabilities. Secret management tools like HashiCorp Vault can securely store and manage sensitive information.

Infrastructure-as-code (IaC) scanning tools, like Checkov, can identify security misconfigurations in cloud infrastructure. These tools, when integrated correctly, can significantly improve the security posture of the entire CD pipeline.

Conclusion

Architecting continuous delivery for the enterprise is a significant undertaking, but the rewards are immense. By carefully considering the organizational, technical, and security aspects, you can build a pipeline that delivers high-quality software faster, more reliably, and with greater confidence. Remember, it’s an iterative process; continuous improvement is key. Start small, focus on incremental gains, and celebrate your successes along the way.

The journey towards efficient and secure software delivery is well worth the effort!

Frequently Asked Questions

What’s the difference between Continuous Integration, Continuous Delivery, and Continuous Deployment?

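Continuous Integration (CI) focuses on frequently merging code into a shared repository and automating the build and testing process. Continuous Delivery (CD) extends this by automating the release process so that every passing build can be deployed to production at any time, with the final push left as a deliberate decision. Continuous Deployment goes further, automating the release to production itself.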

How do I get buy-in from stakeholders for a CD initiative?

Highlight the benefits – faster time to market, reduced risk, improved quality – and address their concerns about cost, complexity, and risk. Start with a small, manageable pilot project to demonstrate success.

What are some common pitfalls to avoid when implementing CD?

Ignoring security, neglecting proper testing, failing to address organizational challenges, and selecting inappropriate tools are all common mistakes. Start with a well-defined strategy and clear goals.

How do I measure the success of my CD pipeline?

Track key metrics like deployment frequency, lead time, mean time to recovery (MTTR), and change failure rate. Regularly review these metrics and adjust your approach as needed.