How to Maintain Your BigFix Inventory Environment Health

How do you keep your BigFix Inventory environment healthy? It’s a question every sysadmin wrestles with, and frankly, the answer involves more than just keeping the lights on. A healthy BigFix Inventory is the bedrock of efficient patching, proactive security, and informed decision-making: it keeps your entire IT infrastructure humming along with accurate, readily available data.

This post dives into the nitty-gritty, offering practical advice and actionable strategies to keep your BigFix environment running smoothly.

From optimizing your database and managing server resources to ensuring client connectivity and implementing robust security measures, we’ll cover all the essential aspects of maintaining a healthy BigFix inventory. We’ll also tackle troubleshooting common issues and provide you with a framework for creating a proactive maintenance plan. Get ready to transform your BigFix experience from reactive firefighting to proactive management!

Understanding BigFix Inventory Data

Keeping your BigFix Inventory environment healthy relies heavily on understanding the data it collects. Accurate and timely inventory data is the bedrock of effective IT asset management, enabling informed decisions about software licensing, security patching, and overall system health. Without a solid grasp of your inventory data, your efforts to maintain a healthy environment will be significantly hampered.

BigFix Inventory gathers a wide variety of data points, offering a comprehensive view of your IT infrastructure.

Understanding these different data types is crucial for interpreting the information effectively and proactively addressing potential issues.

BigFix Inventory Data Types

BigFix Inventory collects data across several key categories. This includes hardware information like CPU type and speed, memory capacity, and hard drive details. It also captures software inventory, detailing installed applications, their versions, and associated configurations. Operating system details, including version, service pack levels, and installed updates, are meticulously recorded. Network configurations, such as IP addresses and network adapters, are also part of the collected data.

Finally, BigFix Inventory can also collect custom data points, allowing administrators to track specific attributes relevant to their organization’s unique needs. This comprehensive approach ensures a detailed and nuanced picture of the managed assets.

The Importance of Accurate and Up-to-Date Inventory Data

Accurate and current inventory data is paramount for several reasons. First, it provides a reliable basis for software license compliance audits. Knowing exactly which software is installed on which machine allows for precise license counting, avoiding potential legal and financial penalties. Second, it is essential for effective security patching. Outdated software represents a significant security vulnerability, and accurate inventory data enables targeted patching efforts, minimizing the attack surface.

Third, accurate inventory data aids in capacity planning. By understanding the resource consumption of your existing assets, you can make informed decisions about future hardware purchases and upgrades, optimizing resource allocation. Finally, it supports effective troubleshooting and problem resolution. When issues arise, having accurate inventory data significantly speeds up the diagnostic process.

Common Data Inconsistencies and Their Impact

Several factors can contribute to data inconsistencies within BigFix Inventory. Network connectivity problems can prevent data from being reported correctly. Software conflicts or errors might lead to incomplete or inaccurate software inventory. Where manual data entry is used, typing errors can also introduce inconsistencies. The impact of these inconsistencies can be substantial.

Inaccurate license counts could result in unnecessary software purchases or substantial fines for non-compliance. Incomplete security patch data can leave systems vulnerable to exploits; for example, an outdated record of installed operating systems could lead to missed security patches that malicious actors can exploit. Inaccurate hardware information can hinder capacity planning and lead to inefficient resource allocation.

Auditing Inventory Data Quality

A regular audit process is essential to maintain the integrity of your BigFix Inventory data. This process should include several key steps. First, establish a baseline of expected data quality metrics, such as the percentage of assets with complete hardware and software inventories, the frequency of data updates, and the number of data inconsistencies identified. Second, implement automated checks to identify inconsistencies and potential errors, for example by comparing inventory data against known good data sources or by using data validation rules to flag anomalies. Third, regularly review the audit reports to identify trends and address recurring issues. Fourth, establish a clear process for resolving data inconsistencies, ensuring timely updates and corrections. Finally, document the audit process and findings, ensuring transparency and accountability.

This methodical approach ensures that your BigFix Inventory data remains reliable and supports effective IT asset management.
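The automated-check step can be sketched as a small validation pass over exported asset records. This is a minimal sketch, not a BigFix Inventory feature: the `AssetRecord` shape, the `REQUIRED_HW_FIELDS` names, and the validation rules are all hypothetical stand-ins for whatever your own export and baseline define. It reports the percentage of assets with complete inventories, which is one of the baseline metrics mentioned above.

```python
from dataclasses import dataclass, field

# Hypothetical record shape: one entry per asset, e.g. parsed from an inventory export.
@dataclass
class AssetRecord:
    name: str
    hardware: dict = field(default_factory=dict)
    software: list = field(default_factory=list)

# Fields a "complete" hardware record must contain (an assumption; adjust to your baseline).
REQUIRED_HW_FIELDS = ("cpu", "memory_gb", "disk_gb")

def validate(record: AssetRecord) -> list:
    """Return the validation problems found for one asset (empty list if clean)."""
    problems = []
    missing = [f for f in REQUIRED_HW_FIELDS if f not in record.hardware]
    if missing:
        problems.append(f"missing hardware fields: {', '.join(missing)}")
    if not record.software:
        problems.append("empty software inventory")
    memory = record.hardware.get("memory_gb")
    if memory is not None and memory <= 0:
        problems.append("non-positive memory value")
    return problems

def audit(records: list) -> dict:
    """Summarize completeness: total assets, percent clean, and per-asset problems."""
    issues = {r.name: validate(r) for r in records}
    flagged = {name: probs for name, probs in issues.items() if probs}
    total = len(records)
    complete_pct = 100.0 * (total - len(flagged)) / total if total else 0.0
    return {"total": total, "complete_pct": complete_pct, "flagged": flagged}
```

Tracking `complete_pct` from one audit to the next gives you the trend line that the review step calls for.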

Maintaining Database Health

Keeping your BigFix Inventory database healthy is crucial for maintaining the overall performance and reliability of your asset management system. A sluggish or bloated database can lead to slow query responses, inaccurate reporting, and even system instability. Proactive database maintenance is key to preventing these issues and ensuring your BigFix Inventory environment remains efficient and effective.

Database Performance Optimization

Optimizing BigFix Inventory database performance involves a multifaceted approach. Regularly analyzing query execution plans can identify bottlenecks. This involves examining the queries that are taking the longest to execute and identifying areas for improvement, such as inefficient indexing or poorly written SQL. Consider adding indexes to frequently queried columns to speed up data retrieval. Regularly reviewing and adjusting database server resource allocation, including memory, CPU, and disk I/O, is also vital.

Insufficient resources can significantly impact performance. Finally, database tuning, which involves adjusting various database parameters to optimize performance for your specific workload, is a critical ongoing task. For example, adjusting the buffer pool size can dramatically impact query speed.

Preventing Database Bloat

Database bloat, characterized by excessive storage consumption due to unused data or inefficient data structures, can significantly degrade performance. Regularly purging outdated or unnecessary data is crucial. BigFix Inventory provides tools and features for this purpose; utilize them to remove obsolete data, such as historical data beyond a defined retention period. Implement archiving strategies for less frequently accessed data to reduce the size of the active database.

Consider using database compression techniques to reduce storage requirements without compromising data accessibility. For instance, implementing row-level compression can significantly reduce the overall database size.
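Before purging or archiving anything, it helps to produce a dry-run list of which rows fall outside the retention period. This sketch shows only the cutoff logic. It assumes you have exported hypothetical `(row_id, timestamp)` pairs with timezone-aware UTC timestamps. The actual deletion should still go through BigFix Inventory’s own purge features rather than direct database edits.

```python
from datetime import datetime, timedelta, timezone
from typing import Iterable, List, Optional, Tuple

def partition_by_retention(
    rows: Iterable[Tuple[str, datetime]],
    retention_days: int,
    now: Optional[datetime] = None,
) -> Tuple[List[str], List[str]]:
    """Split (row_id, timestamp) pairs into ids to keep and ids past retention.

    Timestamps must be timezone-aware (UTC) so they compare correctly with `now`.
    """
    now = datetime.now(timezone.utc) if now is None else now
    cutoff = now - timedelta(days=retention_days)
    keep, expired = [], []
    for row_id, ts in rows:
        # Anything strictly older than the cutoff is a purge/archive candidate.
        (expired if ts < cutoff else keep).append(row_id)
    return keep, expired
```

Reviewing the `expired` list before acting on it lets you archive anything still needed for audits first.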

Database Backups and Recovery Procedures

Regular database backups are paramount for data protection and disaster recovery. Implement a robust backup strategy that includes full backups and incremental backups. Full backups provide a complete copy of the database, while incremental backups capture only the changes since the last backup, minimizing backup time and storage requirements. Test your recovery procedures regularly to ensure you can restore your database quickly and efficiently in the event of a failure.

This involves restoring a backup to a test environment and verifying data integrity. Consider using a geographically separate backup location for disaster recovery purposes, safeguarding against site-wide failures.
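The interaction between full and incremental backups determines what a restore needs. Because each incremental holds only the changes since the previous backup, restoring to a point in time needs the most recent full backup before that point plus every incremental after it. The `Backup` type below is a hypothetical catalog entry, and the sketch is independent of any particular database’s backup tooling.

```python
from dataclasses import dataclass
from datetime import datetime

@dataclass(frozen=True)
class Backup:
    taken_at: datetime
    kind: str  # "full" or "incremental"

def restore_chain(backups, target: datetime):
    """Return the backups needed to restore the database to `target`, oldest first.

    Each incremental backup holds only the changes since the previous backup, so a
    restore needs the latest full backup at or before `target` plus every
    incremental taken after it, up to `target`.
    """
    eligible = sorted((b for b in backups if b.taken_at <= target),
                      key=lambda b: b.taken_at)
    full_positions = [i for i, b in enumerate(eligible) if b.kind == "full"]
    if not full_positions:
        raise ValueError("no full backup exists at or before the target time")
    return eligible[full_positions[-1]:]
```

The longer the chain, the more pieces must all be intact, so a recovery test should restore the longest chain your schedule produces, not just the full backup.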

Routine Database Maintenance Checklist

A well-defined checklist ensures consistent and thorough database maintenance.

- Weekly Tasks: Monitor database performance metrics (CPU usage, memory consumption, disk I/O), run database integrity checks, and analyze slow-running queries.

- Monthly Tasks: Execute database statistics updates, perform database cleanup (removing outdated data), and review and adjust database server resource allocation.

- Quarterly Tasks: Restore a recent full backup to a test environment to verify recoverability, review and optimize database indexes, and analyze database space usage.

- Annual Tasks: Conduct a thorough database performance review, update database server software, and review and update the disaster recovery plan.

Server Resource Management

Keeping your BigFix Inventory server humming along requires diligent attention to its resource consumption. Ignoring resource constraints can lead to sluggish performance, impacting the accuracy and timeliness of your asset inventory data. This section outlines key resources to monitor, effective monitoring techniques, optimization strategies, and a plan for scaling your infrastructure as your needs grow.

BigFix Inventory, like any software application, relies heavily on several key server resources.

Understanding their interplay and potential bottlenecks is crucial for maintaining a healthy and responsive system. Insufficient resources can manifest as slow query responses, increased latency in data updates, and even complete system unresponsiveness.

Key Server Resources and Monitoring

Monitoring CPU usage, available memory, and disk space is essential for proactively identifying and addressing potential performance issues. High CPU utilization can indicate a heavy workload or inefficient code execution. Low available memory can cause the system to start using slower virtual memory (paging to disk), drastically slowing performance. Finally, insufficient disk space can lead to application errors and inability to write logs or new data.

Effective monitoring tools include the operating system’s built-in performance monitors (e.g., Windows Performance Monitor or Linux’s top/htop), and dedicated monitoring solutions like Nagios, Zabbix, or Prometheus. These tools allow you to set alerts based on predefined thresholds, notifying you of potential problems before they impact users. Regularly reviewing these metrics provides valuable insights into resource usage patterns and helps anticipate future resource needs.
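Dedicated tools handle this far better in production, but the core idea of a threshold alert fits in a few lines. This sketch checks disk usage with Python’s standard `shutil.disk_usage`. The path and the 80% threshold are placeholders. CPU and memory need platform-specific APIs or a third-party library such as psutil, so they are left out here.

```python
import shutil

def check_disk(path: str, threshold_pct: float) -> tuple:
    """Return (percent used, True if usage is at or above the alert threshold)."""
    usage = shutil.disk_usage(path)  # named tuple: total, used, free (bytes)
    used_pct = 100.0 * usage.used / usage.total
    return used_pct, used_pct >= threshold_pct

if __name__ == "__main__":
    # "/" and 80% are placeholders; point this at the volume holding the database.
    pct, alert = check_disk("/", 80.0)
    print(f"disk used: {pct:.1f}%" + ("  ALERT" if alert else ""))
```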

Optimizing Server Resource Allocation

Optimizing resource allocation involves a multifaceted approach. First, ensure your BigFix Inventory server has sufficient hardware resources allocated, considering the size and complexity of your inventory. Second, regularly review and remove unnecessary data, such as outdated or irrelevant asset information; this reduces the database size and improves query performance. Third, optimize your database queries and indexes, because inefficient queries place an unnecessary burden on the CPU and memory. Together, these strategies can significantly improve the efficiency of your BigFix Inventory server, leading to faster response times and improved overall system stability.

Scaling BigFix Inventory Infrastructure

As your organization grows and the number of managed assets increases, your BigFix Inventory server may require additional resources to maintain performance. Planning for scalability involves anticipating future growth and proactively adjusting your infrastructure to accommodate increasing demands. This may involve upgrading to a more powerful server, adding more RAM or disk space, or implementing a distributed architecture with multiple BigFix Inventory servers.

Regularly assessing resource usage trends and projecting future needs are critical components of a successful scaling strategy. Consider using load balancing techniques to distribute the workload across multiple servers if your growth surpasses the capacity of a single machine.

Scaling Plan Example

The following table illustrates a sample scaling plan. Adapt this to your specific environment and resource monitoring data. Remember to establish clear thresholds based on your historical usage patterns and acceptable performance levels.

| Resource | Current Usage | Threshold | Action Plan |
|---|---|---|---|
| CPU | 70% average | 85% | Monitor closely. If consistently above 85%, consider adding more CPU cores or upgrading the server. |
| Memory | 6 GB used of 8 GB (75%) | 75% | If consistently above 75%, increase RAM or optimize database queries. |
| Disk Space | 50 GB used of 100 GB (50%) | 80% | Regularly purge old data; as usage approaches 80%, upgrade to a larger disk or implement a data archiving strategy. |
| Database Connections | 20 concurrent | 50 concurrent | If consistently near 50, investigate database performance tuning or consider database replication. |
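“Consistently above” deserves a precise definition so that one spike doesn’t trigger an upgrade. One simple interpretation, assumed here, is that every sample in a recent window must exceed the threshold. The metric names and the window size are hypothetical; the thresholds mirror the example plan.

```python
from typing import Dict, List, Sequence

def consistently_above(samples: Sequence[float], threshold: float, window: int) -> bool:
    """True only if each of the last `window` samples exceeds `threshold`.

    Requiring a full window of breaches keeps a single spike from
    triggering a scaling action.
    """
    if window <= 0 or len(samples) < window:
        return False
    return all(s > threshold for s in samples[-window:])

def resources_to_scale(series: Dict[str, Sequence[float]],
                       plan: Dict[str, float], window: int = 3) -> List[str]:
    """Names of resources whose recent samples all exceed the plan threshold."""
    return sorted(name for name, limit in plan.items()
                  if consistently_above(series.get(name, []), limit, window))

# Thresholds taken from the example plan; metric names are illustrative.
PLAN = {"cpu_pct": 85.0, "memory_pct": 75.0, "disk_pct": 80.0}
```

For example, `resources_to_scale({"cpu_pct": [80, 86, 87, 90], "memory_pct": [76, 74, 80]}, PLAN)` returns `["cpu_pct"]`: the last three CPU samples all exceed 85%, while one memory sample dipped below 75%. The window length is the main tuning knob. Longer windows mean fewer false alarms but slower reactions.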

Client Health and Connectivity

Maintaining a healthy BigFix Inventory environment relies heavily on the consistent and reliable reporting of your clients. A single unresponsive client can skew your data and hinder accurate asset management. This section explores the factors impacting client health and connectivity, offering practical troubleshooting strategies and deployment best practices.

Client connectivity and reporting in BigFix Inventory depend on several interwoven factors.

Network connectivity, firewall rules, client software version, and server-side configurations all play a crucial role. Intermittent network issues, improperly configured firewalls blocking communication ports, outdated client software, or server-side problems like certificate expiry can all lead to clients failing to report or reporting incomplete data. Understanding these dependencies is key to proactively managing your inventory.
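Certificate expiry is easy to catch early if you compute how much time a certificate has left. This sketch does only the date arithmetic, using the standard library’s `ssl.cert_time_to_seconds`. How you obtain the certificate’s `notAfter` value depends on your deployment (for example, from `getpeercert()` on a verified TLS connection, or from your certificate records), and that part is outside this sketch. The 30-day warning window is an assumption.

```python
import ssl
import time
from typing import Optional

def days_until_expiry(not_after: str, now: Optional[float] = None) -> float:
    """Days left before a certificate's notAfter time (negative if already expired).

    `not_after` uses the format found in SSLSocket.getpeercert(), for example
    "Jan  5 09:34:43 2018 GMT".
    """
    expires = ssl.cert_time_to_seconds(not_after)  # seconds since the epoch, UTC
    current = time.time() if now is None else now
    return (expires - current) / 86400

def expiring_soon(not_after: str, warn_days: int = 30, now: Optional[float] = None) -> bool:
    """True if the certificate expires within `warn_days` (or has already expired)."""
    return days_until_expiry(not_after, now) <= warn_days
```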

Factors Influencing Client Connectivity and Reporting

Network connectivity is paramount. Clients must be able to reach their BigFix relay or server on the configured port (52311 by default). Firewalls on both the client and server machines must be configured to allow this communication. Additionally, proxies, VPNs, or other network intermediaries can interfere with communication if not properly configured to allow BigFix traffic. The client’s operating system and its network configuration also play a role.

For example, a client with a misconfigured DNS setting might be unable to resolve the server’s hostname. Finally, the BigFix Inventory server itself must be running and accessible. Server-side issues, such as database problems or overloaded resources, can also impact client connectivity.

Common Client-Side Issues and Troubleshooting Steps

Several common client-side issues can prevent accurate reporting. One frequent problem is an outdated or corrupted BigFix client installation. This can manifest as a failure to check in, incomplete data reporting, or even client crashes. Another common issue is a client failing to connect due to network problems. This can be caused by temporary network outages, incorrect network settings on the client, or network security policies blocking BigFix communication.

Finally, resource constraints on the client machine, such as low disk space or high CPU utilization, can also prevent the BigFix client from operating correctly.

- Outdated Client: Upgrade the BigFix client to the latest version. This often resolves many compatibility and bug-related issues.

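- Network Connectivity: Verify that the client can resolve the BigFix server’s hostname and open a TCP connection to the BigFix port; a successful ping alone does not prove that the port is open. Check firewall rules to ensure BigFix ports are open. Investigate proxy settings and VPN configurations.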

- Client Resource Constraints: Check client disk space, CPU usage, and memory. Free up resources if necessary.

- Client Logs: Examine the BigFix client logs for error messages. These logs provide valuable clues about the cause of the problem.

- Reinstall Client: As a last resort, uninstall and reinstall the BigFix client. Ensure a clean installation by removing any remaining configuration files.
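The network checks above can be sketched with Python’s standard `socket` module. The check separates DNS failures from blocked or closed ports, which ping cannot do. In the usage line, `bigfix.example.com` is a hypothetical hostname and 52311 is the default BigFix port. Substitute your own relay or server and port. Run the check from the affected client, because the client’s view of the network is what matters.

```python
import socket

def check_endpoint(host: str, port: int, timeout: float = 5.0) -> tuple:
    """Resolve `host`, then try a TCP connection to `port`.

    Returns (ok, detail). Unlike ping (ICMP), this exercises the port the
    client actually uses, so a firewall that allows ping but blocks the
    port is still detected.
    """
    try:
        infos = socket.getaddrinfo(host, port, type=socket.SOCK_STREAM)
    except socket.gaierror as exc:
        return False, f"DNS lookup for {host} failed: {exc}"
    addrs = sorted({info[4][0] for info in infos})
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True, f"{host}:{port} reachable (resolved to {', '.join(addrs)})"
    except OSError as exc:  # refused, timed out, unreachable, ...
        return False, f"{host} resolved, but TCP port {port} failed: {exc}"
```

Usage: `print(check_endpoint("bigfix.example.com", 52311))`. A DNS failure points at resolver settings. A refused or timed-out connection points at firewalls, proxies, or the service itself.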

Strategies for Ensuring Consistent Client Communication with the BigFix Server

Proactive monitoring and regular maintenance are vital for consistent client communication. Implementing a robust alerting system to notify administrators of connectivity issues is essential. This allows for swift intervention and prevents data gaps. Regularly reviewing client logs for errors and warnings provides valuable insights into potential problems. Furthermore, scheduling regular client software updates ensures that clients have the latest patches and improvements, enhancing reliability and security.

Finally, employing a well-defined client deployment and management strategy, detailed below, streamlines the process and reduces the likelihood of connectivity issues.
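A basic connectivity alert can be driven from each client’s last report time. This sketch assumes you have exported a hypothetical mapping of client name to last report timestamp (timezone-aware UTC). It returns the clients that have been silent longer than an allowed age. The threshold is yours to choose, and the alert delivery (email, ticket, chat) is not shown.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List, Optional

def stale_clients(last_reports: Dict[str, datetime], max_age: timedelta,
                  now: Optional[datetime] = None) -> List[str]:
    """Names of clients whose last report is older than `max_age`, stalest first."""
    now = datetime.now(timezone.utc) if now is None else now
    overdue = [(ts, name) for name, ts in last_reports.items() if now - ts > max_age]
    return [name for ts, name in sorted(overdue)]
```

Sorting stalest-first puts the longest-silent machines, which are the most likely to be decommissioned or badly broken, at the top of the alert.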

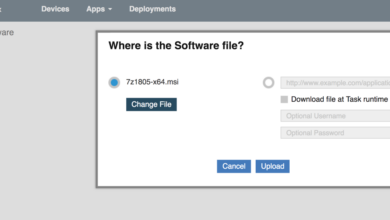

Deploying and Maintaining BigFix Clients Effectively

Effective deployment and maintenance are crucial for a healthy BigFix Inventory environment. A well-defined process ensures consistent client reporting and minimizes administrative overhead.

- Plan Deployment Strategy: Determine the best deployment method (e.g., group policy, SCCM, manual installation) based on your infrastructure.

- Prepare Client Installation Package: Create a standardized installation package that includes all necessary components and configurations.

- Configure Client Settings: Properly configure client settings, such as the BigFix server address, reporting frequency, and other relevant parameters.

- Test Deployment: Deploy the client to a test environment before rolling it out to production to identify and resolve any potential issues.

- Monitor Client Health: Regularly monitor client health and connectivity using BigFix Inventory’s reporting capabilities.

- Implement Alerting: Set up alerts to notify administrators of client connectivity issues or errors.

- Schedule Regular Updates: Establish a schedule for updating BigFix clients to the latest version.

- Document Procedures: Maintain comprehensive documentation of your client deployment and maintenance processes.

Action Site Management

Keeping your BigFix Inventory Action Sites lean and efficient is crucial for maintaining a healthy environment. Uncontrolled growth can lead to performance degradation, increased storage costs, and difficulties in troubleshooting. Regular cleanup and optimization are essential practices to prevent these issues.

Action Site cleanup involves identifying and removing obsolete or unnecessary Action Sites. This proactive approach prevents the accumulation of redundant data and improves the overall performance of your BigFix Inventory system.

A well-maintained Action Site environment simplifies reporting, analysis, and overall system management.

Identifying and Removing Obsolete Action Sites

Identifying obsolete Action Sites requires a systematic approach. Regularly review your Action Sites, paying close attention to their last used date. Sites that haven’t been utilized for an extended period (a timeframe determined by your organization’s specific needs, perhaps 6 months or a year) are prime candidates for removal. Additionally, analyze the data associated with each Action Site; if the data is no longer relevant or is duplicated elsewhere, removal is justified.

Before deleting any Action Site, back up the relevant data as a precautionary measure. This allows for recovery in case of accidental deletion or if the data is later needed.

Preventing Future Action Site Clutter

Proactive measures are key to preventing future Action Site clutter. Establish clear guidelines for Action Site creation, ensuring that only necessary sites are created. Implement a review process before creating a new Action Site to determine if an existing site can be reused or if the required data can be gathered through alternative methods. Consider implementing automated alerts that notify administrators when Action Sites haven’t been used for a specified period, triggering a review of their necessity.

This automated approach helps maintain consistent monitoring and control.

Action Site Lifecycle Management Workflow

A well-defined workflow streamlines Action Site management. This workflow should include the following steps:

1. Request: A formal request for a new Action Site should be submitted, justifying its necessity and outlining its purpose.

2. Approval: A designated individual or team approves the request, ensuring alignment with organizational standards and data management policies.

3. Creation: The Action Site is created according to established best practices.

4. Usage: The Action Site is used for its intended purpose.

5. Review: Regular reviews (e.g., monthly or quarterly) are conducted to assess the Action Site’s usage and relevance.

6. Retirement: If deemed obsolete, the Action Site is retired following a documented procedure, including data backup and final approval.

This structured approach ensures that Action Sites are managed efficiently throughout their entire lifecycle.
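The lifecycle can be enforced as a small state machine, so that a site cannot skip approval or be retired without a backup. This is a sketch of the workflow as described, not a BigFix feature. The stage names come from the steps above. The allowed transitions, including the rule that a review either returns the site to use or retires it, are an interpretation.

```python
from enum import Enum

class Stage(Enum):
    REQUEST = "request"
    APPROVAL = "approval"
    CREATION = "creation"
    USAGE = "usage"
    REVIEW = "review"
    RETIREMENT = "retirement"

# Allowed moves between stages; a review either returns the site to use or retires it.
TRANSITIONS = {
    Stage.REQUEST: {Stage.APPROVAL},
    Stage.APPROVAL: {Stage.CREATION},
    Stage.CREATION: {Stage.USAGE},
    Stage.USAGE: {Stage.REVIEW},
    Stage.REVIEW: {Stage.USAGE, Stage.RETIREMENT},
    Stage.RETIREMENT: set(),  # terminal
}

def advance(current: Stage, nxt: Stage, backup_done: bool = False) -> Stage:
    """Return the new stage, or raise ValueError if the move breaks the workflow."""
    if nxt not in TRANSITIONS[current]:
        raise ValueError(f"cannot move from {current.value} to {nxt.value}")
    if nxt is Stage.RETIREMENT and not backup_done:
        raise ValueError("back up the site's data before retirement")
    return nxt
```

Keeping the rules in one table makes the process auditable. Changing the policy, for example to allow rejected requests, means editing `TRANSITIONS` rather than scattered checks.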

Security Best Practices

Securing your BigFix Inventory environment is paramount to maintaining data integrity and preventing unauthorized access. A robust security posture involves proactive measures to mitigate potential vulnerabilities and implement strong access controls. Ignoring these best practices can expose your valuable asset inventory data to significant risk.

Potential security vulnerabilities in a BigFix Inventory environment stem from several areas, including weak authentication mechanisms, insecure network configurations, and unpatched software.

A comprehensive approach to security requires addressing each of these potential weaknesses.

BigFix Server Hardening

Securing the BigFix server itself is the cornerstone of a secure environment. This involves implementing strong passwords, enabling multi-factor authentication (MFA) wherever possible, and regularly updating the operating system and BigFix server software with the latest security patches. Regular security scans using industry-standard vulnerability scanners are crucial for identifying and remediating potential weaknesses before they can be exploited.

Restricting network access to the BigFix server to only authorized users and systems via firewalls and network segmentation is also critical. Consider disabling unnecessary services and ports to minimize the attack surface.

Client Security Configuration

BigFix clients, deployed across your managed endpoints, also represent potential entry points for malicious actors. Ensure clients are regularly updated with the latest patches and security updates. Implement strong encryption for communication between clients and the server, using protocols like HTTPS. Configure client settings to restrict access to sensitive data and actions. Regularly review client logs for suspicious activity.

Using agentless methods for discovery where possible reduces the attack surface.

Access Control and User Authentication

Implementing granular access control is essential for limiting access to sensitive data and functionalities within BigFix Inventory. Utilize role-based access control (RBAC) to assign permissions based on user roles and responsibilities. Employ strong password policies, including password complexity requirements and regular password changes. Leverage MFA to add an extra layer of security to user logins, making it significantly harder for unauthorized individuals to access the system.

Regularly audit user accounts and permissions to ensure they remain appropriate and that inactive accounts are removed.
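Role-based access control and the periodic account audit can be sketched together. The role names, permission strings, and account records below are hypothetical. They illustrate the pattern of granting permissions through roles and never directly to users, and they do not reflect BigFix Inventory’s actual role model.

```python
# Hypothetical roles and permissions; map these to whatever your deployment defines.
ROLE_PERMISSIONS = {
    "viewer": {"reports:view"},
    "operator": {"reports:view", "scans:run"},
    "admin": {"reports:view", "scans:run", "users:manage", "settings:edit"},
}

def is_allowed(user_roles, permission: str) -> bool:
    """A user holds a permission if any of their roles grants it."""
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in user_roles)

def audit_accounts(accounts: dict, max_idle_days: int) -> list:
    """Flag accounts with unknown roles or no login within `max_idle_days`.

    `accounts` maps a user name to (roles, days since last login).
    """
    findings = []
    for name in sorted(accounts):
        roles, idle_days = accounts[name]
        unknown = sorted(set(roles) - set(ROLE_PERMISSIONS))
        if unknown:
            findings.append(f"{name}: unknown role(s) {', '.join(unknown)}")
        if idle_days > max_idle_days:
            findings.append(f"{name}: inactive for {idle_days} days")
    return findings
```

Unknown roles are flagged instead of silently ignored, because a role that exists in the user directory but not in the policy usually means the two have drifted apart.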

BigFix Inventory Security Checklist

A comprehensive security checklist should be developed and regularly reviewed to ensure the ongoing security of your BigFix Inventory environment. This checklist should include:

- Regular patching of the BigFix server and clients.

- Implementation of strong passwords and MFA.

- Regular security scans for vulnerabilities.

- Network segmentation and firewall rules to restrict access.

- Regular review and audit of user accounts and permissions.

- Encryption of communication between clients and server.

- Regular review of client and server logs for suspicious activity.

- Implementation of intrusion detection and prevention systems.

- Regular backups of the BigFix Inventory database.

- Development and testing of incident response plans.

Regularly reviewing and updating this checklist will ensure that your security posture remains robust and adapts to evolving threats. Remember that security is an ongoing process, not a one-time event.

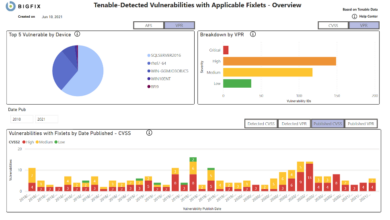

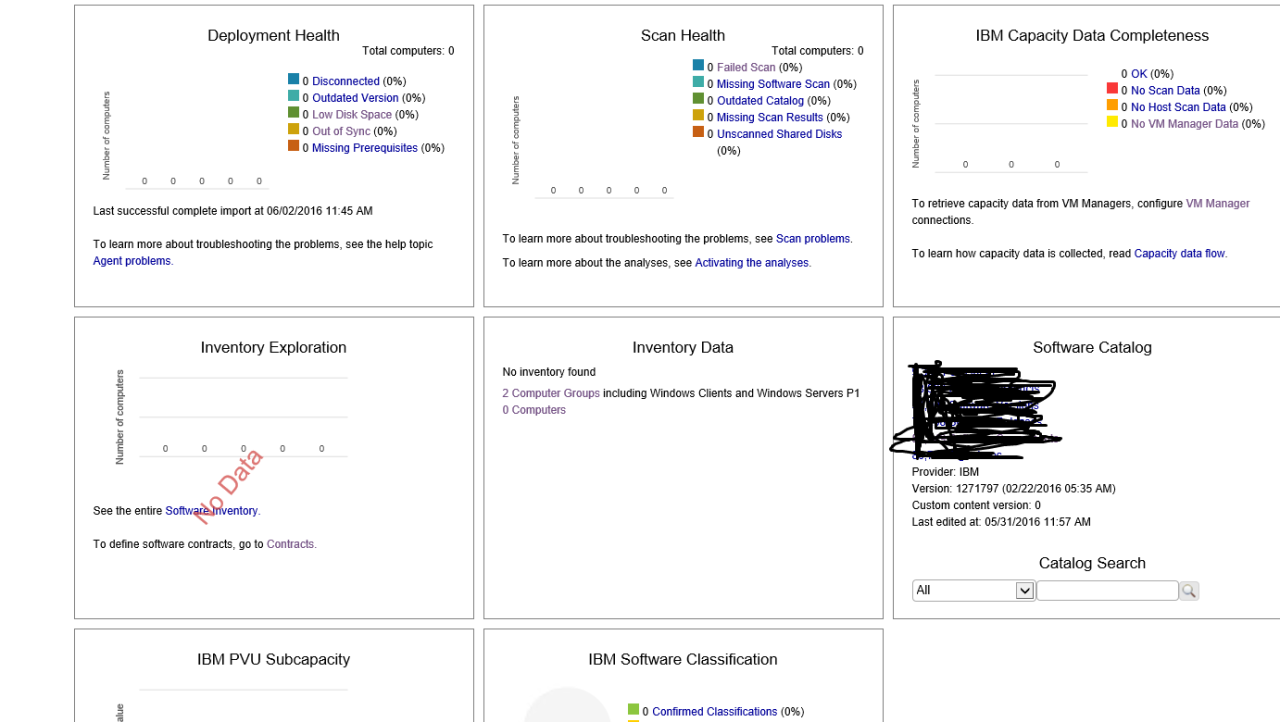

Reporting and Analysis

Regular reporting on your BigFix Inventory data is crucial for maintaining a healthy and efficient environment. Without consistent monitoring, potential problems can fester unnoticed, leading to inaccurate asset data, inefficient resource allocation, and ultimately, security vulnerabilities. Proactive reporting allows you to identify and address issues before they escalate, ensuring your IT infrastructure remains secure and well-managed.

Understanding key metrics provides a clear picture of your inventory’s health and allows for data-driven decision-making.

This helps in optimizing resource allocation, improving security posture, and streamlining IT operations.

Key Metrics to Track

Tracking several key performance indicators (KPIs) provides a comprehensive view of your BigFix Inventory’s health. These metrics should be monitored regularly to identify trends and potential issues.

- Client Connection Rate: This metric indicates the percentage of clients successfully connecting to the BigFix Inventory server. A low connection rate suggests potential network issues or problems with client configurations, hindering data collection and accuracy.

- Data Collection Rate: This shows the speed and efficiency of data collection from managed devices. A low rate indicates potential bottlenecks or problems with the data collection process, resulting in outdated and incomplete inventory data.

- Number of Unmanaged Assets: Tracking the number of unmanaged assets highlights devices missing from your inventory. This metric is vital for ensuring comprehensive visibility and control over all IT assets within your organization.

- Average Inventory Update Time: This measures the average time taken for a client to complete an inventory scan and update the database. Longer update times could indicate performance issues on either the client or server side.

- Database Size: Monitoring the database size helps in identifying potential issues with data growth and storage capacity. Rapid growth could signal inefficiencies in data management or the need for database optimization.

- Error Rate: Tracking the number of errors during data collection and processing is crucial for identifying and resolving issues affecting data accuracy and completeness.
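Several of these KPIs can be computed from one reporting period’s scan results. This sketch assumes a hypothetical `ScanResult` record per client and a list of assets known from some other source (for example, network discovery or a CMDB). Any known asset that produced no result counts as unmanaged.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Iterable, List, Optional

@dataclass
class ScanResult:
    client: str
    connected: bool
    duration_s: Optional[float]  # None if the scan did not complete
    errors: int = 0

def kpis(results: List[ScanResult], known_assets: Iterable[str]) -> dict:
    """Compute a few of the KPIs above for one reporting period."""
    total = len(results)
    connected = [r for r in results if r.connected]
    durations = [r.duration_s for r in connected if r.duration_s is not None]
    reporting = {r.client for r in results}
    return {
        "connection_rate_pct": 100.0 * len(connected) / total if total else 0.0,
        "avg_update_time_s": mean(durations) if durations else None,
        "error_count": sum(r.errors for r in results),
        "unmanaged_assets": sorted(set(known_assets) - reporting),
    }
```

Storing each period’s output gives a dashboard the history it needs to show trends, not just current values.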

Generating Insightful Reports

BigFix Inventory offers robust reporting tools to generate customized reports based on the tracked metrics. These reports can be scheduled for regular delivery, providing a continuous stream of insights into your inventory’s health. For example, you could create a report showing the number of clients with outdated software, or a report summarizing the hardware resources of your entire organization.

The flexibility of the reporting engine allows you to tailor reports to specific needs and gain deeper insights into your inventory.

Dashboard Design: Visualizing KPIs

A well-designed dashboard provides a clear and concise overview of your inventory’s health. This visual representation of key metrics allows for quick identification of potential problems and informed decision-making.

| KPI | Description | Importance |
|---|---|---|
| Client Connection Rate | Percentage of clients successfully connecting to the BigFix Inventory server. | Indicates network connectivity and client health. Low rates signal potential problems requiring investigation. |
| Data Collection Rate | Speed and efficiency of data collection from managed devices. | Ensures up-to-date inventory data for accurate reporting and decision-making. Slow rates indicate potential bottlenecks. |
| Number of Unmanaged Assets | Number of devices not included in the inventory. | Highlights security risks and gaps in asset management. High numbers indicate a need for improved discovery and onboarding processes. |
| Average Inventory Update Time | Average time for a client to update the inventory. | Indicates performance issues on the client or server side. Long update times can lead to stale data. |
| Database Size | Size of the BigFix Inventory database. | Helps anticipate storage needs and identify potential performance bottlenecks. Rapid growth could indicate inefficiencies. |
| Error Rate | Number of errors during data collection and processing. | Identifies problems affecting data accuracy and completeness. High error rates require immediate attention. |

Troubleshooting and Problem Solving

BigFix Inventory, while robust, can occasionally present challenges. Understanding common issues and effective troubleshooting techniques is crucial for maintaining a healthy and efficient environment. This section details common problems, diagnostic methods, and solutions to help you swiftly resolve issues and keep your inventory data accurate and readily accessible.

Common BigFix Inventory Issues and Resolutions

A range of problems can affect BigFix Inventory, from database performance slowdowns to client communication failures. Identifying the root cause is the first step towards a successful resolution.

- Database Performance Issues: Slow query responses or database lock contention often indicate a need for database optimization. This might involve indexing improvements, query tuning, or even hardware upgrades (more RAM, faster storage). Regularly reviewing database logs for errors and warnings is essential. For example, a large number of “deadlock” entries in the log suggests concurrency issues requiring database schema adjustments or application code changes to reduce contention.

- Client Communication Failures: Clients failing to report inventory data often stem from network connectivity problems (firewalls, proxies, DNS issues), incorrect client configuration, or server-side certificate problems. Checking client logs for error messages and verifying network connectivity are crucial first steps. For example, a client might be unable to reach the server due to a misconfigured proxy server address in its settings.

- Data Synchronization Problems: Discrepancies between reported and expected inventory data can result from various issues, including incorrect data collection rules, client-side issues preventing data uploads, or problems with the BigFix Inventory server itself. Comparing the expected data with the actual data reported helps pinpoint the source of the inconsistency. A common scenario is a missing software package due to a faulty detection rule in the BigFix Inventory client.

- Server-Side Errors: Errors on the BigFix Inventory server, often logged in server-side logs, can manifest in various ways, including data corruption, application crashes, or service unavailability. Regular server maintenance, including backups and updates, helps prevent such errors. For instance, insufficient disk space can lead to server crashes and data loss.

Diagnosing and Resolving Performance Bottlenecks

Performance bottlenecks in BigFix Inventory can significantly impact its efficiency. Identifying and addressing these bottlenecks is vital for maintaining optimal performance.

Several methods can be employed to diagnose performance issues. Analyzing server logs for error messages and performance metrics provides valuable insights into potential bottlenecks. Using database monitoring tools to track query execution times and resource usage helps identify slow-running queries. Profiling the BigFix Inventory application itself can pinpoint performance hotspots within the application code. For example, a slow-running query might be identified by a database monitoring tool showing consistently high execution times for a specific query, suggesting the need for query optimization or index creation.
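Once query timings are exported from your database’s monitoring or query-statistics views, ranking them is straightforward. This sketch assumes hypothetical `(query_id, elapsed_ms)` samples and ranks queries by mean execution time, keeping only those above a threshold you choose.

```python
from collections import defaultdict
from statistics import mean

def slowest_queries(samples, threshold_ms: float, top: int = 5):
    """Rank queries by mean execution time, keeping those above `threshold_ms`.

    `samples` is an iterable of (query_id, elapsed_ms) pairs. Returns a list of
    (query_id, mean_ms, executions), slowest first.
    """
    by_query = defaultdict(list)
    for query_id, elapsed_ms in samples:
        by_query[query_id].append(elapsed_ms)
    stats = [(mean(times), len(times), qid) for qid, times in by_query.items()]
    slow = sorted((s for s in stats if s[0] > threshold_ms), reverse=True)
    return [(qid, avg, n) for avg, n, qid in slow[:top]]
```

Mean time finds individually slow queries. Ranking by total time (mean multiplied by executions) instead surfaces moderately slow queries that run so often that they cost more overall, and both views are worth checking before you add an index.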

Troubleshooting Client Connectivity Problems

Client connectivity problems are among the most common issues encountered in BigFix Inventory deployments. Effective troubleshooting requires a systematic approach.

A decision tree approach is helpful:

- Verify Network Connectivity: Can the client ping the BigFix Inventory server? Is there a firewall blocking communication? Are there any network segmentation issues preventing communication?

- Check Client Configuration: Are the server address, port, and other settings in the client configuration file correct? Is the client properly registered with the server?

- Examine Client Logs: Review the client logs for error messages indicating connectivity problems. These messages often pinpoint the root cause of the issue.

- Inspect Server Logs: Check the server logs for any errors related to client communication or authentication. This can reveal server-side issues affecting client connectivity.

- Verify Certificates: Ensure that the client has the necessary certificates to communicate securely with the server. Certificate problems are a common cause of connectivity failures.
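Working through these checks in a fixed order and stopping at the first failure is easy to automate. In this sketch, each step is a named, hypothetical check function that returns `None` on success or a failure message. Wiring the steps to real tests (a port check, a configuration comparison, a log search) is left to your environment.

```python
from typing import Callable, List, Optional, Tuple

# A step is (name, check); the check returns None on success or a failure message.
Step = Tuple[str, Callable[[], Optional[str]]]

def first_failure(steps: List[Step]) -> Optional[Tuple[str, str]]:
    """Run checks in order and stop at the first one that fails."""
    for name, check in steps:
        problem = check()
        if problem is not None:
            return name, problem
    return None
```

Ordering matters: cheap, foundational checks such as network reachability come first, so later steps are not blamed for a problem that lies further down the stack.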

Troubleshooting Decision Tree

The following quick-reference table maps common problems to their likely causes and first-line solutions.

| Problem | Possible Cause | Solution |
|---|---|---|
| Slow Database Queries | Lack of indexes, poorly written queries | Add indexes, optimize queries |
| Client Reporting Failures | Network connectivity issues, incorrect client configuration | Check network, verify client settings |
| Data Inconsistency | Data collection rule errors, client-side issues | Review data collection rules, investigate client problems |
| Server Errors | Insufficient disk space, application errors | Increase disk space, review server logs |

Epilogue

Maintaining a healthy BigFix Inventory environment isn’t a one-time task; it’s an ongoing commitment. By diligently following the strategies outlined in this post (regular database maintenance, optimized server resource allocation, proactive client management, and a robust security posture), you’ll significantly reduce the risk of downtime, improve the accuracy of your asset inventory, and ultimately gain greater control over your IT infrastructure.

Remember, a healthy BigFix environment is a happy, productive one. So, roll up your sleeves, put these tips into action, and enjoy the peace of mind that comes with a well-managed system!

FAQ Overview

What are the common signs of a BigFix inventory health problem?

Slow report generation, frequent client connection failures, high database size, and inaccurate asset data are all red flags.

How often should I back up my BigFix database?

Implement a regular backup schedule, ideally daily, and consider incremental backups for efficiency.

How can I improve client reporting accuracy?

Ensure clients have proper network connectivity, are correctly configured, and regularly check for and address any reported client-side errors.

What are some key metrics to track for BigFix health?

Monitor database size, CPU and memory utilization, client connection success rate, and the number of outstanding actions.