How to Maximize the Effectiveness of Your Dynamic Testing Policies

How to maximize the effectiveness of your dynamic testing policies? It’s a question that keeps software developers, testers, and project managers up at night. We’re talking about making sure your software is rock-solid, secure, and performs flawlessly under pressure – and doing it efficiently. This isn’t just about ticking boxes; it’s about building a robust testing strategy that actually improves your product and saves you time and money in the long run.

Let’s dive in and explore how to make your dynamic testing a powerful asset.

Dynamic testing, unlike its static counterpart, involves actually running your software and observing its behavior under real-world (or simulated) conditions. This allows you to catch bugs and performance issues that simply wouldn’t surface during static code analysis. We’ll cover everything from choosing the right tools and techniques to integrating dynamic testing into your development lifecycle. Get ready to level up your testing game!

Defining Dynamic Testing Policies

Dynamic testing policies are crucial for ensuring software quality and reliability. A well-defined policy outlines the processes, methodologies, and tools used to verify the functionality of a software application during its runtime. This contrasts with static testing, which examines the code without execution. A robust policy ensures efficient resource allocation and effective bug detection, ultimately leading to a higher-quality product.

Core Components of a Robust Dynamic Testing Policy

A robust dynamic testing policy encompasses several key components. First, it should clearly define the scope of testing, specifying which parts of the application will undergo dynamic testing and the specific functionalities to be examined. Second, it should outline the testing environment, including hardware and software specifications, network configurations, and data sets. Third, the policy needs to detail the testing methodologies to be employed, specifying the types of dynamic tests to be conducted (e.g., functional, performance, security).

Finally, it should define the reporting and documentation procedures, outlining how test results will be recorded, analyzed, and communicated to stakeholders. These components work together to create a structured and effective testing process.

Dynamic vs. Static Testing Methodologies

Dynamic and static testing represent distinct approaches to software quality assurance. Static testing involves analyzing the code without execution, using methods like code reviews and static analysis tools to identify potential defects. In contrast, dynamic testing involves executing the code and observing its behavior under various conditions. Dynamic testing provides a more realistic assessment of the application’s performance and functionality, revealing defects that might be missed by static analysis.

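Static testing is generally faster and cheaper because nothing has to be built, deployed, or executed. Dynamic testing, however, exercises actual runtime behavior and exposes errors, such as failures under load or with unexpected input, that static analysis tends to miss. The two approaches complement each other.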

Examples of Dynamic Testing Types and Their Applications

Several dynamic testing types exist, each serving a specific purpose. Functional testing verifies that the application meets its specified requirements. For example, testing a login function would involve verifying that valid credentials grant access while invalid credentials are rejected. Performance testing assesses the application’s responsiveness, stability, and scalability under different load conditions. A website’s performance testing might involve simulating a large number of concurrent users to assess its ability to handle high traffic.

Security testing aims to identify vulnerabilities and weaknesses that could be exploited by malicious actors. This could include penetration testing, which attempts to breach the application’s security measures. Usability testing focuses on the user experience, evaluating the ease of use and intuitiveness of the application. For instance, usability testing might involve observing users interacting with the application to identify areas of confusion or difficulty.
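
The login example above can be sketched as a small functional test. This is a minimal illustration, not a reference implementation: the `login` function and the in-memory `_USERS` store are hypothetical stand-ins for the real application, which a genuine functional test would exercise through its UI or API.

```python
import hashlib
import hmac

# Hypothetical in-memory user store standing in for the real system under test.
# Unsalted SHA-256 is used only to keep the sketch short; it is not suitable
# for storing real passwords.
_USERS = {"alice": hashlib.sha256(b"correct-horse").hexdigest()}


def login(username: str, password: str) -> bool:
    """Return True only if the user exists and the password matches."""
    stored = _USERS.get(username)
    if stored is None:
        return False
    supplied = hashlib.sha256(password.encode("utf-8")).hexdigest()
    # Constant-time comparison avoids leaking information through timing.
    return hmac.compare_digest(stored, supplied)


def test_valid_credentials_grant_access():
    assert login("alice", "correct-horse")


def test_wrong_password_is_rejected():
    assert not login("alice", "wrong-password")


def test_unknown_user_is_rejected():
    assert not login("mallory", "correct-horse")


if __name__ == "__main__":
    # Run the checks directly; a test runner such as pytest would also
    # discover the test_* functions above.
    test_valid_credentials_grant_access()
    test_wrong_password_is_rejected()
    test_unknown_user_is_rejected()
    print("all login checks passed")
```

Each test covers one expected behavior, so a failure points directly at the requirement that was violated.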

Comparison of Dynamic Testing Approaches

The choice of dynamic testing approach depends on various factors, including project constraints and priorities. The following table compares several common approaches based on cost, speed, and accuracy.

| Testing Approach | Cost | Speed | Accuracy |
|---|---|---|---|
| Functional Testing | Medium | Medium | Medium |
| Performance Testing | High | Slow | High |
| Security Testing | High | Slow | High |
| Usability Testing | Medium | Medium | Medium |

Selecting Appropriate Testing Tools and Techniques

Choosing the right tools and techniques for dynamic testing is crucial for maximizing the effectiveness of your policies. The selection process should be driven by your specific project needs, considering factors like the application’s architecture, scale, and the types of dynamic tests required. A well-informed decision here can significantly impact the efficiency and accuracy of your testing efforts, leading to a more robust and reliable application.

Selecting suitable dynamic testing tools requires a careful evaluation of several factors. First, identify the specific testing needs of your project. Are you primarily concerned with performance, security, or load capacity? The answer to this question will significantly narrow down your options. Second, consider the size and complexity of your application. A simple web application might only require basic tools, while a large, complex system may necessitate more sophisticated solutions. Finally, factor in your budget and the technical expertise of your team. Some tools are open-source and free, while others require significant investment. The complexity of the tool should also align with your team’s skillset to ensure effective utilization.

Tool Selection Based on Project Needs

The selection of dynamic testing tools should directly correlate with the project’s specific requirements. For instance, a project focused on e-commerce with high transaction volumes would prioritize load testing tools capable of simulating thousands of concurrent users. Conversely, a project emphasizing data security would benefit from tools specializing in vulnerability scanning and penetration testing. Consider the following: if your project involves a microservices architecture, tools capable of testing individual services independently and as a whole are essential.

For legacy systems, tools that can integrate with existing infrastructure and testing processes are crucial. Understanding these dependencies and prioritizing them during the selection process is key to success.

Automation Frameworks for Dynamic Testing

Different automation frameworks offer varying advantages and disadvantages. For example, Selenium, a popular framework for UI testing, provides excellent browser compatibility and supports multiple programming languages, but can be complex to set up and maintain, especially for large-scale projects. Cypress, on the other hand, is known for its ease of use and developer-friendly features, but might lack the extensive browser support of Selenium.

The choice often depends on team expertise, project complexity, and the desired level of integration with other tools. A smaller team might prefer Cypress’s simplicity, while a larger team with diverse skill sets might find Selenium’s flexibility more advantageous. Frameworks like JMeter are specifically designed for performance and load testing and are excellent choices for those scenarios.

Comparison of Dynamic Testing Techniques

Load testing simulates high user traffic to assess the application’s ability to handle peak demands. Performance testing measures response times, resource utilization, and overall system efficiency under various conditions. Security testing identifies vulnerabilities and weaknesses that could be exploited by malicious actors. These techniques are not mutually exclusive; they often complement each other. For example, load testing can uncover a performance bottleneck, such as an endpoint that degrades sharply under modest traffic, that also represents a denial-of-service risk.

A comprehensive dynamic testing strategy will typically incorporate all three techniques, each contributing to a holistic view of the application’s robustness and reliability.
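
To make the idea of simulated load concrete, here is a rough sketch using only the Python standard library. The `handle_request` function is a hypothetical stand-in for a call to the system under test, and the 1 ms sleep is an assumed latency; dedicated tools such as JMeter or k6 would be used for real load tests.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request(payload: int) -> int:
    """Hypothetical stand-in for one request to the system under test."""
    time.sleep(0.001)  # assumed I/O latency, for illustration only
    return payload * 2


def timed_call(payload: int) -> float:
    """Return the wall-clock duration of a single request in seconds."""
    start = time.perf_counter()
    handle_request(payload)
    return time.perf_counter() - start


def run_load(concurrent_users: int, requests_per_user: int) -> dict:
    """Issue requests from a pool of worker threads and summarize latency."""
    total = concurrent_users * requests_per_user
    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        latencies = sorted(pool.map(timed_call, range(total)))
    return {
        "requests": total,
        "median_s": statistics.median(latencies),
        # Simple nearest-rank style estimate of the 95th percentile.
        "p95_s": latencies[int(0.95 * (total - 1))],
        "max_s": latencies[-1],
    }


if __name__ == "__main__":
    print(run_load(concurrent_users=10, requests_per_user=5))
```

Reporting percentiles rather than only an average makes slow outliers visible, which is usually what a load test is trying to find.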

Open-Source and Commercial Dynamic Testing Tools

Choosing between open-source and commercial tools involves weighing cost against features and support. Open-source tools often provide excellent functionality but may require more technical expertise to set up and maintain. Commercial tools typically offer more comprehensive support and features but come with a price tag.

- Open-Source Tools:

  - JMeter: A popular open-source tool for performance and load testing, known for its versatility and extensive features. It allows for simulating a large number of users and analyzing performance metrics.

  - Selenium: A widely used framework for automating web browser interactions, useful for functional and regression testing. It supports multiple programming languages and integrates well with other tools.

  - k6: A modern open-source load testing tool, designed for developers and focused on ease of use and integration with CI/CD pipelines.

- Commercial Tools:

  - LoadView: A cloud-based load testing platform that offers scalability and real-browser testing capabilities. It simplifies load testing by providing an intuitive interface and detailed performance reports.

  - BlazeMeter: A comprehensive performance testing platform that integrates with JMeter and other open-source tools. It offers advanced features such as real-user monitoring and global load testing.

  - LoadRunner (formerly HP LoadRunner, now part of OpenText): A long-standing, industry-standard performance testing tool, known for its robustness and comprehensive features, though it often comes with a higher price point and a steeper learning curve.

Optimizing Test Coverage and Efficiency

Dynamic testing, while powerful, can quickly become unwieldy without a strategic approach to coverage and efficiency. Failing to optimize these areas can lead to wasted resources, missed defects, and ultimately, a compromised product. This section focuses on practical strategies to maximize the impact of your dynamic testing efforts.

Maximizing Test Coverage in Dynamic Testing

Comprehensive test coverage is paramount in dynamic testing. This involves ensuring all critical functionalities, potential failure points, and diverse user scenarios are adequately tested. Focusing on high-risk areas first ensures that the most impactful bugs are identified early. This approach minimizes the cost and effort associated with fixing issues later in the development lifecycle. Ignoring areas with high-risk potential can lead to significant problems down the road.

- Prioritize testing of core functionalities: These are the essential features of the application without which the system is largely unusable.

- Focus on areas with a history of defects: Past performance indicates areas prone to errors. Regression testing of these areas is crucial.

- Address complex logic and integrations: These areas are frequently sources of unexpected behavior and interactions.

- Test edge cases and boundary conditions: These scenarios often reveal hidden vulnerabilities.

- Test at multiple levels: Combining unit, integration, system, and user acceptance testing ensures a more thorough evaluation than any single level can provide.
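
The point about edge cases and boundary conditions can be illustrated with a small boundary value sketch. The `is_valid_quantity` rule (an order quantity between 1 and 100) is a hypothetical example chosen for illustration, not something taken from a specific application.

```python
def boundary_values(minimum: int, maximum: int) -> list[int]:
    """Return values just outside, on, and just inside an inclusive range."""
    if minimum > maximum:
        raise ValueError("minimum must not exceed maximum")
    candidates = [
        minimum - 1, minimum, minimum + 1,
        maximum - 1, maximum, maximum + 1,
    ]
    # dict.fromkeys removes duplicates while preserving order, which matters
    # for very narrow ranges where the candidates overlap.
    return list(dict.fromkeys(candidates))


def is_valid_quantity(quantity: int) -> bool:
    """Hypothetical rule under test: quantity must be between 1 and 100."""
    return 1 <= quantity <= 100


if __name__ == "__main__":
    for value in boundary_values(1, 100):
        print(value, is_valid_quantity(value))
```

Testing the values on either side of each limit tends to catch off-by-one mistakes that mid-range inputs never reach.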

Prioritizing Test Cases for Improved Efficiency

Effective test case prioritization is key to maximizing efficiency. Rather than a linear approach, prioritize tests based on risk, impact, and cost. This means focusing on the most critical areas first, those that are likely to cause the most damage if they fail, and those that are most costly to fix later.

- Risk-based prioritization: Tests covering high-risk functionalities should be executed first.

- Impact-based prioritization: Tests affecting a large number of users or critical business processes should take precedence.

- Cost-based prioritization: Tests that are expensive or time-consuming to execute should be prioritized based on their risk and impact.
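
One way to combine these three criteria is a simple score. The sketch below is an assumption for illustration: the 1 to 5 scales, the `PlannedTest` class, and the formula (risk times impact, divided by execution cost) are hypothetical choices, and teams often weight these factors differently.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlannedTest:
    name: str
    risk: int            # 1 (low) to 5 (high) likelihood of failure
    impact: int          # 1 (low) to 5 (high) damage if it fails
    cost_minutes: float  # rough time needed to execute the test


def priority_score(test: PlannedTest) -> float:
    """Higher score means run earlier. The formula is an illustrative choice."""
    return test.risk * test.impact / max(test.cost_minutes, 1.0)


def prioritize(tests: list[PlannedTest]) -> list[PlannedTest]:
    """Return tests ordered from highest to lowest priority score."""
    return sorted(tests, key=priority_score, reverse=True)


# Hypothetical backlog used only to demonstrate the ordering.
BACKLOG = [
    PlannedTest("payment_processing", risk=5, impact=5, cost_minutes=10),
    PlannedTest("login", risk=4, impact=5, cost_minutes=2),
    PlannedTest("avatar_upload", risk=2, impact=1, cost_minutes=5),
    PlannedTest("full_regression_report", risk=3, impact=3, cost_minutes=60),
]

if __name__ == "__main__":
    for test in prioritize(BACKLOG):
        print(f"{test.name}: {priority_score(test):.2f}")
```

Dividing by cost favors cheap, high-value tests; a team that wants expensive but critical tests to run first could drop the divisor and use cost only as a tie-breaker.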

Effective Test Data Management Techniques

Managing test data effectively is crucial for the success of dynamic testing. Poorly managed data can lead to inaccurate results, wasted time, and unreliable test outcomes. Effective strategies focus on creating realistic and representative data sets while ensuring data security and compliance.

- Data Subsetting: Create smaller, representative subsets of the full production data to reduce testing time and resources.

- Data Masking: Protect sensitive data by replacing real values with synthetic equivalents while maintaining data integrity for testing purposes.

- Data Generation: Utilize tools to generate realistic test data, ensuring sufficient volume and variation to cover various scenarios.

- Data Virtualization: Access and manipulate data without physically copying or moving it, reducing storage and management overhead.
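
Data masking and data subsetting from the list above can be sketched in a few lines. The record layout, the `example.test` domain, and the salt value are assumptions made for illustration; salted hashing of low-entropy values is pseudonymization rather than full anonymization, so real compliance requirements may call for stronger measures.

```python
import hashlib
import random


def mask_email(email: str, salt: str = "test-env-salt") -> str:
    """Replace an email with a deterministic synthetic address."""
    digest = hashlib.sha256((salt + email.lower()).encode("utf-8")).hexdigest()
    # The same input always maps to the same output, so joins between
    # masked tables keep working.
    return f"user_{digest[:12]}@example.test"


def mask_record(record: dict) -> dict:
    """Return a copy of the record with sensitive fields masked."""
    masked = dict(record)
    masked["email"] = mask_email(record["email"])
    masked["name"] = "REDACTED"
    return masked


def subset(records: list[dict], fraction: float, seed: int = 42) -> list[dict]:
    """Return a reproducible random sample of the records."""
    if not records:
        return []
    size = min(len(records), max(1, round(len(records) * fraction)))
    return random.Random(seed).sample(records, size)


# Hypothetical production-like rows used only for demonstration.
CUSTOMERS = [
    {"name": "Alice", "email": "alice@example.com", "plan": "pro"},
    {"name": "Bob", "email": "bob@example.com", "plan": "free"},
    {"name": "Carol", "email": "carol@example.com", "plan": "pro"},
    {"name": "Dave", "email": "dave@example.com", "plan": "free"},
]

if __name__ == "__main__":
    for row in subset(CUSTOMERS, fraction=0.5):
        print(mask_record(row))
```

Fixing the random seed keeps the subset stable between runs, which makes test failures easier to reproduce.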

Dynamic Testing Workflow

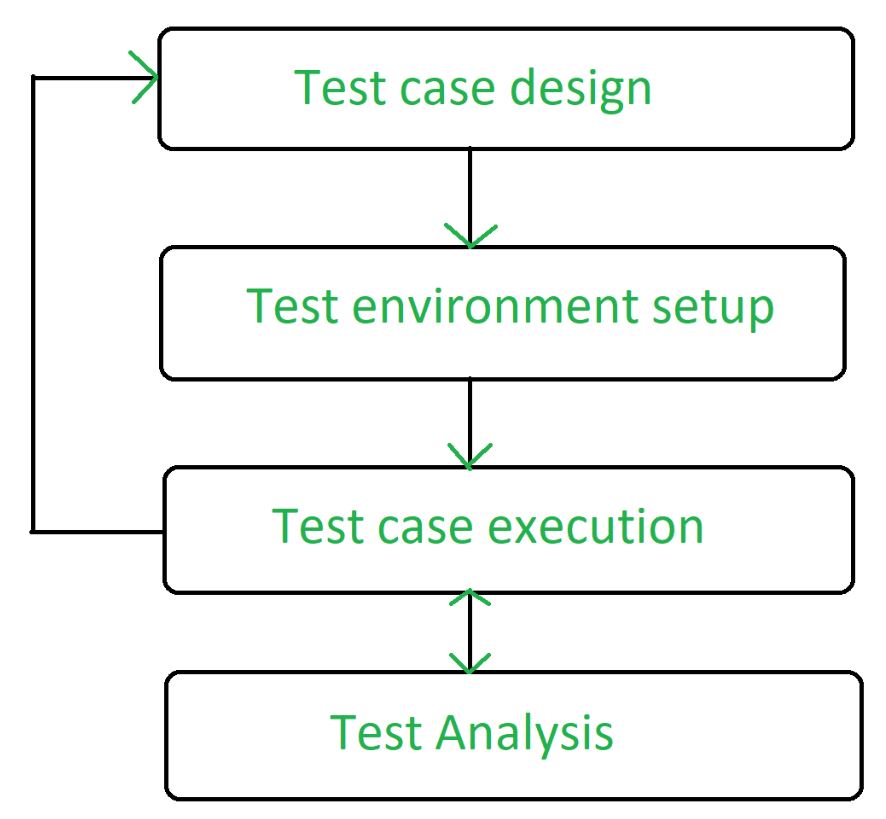

A typical dynamic testing workflow runs as follows. It starts with Test Planning, which branches into three preparatory activities: Test Case Design, Test Environment Setup, and Test Data Preparation. These converge on Test Execution, which feeds both Defect Reporting and Test Result Analysis. Defect Reporting loops back to Test Case Design so that fixes can be retested and additional tests added, while Test Result Analysis leads to Test Reporting, which concludes the workflow.

The workflow is iterative: feedback loops connect the stages, and each stage depends on the successful completion of the ones before it. A clear understanding of this sequence is crucial for managing and optimizing dynamic testing efforts.

Analyzing Test Results and Reporting

Dynamic testing, while crucial for ensuring software quality, is only half the battle. The other half lies in effectively analyzing the resulting data and communicating your findings clearly and concisely. Without proper analysis and reporting, valuable insights can be missed, leading to potential bugs slipping into production and impacting the user experience. This section focuses on best practices for extracting actionable information from your dynamic testing results.

Analyzing test results involves more than just identifying whether tests passed or failed. It’s about understanding why tests failed, pinpointing the root causes, and prioritizing issues based on their severity and impact. This process requires a systematic approach, leveraging various tools and techniques to gain a comprehensive view of your software’s performance and stability.

Identifying Potential Issues from Test Results

The first step is to meticulously examine the test logs and reports generated during the dynamic testing process. This involves looking beyond simple pass/fail indicators to delve into detailed error messages, stack traces, and performance metrics. For example, a failed test might indicate a specific function is not behaving as expected, or a performance test might reveal unacceptable response times under load.

Analyzing these details helps in identifying potential issues such as memory leaks, race conditions, concurrency problems, or security vulnerabilities. By correlating failures with specific code sections or system components, developers can efficiently focus their debugging efforts. A common tool used is a test management system, which allows for detailed logging and traceability of each test execution and its outcome.
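
Correlating failures with components can start with something as simple as grouping the raw results. The result records below, including their field names, are hypothetical; real test runners and test management systems export their own formats.

```python
from collections import Counter, defaultdict

# Hypothetical exported results; field names are assumptions for this sketch.
RESULTS = [
    {"test": "test_login", "status": "failed", "component": "auth", "error": "TimeoutError"},
    {"test": "test_logout", "status": "passed", "component": "auth", "error": None},
    {"test": "test_search", "status": "failed", "component": "catalog", "error": "AssertionError"},
    {"test": "test_token_refresh", "status": "failed", "component": "auth", "error": "TimeoutError"},
]


def summarize_failures(results: list[dict]) -> tuple[Counter, dict]:
    """Count failures per error type and list failing tests per component."""
    failures = [r for r in results if r["status"] == "failed"]
    by_error = Counter(r["error"] for r in failures)
    by_component = defaultdict(list)
    for failure in failures:
        by_component[failure["component"]].append(failure["test"])
    return by_error, dict(by_component)


if __name__ == "__main__":
    errors, components = summarize_failures(RESULTS)
    print("failures by error type:", errors.most_common())
    print("failing tests by component:", components)
```

A cluster of identical errors in one component, as with the two timeouts in `auth` here, usually suggests a single root cause rather than several independent bugs.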

Visualizing Test Results

Effective visualization significantly enhances the understanding and interpretation of dynamic test results. Various methods can be employed, depending on the nature of the data and the target audience. For example, dashboards can provide a high-level overview of overall test success rates, highlighting critical areas needing attention. Charts, such as bar charts illustrating the frequency of different error types or line graphs showcasing performance trends over time, can provide valuable insights.

Heatmaps can visually represent code coverage, indicating areas of the codebase that have been thoroughly tested and those that require further attention. Using these visual aids makes complex data more accessible and easier to interpret, promoting better collaboration and faster decision-making.

Creating Concise and Informative Test Reports

Test reports should be concise, informative, and easy to understand for both technical and non-technical audiences. They should provide a summary of the testing process, including the scope, objectives, and methodology employed. A clear presentation of test results, including pass/fail rates, identified defects, and their severity levels, is essential. Furthermore, the report should offer recommendations for addressing the identified issues, along with an assessment of the overall software quality.

Including visual representations of the results, as mentioned previously, significantly improves the report’s readability and impact. The report should also clearly state the testing environment and any limitations or assumptions made during the testing process.

Organizing Test Results in a Clear and Actionable Manner

Organizing test results in a clear and actionable manner is crucial for effective communication and efficient problem-solving. A well-structured table can significantly aid in this process. The following example illustrates how to present key information concisely:

| Test Case ID | Test Description | Status | Severity |
|---|---|---|---|
| TC_001 | Login Functionality | Failed | Critical |
| TC_002 | User Registration | Passed | N/A |
| TC_003 | Product Search | Failed | Major |
| TC_004 | Payment Processing | Passed | N/A |

This table provides a clear overview of the test results, allowing stakeholders to quickly identify critical issues requiring immediate attention. Additional columns can be added to include details such as the error message, assigned developer, and resolution status. This structured approach facilitates efficient tracking of issues and ensures that necessary actions are taken promptly.
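
The same table can be turned into a triage list in code. This is only a sketch: the `ResultRow` class and the severity ranking (including a "Minor" level that does not appear in the table) are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Optional

# Lower rank means more urgent. "Minor" is an assumed extra level.
SEVERITY_RANK = {"Critical": 0, "Major": 1, "Minor": 2}


@dataclass(frozen=True)
class ResultRow:
    case_id: str
    description: str
    status: str
    severity: Optional[str]  # None for passed tests


# Rows mirror the example table above.
ROWS = [
    ResultRow("TC_001", "Login Functionality", "Failed", "Critical"),
    ResultRow("TC_002", "User Registration", "Passed", None),
    ResultRow("TC_003", "Product Search", "Failed", "Major"),
    ResultRow("TC_004", "Payment Processing", "Passed", None),
]


def triage(rows: list[ResultRow]) -> list[ResultRow]:
    """Return failed rows only, most severe first."""
    failed = [row for row in rows if row.status == "Failed"]
    # Unknown severities sort after all known ones.
    return sorted(failed, key=lambda row: SEVERITY_RANK.get(row.severity, len(SEVERITY_RANK)))


if __name__ == "__main__":
    for row in triage(ROWS):
        print(f"{row.case_id} [{row.severity}] {row.description}")
```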

Integrating Dynamic Testing into the Software Development Lifecycle (SDLC)

Integrating dynamic testing effectively into your SDLC is crucial for delivering high-quality software. The timing and approach to integration vary depending on the chosen SDLC model, but the overarching goal remains consistent: to identify and address vulnerabilities and performance bottlenecks early in the development process. This proactive approach minimizes costly fixes later and improves the overall reliability and security of the final product.

The optimal integration points for dynamic testing differ significantly between Agile and Waterfall methodologies. In Waterfall, its integration is more structured and occurs at specific phases. Agile, with its iterative nature, allows for more continuous integration of dynamic testing.

Dynamic Testing Integration in Waterfall SDLC

In a Waterfall model, dynamic testing is typically concentrated in the testing phase, which follows the completion of development. Because dynamic testing needs something executable, earlier checks are limited to prototypes or early builds, which can still be exercised after the design phase to validate architectural decisions and surface potential vulnerabilities. A thorough dynamic testing suite is then executed before deployment. This approach, while less flexible than Agile integration, ensures a comprehensive test before release, minimizing the risk of critical issues surfacing in production.

For example, a penetration test against an early prototype can probe the robustness of the security architecture. Performance testing would then be a major component of the final testing phase.

Dynamic Testing Integration in Agile SDLC

Agile’s iterative nature lends itself well to continuous integration of dynamic testing. Each sprint should incorporate dynamic testing, with automated tests running as part of the CI/CD pipeline. This allows for rapid feedback and early detection of issues, enabling quicker resolution and minimizing the risk of accumulating technical debt. For instance, automated security scans can be integrated after each sprint, providing immediate feedback on any newly introduced vulnerabilities.

Similarly, performance tests can be run frequently to ensure the application maintains acceptable performance levels as new features are added.

Benefits of CI/CD Pipelines for Dynamic Testing

Continuous Integration and Continuous Delivery (CI/CD) pipelines automate the process of building, testing, and deploying software. Integrating dynamic testing into a CI/CD pipeline provides several key advantages. Firstly, it enables automated execution of tests, saving significant time and resources compared to manual testing. Secondly, it allows for early detection of bugs and vulnerabilities, reducing the cost of fixing them later in the development cycle.

Finally, it fosters a culture of continuous improvement, as feedback from dynamic tests is readily available and can be used to improve the development process. For example, a CI/CD pipeline could automatically run unit tests, integration tests, and security scans after each code commit, providing immediate feedback to developers.

Examples of Dynamic Testing Integration in SDLC Phases

Effective integration requires a tailored approach for each SDLC phase. The following examples illustrate this:

- Requirements Gathering: Defining testability criteria and security requirements early on ensures that dynamic testing is considered from the outset. This can involve agreeing on measurable acceptance criteria and flagging likely security risks so that mitigations can be planned during design.

- Design: Reviewing the design for potential vulnerabilities and performance bottlenecks before implementation can significantly reduce the effort required during later testing phases. This proactive approach minimizes rework and enhances the overall quality.

- Development: Integrating automated security scans and performance tests into the development process allows for continuous monitoring of code quality and the early identification of issues.

- Testing: Performing comprehensive dynamic testing, including load testing, security testing, and performance testing, is essential to ensure the quality and stability of the software before deployment.

- Deployment: Monitoring the application’s performance and security in the production environment allows for continuous improvement and the early detection of issues that may arise after deployment.

Diagram: Integrating Dynamic Testing into a CI/CD Pipeline

The pipeline can be pictured as a flowchart. It begins with a “Code Commit” box. An arrow points to a “Build” box, which then branches into two parallel paths. One path leads to a “Static Analysis” box (checking for coding errors and potential vulnerabilities). The other path goes to a “Dynamic Testing” box, encompassing automated security scans, performance tests, and other dynamic tests.

Both paths converge at a “Test Results Aggregation” box, where results from both static and dynamic analysis are combined. This box feeds into a “Deployment” box (either to staging or production), followed by a “Monitoring” box that tracks the application’s performance and security in the live environment. Feedback loops from the monitoring stage go back to the “Code Commit” stage, creating a continuous improvement cycle.
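
The branching and aggregation steps described above can be sketched as follows. Everything here is a simplified stand-in: the check functions return hard-coded results, and a real pipeline would be defined in the configuration format of the CI/CD system in use rather than in application code.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

# (check name, passed?, short detail message)
CheckResult = tuple[str, bool, str]


def static_analysis() -> CheckResult:
    """Hypothetical static analysis step with a canned result."""
    return ("static_analysis", True, "no findings")


def dynamic_testing() -> CheckResult:
    """Hypothetical dynamic testing step with a canned result."""
    return ("dynamic_testing", False, "1 performance regression")


def run_pipeline(checks: list[Callable[[], CheckResult]]) -> dict:
    """Run checks in parallel, aggregate results, and gate deployment."""
    with ThreadPoolExecutor() as pool:
        futures = [pool.submit(check) for check in checks]
        results = [future.result() for future in futures]
    # Deployment is allowed only if every check passed.
    deploy = all(passed for _, passed, _ in results)
    return {"results": results, "deploy": deploy}


if __name__ == "__main__":
    outcome = run_pipeline([static_analysis, dynamic_testing])
    for name, passed, detail in outcome["results"]:
        print(f"{name}: {'ok' if passed else 'FAILED'} ({detail})")
    print("deploy" if outcome["deploy"] else "deployment blocked")
```

Gating deployment on the aggregated result is the design choice that turns test output into an enforceable policy rather than an advisory report.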

Managing and Monitoring Dynamic Testing Efforts

Effective management and monitoring are crucial for maximizing the return on investment (ROI) of your dynamic testing initiatives. Without a robust system in place, it’s easy to lose track of progress, miss critical issues, and ultimately fail to achieve your testing goals. This section outlines strategies for effectively managing and tracking dynamic testing, ensuring that your efforts are focused and productive.

Successful dynamic testing hinges on proactive planning and consistent monitoring. This involves establishing clear objectives, selecting the right metrics, and implementing processes to track progress and identify potential bottlenecks. By closely monitoring test execution and analyzing the results, you can optimize your testing strategy, identify areas for improvement, and ultimately deliver higher-quality software.

Establishing Clear Goals and Metrics for Dynamic Testing

Setting specific, measurable, achievable, relevant, and time-bound (SMART) goals is fundamental to any successful dynamic testing initiative. These goals should align with the overall software development objectives and clearly define what you hope to achieve through dynamic testing. For example, a goal might be to reduce the number of critical security vulnerabilities found in production by 50% within the next quarter.

Metrics, such as the number of vulnerabilities discovered, the severity of those vulnerabilities, and the time taken to resolve them, should be tracked to measure progress against these goals. Without defined metrics, it’s impossible to objectively assess the effectiveness of your dynamic testing strategy.

Monitoring Test Execution and Identifying Bottlenecks

Continuous monitoring of test execution is essential to identify and resolve bottlenecks early on. This involves tracking key metrics such as test case execution time, test coverage, and the number of defects found. Tools that provide real-time dashboards and reporting capabilities can greatly assist in this process. If bottlenecks are identified (e.g., slow test execution due to inefficient test scripts, insufficient test environment resources), immediate action should be taken to address them.

Regular reviews of test progress, including discussions with the testing team, can help pinpoint areas requiring attention and prevent delays. For instance, a bottleneck might be identified in the analysis of large datasets generated during penetration testing, requiring the team to optimize their analysis tools or processes.

Key Performance Indicators (KPIs) for Dynamic Testing Effectiveness

Tracking the right KPIs provides valuable insights into the effectiveness of your dynamic testing efforts. These metrics should reflect the goals and objectives set for the testing initiative. Here’s a list of key KPIs:

- Number of vulnerabilities found: Tracks the total number of security vulnerabilities identified during dynamic testing.

- Severity of vulnerabilities found: Categorizes vulnerabilities based on their potential impact (e.g., critical, high, medium, low).

- Number of vulnerabilities remediated: Monitors the progress of fixing identified vulnerabilities.

- Time to remediation: Measures the time taken to resolve vulnerabilities from discovery to implementation of a fix.

- Test execution time: Tracks the time taken to complete dynamic testing activities.

- Test coverage: Measures the percentage of the application codebase covered by dynamic tests.

- Defect leakage rate: The percentage of defects that escape dynamic testing and are found later in the development lifecycle or in production.

- False positive rate: The percentage of reported vulnerabilities that are not actual vulnerabilities.

- Cost of remediation: Tracks the cost associated with fixing identified vulnerabilities.

By regularly monitoring these KPIs, you can gain a comprehensive understanding of the effectiveness of your dynamic testing strategy and identify areas for improvement. For example, a high defect leakage rate might indicate the need for improved test coverage or more rigorous testing procedures. A high false positive rate might suggest a need for more refined testing tools or improved tester training.
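
Several of these KPIs reduce to simple ratios. The formulas below follow the definitions given in the list; the function names and the sample figures in the usage section are hypothetical.

```python
import statistics


def defect_leakage_rate(found_in_testing: int, found_later: int) -> float:
    """Percentage of all known defects that escaped dynamic testing."""
    total = found_in_testing + found_later
    return 0.0 if total == 0 else 100.0 * found_later / total


def false_positive_rate(reported: int, confirmed: int) -> float:
    """Percentage of reported vulnerabilities that were not real."""
    return 0.0 if reported == 0 else 100.0 * (reported - confirmed) / reported


def mean_time_to_remediation(days_to_fix: list[float]) -> float:
    """Average number of days from discovery to fix."""
    return statistics.mean(days_to_fix) if days_to_fix else 0.0


if __name__ == "__main__":
    # Sample figures are invented purely to show the calculations.
    print(f"defect leakage: {defect_leakage_rate(45, 5):.1f}%")
    print(f"false positives: {false_positive_rate(40, 30):.1f}%")
    print(f"mean time to remediation: {mean_time_to_remediation([2, 4, 6]):.1f} days")
```

Tracking these values per release, rather than as one-off numbers, is what makes trends such as rising leakage visible.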

Conclusion

Mastering dynamic testing isn’t just about finding bugs; it’s about building a culture of quality and efficiency. By implementing the strategies discussed – from careful tool selection and optimized test coverage to insightful result analysis and seamless SDLC integration – you can transform your dynamic testing from a necessary evil into a powerful engine for software excellence. Remember, consistent improvement is key.

Regularly review your processes, adapt to new technologies, and always strive to refine your approach. The payoff? Software that truly shines.

Question Bank

What are some common pitfalls to avoid in dynamic testing?

Common pitfalls include insufficient test coverage, neglecting non-functional testing (like performance and security), inadequate test data management, and failing to integrate testing into the SDLC effectively. Also, relying solely on automated tests without manual exploration can be problematic.

How often should I run dynamic tests?

The frequency depends on your development methodology and risk tolerance. Agile teams often integrate dynamic tests into their sprints, while waterfall projects might have dedicated testing phases. The key is to test frequently enough to catch issues early.

What’s the difference between load testing and stress testing?

Load testing assesses performance under expected user loads, while stress testing pushes the system beyond its limits to identify breaking points and resilience. Both are crucial for understanding system behavior under various conditions.

How can I justify the investment in dynamic testing tools and resources?

By quantifying the cost of bugs found in production versus those caught early through testing. Improved software quality, reduced downtime, and enhanced user satisfaction are all strong justifications for investing in robust dynamic testing.