Resiliency for CI/CD Pipelines: Building Robust Systems

Resiliency for CI/CD pipelines is no longer a luxury; it’s a necessity. In today’s fast-paced development world, downtime translates directly to lost revenue and frustrated users. This post dives deep into building a CI/CD system that can withstand the inevitable bumps in the road, from minor hiccups to major outages. We’ll explore strategies to not only survive these failures but to thrive, ensuring continuous delivery even in the face of adversity.

We’ll cover everything from designing self-healing pipelines to implementing robust monitoring and security measures.

Think of your CI/CD pipeline as a finely tuned machine. A single broken part can bring the whole thing to a grinding halt. But what if that machine could diagnose its own problems, automatically fix itself, and even predict potential issues before they arise? That’s the power of a resilient CI/CD pipeline. This isn’t just about avoiding downtime; it’s about building a system that’s adaptable, scalable, and ultimately, more efficient.

Defining Resiliency in CI/CD Pipelines

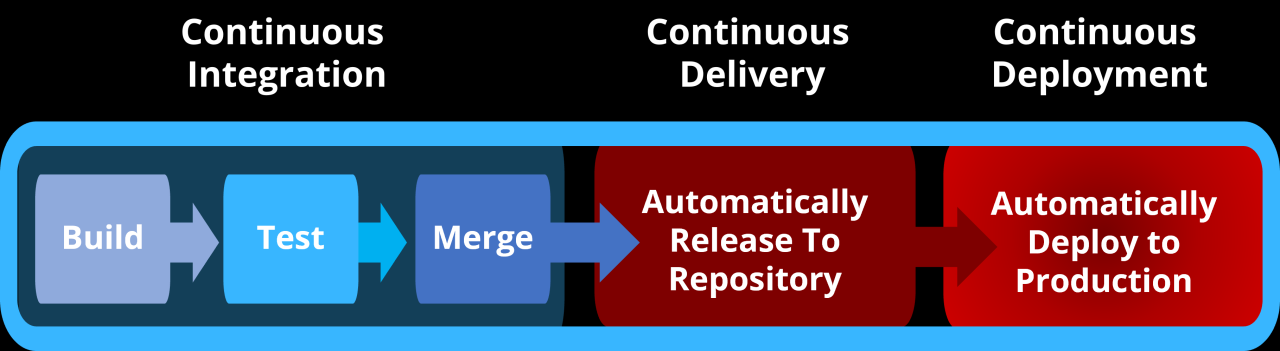

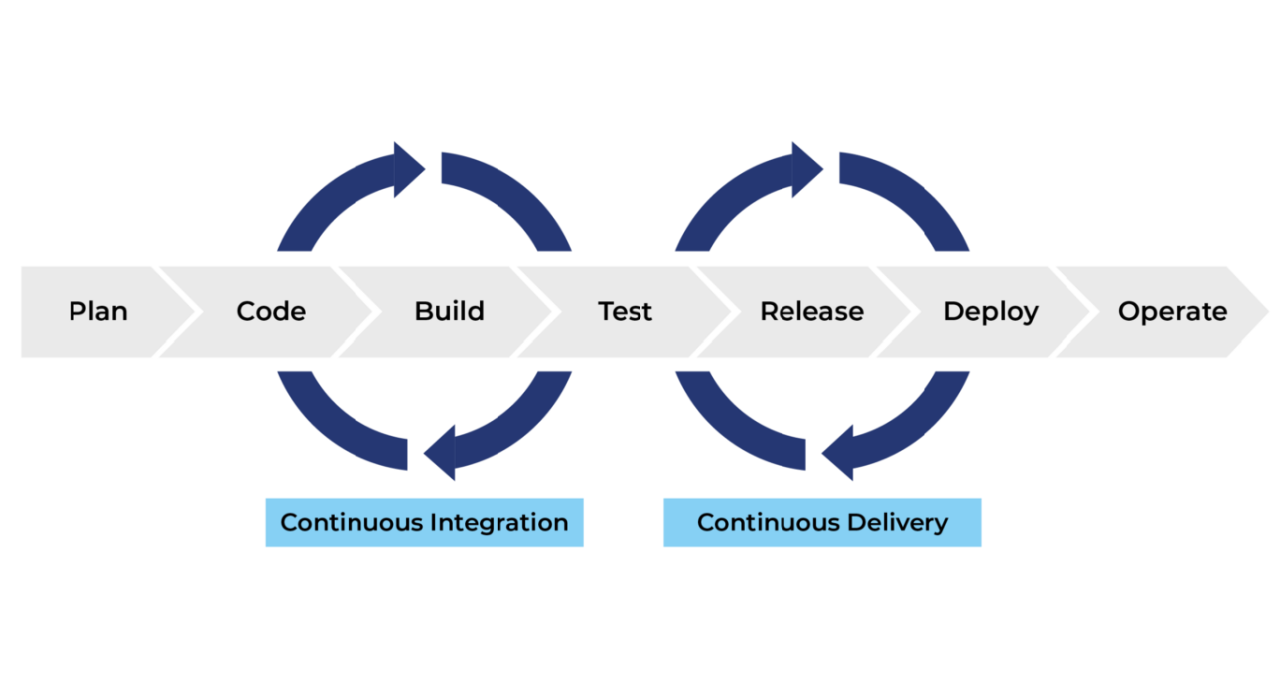

A resilient CI/CD pipeline is crucial for ensuring the smooth and reliable delivery of software. It’s about building a system that can withstand disruptions, recover quickly from failures, and minimize the impact on development timelines and software quality. Without resilience, even minor issues can snowball into significant delays and compromised product quality.

Core Components of a Resilient CI/CD Pipeline

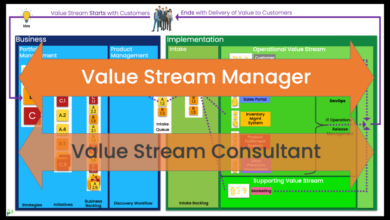

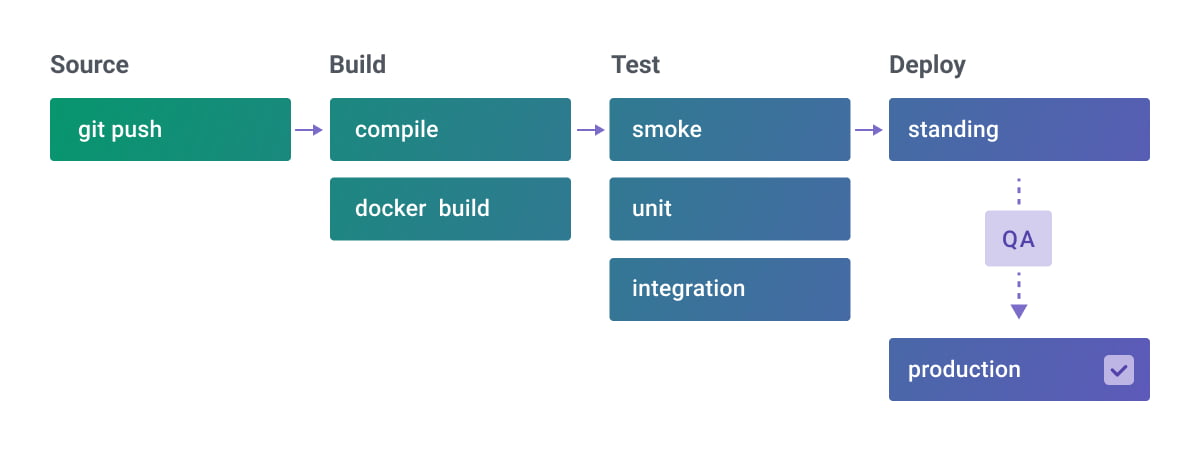

A resilient CI/CD pipeline relies on several key components working together. These components are interconnected and depend on each other to ensure that the entire system can handle failures gracefully. A robust design considers fault tolerance at each stage, from code commit to deployment.

Types of Failures in CI/CD Pipelines

CI/CD pipelines are complex systems, and failures can occur at various stages. These failures can range from simple, easily fixable problems to complex, cascading failures that require significant effort to resolve. Understanding the different types of failures is crucial for building a resilient system. For instance, a failed build due to a dependency issue is different from a deployment failure caused by a server outage.

Identifying these failure types allows for targeted mitigation strategies.

Impact of Failures on Software Delivery

Failures in the CI/CD pipeline directly impact software delivery timelines and quality. A single point of failure can halt the entire process, leading to significant delays in releasing new features or bug fixes. Furthermore, undetected errors can result in deploying faulty software to production, leading to issues like application crashes, security vulnerabilities, and degraded user experience. For example, a failure in the automated testing phase could lead to the deployment of software containing critical bugs, requiring a costly and time-consuming rollback and fix.

Conceptual Model of a Resilient CI/CD System

The following table outlines a conceptual model of a resilient CI/CD system, highlighting key elements, potential failure modes, and mitigation strategies.

| Element Name | Description | Failure Mode | Mitigation Strategy |
|---|---|---|---|
| Version Control System | Centralized repository for code management. | Repository corruption, loss of access. | Regular backups, redundant servers, access control measures. |
| Build System | Automates the compilation and packaging of code. | Build failures due to dependency issues, resource exhaustion. | Robust dependency management, resource monitoring and scaling, parallel builds. |
| Automated Testing | Automated execution of unit, integration, and end-to-end tests. | Test failures due to bugs, flaky tests, insufficient test coverage. | Continuous improvement of test suites, use of mocking and stubbing, robust test infrastructure. |
| Deployment System | Automates the deployment of software to various environments. | Deployment failures due to server issues, configuration errors, network problems. | Blue/green deployments, canary deployments, infrastructure-as-code, automated rollbacks. |
| Monitoring and Logging | Provides real-time visibility into the pipeline’s health and performance. | Loss of monitoring data, insufficient logging, inability to correlate events. | Centralized logging, robust monitoring tools, effective alerting systems. |
| Rollback Mechanism | Enables quick reversion to a previous stable version in case of failures. | Failure to roll back, incomplete rollback. | Automated rollback procedures, thorough testing of rollback mechanisms. |

Implementing Resiliency Strategies

Building truly resilient CI/CD pipelines requires a proactive approach, moving beyond simply reacting to failures. It’s about anticipating potential points of weakness and implementing strategies to mitigate their impact, ensuring continuous delivery even in the face of unforeseen circumstances. This involves a blend of design choices, automated processes, and robust monitoring.

Self-healing CI/CD pipelines aim to automatically recover from failures without human intervention. This significantly reduces downtime and improves overall efficiency. The core principle is to build redundancy and automation into every stage of the pipeline.

Self-Healing Pipeline Design Best Practices

Designing self-healing pipelines involves several key practices. First, implement idempotent steps: each step should be repeatable without causing unintended side effects. This allows for automatic retries without data corruption or inconsistencies. Second, leverage infrastructure as code (IaC) to manage your pipeline’s infrastructure. IaC allows for automated provisioning and recovery of resources, ensuring rapid restoration after failures. Finally, incorporate thorough logging and alerting to provide immediate visibility into pipeline health and facilitate rapid troubleshooting.

For example, a self-healing deployment might automatically retry a failed deployment to a specific server, or even roll back to a previous stable version if multiple retries fail.
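
The retry-then-roll-back behavior can be sketched in a few lines of Python. This is a minimal illustration, not a production implementation: `deploy_with_retries`, its parameters, and the exponential backoff schedule are assumptions made for this example, and the `deploy` callable is assumed to be idempotent.

```python
import time
from typing import Callable


def deploy_with_retries(
    deploy: Callable[[], None],
    rollback: Callable[[], None],
    max_attempts: int = 3,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Run an idempotent deploy step, retrying with exponential backoff.

    Returns "deployed" on success, or "rolled_back" once every attempt
    has failed and the rollback step has run.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            deploy()  # hypothetical step; must be safe to repeat
            return "deployed"
        except Exception as exc:
            print(f"attempt {attempt}/{max_attempts} failed: {exc}")
            if attempt < max_attempts:
                # Wait 1x, 2x, 4x ... the base delay between attempts.
                sleep(base_delay * 2 ** (attempt - 1))
    rollback()
    return "rolled_back"
```

Passing `sleep` as a parameter keeps the function easy to test without real delays. In a real pipeline the retry count and delays would be tuned to the failure modes you actually observe.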

Automated Rollback Mechanisms

Automated rollback mechanisms are crucial for maintaining pipeline resilience. These mechanisms allow for rapid reversion to a previously known good state in the event of a deployment failure. They minimize the impact of faulty releases, preventing widespread disruption. A robust rollback strategy includes version control, automated testing in staging environments, and a well-defined process for reverting to a previous stable version.

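Consider using tools that support blue/green or canary deployments. Blue/green deployments keep the previous version running in a parallel environment so traffic can be switched back quickly, while canary deployments minimize risk by gradually rolling out changes to a small subset of users before a full-scale deployment.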
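
As a rough illustration of a canary rollout with automated rollback, the sketch below shifts traffic in steps and reverts when the error rate crosses a threshold. The function name, the traffic steps, and the 1% threshold are hypothetical; `set_traffic_percent` and `error_rate` stand in for whatever your load balancer and monitoring system expose.

```python
from typing import Callable, Sequence


def canary_rollout(
    set_traffic_percent: Callable[[int], None],
    error_rate: Callable[[], float],
    steps: Sequence[int] = (5, 25, 50, 100),
    max_error_rate: float = 0.01,
) -> bool:
    """Shift traffic to the new version step by step.

    Returns True if the rollout reached the last step, or False if the
    error rate exceeded the threshold and traffic was reverted.
    """
    for percent in steps:
        set_traffic_percent(percent)
        if error_rate() > max_error_rate:
            # Revert: send all traffic back to the stable version.
            set_traffic_percent(0)
            return False
    return True
```

A real rollout would also wait between steps so the error rate reflects enough traffic; that pause is left out here to keep the sketch short.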

Monitoring Tools for Pipeline Health

Various monitoring tools offer diverse capabilities for assessing pipeline health. Tools like Prometheus and Grafana provide comprehensive metrics dashboards, visualizing key performance indicators (KPIs) and alerting on anomalies. Datadog offers similar functionality, along with distributed tracing. The choice of tool depends on factors such as the complexity of your pipeline, existing infrastructure, and specific monitoring needs.

While comprehensive tools offer in-depth insights, simpler solutions may suffice for less complex pipelines. The key is to choose a tool that provides real-time visibility into pipeline performance and alerts on potential issues before they escalate.

Handling Infrastructure Failures

Handling infrastructure failures requires a multi-faceted approach. High availability (HA) infrastructure, including redundant servers and load balancers, is paramount. This ensures that the pipeline can continue operating even if individual components fail. Cloud-based solutions often provide built-in HA capabilities, but careful configuration is crucial.

Another critical aspect is disaster recovery planning. This involves defining procedures for restoring pipeline functionality in the event of a major outage, such as a regional data center failure. Regular testing of disaster recovery plans is essential to ensure their effectiveness. For example, a geographically distributed deployment can help mitigate the impact of regional outages. Using a cloud provider’s multi-region capabilities allows for automatic failover to a backup region if the primary region experiences an outage.
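
The failover decision itself is simple to sketch. The example below assumes a priority-ordered list of regions and a health check supplied by the caller; both the `pick_region` name and the region identifiers in the usage note are illustrative only.

```python
from typing import Callable, Optional, Sequence


def pick_region(
    regions: Sequence[str], is_healthy: Callable[[str], bool]
) -> Optional[str]:
    """Return the first healthy region in priority order, or None."""
    for region in regions:
        if is_healthy(region):
            return region
    return None
```

For instance, `pick_region(["us-east-1", "eu-west-1"], check)` returns the backup region whenever `check` reports the primary as unhealthy. In practice the health check is the hard part, and managed failover features from the cloud provider may replace this logic entirely.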

Testing and Monitoring for Resilience

Building a resilient CI/CD pipeline isn’t just about implementing strategies; it requires rigorous testing and continuous monitoring to ensure those strategies are effective and to quickly identify and address weaknesses. Proactive testing and monitoring are crucial for preventing disruptions and maintaining the smooth flow of your software delivery process. This involves defining key metrics, implementing comprehensive testing, and leveraging powerful monitoring tools.

Key Metrics for Measuring CI/CD Pipeline Resilience

Understanding the resilience of your CI/CD pipeline necessitates the tracking of specific metrics. These metrics provide insights into the pipeline’s stability, speed, and ability to recover from failures. By consistently monitoring these metrics, you can identify potential bottlenecks and areas for improvement.

- Mean Time To Recovery (MTTR): This metric measures the average time it takes to restore the pipeline after a failure. A lower MTTR indicates a more resilient pipeline.

- Mean Time Between Failures (MTBF): This metric represents the average time between successive failures. A higher MTBF suggests greater pipeline stability.

- Deployment Frequency: A higher deployment frequency, while not directly a resilience metric, indirectly reflects a robust and reliable pipeline capable of handling frequent changes.

- Change Failure Rate: This metric tracks the percentage of deployments that result in failures. A lower rate points towards a more resilient pipeline.

- Pipeline Throughput: This measures the rate at which the pipeline processes changes, indicating efficiency and stability.
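
To make these definitions concrete, here is a small sketch that computes MTTR, MTBF, and change failure rate from incident records. The `Incident` type is hypothetical, and MTBF is taken here as the average uptime between one incident’s resolution and the next incident’s start, which is one common convention among several.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Sequence


@dataclass
class Incident:
    started: datetime   # when the pipeline failed
    resolved: datetime  # when it was restored


def mttr(incidents: Sequence[Incident]) -> timedelta:
    """Mean time to recovery: average of (resolved - started)."""
    if not incidents:
        raise ValueError("need at least one incident")
    total = sum((i.resolved - i.started for i in incidents), timedelta())
    return total / len(incidents)


def mtbf(incidents: Sequence[Incident]) -> timedelta:
    """Mean time between failures: average gap between a recovery
    and the start of the next incident."""
    if len(incidents) < 2:
        raise ValueError("need at least two incidents")
    ordered = sorted(incidents, key=lambda i: i.started)
    gaps = [b.started - a.resolved for a, b in zip(ordered, ordered[1:])]
    return sum(gaps, timedelta()) / len(gaps)


def change_failure_rate(failed_deployments: int, total_deployments: int) -> float:
    """Fraction of deployments that resulted in a failure."""
    return failed_deployments / total_deployments
```

Tracking these values over time matters more than any single reading, since the trend shows whether resiliency work is paying off.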

Essential Tests for Validating Pipeline Resilience

A comprehensive testing strategy is essential to ensure your CI/CD pipeline can withstand various disruptions and unexpected events. This involves testing various aspects of the pipeline, from individual stages to the overall flow.

- Unit Tests: Verify the functionality of individual components within the pipeline.

- Integration Tests: Ensure that different parts of the pipeline work together seamlessly.

- End-to-End Tests: Simulate a complete deployment cycle to validate the entire pipeline’s functionality.

- Chaos Engineering Tests: Introduce deliberate failures into the pipeline to observe its behavior and recovery capabilities, for example by simulating network outages or database failures.

- Rollback Tests: Verify the ability to successfully roll back to a previous stable version in case of a failed deployment.
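
A chaos-style test can start very small. The sketch below wraps a dependency so that a fraction of calls fail, then exercises a simple retry helper against it. Both helper names are hypothetical, and real chaos experiments usually inject faults at the infrastructure level rather than inside the process.

```python
import random
from typing import Callable, TypeVar

T = TypeVar("T")


def with_injected_faults(
    func: Callable[[], T], failure_rate: float, rng: random.Random
) -> Callable[[], T]:
    """Wrap func so that a fraction of calls raise ConnectionError."""
    def wrapper() -> T:
        if rng.random() < failure_rate:
            raise ConnectionError("injected fault")
        return func()
    return wrapper


def call_with_retries(func: Callable[[], T], attempts: int) -> T:
    """Recovery mechanism under test: retry on ConnectionError."""
    for remaining in range(attempts - 1, -1, -1):
        try:
            return func()
        except ConnectionError:
            if remaining == 0:
                raise  # out of attempts: surface the failure
    raise ValueError("attempts must be at least 1")
```

Seeding the random generator, for example with `random.Random(42)`, keeps a failing experiment reproducible.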

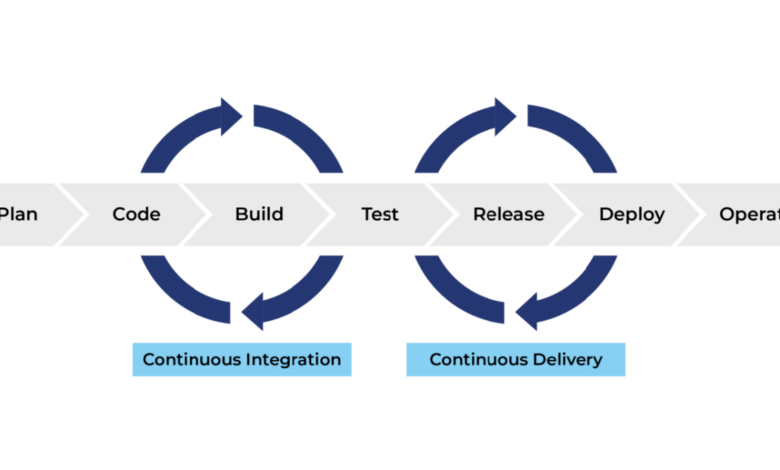

Integrating Automated Testing into the CI/CD Process

Automating your tests is crucial for achieving a truly resilient CI/CD pipeline. Automation ensures consistent testing, reduces human error, and accelerates the feedback loop. Tools like Jenkins, GitLab CI, and CircleCI offer excellent capabilities for integrating automated tests into your pipeline.

For example, you could configure your CI/CD system to automatically run unit, integration, and end-to-end tests after each code commit. If any test fails, the pipeline will halt, preventing the deployment of faulty code. This immediate feedback allows for quick identification and resolution of issues, minimizing downtime and improving overall resilience.
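
The halt-on-failure behavior is normally configured in the CI tool itself, but the underlying logic looks roughly like the sketch below. The `Stage` type and `run_pipeline` function are illustrative and do not correspond to the API of any particular CI system.

```python
from typing import Callable, List, Sequence, Tuple

# (stage name, function returning True on success)
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: Sequence[Stage]) -> List[str]:
    """Run stages in order and stop at the first failing stage.

    Returns the names of the stages that ran, the failing one included.
    """
    ran: List[str] = []
    for name, stage in stages:
        ran.append(name)
        if not stage():
            print(f"stage '{name}' failed; halting before later stages")
            break
    return ran
```

With stages ordered as unit tests, integration tests, end-to-end tests, and deploy, a failing integration stage means the deploy stage never runs.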

Monitoring Tools and Their Capabilities

Effective monitoring is crucial for detecting and responding to pipeline failures swiftly. Several tools provide real-time insights into pipeline health and performance, enabling proactive intervention.

- Datadog: Provides comprehensive monitoring of various aspects of the CI/CD pipeline, including infrastructure, application performance, and logs. It offers alerting capabilities for detecting anomalies and potential failures.

- Prometheus: An open-source monitoring system that collects metrics from various sources and provides visualization and alerting. It’s highly customizable and integrates well with other tools.

- Grafana: A popular open-source visualization and analytics platform that can be used to create dashboards for monitoring key CI/CD metrics. It can integrate with various data sources, including Prometheus and Datadog.

- Dynatrace: A comprehensive application performance monitoring (APM) tool that can provide detailed insights into the performance of applications deployed through the CI/CD pipeline. It can automatically detect and diagnose issues, reducing MTTR.

- Splunk: A powerful log management and analysis tool that can be used to monitor and analyze logs generated by the CI/CD pipeline. It can help identify patterns and root causes of failures.

Infrastructure as Code and Resiliency

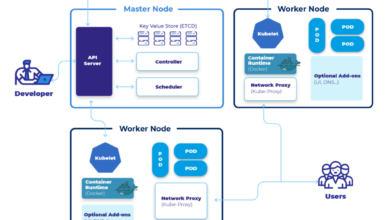

Infrastructure as Code (IaC) is a game-changer for building resilient CI/CD pipelines. By treating infrastructure as software, we can automate its provisioning, configuration, and management, leading to significantly improved reliability and reduced downtime. This approach allows for consistent, repeatable deployments, minimizing the risk of human error and ensuring a stable environment for your CI/CD processes.

IaC enables the creation of highly available and fault-tolerant systems, crucial for maintaining continuous integration and delivery. It facilitates easy replication and scaling of pipeline components, enabling quick recovery from failures and ensuring minimal disruption to the software development lifecycle. This proactive approach to infrastructure management dramatically enhances the overall resilience of the entire CI/CD pipeline.

IaC Tools for Enhanced Pipeline Resilience

Several powerful IaC tools contribute significantly to building resilient CI/CD pipelines. These tools provide automation capabilities, version control, and infrastructure-as-code methodologies, making it easier to manage and maintain consistent and reliable environments.

- Terraform: Terraform’s declarative approach allows you to define your infrastructure in a human-readable configuration file. Its ability to manage diverse cloud providers and on-premise infrastructure simplifies the creation of complex, highly available systems. Changes are tracked and version-controlled, facilitating easy rollback in case of issues.

- Ansible: Ansible’s agentless architecture simplifies configuration management and automation. It excels at deploying and managing applications and infrastructure across various platforms, ensuring consistency and reliability in your CI/CD environment. Its playbooks offer a structured way to define and manage infrastructure configurations, making it easier to maintain and troubleshoot.

- Pulumi: Pulumi leverages general-purpose programming languages (like Python, Go, JavaScript) to define and manage infrastructure. This allows for greater flexibility and control, enabling developers to use familiar tools and techniques to manage infrastructure. Its cloud-agnostic nature supports various providers, providing scalability and portability.

Impact of IaC on Reducing Human Error and Improving Pipeline Stability

Human error is a significant source of instability in CI/CD pipelines. IaC dramatically reduces this risk by automating infrastructure management. Instead of manual configurations prone to mistakes, IaC uses code that is version-controlled, reviewed, and tested, significantly lowering the chances of misconfigurations or accidental deletions. The automated and repeatable nature of IaC ensures consistency across environments, preventing inconsistencies that can lead to pipeline failures.

This predictability improves stability and reduces the need for manual intervention, leading to faster deployments and reduced downtime.

Sample IaC Configuration for a Highly Available CI/CD Pipeline Component

Let’s consider a highly available build agent pool as a crucial component of our CI/CD pipeline. We’ll use a simplified Terraform configuration example to illustrate how IaC can ensure its high availability. This example assumes using a cloud provider like AWS.

This configuration creates two build agents in separate availability zones. If one agent fails, the other continues to function, ensuring uninterrupted build processes.

Load balancing distributes incoming build requests across the available agents. This design uses multiple AWS resources to create a fault-tolerant setup.

```terraform
resource "aws_instance" "build_agent_1" {
  ami           = "ami-0c55b31ad2299a701" # Replace with your AMI ID
  instance_type = "t3.medium"
  subnet_id     = "subnet-xxxxxxxxxxxxxxxxx" # Replace with your subnet ID in AZ1
  # … other configurations …
}

resource "aws_instance" "build_agent_2" {
  ami           = "ami-0c55b31ad2299a701" # Replace with your AMI ID
  instance_type = "t3.medium"
  subnet_id     = "subnet-yyyyyyyyyyyyyyyyy" # Replace with your subnet ID in AZ2
  # … other configurations …
}

resource "aws_lb" "build_agent_lb" {
  # … load balancer configuration …
}

resource "aws_lb_target_group" "build_agent_tg" {
  # … target group configuration …
}

# Attach instances to the target group
resource "aws_lb_target_group_attachment" "build_agent_attachment_1" {
  target_group_arn = aws_lb_target_group.build_agent_tg.arn
  target_id        = aws_instance.build_agent_1.id
  port             = 8080 # Port your build agent listens on
}

resource "aws_lb_target_group_attachment" "build_agent_attachment_2" {
  target_group_arn = aws_lb_target_group.build_agent_tg.arn
  target_id        = aws_instance.build_agent_2.id
  port             = 8080 # Port your build agent listens on
}
```

This is a simplified example; a production-ready configuration would require more detailed settings, security measures, and potentially additional resources like auto-scaling groups for dynamic scaling based on demand. However, it effectively demonstrates how IaC can be used to create highly available components for a resilient CI/CD pipeline.

Security Considerations for Resilient CI/CD

A resilient CI/CD pipeline isn’t just about speed and automation; it’s also about security. Breaches in your CI/CD system can lead to compromised code, data leaks, and significant downtime, undermining all the benefits of automation. Building security into the pipeline from the ground up is crucial for long-term resilience. This means addressing vulnerabilities at every stage, from code development to deployment.

Potential Security Vulnerabilities in CI/CD Pipelines

Several security vulnerabilities can severely impact the resilience of your CI/CD pipeline. These vulnerabilities can range from simple misconfigurations to sophisticated attacks targeting specific pipeline components. Addressing these vulnerabilities requires a multi-layered approach encompassing infrastructure security, code security, and robust access controls.

Securing CI/CD Pipeline Infrastructure

Securing the infrastructure supporting your CI/CD pipeline is paramount. This involves implementing strong authentication and authorization mechanisms, regularly patching systems and software, and employing robust network security practices. Leveraging technologies like firewalls, intrusion detection systems, and virtual private networks (VPNs) is essential. Regular security audits and penetration testing can identify and address potential weaknesses before they can be exploited.

Implementing least privilege access control ensures that users only have access to the resources necessary for their roles. This minimizes the impact of compromised credentials.

Secure Coding Practices and Their Role in Pipeline Resilience

Secure coding practices are foundational to a resilient CI/CD pipeline. Insecure code introduces vulnerabilities that attackers can exploit, potentially leading to pipeline disruption or data breaches. Employing techniques like input validation, output encoding, and secure dependency management is critical. Regular code reviews and static/dynamic analysis can help identify and address vulnerabilities early in the development lifecycle. Implementing a robust security testing strategy, including penetration testing and security scanning, further enhances the security posture of the codebase.

This proactive approach minimizes the risk of vulnerabilities making their way into production.

Common Security Vulnerabilities, Impact, Mitigation, and Implementation

The following table summarizes common security vulnerabilities, their impact on resilience, mitigation strategies, and example implementations:

| Vulnerability | Impact on Resilience | Mitigation Strategy | Example Implementation |
|---|---|---|---|
| Unsecured Credentials in Configuration Files | Compromised pipeline access, code tampering, data breaches | Use of secrets management tools, encryption at rest and in transit | Using HashiCorp Vault or AWS Secrets Manager to store and manage sensitive information. Encrypting configuration files using tools like GPG. |
| Insecure Dependencies | Introduction of vulnerabilities through outdated or malicious libraries | Regular dependency updates, using dependency scanners, implementing software composition analysis (SCA) | Using tools like Snyk or Dependabot to automatically detect and update vulnerable dependencies. Implementing a strict policy for only using approved libraries. |
| Lack of Input Validation | Cross-site scripting (XSS) attacks, SQL injection, other injection attacks | Implementing robust input validation and sanitization techniques | Using parameterized queries for database interactions, escaping user inputs before displaying them on a webpage, validating all inputs against expected data types and formats. |
| Insufficient Access Control | Unauthorized access to pipeline components, data breaches, code tampering | Implementing role-based access control (RBAC), least privilege principle | Using tools like AWS IAM or Azure RBAC to manage user permissions. Granting users only the minimum necessary permissions to perform their tasks. |
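
As one concrete instance of the input validation row, the sketch below uses a parameterized query with Python’s built-in `sqlite3` module. The `builds` table and the `find_builds_by_branch` function are hypothetical and exist only for this illustration.

```python
import sqlite3


def find_builds_by_branch(conn: sqlite3.Connection, branch: str) -> list:
    """Look up builds using a parameterized query.

    The `?` placeholder passes `branch` as data, so input such as
    "main' OR '1'='1" is matched literally instead of altering the SQL.
    """
    cursor = conn.execute(
        "SELECT id, branch FROM builds WHERE branch = ?", (branch,)
    )
    return cursor.fetchall()
```

Building the same query with string formatting would let the injected quote characters change the statement, which is exactly what the placeholder avoids.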

Scaling for Resiliency

Scaling a CI/CD pipeline isn’t just about handling more builds; it’s about ensuring consistent performance and reliability even under pressure. A resilient, scalable pipeline maintains its speed and accuracy regardless of fluctuating demand, preventing bottlenecks and ensuring continuous delivery. This is crucial for maintaining a competitive edge and delivering value to users consistently.

The core of a scalable and resilient CI/CD pipeline lies in its ability to adapt dynamically to changing workloads. This requires a proactive approach, anticipating potential growth and implementing strategies to handle unexpected surges in demand without compromising performance or stability. This goes beyond simply throwing more hardware at the problem; it involves intelligent resource allocation and automated scaling mechanisms.

Load Balancing and Auto-Scaling

Load balancing distributes incoming requests across multiple pipeline components, preventing any single server from becoming overloaded. This ensures that the pipeline remains responsive even during peak activity. Auto-scaling automatically adjusts the number of resources (e.g., virtual machines, containers) based on real-time demand. When demand increases, more resources are provisioned; when demand decreases, resources are scaled down to optimize costs.

For instance, imagine a scenario where a new feature release triggers a significant increase in build requests. A well-designed system with auto-scaling would automatically spin up additional build agents, processing the increased workload without impacting build times or introducing delays. Conversely, after the initial surge subsides, the system would automatically scale down, reducing resource consumption and costs.
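
The scaling decision can be reduced to a small calculation. The sketch below derives a target agent count from the build queue depth; the function name, the builds-per-agent ratio, and the minimum and maximum bounds are assumptions chosen for illustration.

```python
import math


def desired_agents(
    queued_builds: int,
    builds_per_agent: int = 4,
    min_agents: int = 2,
    max_agents: int = 20,
) -> int:
    """Target number of build agents for the current queue depth."""
    needed = math.ceil(queued_builds / builds_per_agent)
    # Clamp so the pool never drops below the floor or exceeds the cap.
    return max(min_agents, min(max_agents, needed))
```

The floor keeps spare capacity for the next burst, and the cap bounds cost during a runaway surge. Managed auto-scaling services apply the same idea with their own metrics and cooldown periods.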

Designing for Unexpected Spikes in Demand

Designing a CI/CD pipeline that gracefully handles unexpected spikes requires a multi-faceted approach. This includes using queuing systems to buffer incoming requests during peak periods, preventing immediate overload. Implementing robust error handling and retry mechanisms is crucial; these prevent single failures from cascading and bringing down the entire pipeline. Consider using techniques like circuit breakers to temporarily stop sending requests to a failing component, allowing it time to recover before resuming operations.

For example, a sudden surge in pull requests could overwhelm the code analysis stage. A queuing system would hold these requests until the analysis stage has sufficient capacity to process them, preventing overload and ensuring that each request is eventually handled.
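
A circuit breaker can be sketched in a few dozen lines. This is a simplified version under stated assumptions: the class and exception names, the failure threshold, and the reset timeout are illustrative, and the sketch is not thread-safe.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(
        self,
        failure_threshold: int = 3,
        reset_timeout: float = 30.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, func: Callable[[], T]) -> T:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                raise CircuitOpenError("component is cooling down")
            # Timeout elapsed: allow one trial call (half-open state).
        try:
            result = func()
        except Exception:
            self.failures += 1
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # open (or re-open) the circuit
            raise
        # Success closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

While the circuit is open, callers fail fast instead of piling more requests onto the struggling component, which is what gives it room to recover.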

Distributed Architecture for Improved Resilience and Scalability

A distributed architecture is key to achieving high levels of resilience and scalability. By distributing pipeline components across multiple servers or regions, you reduce the impact of single points of failure. If one region experiences an outage, the pipeline can continue operating from other regions. Microservices architecture, where the pipeline is broken down into smaller, independent services, further enhances resilience and scalability.

Each microservice can be scaled independently based on its specific needs, allowing for optimized resource allocation. For example, a distributed architecture could deploy build agents across multiple availability zones. If one zone fails, the builds can seamlessly continue in the other zones, ensuring continuous delivery. This also allows for geographical distribution, enabling faster build times for developers in different locations.

Epilogue

Building a truly resilient CI/CD pipeline requires a multifaceted approach. It’s not a one-size-fits-all solution, but rather a continuous process of improvement and adaptation. By focusing on proactive strategies like automated rollbacks, robust monitoring, and secure coding practices, you can dramatically reduce the impact of failures and ensure the continuous delivery of high-quality software. Remember, a resilient pipeline isn’t just about surviving failures; it’s about thriving in the face of them, ensuring your team can focus on innovation instead of firefighting.

Popular Questions

What are some common causes of CI/CD pipeline failures?

Common causes include infrastructure issues (server outages, network problems), code errors, configuration problems, and security vulnerabilities.

How can I measure the effectiveness of my resiliency strategies?

Track metrics like Mean Time To Recovery (MTTR), Mean Time Between Failures (MTBF), and the frequency and duration of pipeline disruptions.

What’s the role of human error in CI/CD pipeline failures?

Human error plays a significant role. Implementing automation and rigorous testing can mitigate this risk.

How often should I test my CI/CD pipeline’s resilience?

Regular testing, ideally as part of your CI/CD process, is crucial. The frequency depends on your pipeline’s complexity and criticality, but aiming for at least weekly testing is a good starting point.