Simplify Oracle UCM/HCM Data Loading with Workload Automation

Simplifying Oracle UCM and HCM data loading with the Oracle UCM and HCM data loader plugins and workload automation sounds daunting, right? But it doesn’t have to be! This post dives into how you can dramatically streamline your data loading processes, saving you time, headaches, and potentially a lot of late nights. We’ll explore the power of workload automation tools integrated with Oracle’s UCM and HCM data loaders, showing you how to handle large datasets, complex transformations, and even tricky error handling.

Get ready to transform your data loading workflow from a stressful chore into a smooth, efficient operation.

We’ll cover everything from understanding the core functionalities of the Oracle data loaders to implementing robust error handling and security measures. We’ll even provide practical examples and a step-by-step guide to help you implement these changes in your own environment. Whether you’re a seasoned Oracle pro or just getting started, this guide will equip you with the knowledge and strategies to master your data loading processes.

Understanding Oracle UCM and HCM Data Loaders

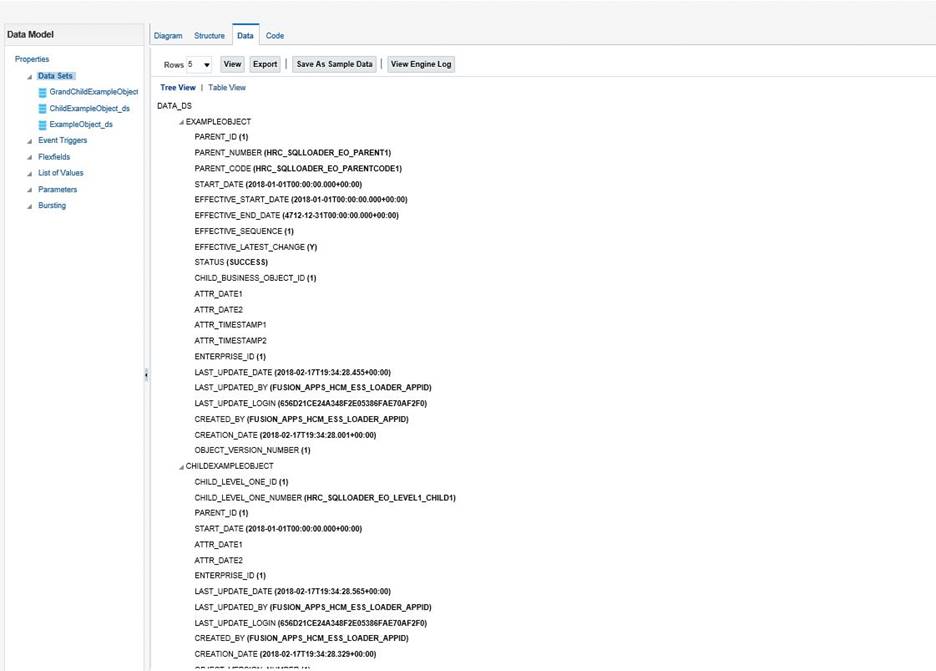

Oracle’s Universal Content Management (UCM) and Human Capital Management (HCM) systems are powerful tools for managing content and employee data, respectively. However, efficiently populating these systems with large datasets requires specialized data loaders. Understanding their functionalities and limitations is crucial for successful data migration and ongoing maintenance.

Oracle UCM and HCM Data Loaders provide a structured approach to importing and updating data, offering features to validate and transform data before loading it into the target system. This minimizes errors and helps preserve data integrity. The process typically involves several steps, from preparing the source data to verifying the loaded data. Efficient use of these loaders significantly improves data management processes.

Automating data loading with the Oracle UCM and HCM data loader plugins via workload automation is a game-changer for efficiency. This streamlined approach frees up valuable time, allowing developers to focus on more strategic initiatives, such as the low-code and pro-code future of Domino app development. Ultimately, optimizing data loading with these plugins makes for a smoother workflow and better integration with other systems.

Functionalities of Oracle UCM and HCM Data Loaders

The UCM data loader focuses on importing and managing content metadata and files into the UCM repository. It handles tasks like importing documents, updating metadata fields, and managing document relationships. Conversely, the HCM data loader is designed to manage employee data, including personal information, job details, compensation, and benefits. It supports bulk loading of employee records, updating existing records, and managing complex data relationships between different employee attributes.

Both loaders offer features for error handling and logging, facilitating troubleshooting and data validation.

Typical Data Loading Process Using Oracle UCM and HCM Data Loaders

The typical process for both loaders involves several key steps: First, data extraction from the source system is performed, usually via exporting data in a structured format like CSV or XML. Next, data transformation and cleansing occur, ensuring the data conforms to the target system’s requirements. This may involve data type conversions, field mapping, and data validation checks.

Then, the transformed data is loaded into the respective system using the dedicated data loader. Finally, post-load validation is performed to verify data integrity and identify any discrepancies. This typically involves comparing the loaded data against the source data or running system reports.
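The transformation and cleansing step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the source column names and the target field names in `FIELD_MAP` are hypothetical, and a real load would use whatever field names and file layout your target system requires.

```python
import csv
import io

# Hypothetical mapping from source column names to target field names.
FIELD_MAP = {
    "emp_id": "PersonNumber",
    "first": "FirstName",
    "last": "LastName",
    "hired": "StartDate",
}


def transform_rows(source_csv: str) -> tuple[list[dict], list[str]]:
    """Rename columns, trim whitespace, and reject rows with missing values."""
    clean, rejected = [], []
    reader = csv.DictReader(io.StringIO(source_csv))
    for line_no, row in enumerate(reader, start=2):  # line 1 is the header
        mapped = {
            target: (row.get(source) or "").strip()
            for source, target in FIELD_MAP.items()
        }
        missing = [name for name, value in mapped.items() if not value]
        if missing:
            rejected.append(f"line {line_no}: missing {', '.join(missing)}")
        else:
            clean.append(mapped)
    return clean, rejected
```

Returning the rejected rows separately, rather than raising on the first bad row, keeps one malformed record from blocking the rest of the file and gives the post-load validation step something concrete to reconcile against.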

Common Challenges Encountered During Data Loading

Data loading with these tools often presents challenges. Data inconsistencies in the source data, particularly variations in data formats or missing values, can cause errors during the transformation process. Mapping source data fields to target fields correctly can be complex, especially with large datasets and complex data structures. Performance issues can arise with very large datasets, requiring optimization strategies to manage processing time.

Moreover, ensuring data integrity and accuracy throughout the process requires meticulous planning and rigorous testing. Insufficient error handling and logging mechanisms can also make troubleshooting difficult.

Comparison of UCM and HCM Data Loaders

While both loaders share similarities in their overall process, they differ significantly in their target systems and functionalities. The UCM loader focuses on content metadata and file management, dealing with document types, versions, and security permissions. The HCM loader, on the other hand, focuses on employee-related data, handling complex relationships between different data points and often integrating with other HR systems.

The data structures and validation rules differ substantially, reflecting the nature of the data they handle. The complexity of data transformation and mapping also varies significantly depending on the specific requirements of each system.

Integrating Workload Automation Tools

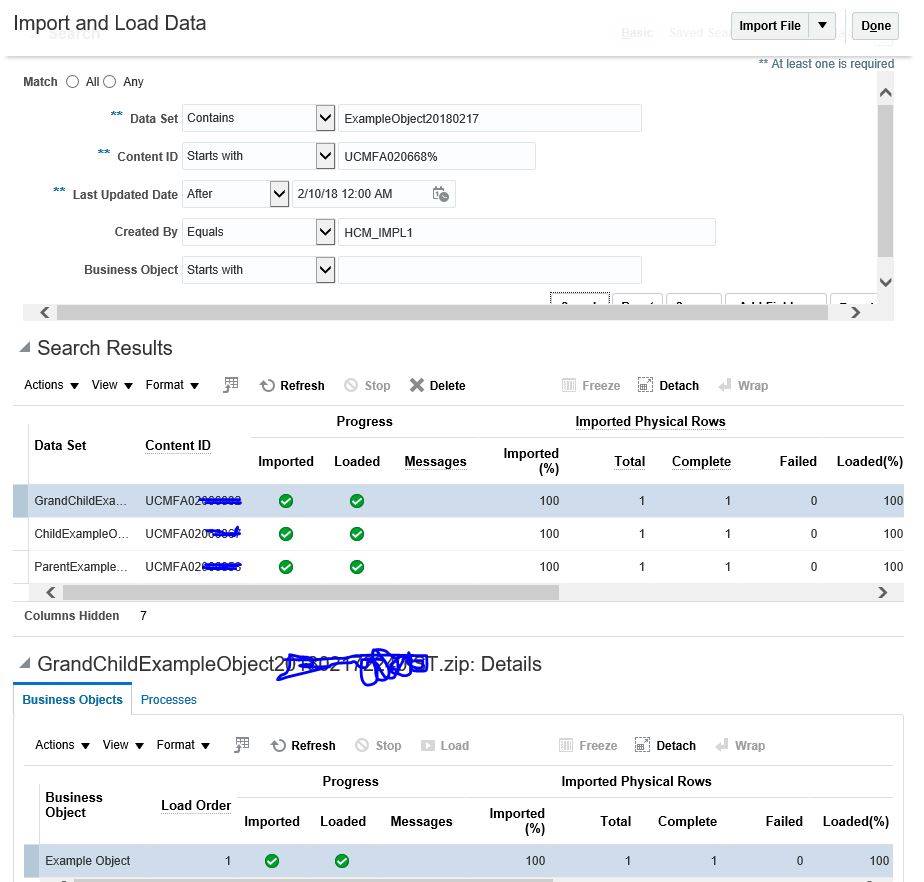

Automating data loading processes for Oracle UCM and HCM is crucial for efficiency and accuracy. Workload automation tools provide the necessary framework to orchestrate these complex data loading tasks, ensuring reliable and timely execution. These tools allow for centralized management, monitoring, and scheduling of data loading jobs, eliminating manual intervention and reducing the risk of human error.

Integrating workload automation tools with Oracle UCM and HCM data loaders typically involves using the tool’s capabilities to execute the data loader scripts or executables.

This might involve creating jobs within the workload automation tool that trigger the data loader processes at specified times or based on certain events. The automation tool often handles error handling and logging, providing a centralized view of the entire data loading operation.
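Most schedulers decide whether a job succeeded from the exit code of the command they launched. A thin wrapper along the following lines is one way to make that contract explicit. It is a sketch, not any vendor's integration: the command passed in is whatever script your environment uses, and the exit code `124` for a timeout is a convention chosen here by assumption.

```python
import subprocess
import sys


def run_step(command: list[str], timeout_seconds: int = 3600) -> int:
    """Run one loader command and return its exit code for the scheduler."""
    try:
        result = subprocess.run(
            command, capture_output=True, text=True, timeout=timeout_seconds
        )
    except subprocess.TimeoutExpired:
        print(f"TIMEOUT after {timeout_seconds}s: {command}", file=sys.stderr)
        return 124  # assumed convention for "timed out"
    if result.returncode != 0:
        # Surface the command's own error output in the job log.
        print(f"FAILED ({result.returncode}): {result.stderr.strip()}", file=sys.stderr)
    return result.returncode
```

A job definition would then call the wrapper with the real loader command and pass the returned code to `sys.exit`, so the automation tool sees success or failure directly.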

Popular Workload Automation Tools

Several robust workload automation tools are compatible with Oracle applications, offering varying levels of functionality and integration. The choice depends on factors such as the scale of operations, existing IT infrastructure, and budget constraints.

- AutoSys: A widely used enterprise-grade workload automation solution known for its robust scheduling and monitoring capabilities. It excels in managing complex, interdependent jobs and offers advanced features like workload balancing and resource management. AutoSys can directly execute the scripts or executables used by Oracle UCM and HCM data loaders.

- Control-M: Another popular enterprise-grade solution providing centralized control and monitoring of batch jobs. Control-M offers strong integration with various applications and platforms, simplifying the management of data loading processes across different systems. Similar to AutoSys, it can directly execute data loader processes.

- IBM Tivoli Workload Scheduler (now IBM Workload Scheduler): A comprehensive workload automation platform capable of managing and scheduling a broad range of jobs, including those related to Oracle data loading. It offers advanced features like self-service capabilities and dynamic workload management.

Best Practices for Configuring Workload Automation

Effective configuration of workload automation for data loading requires careful planning and consideration of several best practices. These practices ensure reliable and efficient data loading operations.

- Centralized Job Management: Consolidate all data loading jobs within the workload automation tool for centralized monitoring and management. This provides a single point of control and simplifies troubleshooting.

- Robust Error Handling: Implement comprehensive error handling mechanisms within the workload automation tool to automatically detect and respond to failures. This might involve retry mechanisms, notifications, or escalation procedures.

- Detailed Logging and Monitoring: Configure the workload automation tool to generate detailed logs of all job executions. This information is crucial for auditing, troubleshooting, and performance analysis.

- Security and Access Control: Implement appropriate security measures to control access to data loading jobs and sensitive data. This ensures data integrity and prevents unauthorized access.

- Version Control and Change Management: Maintain version control of data loading scripts and configurations. Implement a change management process to track and approve modifications to these components.

Workflow Diagram

A typical workflow diagram would illustrate the integration. Imagine a box labeled “Workload Automation Tool” at the center. Arrows point from this box to boxes representing “Oracle UCM Data Loader” and “Oracle HCM Data Loader.” Another arrow points from each data loader box to a box labeled “Target Database.” A separate arrow from the “Workload Automation Tool” would point to a “Monitoring and Reporting Dashboard,” showing job status, execution times, and error logs.

This visualization demonstrates the central role of the automation tool in orchestrating and monitoring the entire data loading process. The workflow could also include pre- and post-processing steps, such as data validation or transformation, represented by additional boxes connected to the appropriate parts of the workflow. The entire process would be triggered by a scheduled event or manual initiation within the workload automation tool.
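The workflow described above is essentially a dependency graph, and the order in which jobs may run can be derived from it. The sketch below uses Python's standard `graphlib` module (Python 3.9+); the job names are hypothetical placeholders, and a real workload automation tool would express the same dependencies in its own job definitions.

```python
from graphlib import TopologicalSorter

# Each job maps to the set of jobs that must finish before it starts.
# Job names are hypothetical placeholders.
DEPENDENCIES = {
    "validate_source": set(),
    "transform": {"validate_source"},
    "ucm_load": {"transform"},
    "hcm_load": {"transform"},
    "post_load_report": {"ucm_load", "hcm_load"},
}


def execution_waves(dependencies: dict[str, set[str]]) -> list[list[str]]:
    """Group jobs into waves; jobs in the same wave have no unmet dependencies."""
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises CycleError if the dependencies are circular
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves
```

With these dependencies, the two load jobs land in the same wave, which mirrors the diagram: both loaders hang off the central automation tool and the report waits for both.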

Simplifying Data Loading Processes

Streamlining data loading into Oracle UCM and HCM is crucial for efficient HR and content management. Manual processes are time-consuming, error-prone, and often lead to bottlenecks. Automating this process using workload automation tools offers significant improvements in speed, accuracy, and overall efficiency. This section details techniques to achieve this simplification and the benefits derived.

Employing techniques like automated scheduling, error handling, and data validation significantly reduces manual intervention and human error. This leads to faster data processing, improved data quality, and increased productivity across teams. The automation of data loading processes allows for a more predictable and reliable system, enhancing the overall operational efficiency of the organization.

Automated Data Loading Process Benefits

Automating data loading offers numerous advantages. Faster processing times translate directly into cost savings and improved turnaround times for HR-related tasks and content updates. Automated validation reduces errors, leading to cleaner data and better decision-making based on accurate information. Increased data integrity minimizes the risk of compliance issues and improves the overall reliability of the system. Furthermore, the freeing up of human resources allows staff to focus on more strategic and value-added tasks.

A well-implemented automated system allows for more frequent data updates, leading to more timely insights and better responsiveness to changing business needs.

Step-by-Step Guide for Simplified Data Loading with Workload Automation

This guide outlines the steps to simplify data loading using workload automation tools in conjunction with Oracle UCM and HCM data loaders. Remember to tailor these steps to your specific environment and requirements.

First, a thorough understanding of your data sources and target systems is paramount. This includes identifying data transformations required and defining clear success criteria. Second, you’ll configure your workload automation tool to interact with the UCM and HCM data loaders, scheduling tasks and defining dependencies. Finally, thorough testing and monitoring are essential to ensure the smooth and reliable operation of the automated process.

| Task | Responsible Party | Timeline | Potential Issues |
|---|---|---|---|
| Data Source Preparation and Validation | Data Analyst/DBA | 1-2 days | Data inconsistencies, missing data, data type mismatches |
| Workload Automation Tool Configuration | System Administrator | 2-3 days | Incorrect configurations, connectivity issues, scheduling conflicts |
| UCM/HCM Data Loader Configuration | Data Integration Specialist | 1-2 days | Incorrect mapping, data transformation errors, plugin compatibility issues |
| Testing and Validation | QA Team | 2-3 days | Data integrity issues, performance bottlenecks, unexpected errors |
| Deployment and Monitoring | System Administrator | 1 day | Unexpected downtime, performance degradation, monitoring tool failures |

Handling Large Datasets and Complex Transformations

Tackling large datasets and intricate data transformations when loading data into Oracle UCM and HCM using data loaders and workload automation requires a strategic approach. Efficiency and data integrity are paramount, and careful planning can prevent significant delays and errors. This section details strategies for optimizing this process.

Efficiently loading large datasets involves breaking down the process into manageable chunks.

Instead of attempting to load everything at once, consider using a batch processing approach. This involves dividing the dataset into smaller, more easily processed subsets. Each batch can then be loaded sequentially, allowing for better monitoring and error handling. This also reduces the overall memory footprint of the process, preventing potential system crashes or performance degradation. Furthermore, parallel processing, where multiple batches are loaded concurrently, can significantly reduce overall load time, provided your infrastructure supports it.

Careful consideration of database resources and network bandwidth is crucial for optimal performance.

Batch Processing Strategies

Batch processing offers a robust method for managing large datasets. The size of each batch should be carefully determined based on available system resources and the complexity of the transformations involved. Smaller batches offer greater control and easier error recovery, but increase the overall number of processing cycles. Larger batches can be faster but demand more resources and pose a greater risk if errors occur.

Testing and iterative refinement are key to identifying the optimal batch size for a specific environment and dataset. For example, a batch size of 10,000 records might be suitable for a system with ample resources and relatively simple transformations, while a smaller batch size of 1,000 records might be more appropriate for a resource-constrained environment or a dataset requiring complex transformations.
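To make the batch-size trade-off concrete, here is a minimal batching helper. It is a generic sketch that assumes records arrive as an iterable of dictionaries; the function name `split_into_batches` is illustrative and not part of any Oracle tooling.

```python
from itertools import islice
from typing import Iterable, Iterator


def split_into_batches(records: Iterable[dict], batch_size: int) -> Iterator[list[dict]]:
    """Yield successive batches of at most batch_size records."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    iterator = iter(records)
    # islice pulls the next batch_size items; an empty list ends the loop.
    while batch := list(islice(iterator, batch_size)):
        yield batch
```

Because the helper is a generator, only one batch is held in memory at a time, so trying a batch size of 1,000 versus 10,000 is a one-argument change while you measure which works best.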

Complex Data Transformation Techniques

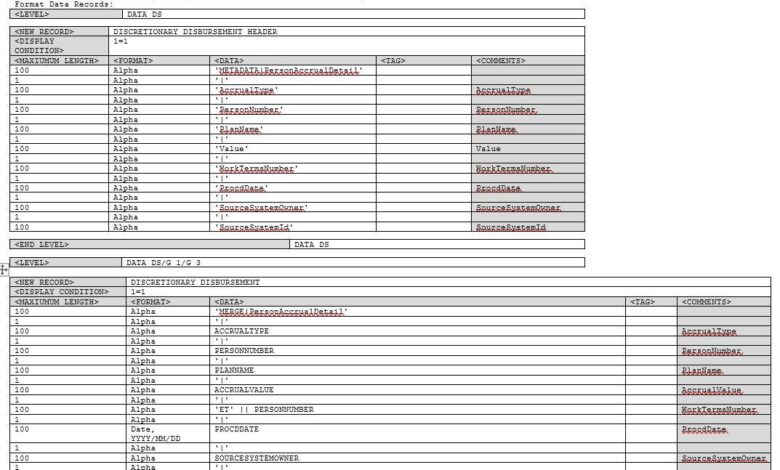

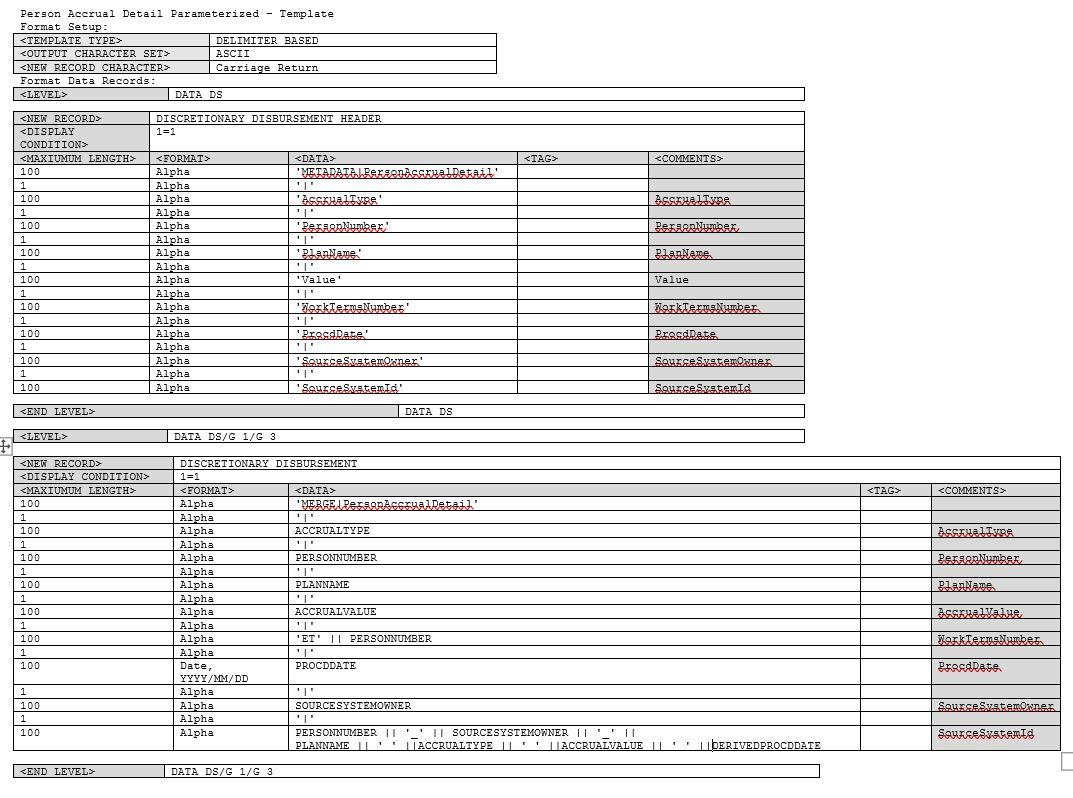

Handling complex transformations requires a structured approach. This often involves using scripting languages like PL/SQL or external ETL (Extract, Transform, Load) tools to perform the necessary manipulations. These tools provide functionalities for data cleansing, validation, and enrichment. For example, a complex transformation might involve merging data from multiple sources, applying complex business rules, or reformatting data to conform to the target system’s requirements.

Using a staging area within the database allows for intermediate data storage and manipulation, simplifying the transformation process and improving overall performance. This staging area enables the use of database features like stored procedures and functions to perform complex calculations or data manipulations before the final load into the UCM or HCM systems.

Data Validation Techniques for Ensuring Data Integrity

Data validation is critical to maintaining data integrity. This involves implementing checks at various stages of the loading process to ensure data accuracy and consistency. These checks can include range checks, format checks, and cross-referencing with other data sources. Data validation should be implemented both at the source and target systems. For instance, you can check if a date field is within a valid range, verify that a phone number adheres to a specific format, or confirm that a foreign key exists in a related table.

Automated validation procedures are preferred, minimizing manual intervention and ensuring consistency. The use of checksums or hash values can further improve the validation process by comparing the source and target data to ensure that no data is lost or corrupted during the transfer. Comprehensive logging of validation results is also important for troubleshooting and auditing purposes.
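The checks mentioned above (a date range check, a phone format check, a reference lookup, and a checksum comparison) might look like the following. Everything specific here is an assumption for illustration: the field names, the accepted phone pattern, and the earliest allowed hire date would all come from your own data rules.

```python
import hashlib
import re
from datetime import date

# Assumed format: optional leading +, then 7 to 15 digits.
PHONE_PATTERN = re.compile(r"^\+?[0-9]{7,15}$")


def validate_record(record: dict, known_departments: set[str]) -> list[str]:
    """Return a list of validation errors; an empty list means the record passed."""
    errors = []
    try:
        hired = date.fromisoformat(record["hire_date"])
        if not date(1950, 1, 1) <= hired <= date.today():
            errors.append("hire_date out of range")
    except ValueError:
        errors.append("hire_date not ISO formatted")
    if not PHONE_PATTERN.match(record["phone"]):
        errors.append("phone format invalid")
    if record["department"] not in known_departments:
        errors.append("unknown department")
    return errors


def dataset_checksum(rows: list[dict]) -> str:
    """Order-independent SHA-256 digest for comparing source and loaded data."""
    digest = hashlib.sha256()
    lines = sorted("|".join(f"{k}={row[k]}" for k in sorted(row)) for row in rows)
    for line in lines:
        digest.update(line.encode("utf-8") + b"\n")
    return digest.hexdigest()
```

Computing `dataset_checksum` on the extracted rows and again on rows read back after the load gives a quick equality test; it only tells you that something differs, so a mismatch still needs a row-level comparison to locate the problem.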

Identifying and Addressing Bottlenecks in the Data Loading Process

Potential bottlenecks in the data loading process can include network latency, database performance, insufficient processing power, or inefficient transformation logic. Careful monitoring of resource utilization (CPU, memory, disk I/O, network bandwidth) throughout the loading process is essential for identifying bottlenecks. Performance monitoring tools can help pinpoint the source of slowdowns. Solutions may involve optimizing database queries, upgrading hardware, improving transformation logic, or adjusting batch sizes.

For example, a slow network connection might necessitate optimizing data transfer methods, while a database performance bottleneck could be addressed by adding indexes or optimizing table structures. Regularly reviewing and refining the data loading process based on performance monitoring data is crucial for long-term efficiency.

Error Handling and Monitoring

Robust error handling and comprehensive monitoring are crucial for ensuring the smooth and reliable execution of data loading processes using Oracle UCM and HCM Data Loaders integrated with workload automation. Without these safeguards, even minor glitches can snowball into significant data inconsistencies or complete job failures, leading to delays and potential data loss. This section details best practices for building a resilient and observable data loading system.

Effective error handling involves anticipating potential issues and implementing mechanisms to capture, log, and respond to them appropriately.

A comprehensive error handling mechanism within a workload automation framework allows for automated responses, minimizing manual intervention and maximizing efficiency. This includes not only identifying errors but also attempting to recover from them where possible, or at least gracefully shutting down the process to prevent further damage.

Error Handling Best Practices

Implementing a layered approach to error handling is key. This involves checking for errors at various stages of the data loading process – from file validation and data transformation to database insertion. For instance, before processing a data file, the system should validate its structure and content against predefined schemas or rules. During the transformation phase, data type mismatches or invalid values should be detected and handled.

Finally, database errors such as unique constraint violations or data integrity issues should be captured and reported. A combination of try-catch blocks in the code, along with database triggers and constraints, can effectively manage these errors.

Designing a Comprehensive Error Handling Mechanism

A well-designed error handling mechanism within the workload automation system should involve several key components. First, a centralized error logging system should record all errors, including timestamps, error messages, and relevant context such as the affected record or file. This allows for efficient troubleshooting and analysis. Second, the system should implement different levels of error handling: for minor errors, it might attempt automatic correction or logging; for critical errors, it might trigger alerts and potentially halt the entire job.

Finally, the system should provide mechanisms for retrying failed operations, perhaps with exponential backoff to avoid overwhelming the system. This might involve automatically resubmitting failed tasks after a delay, or triggering a manual review process for complex errors.
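Retrying with exponential backoff is straightforward to sketch. The example below assumes that retryable failures are signalled by a custom exception, here called `TransientLoadError` (a hypothetical name); anything else is treated as a critical error and propagates immediately. The `sleep` parameter exists only so the delay can be replaced in tests.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientLoadError(Exception):
    """Hypothetical marker for failures worth retrying, such as a dropped connection."""


def retry_with_backoff(
    operation: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call operation, doubling the wait after each transient failure."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except TransientLoadError:
            if attempt == max_attempts:
                raise  # retries exhausted: let the scheduler mark the job failed
            sleep(base_delay * 2 ** (attempt - 1))  # waits 1s, 2s, 4s, ...
```

Many workload automation tools offer their own retry settings; a code-level retry like this is most useful for short-lived failures inside a single step, leaving job-level reruns to the scheduler.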

Monitoring Data Loading Jobs and Generating Alerts

Monitoring is as important as error handling. The workload automation tool should provide real-time visibility into the progress of data loading jobs. This includes monitoring job status (e.g., running, completed, failed), processing time, and the number of records processed. Critical issues, such as prolonged processing times, high error rates, or job failures, should trigger automated alerts via email, SMS, or other notification channels.

Dashboards visualizing key performance indicators (KPIs) such as processing speed, error rates, and overall job success rates can provide valuable insights into the efficiency and reliability of the data loading process. These dashboards should allow for easy identification of trends and potential bottlenecks.
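The alerting rules described above boil down to comparing a few job metrics against thresholds. The following sketch shows one way to express that; the `JobMetrics` fields and the default thresholds (a 2% error rate and a 60-minute runtime) are assumptions for illustration, and delivery by email or SMS is left to whatever notification channel your tool provides.

```python
from dataclasses import dataclass


@dataclass
class JobMetrics:
    status: str             # "running", "completed", or "failed"
    records_processed: int
    records_failed: int
    elapsed_minutes: float


def evaluate_alerts(
    metrics: JobMetrics, max_error_rate: float = 0.02, max_minutes: float = 60.0
) -> list[str]:
    """Return human-readable alerts for every threshold the job has crossed."""
    alerts = []
    if metrics.status == "failed":
        alerts.append("job failed")
    if metrics.records_processed > 0:
        error_rate = metrics.records_failed / metrics.records_processed
        if error_rate > max_error_rate:
            alerts.append(f"error rate {error_rate:.1%} exceeds {max_error_rate:.1%}")
    if metrics.elapsed_minutes > max_minutes:
        alerts.append(f"ran {metrics.elapsed_minutes:.0f} minutes, limit {max_minutes:.0f}")
    return alerts
```

Keeping the rules in one pure function makes the thresholds easy to review and test separately from the notification plumbing.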

Utilizing Logging Mechanisms for Progress Tracking and Problem Identification

Effective logging is essential for tracking data loading progress and pinpointing potential problems. Logs should provide detailed information about each stage of the process, including timestamps, data volumes processed, errors encountered, and the actions taken to address those errors. Comprehensive logging enables thorough post-mortem analysis, facilitating the identification of root causes of errors and the implementation of preventative measures.

Key Logging Strategies:

- Structured Logging: Use a standardized format (e.g., JSON) to ensure consistent and easily parsable log entries.

- Centralized Logging: Aggregate logs from various sources into a central repository for easier monitoring and analysis.

- Log Levels: Implement different log levels (e.g., DEBUG, INFO, WARNING, ERROR) to filter and prioritize log messages.

- Contextual Logging: Include relevant context such as user IDs, transaction IDs, and affected records in log entries.
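The strategies listed above can be combined with Python's standard `logging` module. This is a minimal sketch: the context field names `job_id` and `batch` are assumptions, and shipping the resulting lines to a central repository is left to your log collector.

```python
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "time": self.formatTime(record),
            "level": record.levelname,
            "message": record.getMessage(),
            # Context arrives through logging's `extra` argument; field names are assumed.
            "job_id": getattr(record, "job_id", None),
            "batch": getattr(record, "batch", None),
        }
        return json.dumps(entry)


def build_logger(name: str, stream=None) -> logging.Logger:
    """Create a logger that emits INFO and above as JSON lines."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)  # DEBUG messages are filtered out
    handler = logging.StreamHandler(stream)  # defaults to stderr when stream is None
    handler.setFormatter(JsonFormatter())
    logger.handlers = [handler]
    logger.propagate = False
    return logger
```

A call such as `build_logger("loader").error("batch rejected", extra={"job_id": "J1", "batch": 3})` then produces a single parsable line carrying both the message and its context.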

Security Considerations

Data loading processes, while crucial for maintaining up-to-date business information, present significant security vulnerabilities if not handled carefully. Protecting sensitive data during these operations is paramount, requiring a multi-layered approach encompassing access control, encryption, and robust monitoring. Neglecting these aspects can lead to data breaches, regulatory non-compliance, and significant financial losses.

Implementing robust security measures during data loading is not merely a best practice; it’s a necessity.

The following sections detail key strategies for securing your data throughout the entire loading lifecycle, from initial data acquisition to final storage within your Oracle UCM and HCM systems.

Access Control Implementation

Implementing granular access control is foundational to data security during loading. This involves restricting access to sensitive data based on the principle of least privilege. Only authorized personnel and processes should have permission to access, modify, or delete data during the loading process. This can be achieved through role-based access control (RBAC) within your workload automation tool and Oracle’s security frameworks.

For example, a data loader user should only have permissions to execute the data loading scripts and not broader access to the database or file systems. Regular audits of user permissions are essential to ensure compliance and identify any potential security gaps. This proactive approach prevents unauthorized individuals from manipulating or stealing sensitive information.

Data Encryption in Transit and at Rest

Protecting data both during transmission (in transit) and when stored (at rest) is crucial. Data encryption uses algorithms to transform data into an unreadable format, making it incomprehensible to unauthorized parties. For data in transit, utilizing secure protocols like HTTPS or SFTP is essential. These protocols encrypt data as it travels between the source and the target systems.

For data at rest, employing database encryption features within Oracle databases, or using file-level encryption for data stored on file servers, is vital. This ensures that even if an attacker gains access to the storage system, the data remains protected. Consider using strong encryption algorithms like AES-256 for optimal protection. For example, encrypting HCM employee data at rest prevents unauthorized access to sensitive personal information even if the database server is compromised.

Mitigation Strategies for Potential Security Risks

Several potential security risks exist during data loading. Malicious code injection, for instance, could occur if data is not properly sanitized before loading. This could allow attackers to execute arbitrary code within the target system. To mitigate this, implement strict input validation and sanitization procedures. Another risk is data leakage through improperly configured logging or error handling mechanisms.

Sensitive data should never be logged in plain text. Instead, utilize anonymization or tokenization techniques. Regular security audits and penetration testing should be performed to identify and address vulnerabilities proactively. Implementing robust logging and monitoring allows for timely detection and response to security incidents. For instance, monitoring for unusual login attempts or data access patterns can help identify potential attacks early on.
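One way to keep sensitive values out of logs is to replace them with keyed tokens before a record is ever written. The sketch below uses HMAC-SHA-256 from the standard library; the set of sensitive field names is an assumption, and in practice the secret key would come from a secrets manager rather than from source code.

```python
import hashlib
import hmac

# Assumed names of fields that must never appear in logs in plain text.
SENSITIVE_FIELDS = {"national_id", "salary", "bank_account"}


def tokenize(value: str, secret_key: bytes) -> str:
    """Return a keyed, non-reversible token so the same value stays correlatable."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def redact_for_logging(record: dict, secret_key: bytes) -> dict:
    """Copy a record, replacing sensitive fields with tokens."""
    return {
        key: tokenize(str(value), secret_key) if key in SENSITIVE_FIELDS else value
        for key, value in record.items()
    }
```

Because the same input always maps to the same token under a given key, support staff can still trace one employee's record across log lines without seeing the underlying value.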

Performance Optimization

Optimizing the performance of your Oracle UCM and HCM data loading jobs is crucial for maintaining efficient operations and minimizing downtime. Slow data loading can significantly impact business processes, so understanding and implementing performance optimization strategies is essential. This section explores several techniques to boost the speed and efficiency of your data loading processes.

Several factors contribute to the overall performance of data loading. These include the size and complexity of the data, the efficiency of data transformations, the performance of the database, and the effectiveness of the workload automation tools. Addressing each of these areas can lead to significant improvements.

Data Transformation Optimization

Efficient data transformation is key to fast data loading. Inefficient transformations can create bottlenecks, slowing down the entire process. Optimizing transformations involves several strategies. For instance, using optimized SQL queries within the data loader plugins can drastically reduce processing time. Pre-processing data to remove unnecessary fields or consolidate data before loading can also significantly improve performance.

Additionally, leveraging parallel processing capabilities within the transformation logic can further accelerate the process, especially when dealing with large datasets. For example, instead of processing each record sequentially, consider partitioning the data and processing each partition concurrently.

Database Settings Tuning

Database settings play a crucial role in data loading performance. Properly configuring database parameters can significantly impact the speed and efficiency of data insertion and updates. Increasing the database buffer cache size can reduce disk I/O operations, leading to faster loading times. Adjusting the size of the shared pool, which caches frequently used SQL statements, can also improve performance.

Furthermore, ensuring sufficient resources, such as CPU and memory, are allocated to the database instance is critical. Regularly monitoring database performance metrics, such as I/O wait times and CPU utilization, helps identify areas for improvement. For example, if I/O wait times are consistently high, increasing the database buffer cache size may be beneficial.

Identifying and Addressing Performance Bottlenecks

Identifying bottlenecks in the data loading process is essential for effective optimization. This often involves analyzing the performance of individual components, such as the data loader plugins, the database, and the workload automation tool. Tools like database monitoring utilities and profiling tools can help pinpoint performance bottlenecks. Once identified, appropriate actions can be taken. For instance, if a specific transformation step is consuming excessive time, optimizing the transformation logic, as discussed above, can resolve the issue.

If database I/O is a bottleneck, adjusting database settings as described earlier may be the solution. Similarly, if the workload automation tool is a bottleneck, it might be necessary to upgrade the tool or optimize its configuration.

Handling Large Datasets

Loading large datasets requires specialized techniques to maintain performance. Chunking the data into smaller, manageable batches can significantly reduce memory consumption and improve processing speed. This approach avoids overwhelming the system with a massive amount of data at once. Furthermore, employing parallel processing techniques, as previously mentioned, is crucial for handling large datasets efficiently. By processing multiple chunks concurrently, the overall loading time can be reduced substantially.

For example, a dataset of 10 million records could be divided into 100 chunks of 100,000 records each, and each chunk could be processed in parallel.
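The chunk-and-parallelize idea can be sketched with Python's `concurrent.futures`. Here `load_chunk` is a stand-in for the real load call and simply counts records; the worker count of 4 is an arbitrary assumption that should be tuned to what your database and network can sustain.

```python
from concurrent.futures import ThreadPoolExecutor


def load_chunk(chunk: list[int]) -> int:
    """Placeholder for the real load call; returns the number of records handled."""
    return len(chunk)


def load_in_parallel(records: list[int], chunk_size: int, max_workers: int = 4) -> int:
    """Split records into chunks, load them concurrently, and return the total loaded."""
    chunks = [records[i:i + chunk_size] for i in range(0, len(records), chunk_size)]
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves chunk order and re-raises any worker exception here.
        return sum(pool.map(load_chunk, chunks))
```

Threads suit this case because a load step spends most of its time waiting on the network or the database; comparing the returned total with the source record count is a cheap completeness check.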

Final Thoughts

Mastering data loading in Oracle UCM and HCM doesn’t have to be a battle. By leveraging the power of workload automation and implementing the strategies discussed here, you can significantly simplify your processes, reduce errors, and improve overall efficiency. Remember, the key is to automate wherever possible, implement robust error handling, and prioritize security. Take control of your data loading, and reclaim your time and sanity! Now go forth and conquer those data loads!

Frequently Asked Questions

What are the common causes of data loading failures?

Common causes include data integrity issues (invalid data formats, missing values), network problems, insufficient database resources, and incorrect configuration of the data loaders or workload automation tools.

How do I choose the right workload automation tool?

Consider factors like scalability, ease of integration with Oracle applications, cost, and the specific features you need (e.g., scheduling, monitoring, error handling). Research different options and evaluate their suitability for your environment.

What are the security implications of automated data loading?

Automated data loading increases the risk of unauthorized access or data breaches if not properly secured. Implement strong access controls, data encryption (both in transit and at rest), and regular security audits.

How can I monitor the performance of my data loading jobs?

Use built-in monitoring tools provided by the workload automation software, and consider implementing custom logging and alerting mechanisms to track job progress, identify bottlenecks, and receive notifications about critical issues.