Software Delivery Has Just Grown Up

Software delivery has just grown up, and it’s about time! Gone are the days of lengthy waterfall processes and unpredictable deployments. Today, we’re witnessing a revolution driven by automation, collaboration, and a relentless focus on speed and quality. This evolution isn’t just about faster releases; it’s about building more robust, secure, and ultimately, more valuable software.

This post dives deep into the transformative journey of software delivery, exploring the key milestones, technological advancements, and cultural shifts that have redefined how we build and deploy software. From the rise of agile and DevOps to the adoption of cloud-native architectures and sophisticated monitoring tools, we’ll unpack the factors that have propelled software delivery into a mature and sophisticated discipline.

The Evolution of Software Delivery

Software delivery has undergone a dramatic transformation, evolving from cumbersome, slow processes to streamlined, efficient systems. This evolution reflects a broader shift in software development philosophies, driven by the need for faster release cycles, increased customer satisfaction, and improved overall product quality. We’ve moved from monolithic approaches to highly agile and automated systems, a journey marked by significant technological advancements.

Stages in the Evolution of Software Delivery

The journey of software delivery can be broadly categorized into several key stages. Initially, the waterfall methodology reigned supreme. This sequential approach, with distinct phases (requirements, design, implementation, testing, deployment, maintenance), proved rigid and inflexible, often leading to delays and costly rework. The limitations of waterfall spurred the adoption of agile methodologies, emphasizing iterative development, collaboration, and frequent feedback.

Agile approaches like Scrum and Kanban enabled faster iterations and quicker adaptation to changing requirements. The next major leap was the emergence of DevOps, which extended agile principles to encompass the entire software delivery lifecycle, bridging the gap between development and operations teams. DevOps emphasizes automation, continuous integration and continuous delivery (CI/CD), and a culture of collaboration and shared responsibility.

This integrated approach dramatically accelerates the delivery process while improving quality and reliability.

Comparison of Traditional and Modern Software Delivery Practices

Traditional software delivery, exemplified by the waterfall method, was characterized by lengthy release cycles, limited customer feedback, and significant risk associated with late-stage defect detection. Changes were costly and time-consuming to implement. Modern approaches, built on agile and DevOps principles, contrast sharply. They prioritize shorter development cycles, continuous feedback loops, and automated testing, leading to faster time-to-market, improved product quality, and reduced risk.

The emphasis on collaboration and shared responsibility fosters a more efficient and responsive development process. For example, a traditional release might take months or even years, while a modern DevOps-driven release can happen multiple times a day.

Technologies and Tools Driving the Evolution

Several technologies and tools have been instrumental in driving this evolution. The following table summarizes some key players and their impact:

| Technology | Description | Benefits | Drawbacks |
|---|---|---|---|
| CI/CD Pipelines | Automated systems for building, testing, and deploying software. | Increased speed and frequency of releases, improved quality through automated testing, reduced manual effort. | Requires significant upfront investment in infrastructure and tooling, complexity in managing pipelines for large projects. |
| Containerization and Orchestration (Docker, Kubernetes) | Packaging applications and their dependencies into isolated units for consistent execution across different environments, and orchestrating those units at scale. | Improved portability, scalability, and consistency of applications, easier deployment and management of microservices. | Learning curve for developers and operations teams, potential for increased complexity in managing containerized environments. |
| Cloud Computing (AWS, Azure, GCP) | On-demand access to computing resources, including servers, storage, and networking. | Increased scalability and flexibility, reduced infrastructure costs, improved availability and reliability. | Vendor lock-in, potential security concerns, dependence on internet connectivity. |
| Infrastructure as Code (Terraform, Ansible) | Managing and provisioning infrastructure through code, enabling automation and reproducibility. | Improved consistency and repeatability of infrastructure deployments, reduced manual errors, faster infrastructure provisioning. | Requires familiarity with each tool’s configuration language (e.g., Terraform’s HCL or Ansible’s YAML playbooks), potential for complex configurations, managing state can be challenging. |
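The "managing state" point in the Infrastructure as Code row is easier to see with a toy model. Declarative IaC tools compare the desired state described in code with the actual state of the infrastructure and compute the changes needed to reconcile them, which is the idea behind Terraform's `plan` step. The sketch below is a minimal Python illustration of that diff, assuming resources are plain dictionaries of attributes; the resource names and the `plan` function are hypothetical and not any tool's real API.

```python
from typing import Dict, List, Tuple

# Hypothetical model: resource name -> attributes. Real IaC tools track far
# richer state (IDs, dependencies, provider metadata).
Resources = Dict[str, Dict[str, str]]


def plan(desired: Resources, actual: Resources) -> List[Tuple[str, str]]:
    """Diff desired state (from code) against actual state.

    Returns (action, resource) pairs in a stable, sorted order.
    """
    actions: List[Tuple[str, str]] = []
    for name in sorted(desired.keys() | actual.keys()):
        if name not in actual:
            actions.append(("create", name))
        elif name not in desired:
            actions.append(("delete", name))
        elif desired[name] != actual[name]:
            actions.append(("update", name))
    return actions


desired = {
    "web-server": {"size": "medium", "region": "eu-west"},
    "db": {"size": "large", "region": "eu-west"},
}
actual = {
    "web-server": {"size": "small", "region": "eu-west"},
    "old-cache": {"size": "small", "region": "eu-west"},
}

for action, name in plan(desired, actual):
    print(action, name)
# create db
# delete old-cache
# update web-server
```

Because the plan is computed from state rather than from a list of imperative steps, running it again once the changes are applied yields an empty plan, which is what makes IaC deployments repeatable.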

Increased Focus on Automation

The modern software delivery landscape is fundamentally reshaped by automation. Manual, error-prone processes are giving way to automated workflows: successful software delivery now relies heavily on automating repetitive tasks, streamlining workflows, and ensuring consistent, high-quality releases. This shift not only accelerates the delivery process but also enhances reliability and reduces costs.

Automation’s impact on software delivery is multifaceted.

It allows teams to focus on higher-value activities like design, innovation, and problem-solving, rather than getting bogged down in tedious, error-prone manual steps. This increased efficiency translates directly into faster time-to-market, a crucial competitive advantage in today’s rapidly evolving digital world. Furthermore, by reducing human intervention, automation minimizes the risk of human error, leading to more reliable and stable software releases.

Finally, the cost savings achieved through automation are substantial, encompassing reduced labor costs, improved resource utilization, and fewer costly production errors.

Automation Tools and Techniques Across the SDLC

Various tools and techniques support automation throughout the software development lifecycle (SDLC). Early stages, like planning and requirements gathering, might leverage tools that automatically generate documentation or track progress. In the development phase, CI/CD pipelines, built using tools like Jenkins, GitLab CI, or CircleCI, automate the build, testing, and deployment processes. Testing itself is heavily automated with frameworks such as Selenium, JUnit, and pytest, broadening test coverage and shortening feedback loops.

Deployment automation often involves infrastructure-as-code (IaC) tools like Terraform or Ansible, enabling the consistent and repeatable provisioning of infrastructure. Finally, monitoring tools like Prometheus (metrics collection and alerting) and Grafana (dashboards and visualization) provide automated alerts and insights into application performance, facilitating proactive issue resolution.
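Whatever the tool, a CI/CD pipeline boils down to running ordered stages and stopping as soon as one fails, so a broken build never reaches deployment. The Python sketch below shows only that fail-fast control flow; the stage names and commands are placeholders, and real systems like Jenkins, GitLab CI, or CircleCI define stages in their own configuration formats rather than in code like this.

```python
import subprocess
import sys
from typing import List, Tuple

# Hypothetical pipeline definition: (stage name, command to run).
Stage = Tuple[str, List[str]]


def run_pipeline(stages: List[Stage]) -> bool:
    """Run stages in order and stop at the first failure (fail fast)."""
    for name, command in stages:
        print(f"--> {name}")
        result = subprocess.run(command)
        if result.returncode != 0:
            print(f"stage '{name}' failed with exit code {result.returncode}")
            return False  # later stages (e.g. deploy) never run
    print("pipeline succeeded")
    return True


if __name__ == "__main__":
    py = sys.executable  # the current interpreter keeps the demo portable
    run_pipeline([
        ("build", [py, "-c", "print('building...')"]),
        ("test", [py, "-c", "assert 1 + 1 == 2"]),
        ("deploy", [py, "-c", "print('deploying to staging')"]),
    ])
```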

Impact of Automation on Software Development

The impact of automation is far-reaching, affecting nearly every aspect of software development.

- Testing: Automated testing significantly reduces testing time and increases test coverage, leading to higher quality software and fewer bugs in production.

- Deployment: Automated deployments minimize downtime and ensure consistent deployments across different environments, reducing the risk of human error and deployment failures.

- Monitoring: Automated monitoring tools provide real-time insights into application performance, enabling proactive issue detection and resolution, reducing Mean Time To Resolution (MTTR).

- Security: Automated security testing and vulnerability scanning help identify and address security risks early in the development process, improving the overall security posture of the application.

- Cost Reduction: Automation reduces labor costs associated with repetitive tasks, improves resource utilization, and minimizes the cost of production errors, resulting in significant overall cost savings.
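MTTR, mentioned in the monitoring point above, is the average time it takes to resolve an incident. A minimal sketch, assuming each incident is recorded as a (detected, resolved) timestamp pair; the incident data is invented for illustration, and some teams measure from incident start rather than detection.

```python
from datetime import datetime, timedelta
from typing import List, Tuple

# (detected_at, resolved_at) pairs; the incidents below are invented.
Incident = Tuple[datetime, datetime]


def mean_time_to_resolution(incidents: List[Incident]) -> timedelta:
    """Average of (resolved - detected) across all incidents."""
    if not incidents:
        raise ValueError("no incidents to average")
    total = sum((resolved - detected for detected, resolved in incidents),
                timedelta())
    return total / len(incidents)


incidents = [
    (datetime(2024, 5, 1, 9, 0), datetime(2024, 5, 1, 9, 45)),     # 45 min
    (datetime(2024, 5, 3, 14, 10), datetime(2024, 5, 3, 15, 25)),  # 75 min
    (datetime(2024, 5, 7, 22, 0), datetime(2024, 5, 7, 22, 30)),   # 30 min
]
print(mean_time_to_resolution(incidents))  # 0:50:00
```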

Enhanced Collaboration and Communication

The modern software development landscape demands more than just individual brilliance; it thrives on seamless collaboration and effective communication. Efficient software delivery is no longer a solo act but a carefully orchestrated symphony of shared knowledge, coordinated efforts, and rapid feedback loops. The shift towards collaborative practices has dramatically reduced bottlenecks, improved product quality, and accelerated time-to-market.

Improved collaboration and communication have significantly impacted software delivery efficiency by fostering a shared understanding of project goals, streamlining workflows, and minimizing misunderstandings.

Open communication channels ensure that everyone is on the same page, reducing the risk of duplicated effort and costly rework. The ability to quickly identify and resolve issues through collaborative problem-solving significantly shortens development cycles and minimizes delays. This collaborative environment also promotes a culture of continuous learning and improvement, where team members readily share their expertise and learn from each other’s experiences.

Cross-Functional Teams and Shared Responsibility

The success of modern software delivery hinges on the effectiveness of cross-functional teams. These teams bring together individuals with diverse skill sets – developers, designers, testers, product owners, and operations specialists – to work collaboratively on a shared project. This approach breaks down traditional silos, fostering a sense of shared responsibility and ownership throughout the development lifecycle. Each team member understands their role in the overall process and how their contributions directly impact the final product.

For example, a developer might actively participate in user acceptance testing to gain firsthand feedback, while a designer might contribute to sprint planning to ensure the user experience is considered from the outset. This shared understanding leads to a more holistic and efficient approach to software delivery. The collective intelligence and diverse perspectives contribute to higher quality software and a more satisfying development process.

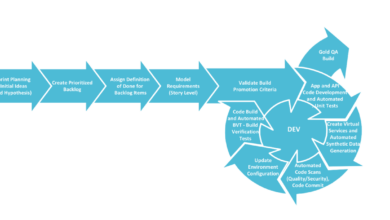

Workflow Diagram Illustrating Communication and Collaboration

Imagine a workflow diagram representing a typical sprint cycle within an agile software development team. The diagram would begin with a product backlog grooming session, where the product owner, developers, and designers collaborate to refine user stories and prioritize features. Communication here happens through shared online documents, collaborative whiteboards, and regular meetings. Next, the sprint planning meeting involves the entire team defining tasks and assigning responsibilities.

Daily stand-up meetings facilitate quick updates on progress, identify roadblocks, and coordinate tasks. Communication channels for this might include a project management tool, instant messaging, and email. During the sprint, developers work collaboratively, using version control systems and code review processes to ensure code quality. They communicate through code comments, pull requests, and online discussions. Testing and quality assurance are integrated throughout the process, with testers collaborating with developers to identify and resolve bugs.

Regular communication happens through bug tracking systems and collaborative testing sessions. Finally, the sprint review and retrospective meetings bring the entire team together to demonstrate the completed work, gather feedback, and identify areas for improvement. These sessions utilize presentations, demonstrations, and facilitated discussions. The entire process relies on a combination of synchronous and asynchronous communication, leveraging tools like project management software, instant messaging platforms, and version control systems.

The diagram would visually represent the flow of information and collaboration between these different stages and team members, highlighting the interconnectedness of each stage and the importance of continuous communication throughout the development cycle.

The Rise of Cloud-Native Development

The software delivery landscape has undergone a dramatic transformation, and at the heart of this change lies the rise of cloud-native development. This approach offers a compelling alternative to traditional on-premise deployments, promising faster release cycles, increased scalability, and improved resilience. By embracing cloud-native principles, organizations can significantly enhance their ability to deliver high-quality software that meets the demands of today’s dynamic market.

Cloud-native development leverages the capabilities of cloud platforms to build and deploy applications that are scalable, resilient, and easy to manage.

This contrasts sharply with traditional on-premise deployments, which often involve complex infrastructure management and slower release cycles. The shift to cloud-native has been driven by the need for agility and efficiency in software development and deployment, allowing businesses to respond quickly to market changes and customer demands.

Traditional vs. Cloud-Native Deployments

Traditional on-premise deployments involve installing and managing software on physical servers within an organization’s own data center. This approach offers a high degree of control but can be expensive, time-consuming, and inflexible. Scaling applications requires significant upfront investment and planning. Cloud-native deployments, on the other hand, run on cloud infrastructure (like AWS, Azure, or GCP) and rely on practices such as Infrastructure as Code (IaC) and containerization to manage and scale applications dynamically.

This approach offers greater flexibility, scalability, and cost-effectiveness, but requires a shift in organizational culture and expertise.
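Dynamic scaling is often driven by a simple proportional rule. The Kubernetes Horizontal Pod Autoscaler, for instance, documents its core calculation as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The sketch below reimplements that rule in Python for illustration only; the min/max bounds are assumptions, and the 0.1 tolerance (which skips scaling when the metric is already close to target) mirrors the HPA's default but should be treated as an illustrative parameter.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Proportional scaling rule in the style of the Kubernetes HPA."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: avoid churn
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, current_metric=90, target_metric=60))    # 6 (scale out)
print(desired_replicas(4, current_metric=63, target_metric=60))    # 4 (within tolerance)
print(desired_replicas(4, current_metric=20, target_metric=60))    # 2 (scale in)
print(desired_replicas(10, current_metric=200, target_metric=50))  # 10 (capped)
```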

Key Architectural Patterns and Technologies

The following table summarizes some key architectural patterns and technologies associated with cloud-native development:

| Pattern/Technology | Description | Advantages | Disadvantages |
|---|---|---|---|
| Microservices | An architectural style that structures an application as a collection of small, independent services. | Increased agility, independent scalability, fault isolation, technology diversity. | Increased complexity, distributed tracing challenges, data consistency issues. |
| Serverless Functions | Event-driven functions that execute in response to triggers without the need for managing servers. | Cost-effectiveness, scalability, reduced operational overhead. | Vendor lock-in, cold starts, debugging challenges. |
| Containers (Docker) | Standardized units of software that package code and dependencies, ensuring consistent execution across different environments. | Portability, consistency, efficient resource utilization. | Security concerns if not properly managed, image size can impact performance. |
| Kubernetes | An open-source platform for automating deployment, scaling, and management of containerized applications. | Automated deployment, scaling, and management; self-healing capabilities; improved resource utilization. | Complexity, learning curve, operational overhead. |
| API Gateways | A reverse proxy that acts as a single entry point for all requests to a microservices architecture. | Improved security, traffic management, monitoring and logging capabilities. | Increased complexity, potential single point of failure. |
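To make the API gateway row concrete: at its simplest, a gateway maps incoming request paths to backend services. This sketch uses longest-prefix matching, a common but not universal routing strategy; the route table and service URLs are hypothetical, and production gateways add authentication, rate limiting, and retries on top.

```python
from typing import Dict, Optional

# Hypothetical route table: path prefix -> upstream service base URL.
ROUTES: Dict[str, str] = {
    "/api/orders": "http://orders-service:8080",
    "/api/users": "http://users-service:8080",
    "/api": "http://legacy-api:8080",
}


def route(path: str, routes: Dict[str, str] = ROUTES) -> Optional[str]:
    """Pick the upstream for a path by longest matching prefix."""
    best: Optional[str] = None
    for prefix in routes:
        # Match whole path segments so "/api/ordersx" doesn't hit "/api/orders".
        matches = path == prefix or path.startswith(prefix.rstrip("/") + "/")
        if matches and (best is None or len(prefix) > len(best)):
            best = prefix
    if best is None:
        return None  # a real gateway would respond with 404
    return routes[best] + path  # forward the full original path


print(route("/api/orders/42"))  # http://orders-service:8080/api/orders/42
print(route("/api/ordersx"))    # http://legacy-api:8080/api/ordersx
print(route("/health"))         # None
```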

Improved Monitoring and Observability

The modern software delivery landscape demands more than just functional software; it requires systems that are robust, reliable, and readily adaptable to changing conditions. This is where improved monitoring and observability play a crucial role, transforming how we understand and manage our applications in production. By providing real-time insights into system behavior, we can proactively address potential issues before they impact users, drastically reducing downtime and improving overall system health.

Advanced monitoring and observability tools offer a significant leap forward from traditional methods.

They move beyond simple alerts to provide a holistic view of the entire software stack, encompassing everything from infrastructure metrics to application performance and user experience. This comprehensive perspective allows development and operations teams to pinpoint the root cause of problems much faster, accelerating resolution times and minimizing disruption. Real-time feedback loops, facilitated by these tools, enable continuous improvement, fostering a culture of proactive problem-solving and preventative maintenance.

Real-Time Feedback and Continuous Improvement

Real-time feedback is paramount to achieving a truly agile and responsive software delivery process. Observability tools provide this feedback by constantly monitoring key metrics and generating alerts when thresholds are breached. This allows teams to immediately address issues, preventing minor problems from escalating into major outages. Furthermore, the data collected by these tools informs iterative improvements to the software itself, leading to a continuous cycle of refinement and enhancement based on actual usage patterns and performance data.

This proactive approach contrasts sharply with traditional methods that often relied on reactive problem-solving, responding to issues only after they impacted users.
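Alerting on a single noisy sample tends to produce false alarms, so threshold alerts usually require the breach to persist, similar in spirit to the `for` duration in Prometheus alerting rules. A minimal Python sketch, assuming evenly spaced samples and a hypothetical error-rate series:

```python
from typing import Iterable, List


def alert_points(samples: Iterable[float], threshold: float,
                 consecutive: int = 3) -> List[int]:
    """Indexes where an alert fires: the metric has been above `threshold`
    for `consecutive` samples in a row. A sustained breach fires once; the
    rule re-arms after the metric drops back to or below the threshold."""
    fired: List[int] = []
    streak = 0
    for i, value in enumerate(samples):
        if value > threshold:
            streak += 1
            if streak == consecutive:
                fired.append(i)
        else:
            streak = 0
    return fired


# Hypothetical error-rate samples (%), one per minute.
error_rate = [0.5, 2.1, 2.4, 0.7, 2.2, 2.5, 3.0, 3.1, 0.4]
print(alert_points(error_rate, threshold=2.0))  # [6]: the short blip at 1-2 is ignored
```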

Examples of Metrics and Dashboards

The effectiveness of monitoring and observability hinges on the right metrics and their effective visualization. Dashboards aggregate key performance indicators (KPIs) into easily digestible formats, offering a high-level overview of system health. Here are some examples of metrics commonly tracked:

- Application Performance Metrics: These include response times, error rates, throughput, and resource utilization (CPU, memory, disk I/O). A dashboard might show a graph of average response times over time, highlighting any sudden spikes or trends. For example, a sudden increase in response time might indicate a database query performance issue.

- Infrastructure Metrics: These metrics track the health and performance of underlying infrastructure components such as servers, networks, and databases. Examples include CPU utilization, memory usage, network latency, and disk space. A dashboard might display the CPU utilization of each server in a cluster, alerting on high utilization levels that could indicate resource constraints.

- Log Aggregation and Analysis: Centralized log management systems collect logs from various sources, allowing for efficient searching and analysis. Dashboards can visualize log events, highlighting errors and exceptions. For example, a dashboard could show the number of error logs per application component, aiding in pinpointing problematic areas.

- User Experience Metrics: Tracking user experience metrics, such as page load times, bounce rates, and conversion rates, provides valuable insights into the impact of application performance on user satisfaction. A dashboard could display the number of users experiencing slow page loads, indicating a need for performance optimization.
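The application performance metrics above are straightforward to compute from raw request samples. This sketch derives a median and 95th-percentile latency plus an error rate (counting HTTP 5xx responses as errors, an assumption) from made-up data. It uses the nearest-rank percentile method for simplicity; monitoring systems such as Prometheus typically estimate percentiles from histogram buckets instead.

```python
import math
from typing import Dict, List, Tuple

# (latency in ms, HTTP status code) per request; sample data is made up.
Request = Tuple[float, int]


def percentile(values: List[float], pct: int) -> float:
    """Nearest-rank percentile: smallest sample with pct% of samples at or below it."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


def summarize(samples: List[Request]) -> Dict[str, float]:
    latencies = [latency for latency, _ in samples]
    errors = sum(1 for _, status in samples if status >= 500)
    return {
        "requests": len(samples),
        "p50_ms": percentile(latencies, 50),
        "p95_ms": percentile(latencies, 95),
        "error_rate": errors / len(samples),
    }


samples = [(float(ms), 200) for ms in range(10, 190, 10)]  # 18 successes
samples += [(190.0, 500), (200.0, 503)]                     # 2 slow failures
print(summarize(samples))
# {'requests': 20, 'p50_ms': 100.0, 'p95_ms': 190.0, 'error_rate': 0.1}
```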

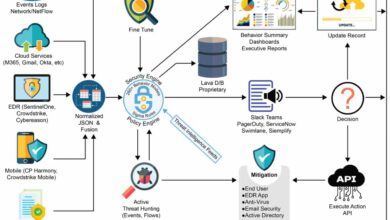

Security as a First-Class Citizen

The days of tacking security onto a software project as an afterthought are long gone. In today’s landscape, security is no longer a separate concern; it’s fundamentally woven into the fabric of the entire software delivery lifecycle. From initial design to deployment and beyond, a robust security posture is paramount, impacting everything from project timelines and budgets to brand reputation and user trust.

Security is now deeply integrated into every phase, demanding a proactive and holistic approach.

This shift reflects the growing understanding that vulnerabilities discovered late in the process are far more expensive and difficult to fix. A proactive security strategy, integrated from the start, leads to more secure, reliable, and ultimately, successful software.

Security Practices and Tools Throughout the Software Development Lifecycle

Implementing comprehensive security requires a multi-layered approach encompassing various practices and tools. These tools and practices work together to protect software at each stage of its journey, from conception to retirement. Gaps between them can let vulnerabilities slip through undetected, which is why a cohesive security strategy matters.

- Secure Design Principles: Building security into the architecture from the outset, rather than adding it later, is crucial. This involves employing principles like least privilege, input validation, and secure coding practices. For example, a well-designed system might use role-based access control (RBAC) to restrict user permissions to only what’s necessary for their tasks.

- Static and Dynamic Application Security Testing (SAST/DAST): SAST analyzes code without execution, identifying potential vulnerabilities early in the development cycle. DAST, on the other hand, tests the running application to discover vulnerabilities that might not be apparent in the source code. These automated tests can significantly reduce the risk of deploying vulnerable software.

- Software Composition Analysis (SCA): SCA tools scan the software’s dependencies (libraries, frameworks) for known vulnerabilities. This is vital because many vulnerabilities stem from outdated or insecure third-party components. Regular SCA scans help ensure that dependencies with known vulnerabilities are flagged and upgraded promptly.

- Security Automation: Integrating security tools and practices into the CI/CD pipeline ensures that security checks are automated and performed consistently at every stage. This can include automated security scans, vulnerability assessments, and penetration testing.

- Penetration Testing: Simulating real-world attacks to identify vulnerabilities that might have been missed by other security measures. This provides a valuable assessment of the software’s overall security posture and helps identify weaknesses before malicious actors can exploit them. For example, a penetration test might uncover a SQL injection flaw in a checkout form, giving the team a chance to fix it before attackers find it.
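The role-based access control mentioned under secure design principles can be sketched in a few lines. The roles and permission strings below are hypothetical; the point is the least-privilege shape: access is denied unless a role explicitly grants it, and unknown roles grant nothing.

```python
from typing import Dict, FrozenSet, Iterable

# Hypothetical role -> permission mapping.
ROLE_PERMISSIONS: Dict[str, FrozenSet[str]] = {
    "viewer": frozenset({"orders:read"}),
    "support": frozenset({"orders:read", "orders:refund"}),
    "admin": frozenset({"orders:read", "orders:refund", "users:manage"}),
}


def is_allowed(roles: Iterable[str], permission: str) -> bool:
    """Deny by default: allow only if some role explicitly grants the permission."""
    return any(permission in ROLE_PERMISSIONS.get(role, frozenset())
               for role in roles)


print(is_allowed({"support"}, "orders:refund"))  # True
print(is_allowed({"viewer"}, "orders:refund"))   # False
print(is_allowed({"intern"}, "orders:read"))     # False (unknown role)
```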

Security Testing and Vulnerability Management

Security testing isn’t a one-time event; it’s an ongoing process that must be integrated throughout the software lifecycle. Effective vulnerability management involves not only identifying vulnerabilities but also prioritizing them based on severity and risk, and then remediating them quickly and efficiently.

Regular security testing, combined with robust vulnerability management practices, is essential for ensuring that software remains secure over its entire lifespan.

Ignoring vulnerabilities can lead to costly breaches, reputational damage, and legal consequences. A well-defined vulnerability management process includes clear workflows for reporting, triaging, fixing, and verifying the resolution of vulnerabilities. This process often involves collaboration between development, security, and operations teams. For example, a vulnerability scoring system (like CVSS) can be used to prioritize vulnerabilities based on their severity and potential impact.
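CVSS-based prioritization can be sketched as follows. The severity bands follow the CVSS v3.x qualitative rating scale (Low 0.1 to 3.9, Medium 4.0 to 6.9, High 7.0 to 8.9, Critical 9.0 to 10.0); the findings, their IDs, and the `internet_facing` tie-breaker are hypothetical stand-ins for the extra risk context a real triage process would weigh.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Finding:
    name: str               # hypothetical finding ID
    cvss: float             # CVSS base score, 0.0 to 10.0
    internet_facing: bool   # hypothetical extra risk signal


def severity(score: float) -> str:
    """Qualitative rating per the CVSS v3.x severity scale."""
    if not 0.0 <= score <= 10.0:
        raise ValueError("CVSS scores range from 0.0 to 10.0")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"
    if score < 7.0:
        return "Medium"
    if score < 9.0:
        return "High"
    return "Critical"


def triage(findings: List[Finding]) -> List[Finding]:
    """Highest score first; among equal scores, internet-facing issues first."""
    return sorted(findings, key=lambda f: (f.cvss, f.internet_facing), reverse=True)


findings = [
    Finding("VULN-1", 5.3, False),
    Finding("VULN-2", 9.8, True),
    Finding("VULN-3", 7.5, False),
    Finding("VULN-4", 7.5, True),
]
for f in triage(findings):
    print(f.name, f.cvss, severity(f.cvss))
# VULN-2 9.8 Critical
# VULN-4 7.5 High
# VULN-3 7.5 High
# VULN-1 5.3 Medium
```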

The Impact on Business Agility

Improved software delivery practices aren’t just about faster releases; they’re fundamentally reshaping how businesses operate, fostering a new level of agility and responsiveness. This increased speed allows companies to adapt quickly to changing market conditions, customer feedback, and emerging technologies, ultimately leading to better business outcomes and a stronger competitive edge. The ability to iterate quickly and deploy changes frequently is no longer a luxury but a necessity for survival in today’s dynamic landscape.

Faster software delivery directly translates to improved business outcomes.

By reducing the time it takes to bring new features and improvements to market, companies can capitalize on opportunities more effectively. This speed also allows for quicker responses to customer needs and issues, leading to higher satisfaction and increased loyalty. Furthermore, continuous feedback loops integrated into the delivery process enable proactive adjustments, minimizing risks and maximizing returns on investment.

Faster Time to Market and Revenue Generation

Faster software delivery can translate directly into revenue. Reducing the time it takes to launch a new product or feature allows companies to capture market share earlier, potentially establishing a first-mover advantage. This early entry can lead to significant revenue gains before competitors catch up. Moreover, frequent updates and improvements keep products relevant and competitive, helping to sustain revenue streams.

Imagine a company launching a new mobile app feature that instantly addresses a user pain point – this rapid response can translate into a significant increase in user engagement and, consequently, revenue.

Enhanced Customer Satisfaction and Loyalty

Rapid response to customer feedback and bug fixes is crucial for building and maintaining customer loyalty. When issues are addressed swiftly, customers feel valued and appreciated, fostering a sense of trust and confidence in the brand. This improved customer experience leads to increased satisfaction, positive word-of-mouth referrals, and ultimately, stronger customer retention. Conversely, slow response times can lead to frustration and churn, negatively impacting the bottom line.

For example, a company that quickly addresses security vulnerabilities in its software demonstrates a commitment to user safety, enhancing trust and loyalty.

Improved Competitive Advantage through Innovation

Organizations leveraging faster software delivery can iterate on their products and services more frequently, allowing them to continuously innovate and stay ahead of the competition. This continuous improvement cycle allows businesses to experiment with new features, test market reactions, and adapt their offerings based on real-time data. A prime example is Netflix, which continuously uses A/B testing and rapid iteration to improve its recommendation algorithm and user interface, maintaining its position as a market leader.

- Increased market share: Faster delivery allows companies to capitalize on emerging trends and opportunities before competitors.

- Improved product quality: Frequent releases allow for continuous feedback and improvement, leading to higher-quality products.

- Reduced development costs: Automation and streamlined processes reduce the time and resources required for software development.

- Enhanced employee morale: Faster delivery cycles can empower development teams and boost morale through increased autonomy and faster feedback loops.
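The A/B testing described above depends on assigning each user to a variant consistently, so their experience does not flip between visits. A common approach, sketched here in Python, hashes the user ID together with an experiment name; this is a generic technique, not a description of Netflix's system, and the experiment name and 50/50 split are assumptions.

```python
import hashlib


def assign_variant(user_id: str, experiment: str,
                   treatment_share: float = 0.5) -> str:
    """Deterministically map a user to 'control' or 'treatment'.

    Hashing experiment + user ID gives each experiment an independent split.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000  # first 32 bits -> [0, 1)
    return "treatment" if bucket < treatment_share else "control"


variants = [assign_variant(f"user-{i}", "new-homepage") for i in range(10_000)]
print(variants.count("treatment") / len(variants))  # roughly 0.5
```

Because the assignment is a pure function of its inputs, no lookup table is needed, and the same user always lands in the same group for a given experiment.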

Final Thoughts: Software Delivery Has Just Grown Up

The maturation of software delivery isn’t just a technological advancement; it’s a fundamental shift in how we approach software development. By embracing automation, collaboration, and a data-driven approach, organizations are unlocking unprecedented levels of agility, efficiency, and business value. The future of software delivery promises even more innovation, with a continued emphasis on speed, security, and user experience. It’s an exciting time to be building software!

FAQ Compilation

What’s the difference between DevOps and Agile?

Agile focuses on iterative development and flexible project management, while DevOps extends this to encompass the entire software lifecycle, emphasizing collaboration between development and operations teams for faster and more reliable deployments.

How does containerization improve software delivery?

Containerization (e.g., Docker) packages applications and their dependencies into isolated units, ensuring consistent execution across different environments and simplifying deployment. This leads to faster and more reliable deployments.

What are some common challenges in modern software delivery?

Common challenges include maintaining security across complex systems, managing technical debt, ensuring sufficient testing coverage, and adapting to evolving technologies and market demands.

What is the role of observability in modern software delivery?

Observability provides deep insights into the behavior of software systems in production, allowing for proactive identification and resolution of issues, improved performance, and faster troubleshooting.