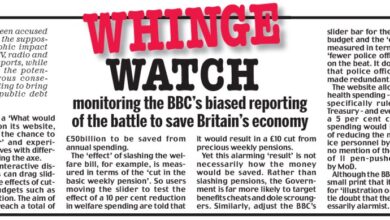

Australian Electric Grid Vulnerable to Cyber Attacks via Solar Panels

The Australian electric grid is vulnerable to cyberattacks via solar panels. That’s a pretty scary thought, isn’t it? We’re increasingly reliant on renewable energy sources like solar power, but what happens when those very sources become a backdoor for malicious actors? This post dives into the vulnerabilities lurking within our solar panel infrastructure and how they could be exploited to cripple the Australian power grid.

We’ll explore the potential attack vectors, the devastating consequences, and – crucially – what we can do to protect ourselves.

From compromised communication protocols to physical access points, the pathways for cyberattacks are surprisingly diverse. Imagine a scenario where a coordinated attack overwhelms the grid, plunging cities into darkness and causing widespread economic chaos. It’s not a far-fetched scenario; it’s a real and present danger that requires immediate attention. We’ll examine various types of solar systems, comparing their inherent security risks and highlighting the critical need for improved cybersecurity measures across the board.

Vulnerability of Australian Solar Panel Infrastructure

Australia’s increasing reliance on solar energy presents a growing cybersecurity challenge. The integration of numerous solar panel systems, ranging from small rooftop installations to large-scale utility projects, into the national grid creates a complex and potentially vulnerable network. While significant strides have been made in grid security overall, the specific cybersecurity measures implemented for solar panel infrastructure require further scrutiny.

Current State of Cybersecurity Measures for Solar Panel Installations

The cybersecurity measures in place for Australian solar panel installations at grid connection points vary significantly. Larger utility-scale solar farms often employ more robust security protocols, including network segmentation, intrusion detection systems, and regular security audits. However, smaller-scale rooftop installations often rely on basic security features provided by the inverter manufacturer, which may not be sufficient to withstand sophisticated cyberattacks.

A lack of standardized security protocols across the industry contributes to this uneven level of protection. Many installations lack regular security updates and patching, leaving them vulnerable to known exploits. The regulatory landscape regarding solar panel cybersecurity is still developing, leading to inconsistencies in security practices.

Weaknesses in Communication Protocols

Solar inverters communicate with grid operators through various communication protocols, including Modbus, DNP3, and others. Many of these protocols are legacy designs with known weaknesses; Modbus, for example, was specified without built-in authentication or encryption. This opens the door to various attacks, such as unauthorized access, data manipulation, and denial-of-service attacks. The lack of standardized security protocols across different inverter manufacturers further exacerbates this problem, making it difficult to implement comprehensive security measures across the entire grid.

Furthermore, the increasing use of internet-connected devices in solar installations introduces additional attack vectors, potentially compromising the entire system through unsecured internet gateways.
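To make the missing-authentication point concrete, here is a minimal sketch of what a standard Modbus TCP "write single register" request looks like on the wire. The helper names (`build_write_request`, `describe`) and the register and value used are hypothetical; the frame layout follows the published Modbus TCP format. The thing to notice is what is absent: no field carries a credential, a signature, or any proof of who sent the frame.

```python
import struct

WRITE_SINGLE_REGISTER = 0x06  # standard Modbus function code


def build_write_request(transaction_id: int, unit_id: int,
                        register: int, value: int) -> bytes:
    """Build a Modbus TCP 'write single register' request frame."""
    # PDU: function code, register address, register value.
    pdu = struct.pack(">BHH", WRITE_SINGLE_REGISTER, register, value)
    # MBAP header: transaction id, protocol id (always 0), length, unit id.
    # The length field counts the unit id byte plus the PDU.
    mbap = struct.pack(">HHHB", transaction_id, 0, len(pdu) + 1, unit_id)
    return mbap + pdu


def describe(frame: bytes) -> dict:
    """Decode every field of a 12-byte write-single-register request."""
    tid, pid, length, unit = struct.unpack(">HHHB", frame[:7])
    func, register, value = struct.unpack(">BHH", frame[7:12])
    return {
        "transaction_id": tid,
        "protocol_id": pid,
        "length": length,
        "unit_id": unit,
        "function_code": func,
        "register": register,
        "value": value,
    }


# Hypothetical register 100 and value 50, chosen only for illustration.
fields = describe(build_write_request(1, 1, 100, 50))
```

Every byte of the frame is accounted for by the seven fields in `fields`, which is why any host that can reach the device's Modbus port can usually issue commands unless something else (a firewall, a VPN, a TLS wrapper) stands in the way.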

Potential Consequences of a Successful Cyberattack

A successful cyberattack targeting solar panel infrastructure could have severe consequences for the Australian grid. Attackers could manipulate the power output of solar panels, causing instability in the grid and potentially leading to blackouts or brownouts. They could also steal sensitive data, such as energy production data or grid operational information, potentially compromising national security or causing economic damage.

Furthermore, a successful attack could damage solar inverters or other critical components, resulting in costly repairs and prolonged outages. The disruption of solar energy production could have significant economic impacts, particularly given Australia’s growing reliance on renewable energy sources. The cascading effects of such an attack on other critical infrastructure systems connected to the grid are also a significant concern.

Comparison of Cybersecurity Risks Across Different Solar Panel Systems

Rooftop solar systems, while individually less powerful, represent a large aggregate risk due to their sheer number. Their dispersed nature and often weaker security make them attractive targets for large-scale coordinated attacks, in which many compromised systems are manipulated at once or enlisted in distributed denial-of-service (DDoS) campaigns. Utility-scale solar farms, on the other hand, present a higher individual risk due to their concentrated power output. A successful attack on a large solar farm could have a more significant and immediate impact on the grid.

However, these larger installations typically have more sophisticated security measures in place. The cybersecurity risks are not simply a matter of scale; the specific security practices employed by installers and operators are critical in determining the overall vulnerability.
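The "aggregate risk" argument is really just arithmetic, and a rough sketch makes it easier to reason about. The function name and every number below are hypothetical placeholders rather than measured Australian figures; swap in real fleet data before drawing any conclusions.

```python
def aggregate_controlled_mw(system_count: int, avg_kw: float,
                            compromised_fraction: float) -> float:
    """Capacity (in MW) an attacker controls across a rooftop fleet."""
    if not 0.0 <= compromised_fraction <= 1.0:
        raise ValueError("compromised_fraction must be between 0 and 1")
    return system_count * avg_kw * compromised_fraction / 1000.0


# Illustrative assumptions only: one million rooftop systems averaging
# 5 kW each, with 5% running the same vulnerable inverter firmware.
rooftop_mw = aggregate_controlled_mw(1_000_000, 5.0, 0.05)

# Hypothetical single utility-scale farm for comparison.
farm_mw = 200.0
rooftop_exceeds_farm = rooftop_mw > farm_mw
```

Under these made-up inputs, a small compromised share of the rooftop fleet adds up to roughly 250 MW, more than the single farm. That is the sense in which scale alone does not tell you where the risk sits.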

Attack Vectors and Potential Impact on the Australian Grid

| Attack Vector | Target | Potential Impact | Mitigation Strategies |
|---|---|---|---|
| Malware Infection | Solar Inverter | Disruption of energy production, data theft | Regular software updates, strong anti-virus software |
| Denial-of-Service (DoS) Attack | Communication Network | Grid instability, power outages | Network segmentation, robust firewalls |
| Data Manipulation | Grid Monitoring System | Inaccurate grid readings, operational errors | Data validation, encryption |
| Man-in-the-Middle Attack | Communication Protocol | Unauthorized access, data interception | Secure communication protocols, encryption |

Attack Vectors and Exploitation Methods

The vulnerability of Australia’s electric grid, partially reliant on solar energy, extends beyond simple hardware failures. Malicious actors can leverage sophisticated cyberattacks targeting the communication protocols and physical access points associated with solar panel systems to disrupt grid stability and potentially cause widespread blackouts. Understanding these attack vectors is crucial for implementing effective security measures.

Solar panel systems, particularly large-scale installations, rely on intricate communication networks to monitor performance, optimize energy output, and feed data back into the broader grid. These networks, often utilizing Modbus, DNP3, or other industrial control system (ICS) protocols, are potential entry points for attackers. Compromising these protocols allows malicious actors to manipulate the behavior of individual panels or entire arrays, leading to significant consequences for grid stability.

Exploiting Communication Protocols for Grid Disruption

Attackers can exploit vulnerabilities in the communication protocols used by solar panels to inject malicious commands or disrupt data flow. For instance, they might use known vulnerabilities in Modbus TCP to send commands that override the normal operating parameters of the inverters, causing them to shut down or feed excessive power into the grid, potentially overloading transformers and causing cascading failures.

Similar attacks could be launched against DNP3 systems, exploiting flaws in their authentication and authorization mechanisms. This could lead to false readings being sent to the grid operator, hindering their ability to manage the system effectively.
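One common way to blunt the command-injection scenario described above is to put a filtering gateway in front of the inverter. The sketch below is a simplified illustration, not a product design: `WritePolicy`, `allow_frame`, the allowed host, and the register number are all hypothetical. It lets read requests through, but only accepts a write if it comes from a known control host, targets the one permitted register, and stays within a safe range.

```python
import struct
from dataclasses import dataclass

# Standard Modbus function codes that change device state.
WRITE_FUNCTION_CODES = frozenset({0x05, 0x06, 0x0F, 0x10})
WRITE_SINGLE_REGISTER = 0x06


@dataclass(frozen=True)
class WritePolicy:
    control_hosts: frozenset   # hypothetical: source IPs allowed to write
    setpoint_register: int     # hypothetical: the only writable register
    min_value: int
    max_value: int


def allow_frame(frame: bytes, source_ip: str, policy: WritePolicy) -> bool:
    """Decide whether a Modbus TCP request should reach the inverter."""
    if len(frame) < 8:
        return False  # too short to hold an MBAP header and function code
    function_code = frame[7]
    if function_code not in WRITE_FUNCTION_CODES:
        return True  # reads and diagnostics pass through in this sketch
    if source_ip not in policy.control_hosts:
        return False
    if function_code != WRITE_SINGLE_REGISTER or len(frame) < 12:
        return False  # deny any write this sketch does not understand
    register, value = struct.unpack(">HH", frame[8:12])
    return (register == policy.setpoint_register
            and policy.min_value <= value <= policy.max_value)
```

Filtering by source address is not real authentication, since addresses can be spoofed by an attacker already on the network path. Treat this as one layer that narrows what a stolen foothold can do, to be combined with encrypted, authenticated transport.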

Malware and Techniques for Compromising Solar Panel Systems

Several types of malware and techniques could be used to compromise solar panel systems. For example, a sophisticated piece of malware could be designed to target the firmware of solar inverters, potentially allowing attackers to remotely control their operation. This could involve exploiting vulnerabilities in the firmware update mechanisms or leveraging zero-day exploits. Another technique involves using phishing attacks to gain access to the administrative interfaces of solar panel monitoring systems.

Once inside, attackers could install malware, manipulate data, or disable security features. Furthermore, man-in-the-middle attacks targeting communication links between solar panels and monitoring systems could allow attackers to intercept and modify data, creating a range of disruptions.
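Because firmware update mechanisms are one of the paths mentioned above, it helps to see what the most basic integrity check looks like. This is a minimal sketch that assumes the vendor publishes a SHA-256 digest through a channel you already trust; `firmware_matches` is a hypothetical helper name. Production update systems normally go further and verify a digital signature, which this example does not do.

```python
import hashlib
import hmac


def firmware_matches(image: bytes, expected_sha256_hex: str) -> bool:
    """Return True if the image hashes to the published SHA-256 digest."""
    actual = hashlib.sha256(image).hexdigest()
    # compare_digest avoids leaking how many leading characters matched.
    return hmac.compare_digest(actual, expected_sha256_hex.strip().lower())
```

A check like this only catches tampering if the expected digest itself cannot be altered by the attacker, so where that digest comes from matters as much as the comparison.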

Physical Access and Cyberattacks

Physical access to solar panel installations, while often requiring some level of effort, can significantly enhance the potential for a successful cyberattack. Attackers gaining physical access could install malicious hardware, such as rogue network devices or compromised data loggers, directly onto the system. This could provide a persistent foothold for launching further attacks, even if network-based security measures are in place.

They could also directly tamper with the physical components of the panels, though this is a less subtle and more easily detectable method.

Denial-of-Service Attacks Using Compromised Solar Panels

Compromised solar panels can be used as part of a distributed denial-of-service (DDoS) attack against the grid’s control systems. By coordinating the actions of many compromised panels, attackers could flood the grid operator’s network with illegitimate data, overwhelming its capacity to process legitimate information and potentially leading to grid instability. This is particularly effective if the attacker can manipulate the panels to generate false or conflicting data reports, confusing the grid’s control systems.

One plausible scenario is a coordinated attack that causes the grid to interpret a sudden surge in power from many solar farms as an overload, leading to precautionary shutdowns.
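On the receiving side, a flood of reports from compromised devices can be dampened with per-device rate limiting. Here is a small token-bucket sketch; the class names, the rate, and the burst size are hypothetical, and a real telemetry ingest service would also need authentication and persistence.

```python
from dataclasses import dataclass


@dataclass
class TokenBucket:
    rate_per_s: float   # tokens added per second
    capacity: float     # maximum burst size
    tokens: float
    last_seen: float

    def allow(self, now: float) -> bool:
        """Refill based on elapsed time, then spend one token if available."""
        elapsed = max(0.0, now - self.last_seen)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate_per_s)
        self.last_seen = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


class ReportLimiter:
    """Keeps one bucket per device so a noisy device cannot starve others."""

    def __init__(self, rate_per_s: float, burst: float) -> None:
        self._rate = rate_per_s
        self._burst = burst
        self._buckets: dict[str, TokenBucket] = {}

    def accept(self, device_id: str, now: float) -> bool:
        bucket = self._buckets.get(device_id)
        if bucket is None:
            # New devices start with a full bucket.
            bucket = TokenBucket(self._rate, self._burst, self._burst, now)
            self._buckets[device_id] = bucket
        return bucket.allow(now)
```

Passing `now` in explicitly (for example from `time.monotonic()`) keeps the logic easy to test. Rate limiting does not tell you whether a report is truthful, only that no single device can monopolise the operator's capacity.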

Solar Panels as Entry Points for Wider Grid Intrusions

Compromised solar panel systems could serve as entry points for wider intrusions into the broader electric grid. Once an attacker has gained access to a solar panel system, they could potentially use it as a springboard to move laterally across the network, targeting other critical infrastructure components, such as substations or power generation plants. This could be achieved by exploiting vulnerabilities in the network infrastructure connecting the solar panels to the wider grid, or by using the compromised panels to launch further attacks against adjacent systems.

Such a scenario highlights the interconnectedness of modern energy systems and the potential for a localized attack to have far-reaching consequences.

Impact on Grid Stability and Reliability

A cyberattack targeting Australia’s solar panel infrastructure could have a devastating impact on the nation’s electricity grid, causing widespread instability and significant reliability issues. The sheer scale of solar deployment in Australia means a successful attack could ripple through the entire energy system, leading to cascading failures and widespread blackouts. This section explores the potential consequences of such an event.

The immediate impact would be a sudden and significant drop in electricity generation. Solar panels, once compromised, could cease operation entirely, or worse, be forced to feed unpredictable amounts of power back into the grid, creating voltage fluctuations and potentially overloading transmission lines. This disruption to supply would immediately increase the strain on other generation sources, such as coal, gas, and hydro, which may struggle to compensate for the sudden loss.

The resulting imbalance between supply and demand could trigger cascading failures, affecting other grid components and leading to widespread power outages.
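To get a feel for why a sudden supply and demand imbalance is dangerous, engineers often start from the textbook swing-equation estimate of how fast grid frequency begins to fall when generation is lost. The sketch below uses that standard first-order approximation; the function name and the input numbers are hypothetical, and the formula ignores load damping and governor response, so it only describes the first instant after the loss.

```python
def initial_rocof_hz_per_s(lost_mw: float, inertia_mws: float,
                           nominal_hz: float = 50.0) -> float:
    """Initial rate of change of frequency after losing `lost_mw`.

    Uses df/dt = -f0 * dP / (2 * E_k), where E_k is the stored kinetic
    energy of the synchronous machines still online, in MW-seconds.
    """
    if inertia_mws <= 0:
        raise ValueError("inertia_mws must be positive")
    return -nominal_hz * lost_mw / (2.0 * inertia_mws)


# Illustrative assumptions only: 500 MW of solar output disappears
# from a 50 Hz system holding 50,000 MW-seconds of kinetic energy.
rocof = initial_rocof_hz_per_s(500.0, 50_000.0)
```

With these placeholder values the frequency starts dropping at about a quarter of a hertz per second. The same loss on a system with less synchronous inertia produces a steeper fall, which leaves protection and backup resources less time to respond.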

Cascading Effects on Grid Components

A cyberattack on solar panels wouldn’t be an isolated incident; its effects would cascade throughout the electricity grid. For example, the sudden loss of solar power could overload backup generators, leading to their failure. Similarly, increased demand on conventional power plants might exceed their operational limits, forcing them to shut down to prevent damage. Smart grid technologies, designed to manage the flow of electricity, could also be compromised if the attack extended beyond the solar panels themselves.

The resulting instability could spread rapidly, causing widespread and prolonged blackouts across the country. Imagine a scenario where a large portion of solar farms in South Australia, a state heavily reliant on solar power, are simultaneously disabled. This would lead to a sudden surge in demand on the remaining generators, potentially triggering blackouts not just in South Australia, but across interconnected grids, impacting neighboring states like Victoria and New South Wales.

Economic Consequences of a Large-Scale Cyberattack

The economic ramifications of a successful large-scale cyberattack on Australia’s solar panel infrastructure would be substantial. Businesses would suffer losses due to production halts, data loss, and damage to equipment. The cost of repairing the damaged infrastructure and restoring power would run into billions of dollars. Beyond direct economic losses, the disruption would severely impact various sectors, including manufacturing, transportation, healthcare, and finance.

Consider the 2003 Northeast blackout in the US and Canada, which cost an estimated US$6 billion. A prolonged outage caused by an attack on Australian solar infrastructure could plausibly reach a comparable scale, given Australia’s increasing reliance on renewable energy. The impact on the tourism industry, a significant contributor to the Australian economy, would also be substantial, with disruptions to transportation, communication, and essential services.

Impacts on Renewable Energy Targets and Energy Security

A significant cyberattack would severely hinder Australia’s progress towards its renewable energy targets. The loss of solar capacity would necessitate a reliance on fossil fuel-based power generation in the short term, leading to increased greenhouse gas emissions and undermining the country’s commitment to climate action. Moreover, it would erode public confidence in renewable energy technologies and potentially delay further investments in the sector.

The attack would also compromise Australia’s energy security, demonstrating the vulnerability of its electricity grid to cyber threats and highlighting the need for robust cybersecurity measures. This would increase reliance on imported energy and potentially create energy shortages, impacting national security.

Societal Impacts of a Significant Grid Disruption

The societal impacts of a widespread blackout caused by a cyberattack on solar panel infrastructure would be far-reaching.

- Disruption to essential services, including healthcare, transportation, and communication.

- Widespread food spoilage due to power outages affecting refrigeration.

- Increased risk of civil unrest and social disorder.

- Significant disruption to education and work.

- Loss of life due to medical emergencies and other unforeseen consequences.

- Economic hardship for individuals and families.

The scale and duration of these impacts would depend on the extent and duration of the power outage, but even a short-term disruption could have long-lasting consequences for Australian society.

Mitigation and Defense Strategies

Strengthening the cybersecurity of Australia’s solar panel infrastructure is crucial for maintaining grid stability and reliability. A multi-pronged approach encompassing technological solutions, robust communication protocols, and effective physical security measures is necessary to mitigate the risks posed by cyberattacks. This requires a collaborative effort between government agencies, energy providers, and solar panel manufacturers.

Enhanced Cybersecurity for Solar Panel Installations

Several solutions can significantly improve the cybersecurity posture of Australian solar panel installations. These include implementing robust authentication and authorization mechanisms to control access to system components and data. Regular software updates and patching are essential to address known vulnerabilities. Furthermore, the adoption of secure boot processes helps prevent unauthorized code execution, a common attack vector. Network segmentation, isolating the solar panel system from other critical infrastructure, limits the impact of a successful breach.

Finally, employing anomaly detection systems can help identify unusual activity that might indicate a cyberattack.
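As a rough idea of what anomaly detection can mean in practice, the sketch below flags an inverter reading that departs sharply from its recent history. `OutputAnomalyDetector`, the window size, the threshold, and the 5% noise floor are all hypothetical tuning choices. Real solar output swings with cloud cover and time of day, so a production detector would need a much better baseline than a short rolling window.

```python
from collections import deque
from statistics import mean, pstdev


class OutputAnomalyDetector:
    """Flags readings far from the rolling mean of recent readings."""

    def __init__(self, window: int = 12, threshold: float = 3.0) -> None:
        self._history: deque = deque(maxlen=window)
        self._threshold = threshold

    def observe(self, kw: float) -> bool:
        """Return True if `kw` looks anomalous against the current window."""
        anomalous = False
        if len(self._history) == self._history.maxlen:
            mu = mean(self._history)
            # Floor the spread at 5% of the mean so a very flat signal
            # does not turn tiny wobbles into alerts (assumed tuning).
            sigma = max(pstdev(self._history), 0.05 * abs(mu), 1e-6)
            anomalous = abs(kw - mu) / sigma > self._threshold
        if not anomalous:
            # Only normal readings update the baseline.
            self._history.append(kw)
        return anomalous
```

Feeding it a steady stream of readings around 4 kW followed by an abrupt drop to zero would raise a flag on the drop, while ordinary jitter would not. Keeping anomalous readings out of the baseline stops an attacker from slowly teaching the detector that abnormal output is normal, at least within this simple model.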

Improved Communication Protocols and Data Encryption

Secure communication protocols are fundamental to protecting data transmitted between solar panels, inverters, and the wider grid. Utilizing Transport Layer Security (TLS) or similar encryption protocols ensures that communication remains confidential and integrity is maintained. Data encryption, both in transit and at rest, is vital for protecting sensitive information from unauthorized access. Regular key rotation and strong cryptographic algorithms should be employed to enhance security.

Adopting standardized communication protocols reduces complexity and allows for easier implementation of security measures. For example, the use of secure protocols like MQTT over TLS can significantly enhance the security of data exchange between solar panels and monitoring systems.
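For the TLS side of this, most of the work in Python is choosing safe defaults. The sketch below builds a client-side TLS context with the standard library's `ssl` module; `make_client_tls_context` and the optional CA file parameter are hypothetical names for illustration. A context like this could then be handed to an MQTT or HTTPS client library that accepts an `ssl.SSLContext`.

```python
import ssl
from typing import Optional


def make_client_tls_context(ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Create a TLS client context that verifies the server certificate."""
    # create_default_context enables certificate and hostname checks.
    context = ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=ca_file)
    # Refuse protocol versions older than TLS 1.2.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context
```

The important design choice is what the function does not do: it never disables hostname checking or certificate verification, which are the shortcuts that most often undo the benefit of encrypting the link in the first place.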

Physical Security Measures for Solar Panel Systems

Physical security plays a critical role in preventing unauthorized access and tampering with solar panel systems. Regular physical inspections should be conducted to identify any signs of tampering or damage. Geofencing technologies can be implemented to alert authorities of unauthorized access to the solar panel installation site. Access control measures, such as fences, locks, and surveillance cameras, deter potential attackers and provide evidence in case of an incident.

Employing robust anti-theft measures, such as tamper-evident seals and GPS tracking devices, can aid in the recovery of stolen equipment. Regular maintenance and inspections are essential to ensure that physical security measures remain effective.

Cybersecurity Technologies for Securing Solar Panel Infrastructure

Various cybersecurity technologies can be deployed to enhance the security of solar panel infrastructure. Intrusion Detection Systems (IDS) monitor network traffic for malicious activity, providing early warning of potential attacks. Firewalls act as barriers, controlling network access and preventing unauthorized connections. Security Information and Event Management (SIEM) systems collect and analyze security logs from various sources, providing a centralized view of security events.

Antivirus and anti-malware software protect against known threats, while regular vulnerability scanning identifies and addresses security weaknesses. A robust and layered security approach, combining multiple technologies, offers the most effective protection. For instance, a firewall could prevent unauthorized external access, while an IDS monitors internal network traffic for suspicious behavior.
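The kind of correlation a SIEM performs can be illustrated with a very small example: counting failed logins per source across collected events. The event format, the `auth_failure` type name, and the threshold here are hypothetical; real SIEM products use their own schemas and far richer rules.

```python
from collections import Counter


def noisy_sources(events: list, threshold: int = 5) -> list:
    """Return sources with at least `threshold` authentication failures."""
    failures = Counter(
        event["source"]
        for event in events
        if event.get("type") == "auth_failure"
    )
    return sorted(src for src, count in failures.items() if count >= threshold)


# Hypothetical events as they might arrive from inverter portals and gateways.
sample_events = (
    [{"type": "auth_failure", "source": "203.0.113.7"}] * 6
    + [{"type": "auth_failure", "source": "198.51.100.2"}] * 2
    + [{"type": "login_ok", "source": "198.51.100.2"}]
)
flagged = noisy_sources(sample_events)
```

On this sample only the first address crosses the threshold. The value of centralising logs is that the six failures might have been spread across six different devices, where no single device would have noticed a pattern.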

Incident Response and Recovery Plan

A comprehensive incident response plan is essential for minimizing the impact of a cyberattack. This plan should outline clear procedures for identifying, containing, eradicating, recovering from, and learning from security incidents. Regular security awareness training for personnel involved in the operation and maintenance of solar panel systems is crucial. The plan should include well-defined roles and responsibilities, communication protocols, and escalation procedures.

Regular testing and updates to the incident response plan are vital to ensure its effectiveness. Post-incident analysis helps identify vulnerabilities and improve future security measures. A well-rehearsed and regularly tested incident response plan, combined with a robust backup and recovery strategy, is essential for a swift and effective recovery after a cyberattack. For example, the plan should include procedures for isolating affected systems, restoring data from backups, and communicating with relevant stakeholders.

Regulatory and Policy Implications

The increasing integration of solar panel systems into the Australian electricity grid necessitates a robust regulatory framework to address the emerging cybersecurity threats. Current regulations, while addressing aspects of grid stability and consumer safety, often fall short in explicitly addressing the unique vulnerabilities introduced by the distributed nature and potential for remote manipulation of solar PV systems. This gap poses a significant risk to the overall resilience of the national grid and necessitates a proactive approach from policymakers and industry stakeholders.

Government Regulation and Solar Panel Cybersecurity

Government regulations play a crucial role in improving the cybersecurity of solar panel systems. Mandatory security standards for the design, installation, and operation of solar PV systems could significantly enhance the overall security posture. This includes requirements for secure communication protocols, robust authentication mechanisms, and regular security audits. Examples from other sectors, such as the automotive industry’s cybersecurity standards for connected vehicles, can serve as a valuable blueprint for developing similar regulations for the solar energy sector.

Effective enforcement mechanisms, including penalties for non-compliance, are essential to ensure the widespread adoption of these standards. Furthermore, government funding for research and development in solar panel cybersecurity can accelerate the development and deployment of innovative security solutions.

Insufficient Aspects of Current Regulations

Current regulations often focus on the physical safety and performance aspects of solar panel installations, neglecting the crucial dimension of cybersecurity. There is a lack of specific guidelines on secure data handling, software updates, and vulnerability management for solar inverters and communication systems. The decentralized nature of solar installations makes it challenging to enforce consistent security practices across different providers and installations.

Furthermore, the absence of standardized security protocols hinders interoperability and makes it difficult to implement effective security monitoring and incident response mechanisms across the entire grid. The lack of clear liability frameworks in case of cyberattacks targeting solar installations also creates a disincentive for proactive security investments.

Recommendations for Policymakers

To improve the resilience of the Australian electric grid to cyberattacks targeting solar panel infrastructure, policymakers should consider the following recommendations:

- Mandate the implementation of minimum cybersecurity standards for all new solar PV installations, encompassing aspects such as secure communication protocols, firmware updates, and access control mechanisms.

- Establish a national cybersecurity certification program for solar panel systems and related components, providing consumers with assurance of the security features of their installations.

- Invest in research and development to explore and develop advanced cybersecurity technologies specifically tailored to the needs of the solar energy sector.

- Develop clear liability frameworks and incident response protocols to manage cybersecurity incidents effectively and encourage proactive security investments.

- Foster collaboration between government agencies, industry stakeholders, and researchers to establish a robust cybersecurity ecosystem for the solar energy sector.

International Collaboration on Solar Panel Cybersecurity

International collaboration is crucial in addressing the global cybersecurity challenges related to solar panel infrastructure. Sharing best practices, research findings, and threat intelligence across different countries can help to develop more effective security solutions. Joint efforts in developing international standards and certifications for solar panel cybersecurity can promote interoperability and reduce the overall risk. Participating in international forums and working groups focused on energy cybersecurity can facilitate the exchange of knowledge and experience, leading to the development of more comprehensive and effective strategies.

For instance, collaboration with countries like Germany and the United States, which have significant experience in integrating renewable energy sources into their grids, can offer valuable insights and lessons learned.

Industry Standards and Certifications

The development and adoption of industry standards and certifications for solar panel cybersecurity can significantly improve the security posture of these systems. These standards should cover various aspects of security, including secure communication protocols, authentication mechanisms, data encryption, and vulnerability management. Independent third-party certification bodies can verify the compliance of solar panel systems with these standards, providing consumers and grid operators with assurance of the security features of the installations.

Industry-wide adoption of these standards can help to create a more secure and resilient solar energy ecosystem, reducing the overall risk of cyberattacks. The development of such standards should involve a collaborative effort between industry stakeholders, government agencies, and cybersecurity experts to ensure that they are comprehensive, practical, and effective.

Final Review

The vulnerability of the Australian electric grid to cyberattacks via solar panels is a serious concern that demands immediate action. While the potential consequences are alarming – from widespread blackouts to crippling economic losses – the situation isn’t hopeless. By implementing robust cybersecurity measures, improving communication protocols, and strengthening physical security, we can significantly reduce the risk. Collaboration between government, industry, and researchers is essential to build a more resilient and secure energy future.

Ignoring this threat is not an option; proactive measures are crucial to safeguarding our national energy infrastructure and maintaining stability in a world increasingly reliant on renewable energy sources.

Common Queries

What types of malware could be used to attack solar panels?

Various types of malware, including custom-designed viruses and existing malware adapted for specific vulnerabilities in solar inverter firmware, could be used. This could range from simple denial-of-service attacks to more sophisticated intrusions aimed at controlling the power output.

How can homeowners protect their solar panel systems?

Homeowners should ensure their inverters are regularly updated with the latest firmware patches, use strong passwords, and consider installing network security devices like firewalls to protect their home network from external threats.

What role does the Australian government play in mitigating this risk?

The government has a crucial role in setting cybersecurity standards, providing funding for research and development of secure technologies, and creating regulations to ensure the safety and security of the national grid. International collaboration is also essential.

Are there any industry standards for solar panel cybersecurity?

While standards are evolving, there’s a growing need for industry-wide standards and certifications that define minimum cybersecurity requirements for solar panel systems and their integration into the grid.