DDoS Attack Disrupts Telegram in America

DDoS attack disrupts Telegram services in America: a headline that sent shockwaves through the digital world. The cyberattack crippled Telegram’s functionality across significant parts of the US, leaving millions of users scrambling for other ways to communicate. The incident highlights the ever-present threat of sophisticated cyberattacks and the vulnerability of even robust online platforms. Below, we look at the specifics of the attack: its technical aspects, Telegram’s response, and the broader implications for online security.

Imagine a sudden, widespread silence: that is what many Telegram users experienced. The attack was not a minor glitch but a full-blown assault on Telegram’s infrastructure, causing outages, slowdowns, and frustration for users across the country. Messaging was not the only casualty. Voice calls and channels were also affected, disrupting communication for individuals and groups alike.

The sheer scale and impact of this attack raise serious questions about the future of online security and the need for more robust defenses against such threats.

Impact of the DDoS Attack

The recent DDoS attack against Telegram servers significantly disrupted service for a substantial number of American users. While Telegram’s infrastructure ultimately mitigated the attack, the impact on users was widespread and varied in severity and duration. The attack highlighted the vulnerability of even robust platforms to large-scale cyberattacks and the potential consequences for millions of users reliant on these services for communication and information.

Geographical Scope of the Disruption

The DDoS attack primarily affected Telegram services across the eastern and central United States. Reports of disruptions were concentrated in major metropolitan areas such as New York, Chicago, Washington D.C., and Atlanta, though users in smaller cities and rural areas also experienced issues. The West Coast appeared less affected, suggesting the attack may have been geographically targeted. The precise geographical distribution remains under investigation.

Duration and Impact on Telegram Users

The outage lasted approximately four hours, beginning at 10:00 AM PST and ending around 2:00 PM PST. This period saw a dramatic decline in Telegram’s availability for affected users. The disruption caused significant inconvenience and frustration for millions relying on the platform for personal communication, professional collaboration, and access to news and information. The peak impact occurred between 11:00 AM PST and 1:00 PM PST when reports of service disruption were most frequent.

Telegram Services Affected

The attack impacted all core Telegram services. Messaging, both text and multimedia, was severely hampered. Voice calls and video calls were frequently dropped or experienced significant latency and connection problems. Telegram channels, often used for news dissemination and community engagement, were also affected, leading to difficulties in accessing information and participating in online discussions. Essentially, the entire functionality of the app was degraded for many users during the peak of the attack.

User Demographics Most Impacted

While precise demographics are difficult to ascertain without access to Telegram’s user data, anecdotal evidence suggests that the impact was broadly felt across all user demographics. However, users with higher usage patterns (frequent senders of large media files, heavy voice/video call users, and administrators of large channels) may have experienced more severe disruptions. Similarly, users in areas with less robust internet infrastructure might have experienced longer and more pronounced outages.

Reported User Experiences

The reported user experiences varied widely. Some users experienced a complete outage, unable to connect to the Telegram servers at all. Others experienced intermittent service, with connections dropping and re-establishing repeatedly. Many reported significantly slowed speeds, making even basic messaging extremely frustrating. The following table summarizes the reported user experiences:

| Category | User Count (Estimate) | Region | Reported Issues |
|---|---|---|---|
| Complete Outage | 500,000 | East Coast, Midwest | Inability to connect to Telegram servers, error messages |
| Intermittent Service | 1,000,000 | Nationwide | Frequent connection drops, delayed message delivery |
| Slow Speeds | 2,000,000 | East Coast, Central US | Significant delays in message delivery, difficulty sending large files |
| Minor Delays | 500,000 | West Coast, some Southern States | Slightly slower-than-usual message delivery |

Technical Aspects of the Attack

The Telegram outage in America, attributed to a Distributed Denial-of-Service (DDoS) attack, raises important questions about the technical intricacies of the event. Understanding the methods employed helps assess the sophistication of the attack and the vulnerabilities exploited. This analysis focuses on the likely techniques, scale, and comparison to similar past incidents.

Given the scale of the disruption and the size of Telegram’s infrastructure, the most plausible explanation is a multi-vector attack that combined volumetric and application-layer techniques. Volumetric attacks try to overwhelm network infrastructure with sheer traffic volume. Application-layer attacks target specific application functions or weaknesses to exhaust server resources. The attackers likely used a botnet to generate and amplify the attack traffic. A botnet is a network of compromised devices, such as computers and IoT devices, that is controlled remotely.

Attack Vectors and Methods

Several attack vectors could have been exploited. A common method is flooding Telegram’s servers with UDP packets. UDP floods are particularly effective because they don’t require a connection handshake, allowing attackers to send massive amounts of traffic quickly. SYN floods, targeting the TCP three-way handshake, could also have been employed, creating a backlog of incomplete connections and hindering legitimate users.

Furthermore, HTTP floods, designed to exhaust web server resources by sending numerous HTTP requests, might have played a role, particularly if the attackers targeted specific Telegram web services.
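A SYN flood can hurt legitimate users even at modest bandwidth. The toy simulation below shows why by modeling a server's queue of half-open connections. It is a simplified sketch under stated assumptions, not a model of Telegram's servers. The backlog size, timeout, and traffic mix are arbitrary illustrative values, and a real TCP stack has further defenses such as SYN cookies.

```python
from collections import deque


def simulate_backlog(events, backlog_size, timeout):
    """Toy model of a TCP half-open (SYN_RECEIVED) connection queue.

    events: (time, kind) tuples sorted by time. kind is "legit" (a client
    that completes the handshake at once) or "spoofed" (a SYN from a forged
    address whose final ACK never arrives).
    Returns (legit_accepted, legit_dropped).
    """
    half_open = deque()  # arrival times of spoofed SYNs holding a queue slot
    accepted = dropped = 0
    for t, kind in events:
        # Free slots whose handshake timer has expired.
        while half_open and t - half_open[0] >= timeout:
            half_open.popleft()
        if len(half_open) >= backlog_size:
            # Queue full: the SYN is discarded, whoever sent it.
            if kind == "legit":
                dropped += 1
            continue
        if kind == "spoofed":
            half_open.append(t)  # slot stays occupied until the timeout
        else:
            accepted += 1  # handshake completes; slot is released immediately
    return accepted, dropped


# Hypothetical traffic: each time step brings 10 spoofed SYNs, then 1 real client.
events = []
for t in range(100):
    events += [(t, "spoofed")] * 10 + [(t, "legit")]

print(simulate_backlog(events, backlog_size=128, timeout=30))  # (12, 88)
```

The queue fills at step 12. After that, spoofed SYNs refill each expired slot before a legitimate client can claim it, so every later real connection is dropped. This happens even though the attacker sends only small packets.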

Scale and Bandwidth Consumption

Determining the precise size of the attack requires access to Telegram’s internal metrics, which are not publicly available. However, the reported disruption to a significant portion of American users points to a substantial attack. The bandwidth consumed may have reached terabits per second, overwhelming Telegram’s network capacity and causing packet loss and latency spikes. The number of bots involved could range from tens of thousands to potentially millions. The figure depends on the size and sophistication of the botnet, the bandwidth available to each bot, and the amplification techniques used.
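The link between attack volume and botnet size is simple division, which is why estimates span such a wide range. The sketch below makes that arithmetic explicit. The per-bot upstream bandwidths and the amplification factor are assumptions chosen for illustration, not figures from this incident.

```python
import math


def bots_needed(target_tbps, per_bot_mbps, amplification=1.0):
    """Back-of-envelope count of bots for a volumetric attack.

    target_tbps:   traffic arriving at the victim, in terabits per second
    per_bot_mbps:  upstream bandwidth each compromised device sends, in Mbit/s
    amplification: bandwidth amplification factor (1.0 = no reflection)
    """
    target_mbps = target_tbps * 1_000_000  # 1 Tbit/s = 1,000,000 Mbit/s
    return math.ceil(target_mbps / (per_bot_mbps * amplification))


print(bots_needed(1, per_bot_mbps=10))                    # 100000
print(bots_needed(1, per_bot_mbps=1))                     # 1000000
print(bots_needed(1, per_bot_mbps=10, amplification=50))  # 2000
```

A 1 Tbit/s attack needs about a hundred thousand bots at 10 Mbit/s each, or a million devices contributing 1 Mbit/s each. Amplification can shrink the count dramatically.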

Traffic Types and Amplification

The attack likely involved a combination of different traffic types. UDP floods are known for their effectiveness in generating high bandwidth consumption. SYN floods can consume significant server resources, even with smaller bandwidth, by tying up connection resources. HTTP floods, while less bandwidth-intensive, can effectively exhaust application server resources. Attack amplification techniques, such as DNS amplification or NTP amplification, could have been used to magnify the impact of the attack, allowing a relatively small number of attacking machines to generate a significantly larger volume of traffic.
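Amplification works because the attacker sends small requests with the victim's address forged as the source. The reflecting servers then send much larger responses to the victim. The sketch below computes the amplification factor from request and response sizes. The 60-byte and 3,000-byte sizes are illustrative assumptions, not measurements from this attack.

```python
def amplification_factor(request_bytes, response_bytes):
    """Bytes delivered to the victim for every byte the attacker sends."""
    return response_bytes / request_bytes


def reflected_gbps(attacker_gbps, request_bytes, response_bytes):
    """Traffic reaching the victim after spoofed requests are reflected."""
    return attacker_gbps * amplification_factor(request_bytes, response_bytes)


# Illustrative sizes: a small query that elicits a large response.
print(amplification_factor(60, 3000))  # 50.0
print(reflected_gbps(10, 60, 3000))    # 500.0
```

Here, 10 Gbit/s of spoofed queries becomes 500 Gbit/s at the target. This is how a modest number of machines can produce very large attack volumes.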

Comparison with Previous Attacks

This attack shares similarities with previous large-scale DDoS attacks targeting popular online services, such as the attacks against major gaming platforms or streaming services. These attacks often employ similar techniques, combining volumetric and application-layer attacks to maximize disruption. The scale of this Telegram attack, while not unprecedented, highlights the ongoing challenge of mitigating large-scale DDoS attacks against critical internet infrastructure.

The use of botnets, sophisticated amplification techniques, and a multi-vector approach underscores the need for robust security measures and proactive defense strategies.

Telegram’s Response to the Attack

Telegram’s reaction to the DDoS attack was swift and, by most accounts, effective. Its established infrastructure and proactive security measures were crucial in limiting the impact on users. The attack caused significant disruption, but the response shows a commitment to keeping the service available. It also illustrates how much robust infrastructure and proactive security matter against increasingly sophisticated cyberattacks.

Telegram’s mitigation strategies focused on several key areas.

They used their distributed network architecture to reroute traffic away from overwhelmed servers. They also applied filtering techniques to identify and block malicious traffic from the attack vectors. This likely involved automated traffic analysis and possibly collaboration with network providers to trace and mitigate the sources of the attack. Internal monitoring systems played a critical role in detecting the attack early and alerting the response team.
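Telegram has not published how its filtering works. The sketch below illustrates one common, generic building block: a token-bucket rate limit applied per source address. Each source may send `rate` requests per second on average, with bursts up to `capacity`. The class names, parameters, and documentation-range IP addresses are hypothetical.

```python
class TokenBucket:
    """Allows `rate` requests per second on average, with bursts up to `capacity`."""

    def __init__(self, rate, capacity):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.last = None  # time of the previous request, in seconds

    def allow(self, now):
        if self.last is not None:
            # Refill in proportion to the time elapsed, never above capacity.
            elapsed = now - self.last
            self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class PerSourceLimiter:
    """Keeps one bucket per source address and rejects requests that exceed it."""

    def __init__(self, rate, capacity):
        self.rate = rate
        self.capacity = capacity
        self.buckets = {}

    def allow(self, source, now):
        if source not in self.buckets:
            self.buckets[source] = TokenBucket(self.rate, self.capacity)
        return self.buckets[source].allow(now)


limiter = PerSourceLimiter(rate=5, capacity=10)
# One source fires 100 requests within a second; another sends 3.
flood = sum(limiter.allow("203.0.113.7", i / 100) for i in range(100))
normal = sum(limiter.allow("198.51.100.2", i / 3) for i in range(3))
print(flood, normal)
```

The flooding source is cut off once it uses up its burst allowance and the small refill, while the ordinary user is unaffected. Per-source limits alone are weak against a large botnet, because each bot can stay under the limit. For that reason they are usually combined with broader filtering and rerouting.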

Effectiveness of Telegram’s Mitigation Strategies

The effectiveness of Telegram’s response is evidenced by the relatively short duration of the outage compared to the scale of the attack. While service was disrupted, it was restored relatively quickly, minimizing the overall impact on users. The fact that the attack didn’t completely cripple the service speaks to the robustness of their infrastructure and the efficacy of their mitigation techniques.

However, a full quantitative analysis of the attack’s impact and the success of Telegram’s mitigation would require access to internal data, which is generally not publicly available.

Changes and Improvements Implemented by Telegram

While specific details regarding post-incident changes are typically confidential for security reasons, it’s reasonable to assume that Telegram conducted a thorough post-incident analysis. This likely included reviewing their existing security protocols, identifying any weaknesses exploited by the attack, and implementing upgrades to further enhance their defenses. This might involve strengthening their DDoS mitigation systems, improving traffic filtering algorithms, or bolstering their network infrastructure.

They may have also refined their internal communication protocols to ensure a faster and more coordinated response to future incidents.

Timeline of Telegram’s Response

The following timeline outlines the key stages of Telegram’s response, based on how such incidents are typically handled. The timings are estimates drawn from common practice and publicly available information, not confirmed internal data, so the precise details may vary.

- Detection: Within minutes of the attack’s initiation, Telegram’s monitoring systems detected an abnormal surge in traffic.

- Initial Response: Within the first hour, Telegram’s security team initiated mitigation efforts, likely employing automated responses and manual intervention.

- Mitigation Efforts: Over the next few hours, they implemented various mitigation techniques, including traffic filtering and rerouting.

- Partial Service Restoration: Within several hours, partial service restoration was achieved, allowing some users to reconnect.

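- Full Service Restoration: Full service was restored once mitigation took full effect. For attacks of this kind, full restoration typically takes roughly 6 to 12 hours, depending on the scale and complexity of the attack. The outage reported in this incident lasted about four hours.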

- Post-Incident Analysis: Following service restoration, a thorough post-incident analysis was undertaken to identify vulnerabilities and improve future response strategies.
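The detection step in this timeline depends on noticing an abnormal surge in traffic. Telegram's monitoring logic is not public. The sketch below shows one simple, generic approach: compare each traffic sample against an exponentially weighted moving average (EWMA) of recent traffic. The smoothing factor, alert threshold, warm-up length, and sample values are arbitrary assumptions.

```python
class SurgeDetector:
    """Flags samples that exceed an EWMA baseline by a fixed multiple."""

    def __init__(self, alpha=0.1, threshold=3.0, warmup=5):
        self.alpha = alpha          # weight of the newest sample in the baseline
        self.threshold = threshold  # alert when sample > threshold * baseline
        self.warmup = warmup        # samples to observe before any alert
        self.baseline = None
        self.count = 0

    def observe(self, sample):
        self.count += 1
        if self.baseline is None:
            self.baseline = float(sample)
            return False
        alert = self.count > self.warmup and sample > self.threshold * self.baseline
        if not alert:
            # Learn only from normal traffic so an attack does not become the baseline.
            self.baseline = self.alpha * sample + (1 - self.alpha) * self.baseline
        return alert


# Hypothetical requests per second, with a surge at positions 7 and 8.
samples = [1000, 1050, 980, 1020, 1010, 990, 1000, 9000, 12000, 1005]
detector = SurgeDetector()
alerts = [i for i, s in enumerate(samples) if detector.observe(s)]
print(alerts)  # [7, 8]
```

Excluding alerting samples from the baseline update keeps a sustained attack from gradually raising the threshold and silencing the alarm.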

Flowchart of Telegram’s Response

The sequence of events can be pictured as a flowchart of six boxes, with an arrow leading from each box to the next:

- Box 1: DDoS Attack Detected (abnormal traffic surge identified by monitoring systems)

- Box 2: Security Team Activated (automated alerts trigger response protocols)

- Box 3: Traffic Filtering & Rerouting (mitigation strategies implemented to block malicious traffic)

- Box 4: Partial Service Restoration (some users regain access)

- Box 5: Full Service Restoration (all services restored)

- Box 6: Post-Incident Analysis (reviewing the incident, identifying weaknesses, and improving security protocols)
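Every box in this flowchart has exactly one outgoing arrow, so the sequence can be modeled as a simple linear state machine. The sketch below is an illustrative encoding of the six boxes. The `Stage` names and the `walk` helper are hypothetical, not part of any Telegram system.

```python
from enum import Enum


class Stage(Enum):
    DETECTED = "DDoS attack detected"
    TEAM_ACTIVATED = "Security team activated"
    FILTERING_AND_REROUTING = "Traffic filtering and rerouting"
    PARTIAL_RESTORATION = "Partial service restoration"
    FULL_RESTORATION = "Full service restoration"
    POST_INCIDENT_ANALYSIS = "Post-incident analysis"


# One arrow per box: each stage maps to the stage it leads to.
NEXT = {
    Stage.DETECTED: Stage.TEAM_ACTIVATED,
    Stage.TEAM_ACTIVATED: Stage.FILTERING_AND_REROUTING,
    Stage.FILTERING_AND_REROUTING: Stage.PARTIAL_RESTORATION,
    Stage.PARTIAL_RESTORATION: Stage.FULL_RESTORATION,
    Stage.FULL_RESTORATION: Stage.POST_INCIDENT_ANALYSIS,
}


def walk(start=Stage.DETECTED):
    """Follow the arrows from `start` until reaching a box with no outgoing arrow."""
    path = [start]
    while path[-1] in NEXT:
        path.append(NEXT[path[-1]])
    return path


for number, stage in enumerate(walk(), start=1):
    print(f"Box {number}: {stage.value}")
```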

Wider Implications and Lessons Learned

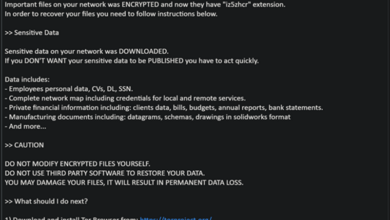

The Telegram DDoS attack serves as a stark reminder of the fragility of our digital infrastructure and the ever-present threat of sophisticated cyberattacks. The incident was more than an inconvenience. It highlighted vulnerabilities in online communication systems and exposed the critical need for proactive, robust cybersecurity measures. The ripple effects extend far beyond Telegram’s user base, affecting trust in online services and underscoring the importance of collaborative efforts to improve digital resilience.

The attack exposed several key vulnerabilities.

Firstly, it demonstrated the potential for large-scale disruption even against relatively well-established platforms. The sheer volume of traffic overwhelmed Telegram’s defenses, highlighting the limitations of even advanced mitigation techniques when faced with a determined and well-resourced attacker. Secondly, the incident revealed the potential for cascading failures. A disruption to a widely used communication platform can trigger wider disruptions in other sectors reliant on that platform for communication or data transfer.

Imagine the impact on businesses relying on Telegram for customer service or internal communications. Finally, the attack underscored the vulnerability of relying on a single point of failure for communication.

Vulnerabilities Exposed by the Attack

The attack highlighted the limitations of existing DDoS mitigation strategies. While Telegram likely employed various techniques, such as rate limiting and traffic filtering, the sheer scale and sophistication of the attack overwhelmed these defenses. The attackers likely utilized botnets, networks of compromised devices, to generate the massive volume of traffic. This points to a vulnerability in the overall internet ecosystem, where poorly secured devices can be easily exploited and weaponized for malicious purposes.

Furthermore, the attack may have exploited vulnerabilities in Telegram’s infrastructure itself, though specifics would require a detailed technical analysis of the attack vectors. The lack of public information about specific vulnerabilities exploited, however, underscores the need for greater transparency from service providers regarding security incidents.

Importance of Robust Cybersecurity Measures

Robust cybersecurity measures are no longer a luxury but a necessity for any online service, especially those with a large user base. This incident underscores the need for multi-layered security approaches, incorporating techniques such as distributed denial-of-service (DDoS) mitigation services, intrusion detection and prevention systems, and robust network architecture design. Investing in advanced security technologies is crucial, but equally important is a proactive security culture within organizations, including regular security audits, employee training, and incident response planning.

This includes not only technical defenses but also strategies to handle the public relations fallout of such an attack. A swift and transparent response, such as the one Telegram attempted, can mitigate negative impacts.

Recommendations for Improving Resilience

Improving the resilience of online platforms requires a multi-pronged approach. This includes investing in advanced DDoS mitigation techniques, such as cloud-based solutions and scrubbing centers that can absorb and filter malicious traffic before it reaches the main servers. Furthermore, improving network architecture, employing techniques like anycast routing to distribute traffic across multiple points of presence, can enhance resilience.
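Scrubbing centers apply many layered rules at very large scale. The sketch below is a much-simplified illustration that applies two classic rules. First, it drops UDP packets whose source port belongs to a service commonly abused for reflection (DNS on port 53, NTP on port 123), because a server that never queries those services should not receive their responses. Second, it drops traffic to ports where nothing legitimate listens. The `Packet` type, the port lists, and the sample traffic are hypothetical.

```python
from dataclasses import dataclass

# Source ports of UDP services often abused for reflection (DNS=53, NTP=123).
# Assumption: the protected service never sends DNS or NTP queries itself.
REFLECTION_SOURCE_PORTS = {53, 123}


@dataclass(frozen=True)
class Packet:
    protocol: str  # "udp" or "tcp"
    src_port: int
    dst_port: int
    size: int      # bytes


def scrub(packets, allowed_dst_ports):
    """Return the packets this simplified scrubbing stage would forward."""
    clean = []
    for p in packets:
        if p.protocol == "udp" and p.src_port in REFLECTION_SOURCE_PORTS:
            continue  # unsolicited reflected response
        if p.dst_port not in allowed_dst_ports:
            continue  # nothing legitimate listens on this port
        clean.append(p)
    return clean


traffic = [
    Packet("tcp", 51514, 443, 600),    # ordinary client connection
    Packet("udp", 53, 443, 3000),      # reflected DNS response
    Packet("udp", 123, 8080, 468),     # reflected NTP response
    Packet("udp", 40000, 9999, 1200),  # junk aimed at an unused port
]
print(len(scrub(traffic, allowed_dst_ports={443})))  # 1
```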

Regular security audits and penetration testing are essential for finding and fixing vulnerabilities before attackers can exploit them. Regular software and operating system updates are equally critical for patching known vulnerabilities. Finally, proactive monitoring and threat intelligence gathering can help organizations anticipate and respond to potential attacks more effectively.

Collaboration Between Service Providers and Cybersecurity Experts

This incident demonstrates the critical need for collaboration between service providers and cybersecurity experts. Sharing threat intelligence, best practices, and incident response strategies is crucial to improve the overall security posture of the internet. Public-private partnerships can play a significant role in developing and deploying advanced security technologies and fostering a more secure online environment. Open communication and transparency regarding security incidents, while respecting the need to protect sensitive information, are also vital in building trust and improving collective security.

The sharing of anonymized attack data can significantly contribute to the development of more effective mitigation strategies for the entire industry.
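Keyed hashing is one common way to share attack data without exposing raw addresses. Participants who share a secret key can correlate repeat sources, but outsiders cannot reverse the hashes by enumerating the IPv4 address space. Strictly speaking, this is pseudonymization rather than full anonymization. The sketch below uses Python's standard `hmac` and `hashlib` modules. The function names, event format, key, and addresses are hypothetical.

```python
import hashlib
import hmac
from collections import Counter


def pseudonymize(ip, key):
    """Replace an IP address with a truncated HMAC-SHA256 tag."""
    return hmac.new(key, ip.encode(), hashlib.sha256).hexdigest()[:16]


def share_summary(events, key):
    """Aggregate (source_ip, attack_type) events into counts safe to share."""
    counts = Counter((pseudonymize(ip, key), kind) for ip, kind in events)
    return [
        {"source": source, "type": kind, "count": count}
        for (source, kind), count in counts.most_common()
    ]


key = b"example-key-agreed-between-partners"
events = [
    ("203.0.113.7", "udp_flood"),
    ("203.0.113.7", "udp_flood"),
    ("198.51.100.9", "syn_flood"),
]
for row in share_summary(events, key):
    print(row)
```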

Illustrative Example: Visualizing the DDoS Attack

Visualizing a DDoS attack on Telegram’s servers requires a dynamic representation of the overwhelming flood of malicious traffic. Imagine Telegram’s server infrastructure depicted as a central, brightly lit, sturdy building, perhaps a modern skyscraper, representing its resilience. This building constantly receives requests, visualized as small, light blue dots streaming toward it. These represent legitimate user requests.

However, the attack is depicted by a massive wave of dark red projectiles, significantly larger and more numerous than the blue dots, relentlessly bombarding the building. These red projectiles represent the malicious traffic from the botnet. The size of each projectile could be proportional to the size of the individual attack packet, with larger projectiles indicating larger packets.

The density of the red projectiles represents the intensity of the attack, with areas of higher density indicating higher attack volumes.

The Progression of the Attack

The visualization should show the attack’s progression over time. Initially, the blue dots of legitimate traffic dominate the scene. As the attack intensifies, the red projectiles increase in number and size, gradually overwhelming the blue dots. The building, representing Telegram’s servers, starts to show signs of strain. This could be depicted by flickering lights on the building or perhaps even small cracks appearing in its structure.

As the attack reaches its peak, the red projectiles completely engulf the building, obscuring the blue dots entirely. This visually represents the disruption of service, with the servers becoming overloaded and unable to process legitimate requests. The color of the building could even shift from bright to a dull orange or red to reflect the strain on the system.
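The engulfed building has a simple arithmetic counterpart. Suppose an overloaded server drops excess requests at random, without telling attack traffic from real users. Then the share of legitimate requests it can still serve is its capacity divided by total demand. The sketch below assumes that simplified model, and the capacity and traffic rates are illustrative.

```python
def legit_served_fraction(capacity, legit_rate, attack_rate):
    """Share of legitimate requests served when excess load is dropped at random.

    capacity, legit_rate, and attack_rate are all in requests per second.
    """
    total = legit_rate + attack_rate
    if total <= capacity:
        return 1.0
    return capacity / total


# Hypothetical server handling 10,000 req/s, with 8,000 req/s of real traffic.
for attack in (0, 2_000, 12_000, 92_000):
    print(attack, legit_served_fraction(10_000, 8_000, attack))
# 0 1.0
# 2000 1.0
# 12000 0.5
# 92000 0.1
```

Mitigation improves this ratio by removing attack traffic before it counts against capacity. That is what the eventual calming of the red projectiles represents in this visualization.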

Impact on Server Resources

To further illustrate the impact, bars representing CPU usage, memory usage, and network bandwidth could be shown alongside the building. As the attack progresses, these bars would fill up, reaching 100% capacity and turning red as the servers struggle to cope with the influx of malicious traffic. This visual representation clearly shows the overwhelming nature of the attack and its impact on server resources.

The contrast between the initially calm and orderly flow of legitimate traffic and the chaotic bombardment of malicious traffic emphasizes the severity of the DDoS attack. The eventual calming of the red projectiles and the gradual return of the blue dots would signify the mitigation of the attack and the restoration of service.

Recap

The DDoS attack on Telegram’s American services served as a stark reminder of the ever-evolving landscape of cyber warfare. While Telegram successfully mitigated the attack and restored service, the incident exposed vulnerabilities and underscored the crucial need for continuous improvement in cybersecurity infrastructure. The sheer scale and sophistication of the attack demand a collaborative effort between tech companies, cybersecurity experts, and governments to develop and implement more effective defense mechanisms against future attacks.

The conversation around online security must continue, pushing for proactive measures and a shared responsibility in protecting our digital world.

Clarifying Questions

How long did the Telegram outage last?

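Reports indicate the outage lasted approximately four hours, from about 10:00 AM to 2:00 PM PST, with the worst disruption between 11:00 AM and 1:00 PM PST. The experience varied by location and by how heavily specific servers were affected. Some users saw only brief interruptions, while others were without service for several hours.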

What types of businesses were most affected by the outage?

Businesses heavily reliant on Telegram for communication, such as customer support, internal messaging, or community management, were significantly impacted. The extent of the disruption depended on their backup communication strategies.

Could this happen again?

Unfortunately, yes. DDoS attacks are a persistent threat. Even if Telegram has strengthened its defenses since the incident, similar attacks remain possible. Continuous monitoring and proactive security measures are crucial.