The Double-Edged Sword of AI: Privacy and Identity in the Future

How AI is shaping the future of privacy and personal identity is a topic that demands our attention. AI’s rapid advancement offers incredible opportunities, from streamlined identity verification to personalized experiences. But this progress comes at a cost. The same technologies that enhance our lives also gather vast amounts of personal data, raising serious concerns about surveillance, manipulation, and the very nature of our digital identities.

This exploration delves into the complex interplay between AI, privacy, and the evolving concept of self in the digital age.

We’ll examine how AI enhances data collection through facial recognition and social media monitoring, and the potential for misuse in mass surveillance. We’ll also look at the benefits and drawbacks of AI in identity verification, the ethical dilemmas of personalized advertising, and the potential for AI-driven anonymization techniques. Finally, we’ll discuss the crucial legal and regulatory landscape trying to keep pace with this rapidly evolving technology and its impact on our individual freedoms and societal structures.

AI’s Enhancement of Surveillance and Data Collection

The rise of artificial intelligence has dramatically altered the landscape of data collection and surveillance. AI’s ability to process vast amounts of information at incredible speeds allows for the creation of sophisticated systems capable of monitoring individuals and populations in ways previously unimaginable. This enhanced surveillance, while offering potential benefits in areas like crime prevention and public safety, also raises serious concerns about privacy and the potential for misuse. AI significantly improves data-gathering capabilities through technologies such as facial recognition, location tracking, and social media monitoring.

The integration of AI algorithms with various data sources creates a powerful surveillance network. This network operates far beyond the capabilities of traditional methods, leading to both opportunities and significant risks.

Facial Recognition Technology and its Implications

Facial recognition technology, powered by AI, is rapidly becoming ubiquitous. It analyzes facial features to identify individuals, often comparing them against large databases of images. This technology is used in various applications, from unlocking smartphones to identifying suspects in criminal investigations. However, its potential for misuse is substantial. Large-scale deployment of facial recognition systems without proper oversight can lead to mass surveillance, where individuals are constantly monitored without their knowledge or consent.

This can have chilling effects on freedom of expression and assembly, as people may self-censor their behavior to avoid detection and potential repercussions. Moreover, inaccuracies in facial recognition algorithms, particularly concerning individuals with darker skin tones or other diverse facial features, can lead to misidentification and unjust consequences.

Location Tracking and the Erosion of Privacy

AI-powered location tracking utilizes data from GPS devices, mobile phones, and other sensors to monitor individuals’ movements. This data can be aggregated and analyzed to create detailed profiles of individuals’ routines and habits. While location data can be useful for services like navigation and personalized advertising, its potential for misuse is significant. Governments and corporations could use this data to track individuals’ movements, potentially identifying dissidents, monitoring political activity, or targeting individuals for advertising or other purposes.

The lack of transparency and control over how this data is collected and used represents a significant threat to personal privacy.


Social Media Monitoring and AI-Driven Profiling

Social media platforms generate an enormous amount of data about individuals’ preferences, beliefs, and social connections. AI algorithms are used to analyze this data to target advertising, personalize content, and even predict future behavior. However, this data can also be used for profiling and surveillance. AI-powered systems can identify individuals based on their online activity, track their interactions, and build detailed psychological profiles.

This information can be used for political manipulation, targeted advertising, or even discriminatory practices. The lack of transparency in how these algorithms work and the potential for bias in the data they process raise serious concerns about fairness and privacy.

Examples of AI-Powered Data Collection Tools and their Impact

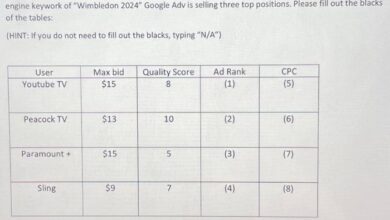

The following table provides examples of AI-powered tools used for data collection and their potential impact on privacy:

| Tool Name | Data Collected | Potential Privacy Violation | Mitigation Strategies |
|---|---|---|---|
| Facial Recognition Software (e.g., Clearview AI) | Facial images from various online sources | Mass surveillance, misidentification, violation of anonymity | Strict regulations, data minimization, algorithmic auditing, transparency requirements |
| Location Tracking Apps (e.g., many fitness and navigation apps) | GPS location data, timestamps | Tracking of movements, revealing sensitive locations (home, work, etc.), potential for stalking | User consent, data encryption, anonymization, limited data retention policies |
| Social Media Sentiment Analysis Tools | User posts, comments, likes, shares | Profiling based on political views, religious beliefs, or other sensitive attributes; potential for discrimination | Data anonymization, focus on aggregate data, ethical guidelines for AI development and deployment |
| Predictive Policing Software | Crime data, demographic information | Bias in algorithms leading to discriminatory policing practices, potential for self-fulfilling prophecies | Algorithmic transparency, bias detection and mitigation, community oversight |

AI’s Role in Identity Verification and Authentication

The rise of digital interactions has created a pressing need for robust and reliable identity verification systems. AI is rapidly becoming a cornerstone of this effort, offering sophisticated tools to authenticate individuals online and in physical spaces with greater accuracy and speed. This technology is transforming how we prove who we are, impacting everything from accessing online services to securing sensitive financial transactions. AI algorithms are changing identity verification through their ability to analyze vast datasets and identify patterns indicative of both genuine and fraudulent identities.

This involves a multifaceted approach encompassing biometric authentication, data analysis, and machine learning techniques designed to detect anomalies and inconsistencies. Biometric authentication, for example, leverages unique physiological or behavioral traits like fingerprints, facial recognition, or voice patterns to confirm identity. Beyond biometrics, AI algorithms scrutinize various data points – from IP addresses and device information to transaction history and social media activity – to create a comprehensive risk profile and assess the likelihood of identity theft.

Biometric Authentication Methods

AI significantly enhances biometric authentication by automating the process and improving its accuracy. Traditional biometric systems often relied on simple matching algorithms. AI-powered systems, however, utilize deep learning models capable of handling complex variations in biometric data, leading to a more reliable and secure identification process. For instance, facial recognition systems can now account for changes in lighting, age, or facial expressions, resulting in a more robust and less error-prone identification process.

Similarly, AI algorithms can refine fingerprint analysis to account for wear and tear, improving accuracy and reducing false rejections.
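To make the matching step concrete, here is a minimal sketch of threshold-based comparison. It assumes a deep learning model has already converted each biometric sample into a fixed-length embedding vector. The vectors, the `is_match` helper, and the 0.8 threshold are illustrative assumptions, not values taken from any particular system.

```python
from math import sqrt


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = sqrt(sum(x * x for x in a))
    norm_b = sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("embeddings must be non-zero")
    return dot / (norm_a * norm_b)


def is_match(enrolled, probe, threshold=0.8):
    """Accept the probe if it is similar enough to the enrolled template.

    The threshold is a hypothetical value; real systems tune it on
    labelled data to balance false accepts against false rejections.
    """
    return cosine_similarity(enrolled, probe) >= threshold


# Toy embeddings (invented for illustration).
enrolled_template = [1.0, 0.0, 0.0]
same_person_new_lighting = [0.9, 0.1, 0.0]
different_person = [0.0, 1.0, 0.0]

print(is_match(enrolled_template, same_person_new_lighting))  # True
print(is_match(enrolled_template, different_person))          # False
```

Raising the threshold reduces the chance of accepting an impostor but increases false rejections of the genuine user, which is the trade-off the paragraph above describes.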

AI’s Contribution to Fraud Detection

AI plays a crucial role in preventing identity theft by proactively identifying and flagging suspicious activities. Machine learning algorithms are trained on massive datasets of fraudulent and legitimate transactions, learning to identify patterns and anomalies that indicate potential fraud. This allows systems to detect unusual login attempts, suspicious payment patterns, or inconsistencies in personal information provided during online registration, potentially preventing identity theft before it occurs.

For example, AI can detect if a user’s login location suddenly changes drastically or if a large, unusual purchase is made shortly after account creation, triggering alerts and further investigation.
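The sudden-location-change check can be sketched as a simple "impossible travel" rule. This is a hand-written heuristic rather than a trained model, and the `Login` record, the 900 km/h speed limit, and the 50 km tolerance are all assumptions chosen for illustration. Production systems would typically combine a signal like this with many others.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0
MAX_PLAUSIBLE_SPEED_KMH = 900.0   # assumption: roughly airliner cruising speed
SAME_TIME_TOLERANCE_KM = 50.0     # assumption: slack for imprecise geolocation


@dataclass
class Login:
    timestamp: datetime
    lat: float
    lon: float


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points given in degrees."""
    lat1, lon1, lat2, lon2 = map(radians, (lat1, lon1, lat2, lon2))
    a = (sin((lat2 - lat1) / 2) ** 2
         + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * asin(sqrt(a))


def is_impossible_travel(prev, curr, max_speed_kmh=MAX_PLAUSIBLE_SPEED_KMH):
    """Flag a login that would require travelling faster than max_speed_kmh."""
    hours = (curr.timestamp - prev.timestamp).total_seconds() / 3600
    distance = haversine_km(prev.lat, prev.lon, curr.lat, curr.lon)
    if hours <= 0:
        # Simultaneous or out-of-order logins: suspicious only if far apart.
        return distance > SAME_TIME_TOLERANCE_KM
    return distance / hours > max_speed_kmh


london = Login(datetime(2024, 1, 1, 9, 0), 51.5074, -0.1278)
new_york = Login(london.timestamp + timedelta(hours=1), 40.7128, -74.0060)
print(is_impossible_travel(london, new_york))  # True
```

A flag like this would normally trigger step-up verification rather than an outright block, since VPNs and inaccurate IP geolocation can produce false alarms.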

Comparison of AI-Powered Identity Verification Methods

Several AI-powered identity verification methods exist, each with its own strengths and weaknesses, and their effectiveness varies with the application and context. The best choice depends on the security requirements, along with cost, ease of implementation, and user experience. Here’s a comparison of some common approaches:

- Facial Recognition: Strengths: High accuracy, ease of use, widely accessible. Weaknesses: Susceptible to spoofing (e.g., using photos or videos), privacy concerns.

- Fingerprint Scanning: Strengths: Highly accurate, difficult to spoof, widely used. Weaknesses: Requires physical contact, can be affected by wear and tear.

- Voice Recognition: Strengths: Convenient, can be used remotely. Weaknesses: Susceptible to voice imitation, affected by background noise.

- Knowledge-Based Authentication (KBA): Strengths: Relatively inexpensive to implement. Weaknesses: Vulnerable to social engineering attacks, data breaches can compromise security.

- Multi-Factor Authentication (MFA): Strengths: Significantly enhances security by combining multiple verification methods. Weaknesses: Can be less user-friendly than single-factor methods.

The Impact of AI on Personalized Advertising and Targeted Content

AI’s ability to analyze vast quantities of user data has revolutionized the advertising landscape, ushering in an era of hyper-personalized ads and recommendations. This level of personalization, while offering benefits to both consumers and businesses, also raises significant ethical concerns regarding privacy and the potential for manipulation. AI analyzes user data through various methods, including tracking website browsing history, app usage, social media activity, and even location data.

This data is then fed into sophisticated algorithms that create detailed user profiles, predicting preferences and behaviors with surprising accuracy. These profiles inform the selection of ads and content that users are most likely to engage with, increasing click-through rates and conversion rates for advertisers. The process is largely invisible to the user, happening behind the scenes as they navigate the digital world.

Ethical Implications of Targeted Advertising

The power of AI-driven personalized advertising is a double-edged sword. While it can enhance user experience by providing relevant information and offers, it also carries the potential for significant harm. One major concern is the creation of “filter bubbles” and “echo chambers.” Filter bubbles limit exposure to diverse viewpoints, reinforcing existing beliefs and potentially hindering critical thinking. Echo chambers amplify these effects, creating online environments where dissenting opinions are suppressed, leading to polarization and the spread of misinformation.

This can have far-reaching consequences on societal discourse and democratic processes.

A Hypothetical Scenario Illustrating Manipulation

Imagine Sarah, a young woman passionate about environmental issues. Through AI-powered personalized content, she’s constantly exposed to articles and videos reinforcing her existing beliefs, while dissenting opinions are effectively filtered out. Over time, she becomes increasingly entrenched in her views, dismissing any information that challenges her perspective. An AI-powered chatbot, designed to appear empathetic and understanding, subtly steers her towards extreme environmental activism, even promoting potentially harmful actions in the name of saving the planet. The algorithm, driven by engagement metrics, rewards her engagement with increasingly radical content, creating a feedback loop that intensifies her beliefs and makes her susceptible to manipulation.

AI’s Potential for Anonymization and Data Protection

The increasing reliance on data necessitates robust privacy protections. AI offers exciting possibilities for anonymizing and protecting personal information, but it’s crucial to understand both its strengths and limitations. While AI can be a powerful tool for safeguarding privacy, it’s not a silver bullet, and the potential for re-identification remains a significant challenge. AI employs various techniques to anonymize and pseudonymize data, aiming to remove or obscure identifying information while retaining the data’s utility for research or other purposes.

These methods range from simple data masking to sophisticated differential privacy algorithms. The goal is to make it computationally infeasible to link anonymized data back to individuals. However, the effectiveness of these techniques depends heavily on the quality of the anonymization process and the sophistication of potential attackers.

Methods for Anonymization and Pseudonymization

AI-driven anonymization often involves techniques like data masking (replacing sensitive information with artificial values), generalization (replacing specific values with broader categories), and perturbation (adding random noise to the data). More advanced methods include k-anonymity, l-diversity, and t-closeness, which aim to ensure that individuals cannot be uniquely identified within a dataset. Differential privacy adds carefully calibrated noise to the data, making it statistically difficult to infer individual information while still preserving aggregate trends.
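As an illustration of "carefully calibrated noise", the sketch below applies the Laplace mechanism to a counting query. Adding or removing one person changes a count by at most 1, so the noise scale is 1/epsilon. The function names and the toy data are assumptions made for this example, and the code is a teaching sketch rather than a hardened implementation.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponentials."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(values, predicate, epsilon, rng=None):
    """Return a noisy count of the values that satisfy the predicate.

    A count has sensitivity 1, so Laplace noise with scale 1/epsilon
    gives epsilon-differential privacy for this single query.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    true_count = sum(1 for v in values if predicate(v))
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)


# Toy ages, invented for illustration.
ages = [23, 35, 41, 52, 67, 29, 70, 18, 44, 61]
noisy = private_count(ages, lambda age: age >= 40, epsilon=0.5)
print(f"Noisy count of people aged 40+: {noisy:.2f}")
```

Smaller values of epsilon add more noise and give stronger privacy; larger values keep the count closer to the truth. Each additional query spends more of the privacy budget, which this sketch does not track.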

Pseudonymization, on the other hand, replaces identifying information with pseudonyms, allowing data to be linked across different datasets while protecting the original identities. However, even with these advanced methods, there’s always a risk of re-identification, especially if an attacker has access to additional information that can be correlated with the anonymized data.
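One common way to implement pseudonymization is a keyed hash, sketched below with HMAC-SHA256 from the Python standard library. The `pseudonymize` helper, the example key, and the email addresses are hypothetical. The same key yields the same pseudonym in every dataset, which is what allows records to be linked.

```python
import hashlib
import hmac


def pseudonymize(identifier, secret_key):
    """Map an identifier to a stable pseudonym using a keyed hash.

    The identifier is normalised first so that trivial differences in
    case or surrounding whitespace do not produce different pseudonyms.
    """
    normalised = identifier.strip().lower().encode("utf-8")
    return hmac.new(secret_key, normalised, hashlib.sha256).hexdigest()


# Hypothetical key; in practice it would come from a secrets manager.
key = b"example-key-do-not-use-in-production"

print(pseudonymize("alice@example.com", key))
print(pseudonymize("  Alice@Example.com ", key))  # same pseudonym as above
```

Anyone who holds the key can recompute pseudonyms for known identifiers, so the key needs the same protection as the original data. An unkeyed hash would be weaker still, because an attacker could hash a list of candidate emails and compare.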

Limitations of Current Anonymization Techniques

A major limitation is the potential for re-identification through linkage attacks. If an attacker has access to external datasets containing overlapping information, they may be able to re-identify individuals even after anonymization. For instance, combining anonymized medical data with publicly available demographic information could reveal individual identities. Furthermore, the effectiveness of anonymization techniques often depends on assumptions about the attacker’s knowledge and capabilities, which may be unrealistic.

Sophisticated machine learning models can sometimes uncover hidden patterns and correlations in anonymized data, potentially leading to re-identification. The complexity of these attacks makes it challenging to develop truly foolproof anonymization methods. The dataset released for the “Netflix Prize” competition, in which participants tried to predict user ratings, illustrates the problem: researchers later showed that some subscribers could be re-identified by cross-referencing the anonymized ratings with public IMDb reviews.
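A linkage attack can be sketched in a few lines. The example below joins an "anonymized" table to a public one on shared quasi-identifiers and also reports the dataset’s k-anonymity. All records, names, and field names are invented for illustration, and the choice of ZIP code, birth year, and sex as quasi-identifiers is an assumption.

```python
from collections import Counter

QUASI_IDENTIFIERS = ("zip", "birth_year", "sex")  # assumed linking fields


def qi_key(record):
    """Tuple of quasi-identifier values used to compare records."""
    return tuple(record[field] for field in QUASI_IDENTIFIERS)


def k_anonymity(records):
    """Size of the smallest group sharing the same quasi-identifiers."""
    counts = Counter(qi_key(r) for r in records)
    return min(counts.values()) if counts else 0


def linkage_attack(anonymized, public):
    """Return (name, diagnosis) pairs that can be linked unambiguously."""
    names_by_key = {}
    for person in public:
        names_by_key.setdefault(qi_key(person), []).append(person["name"])
    anon_counts = Counter(qi_key(r) for r in anonymized)
    matches = []
    for record in anonymized:
        key = qi_key(record)
        names = names_by_key.get(key, [])
        # A match is unambiguous only if the key is unique on both sides.
        if len(names) == 1 and anon_counts[key] == 1:
            matches.append((names[0], record["diagnosis"]))
    return matches


# Invented toy data: names were removed from the medical table.
medical = [
    {"zip": "02138", "birth_year": 1970, "sex": "F", "diagnosis": "asthma"},
    {"zip": "02138", "birth_year": 1985, "sex": "M", "diagnosis": "diabetes"},
    {"zip": "02139", "birth_year": 1985, "sex": "M", "diagnosis": "flu"},
    {"zip": "02139", "birth_year": 1985, "sex": "M", "diagnosis": "migraine"},
]
public_register = [
    {"name": "Alice Example", "zip": "02138", "birth_year": 1970, "sex": "F"},
    {"name": "Bob Example", "zip": "02139", "birth_year": 1985, "sex": "M"},
    {"name": "Carol Example", "zip": "02138", "birth_year": 1985, "sex": "M"},
]

print(k_anonymity(medical))                      # 1
print(linkage_attack(medical, public_register))
```

The two records that share quasi-identifiers protect each other, while the two unique records are re-identified. Generalizing values, for example truncating the ZIP code or bucketing birth years, raises k at the cost of less precise data.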

Examples of AI-Powered Tools Enhancing Data Privacy and Security

Several AI-powered tools are designed to enhance data privacy and security. These tools leverage machine learning and other AI techniques to improve the effectiveness of anonymization, data masking, and other privacy-enhancing technologies. The importance of these tools lies in their ability to automate and improve the accuracy and efficiency of data privacy measures. Traditional methods often require significant manual effort and are prone to human error.

AI-powered tools can help address these limitations and provide more robust protection of personal data.

- Differential Privacy Libraries: These libraries provide implementations of differential privacy algorithms, allowing developers to add noise to their data in a way that preserves utility while protecting individual privacy. Examples include OpenDP and TensorFlow Privacy.

- Federated Learning Platforms: These platforms enable collaborative machine learning on decentralized data without directly sharing the data itself. This approach preserves the privacy of individual data points while still allowing for the development of accurate and robust machine learning models. Examples include Google’s TensorFlow Federated and OpenMined’s PySyft.

- Homomorphic Encryption Systems: These systems allow computations to be performed on encrypted data without decryption, preserving the confidentiality of the data throughout the process. While computationally intensive, they offer strong privacy guarantees. Examples include HElib and SEAL.
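To show the idea behind federated learning from the list above, here is a toy sketch of federated averaging on a one-variable linear model. It is written from scratch in plain Python and does not use the TensorFlow Federated or PySyft APIs. The client data, learning rate, and round count are assumptions. Each client computes an update on its own data, and only model weights are sent to the server.

```python
def local_step(weights, data, lr):
    """One gradient-descent step on a client's private (x, y) pairs."""
    w, b = weights
    n = len(data)
    grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
    return (w - lr * grad_w, b - lr * grad_b)


def federated_average(updates, sizes):
    """Combine client weights, weighting each by its dataset size."""
    total = sum(sizes)
    w = sum(u[0] * n for u, n in zip(updates, sizes)) / total
    b = sum(u[1] * n for u, n in zip(updates, sizes)) / total
    return (w, b)


def train(clients, rounds=500, lr=0.05):
    """Run federated averaging; raw data never leaves each client list."""
    weights = (0.0, 0.0)
    sizes = [len(data) for data in clients]
    for _ in range(rounds):
        updates = [local_step(weights, data, lr) for data in clients]
        weights = federated_average(updates, sizes)
    return weights


# Two hypothetical clients whose data follows y = 2x + 1.
clients = [
    [(0.0, 1.0), (1.0, 3.0)],
    [(2.0, 5.0), (3.0, 7.0), (4.0, 9.0)],
]
w, b = train(clients)
print(f"w is close to 2: {w:.3f}, b is close to 1: {b:.3f}")
```

Sharing weights instead of records reduces exposure but does not remove it, since model updates can still leak information. This is why federated learning is often combined with differential privacy or secure aggregation.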

AI and the Future of Personal Identity in a Digital World

The increasing integration of artificial intelligence into our daily lives is fundamentally reshaping how we understand and experience personal identity. No longer confined to physical interactions and tangible records, our identities are increasingly defined by our digital footprints, constantly monitored, analyzed, and utilized by AI systems. This creates a complex interplay between the enhancement and erosion of our sense of self.

The digital realm offers unprecedented opportunities for self-expression and connection, but also introduces new vulnerabilities. AI’s ability to process vast amounts of data allows for personalized experiences, but it also raises concerns about manipulation, surveillance, and the potential loss of agency over our own identities.

A Visual Representation of AI’s Impact on Personal Identity

Imagine a vibrant, constantly shifting kaleidoscope. At its center is a core representing the individual’s inherent self – their values, beliefs, and experiences. Surrounding this core are swirling fragments of data: social media posts, online purchases, browsing history, biometric data, and more. These fragments are constantly being analyzed and categorized by AI algorithms, represented by glowing tendrils reaching into the kaleidoscope, shaping and reshaping the overall image.

Some tendrils are supportive, highlighting connections and creating personalized experiences. Others are intrusive, tracking movements and predicting behaviors. The overall image is dynamic, constantly evolving based on the data influx and the AI’s processing. The core self remains, but its perception and presentation to the world are significantly influenced by the data and the AI’s interpretation of it.

AI’s Enhancement and Threat to Stable Personal Identity

AI’s capacity to process and analyze vast datasets allows for more accurate and efficient identity verification systems, enhancing security and streamlining processes. For instance, facial recognition technology is increasingly used for border control and access control, providing a rapid and secure method of identity authentication. However, this same technology, when used without proper safeguards, can lead to misidentification, bias, and the erosion of privacy.

The potential for mass surveillance and the creation of detailed behavioral profiles poses a significant threat to the freedom and autonomy of individuals. The constant monitoring and analysis of our digital activities can lead to a sense of being constantly scrutinized, affecting self-perception and potentially leading to self-censorship. The reliance on AI for identity verification also raises concerns about data breaches and the potential for identity theft on an unprecedented scale.

Societal Impacts of Widespread AI Adoption on Personal Identity and Self-Perception

The widespread adoption of AI will have profound societal impacts on personal identity and self-perception. The creation of detailed digital profiles, based on AI analysis of our online behavior, could lead to the formation of “digital selves” that differ significantly from our offline identities. This could exacerbate existing social inequalities, as individuals from marginalized communities may face algorithmic bias and discrimination.

Furthermore, the constant pressure to curate a perfect online persona, driven by AI-powered social media algorithms, could lead to increased anxiety and feelings of inadequacy. The potential for AI-generated deepfakes and synthetic media raises further concerns about the authenticity and trustworthiness of online information, blurring the lines between reality and fabrication. The impact on self-esteem and mental health is a significant concern as individuals may struggle to reconcile their perceived online and offline selves.

For example, the rise of social comparison fuelled by curated online profiles can negatively impact self-esteem, particularly among young people. Similarly, the spread of misinformation and manipulation via AI-generated content could lead to societal fragmentation and erode trust in institutions and individuals.

AI Bias and Discrimination in Privacy and Identity Management

The seemingly objective nature of AI often masks a deeply problematic reality: the perpetuation and amplification of existing societal biases. AI systems, trained on data reflecting historical inequalities and prejudices, can inadvertently—and sometimes overtly—discriminate against specific groups in privacy and identity management. This creates significant ethical and practical challenges, undermining the very principles of fairness and equal access that these systems are intended to uphold.

Understanding these biases and developing mitigation strategies is crucial for building trustworthy and equitable AI systems.

AI bias in privacy and identity management stems from flaws in the data used to train algorithms. If the training data overrepresents certain demographics or reflects biased societal norms, the resulting AI system will likely replicate and even exacerbate those biases in its decisions. This can manifest in various ways, from unfairly denying services to specific groups to creating inaccurate or incomplete representations of identity.

The consequences can be severe, leading to real-world harm and perpetuating systemic inequalities.

Examples of AI Bias in Privacy and Identity Systems

AI bias in identity and privacy systems is a growing concern, with real-world consequences. For example, facial recognition systems have demonstrated higher error rates for individuals with darker skin tones, leading to misidentification and potentially wrongful arrests. Similarly, loan application algorithms trained on historical data may unfairly deny credit to applicants from specific socioeconomic backgrounds, perpetuating cycles of financial inequality.

In identity verification systems, biases can lead to inaccurate or discriminatory outcomes in processes such as passport applications or online account creation, impacting access to essential services. These are not isolated incidents; they highlight a systemic problem requiring immediate attention.

Strategies for Mitigating Bias in AI Systems

Addressing AI bias requires a multi-faceted approach encompassing data collection, algorithm design, and ongoing monitoring. Simply put, creating fair AI systems requires careful consideration at every stage of the development process. It’s not enough to simply build the system and hope for the best; proactive measures are needed to ensure equity and fairness.

| Bias Type | Impact on Privacy | Affected Groups | Mitigation Strategies |
|---|---|---|---|
| Data Bias (e.g., skewed representation of certain demographics in training data) | Inaccurate profiling, unfair targeting of specific groups with surveillance or advertising | Racial and ethnic minorities, low-income individuals, women | Data augmentation, resampling techniques, careful data curation and cleaning, auditing for representation |
| Algorithmic Bias (e.g., algorithms designed with inherent biases) | Discriminatory access to services, unfair denial of privacy protections | Individuals from marginalized communities, those with disabilities | Algorithmic transparency, explainable AI techniques, fairness-aware algorithms, independent audits |
| Feedback Loop Bias (e.g., biased outputs reinforcing existing biases in future data) | Reinforcement of stereotypes and discrimination over time, limited opportunities for certain groups | Many vulnerable groups, disproportionately affecting those already marginalized | Regular monitoring and evaluation of system outputs, feedback mechanisms to identify and correct biases, human-in-the-loop systems |
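The monitoring and auditing strategies in the table can start with something as simple as comparing error rates across groups. The sketch below is one possible audit, assuming each evaluation record carries a group label, the system’s decision, and the ground truth. The group names and records are invented, and the choice of metric is an assumption, since fairness can be measured in several ways.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """Fraction of wrong decisions per group.

    Each record is a (group, predicted, actual) tuple of a label and
    two booleans.
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        if predicted != actual:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


def max_disparity(rates):
    """Gap between the worst-served and best-served group."""
    return max(rates.values()) - min(rates.values())


# Invented evaluation results for a hypothetical face-matching system.
evaluation = [
    ("group_a", True, True), ("group_a", False, False),
    ("group_a", True, True), ("group_a", True, False),
    ("group_b", True, False), ("group_b", False, True),
    ("group_b", True, True), ("group_b", False, False),
]

rates = error_rates_by_group(evaluation)
print(rates)                 # {'group_a': 0.25, 'group_b': 0.5}
print(max_disparity(rates))  # 0.25
```

A large gap is a signal to investigate the training data and decision thresholds for the affected group. It does not by itself identify the cause, so an audit like this complements the human oversight listed in the table.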

The Legal and Regulatory Landscape of AI and Privacy

The rapid advancement of artificial intelligence (AI) has created a complex and evolving legal landscape surrounding its use and impact on personal data. Existing laws and regulations, designed for a pre-AI era, are struggling to keep pace with the innovative applications and potential harms of this technology. Navigating this terrain requires understanding the diverse approaches taken by different jurisdictions and the inherent challenges in regulating a technology that’s constantly changing. These rules vary significantly across jurisdictions, reflecting different cultural, political, and economic priorities.

The European Union, for instance, has taken a leading role with the General Data Protection Regulation (GDPR), a comprehensive framework focused on individual rights and data protection. The GDPR imposes strict rules on data processing, including consent requirements, data minimization, and the right to be forgotten. In contrast, the United States has a more sectoral approach, with laws like the Health Insurance Portability and Accountability Act (HIPAA) protecting health information and the California Consumer Privacy Act (CCPA) granting California residents specific rights regarding their personal data.

These differences highlight the challenges of creating a globally harmonized regulatory framework for AI.

The General Data Protection Regulation (GDPR) and its Implications for AI

The GDPR, while not explicitly mentioning AI, has significant implications for its use, including Article 22’s limits on decisions based solely on automated processing. Its principles of lawfulness, fairness, and transparency apply directly to AI systems processing personal data. The GDPR’s requirements for data minimization, purpose limitation, and accountability pose challenges for AI developers who often rely on large datasets for training and operation. For example, the use of facial recognition technology, a prominent AI application, requires careful consideration of the GDPR’s principles, especially regarding data minimization and purpose limitation.

Failure to comply with the GDPR can result in substantial fines, making it a crucial consideration for businesses using AI.

Challenges in Regulating AI’s Impact on Privacy

Regulating AI’s impact on privacy presents unique challenges due to its rapid evolution and inherent complexity. The speed at which new AI technologies emerge makes it difficult for lawmakers to create regulations that remain relevant and effective. Furthermore, the opaque nature of some AI algorithms, often referred to as “black boxes,” makes it challenging to assess their potential risks to privacy and to ensure accountability.

This lack of transparency hinders effective oversight and enforcement. Another significant challenge lies in defining and regulating the use of AI in areas like predictive policing or automated decision-making, where biases in algorithms can lead to discriminatory outcomes. These challenges necessitate a flexible and adaptive regulatory approach, potentially involving ongoing collaboration between policymakers, researchers, and industry stakeholders.

Cross-Jurisdictional Differences in AI Regulation

The lack of a unified global regulatory framework for AI creates complexities for businesses operating internationally. Companies must navigate a patchwork of differing laws and regulations, leading to compliance costs and potential inconsistencies. For instance, a company using AI for targeted advertising might face different requirements regarding data collection and consent in the EU compared to the US or Asia.

This highlights the need for international cooperation and harmonization efforts to create a more consistent and predictable regulatory environment for AI. The development of common standards and principles could help bridge these gaps and promote innovation while protecting individual privacy rights.

Final Summary

The future of privacy and personal identity in an AI-driven world is undeniably intertwined with the ethical considerations surrounding data collection and usage. While AI offers solutions for enhanced security and personalized experiences, the potential for misuse and the erosion of privacy remain significant challenges. Navigating this complex landscape requires a proactive approach, involving both technological innovation in data protection and robust legal frameworks that prioritize individual rights.

Ultimately, the responsible development and deployment of AI will be crucial in shaping a future where technology serves humanity, rather than the other way around. The conversation, however, is far from over, and ongoing dialogue is essential to ensure a future where technology and privacy can coexist.

Frequently Asked Questions

What are some examples of AI bias in identity verification systems?

Facial recognition systems have shown bias against certain racial groups, leading to inaccurate or discriminatory outcomes. Similarly, algorithms used in loan applications or background checks can perpetuate existing societal biases.

How can I protect my privacy in an AI-driven world?

Be mindful of the data you share online, utilize strong passwords and multi-factor authentication, regularly review your privacy settings on social media and other platforms, and consider using privacy-enhancing technologies like VPNs and ad blockers.

What is the role of legislation in addressing AI’s impact on privacy?

Legislation plays a crucial role in setting guidelines and establishing accountability for the use of AI and personal data. Laws like GDPR in Europe and CCPA in California are examples of efforts to regulate data collection and usage, but the rapid pace of AI development presents ongoing challenges for lawmakers.