Businesses Shift Toward Compliance as Code

Compliance as code sounds like a techy buzzword, right? But it’s actually a game-changer for how companies manage regulations. Imagine a world where compliance isn’t a mountain of paperwork and endless audits, but a streamlined, automated process. That’s the promise of “Compliance as Code,” and it’s rapidly transforming how businesses operate, especially in industries with stringent regulatory requirements.

This shift isn’t just about efficiency; it’s about reducing risk, improving accuracy, and freeing up valuable resources.

This new approach uses code to define and enforce compliance policies, automating many of the tedious tasks traditionally handled manually. Think of it as bringing the precision and repeatability of software development to the world of regulatory compliance. This allows businesses to react more quickly to changes in regulations, reducing the chance of costly non-compliance penalties. We’ll explore the practical steps involved in implementing this transformative approach, examining its benefits, challenges, and future potential.

Defining “Compliance as Code”

Compliance as Code (CaC) is revolutionizing how businesses manage regulatory compliance. It’s a paradigm shift from traditional, manual methods, leveraging automation and coding principles to build a more efficient and effective compliance program. Think of it as treating compliance rules and processes like software code, allowing for automation, testing, and continuous improvement. Compliance as Code embodies several core principles.

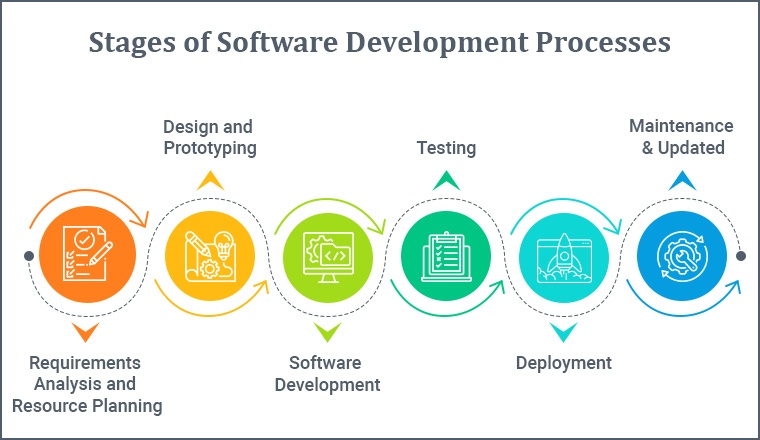

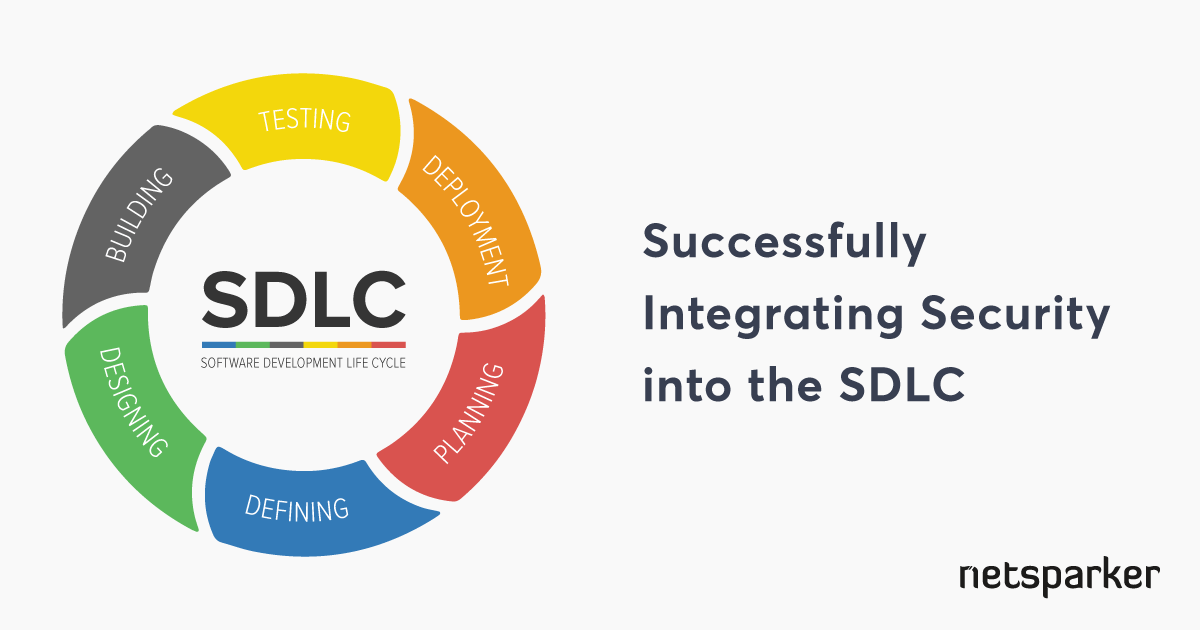

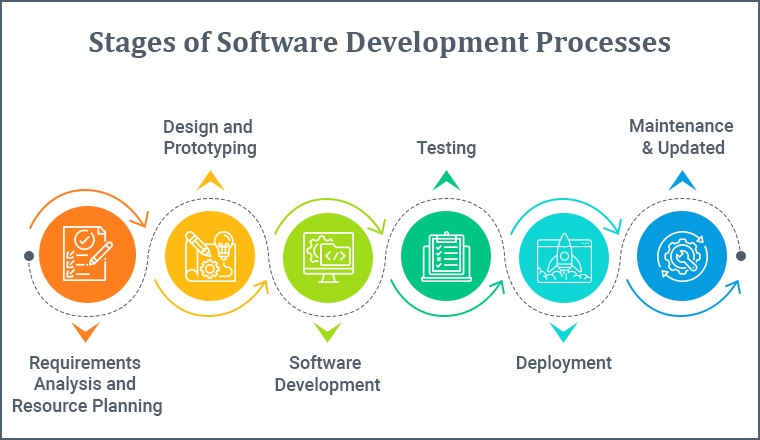

Firstly, it emphasizes automation. Repetitive compliance tasks, like data validation or policy checks, are automated, freeing up human resources for more strategic initiatives. Secondly, it promotes a proactive approach. By embedding compliance checks directly into the software development lifecycle (SDLC), issues are identified and addressed early, preventing costly remediation later. Finally, CaC relies on version control and continuous monitoring, ensuring that compliance configurations remain up-to-date and aligned with evolving regulations.

This creates a repeatable and auditable process.

Benefits of Adopting a Compliance as Code Approach

The advantages of adopting a Compliance as Code approach are numerous and impactful. By automating compliance tasks, businesses can significantly reduce operational costs and human error. This increased efficiency translates to faster time-to-market for new products and services, as compliance checks are integrated seamlessly into the development process. Furthermore, CaC fosters a culture of compliance, embedding best practices directly into the workflow and ensuring consistent adherence to regulations.

Improved visibility into compliance status through automated reporting and dashboards provides valuable insights for management and stakeholders. This proactive approach reduces the risk of non-compliance penalties and reputational damage. For example, a financial institution could automate KYC/AML checks, significantly reducing the risk of fines and reputational harm associated with failing to comply with anti-money laundering regulations.

Comparison of Compliance as Code and Traditional Compliance Methods

Traditional compliance methods typically rely on manual processes, spreadsheets, and disparate systems. This approach is often inefficient, prone to errors, and lacks the scalability to handle the increasing complexity of modern regulations. In contrast, Compliance as Code provides a centralized, automated, and auditable system. Traditional methods struggle to keep up with rapidly changing regulations, whereas CaC allows for agile updates and continuous monitoring.

The lack of automation in traditional methods often leads to increased costs and extended timeframes for compliance projects. CaC’s automated checks and continuous monitoring reduce both the financial and temporal burden associated with compliance. For example, a healthcare provider using traditional methods might spend significant time and resources manually reviewing patient data for HIPAA compliance, while a CaC approach could automate this process, freeing up staff and reducing errors.

Implementation Costs and Timeframes

| Method | Initial Investment | Ongoing Maintenance | Time to Implementation |
|---|---|---|---|
| Traditional Compliance | High (consultants, training, software licenses) | High (manual checks, audits, updates) | Long (months to years) |
| Compliance as Code | Moderate (software, infrastructure, developer training) | Moderate (software updates, monitoring) | Moderate (weeks to months) |

Implementation Strategies for Compliance as Code

Shifting to a Compliance as Code framework isn’t a simple switch; it’s a strategic journey requiring careful planning and execution. Success hinges on a well-defined implementation strategy that accounts for your organization’s specific needs and existing infrastructure. This involves a phased approach, leveraging automation and the right tools to ensure a smooth transition and lasting impact. Implementing a Compliance as Code framework involves a structured approach encompassing several key steps.

This isn’t a one-size-fits-all solution; the specifics will vary depending on the size and complexity of your organization and the regulatory landscape you operate within. However, a common thread runs through successful implementations: a commitment to automation and a phased rollout.

Key Steps in Implementing a Compliance as Code Framework

A successful implementation requires a systematic approach. The following steps provide a roadmap:

- Assessment and Planning: Begin by identifying your organization’s compliance requirements and current processes. This involves a thorough audit to pinpoint areas needing improvement and determine the scope of your Compliance as Code initiative. Consider the regulations you must adhere to (e.g., GDPR, HIPAA, PCI DSS) and the associated risks. This phase also includes defining success metrics and allocating resources.

- Tool Selection and Integration: Choose tools that align with your specific needs and integrate seamlessly with your existing infrastructure. This might involve Infrastructure-as-Code (IaC) tools like Terraform or Ansible, configuration management tools like Chef or Puppet, and security scanning tools like SonarQube or Snyk. Consider factors like scalability, ease of use, and integration capabilities.

- Policy Definition and Automation: Translate your compliance policies into machine-readable code. This is a crucial step that forms the foundation of your Compliance as Code framework. Use a consistent coding style and version control to manage changes effectively. Automate as many processes as possible to reduce manual intervention and human error.

- Testing and Validation: Thoroughly test your automated compliance checks to ensure accuracy and effectiveness. This includes unit testing, integration testing, and end-to-end testing. Regularly validate the framework against evolving regulatory requirements and organizational policies.

- Deployment and Monitoring: Deploy your Compliance as Code framework gradually, starting with a pilot project before scaling it across the organization. Continuously monitor the framework’s performance and make necessary adjustments to maintain its effectiveness. Regular audits and reviews are crucial.
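To make the policy-definition and testing steps more concrete, here is a minimal Python sketch. It assumes two hypothetical storage policies ("must be encrypted at rest" and "must not be publicly readable") and a hypothetical resource format of plain dictionaries; the policy IDs and field names are illustrative, not taken from any particular tool.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Policy:
    """A compliance rule expressed as code: an ID, a description, and a check."""
    policy_id: str
    description: str
    check: Callable[[dict], bool]  # returns True when the resource complies


# Hypothetical policies; IDs and resource fields are illustrative assumptions.
POLICIES = [
    Policy("STOR-001", "Storage must be encrypted at rest",
           lambda r: r.get("encrypted") is True),
    Policy("STOR-002", "Storage must not be publicly readable",
           lambda r: r.get("public") is not True),
]


def evaluate(resource: dict, policies=POLICIES) -> list[str]:
    """Return the IDs of every policy the resource violates."""
    return [p.policy_id for p in policies if not p.check(resource)]


# Unit tests for the policies themselves (runnable with a test runner such as pytest).
def test_encrypted_private_bucket_passes():
    assert evaluate({"name": "logs", "encrypted": True, "public": False}) == []


def test_public_unencrypted_bucket_fails_both_rules():
    assert evaluate({"name": "tmp", "public": True}) == ["STOR-001", "STOR-002"]
```

Because the policies are ordinary code, they can sit in version control next to their tests, and a change to a rule is reviewed and tested like any other code change.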

The Role of Automation in Streamlining Compliance Processes

Automation is the cornerstone of a successful Compliance as Code implementation. It eliminates manual processes prone to human error, reduces the time and resources required for compliance checks, and allows for faster response times to potential violations. Automation can encompass various aspects, including:

- Automated policy enforcement: Automatically checking for compliance violations and triggering alerts.

- Automated remediation: Automatically fixing identified violations whenever possible.

- Automated reporting: Generating regular compliance reports to track progress and identify areas for improvement.

By automating these tasks, organizations can significantly improve their compliance posture, reduce operational costs, and free up personnel to focus on more strategic initiatives.
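The three kinds of automation can be pictured as one small loop: find violations, fix the ones with a known safe remedy, and report the rest. The sketch below is a simplified illustration under stated assumptions: the resource fields, rule names, and the choice of which fixes count as "safe" are all hypothetical.

```python
from collections import Counter


def find_violations(resources: list[dict]) -> list[tuple[str, str]]:
    """Automated enforcement: flag each resource that breaks a rule."""
    violations = []
    for r in resources:
        if not r.get("encrypted", False):
            violations.append((r["name"], "encryption_disabled"))
        if r.get("public", False):
            violations.append((r["name"], "public_access"))
    return violations


# Automated remediation: only rules with a known safe fix are fixed automatically.
# Which fixes count as "safe" is an assumption each organization must decide.
SAFE_FIXES = {
    "public_access": lambda r: r.update(public=False),
}


def remediate(resources: list[dict], violations: list[tuple[str, str]]) -> list[tuple[str, str]]:
    """Apply safe fixes in place; return the violations that still need a human."""
    by_name = {r["name"]: r for r in resources}
    unresolved = []
    for name, rule in violations:
        fix = SAFE_FIXES.get(rule)
        if fix:
            fix(by_name[name])
        else:
            unresolved.append((name, rule))
    return unresolved


def report(violations, unresolved) -> dict:
    """Automated reporting: summarize what was found and what remains open."""
    return {
        "found": len(violations),
        "auto_fixed": len(violations) - len(unresolved),
        "open_by_rule": dict(Counter(rule for _, rule in unresolved)),
    }


resources = [
    {"name": "billing-db", "encrypted": True, "public": True},
    {"name": "scratch", "encrypted": False, "public": False},
]
found = find_violations(resources)
still_open = remediate(resources, found)
print(report(found, still_open))
# {'found': 2, 'auto_fixed': 1, 'open_by_rule': {'encryption_disabled': 1}}
```

Keeping remediation limited to an explicit allow-list of fixes is a deliberate design choice here: anything not on the list falls through to human review rather than being changed automatically.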

Examples of Tools and Technologies Used for Compliance as Code

Several tools and technologies facilitate the implementation of Compliance as Code. These tools vary in functionality and scope, catering to different needs and organizational structures. Some prominent examples include:

- Chef/Puppet/Ansible: Configuration management tools that automate the provisioning and configuration of infrastructure, helping keep configurations consistent and compliant across systems.

- Terraform: An Infrastructure-as-Code (IaC) tool that lets you define and manage infrastructure declaratively, so planned changes can be checked against compliance policies before they are applied.

- SonarQube/Snyk: Security scanning tools that automatically analyze code for vulnerabilities and compliance violations.

- InSpec: A testing framework specifically designed for compliance automation, allowing you to write tests that verify compliance with specific policies and regulations.

The choice of tools depends on your specific needs and existing infrastructure. Many organizations utilize a combination of tools to achieve comprehensive compliance coverage.
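As one example of checking infrastructure before it is deployed, the sketch below reads a Terraform plan exported as JSON and flags resources missing required tags. The `resource_changes`, `address`, `type`, and `change.after` fields follow the JSON plan format that `terraform show -json` produces for a saved plan file; the tag policy, the set of resource types it applies to, and the sample plan are hypothetical assumptions for illustration.

```python
import json

# Hypothetical organizational policy: these resource types must carry these tags.
TAGGABLE_TYPES = {"aws_s3_bucket", "aws_instance"}
REQUIRED_TAGS = {"owner", "data_classification"}


def missing_tags(plan: dict) -> dict[str, set[str]]:
    """Map each planned resource address to the required tags it would lack."""
    problems = {}
    for rc in plan.get("resource_changes", []):
        if rc.get("type") not in TAGGABLE_TYPES:
            continue
        after = rc.get("change", {}).get("after")
        if after is None:  # resource is being destroyed; nothing to check
            continue
        tags = after.get("tags") or {}
        missing = REQUIRED_TAGS - set(tags)
        if missing:
            problems[rc["address"]] = missing
    return problems


# Trimmed-down, hypothetical example of `terraform show -json` plan output.
plan_json = """
{"resource_changes": [
  {"address": "aws_s3_bucket.logs", "type": "aws_s3_bucket",
   "change": {"actions": ["create"], "after": {"tags": {"owner": "platform"}}}},
  {"address": "aws_instance.web", "type": "aws_instance",
   "change": {"actions": ["update"],
              "after": {"tags": {"owner": "web", "data_classification": "internal"}}}}
]}
"""
print(missing_tags(json.loads(plan_json)))  # {'aws_s3_bucket.logs': {'data_classification'}}
```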

Phased Implementation Plan for a Medium-Sized Business

Let’s consider a hypothetical medium-sized business adopting Compliance as Code. A phased approach, starting with a pilot project, is recommended:

- Phase 1: Assessment and Pilot Project (3 months): Conduct a thorough assessment of compliance requirements, focusing on a specific area (e.g., security configurations). Select a pilot project involving a small subset of systems or applications. Implement basic automation for this area using chosen tools.

- Phase 2: Expansion and Refinement (6 months): Expand the Compliance as Code framework to include additional areas and systems. Refine the automation processes based on lessons learned from the pilot project. Implement more sophisticated reporting and monitoring capabilities.

- Phase 3: Full Integration and Optimization (12 months): Integrate the Compliance as Code framework across the entire organization. Optimize the framework for efficiency and scalability. Implement continuous integration and continuous delivery (CI/CD) pipelines to automate compliance checks throughout the software development lifecycle.

This phased approach allows for iterative improvement, minimizing disruption and maximizing the chances of success. Regular reviews and adjustments are essential throughout the implementation process.
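In Phase 3, compliance checks typically act as a gate in the CI/CD pipeline: the job fails when a sufficiently serious violation is found. A minimal sketch of that gate, assuming a hypothetical finding format and a hypothetical four-level severity scale, might look like this:

```python
import sys

# Hypothetical severity scale; higher rank means more serious.
SEVERITY_RANK = {"low": 1, "medium": 2, "high": 3, "critical": 4}


def gate(violations: list[dict], fail_at: str = "high") -> int:
    """Return a CI exit code: 1 if any violation meets the threshold, else 0."""
    threshold = SEVERITY_RANK[fail_at]
    blocking = [v for v in violations if SEVERITY_RANK[v["severity"]] >= threshold]
    for v in blocking:
        print(f"BLOCKING {v['policy_id']}: {v['resource']}", file=sys.stderr)
    return 1 if blocking else 0


findings = [
    {"policy_id": "STOR-002", "resource": "scratch", "severity": "medium"},
    {"policy_id": "STOR-001", "resource": "scratch", "severity": "high"},
]
exit_code = gate(findings)  # 1: the high-severity finding blocks the build
```

In a pipeline, the job step would end with `sys.exit(gate(findings))`, so a non-zero exit code stops the merge or deployment while lower-severity findings are still printed for follow-up.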

Integrating Compliance as Code with Existing Systems

Integrating Compliance as Code (CaC) into your existing infrastructure isn’t a simple plug-and-play operation, especially when dealing with legacy systems. The complexities involved require careful planning and execution to avoid disrupting operations and compromising data integrity. Successfully navigating this integration is crucial for realizing the full benefits of CaC. The inherent challenges of integrating CaC with legacy systems stem from several factors.

Legacy systems often lack the flexibility and APIs necessary for seamless integration with modern CaC tools. Data structures might be incompatible, requiring extensive data transformation and mapping. Furthermore, these systems may rely on outdated technologies and processes that are difficult to adapt to the automated, policy-driven nature of CaC. Finally, the lack of comprehensive documentation for legacy systems can significantly hamper the integration process.

Challenges of Integrating Compliance as Code with Legacy Systems

Integrating CaC with legacy systems presents significant hurdles. The lack of well-defined APIs in older systems often necessitates custom integrations, increasing development time and costs. Data inconsistencies between the legacy system and the CaC platform need to be addressed through meticulous data cleansing and transformation processes. Furthermore, the risk of introducing vulnerabilities during the integration process is heightened due to the potential incompatibility between the security protocols of the different systems.

Finally, the absence of clear documentation for legacy systems makes understanding their functionality and data flow extremely difficult, slowing down the integration process.

Strategies for Mitigating Risks Associated with the Integration Process

A phased approach is essential for minimizing risks. Start with a pilot project, integrating CaC with a smaller, less critical part of the legacy system to test the integration process and identify potential issues before scaling up. Employ rigorous testing throughout the integration process, including unit tests, integration tests, and user acceptance testing, to ensure that the CaC implementation works as expected and does not introduce vulnerabilities.

Establish clear communication channels between the development team and the legacy system maintainers to facilitate knowledge sharing and problem-solving. Finally, document the entire integration process meticulously, including the decisions made, the challenges encountered, and the solutions implemented, to facilitate future maintenance and updates.

Ensuring Data Consistency and Accuracy Across Different Systems

Data consistency and accuracy are paramount. Implement robust data validation checks at each stage of the integration process to ensure that data is transformed and mapped correctly. Employ data quality tools to identify and correct inconsistencies in the legacy system’s data before integration. Establish a clear data governance framework to define data ownership, access controls, and data quality standards.

Regular data reconciliation processes should be implemented to compare data across the legacy system and the CaC platform, identifying and resolving any discrepancies. Consider using data virtualization techniques to provide a unified view of data across different systems without requiring complex data migration processes.
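A reconciliation job boils down to comparing two record sets by a shared key and listing what differs. The Python sketch below assumes both systems can export records as dictionaries keyed by a common ID; the IDs and field names are hypothetical.

```python
def reconcile(legacy: dict[str, dict], platform: dict[str, dict]) -> dict[str, list]:
    """Compare two record sets keyed by ID and list every discrepancy."""
    only_legacy = sorted(legacy.keys() - platform.keys())
    only_platform = sorted(platform.keys() - legacy.keys())
    mismatched = []
    for key in sorted(legacy.keys() & platform.keys()):
        a, b = legacy[key], platform[key]
        # Compare every field present in either copy of the record.
        for field in sorted(a.keys() | b.keys()):
            if a.get(field) != b.get(field):
                mismatched.append((key, field, a.get(field), b.get(field)))
    return {"only_legacy": only_legacy, "only_platform": only_platform,
            "mismatched": mismatched}


# Hypothetical exports from a legacy system and the CaC platform.
legacy = {"C1": {"status": "active", "region": "EU"},
          "C2": {"status": "closed", "region": "US"}}
platform = {"C1": {"status": "active", "region": "US"},
            "C3": {"status": "active", "region": "EU"}}

result = reconcile(legacy, platform)
print(result)
```

Each discrepancy type maps to a different follow-up: records missing on one side usually point to a sync or migration gap, while field mismatches point to a transformation or mapping error.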

Step-by-Step Guide for Integrating Compliance as Code with a Customer Relationship Management (CRM) System

- Assessment: Analyze the CRM system’s architecture, data structures, and APIs to identify integration points and potential challenges.

- Planning: Define the scope of the integration, outlining the specific compliance requirements to be automated and the data to be exchanged between the CRM and the CaC platform.

- Development: Develop custom integrations or utilize existing connectors to link the CRM and the CaC platform. This may involve creating custom scripts or using APIs to automate data exchange.

- Testing: Conduct thorough testing, including unit, integration, and user acceptance testing, to ensure data accuracy and compliance with the defined requirements.

- Deployment: Deploy the integrated system to a production environment, ensuring proper monitoring and logging to track system performance and identify potential issues.

- Monitoring: Continuously monitor the system’s performance and compliance posture, making adjustments as needed to address any issues or changes in requirements.
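The development step often reduces to pulling records from the CRM and running compliance rules over them. The sketch below uses a hypothetical rule, "contacts flagged for marketing must have a recorded consent date", loosely inspired by privacy regulations such as GDPR; it is not a statement of what any regulation requires. The record fields are assumptions, and the hard-coded list stands in for data that would come from the CRM's API.

```python
from datetime import date

# Hypothetical CRM export; in practice these records would come from the CRM's API.
contacts = [
    {"id": "C-100", "marketing_opt_in": True, "consent_date": date(2024, 3, 1)},
    {"id": "C-101", "marketing_opt_in": True, "consent_date": None},
    {"id": "C-102", "marketing_opt_in": False, "consent_date": None},
]


def consent_violations(records: list[dict]) -> list[str]:
    """Flag contacts marked for marketing that have no recorded consent date."""
    return [r["id"] for r in records
            if r["marketing_opt_in"] and r["consent_date"] is None]


print(consent_violations(contacts))  # ['C-101']
```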

Measuring the Effectiveness of Compliance as Code

Implementing Compliance as Code is a significant undertaking, promising streamlined processes and reduced risk. However, its success isn’t self-evident; regular measurement and evaluation are crucial to ensure the initiative delivers on its objectives. This involves establishing clear Key Performance Indicators (KPIs) and a robust tracking system to monitor progress and identify areas for improvement. Effective measurement provides data-driven insights into the effectiveness of your Compliance as Code framework.

This data allows for continuous refinement and optimization, ultimately maximizing the return on investment and minimizing compliance risks. Without this feedback loop, the initiative risks becoming a costly exercise with limited tangible benefits.

Key Performance Indicators (KPIs) for Compliance as Code

A well-defined set of KPIs provides a clear picture of the initiative’s performance. These metrics should be tailored to your specific organization and regulatory requirements, but some common and effective KPIs include the following (the target and current values shown are illustrative):

| KPI | Target Value | Current Value | Trend |
|---|---|---|---|
| Number of Compliance Violations | < 10 per quarter | 5 this quarter | Decreasing |
| Time to Remediation of Violations | < 24 hours | 18 hours | Improving |
| Automation Rate of Compliance Checks | 90% | 85% | Stable |
| Accuracy of Automated Compliance Checks | 99% | 98% | Stable |
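Most of these KPIs can be computed directly from violation and check records. The sketch below assumes a hypothetical quarter of data: each violation is a `(detected_at, remediated_at)` pair, and the check counts are made-up numbers. The targets mirror the ones in the table.

```python
from datetime import datetime, timedelta

# Hypothetical quarter of violation records: (detected_at, remediated_at).
violations = [
    (datetime(2024, 1, 3, 9, 0), datetime(2024, 1, 3, 21, 0)),   # 12 h
    (datetime(2024, 2, 10, 8, 0), datetime(2024, 2, 11, 8, 0)),  # 24 h
    (datetime(2024, 3, 5, 14, 0), datetime(2024, 3, 5, 20, 0)),  # 6 h
]
checks_total, checks_automated = 200, 170  # hypothetical check counts


def mean_time_to_remediation(records) -> timedelta:
    """Average gap between detection and remediation."""
    durations = [fixed - found for found, fixed in records]
    return sum(durations, timedelta()) / len(durations)


kpis = {
    "violations": len(violations),
    "mttr_hours": mean_time_to_remediation(violations).total_seconds() / 3600,
    "automation_rate": checks_automated / checks_total,
}
# Compare each KPI against its target from the table.
meets_targets = {
    "violations": kpis["violations"] < 10,
    "mttr_hours": kpis["mttr_hours"] < 24,
    "automation_rate": kpis["automation_rate"] >= 0.90,
}
print(kpis)           # {'violations': 3, 'mttr_hours': 14.0, 'automation_rate': 0.85}
print(meets_targets)  # {'violations': True, 'mttr_hours': True, 'automation_rate': False}
```

Measuring accuracy is harder to automate, because it needs a labeled sample of checks (for example, results confirmed or overturned during audits) to count false positives and false negatives.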

Tracking and Reporting on Compliance Violations

A robust system for tracking and reporting compliance violations is essential. This system should capture details such as the type of violation, the affected system or process, the date and time of the violation, and the remediation steps taken. Automated alerts triggered by violations are particularly valuable, allowing for rapid response and mitigation. Regular reports summarizing the number and type of violations, along with remediation timelines, should be generated and distributed to relevant stakeholders. Consider using a centralized compliance management platform that integrates with your Compliance as Code tools.

This allows for a unified view of compliance status across the organization and simplifies reporting. Such platforms often provide dashboards and reporting tools that can be customized to meet your specific needs. For example, a report might show a breakdown of violations by severity, team, or system, enabling targeted improvements.
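A violation record with the fields described above, plus a breakdown report by any of those fields, could be sketched in Python as follows. The class, field names, and sample entries are hypothetical; a compliance management platform would provide its own schema.

```python
from collections import Counter
from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class Violation:
    """One tracked violation: type, affected system, severity, time, remediation."""
    violation_type: str
    system: str
    severity: str
    detected_at: datetime
    remediation_steps: list[str] = field(default_factory=list)


def breakdown(violations: list[Violation], by: str) -> dict[str, int]:
    """Count violations grouped by any attribute, e.g. 'severity' or 'system'."""
    return dict(Counter(getattr(v, by) for v in violations))


log = [
    Violation("unencrypted_storage", "billing", "high", datetime(2024, 4, 2, 10, 15)),
    Violation("public_access", "billing", "medium", datetime(2024, 4, 3, 16, 40),
              ["Disabled public ACL"]),
    Violation("missing_tags", "analytics", "low", datetime(2024, 4, 5, 9, 5)),
]
print(breakdown(log, "severity"))  # {'high': 1, 'medium': 1, 'low': 1}
print(breakdown(log, "system"))    # {'billing': 2, 'analytics': 1}
```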

Best Practices for Continuous Improvement

Continuous improvement is vital for maintaining the effectiveness of a Compliance as Code framework. Regular reviews of the KPIs, along with feedback from stakeholders, can identify areas needing attention. This might involve refining existing policies, improving automation, or enhancing the training provided to developers and other relevant personnel. Implementing a feedback loop is critical. Encourage developers and compliance officers to provide input on the effectiveness of the system.

Regular audits, both internal and potentially external, are important to verify the framework’s accuracy and completeness. Consider using automated tools to monitor the performance of the Compliance as Code system itself, identifying potential weaknesses or areas for optimization. Finally, staying abreast of changes in regulations and industry best practices ensures the framework remains relevant and effective over time.

Security and Risk Management within Compliance as Code

Implementing Compliance as Code offers significant advantages, streamlining regulatory adherence and reducing manual effort. However, this approach introduces new security considerations that must be carefully addressed to prevent vulnerabilities and maintain the integrity of your compliance program. A robust security strategy is paramount to realizing the full benefits of Compliance as Code without compromising sensitive data or introducing new risks. The shift to automating compliance processes inherently increases the attack surface.

Malicious actors could exploit weaknesses in the code, infrastructure, or access controls to manipulate compliance data, bypass controls, or even gain unauthorized access to sensitive information. Therefore, proactive security measures are crucial to safeguard the integrity and confidentiality of your compliance program.

Access Control Mechanisms

Effective access control is fundamental to securing your Compliance as Code infrastructure. This involves implementing granular permissions based on the principle of least privilege, ensuring that users only have access to the resources and data they absolutely need to perform their duties. Role-based access control (RBAC) is a widely used model that allows for the definition of roles with specific permissions, simplifying user management and enhancing security.

For example, a developer might have access to edit the compliance code, but not to view sensitive audit logs, while an auditor would have read-only access to the logs but not the ability to modify the code. Multi-factor authentication (MFA) should also be mandatory for all users accessing the Compliance as Code system to add an extra layer of security against unauthorized login attempts.
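The developer/auditor example translates naturally into a deny-by-default RBAC check. The sketch below is a minimal illustration; the role names, permission names, and the `mfa_verified` flag are hypothetical, and a real deployment would rely on its identity provider and access-management system rather than an in-code table.

```python
from enum import Enum, auto


class Permission(Enum):
    EDIT_POLICY_CODE = auto()
    READ_AUDIT_LOGS = auto()


# Least privilege: each role gets only what its duties require.
ROLE_PERMISSIONS = {
    "developer": {Permission.EDIT_POLICY_CODE},  # can change code, cannot read logs
    "auditor": {Permission.READ_AUDIT_LOGS},     # read-only access to logs
}


def is_allowed(role: str, permission: Permission, mfa_verified: bool) -> bool:
    """Deny by default: unknown roles and sessions without MFA get nothing."""
    if not mfa_verified:
        return False
    return permission in ROLE_PERMISSIONS.get(role, set())


print(is_allowed("developer", Permission.EDIT_POLICY_CODE, mfa_verified=True))  # True
print(is_allowed("developer", Permission.READ_AUDIT_LOGS, mfa_verified=True))   # False
```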

Data Encryption Strategies

Data encryption is essential for protecting sensitive information stored and processed within the Compliance as Code system. This includes encrypting data at rest (when stored on disks or in databases) and in transit (when transmitted over networks). Strong encryption algorithms, such as AES-256, should be used, and encryption keys should be securely managed and rotated regularly. Consider using a key management system (KMS) to automate key management tasks and enhance security.

For example, all compliance reports containing personally identifiable information (PII) should be encrypted both when stored in databases and when transmitted across the network. The encryption keys should be managed by a dedicated KMS with strict access control policies.
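In a Compliance as Code setup, these encryption requirements can themselves be written as a policy check over each data store's declared settings. The sketch below does not perform encryption; it only validates configuration. The configuration fields and the 90-day rotation period are hypothetical assumptions.

```python
from datetime import date, timedelta

MAX_KEY_AGE = timedelta(days=90)  # hypothetical key-rotation period


def encryption_findings(store: dict, today: date) -> list[str]:
    """Check one data store's declared settings against the encryption policy."""
    findings = []
    if not store.get("encrypted_at_rest"):
        findings.append("not encrypted at rest")
    if not store.get("tls_in_transit"):
        findings.append("not encrypted in transit")
    if store.get("algorithm") != "AES-256":
        findings.append(f"algorithm {store.get('algorithm')!r} is not AES-256")
    if today - store["key_last_rotated"] > MAX_KEY_AGE:
        findings.append("key overdue for rotation")
    return findings


# Hypothetical configuration for the database holding compliance reports.
reports_db = {
    "encrypted_at_rest": True,
    "tls_in_transit": True,
    "algorithm": "AES-256",
    "key_last_rotated": date(2024, 1, 1),
}
print(encryption_findings(reports_db, today=date(2024, 5, 1)))
# ['key overdue for rotation']
```

The actual encryption and key rotation would be handled by a vetted cryptography library and the KMS; a check like this only confirms that the declared settings match the policy.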

Security Audits and Vulnerability Assessments

Regular security audits are critical for identifying and mitigating potential vulnerabilities within the Compliance as Code system. These audits should encompass code reviews, penetration testing, and vulnerability assessments to identify weaknesses in the code, infrastructure, and access controls. The findings of these audits should be documented and addressed promptly to ensure the ongoing security and integrity of the system.

For instance, a regular penetration test should simulate real-world attacks to identify vulnerabilities that could be exploited by malicious actors. Following the test, remediation efforts should be implemented, and the system retested to verify the effectiveness of the fixes.

Securing the Compliance as Code Infrastructure

Securing the infrastructure underlying the Compliance as Code system is paramount. This involves implementing robust security measures at all levels, from the network to the operating system and applications. This includes using firewalls, intrusion detection/prevention systems (IDS/IPS), and regular security patching to protect against known vulnerabilities. Regular backups of the system should be performed and stored securely offsite to ensure business continuity in case of a disaster.

For example, implementing a robust virtual private cloud (VPC) with appropriate network segmentation and security group rules can significantly enhance the security posture of the Compliance as Code infrastructure. Furthermore, employing a secure configuration management tool to ensure consistent and secure configurations across all systems is essential.
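Network segmentation rules can be checked the same way. The sketch below flags ingress rules that expose administrative ports (SSH on 22, RDP on 3389) to any source outside an internal management range. The rule format and the `10.0.0.0/8` management network are hypothetical; only Python's standard `ipaddress` module is used.

```python
from ipaddress import ip_network

ADMIN_PORTS = {22, 3389}                    # SSH and RDP
MANAGEMENT_NET = ip_network("10.0.0.0/8")   # hypothetical internal management range


def segmentation_violations(rules: list[dict]) -> list[dict]:
    """Flag ingress rules that expose admin ports outside the management network."""
    bad = []
    for rule in rules:
        src = ip_network(rule["source"])
        exposes_admin = any(rule["from_port"] <= p <= rule["to_port"] for p in ADMIN_PORTS)
        if exposes_admin and not src.subnet_of(MANAGEMENT_NET):
            bad.append(rule)
    return bad


# Hypothetical security group rules.
rules = [
    {"name": "ssh-from-vpn", "from_port": 22, "to_port": 22, "source": "10.20.0.0/16"},
    {"name": "ssh-anywhere", "from_port": 22, "to_port": 22, "source": "0.0.0.0/0"},
    {"name": "https-public", "from_port": 443, "to_port": 443, "source": "0.0.0.0/0"},
]
print([r["name"] for r in segmentation_violations(rules)])  # ['ssh-anywhere']
```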

The Future of Compliance as Code

Compliance as Code (CaC) is rapidly evolving, driven by technological advancements and the increasing complexity of regulatory landscapes. Its future trajectory promises significant changes in how organizations manage compliance, impacting efficiency, risk mitigation, and overall operational effectiveness. We can expect to see a deeper integration of CaC with other critical business functions, leading to a more holistic and proactive approach to regulatory adherence. The adoption and evolution of Compliance as Code will be shaped by several key factors.

Increasingly sophisticated AI and machine learning tools will play a crucial role in automating compliance tasks, identifying potential violations, and predicting future risks. The rise of cloud-based platforms and serverless architectures will further streamline CaC implementations, making them more accessible and scalable for organizations of all sizes. However, challenges remain, particularly in keeping the codebase accurate and current as regulations change.

Emerging Trends and Technologies

The integration of artificial intelligence (AI) and machine learning (ML) is transforming CaC. AI-powered systems can analyze vast amounts of data to identify patterns and anomalies indicative of compliance violations. ML algorithms can learn from past compliance incidents to predict future risks and proactively suggest mitigation strategies. For example, an AI system could analyze financial transactions to detect potential instances of fraud or bribery, flagging them for human review and investigation, reducing the time and resources needed for manual audits.

This proactive approach significantly enhances the effectiveness of compliance programs. Furthermore, blockchain technology offers potential for enhanced transparency and immutability in tracking compliance-related data, creating an auditable trail that can significantly reduce the risk of disputes and enhance trust.
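Production systems would use trained models, but the basic "flag for human review" idea can be shown with a deliberately simple statistical stand-in. The sketch below uses the modified z-score, based on the median absolute deviation (MAD), to flag unusual transaction amounts. This is a statistical heuristic, not machine learning; the 3.5 cutoff is a commonly used rule of thumb, and the transaction amounts are made up.

```python
from statistics import median


def flag_for_review(amounts: list[float], cutoff: float = 3.5) -> list[int]:
    """Return indexes of amounts whose modified z-score exceeds the cutoff.

    Uses the median absolute deviation (MAD), which a single extreme value
    cannot inflate the way it inflates a standard deviation.
    """
    med = median(amounts)
    mad = median(abs(a - med) for a in amounts)
    if mad == 0:
        return []  # no spread at all; nothing stands out
    return [i for i, a in enumerate(amounts)
            if 0.6745 * abs(a - med) / mad > cutoff]


# Hypothetical transaction amounts; the last one is unusual.
amounts = [120, 95, 110, 105, 98, 102, 115, 99, 101, 5000]
print(flag_for_review(amounts))  # [9]
```

Flagged items go to a person for investigation rather than being treated as confirmed violations, which matches the human-review step described above.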

Predictions for Future Adoption and Evolution

We predict a significant increase in the adoption of CaC across various industries in the coming years. Smaller organizations, previously hindered by the perceived complexity and cost, will find CaC more accessible due to the emergence of user-friendly platforms and cloud-based solutions. The evolution of CaC will involve a greater emphasis on automation, integration, and predictive analytics. We foresee a move towards more standardized and interoperable CaC frameworks, allowing organizations to seamlessly integrate their compliance programs with other systems.

The use of CaC will also extend beyond traditional regulatory compliance into areas such as data privacy, security, and ethical AI development, alongside established uses such as automating KYC/AML checks at financial institutions or managing HIPAA compliance at healthcare providers.

Potential Future Challenges

Despite its numerous benefits, the widespread adoption of CaC will face several challenges. Maintaining the accuracy and currency of CaC codebases as regulations evolve will require significant effort and investment. Ensuring the security and integrity of CaC systems will be paramount to prevent unauthorized access or manipulation of compliance data. The need for skilled professionals capable of developing, implementing, and maintaining CaC systems will create a demand for specialized training and expertise.

Finally, integrating CaC with legacy systems and diverse organizational structures can prove challenging, requiring careful planning and execution.

Potential Future Advancements in Compliance as Code Technology

The following advancements will likely shape the future of Compliance as Code:

- Increased Automation: Further automation of compliance tasks, reducing manual effort and improving efficiency.

- Advanced Analytics: More sophisticated predictive analytics capabilities to anticipate and mitigate future compliance risks.

- Improved Integration: Seamless integration with other business systems, creating a holistic compliance management solution.

- Standardized Frameworks: The development of widely accepted industry standards for CaC, enhancing interoperability.

- AI-Driven Compliance: Wider adoption of AI and ML to automate compliance checks, identify violations, and suggest mitigation strategies.

- Blockchain Integration: Leveraging blockchain technology for enhanced transparency, immutability, and auditability of compliance data.

- Enhanced Security: Improved security measures to protect CaC systems from unauthorized access and manipulation.

Final Review

The shift toward Compliance as Code represents a significant evolution in how businesses approach regulatory compliance. By embracing automation and codifying policies, organizations can dramatically improve efficiency, reduce risk, and gain a competitive edge. While challenges remain, particularly in integrating with legacy systems, the long-term benefits are substantial. Compliance is becoming increasingly automated, and those who adapt early will be best positioned to navigate the ever-evolving regulatory landscape.

This is more than just a trend; it’s a necessary step towards a more efficient and secure future for businesses of all sizes.

FAQ Summary

What are the biggest challenges in implementing Compliance as Code?

Integrating with legacy systems and ensuring data consistency across different platforms can be challenging. Lack of internal expertise and the need for significant upfront investment are also common hurdles.

How does Compliance as Code improve security?

By automating security checks and enforcing policies through code, Compliance as Code reduces human error and strengthens the overall security posture. It allows for quicker identification and remediation of vulnerabilities.

Is Compliance as Code suitable for small businesses?

While the initial investment might seem daunting, even small businesses can benefit from Compliance as Code, potentially starting with a phased approach focusing on key areas.

What are some examples of Compliance as Code tools?

Several tools exist, ranging from open-source solutions to commercial platforms. Examples include Chef, Puppet, Ansible, and specialized compliance automation platforms.