The Power of Ansible Inside Workload Automation

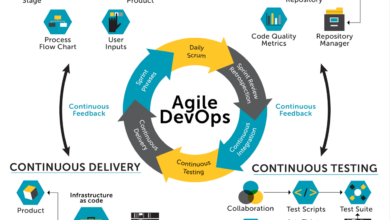

The power of Ansible inside workload automation is truly transformative. Imagine a world where deploying applications, managing infrastructure, and scaling your systems happens seamlessly, automatically, and with incredible speed. That’s the promise Ansible delivers, taking the complexity out of managing even the most intricate IT landscapes. This isn’t just about scripting; it’s about orchestrating your entire IT environment for optimal efficiency and control.

This post dives deep into how Ansible simplifies complex tasks, from provisioning virtual machines to orchestrating application deployments across multiple environments. We’ll explore best practices, security considerations, and advanced techniques to help you harness the full power of Ansible for your workload automation needs. Get ready to revolutionize your workflows!

Ansible’s Role in Workload Automation

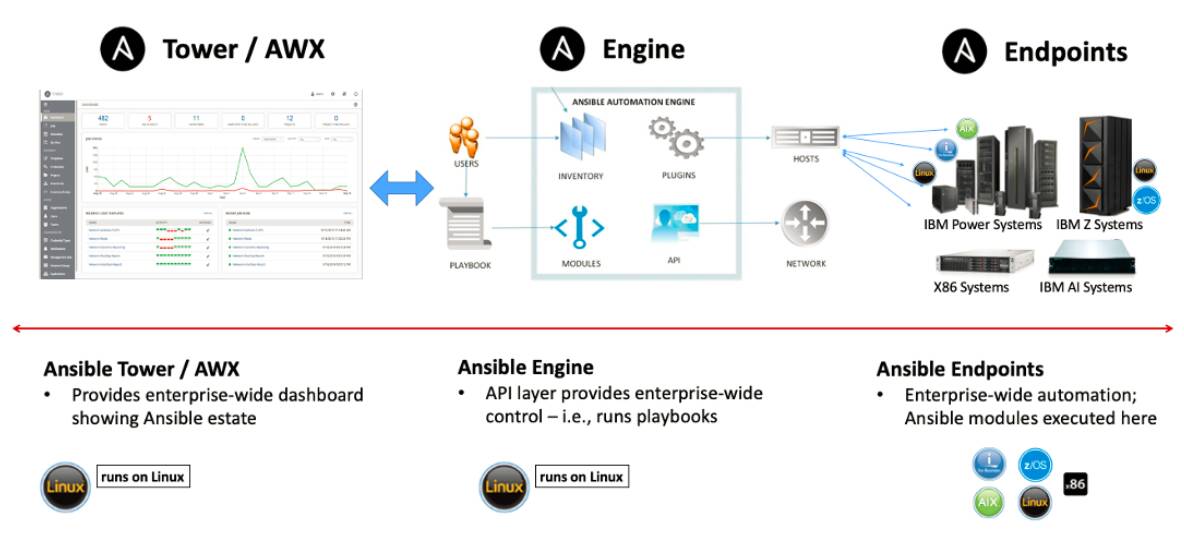

Ansible has emerged as a powerful tool in the realm of workload automation, significantly streamlining the management and deployment of applications and infrastructure. Its agentless architecture and declarative approach make it an attractive alternative to traditional methods, offering enhanced efficiency and scalability. This post will delve into Ansible’s core functionalities and illustrate how it simplifies complex automation tasks.

Ansible’s Core Functionalities in Workload Automation

Ansible’s strength lies in its ability to automate repetitive tasks across diverse IT infrastructure. It leverages a simple yet effective YAML-based configuration language to define desired states for systems and applications. This declarative approach allows for easy management and version control of automation workflows. Central to Ansible’s role in workload automation are its modules, which provide pre-built functionalities for managing various aspects of IT infrastructure, including network devices, cloud platforms, and container orchestration systems.

These modules handle tasks such as provisioning servers, deploying applications, configuring databases, and managing security policies.

Simplifying Complex Automation Tasks with Ansible

Traditional methods of workload automation often involve intricate scripting and manual intervention, leading to increased operational complexity and potential for errors. Ansible simplifies this process dramatically. Its modular design allows for the creation of reusable playbooks, which encapsulate complex automation tasks into manageable units. These playbooks can be easily shared and reused across different environments, promoting consistency and reducing the risk of human error.

Furthermore, most Ansible modules are idempotent: a task changes a system only when its current state differs from the desired state, so re-running a playbook does not cause unintended modifications. This robustness is critical in maintaining stable and reliable IT infrastructure. For example, a playbook could be designed to deploy a web application across multiple servers, automatically configuring the necessary software, databases, and network settings. This eliminates the need for manual configuration on each server, saving significant time and effort.
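To make the desired-state idea concrete, here is a minimal sketch of a playbook whose tasks describe an end state rather than a sequence of commands. The `webservers` group, the nginx package, and the directory path are assumptions chosen for illustration, not details from a real environment.

```yaml
---
# Hypothetical group name "webservers"; package and path are illustrative.
- name: Ensure web servers are in the desired state
  hosts: webservers
  become: true
  tasks:
    - name: Ensure nginx is installed
      ansible.builtin.apt:
        name: nginx
        state: present

    - name: Ensure the site directory exists
      ansible.builtin.file:
        path: /var/www/example
        state: directory
        mode: "0755"

    - name: Ensure nginx is running and enabled at boot
      ansible.builtin.service:
        name: nginx
        state: started
        enabled: true
```

On a host that already matches this description, a second run should report each task as `ok` rather than `changed`, which is the idempotent behavior described above.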

Ansible vs. Traditional Workload Automation Methods

The following table compares Ansible’s approach to workload automation with traditional methods, highlighting key differences in scalability, ease of use, and cost.

| Feature | Ansible | Traditional Methods (e.g., Shell Scripting, Manual Configuration) |
|---|---|---|
| Scalability | Highly scalable; easily manages thousands of nodes with minimal overhead. | Limited scalability; managing large numbers of systems becomes increasingly complex and error-prone. |
| Ease of Use | User-friendly YAML syntax; requires minimal coding expertise. Leverages reusable modules. | Requires significant scripting expertise and often involves complex and difficult-to-maintain scripts. |
| Cost | Open-source core; cost-effective for most deployments. Enterprise versions offer additional features. | Can be expensive due to the need for specialized personnel and potentially costly proprietary tools. |
| Maintainability | Easier to maintain and update due to its modular design and version control capabilities. | Difficult to maintain and update, especially for large and complex scripts. Prone to errors during modifications. |

Automating Infrastructure Provisioning with Ansible

Ansible shines when automating the often tedious and error-prone process of infrastructure provisioning. Instead of manually creating virtual machines, configuring networks, and installing software, Ansible allows you to define this entire process in a declarative manner, ensuring consistency and repeatability across your infrastructure. This automation not only saves time and resources but also drastically reduces the risk of human error, leading to a more stable and reliable environment.

Ansible’s agentless architecture simplifies the process significantly. It leverages SSH to connect to target machines, eliminating the need for installing agents on each server. This reduces management overhead and improves security, as you don’t need to manage additional software on your infrastructure. The power lies in its ability to manage both the initial provisioning and ongoing configuration management seamlessly within a single workflow.

An Ansible Playbook for Virtual Machine Provisioning

This playbook uses the `amazon.aws.ec2_instance` module (the successor to the legacy `ec2` module) to create a virtual machine on Amazon Web Services (AWS). Adaptations for other cloud providers like Azure or Google Cloud Platform are straightforward, mainly requiring changes to the module and provider-specific parameters. This example focuses on creating a basic Ubuntu server. Remember to replace placeholders such as the AMI ID and the key pair name with your own values.

```yaml
---
- hosts: localhost
  connection: local
  become: false
  tasks:
    - name: Create EC2 instance
      amazon.aws.ec2_instance:
        region: us-east-1
        instance_type: t2.micro
        image_id: ami-0c55b31ad2299a701  # Replace with your desired AMI ID
        key_name: YOUR_KEY_NAME  # Placeholder: replace with your key pair name
      register: ec2_instance  # Save the module result for the next task

    - name: Output instance ID
      debug:
        msg: "EC2 instance ID: {{ ec2_instance.instance_ids | first }}"
```

Configuration Management During Provisioning

Ansible seamlessly integrates configuration management into the provisioning process. Following the VM creation, the playbook can execute tasks to install packages, configure services, and set up users. This ensures the VM is ready to use immediately after provisioning. For example, after creating the VM, the playbook can install Apache web server, configure firewall rules, and deploy a basic web application.

This eliminates the need for separate post-provisioning steps, streamlining the entire workflow.

```yaml
- name: Install Apache
  apt:
    name: apache2
    state: present
    update_cache: yes

- name: Start Apache
  service:
    name: apache2
    state: started
    enabled: yes
```

Securing Ansible Playbooks in Infrastructure Automation

Security is paramount when automating infrastructure provisioning. Never hardcode sensitive information like passwords and API keys directly into your playbooks. Ansible Vault provides a robust mechanism to encrypt sensitive data, protecting it from unauthorized access. Using Ansible Vault, you can store your credentials in an encrypted file and decrypt them only when needed during playbook execution. This significantly enhances the security of your automation process.

Additionally, it is crucial to implement role-based access control (RBAC), for example through AWX or Ansible Automation Platform, and to use Ansible’s inventory management features to control access to different parts of your infrastructure. Regularly auditing your playbooks and ensuring they adhere to security best practices is an ongoing responsibility. Employing version control for your playbooks enables tracking changes, facilitates collaboration, and aids in rollback in case of errors. This supports both the security and the maintainability of your automation infrastructure.
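As a rough sketch of the Vault workflow described above, the playbook below loads credentials from an encrypted variables file instead of hardcoding them. The file `vars/secrets.yml`, the variable `db_password`, the `webservers` group, and the destination path are all assumptions made for illustration.

```yaml
---
# vars/secrets.yml is a hypothetical file, encrypted beforehand with:
#   ansible-vault encrypt vars/secrets.yml
# It is assumed to define a variable named db_password.
- name: Configure application with vaulted credentials
  hosts: webservers
  become: true
  vars_files:
    - vars/secrets.yml
  tasks:
    - name: Write the database password to a root-only file
      ansible.builtin.copy:
        content: "{{ db_password }}\n"
        dest: /etc/myapp-db-password
        owner: root
        group: root
        mode: "0600"
      no_log: true  # Keep the secret out of task output and logs
```

Running the playbook with `ansible-playbook site.yml --ask-vault-pass` (where `site.yml` is whatever you named the playbook) prompts for the vault password and decrypts the file only in memory for that run.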

Orchestrating Application Deployments using Ansible

Ansible’s strength lies not just in infrastructure management, but also in its seamless ability to orchestrate complex application deployments across diverse environments. This capability significantly reduces manual effort, minimizes errors, and accelerates the deployment process, leading to faster time-to-market and increased operational efficiency. This post delves into how Ansible achieves this, providing practical examples and a step-by-step guide.

Ansible leverages its modular architecture and powerful scripting capabilities to handle application deployments with precision and consistency. It allows you to define your deployment process as code, ensuring reproducibility and simplifying collaboration among development and operations teams. This approach is crucial for managing complex applications with multiple dependencies and configurations across various stages (development, testing, staging, production).

Ansible Modules for Application Deployment and Configuration

Ansible offers a rich set of modules specifically designed for application deployment and configuration management. These modules interact directly with the target systems, executing commands and making necessary changes according to your defined playbook. This eliminates the need for manual intervention and ensures uniformity across all deployed instances.

- `copy` module: This module is fundamental for transferring files and directories to remote servers. It’s frequently used to deploy application code, configuration files, and other necessary assets. For example, you could use it to copy your web application’s codebase from a local repository to a web server.

- `apt` (Debian/Ubuntu) or `yum` (Red Hat/CentOS) modules: These modules handle package management, enabling you to install application dependencies efficiently. You can specify package names and versions, ensuring consistency across your environments.

- `service` module: This module controls the startup, shutdown, and status of system services. It’s essential for managing the lifecycle of your application services, ensuring they are running correctly after deployment.

- `template` module: This module allows you to render Jinja2 templates, dynamically generating configuration files based on variables and facts gathered during the deployment process. This is incredibly useful for customizing configurations across different environments without modifying the core application code.

- `unarchive` module: This module extracts compressed archives, such as `.tar.gz` or `.zip` files, often used to deploy applications packaged as archives.
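Of the modules listed above, `template` is the one whose behavior is hardest to picture from a description, so here is a small sketch of it working together with `service` through a handler. The template file `templates/myapp.conf.j2`, the `app_port` variable, and the `myapp` service name are hypothetical.

```yaml
---
# The template file name, the app_port variable, and the myapp service
# are hypothetical names used only for this sketch.
- name: Render environment-specific configuration
  hosts: webservers
  become: true
  vars:
    app_port: 8080
  tasks:
    - name: Render the application config from a Jinja2 template
      ansible.builtin.template:
        src: templates/myapp.conf.j2
        dest: /etc/myapp.conf
        mode: "0644"
      notify: Restart myapp  # Fires only if the rendered file changed

  handlers:
    - name: Restart myapp
      ansible.builtin.service:
        name: myapp
        state: restarted
```

Inside the template, a line such as `port = {{ app_port }}` would be filled in per environment, and the handler restarts the service only when the rendered output actually differs from what is on disk.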

Deploying a Simple Web Application using Ansible

This section outlines a step-by-step guide for deploying a basic web application using Ansible. We’ll assume a simple Python Flask application. This example showcases the core concepts and can be adapted for more complex applications.

- Prepare the Application: Create a simple Flask application (or use an existing one). Package the application code, including any necessary dependencies, into a compressed archive (e.g., `myapp.tar.gz`).

- Create an Ansible Inventory: Define your target servers in an Ansible inventory file (e.g., `hosts`). This file specifies the IP addresses or hostnames of the servers where you want to deploy the application.

- Write the Ansible Playbook: Create an Ansible playbook (e.g., `deploy.yml`) that describes the deployment process. This playbook will use the modules mentioned above to execute the deployment steps.

- Deploy the Application: Run the Ansible playbook with the command `ansible-playbook -i hosts deploy.yml`, where `-i` points at your inventory file. Ansible will connect to the servers specified in the inventory, execute the tasks defined in the playbook, and deploy the application.
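For the inventory step above, a YAML-format inventory might look like the following sketch. The host aliases, the `deploy` user, and the addresses (taken from the reserved documentation range) are placeholders. Saving the file with a `.yml` extension, for example `hosts.yml`, and passing that path to `ansible-playbook -i` is the safest way to make sure it is parsed as YAML.

```yaml
# Hypothetical inventory; addresses are documentation-range placeholders.
all:
  children:
    webservers:
      hosts:
        web1:
          ansible_host: 192.0.2.10
        web2:
          ansible_host: 192.0.2.11
      vars:
        ansible_user: deploy  # Assumed SSH login user on the targets
```

The `webservers` group name matters because the playbook selects its targets with `hosts: webservers`.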

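Example snippet from a `deploy.yml` playbook:

```yaml
- hosts: webservers
  become: true
  tasks:
    - name: Copy application archive
      copy:
        src: ./myapp.tar.gz
        dest: /tmp/

    # unarchive requires the destination directory to exist
    - name: Ensure the application directory exists
      file:
        path: /var/www/myapp
        state: directory

    - name: Extract application archive
      unarchive:
        src: /tmp/myapp.tar.gz
        dest: /var/www/myapp
        remote_src: yes  # The archive is already on the target host

    - name: Install Python dependencies
      pip:
        requirements: /var/www/myapp/requirements.txt

    - name: Start the application (assuming a systemd service)
      service:
        name: myapp
        state: started
```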

Managing and Monitoring Workloads with Ansible

Ansible’s power extends beyond provisioning and deployment; it’s a crucial tool for ongoing management and monitoring of your workloads. Effective monitoring provides insights into the health and performance of your systems, allowing for proactive problem-solving and ensuring the stability of your applications. This section explores key metrics, integration strategies, and alerting mechanisms to optimize Ansible’s role in maintaining your infrastructure.

Effective monitoring of Ansible-managed workloads requires a multifaceted approach, combining automated data collection with intelligent alerting systems. By integrating Ansible with monitoring tools, you can gain a comprehensive view of your infrastructure’s performance, allowing for proactive intervention and improved operational efficiency.

Key Metrics for Monitoring Ansible-Managed Workloads

Understanding which metrics to track is critical for effective monitoring. Focusing on the right data allows for quick identification of issues and prevents small problems from escalating into major outages. Prioritizing these metrics provides a strong foundation for a robust monitoring strategy.

- CPU Utilization: Tracking CPU usage across servers managed by Ansible helps identify overloaded systems and potential bottlenecks. CPU usage that consistently exceeds 80% for extended periods, for example, might indicate a need for resource scaling or application optimization.

- Memory Usage: Monitoring RAM consumption allows for early detection of memory leaks or resource exhaustion. A sudden spike in memory usage, coupled with slow application performance, could indicate a problem requiring immediate attention.

- Disk I/O: Tracking disk read/write operations helps identify performance bottlenecks related to storage. Sustained high disk I/O could signal a need for faster storage or database optimization.

- Network Traffic: Monitoring network bandwidth usage reveals potential network congestion issues. Unexpectedly high network traffic might indicate a security breach or a malfunctioning application.

- Application-Specific Metrics: Beyond system-level metrics, Ansible can be used to collect application-specific data like request latency, error rates, and transaction counts. This granular level of monitoring is crucial for pinpointing application-level problems.
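Ansible is not a continuous monitoring system, but the facts it gathers can serve as a point-in-time spot check of some of the metrics above. The following sketch asserts on memory and disk headroom; the 10% and 15% thresholds are illustrative assumptions, not recommendations.

```yaml
---
# Thresholds (10% free memory, 15% free disk) are illustrative assumptions.
- name: Spot-check resource headroom on managed hosts
  hosts: all
  gather_facts: true
  tasks:
    - name: Check that at least 10% of RAM is free (ignoring cache)
      ansible.builtin.assert:
        that:
          - >-
            ansible_facts['memory_mb']['nocache']['free']
            > ansible_facts['memory_mb']['real']['total'] * 0.10
        fail_msg: "Low free memory on {{ inventory_hostname }}"

    - name: Check that the root filesystem has at least 15% free space
      ansible.builtin.assert:
        that:
          - item.size_available > item.size_total * 0.15
        fail_msg: "Low disk space on {{ item.mount }}"
      loop: "{{ ansible_facts['mounts'] | selectattr('mount', 'equalto', '/') | list }}"
      loop_control:
        label: "{{ item.mount }}"
```

A failing assertion marks the host as failed in the play recap, which makes this kind of check usable as a pre-deployment gate alongside a dedicated monitoring tool.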

Integrating Ansible with Monitoring Tools

Several strategies exist for seamlessly integrating Ansible with popular monitoring tools. Choosing the right approach depends on your existing infrastructure and preferred monitoring solution. A robust integration ensures efficient data collection and analysis.

- Using Ansible Modules: Many monitoring tools provide Ansible modules for collecting metrics and managing alerts. These modules simplify the integration process, allowing for automated data collection directly within your Ansible playbooks.

- Custom Scripts and Modules: For tools lacking dedicated Ansible modules, custom scripts can be developed to collect metrics and feed them into the monitoring system. This approach offers flexibility but requires more development effort.

- API Integrations: Many monitoring tools offer APIs allowing for direct integration with Ansible. This method enables automated data pushing to the monitoring system, providing real-time visibility into your infrastructure.
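As an illustration of the API approach, the sketch below posts a deployment event to a monitoring system over HTTP using the built-in `uri` module. The endpoint URL, the `monitoring_api_token` variable, and the JSON payload shape are hypothetical; a real monitoring tool will define its own API.

```yaml
---
# The endpoint URL, token variable, and payload shape are hypothetical;
# substitute whatever your monitoring system's API actually expects.
- name: Report a deployment event to a monitoring API
  hosts: localhost
  connection: local
  gather_facts: false
  vars:
    monitoring_url: https://monitoring.example.com/api/v1/events
  tasks:
    - name: Post a deployment marker
      ansible.builtin.uri:
        url: "{{ monitoring_url }}"
        method: POST
        headers:
          Authorization: "Bearer {{ monitoring_api_token }}"
        body_format: json
        body:
          title: Deployment finished
          source: ansible
        status_code: [200, 201, 202]
      no_log: true  # The request headers contain a secret
```

The token is assumed to come from an encrypted variables file or a secrets manager rather than from the playbook itself.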

Alerting on Critical Events

A well-designed alerting system is crucial for proactive problem-solving. This system should prioritize critical events, minimizing noise while ensuring timely notification of issues that require immediate attention.

An effective alerting system leverages the data collected through Ansible and your monitoring tools. Thresholds are set for critical metrics, triggering alerts when these thresholds are breached. For example, if CPU utilization exceeds 95% for more than 15 minutes, an alert is generated, notifying the relevant team to investigate and address the issue. These alerts can be delivered through various channels, such as email, SMS, or dedicated monitoring dashboards.

The system should also include mechanisms to acknowledge and resolve alerts, preventing alert fatigue and ensuring that all issues are addressed.

Scaling Workload Automation with Ansible

Ansible’s power shines brightest when managing numerous servers and complex applications. Scaling Ansible deployments effectively is crucial for maintaining efficiency and preventing bottlenecks as your infrastructure grows. This involves carefully considering inventory management, leveraging Ansible’s features for parallel execution, and adopting strategies for handling failures gracefully. We’ll explore techniques to ensure your Ansible automation remains robust and scalable even as your workload expands significantly.

Ansible’s inherent flexibility allows for a variety of scaling strategies, depending on your specific needs and infrastructure. The key is to avoid a monolithic approach and instead design a system that’s modular and easily adaptable to future growth. This includes smart inventory management, efficient task delegation, and robust error handling.

Strategies for Scaling Ansible Deployments

Scaling Ansible effectively often hinges on smart use of its built-in features and external tools. One crucial aspect is efficient task execution across many servers. Ansible’s built-in concurrency mechanisms allow you to run playbooks in parallel across multiple nodes, dramatically reducing execution time. This can be fine-tuned using parameters like `forks` to control the number of parallel processes.

Additionally, utilizing Ansible’s roles and reusable modules promotes code reusability and simplifies the management of large and complex automation tasks. Furthermore, techniques like intelligent inventory grouping, dynamic inventory, and Ansible Galaxy for reusable roles contribute significantly to efficient scaling.
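Parallelism can also be shaped inside the playbook itself. The sketch below rolls a change through a large group in batches; the batch size, the failure threshold, and the `myapp` package name are illustrative assumptions.

```yaml
---
# Batch size, failure threshold, and package name are illustrative values.
# The number of parallel worker processes is set separately, for example
# with "ansible-playbook -f 50 ..." or "forks = 50" under [defaults]
# in ansible.cfg.
- name: Rolling update across a large group
  hosts: webservers
  become: true
  serial: "25%"             # Update a quarter of the hosts per batch
  max_fail_percentage: 10   # Abort if more than 10% of a batch fails
  tasks:
    - name: Upgrade the application package
      ansible.builtin.apt:
        name: myapp
        state: latest
        update_cache: true
```

`serial` limits how many hosts are changed at once, while `forks` limits how many connections Ansible opens in parallel, so the two settings are usually tuned together.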

Managing Ansible Inventory in Large-Scale Environments

Efficient inventory management is paramount when dealing with a large number of servers. Manually managing a sprawling inventory file becomes unwieldy and error-prone. Instead, dynamic inventory scripts become essential. These scripts can automatically generate your Ansible inventory based on data from your configuration management database or cloud provider APIs. This ensures that your inventory is always up-to-date and reflects the current state of your infrastructure.

For example, a script could query AWS EC2 to fetch all instances with a specific tag, dynamically creating the inventory. This approach minimizes manual intervention and reduces the risk of human error. Another approach is to utilize a dedicated inventory management system that integrates with Ansible. Such systems often provide features like version control, access control, and automated updates, further enhancing scalability and maintainability.
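In current Ansible versions, the EC2 example above is usually handled by an inventory plugin rather than a hand-written script. A minimal sketch of such a plugin configuration follows; the `Role` and `Environment` tag names are assumptions about how instances are tagged.

```yaml
# inventory/aws_ec2.yml (the file name must end in aws_ec2.yml or aws_ec2.yaml)
plugin: amazon.aws.aws_ec2
regions:
  - us-east-1
filters:
  tag:Role: web                  # Hypothetical tag used to select instances
  instance-state-name: running
keyed_groups:
  - key: tags.Environment        # Hypothetical tag; creates groups such as env_production
    prefix: env
hostnames:
  - private-ip-address
```

Running `ansible-inventory -i inventory/aws_ec2.yml --graph` shows the hosts and groups the plugin discovered, which is a quick way to check the filters before pointing a playbook at them.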

Automating Application Scaling with Ansible

Ansible excels at automating the scaling of applications based on demand. By integrating with cloud providers’ APIs or internal monitoring systems, Ansible can dynamically adjust the number of application instances running. For example, a playbook could monitor CPU utilization and automatically launch new instances if utilization exceeds a predefined threshold. Conversely, it could terminate idle instances to conserve resources.

This requires the use of Ansible modules that interact with your chosen cloud provider (like AWS EC2, Google Compute Engine, or Azure) or your internal orchestration system (like Kubernetes). A well-structured playbook can handle the entire process, from provisioning new instances to configuring them, deploying the application, and finally, registering them with a load balancer. This ensures that your application can seamlessly scale to meet fluctuating demand without manual intervention.

Consider a scenario where an e-commerce website experiences a surge in traffic during a holiday sale. Ansible can automatically spin up additional web server instances to handle the increased load, ensuring a smooth user experience. Once the traffic subsides, Ansible can then scale down, reducing costs by terminating the unnecessary instances.

Security Considerations in Ansible-based Workload Automation

Ansible, while powerful for automating infrastructure and application deployments, introduces security risks if not implemented carefully. The distributed nature of Ansible, combined with its access to sensitive information like credentials and configuration files, necessitates a robust security posture. Failing to address these risks can lead to compromised systems, data breaches, and significant operational disruptions. This section details potential vulnerabilities and outlines mitigation strategies for secure Ansible-based workload automation.

The core security concerns revolve around the management of Ansible’s control node, the communication channels between the control node and managed nodes, and the security of the playbooks and associated data. Improperly secured credentials, insecure communication protocols, and vulnerabilities within Ansible itself can all expose your infrastructure to attack.

Securing Ansible Credentials

Proper credential management is paramount. Storing passwords directly in playbooks is a major security risk. Ansible offers several mechanisms to securely manage credentials, minimizing exposure and enhancing overall security. The most common and recommended approach is using Ansible Vault to encrypt sensitive information, including passwords, API keys, and certificates. This prevents unauthorized access even if the playbook files are compromised.

Additionally, integrating with a secrets management system like HashiCorp Vault or AWS Secrets Manager provides a centralized and auditable solution for managing and rotating credentials. This approach reduces the risk associated with storing sensitive information within the Ansible environment itself.

Secure Communication Channels

Ansible communicates with managed nodes using SSH by default, so securing those connections is crucial. Use strong SSH keys, enforce key-based authentication (disabling password-based authentication wherever possible), and rotate keys regularly. SSH already encrypts traffic between the control node and managed nodes; when that traffic traverses untrusted networks, consider routing it through a bastion (jump) host or a VPN so that managed nodes are not directly exposed. Connection timeouts and host key checking add further layers of protection.
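Ansible can enforce part of this hardening itself. The sketch below disables password authentication in the main `sshd_config` file; it assumes key-based access is already working and that no drop-in file under `sshd_config.d` overrides the setting.

```yaml
---
# Assumes key-based login already works; otherwise this can lock you out.
- name: Enforce key-based SSH authentication
  hosts: all
  become: true
  tasks:
    - name: Disable password authentication in sshd_config
      ansible.builtin.lineinfile:
        path: /etc/ssh/sshd_config
        regexp: '^#?\s*PasswordAuthentication\s'
        line: PasswordAuthentication no
        validate: /usr/sbin/sshd -t -f %s  # Reject the edit if the config is invalid
      notify: Restart SSH daemon

  handlers:
    - name: Restart SSH daemon
      ansible.builtin.service:
        name: ssh  # "ssh" on Debian/Ubuntu; "sshd" on RHEL-family systems
        state: restarted
```

The `validate` step checks the candidate file before it replaces the live one, which reduces the chance of restarting the daemon with a broken configuration.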

Protecting Ansible Playbooks and Inventory Files

Ansible playbooks and inventory files contain sensitive information about your infrastructure. Access to these files should be strictly controlled. Storing these files in a secure repository with access control mechanisms (like Git with appropriate permissions) is essential. Regularly auditing these files and employing version control help in tracking changes and identifying potential vulnerabilities. Restricting access to only authorized personnel prevents accidental or malicious modifications.

Implementing Least Privilege

It’s crucial to follow the principle of least privilege: grant only the necessary permissions to Ansible and its associated users. Avoid running Ansible with excessive privileges on managed nodes. This significantly limits the potential damage from compromised credentials or vulnerabilities. Using dedicated user accounts with restricted permissions for Ansible on both the control node and managed nodes greatly reduces the attack surface.
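One way to apply least privilege inside a playbook is to connect as an unprivileged account and escalate only on the tasks that need it. In the sketch below, `ansible-deploy` is a hypothetical account and the paths and service name are placeholders.

```yaml
---
# "ansible-deploy" is a hypothetical unprivileged account on the managed nodes.
- name: Deploy with privilege escalation only where needed
  hosts: webservers
  remote_user: ansible-deploy
  become: false  # No escalation by default
  tasks:
    - name: Check that the requirements file is present (no root required)
      ansible.builtin.stat:
        path: /var/www/myapp/requirements.txt
      register: req_file

    - name: Restart the application service (requires root)
      ansible.builtin.service:
        name: myapp
        state: restarted
      become: true  # Escalate for this task only
```

Pairing this with a sudoers rule that allows the account only the specific commands it needs narrows the blast radius further.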


Regular Security Audits and Updates

Regular security audits are essential to identify and address potential vulnerabilities. This includes scanning Ansible’s control node and managed nodes for vulnerabilities, reviewing access controls, and ensuring all components are up-to-date with the latest security patches. Staying current with Ansible updates and security advisories is critical for mitigating known vulnerabilities. Automated security scanning tools can significantly streamline this process.

Network Segmentation and Firewalls

Network segmentation can limit the impact of a potential breach by isolating different parts of your infrastructure. Firewalls should be configured to allow only necessary traffic between the Ansible control node and managed nodes. This prevents unauthorized access from external sources. Carefully managing network access controls is crucial for maintaining a secure Ansible deployment.
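On Ubuntu hosts, firewall rules like these can be managed with the `community.general.ufw` module. The sketch below allows SSH only from the control node; the address is a documentation-range placeholder, and the default-deny policy will also block application ports unless you add rules for them.

```yaml
---
# 192.0.2.5 stands in for the control node's address (documentation range).
- name: Restrict SSH access to the Ansible control node
  hosts: all
  become: true
  vars:
    control_node_ip: 192.0.2.5
  tasks:
    - name: Allow SSH only from the control node
      community.general.ufw:
        rule: allow
        port: "22"
        proto: tcp
        from_ip: "{{ control_node_ip }}"

    - name: Deny other incoming traffic by default and enable the firewall
      community.general.ufw:
        state: enabled
        direction: incoming
        default: deny
```

The allow rule is applied before the firewall is enabled so that the SSH session running the playbook is not cut off.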

Advanced Ansible Techniques for Workload Automation

Ansible’s power extends far beyond basic automation. Mastering advanced techniques unlocks significant efficiency gains and allows for the creation of robust, scalable, and maintainable workload automation solutions. This section delves into key strategies for leveraging Ansible’s full potential in complex environments.

Ansible Roles and Modules for Reusability and Modularity

Ansible’s strength lies in its modular design. Roles encapsulate tasks related to a specific function (e.g., setting up a web server, configuring a database), promoting reusability across projects. Modules are the individual units of automation, performing specific actions like installing packages or managing services. By combining roles and modules effectively, you can create highly organized and easily maintainable playbooks.

For instance, a role for deploying a web application might include modules for installing the web server, configuring the application, and setting up security. This modular approach makes it easy to update individual components without affecting the entire system. Furthermore, roles can be version-controlled, facilitating collaboration and simplifying deployments across different environments. A well-structured role includes variables, tasks, handlers, and templates, allowing for customization and flexibility.
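From the playbook’s point of view, a set of roles might be combined as in the following sketch. The role names and variables are hypothetical, and each role is assumed to live under `roles/<name>/` with the usual `tasks/`, `handlers/`, `templates/`, and `defaults/` directories.

```yaml
---
# Role names and variables are hypothetical.
- name: Deploy the web stack from reusable roles
  hosts: webservers
  become: true
  roles:
    - role: common
    - role: webserver
      vars:
        webserver_port: 8080
    - role: myapp
      vars:
        myapp_version: "1.4.2"
```

Because each role carries its own defaults, the playbook only needs to state what differs for this environment.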

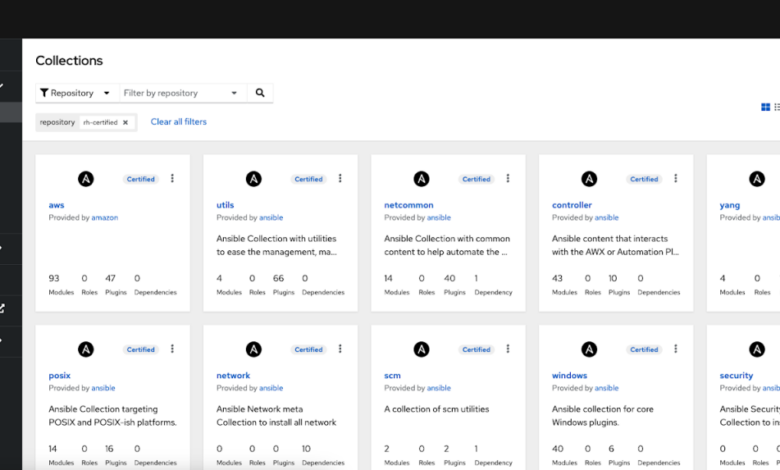

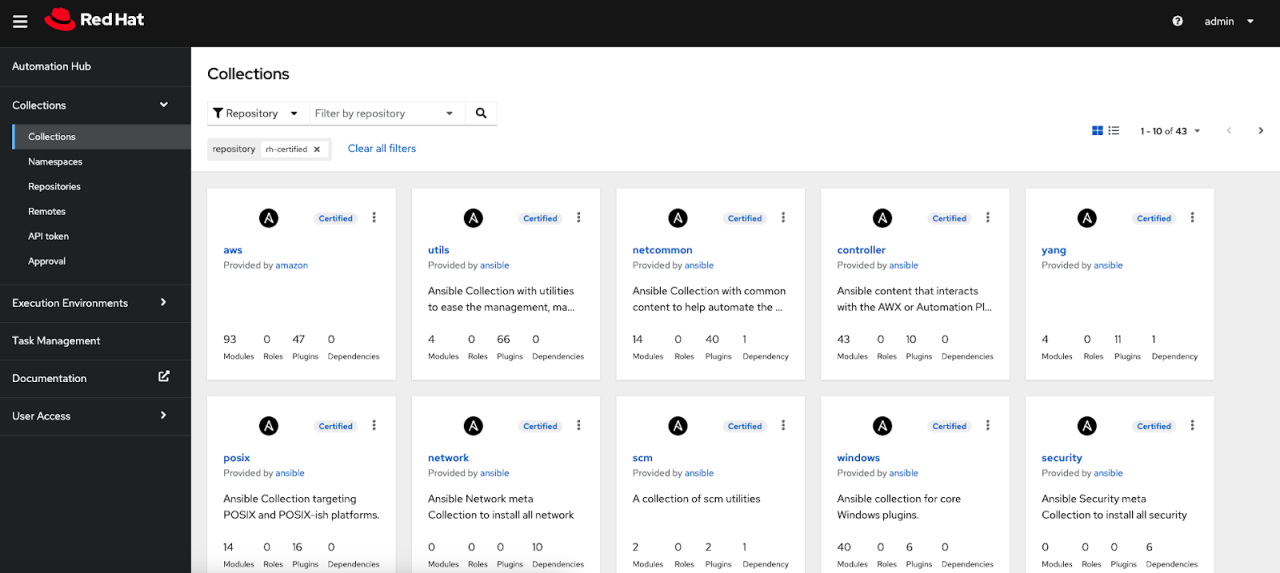

Utilizing Ansible Galaxy for Content Sharing and Access

Ansible Galaxy is a repository of pre-built Ansible roles contributed by the community. Accessing and utilizing these roles significantly accelerates the automation process. Galaxy provides a central location to discover, download, and manage roles, eliminating the need to create everything from scratch. Searching for roles related to specific technologies or tasks is straightforward, and the roles often come with detailed documentation and examples.

This community-driven approach fosters collaboration and provides access to high-quality, well-tested automation components. For example, searching for “MySQL” in Ansible Galaxy will yield several roles that can manage different aspects of MySQL database deployments, saving significant time and effort compared to writing custom Ansible code. This also ensures adherence to best practices and improves consistency across different projects.
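Roles from Galaxy are commonly declared in a requirements file so that every team member installs the same set. A minimal sketch, using the community `geerlingguy.mysql` role as an example:

```yaml
# requirements.yml
roles:
  - name: geerlingguy.mysql
    # Optionally pin a release with "version:"; check Galaxy for available tags.
```

Running `ansible-galaxy install -r requirements.yml` downloads the listed roles, after which a playbook can reference `geerlingguy.mysql` in its `roles:` section like any local role.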

Automating Database Management Tasks with Ansible

Ansible excels at automating database management tasks, a crucial component of workload automation. It can manage various database systems, including MySQL, PostgreSQL, and Oracle, using dedicated modules. These modules allow for tasks such as creating and managing databases, users, and privileges; backing up and restoring databases; and executing SQL scripts. For instance, Ansible can automate the creation of a new database user with specific permissions, ensuring consistent security policies across all databases.

Similarly, it can automate the deployment of database schema changes, reducing the risk of manual errors and ensuring consistency across environments. This automation minimizes downtime and streamlines the database administration process. Consider a scenario where a new application requires a new database schema. Ansible can automate the creation of the schema, populating it with initial data, and granting appropriate access permissions, all within a single, repeatable playbook.
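The user-and-privileges scenario above might be sketched as follows for MySQL. The database name, user name, `dbservers` group, and `shop_db_password` variable are hypothetical, and the tasks assume the `community.mysql` collection is installed and the PyMySQL library is present on the target host.

```yaml
---
# Database, user, group, and variable names are hypothetical. Requires the
# community.mysql collection and the PyMySQL library on the target host.
- name: Provision an application database
  hosts: dbservers
  become: true
  tasks:
    - name: Ensure the application database exists
      community.mysql.mysql_db:
        name: shop
        state: present
        login_unix_socket: /var/run/mysqld/mysqld.sock

    - name: Ensure the application user exists with limited privileges
      community.mysql.mysql_user:
        name: shop_app
        password: "{{ shop_db_password }}"
        priv: "shop.*:SELECT,INSERT,UPDATE,DELETE"
        host: localhost
        state: present
        login_unix_socket: /var/run/mysqld/mysqld.sock
      no_log: true  # The task arguments include a password
```

The socket path shown is the usual Debian/Ubuntu location and may differ on other distributions.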

Case Study: Streamlining Global E-commerce Deployments with Ansible

This case study details how a fictional global e-commerce company, “ShopGlobally,” successfully leveraged Ansible for workload automation, resulting in significant improvements in efficiency and cost reduction across its infrastructure. ShopGlobally experienced rapid growth, leading to a complex and unwieldy deployment process for its e-commerce platform across multiple data centers worldwide. Manual processes were slow, error-prone, and unsustainable.

ShopGlobally implemented Ansible to automate the entire deployment lifecycle, from infrastructure provisioning to application deployment and ongoing management. This involved creating a comprehensive Ansible playbook that managed all aspects of their infrastructure and application deployments. The transition from manual processes to Ansible automation provided several key advantages.

Infrastructure Provisioning Automation

Prior to Ansible, provisioning new servers in ShopGlobally’s multiple data centers was a time-consuming manual process. It involved multiple steps, including ordering hardware, configuring operating systems, installing necessary software, and configuring network settings. This process often took days or even weeks, leading to delays in deploying new features and scaling the platform to meet demand. Ansible automated this process, reducing the time required to provision a new server from several days to mere minutes.

The Ansible playbook dynamically created and configured servers, ensuring consistency across all environments. This significantly reduced human error and improved overall deployment reliability.

Application Deployment Optimization

Deploying application updates was another area where Ansible delivered significant improvements. Previously, ShopGlobally’s developers had to manually deploy updates to each server, a process that was both time-consuming and prone to errors. With Ansible, they created a playbook that automated the entire deployment process, ensuring consistent and reliable deployments across all servers. The playbook handled tasks such as code deployment, database updates, and configuration changes, minimizing downtime and ensuring a smooth transition to the new version.

Rollback capabilities, built into the Ansible playbook, also provided a safety net in case of issues.

Improved Efficiency and Reduced Operational Costs

The implementation of Ansible resulted in a significant increase in efficiency and a reduction in operational costs for ShopGlobally. The automation of infrastructure provisioning and application deployments freed up IT staff to focus on more strategic initiatives. The reduction in manual effort resulted in a considerable decrease in operational costs, estimated at approximately 30% over a six-month period.

This was achieved through reduced labor costs, minimized errors, and decreased downtime. Moreover, the improved reliability and consistency of deployments contributed to increased customer satisfaction and reduced support requests. For example, the average time to deploy a new feature decreased from several days to a few hours, allowing ShopGlobally to respond more quickly to market demands and competitive pressures.

The reduced downtime also translated into improved website availability and higher sales conversion rates.

Monitoring and Scalability Enhancements

Ansible also integrated with ShopGlobally’s monitoring tools, allowing for real-time monitoring of the entire infrastructure and application deployments. This proactive approach to monitoring enabled the IT team to identify and address potential issues before they impacted customers. The scalability of Ansible allowed ShopGlobally to easily adapt to the growing demands of its e-commerce platform. As the company expanded, they could easily scale their infrastructure by simply running the Ansible playbook to provision new servers and deploy applications.

This flexibility ensured that the platform could handle increased traffic and maintain optimal performance.

Final Wrap-Up

So, there you have it – a glimpse into the incredible capabilities of Ansible for workload automation. From simplifying infrastructure provisioning to streamlining application deployments and scaling operations effortlessly, Ansible offers a powerful solution for modern IT challenges. By embracing Ansible, you’re not just automating tasks; you’re empowering your team to focus on innovation and strategic initiatives, leaving the repetitive drudgery to a reliable and efficient automation engine.

Ready to take control? Dive in and start exploring!

Question Bank

What are the main limitations of Ansible?

While powerful, Ansible’s reliance on SSH can be a bottleneck in certain high-security environments. Complex deployments might require advanced knowledge, and scaling to extremely large environments can present challenges.

How does Ansible compare to other automation tools like Puppet or Chef?

Ansible distinguishes itself through its agentless architecture and simple YAML-based syntax, making it easier to learn and use than agent-based solutions. The choice depends on your specific needs and team expertise.

Is Ansible suitable for all types of workloads?

Ansible is highly versatile, but it’s best suited for tasks involving server management, configuration management, and application deployments. For certain specialized tasks, other tools might be more appropriate.