BigFix and the NIS2 Directive: Securing Your Systems

Navigating the complex world of cybersecurity compliance can feel like scaling a mountain, but with the right tools, it’s entirely conquerable. This post dives into how BigFix, a powerful endpoint management platform, can be your trusty climbing gear as you tackle the challenges of the NIS2 Directive. We’ll explore its core functionalities, integration with other security tools, and its critical role in incident response, all within the context of meeting NIS2’s stringent requirements.

Get ready to climb!

The NIS2 Directive (Directive (EU) 2022/2555, the successor to the original Network and Information Security Directive) sets a high bar for cybersecurity across various sectors. It mandates robust security measures, regular assessments, and stringent incident response protocols. BigFix, with its capabilities in patch management, vulnerability scanning, and asset inventory, offers a powerful solution to streamline compliance efforts. This post will break down exactly how BigFix can help you meet these demands, providing practical insights and real-world examples along the way.

We’ll also address common integration challenges and offer best practices for maximizing its effectiveness.

BigFix Functionality Relevant to NIS2 Compliance

BigFix, with its robust capabilities for endpoint management, plays a crucial role in helping organizations meet the stringent requirements of the NIS2 Directive. This directive necessitates a proactive approach to cybersecurity, demanding robust vulnerability management, comprehensive asset inventories, and demonstrable compliance reporting. BigFix’s features directly address these needs, streamlining the process and reducing the overall compliance burden.

By leveraging BigFix’s functionalities, organizations can significantly improve their security posture and demonstrate compliance with NIS2 regulations efficiently and effectively.

BigFix Patch Management and NIS2 Compliance

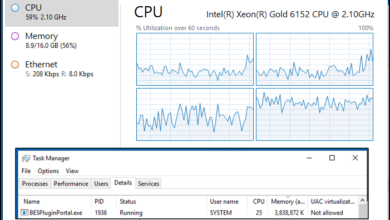

BigFix’s patch management capabilities are central to NIS2 compliance. The directive emphasizes the timely patching of vulnerabilities to mitigate risks. BigFix allows for automated deployment of security updates across all managed endpoints, ensuring systems are up-to-date with the latest security patches. This automated approach eliminates manual intervention, reducing the risk of human error and ensuring consistent, timely patching across the entire IT infrastructure.

Furthermore, BigFix provides detailed reporting on patch deployment status, providing evidence of compliance efforts. This reporting functionality is critical for demonstrating adherence to NIS2 auditing requirements.
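To make "evidence of timely patching" concrete, here is a minimal Python sketch of the kind of summary an auditor might ask for. It assumes patch status has already been exported from your reporting tool into simple records; the `PatchRecord` fields, the 30-day SLA, and the sample hosts are hypothetical and not part of any BigFix schema.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass(frozen=True)
class PatchRecord:
    """One row of a hypothetical patch-status export (field names are assumptions)."""
    hostname: str
    patch_id: str
    released: date
    installed: Optional[date]  # None means the patch is still missing


def summarize_patch_compliance(records, sla_days, today):
    """Count patches installed within the SLA, installed late, overdue, or pending."""
    summary = {"on_time": 0, "late": 0, "overdue": 0, "pending": 0}
    for rec in records:
        deadline = rec.released + timedelta(days=sla_days)
        if rec.installed is not None:
            summary["on_time" if rec.installed <= deadline else "late"] += 1
        elif today > deadline:
            summary["overdue"] += 1  # missing and past the SLA
        else:
            summary["pending"] += 1  # missing but still inside the SLA window
    total = len(records)
    summary["on_time_rate"] = summary["on_time"] / total if total else 0.0
    return summary


# Illustrative data only.
sample = [
    PatchRecord("web-01", "KB-A", date(2024, 5, 1), date(2024, 5, 6)),
    PatchRecord("web-02", "KB-A", date(2024, 5, 1), date(2024, 6, 20)),
    PatchRecord("db-01", "KB-A", date(2024, 5, 1), None),
    PatchRecord("db-01", "KB-B", date(2024, 6, 25), None),
]
report = summarize_patch_compliance(sample, sla_days=30, today=date(2024, 7, 1))
print(report)
```

Separating "late" from "overdue" is a deliberate choice: the first shows how well the process has worked in the past, the second shows what still needs action today.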

BigFix Vulnerability Management and NIS2 Requirements

Beyond patch management, BigFix offers comprehensive vulnerability management capabilities. It integrates with various vulnerability scanners to identify and assess potential security weaknesses on managed endpoints. This proactive approach allows organizations to prioritize and address critical vulnerabilities swiftly, minimizing the organization’s attack surface. BigFix’s remediation capabilities enable automated deployment of fixes or workarounds for identified vulnerabilities, enhancing the overall security posture and directly contributing to NIS2 compliance.

The detailed reporting features within BigFix provide verifiable evidence of vulnerability identification, assessment, and remediation efforts, satisfying the audit requirements of the NIS2 Directive.
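As a rough illustration of prioritizing findings before remediation, the sketch below orders open findings by severity and age. It is not a scanner's or BigFix's data model: the `Finding` fields are assumptions, and the CVE identifiers are placeholders.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Finding:
    """A scanner finding; fields are illustrative, not a real scanner schema."""
    hostname: str
    cve: str
    cvss: float
    first_seen: date
    remediated: bool = False


def remediation_queue(findings, min_cvss=0.0):
    """Return open findings, highest CVSS first, oldest first within equal scores."""
    open_findings = [f for f in findings if not f.remediated and f.cvss >= min_cvss]
    return sorted(open_findings, key=lambda f: (-f.cvss, f.first_seen))


# Placeholder CVE identifiers and hosts, for illustration only.
findings = [
    Finding("app-01", "CVE-0000-0001", 7.5, date(2024, 3, 1)),
    Finding("app-02", "CVE-0000-0002", 9.8, date(2024, 4, 10)),
    Finding("app-03", "CVE-0000-0003", 9.8, date(2024, 2, 15)),
    Finding("app-04", "CVE-0000-0004", 5.0, date(2024, 1, 5), remediated=True),
]
queue = remediation_queue(findings)
for item in queue:
    print(item.hostname, item.cve, item.cvss, item.first_seen)
```

In practice you would likely weight the score with asset criticality as well; that is left out here to keep the ordering rule easy to audit.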

BigFix Asset Inventory and Management for NIS2 Adherence

Accurate and up-to-date asset inventory is paramount for NIS2 compliance. BigFix automatically discovers and inventories all managed endpoints, collecting crucial information such as operating systems, installed software, and hardware configurations. This comprehensive inventory provides a clear understanding of the organization’s IT landscape, allowing for better risk assessment and management. The detailed asset information collected by BigFix is crucial for identifying systems that require specific security controls or patching, facilitating compliance with the NIS2 requirements for asset management and risk mitigation.
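An inventory is only useful for risk assessment if changes to it are noticed. The following sketch compares two inventory snapshots and reports what appeared, disappeared, or changed. It assumes each snapshot has been exported as a dictionary keyed by hostname; the attribute names and hosts are hypothetical.

```python
def diff_inventory(previous, current):
    """Compare two {hostname: attributes} snapshots and report what changed."""
    added = sorted(current.keys() - previous.keys())
    removed = sorted(previous.keys() - current.keys())
    changed = sorted(
        host
        for host in current.keys() & previous.keys()
        if current[host] != previous[host]
    )
    return {"added": added, "removed": removed, "changed": changed}


# Illustrative snapshots; attribute names are assumptions.
last_week = {
    "web-01": {"os": "Ubuntu 22.04", "agent": "installed"},
    "db-01": {"os": "Windows Server 2019", "agent": "installed"},
    "hr-07": {"os": "Windows 10", "agent": "installed"},
}
this_week = {
    "web-01": {"os": "Ubuntu 22.04", "agent": "installed"},
    "db-01": {"os": "Windows Server 2022", "agent": "installed"},
    "lab-03": {"os": "Windows 11", "agent": "missing"},
}
changes = diff_inventory(last_week, this_week)
print(changes)
```

Hosts in `added` that lack an agent, and hosts in `removed` that were never formally decommissioned, are the ones worth following up first.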

Demonstrating NIS2 Compliance with BigFix Reporting

BigFix facilitates the creation of comprehensive compliance reports that demonstrate adherence to NIS2 requirements. The following table outlines, for each reporting feature, the NIS2 requirement it addresses, the implementation steps, and the challenges to expect:

| BigFix Feature | NIS2 Requirement Addressed | Implementation Steps | Potential Challenges |
|---|---|---|---|
| Patch Management Reporting | Demonstrating timely patching of vulnerabilities | 1. Configure BigFix to automatically deploy patches. 2. Create reports showing patch deployment status, including dates and affected systems. 3. Schedule regular report generation. | Maintaining accurate patch databases; dealing with systems that cannot be patched immediately; ensuring consistent reporting across all systems. |
| Vulnerability Assessment Reporting | Identifying and addressing vulnerabilities | 1. Integrate BigFix with vulnerability scanners. 2. Configure BigFix to automatically scan for vulnerabilities. 3. Create reports showing identified vulnerabilities, their severity, and remediation status. | False positives from vulnerability scanners; ensuring timely remediation of critical vulnerabilities; managing vulnerability scanning frequency to minimize impact on system performance. |
| Asset Inventory Reporting | Maintaining an accurate inventory of assets | 1. Configure BigFix to automatically discover and inventory assets. 2. Create reports showing details of all managed endpoints, including operating systems, software, and hardware configurations. 3. Regularly update the inventory. | Managing changes in the IT infrastructure; ensuring data accuracy; dealing with systems that are difficult to discover or inventory. |
| Custom Reporting | Meeting specific reporting requirements | 1. Utilize BigFix’s reporting capabilities to create custom reports addressing specific NIS2 requirements. 2. Tailor reports to meet auditor needs. 3. Regularly review and update reports. | Developing the necessary reporting expertise; ensuring reports are accurate and complete; adapting reports to changing regulatory requirements. |

Integrating BigFix with Other NIS2-Relevant Security Tools

BigFix, with its robust endpoint management capabilities, isn’t a standalone solution for complete NIS2 compliance. Effective NIS2 adherence necessitates a layered security approach, integrating BigFix with other crucial security tools for comprehensive threat detection, response, and reporting. This integration significantly enhances visibility and control across the entire IT infrastructure and supports a stronger compliance posture.

BigFix’s strength lies in its ability to remediate vulnerabilities and enforce security policies directly on endpoints. However, a comprehensive security strategy requires correlating this endpoint data with the broader security context provided by other systems.

BigFix Integration with SIEM Systems

Integrating BigFix with a Security Information and Event Management (SIEM) system offers a powerful synergy. BigFix provides real-time data on endpoint security posture – software inventory, patch levels, vulnerability status, and user activity – while the SIEM system aggregates logs from various sources, enabling comprehensive threat detection and correlation. This integration facilitates a proactive security posture, enabling rapid identification of, and response to, incidents that NIS2 requires you to handle and report.

For example, a SIEM system might detect suspicious network activity, and BigFix can then be used to immediately investigate affected endpoints, isolate them, and remediate any identified vulnerabilities. This coordinated approach reduces response times significantly and improves overall security effectiveness.
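The correlation step in that example can be sketched in a few lines of Python. This is a simplified illustration, assuming SIEM alerts and endpoint posture have both been exported as plain dictionaries; the field names (`host`, `rule`, `missing_patches`) and the suggested actions are hypothetical and do not reflect any vendor's schema.

```python
def enrich_alerts(alerts, posture):
    """Attach endpoint posture to each SIEM alert and suggest a next step."""
    enriched = []
    for alert in alerts:
        endpoint = posture.get(alert["host"])
        if endpoint is None:
            # The host is unknown to endpoint management: a finding in itself.
            missing = []
            action = "investigate: host is not in the endpoint inventory"
        else:
            missing = endpoint.get("missing_patches", [])
            action = "isolate and patch" if missing else "investigate"
        enriched.append(
            {**alert, "missing_patches": missing, "suggested_action": action}
        )
    return enriched


# Illustrative inputs only.
alerts = [
    {"host": "web-01", "rule": "suspicious outbound traffic"},
    {"host": "db-01", "rule": "repeated failed logins"},
    {"host": "unknown-9", "rule": "port scan"},
]
posture = {
    "web-01": {"missing_patches": ["KB-A"]},
    "db-01": {"missing_patches": []},
}
triage = enrich_alerts(alerts, posture)
for row in triage:
    print(row["host"], "->", row["suggested_action"])
```

The suggestions are intentionally advisory; isolating a production system should stay a human decision or sit behind an approval step.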

Comparison of BigFix and SIEM Capabilities Regarding NIS2 Compliance

BigFix excels at endpoint security management and remediation, providing granular control over software deployments, patching, and configuration settings. SIEM systems, on the other hand, focus on collecting, analyzing, and correlating security logs from various sources to detect threats and security incidents. While BigFix can contribute crucial endpoint data to a SIEM, the SIEM provides the broader context needed for comprehensive threat analysis and incident response.

In the context of NIS2, BigFix helps ensure compliance by automating security controls and providing evidence of compliance, while the SIEM offers a central point for monitoring and responding to security incidents that could violate NIS2 regulations. Neither system is a complete solution on its own; their combined power is what ensures robust NIS2 compliance.

Automating NIS2 Security Controls with BigFix

BigFix’s automation capabilities are invaluable for implementing and enforcing many NIS2-mandated security controls. For instance, BigFix can automate the deployment of security patches, ensuring systems are up-to-date and protected against known vulnerabilities. It can also enforce strong password policies, configure firewalls, and deploy anti-malware software across all managed endpoints. This automation reduces the manual effort required for compliance, minimizing human error and ensuring consistent security across the organization.

The automated reporting features within BigFix also help in generating the necessary audit trails for demonstrating compliance to NIS2 auditors.

BigFix and Incident Response Workflow for NIS2 Incidents

Effective incident response is crucial for addressing NIS2-related security breaches. The integration of BigFix with an incident response system significantly streamlines this process.

- Incident Detection: The SIEM system detects a suspicious event that may amount to a reportable incident under NIS2 (e.g., unauthorized access, data breach).

- Endpoint Identification: The SIEM identifies the affected endpoints based on log data.

- BigFix Investigation: The incident response team uses BigFix to remotely investigate the affected endpoints, gathering information on software inventory, running processes, and user activity.

- Remediation: BigFix is used to deploy countermeasures, such as isolating the affected systems, removing malware, or applying security patches.

- Forensic Analysis: BigFix collects forensic data from the affected endpoints, aiding in post-incident investigation and root cause analysis.

- Reporting: BigFix generates reports documenting the incident, the remediation steps taken, and the resulting security posture, facilitating compliance reporting.

This coordinated workflow ensures a rapid and effective response to security incidents, minimizing their impact and demonstrating compliance with NIS2 requirements.
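The workflow above can also be modelled as an ordered audit trail, which helps when you later have to show that no step was skipped. The sketch below is a minimal illustration; the `Phase` names mirror the list above, while the `IncidentLog` class and the incident identifier are hypothetical and not part of BigFix or any SIEM.

```python
from datetime import datetime, timezone
from enum import IntEnum


class Phase(IntEnum):
    """Workflow phases in the order listed above."""
    INCIDENT_DETECTION = 1
    ENDPOINT_IDENTIFICATION = 2
    INVESTIGATION = 3
    REMEDIATION = 4
    FORENSIC_ANALYSIS = 5
    REPORTING = 6


class IncidentLog:
    """Records each phase in order so the audit trail has no gaps."""

    def __init__(self, incident_id):
        self.incident_id = incident_id
        self.entries = []

    def record(self, phase, note, when=None):
        """Append a phase entry; reject phases that are skipped or repeated."""
        if self.is_complete():
            raise ValueError("all phases are already recorded")
        expected = Phase(len(self.entries) + 1)
        if phase != expected:
            raise ValueError(f"expected {expected.name}, got {phase.name}")
        timestamp = when or datetime.now(timezone.utc)
        self.entries.append(
            {"phase": phase.name, "note": note, "at": timestamp.isoformat()}
        )

    def is_complete(self):
        return len(self.entries) == len(Phase)


# Illustrative usage with fixed timestamps.
log = IncidentLog("INC-EXAMPLE-1")
log.record(Phase.INCIDENT_DETECTION, "SIEM alert raised",
           when=datetime(2025, 1, 28, 9, 0, tzinfo=timezone.utc))
log.record(Phase.ENDPOINT_IDENTIFICATION, "two hosts affected",
           when=datetime(2025, 1, 28, 9, 20, tzinfo=timezone.utc))
print(log.entries)
```

Enforcing the order in code is a design choice; a real process may legitimately loop back from forensic analysis to remediation, in which case the check would need to allow revisits.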

BigFix’s Role in Incident Response Under NIS2

BigFix, with its robust endpoint management capabilities, plays a crucial role in streamlining incident response activities, ensuring compliance with the stringent NIS2 Directive. Its ability to quickly assess the impact of a breach, contain the spread of malware, and facilitate system recovery makes it an invaluable tool for organizations striving for NIS2 compliance. This section will detail how BigFix supports each phase of incident response, emphasizing best practices and alignment with NIS2 requirements.

Key Steps in an Incident Response Plan Leveraging BigFix

A well-defined incident response plan is paramount for NIS2 compliance. BigFix significantly enhances this plan by providing the tools for rapid assessment, containment, eradication, recovery, and reporting. The following steps illustrate how BigFix facilitates each phase:

- Detection: BigFix’s real-time monitoring capabilities allow for immediate detection of suspicious activity, triggering alerts and enabling prompt investigation.

- Containment: Its remote control functionality permits immediate isolation of compromised systems, preventing further lateral movement.

- Remediation: BigFix enables the rapid deployment of remediation actions, such as patching vulnerabilities or removing malware.

- Documentation: BigFix facilitates the detailed documentation of all actions taken, which is critical for compliance reporting.

Containing and Eradicating Malware Using BigFix

Once a security breach is identified, swift action is essential. BigFix’s ability to deploy and execute remediation scripts across numerous endpoints simultaneously is critical for containing and eradicating malware. This can include deploying antivirus updates, removing malicious files, and restoring system settings to a known good state. For example, a script can be deployed to quarantine infected files, delete registry keys associated with the malware, and then trigger a full system scan.

This approach ensures consistency and speed, minimizing the impact of the breach. Real-time monitoring after the deployment allows for verification of the remediation’s success and quick identification of any remaining threats. This proactive approach aligns perfectly with the NIS2 Directive’s emphasis on rapid response and containment.
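Verifying that a remediation action actually succeeded everywhere is easy to overlook. The sketch below summarizes per-endpoint results and lists the hosts that still need attention. It assumes the results have been exported as a hostname-to-status mapping; the status labels used here are assumptions for the example, so map them to whatever your export actually contains.

```python
from collections import Counter

# Status labels are assumptions for this sketch, not a guaranteed export format.
SUCCESS_STATUSES = {"Fixed"}


def summarize_action(results, success_statuses=SUCCESS_STATUSES):
    """Return status counts and the hosts whose action did not succeed."""
    counts = Counter(results.values())
    follow_up = sorted(
        host for host, status in results.items()
        if status not in success_statuses
    )
    return dict(counts), follow_up


# Illustrative results only.
results = {
    "web-01": "Fixed",
    "web-02": "Fixed",
    "db-01": "Failed",
    "hr-07": "Pending Restart",
}
counts, follow_up = summarize_action(results)
print(counts)
print("needs follow-up:", follow_up)
```

Treating everything that is not an explicit success as "needs follow-up" is the conservative choice during an incident: a pending restart is not yet a remediated host.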

Recovering Systems and Restoring Data with BigFix

System recovery and data restoration are crucial steps in the post-incident phase. BigFix can facilitate this process through several mechanisms. It can be used to deploy system image backups, restoring affected systems to a pre-breach state. Alternatively, BigFix can deploy scripts to repair damaged system files or reinstall necessary applications. For data restoration, BigFix can initiate the process of retrieving data from backups, ensuring business continuity is restored as quickly as possible.

The ability to perform these actions remotely, across multiple systems simultaneously, is a significant advantage, minimizing downtime and the overall impact of the incident. This aligns with NIS2’s focus on business continuity and rapid recovery.

Checklist for BigFix Actions During and After a Security Incident

Pre-Incident: Ensure BigFix is configured correctly, with appropriate access controls and reporting mechanisms. Regularly update the BigFix client and server software.

During Incident: Immediately identify affected systems. Isolate compromised systems. Deploy malware removal scripts. Document all actions taken. Collect logs and evidence.

Post-Incident: Verify the effectiveness of remediation. Restore systems and data. Analyze the incident to identify vulnerabilities. Update security policies. Report the incident to relevant authorities, documenting all actions taken with BigFix in the report.

Ongoing: Regularly review BigFix logs for suspicious activity. Perform vulnerability assessments. Maintain up-to-date security patches and configurations.

Addressing NIS2’s Specific Requirements with BigFix

BigFix, with its robust capabilities for endpoint management and security, offers a powerful solution for organizations striving to meet the stringent requirements of the NIS2 Directive. Its ability to automate tasks, enforce policies, and provide comprehensive visibility into the security posture of an organization makes it a valuable asset in achieving and maintaining compliance. This section will explore how BigFix directly addresses key NIS2 mandates.

BigFix and Regular Security Assessments

NIS2 demands regular security assessments to identify and mitigate vulnerabilities. BigFix facilitates this through automated vulnerability scanning and patching. By deploying vulnerability scans across all managed endpoints, BigFix identifies weaknesses in software, operating systems, and configurations. The results are then presented in a centralized dashboard, allowing security teams to prioritize remediation efforts based on risk levels. This automated process significantly reduces the time and resources required for manual assessments, ensuring consistent and frequent checks.

Furthermore, BigFix’s ability to deploy patches automatically reduces the window of vulnerability, strengthening the overall security posture.

BigFix and Strong Authentication and Access Control

Implementing strong authentication and access control is critical for NIS2 compliance. BigFix contributes by enabling the enforcement of strong password policies, multi-factor authentication (MFA), and granular access controls. It can push configurations to endpoints, ensuring all systems adhere to the organization’s security policies. For example, BigFix can enforce password complexity rules, prevent the use of weak passwords, and mandate regular password changes.

It can also integrate with MFA providers, ensuring that only authorized users with verified identities can access sensitive systems. Furthermore, BigFix allows administrators to manage user permissions and access rights at the individual endpoint level, restricting access based on roles and responsibilities.
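Checking endpoints against a password baseline can be sketched as follows. The baseline values are illustrative only (NIS2 does not prescribe specific numbers), and the setting names are hypothetical keys for values you would collect from each endpoint.

```python
# Illustrative baseline; choose values from your own policy, not from this sketch.
BASELINE = {"min_length": 12, "max_age_days": 90, "complexity_required": True}


def policy_gaps(reported, baseline=BASELINE):
    """Return the names of settings on one endpoint that fall short of the baseline."""
    gaps = []
    if reported.get("min_length", 0) < baseline["min_length"]:
        gaps.append("min_length")
    max_age = reported.get("max_age_days")  # None is read as "never expires"
    if max_age is None or max_age > baseline["max_age_days"]:
        gaps.append("max_age_days")
    if baseline["complexity_required"] and not reported.get("complexity_required", False):
        gaps.append("complexity_required")
    return gaps


# Illustrative endpoint settings.
endpoints = {
    "web-01": {"min_length": 14, "max_age_days": 60, "complexity_required": True},
    "hr-07": {"min_length": 8, "max_age_days": None, "complexity_required": False},
}
non_compliant = {
    host: gaps
    for host, settings in endpoints.items()
    if (gaps := policy_gaps(settings))
}
print(non_compliant)
```

Missing settings are treated as non-compliant rather than ignored, so an endpoint that reports nothing shows up in the gap list instead of passing silently.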

BigFix and Secure Software Development Practices

While BigFix doesn’t directly manage the software development lifecycle (SDLC), it plays a crucial role in ensuring the security of deployed software. By providing visibility into the software versions running on endpoints, BigFix allows organizations to quickly identify and remediate vulnerabilities in applications. This enables rapid response to newly discovered vulnerabilities and ensures that all systems are running the most up-to-date and secure versions of software.

This contributes to the overall security posture and helps meet the indirect NIS2 requirements related to secure software deployment and maintenance.

Scenario: A NIS2 Non-Compliance Issue and BigFix Mitigation

Imagine a scenario where a small manufacturing company, Acme Manufacturing, fails to update its industrial control system (ICS) software. This ICS controls crucial machinery on their factory floor.

System Architecture: The ICS consists of several PLCs (Programmable Logic Controllers) connected to a central server, which is itself connected to the company network. The network includes workstations for engineers and a separate guest Wi-Fi network.

Attack Vector: A malicious actor exploits a known vulnerability in the outdated ICS software via the company network. This allows the actor to gain control of the PLCs, potentially disrupting production and causing significant financial losses.

BigFix Mitigation: Had Acme Manufacturing used BigFix, they could have automated the patching of the ICS software. BigFix’s ability to target specific endpoints and deploy patches would have ensured that the ICS was updated with the latest security patches. Furthermore, BigFix’s reporting features would have alerted the IT team to any missing patches, allowing for proactive remediation. Regular vulnerability scans would have identified the outdated software, providing early warning of the potential risk.

This scenario illustrates how BigFix can prevent vulnerabilities by providing a proactive and automated approach to patch management and security assessment, ensuring compliance with NIS2 requirements.

BigFix and Data Protection in the Context of NIS2

BigFix, with its robust endpoint management capabilities, plays a crucial role in bolstering data protection strategies within the framework of the NIS2 Directive. Its ability to centrally manage and monitor systems allows organizations to effectively secure sensitive data, minimize risks, and demonstrate compliance with stringent data protection regulations. This goes beyond simple vulnerability management; it encompasses proactive data loss prevention and incident response.

BigFix’s contribution to data protection under NIS2 extends to several key areas, including data access control, breach notification, and overall security posture management. Its capabilities enable organizations to build a comprehensive and verifiable security strategy, reducing the likelihood of data breaches and minimizing the impact if one occurs.

Data Breach Notification and Reporting with BigFix

Effective and timely data breach notification is paramount under NIS2. BigFix facilitates this process through several mechanisms. Its inventory and auditing capabilities allow for rapid identification of compromised systems and the extent of data exposure. Automated reporting features can generate comprehensive breach reports, including affected systems, data types involved, and potential impact, significantly speeding up the notification process to relevant authorities and affected individuals.

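Furthermore, BigFix can be integrated with incident response systems to streamline the entire process from detection to remediation and reporting. This supports NIS2’s staged timeline for significant incidents (an early warning within 24 hours of becoming aware of the incident, an incident notification within 72 hours, and a final report within one month of that notification) and allows organizations to demonstrate proactive and efficient incident handling.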
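Because the notification clock starts when you become aware of a significant incident, it helps to compute the deadlines immediately. The sketch below does so for the NIS2 stages of a 24-hour early warning, a 72-hour incident notification, and a final report one month after the notification. The function names are hypothetical, and reading "one month" as the same calendar day in the following month is an assumption; confirm the interpretation with your national authority.

```python
import calendar
from datetime import datetime, timedelta, timezone


def add_one_month(moment):
    """Same day next month, clamped to that month's last day (31 Jan -> 28/29 Feb)."""
    year = moment.year + (1 if moment.month == 12 else 0)
    month = 1 if moment.month == 12 else moment.month + 1
    day = min(moment.day, calendar.monthrange(year, month)[1])
    return moment.replace(year=year, month=month, day=day)


def notification_deadlines(aware_at, notification_sent_at=None):
    """Compute the three reporting deadlines from the moment of awareness."""
    early_warning = aware_at + timedelta(hours=24)
    incident_notification = aware_at + timedelta(hours=72)
    # The final report counts from the submitted notification; until it is
    # sent, plan against the latest permitted submission time.
    basis = notification_sent_at or incident_notification
    return {
        "early_warning": early_warning,
        "incident_notification": incident_notification,
        "final_report": add_one_month(basis),
    }


# Illustrative timestamp only.
aware = datetime(2025, 1, 28, 9, 0, tzinfo=timezone.utc)
deadlines = notification_deadlines(aware)
for name, due in deadlines.items():
    print(f"{name}: {due.isoformat()}")
```

Using timezone-aware timestamps avoids ambiguity when the incident team and the authority sit in different time zones.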

Monitoring and Controlling Data Access with BigFix

NIS2 places significant emphasis on controlling access to sensitive data. BigFix enables organizations to implement granular access control policies, restricting access to sensitive data based on user roles, location, and device compliance. By leveraging BigFix’s inventory and compliance capabilities, organizations can monitor user activity and identify unauthorized access attempts in real-time. This proactive monitoring helps prevent data breaches before they occur and strengthens the organization’s overall security posture.

BigFix can also enforce data encryption policies, ensuring that sensitive data remains protected even if a system is compromised. Regular audits generated by BigFix provide a verifiable record of data access and control measures, crucial for demonstrating compliance.

A Comprehensive Data Security Policy Incorporating BigFix

A robust data security policy is essential for NIS2 compliance. Such a policy outlines the organization’s commitment to protecting sensitive data in accordance with NIS2 requirements, and it leverages BigFix’s capabilities to ensure robust data security, access control, and incident response. Regular reviews and updates are essential to maintain its effectiveness.

The policy should include the following elements:

- Data Classification and Inventory: Regularly classify sensitive data and maintain an accurate inventory using BigFix’s asset management features. This allows for targeted security controls.

- Access Control Policies: Implement granular access control policies using BigFix, restricting access to sensitive data based on roles, location, and device compliance. Regularly review and update these policies.

- Data Encryption: Enforce data encryption at rest and in transit using BigFix to protect sensitive data even in the event of a breach. Monitor encryption status regularly.

- Data Loss Prevention (DLP): Utilize BigFix to monitor and prevent unauthorized data transfer or exfiltration. Implement DLP rules based on data sensitivity and user behavior.

- Regular Security Audits: Conduct regular security audits using BigFix’s reporting capabilities to assess compliance with data protection policies and identify vulnerabilities.

- Incident Response Plan: Develop a comprehensive incident response plan that leverages BigFix for rapid identification, containment, and remediation of data breaches. Include clear communication protocols for notifying relevant stakeholders.

- Employee Training: Provide regular training to employees on data security policies and procedures, emphasizing the importance of secure data handling practices.

- Vulnerability Management: Proactively identify and address system vulnerabilities using BigFix’s patch management capabilities to reduce the risk of data breaches.

Closing Notes

Successfully navigating the NIS2 Directive requires a proactive and multifaceted approach to cybersecurity. BigFix emerges as a key player, offering a powerful suite of tools to streamline compliance efforts. From proactive patch management and vulnerability scanning to efficient incident response and detailed reporting, BigFix provides the technological backbone to ensure your organization is well-equipped to meet the demands of this critical regulation.

By leveraging BigFix’s capabilities, organizations can significantly reduce their risk profile and demonstrate a strong commitment to data security and regulatory compliance. Remember, robust cybersecurity isn’t just a box to tick; it’s a continuous journey – and BigFix can be your trusted companion on the way.

FAQ Overview

What if my organization doesn’t use BigFix? Are there alternatives?

Yes, several other endpoint management and security information and event management (SIEM) solutions can help with NIS2 compliance. The best choice depends on your specific needs and existing infrastructure. Researching alternatives like Microsoft Intune, VMware Workspace ONE, or various SIEM platforms is recommended.

How much does BigFix cost?

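BigFix pricing is based on factors like the number of managed endpoints and the specific features you need. BigFix has been an HCLSoftware product since HCL acquired it from IBM in 2019, so it’s best to contact HCLSoftware or an authorized reseller for a customized quote.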

Is BigFix suitable for all organizations subject to NIS2?

While BigFix is a powerful tool, its suitability depends on your organization’s size, technical capabilities, and specific NIS2 requirements. Smaller organizations might find alternative, less complex solutions more appropriate.

How often should I perform security assessments using BigFix to meet NIS2 requirements?

NIS2 doesn’t specify a fixed frequency for security assessments. However, regular and consistent assessments are crucial. The frequency should be determined based on your risk assessment and the nature of your operations. Consider factors such as the sensitivity of your data and the potential impact of a security breach.