Unicas Cloud Native Journey: A Deep Dive

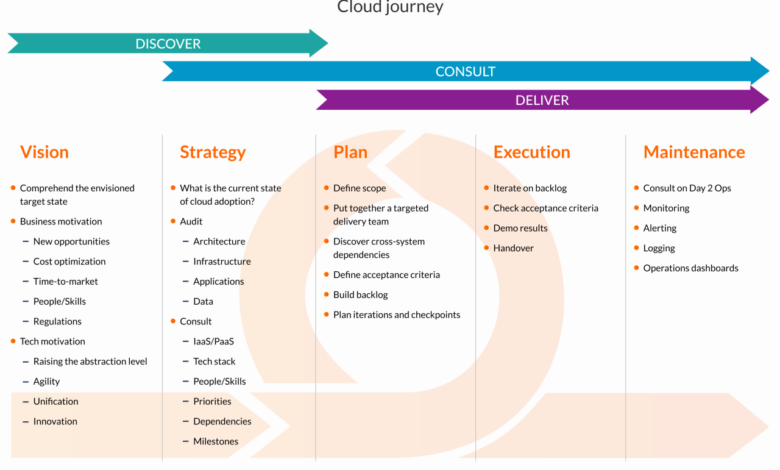

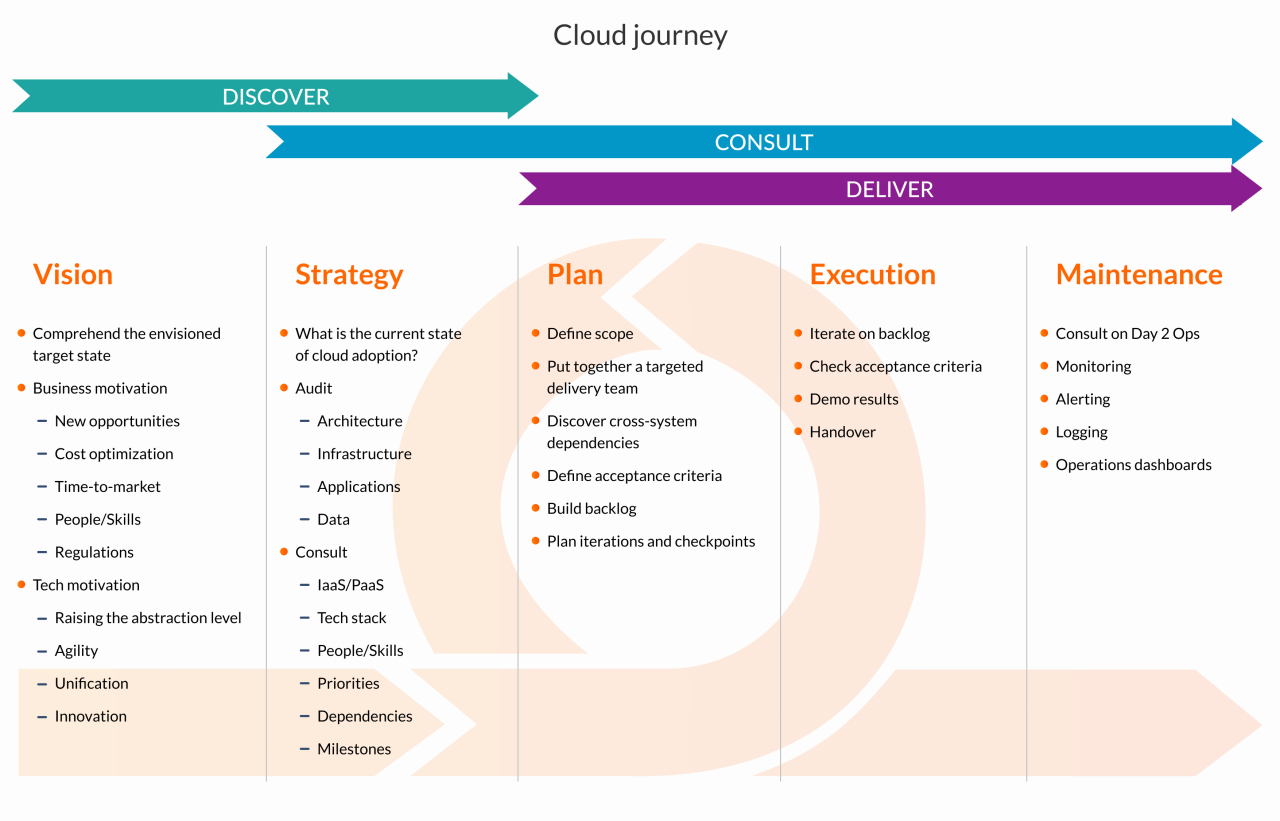

Unicas Cloud Native Journey: This article takes you inside Unicas’ cloud-native transformation. It is a behind-the-scenes look at the strategic decisions, technical hurdles, and eventual wins involved in migrating a complex system to a cloud-native architecture. Along the way it covers architecture, migration strategy, DevOps practices, security measures, and cost optimization techniques.

We’ll dissect the core components of Unicas’ cloud-native architecture and the technologies that power it, from containerization and orchestration to service meshes. We’ll also look at the challenges faced during migration, the solutions implemented, and the lessons learned along the way. The journey shows what strategic planning, technical skill, and a commitment to continuous improvement can achieve.

Unicas Cloud Native Architecture

Unicas’ cloud-native architecture is designed for scalability, resilience, and agility. It uses modern technologies to deliver a highly available, efficient platform that can handle fluctuating workloads and rapid deployments. This design supports continuous integration and continuous delivery (CI/CD), enabling faster innovation and quicker responses to market demands. At its core is a microservices-based approach that decouples functionality into independent, deployable units.

This contrasts with monolithic architectures, offering improved fault isolation and easier maintenance. The architecture also emphasizes automation, minimizing manual intervention and reducing the risk of human error.

Core Components of Unicas’ Cloud Native Architecture

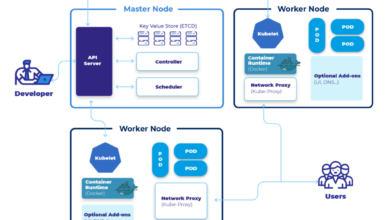

The core components of Unicas’ cloud-native architecture depend on one another to provide a robust, scalable platform. They are:

- a container orchestration layer
- a service mesh that manages inter-service communication
- a monitoring and logging system

Key Technologies Utilized

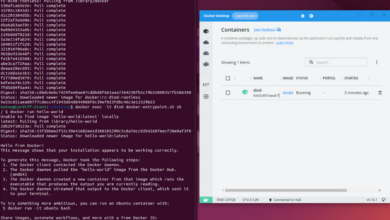

Unicas uses several key technologies to support its cloud-native architecture:

- Containerization with Docker provides lightweight, portable units for deploying applications.
- Kubernetes serves as the orchestration layer, automating the deployment, scaling, and management of containers.
- A service mesh, potentially Istio or Linkerd, handles service discovery, traffic management, and security between microservices.
- Monitoring tools such as Prometheus (metrics collection) and Grafana (dashboards), together with a logging stack, provide real-time insight into the system’s health and performance.
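
To make the orchestration layer concrete, here is a minimal sketch of a Kubernetes Deployment for one microservice. It is built as a Python dictionary and serialized to JSON; `kubectl apply -f` accepts JSON as well as YAML. The service name `orders`, the image `registry.example.com/orders:1.0.0`, the port, and the resource figures are hypothetical placeholders, not values from Unicas’ setup.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int = 3, port: int = 8080) -> dict:
    """Build a Kubernetes apps/v1 Deployment for one containerized microservice."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            # Kubernetes keeps this many pod replicas running and replaces failed ones.
            "replicas": replicas,
            # The selector must match the pod template labels below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                            # Requests drive scheduling; limits cap what the container may use.
                            # These figures are hypothetical.
                            "resources": {
                                "requests": {"cpu": "250m", "memory": "256Mi"},
                                "limits": {"cpu": "500m", "memory": "512Mi"},
                            },
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical service and image; pipe the output to `kubectl apply -f -`.
    print(json.dumps(deployment_manifest("orders", "registry.example.com/orders:1.0.0"), indent=2))
```

Generating manifests from code is only one option. Many teams keep plain YAML files or Helm charts instead. The fields shown are the same either way.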

Comparison with Other Prominent Cloud-Native Architectures

Compared to other cloud-native architectures, Unicas’ design prioritizes simplicity and ease of management while maintaining scalability and resilience. While architectures like those employed by large cloud providers might incorporate more complex components and features, Unicas focuses on a streamlined approach, making it suitable for organizations of various sizes. This approach emphasizes practicality and avoids unnecessary complexity. For example, while some architectures heavily rely on serverless functions, Unicas favors a more traditional containerized microservices approach for greater control and predictability.

Architectural Diagram

The following table illustrates the interaction between the key components of Unicas’ cloud-native architecture.

| Component | Function | Technology | Interaction with Other Components |
|---|---|---|---|
| Microservices | Individual, independent units of functionality | Docker, Go, Java, etc. | Communicate with each other via the service mesh; deployed and managed by Kubernetes |
| Kubernetes | Orchestrates container deployment, scaling, and management | Kubernetes | Manages the lifecycle of microservices; interacts with the service mesh for traffic routing |
| Service Mesh (e.g., Istio) | Manages inter-service communication, security, and observability | Istio/Linkerd | Facilitates communication between microservices; integrates with monitoring and logging systems |
| Monitoring & Logging (e.g., Prometheus & Grafana) | Provides real-time insights into system health and performance | Prometheus, Grafana | Collects metrics and logs from all components; provides dashboards for monitoring and analysis |

Migration Strategy to Cloud Native

Unicas’ journey to a cloud-native architecture wasn’t a single, sweeping change but a carefully planned, phased migration. A “big bang” approach risked significant downtime and data loss, so we opted for an iterative, controlled process that minimized disruption to ongoing operations. This let us learn from each phase, refine our processes, and achieve a smoother overall migration. Throughout, the phased approach prioritized minimizing risk while capturing the benefits of cloud-native technologies.

This involved a strategic breakdown of our applications and infrastructure into manageable chunks, allowing us to focus resources effectively and continuously monitor the impact of each migration step. This iterative approach proved invaluable in identifying and resolving potential issues early in the process.

Phased Migration Approach

The Unicas cloud-native migration was divided into four distinct phases. Phase 1 focused on assessment and planning, meticulously analyzing our existing infrastructure and applications to identify dependencies and potential roadblocks. Phase 2 involved the migration of non-critical applications to the cloud, providing a testing ground for our processes and allowing us to fine-tune our approach. Phase 3 saw the migration of our core applications, requiring more rigorous testing and monitoring.

Finally, Phase 4 concentrated on optimizing and refining our cloud-native architecture for maximum efficiency and scalability. Each phase included thorough testing, monitoring, and feedback loops to ensure a smooth transition.

Step-by-Step Migration Guide

- Assessment and Planning: This initial phase involved a comprehensive analysis of our existing infrastructure, applications, and dependencies. We identified potential challenges, created detailed migration plans, and established clear success metrics.

- Proof of Concept (POC): We selected a small, non-critical application to migrate as a proof of concept. This allowed us to test our migration processes, identify potential issues, and refine our approach before tackling more critical applications.

- Pilot Migration: Following the successful POC, we migrated a larger, but still non-critical, set of applications. This phase provided further validation of our processes and allowed us to gain experience with larger-scale migrations.

- Phased Rollout: We then migrated our core applications in a phased manner, prioritizing applications based on their criticality and dependencies. This minimized disruption to our users and allowed us to address any issues that arose in a controlled manner.

- Optimization and Refinement: The final phase focused on optimizing our cloud-native architecture to maximize efficiency, scalability, and cost-effectiveness. This involved continuous monitoring, performance tuning, and ongoing refinement of our processes.
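
The “criticality and dependencies” ordering used in the phased rollout can be sketched with Python’s standard `graphlib` module (Python 3.9+). The sketch rests on two assumptions that the original does not state. First, an application migrates only after the services it depends on. Second, within a wave, less critical applications go first. The application names and criticality scores are hypothetical.

```python
from graphlib import TopologicalSorter


def migration_waves(apps: dict[str, tuple[int, set[str]]]) -> list[list[str]]:
    """Group applications into migration waves.

    `apps` maps an application name to (criticality, dependencies), where a
    higher criticality means a more business-critical application. Every
    dependency must also appear as a key in `apps`.
    """
    # graphlib expects node -> predecessors, so dependencies become ready first.
    sorter = TopologicalSorter({name: deps for name, (_, deps) in apps.items()})
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    waves = []
    while sorter.is_active():
        ready = sorter.get_ready()
        # Within a wave, migrate the least critical applications first (assumption).
        waves.append(sorted(ready, key=lambda n: (apps[n][0], n)))
        sorter.done(*ready)
    return waves
```

Consider this example:

- `reporting` (criticality 1) and `auth` (criticality 3) have no dependencies.
- `catalog` depends on `auth`.
- `checkout` depends on both `auth` and `catalog`.

The waves come out as `[['reporting', 'auth'], ['catalog'], ['checkout']]`.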

Best Practices Implemented During Migration

To ensure a smooth transition, we implemented several best practices. These included rigorous testing at each stage, automated deployment pipelines to reduce manual errors, and comprehensive monitoring to detect and address issues proactively. We also prioritized collaboration and communication throughout the process, ensuring that all stakeholders were informed and involved. Regular reviews and feedback sessions allowed us to adapt our approach as needed and ensure alignment with our overall goals.

Finally, a robust rollback plan was in place to mitigate risks and ensure a quick recovery in case of unforeseen issues.
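
A rollback plan often relies on an automated trigger. The simplest version compares the new release’s error rate with the previous release’s. The sketch below assumes request and error counts are already available, for example from the monitoring stack. The threshold values are hypothetical, not Unicas’ actual criteria.

```python
from dataclasses import dataclass


@dataclass
class ReleaseStats:
    requests: int
    errors: int

    @property
    def error_rate(self) -> float:
        return self.errors / self.requests if self.requests else 0.0


def should_roll_back(baseline: ReleaseStats, candidate: ReleaseStats,
                     max_ratio: float = 1.5, min_requests: int = 500,
                     floor: float = 0.01) -> bool:
    """Return True if the candidate release looks worse than the baseline.

    Hypothetical policy: wait for at least `min_requests` on the candidate,
    then roll back if its error rate exceeds both an absolute `floor` and
    `max_ratio` times the baseline's error rate.
    """
    if candidate.requests < min_requests:
        return False  # not enough traffic yet to judge
    rate = candidate.error_rate
    return rate > floor and rate > max_ratio * baseline.error_rate
```

The absolute floor keeps tiny error rates from triggering a rollback just because the baseline happened to be even lower.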

Challenges Encountered and Solutions

- Data Migration Complexity: Migrating large datasets to the cloud presented significant challenges. We addressed this by implementing a phased data migration strategy, utilizing data transformation tools, and employing data validation techniques to ensure data integrity.

- Application Refactoring: Some applications required significant refactoring to be compatible with a cloud-native architecture. We addressed this by adopting a microservices approach, breaking down monolithic applications into smaller, independent services.

- Security Concerns: Ensuring the security of our applications and data in the cloud was paramount. We addressed this by implementing robust security measures, including encryption, access controls, and regular security audits.

- Skill Gap: The migration required specialized skills in cloud-native technologies. We addressed this by investing in training and development for our team, and by leveraging external expertise where needed.
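
The data validation step can be illustrated with a reconciliation check. It compares source and target tables by row count and by a per-row content fingerprint. This minimal sketch assumes each row is a dict with a primary-key column `id` (a hypothetical name) and that both sides fit in memory. Large tables would instead be compared in chunks or with database-side checksums.

```python
import hashlib
import json


def row_digests(rows: list[dict], key: str = "id") -> dict:
    """Map each primary key to a SHA-256 digest of the row's canonical JSON form."""
    return {
        # sort_keys makes the digest independent of column order.
        row[key]: hashlib.sha256(json.dumps(row, sort_keys=True, default=str).encode()).hexdigest()
        for row in rows
    }


def reconcile(source: list[dict], target: list[dict], key: str = "id") -> dict:
    """Report rows that are missing, unexpected, or changed after migration."""
    src, dst = row_digests(source, key), row_digests(target, key)
    return {
        "missing": sorted(src.keys() - dst.keys()),
        "unexpected": sorted(dst.keys() - src.keys()),
        "mismatched": sorted(k for k in src.keys() & dst.keys() if src[k] != dst[k]),
    }
```

An empty result in all three lists means the target matches the source row for row.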

DevOps Practices and Automation

Unicas’ cloud-native journey hinges on a strong DevOps culture and extensive automation. This approach lets us deliver value faster, increase reliability, and maintain a highly scalable, resilient infrastructure. By automating every stage from development to deployment, we’ve streamlined our workflows and reduced the risk of human error. The DevOps practices Unicas has adopted are built around collaboration, automation, and continuous improvement.

We foster a close working relationship between development and operations teams, promoting shared responsibility and a unified approach to software delivery. This collaborative spirit is essential for the success of our cloud-native strategy.

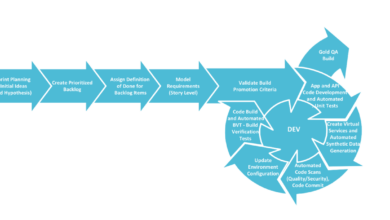

Continuous Integration and Continuous Delivery (CI/CD) Pipeline

Our CI/CD pipeline is the backbone of our automated deployment process. It encompasses automated testing, code integration, and deployment to various environments (development, staging, production). This automated pipeline allows for frequent and reliable releases, reducing lead times and improving overall efficiency. We leverage a combination of tools to achieve this, ensuring flexibility and scalability.
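
The pipeline works in two ways: each stage must pass before the next runs, and a build is promoted from development to staging to production. Both can be sketched as a fail-fast stage runner. This is a tool-agnostic illustration, not Unicas’ actual pipeline. The stage names, and the callables standing in for real build, test, and deploy steps, are hypothetical.

```python
from typing import Callable

# A stage is a name plus a callable that returns True on success.
Stage = tuple[str, Callable[[], bool]]


def run_pipeline(stages: list[Stage]) -> tuple[bool, list[str]]:
    """Run stages in order and stop at the first failure (fail fast).

    Returns whether every stage passed, plus the names of the stages that passed.
    """
    passed = []
    for name, step in stages:
        if not step():
            print(f"stage '{name}' failed; skipping the remaining stages")
            return False, passed
        passed.append(name)
    return True, passed


if __name__ == "__main__":
    # Hypothetical stages; real ones would build images, run tests, and deploy.
    pipeline = [
        ("build", lambda: True),
        ("unit-tests", lambda: True),
        ("deploy-dev", lambda: True),
        ("integration-tests", lambda: False),  # simulated failure
        ("deploy-staging", lambda: True),
        ("deploy-production", lambda: True),
    ]
    print(run_pipeline(pipeline))
```

Running the example prints the failure of `integration-tests` and then `(False, ['build', 'unit-tests', 'deploy-dev'])`, so nothing reaches staging or production.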

Infrastructure as Code (IaC) Implementation

Unicas utilizes Infrastructure as Code (IaC) to manage and provision our cloud infrastructure. This means our infrastructure is defined and managed through code, rather than manual configuration. This approach allows for consistent, repeatable deployments, simplifies infrastructure management, and enables version control for infrastructure changes. We primarily use Terraform to define and manage our infrastructure, leveraging its declarative approach to ensure consistency and ease of modification.

This allows us to easily replicate environments and manage infrastructure across multiple cloud providers.
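
Terraform’s declarative model means you describe the desired state, and the tool works out the changes needed to reach it. The toy sketch below shows only that idea. It diffs a desired set of resources against the current state and produces a create/update/delete plan. It is not how Terraform is implemented, and the resource addresses and attributes are hypothetical.

```python
def plan(desired: dict[str, dict], current: dict[str, dict]) -> dict[str, list[str]]:
    """Compute the changes that would bring `current` in line with `desired`.

    Both arguments map a resource address (e.g. "vm.web") to its attributes.
    """
    return {
        "create": sorted(desired.keys() - current.keys()),
        "update": sorted(k for k in desired.keys() & current.keys() if desired[k] != current[k]),
        "delete": sorted(current.keys() - desired.keys()),
    }
```

Planning the desired state against itself yields an empty plan. This idempotence is what makes declaratively managed environments repeatable.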

Comparison of CI/CD Tools

Choosing the right CI/CD tool is crucial for effective automation. We’ve evaluated several options and found that each has its own strengths and weaknesses. The following table compares three popular tools: Jenkins, GitLab CI, and CircleCI.

| Feature | Jenkins | GitLab CI | CircleCI |
|---|---|---|---|
| Ease of Use | Steeper learning curve, requires more configuration | Integrated with GitLab, relatively easy to use | User-friendly interface, good documentation |
| Flexibility and Customization | Highly customizable and extensible with plugins | Good customization options, but less extensive than Jenkins | Good customization options, but less extensive than Jenkins |
| Scalability | Highly scalable, can handle large and complex projects | Scalable, good for medium to large projects | Scalable, good for medium to large projects, excellent for containerized workflows |
| Cost | Open-source; you pay for the infrastructure it runs on | Included with GitLab, on both free and paid tiers | Subscription-based, with free and paid tiers |

Security Considerations in a Cloud Native Environment

Embracing a cloud-native architecture offers significant advantages in terms of scalability, agility, and efficiency. However, this shift also introduces a new set of security challenges that require careful consideration and proactive mitigation strategies. The distributed nature of cloud-native applications, coupled with the dynamic and ephemeral characteristics of containers and microservices, necessitates a robust and adaptive security approach.

Key Security Challenges in Cloud Native Architectures

The decentralized and dynamic nature of cloud-native environments presents unique security risks. Traditional perimeter-based security models are insufficient to protect the complex interplay of containers, microservices, and orchestration layers. Key challenges include securing communication between microservices, managing access control across numerous components, ensuring container image integrity, and dealing with the increased attack surface presented by the distributed nature of the architecture.

Vulnerabilities in any single component can have cascading effects throughout the entire system.

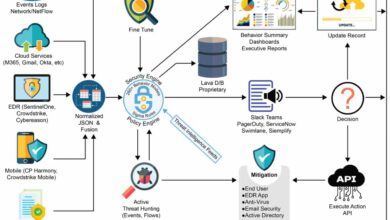

Unicas’ Security Measures for Cloud Native Environments

Unicas implements a multi-layered security strategy to address the challenges inherent in cloud-native deployments. This includes leveraging strong authentication and authorization mechanisms, implementing robust network security policies, employing continuous security monitoring and vulnerability scanning, and utilizing security automation and orchestration tools. We emphasize least privilege access, regularly update our security configurations, and incorporate security best practices throughout the software development lifecycle (SDLC).

For example, we utilize tools like Falco for runtime security analysis and Clair for vulnerability scanning of container images.
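
Image scanning is most useful when it can block a release. The sketch below shows a CI gate that fails when a scan reports findings at or above a chosen severity. The findings format, a list of dicts with `id` and `severity` keys, is a simplified, hypothetical structure. It is not the actual output schema of Clair or any other scanner, so you would first map the scanner’s report into it.

```python
# Ordered from least to most severe; a simplified, hypothetical scale.
SEVERITY_ORDER = ["negligible", "low", "medium", "high", "critical"]


def blocking_findings(findings: list[dict], threshold: str = "high") -> list[str]:
    """Return IDs of findings at or above `threshold` severity.

    Unknown severity strings raise ValueError, so unmapped scanner output fails loudly.
    """
    limit = SEVERITY_ORDER.index(threshold)
    return sorted(
        f["id"] for f in findings
        if SEVERITY_ORDER.index(f["severity"].lower()) >= limit
    )


def gate_exit_code(findings: list[dict], threshold: str = "high") -> int:
    """Exit code for a CI step: non-zero fails the job when blockers exist."""
    blockers = blocking_findings(findings, threshold)
    for vuln_id in blockers:
        print(f"blocking: {vuln_id}")
    return 1 if blockers else 0
```

A CI job would end with `sys.exit(gate_exit_code(findings))` so that any blocking finding fails the stage.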

The Role of Security Automation and Orchestration

Automation plays a critical role in maintaining security posture within a dynamic cloud-native environment. Unicas integrates automated security scanning and testing tools into its CI/CD pipeline to identify and address vulnerabilities early in the development process. Orchestration tools like Kubernetes are configured with security policies to enforce access control, network segmentation, and resource isolation. Automated response mechanisms detect and react to security threats in real time, minimizing the impact of potential breaches.

This includes automated patching, automated incident response, and automated security policy updates.
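
As an example of the network segmentation Kubernetes can enforce, the sketch below builds two NetworkPolicy objects as Python dictionaries:

- an ingress default-deny policy for a namespace
- a policy that lets only `frontend` pods reach `orders` pods on one port

The namespace, labels, and port are hypothetical. The policies take effect only if the cluster’s network plugin supports NetworkPolicy.

```python
def default_deny_ingress(namespace: str) -> dict:
    """Deny all incoming traffic to every pod in the namespace."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        # An empty podSelector selects all pods in the namespace.
        "spec": {"podSelector": {}, "policyTypes": ["Ingress"]},
    }


def allow_ingress(namespace: str, target_app: str, source_app: str, port: int) -> dict:
    """Allow traffic to `target_app` pods only from `source_app` pods on one TCP port."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"allow-{source_app}-to-{target_app}", "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": {"app": target_app}},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    "from": [{"podSelector": {"matchLabels": {"app": source_app}}}],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }
```

The default deny covers only ingress here. Denying egress as well is possible, but then DNS lookups and every permitted outbound path need explicit egress rules too.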

Best Practices for Securing Containers, Microservices, and Underlying Infrastructure

Securing the different layers of a cloud-native architecture requires a holistic approach. For containers, we utilize immutable infrastructure, regularly scan images for vulnerabilities, and employ strong access control mechanisms. Microservices communication is secured through encryption (TLS/HTTPS) and robust authentication. The underlying infrastructure is protected using network segmentation, firewalls, and intrusion detection/prevention systems. Regular security audits and penetration testing are performed to identify and address potential weaknesses.

We also employ a zero-trust security model, assuming no implicit trust and verifying every access request.
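
Least privilege at the container level can be expressed in a container’s `securityContext`. The sketch below builds a hardened container spec and a small audit check for the same settings. The field names are standard Kubernetes fields. The container name, image, user ID, and the choice of which settings to require are hypothetical.

```python
def hardened_container(name: str, image: str, uid: int = 10001) -> dict:
    """Container spec that drops privileges the workload should not need."""
    return {
        "name": name,
        "image": image,
        "securityContext": {
            "runAsNonRoot": True,             # refuse to start as root
            "runAsUser": uid,                 # hypothetical non-root UID
            "allowPrivilegeEscalation": False,
            "readOnlyRootFilesystem": True,   # keeps the container immutable at runtime
            "capabilities": {"drop": ["ALL"]},
            "seccompProfile": {"type": "RuntimeDefault"},
        },
    }


# Hypothetical policy: settings every container must have.
REQUIRED = {
    "runAsNonRoot": True,
    "allowPrivilegeEscalation": False,
    "readOnlyRootFilesystem": True,
}


def audit(container: dict) -> list[str]:
    """List the hardening settings a container spec is missing."""
    ctx = container.get("securityContext", {})
    problems = [f"{k} should be {v}" for k, v in REQUIRED.items() if ctx.get(k) != v]
    if "ALL" not in ctx.get("capabilities", {}).get("drop", []):
        problems.append("capabilities.drop should include ALL")
    return problems
```

A check like `audit` can run in CI against rendered manifests. In-cluster admission controls can enforce the same rules at deploy time.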

Monitoring and Observability

At Unicas, a robust monitoring and observability strategy is crucial for the smooth operation and continuous improvement of our cloud-native applications. We combine several tools and techniques to gain comprehensive insight into application performance, resource utilization, and potential issues, which enables proactive problem resolution and informed decision-making. This approach is essential for maintaining the high availability and scalability our cloud-native infrastructure demands. Our monitoring and observability solution provides a holistic view of the entire system, from individual microservices to the underlying infrastructure.

This enables us to quickly identify bottlenecks, diagnose failures, and optimize resource allocation, leading to improved application performance and reduced operational costs. The data collected is used not only for reactive problem-solving but also for proactive capacity planning and system optimization.

Unicas’ Monitoring Toolset

Unicas employs a combination of open-source and commercial tools to achieve comprehensive monitoring and observability. Our core monitoring stack includes Prometheus for metrics collection, Grafana for visualization and dashboarding, Jaeger for distributed tracing, and Elasticsearch, Logstash, and Kibana (ELK stack) for log management and analysis. These tools are integrated to provide a unified view of application performance and system health.

We also utilize cloud-provider-specific monitoring services for infrastructure-level insights. This multi-faceted approach allows us to capture a wide range of data points, offering granular visibility into our applications and infrastructure.

Insights into Application Performance, Resource Utilization, and Potential Issues

The integrated monitoring system provides real-time visibility into key performance indicators (KPIs) such as request latency, error rates, CPU utilization, memory usage, and network traffic. Prometheus scrapes metrics from our applications and infrastructure components, providing a continuous stream of data. Jaeger traces requests across multiple services, helping us identify performance bottlenecks and pinpoint the root cause of errors.

The ELK stack aggregates and analyzes logs from various sources, providing valuable insights into application behavior and potential issues. By correlating metrics, traces, and logs, we gain a deep understanding of application performance and can proactively identify and address potential problems before they impact users. For example, a sudden spike in request latency, as observed in Grafana, might be correlated with high CPU utilization detected by Prometheus, and further investigated through detailed logs in Kibana, ultimately leading to the identification of a specific microservice requiring scaling or optimization.
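
The latency/CPU correlation described above can be sketched as a simple check over two time-aligned series. This assumes the samples have already been exported from Prometheus at the same timestamps. The “2× the window median” spike rule and the 80% CPU threshold are hypothetical choices.

```python
from statistics import median


def correlated_spikes(latency_ms: list[float], cpu_pct: list[float],
                      spike_factor: float = 2.0, cpu_threshold: float = 80.0) -> list[int]:
    """Return sample indices where latency spikes while CPU is also saturated.

    A latency sample counts as a spike if it exceeds `spike_factor` times the
    median latency of the whole window.
    """
    if len(latency_ms) != len(cpu_pct):
        raise ValueError("series must be aligned sample-for-sample")
    baseline = median(latency_ms)
    return [
        i for i, (lat, cpu) in enumerate(zip(latency_ms, cpu_pct))
        if lat > spike_factor * baseline and cpu > cpu_threshold
    ]
```

Take latencies of `[100, 110, 95, 400, 105, 380]` ms and CPU of `[40, 45, 42, 92, 50, 30]` %. Only index 3 is returned. The spike at index 5 happens at low CPU, so the logs for that window would be the next place to look.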

Monitoring Dashboard Visualization

Our Grafana dashboard is designed to give a clear, concise overview of the system’s health and performance. It is divided into several sections, each focusing on a specific aspect of the system. A large central panel displays a world map showing the geographic distribution of our user base and the corresponding response times, which lets us quickly spot regional performance issues.

Surrounding this are smaller panels displaying key metrics such as overall request latency (displayed as a line graph over time, color-coded by application), error rates (shown as a bar chart, categorized by error type), and CPU/memory utilization for critical services (presented as gauges, with color-coded thresholds indicating warning and critical levels). Alerts are triggered based on pre-defined thresholds for these metrics, and are displayed prominently as flashing indicators with accompanying pop-up notifications providing context and severity.
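
Threshold alerts are usually made less noisy by requiring a condition to hold for several consecutive evaluations before firing. This is similar in spirit to the `for` clause in Prometheus alerting rules. The sketch below does two things:

- it classifies a metric into ok, warning, or critical levels
- it reports a level only once every sample in the last few evaluations has reached it

The thresholds and the three-sample window are hypothetical.

```python
def level(value: float, warning: float, critical: float) -> str:
    """Map a metric value to a severity level (higher values are worse)."""
    if value >= critical:
        return "critical"
    if value >= warning:
        return "warning"
    return "ok"


def firing_level(samples: list[float], warning: float, critical: float,
                 for_samples: int = 3) -> str:
    """Return the highest level that each of the last `for_samples` samples reached."""
    recent = samples[-for_samples:]
    if len(recent) < for_samples:
        return "ok"  # not enough history to fire anything yet
    levels = {level(v, warning, critical) for v in recent}
    if levels == {"critical"}:
        return "critical"
    if levels <= {"warning", "critical"}:
        return "warning"
    return "ok"
```

With a warning threshold of 80 and a critical threshold of 95, a single sample above 95 does not page anyone on its own. Three consecutive ones do.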

The dashboard also includes panels displaying the number of active users, the current throughput, and the queue length for critical services. Each panel uses clear and intuitive visualizations, ensuring that even non-technical personnel can quickly understand the system’s status.

Comparison of Monitoring Approaches in a Cloud-Native Setting

Different approaches to monitoring and observability exist in cloud-native environments. A centralized approach, like the one employed by Unicas, offers a unified view of the entire system, simplifying troubleshooting and improving overall visibility. A decentralized approach, where each team manages its own monitoring tools, may offer greater autonomy but can lead to data silos and inconsistencies. Another key difference lies in the level of automation: Unicas utilizes automated alerting and scaling mechanisms, whereas less automated approaches may require more manual intervention.

Finally, the choice of tools can significantly impact the effectiveness of monitoring. Unicas’ selection of open-source and commercial tools ensures a balance between cost-effectiveness, flexibility, and scalability. The selection process involved evaluating factors such as scalability, ease of integration, cost, and community support.

Cost Optimization Strategies

Unicas’ cloud-native journey isn’t just about agility and scalability; it’s also about responsible resource management and cost efficiency. Uncontrolled cloud spending can quickly negate the benefits of a cloud-native architecture, so a robust cost optimization strategy is integral to our approach. This strategy isn’t a one-time initiative but a continuous process of monitoring, analyzing, and adapting to evolving needs. We take a multi-pronged approach: right-sizing resources, using cost-effective services, and automating to minimize waste.

This involves a combination of proactive measures to prevent unnecessary expenditure and reactive measures to identify and address cost anomalies. Our aim is to maximize value from our cloud investment while maintaining the performance and reliability our applications demand.

Right-Sizing Resources

Right-sizing involves ensuring that our applications and services only consume the resources they actually need. Over-provisioning, while offering a safety net, often leads to significant cost overruns. We regularly analyze resource utilization metrics – CPU, memory, storage, and network – to identify instances where resources are underutilized. This analysis is performed using a combination of automated tools and manual reviews by our cloud engineers.

For example, we’ve identified several database instances that were consistently operating below 30% capacity. By downsizing these instances to a more appropriate size, we realized a 40% reduction in monthly database costs without impacting application performance. This process is continuously refined based on the observed patterns and trends.
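
A right-sizing recommendation of this kind can be sketched in two steps. First, take a high percentile of observed utilization. Then pick the smallest size that keeps that percentile under a target utilization. The size catalog, the 95th percentile, and the 70% target are hypothetical. Real analyses also weigh memory, I/O, and burst behavior.

```python
import math


def p95(values: list[float]) -> float:
    """95th percentile using the nearest-rank method."""
    if not values:
        raise ValueError("need at least one sample")
    ordered = sorted(values)
    rank = math.ceil(95 * len(ordered) / 100)
    return ordered[rank - 1]


def recommend_size(cpu_used_cores: list[float], sizes: dict[str, float],
                   target_utilization: float = 0.7) -> str:
    """Pick the smallest size whose capacity keeps p95 usage under the target.

    `sizes` maps a hypothetical size name to its vCPU capacity.
    """
    peak = p95(cpu_used_cores)
    for name, cores in sorted(sizes.items(), key=lambda item: item[1]):
        if peak <= target_utilization * cores:
            return name
    return max(sizes, key=sizes.get)  # nothing fits: keep the largest size
```

Using a percentile rather than the maximum ignores rare one-off peaks. The headroom target leaves room for growth and short bursts.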

Leveraging Cost-Effective Services

Cloud providers offer a wide array of services, each with varying pricing models. Unicas actively explores and adopts cost-effective alternatives whenever possible. For instance, we’ve transitioned from premium storage tiers to more cost-effective options where data access patterns allow. Similarly, we’ve migrated several workloads to spot instances, which offer significant cost savings compared to on-demand instances, but require careful consideration of workload characteristics and fault tolerance mechanisms.

The decision to use spot instances is based on a rigorous analysis of workload requirements and potential disruptions. Our team carefully monitors the success rate of these migrations and continuously refines our strategies.
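
Whether spot capacity pays off for a given workload comes down to the discount versus the cost of interruptions. Below is a back-of-the-envelope model. It assumes each interruption forces a fixed amount of work to be redone, and it ignores interruptions during the re-run itself. All prices and rates are hypothetical inputs, not Unicas figures.

```python
def expected_costs(hours: float, on_demand_rate: float, spot_rate: float,
                   interruptions_per_hour: float,
                   redo_hours_per_interruption: float) -> dict[str, float]:
    """Compare on-demand cost with the expected spot cost including rework.

    Simplified model: expected interruptions = hours * interruptions_per_hour,
    and each one adds `redo_hours_per_interruption` of extra runtime.
    """
    on_demand = hours * on_demand_rate
    spot_hours = hours + hours * interruptions_per_hour * redo_hours_per_interruption
    spot = spot_hours * spot_rate
    return {"on_demand": on_demand, "spot": spot, "savings": on_demand - spot}
```

For example, take a 100-hour job at 1.00 per hour on demand versus 0.30 per hour on spot, with 0.05 interruptions per hour and 0.5 hours of rework each. It needs 102.5 spot hours, for an expected cost of 30.75 against 100. Savings shrink as interruptions or rework grow, which is why fault tolerance matters for spot workloads.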

Automating Cost Management

Automation plays a crucial role in our cost optimization strategy. We’ve implemented automated processes for tasks like shutting down unused development and testing environments outside of business hours, automatically scaling resources based on real-time demand, and generating regular cost reports. These automated processes significantly reduce manual effort and human error, leading to more accurate cost tracking and better resource management.

This automation is coupled with alert systems that notify our team of any unusual cost spikes or resource consumption patterns, allowing for prompt investigation and remediation.
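
The off-hours shutdown can be driven by a small schedule check. A scheduled job, for example a cron job, evaluates it before scaling non-production environments up or down. The business hours (weekdays, 08:00 to 19:00) are hypothetical. The actual scale-up/scale-down call depends on the provider and is left out.

```python
from datetime import datetime, time, timezone, tzinfo

BUSINESS_START = time(8, 0)  # hypothetical business hours
BUSINESS_END = time(19, 0)


def should_run(now: datetime, tz: tzinfo = timezone.utc) -> bool:
    """Return True if a dev/test environment should be up at `now`.

    `now` must be timezone-aware; it is converted to the team's local time `tz`.
    """
    if now.tzinfo is None:
        raise ValueError("now must be timezone-aware")
    local = now.astimezone(tz)
    is_weekday = local.weekday() < 5  # Monday=0 .. Friday=4
    return is_weekday and BUSINESS_START <= local.time() < BUSINESS_END
```

A job running every few minutes could call `should_run(datetime.now(timezone.utc), ZoneInfo("Europe/Berlin"))`, using a hypothetical team time zone, and scale the environment to match. Note that `zoneinfo` needs the system time-zone database or the `tzdata` package.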

Cost Monitoring and Management Tools and Techniques

Effective cost management requires robust monitoring and reporting. We utilize a combination of tools and techniques to achieve this:

- Cloud provider’s native cost management tools: We leverage the built-in cost management dashboards and reporting features provided by our cloud provider (AWS, Azure, or GCP). These tools provide granular visibility into spending patterns across different services and resources.

- Third-party cost management platforms: We supplement the cloud provider’s tools with third-party platforms that offer advanced analytics, forecasting, and anomaly detection capabilities. These platforms help us identify cost optimization opportunities that might be missed using native tools.

- Custom scripts and dashboards: We’ve developed custom scripts and dashboards to monitor key metrics and generate tailored reports specific to our needs. This allows us to track costs at a more granular level and identify areas for improvement that might not be apparent using standard reporting tools.

- Regular cost reviews and analysis: We conduct regular cost reviews and analyses, examining spending trends, identifying cost drivers, and developing mitigation strategies. These reviews involve both automated reports and manual analysis by our finance and engineering teams.
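
Custom cost scripts often start with simple anomaly detection on daily spend. The sketch below flags days whose spend exceeds the trailing mean by more than a chosen number of standard deviations. The 14-day window and 3σ threshold are hypothetical. The spend figures would come from the provider’s billing export.

```python
from statistics import mean, stdev


def cost_anomalies(daily_spend: list[float], window: int = 14, z: float = 3.0) -> list[int]:
    """Return indices of days whose spend is unusually high versus the trailing window."""
    flagged = []
    for i in range(window, len(daily_spend)):
        history = daily_spend[i - window:i]
        mu, sigma = mean(history), stdev(history)
        # A perfectly flat history has zero spread, so any increase counts as anomalous.
        if (sigma == 0 and daily_spend[i] > mu) or (sigma > 0 and daily_spend[i] > mu + z * sigma):
            flagged.append(i)
    return flagged
```

Flagged indices can feed the same alerting channel used for cost spikes. A reviewer then checks whether the jump reflects real demand or waste.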

Final Recap

Unicas’s cloud-native journey serves as a compelling case study for organizations contemplating similar transformations. The insights shared here—from architectural design and migration strategies to DevOps practices and cost optimization—offer valuable lessons for anyone navigating the complexities of cloud-native adoption. It’s a story of innovation, resilience, and the transformative power of embracing cutting-edge technologies to achieve greater efficiency, scalability, and security.

The journey, while challenging, has resulted in a more robust, adaptable, and cost-effective system, setting the stage for future growth and innovation. Their success provides a roadmap for others seeking to modernize their infrastructure and unlock the full potential of the cloud.

FAQ Insights

What specific containerization technology did Unicas use?

Unicas uses Docker for containerization. Kubernetes is the orchestration layer that deploys, scales, and manages those containers.

How did Unicas handle data migration during the cloud-native transition?

Unicas used a phased data migration strategy. It relied on data transformation tools and on data validation techniques to preserve data integrity.

What were the biggest unexpected challenges during the migration?

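The article does not single out unexpected challenges. The main challenges it describes, and how each was addressed, are:

- Data migration complexity, addressed with phased migration and validation.
- Application refactoring, addressed by breaking monoliths into microservices.
- Security concerns, addressed with encryption, access controls, and audits.
- A cloud-native skill gap, addressed through training plus external expertise.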

What is Unicas’s long-term strategy for its cloud-native environment?

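The article does not lay out a long-term roadmap. It does treat optimization as ongoing (the final migration phase), cost management as a continuous process rather than a one-time initiative, and continuous improvement as a core DevOps principle. This suggests continued refinement of the platform.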