Virtualizing Middle Tier and Back End Applications

Virtualizing middle tier and back end applications is revolutionizing how we build and deploy software. Imagine a world where scaling your application is as simple as clicking a button, where downtime is a distant memory, and where resource management is automated and efficient. That’s the power of virtualization, and this post dives deep into how it transforms the heart of your application architecture.

We’ll explore various virtualization technologies, architectural considerations, and best practices for a seamless transition.

From containerization with Docker and Kubernetes to the robust power of hypervisors like VMware vSphere and Microsoft Hyper-V, we’ll examine the different approaches to virtualizing your middle tier and back end. We’ll also discuss the crucial aspects of security, performance optimization, and efficient resource allocation. This isn’t just theory; we’ll look at real-world examples and case studies to show you the tangible benefits of this transformative technology.

Defining Virtualization in the Context of Middle-Tier and Back-End Applications

Virtualization, in the context of middle-tier and back-end applications, refers to the creation of virtual versions of physical computing resources, such as servers, storage, and networks. This allows multiple applications to run independently on a single physical machine, effectively isolating them from each other and improving resource utilization. This is particularly beneficial for the often demanding and complex environments of middle-tier (application servers, APIs, and business-logic services) and back-end (databases, storage, and message queues) systems. Virtualization offers several key advantages for these application tiers.

It significantly reduces hardware costs by consolidating multiple applications onto fewer physical servers. This consolidation also simplifies management and maintenance, leading to reduced operational expenses. Furthermore, virtualization enhances scalability and flexibility, allowing for easy addition or removal of resources as needed, and facilitating faster deployment of new applications or updates. The improved resource isolation provided by virtualization also enhances security and availability, minimizing the impact of failures on other applications.

Benefits of Virtualizing Middle-Tier and Back-End Applications

The benefits extend beyond cost savings and simplified management. Improved resource utilization is a significant advantage, as virtual machines (VMs) can be dynamically allocated resources based on demand, leading to optimized performance and reduced wasted capacity. High availability and disaster recovery are also greatly enhanced. Creating virtual copies of production environments allows for quick failover in case of hardware or software failures, minimizing downtime and ensuring business continuity.

Finally, the flexibility of virtualization facilitates testing and development, allowing developers to easily create and manage multiple test environments without requiring dedicated physical hardware.

Types of Virtualization Technologies

Several virtualization technologies are applicable to middle-tier and back-end applications. These include:

- Hypervisors: These are software layers that create and manage VMs. Type 1 hypervisors (bare-metal hypervisors like VMware ESXi or Microsoft Hyper-V) run directly on the hardware, while Type 2 hypervisors (hosted hypervisors like Oracle VirtualBox or VMware Workstation) run on top of an existing operating system. The choice between Type 1 and Type 2 depends on factors like performance requirements and management complexity.

- Containerization: Container technologies such as Docker, typically orchestrated with Kubernetes, provide a lighter-weight form of virtualization. Containers share the host operating system’s kernel, resulting in less overhead compared to VMs. This makes them ideal for microservices architectures and applications requiring high density and rapid deployment.

- Serverless Computing: This approach abstracts away the management of servers entirely. Developers focus solely on code, while the cloud provider handles the underlying infrastructure. This is particularly suitable for event-driven applications and tasks that don’t require persistent resources.

Performance Implications of Virtualized versus Non-Virtualized Architectures

While virtualization offers numerous advantages, it’s crucial to understand its performance implications. A well-designed and implemented virtualized environment can deliver performance close to that of a non-virtualized setup. However, the overhead introduced by the hypervisor or containerization technology can impact performance, especially under heavy load. Careful planning, resource allocation, and optimization are necessary to mitigate this overhead.

Factors such as the hypervisor type, VM configuration, and network performance play a significant role in determining the overall performance. For example, using a high-performance hypervisor and adequately provisioning resources for each VM can minimize performance bottlenecks. Conversely, over-virtualization (running too many VMs on a single physical server) can lead to performance degradation.
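To make "over-virtualization" a little more concrete, here is a minimal sketch that computes how heavily a host is subscribed. The `VM` class, the function names, and the default limits (4 vCPUs per physical core, no memory overcommit) are illustrative assumptions, not vendor recommendations; suitable limits depend on your workloads and hypervisor.

```python
from dataclasses import dataclass


@dataclass
class VM:
    name: str
    vcpus: int
    memory_gb: float


def overcommit_report(host_cores, host_memory_gb, hypervisor_reserved_gb, vms):
    """Compare what the VMs have been promised with what the host physically has."""
    total_vcpus = sum(vm.vcpus for vm in vms)
    total_memory_gb = sum(vm.memory_gb for vm in vms)
    # Memory the hypervisor keeps for itself is not available to guests.
    usable_memory_gb = host_memory_gb - hypervisor_reserved_gb
    return {
        "vcpu_ratio": total_vcpus / host_cores,
        "memory_ratio": total_memory_gb / usable_memory_gb,
    }


def is_overloaded(report, max_vcpu_ratio=4.0, max_memory_ratio=1.0):
    """Flag the host when either ratio exceeds its (assumed) limit."""
    return (report["vcpu_ratio"] > max_vcpu_ratio
            or report["memory_ratio"] > max_memory_ratio)


if __name__ == "__main__":
    vms = [VM("app-1", 8, 32), VM("app-2", 8, 32), VM("db-1", 8, 32)]
    report = overcommit_report(host_cores=16, host_memory_gb=128,
                               hypervisor_reserved_gb=8, vms=vms)
    print(report, is_overloaded(report))
```

A ratio above 1.0 is not automatically a problem for CPU, since VMs rarely peak at the same time, but memory overcommit tends to hurt much sooner.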

Hardware Requirements Comparison

| Feature | Virtualized Setup | Non-Virtualized Setup |
|---|---|---|
| CPU | Higher core count recommended for multiple VMs | Core count depends on individual application requirements |
| RAM | Larger amount required to accommodate multiple VMs and hypervisor | RAM allocation based on individual application needs |
| Storage | Larger storage capacity needed for VM images and data | Storage capacity determined by individual application data |
| Network | High-bandwidth network connection essential for VM communication | Network bandwidth requirements depend on individual application needs |

Virtualization Technologies for Middle-Tier and Back-End Applications

Virtualizing middle-tier and back-end applications offers significant advantages in terms of scalability, efficiency, and cost-effectiveness. By decoupling applications from the underlying hardware, organizations gain flexibility and resilience. Several key technologies play crucial roles in achieving this.

Containerization Technologies

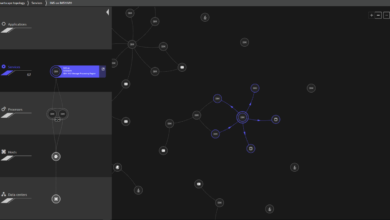

Containerization, using technologies like Docker and Kubernetes, has revolutionized application deployment and management. Docker creates lightweight, portable containers that package an application and its dependencies, ensuring consistent execution across different environments. This eliminates the “it works on my machine” problem, a common source of frustration in software development. Kubernetes, on the other hand, orchestrates the deployment, scaling, and management of containerized applications across a cluster of machines, automating many complex tasks and enhancing reliability.

For example, a large e-commerce platform might use Docker to containerize individual microservices (e.g., user authentication, product catalog, shopping cart) and Kubernetes to manage the deployment and scaling of these services based on real-time demand, ensuring high availability during peak shopping seasons. The benefits include faster deployment cycles, improved resource utilization, and easier scaling.
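Scaling "based on real-time demand" usually comes down to a simple proportional rule. The sketch below follows the formula documented for the Kubernetes Horizontal Pod Autoscaler (desired replicas = ceil(current replicas × current metric / target metric)), with a tolerance band to avoid flapping. The function name, the replica bounds, and the sample numbers are illustrative assumptions.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Proportional scaling rule in the style of the Kubernetes HPA."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Never go below or above the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # Four shopping-cart pods averaging 90% CPU against a 60% target.
    print(desired_replicas(4, current_metric=90, target_metric=60))  # 6
```

In a real cluster you would declare the target in a HorizontalPodAutoscaler resource rather than compute it yourself; the code only shows the arithmetic behind the decision.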

Hypervisors for Back-End Application Virtualization

Hypervisors, such as VMware vSphere and Microsoft Hyper-V, provide a layer of abstraction between the physical hardware and virtual machines (VMs). This allows multiple virtual machines, each running a separate back-end application or database, to share the same physical server resources. This approach is particularly useful for consolidating workloads and optimizing hardware utilization. For instance, a financial institution might use vSphere to virtualize its core banking applications, databases, and transaction processing systems, consolidating them onto fewer physical servers while maintaining isolation and security between different components.

This improves resource utilization and reduces the overall hardware footprint, leading to cost savings.

Serverless Computing Platforms

Serverless computing platforms, such as AWS Lambda, Azure Functions, and Google Cloud Functions, represent a further evolution of virtualization. Instead of managing entire virtual machines, developers deploy individual functions or code snippets that execute only when triggered by an event. The platform automatically manages the underlying infrastructure, scaling resources up or down based on demand. This approach is particularly suitable for event-driven architectures and microservices, significantly reducing operational overhead and improving scalability.

A social media platform, for example, might use AWS Lambda to process user uploads, resizing images and generating thumbnails only when a new image is uploaded. This eliminates the need to maintain constantly running servers for this task, reducing costs and improving efficiency.
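As a rough sketch of what such a function might look like, the handler below reads the bucket and object key from an S3 upload notification and works out the thumbnail dimensions. The 256×256 bound, the `thumbnails/` key prefix, and the helper names are hypothetical. The actual download, resize, and upload steps are left as a comment because they depend on the image library and AWS SDK you choose.

```python
from urllib.parse import unquote_plus

THUMBNAIL_MAX = (256, 256)  # assumed bounding box for thumbnails


def thumbnail_size(width, height, max_size=THUMBNAIL_MAX):
    """Scale dimensions to fit inside max_size, keeping the aspect ratio."""
    scale = min(max_size[0] / width, max_size[1] / height, 1.0)
    return max(1, round(width * scale)), max(1, round(height * scale))


def uploaded_objects(event):
    """Extract (bucket, key) pairs from an S3 event notification."""
    return [
        # Object keys arrive URL-encoded in S3 events (spaces become '+').
        (rec["s3"]["bucket"]["name"], unquote_plus(rec["s3"]["object"]["key"]))
        for rec in event.get("Records", [])
    ]


def handler(event, context):
    """Lambda-style entry point: invoked once per upload notification."""
    results = []
    for bucket, key in uploaded_objects(event):
        # A real function would download the image here, resize it to
        # thumbnail_size(...), and upload the result under the new key.
        results.append({"bucket": bucket, "source": key,
                        "thumbnail": f"thumbnails/{key}"})
    return results
```

Because the function runs only when an upload event arrives, there is no idle server to pay for between uploads.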

Security Considerations in Virtualized Environments

Security is paramount when virtualizing middle-tier and back-end applications. Effective security measures must address both the virtualization layer and the applications themselves. This includes implementing strong access controls, network segmentation, regular security patching, and robust monitoring to detect and respond to threats. Vulnerabilities in the hypervisor or container runtime environment can expose the entire virtualized infrastructure, while vulnerabilities within individual applications can lead to data breaches or service disruptions.

A multi-layered security approach, combining virtualization-specific security tools with traditional security practices, is essential to protect virtualized applications and data. Regular security audits and penetration testing are vital to identify and mitigate potential risks.

Architectural Considerations for Virtualized Middle-Tier and Back-End Systems

Virtualizing middle-tier and back-end applications offers significant advantages, including scalability, flexibility, and cost savings. However, careful architectural planning is crucial to avoid performance bottlenecks and ensure the stability and reliability of the system. This section delves into key architectural considerations for successfully virtualizing these critical application layers.

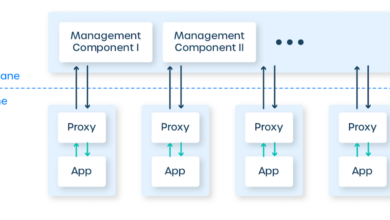

Sample Architecture Diagram for a Virtualized Three-Tier Application

A well-designed architecture is paramount for a successful virtualization strategy. The following illustrates a typical three-tier application, showing how virtualization can be implemented. This example uses virtual machines (VMs) to isolate and manage each tier.

| Tier | Description | Virtualization Implementation |
|---|---|---|
| Presentation Tier | Handles user interaction (web browsers, mobile apps). | Can be a load-balanced cluster of VMs running web servers. This tier is often less resource-intensive, so its VMs can usually be sized smaller. |
| Middle Tier (Application Tier) | Processes business logic and interacts with the database. | Multiple VMs, potentially with different roles (e.g., dedicated VMs for specific application modules), managed by a load balancer to distribute requests. |
| Data Tier (Back-End) | Houses the database management system (DBMS) and data storage. | Can be a physical server or a highly available cluster of VMs with shared storage (e.g., using SAN or NAS). Database performance is critical here, so careful resource allocation is essential. |

Potential Bottlenecks in a Virtualized Middle-Tier and Back-End Architecture

Several potential bottlenecks can arise in a virtualized environment. Understanding these is key to designing a robust and efficient system.

- Hypervisor Overhead: The hypervisor itself consumes system resources. Over-virtualization (too many VMs on a single physical host) can lead to performance degradation.

- Network I/O: Network latency and bandwidth limitations can significantly impact performance, especially in applications with high data transfer requirements.

- Storage I/O: Slow storage access can severely bottleneck database operations. Shared storage performance is crucial in a virtualized environment.

- Resource Contention: VMs competing for CPU, memory, and I/O resources on the same physical host can lead to performance degradation.

- Virtual Network Configuration: Improperly configured virtual networks can introduce latency and affect communication between VMs.

Implementing Load Balancing Across Virtual Machines in a Middle-Tier Environment

Load balancing is essential for distributing incoming requests across multiple middle-tier VMs, preventing overload and ensuring high availability. Several methods exist:

- Hardware Load Balancers: Dedicated appliances that distribute traffic based on various algorithms (round-robin, least connections, etc.). They provide high throughput and resilience.

- Software Load Balancers: Software solutions running on a dedicated VM or distributed across multiple VMs. They offer flexibility but might require more management.

- Cloud-Based Load Balancers: Offered by cloud providers as a managed service, simplifying deployment and management.

For example, a common approach involves using a hardware load balancer to distribute incoming HTTP requests to a pool of VMs running application servers. The load balancer monitors the health of each VM and redirects traffic accordingly.
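The two algorithms mentioned above, round-robin and least connections, are easy to sketch. The code below is a simplified in-memory model, not how any particular load balancer is implemented; the `Backend` class and the VM names are made up for illustration, and a real balancer would update the `healthy` flag from periodic health checks.

```python
class Backend:
    """One middle-tier VM as the load balancer sees it."""

    def __init__(self, name):
        self.name = name
        self.healthy = True          # would be updated by health checks
        self.active_connections = 0  # would be updated per request


class RoundRobinBalancer:
    """Hand out backends in order, skipping any that are unhealthy."""

    def __init__(self, backends):
        self.backends = list(backends)
        self._next = 0

    def pick(self):
        # Try each backend at most once per call.
        for _ in range(len(self.backends)):
            backend = self.backends[self._next]
            self._next = (self._next + 1) % len(self.backends)
            if backend.healthy:
                return backend
        raise RuntimeError("no healthy backends available")


def pick_least_connections(backends):
    """Choose the healthy backend with the fewest active connections."""
    healthy = [b for b in backends if b.healthy]
    if not healthy:
        raise RuntimeError("no healthy backends available")
    return min(healthy, key=lambda b: b.active_connections)


if __name__ == "__main__":
    pool = [Backend("app-1"), Backend("app-2"), Backend("app-3")]
    lb = RoundRobinBalancer(pool)
    pool[1].healthy = False  # simulate a failed health check
    print([lb.pick().name for _ in range(4)])
    # ['app-1', 'app-3', 'app-1', 'app-3']
```

Round-robin works well when requests cost roughly the same; least connections tends to cope better when some requests run much longer than others.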

Impact of Virtualization on Database Performance in Back-End Systems

Virtualizing database servers requires careful consideration of performance implications. While virtualization offers benefits like scalability and high availability, it can also introduce performance overhead.

- Storage Performance: The speed of the underlying storage (SAN, NAS, local disks) directly affects database performance. Using high-performance storage and optimizing storage I/O is crucial.

- Resource Allocation: Sufficient CPU, memory, and I/O resources must be allocated to the database VM(s) to avoid contention and performance bottlenecks. Over-committing resources can lead to performance degradation.

- High Availability and Disaster Recovery: Virtualization simplifies implementing high availability and disaster recovery for database systems through features like clustering and live migration.

- Database Optimization: Database tuning and optimization techniques remain essential, regardless of whether the database is virtualized or not. Proper indexing, query optimization, and database design are crucial.

For instance, a poorly configured virtualized database server might experience slow query response times due to insufficient RAM allocation, leading to excessive paging and impacting overall application performance. Conversely, a well-planned and optimized virtualized database setup can match a physical server for most workloads while adding the flexibility and scalability that virtualization offers.

Deployment and Management of Virtualized Middle-Tier and Back-End Applications

Successfully deploying and managing virtualized middle-tier and back-end applications requires a well-defined strategy encompassing planning, execution, and ongoing monitoring. This involves careful consideration of resource allocation, application dependencies, and robust disaster recovery mechanisms. A phased approach, coupled with automation wherever possible, is key to minimizing downtime and maximizing efficiency.

Efficient deployment and management of virtualized middle-tier and back-end applications hinges on several key aspects. Proper planning, leveraging automation tools, and establishing comprehensive monitoring systems are crucial for ensuring application stability, scalability, and high availability. This section delves into best practices, migration strategies, performance monitoring techniques, and high-availability checklists, providing a practical guide for successful implementation.

Best Practices for Deploying and Managing Virtualized Middle-Tier and Back-End Applications

Implementing best practices significantly improves the reliability and efficiency of virtualized environments. These practices encompass various stages, from initial planning to ongoing maintenance, and aim to minimize risks and optimize resource utilization.

- Automated Deployment: Utilizing tools like Ansible, Chef, or Puppet automates the deployment process, reducing manual errors and ensuring consistency across environments. This allows for faster deployments and rollbacks, improving agility.

- Resource Optimization: Careful allocation of virtual machine (VM) resources, including CPU, memory, and storage, is critical. Over-provisioning wastes resources, while under-provisioning can lead to performance bottlenecks. Regular monitoring and adjustment are necessary to maintain optimal performance.

- Version Control and Configuration Management: Maintaining a version history of all application configurations and code allows for easy rollback in case of errors or unexpected issues. Tools like Git are essential for managing code, while configuration management tools track changes to the application environment.

- Regular Patching and Updates: Keeping the operating system, applications, and virtualization platform up-to-date with security patches is vital for mitigating vulnerabilities. A well-defined patching schedule and automated patching processes are crucial.

- Comprehensive Monitoring: Real-time monitoring of key performance indicators (KPIs) such as CPU utilization, memory usage, network traffic, and application response times enables proactive identification and resolution of potential issues. This includes setting up alerts for critical thresholds.
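Alerting on "critical thresholds" can be sketched in a few lines. The example below fires only when a metric stays above its limit for several consecutive samples, which avoids alerts on brief spikes. The metric names, limits, and the three-sample window are assumptions chosen for illustration.

```python
# Assumed alert limits; tune these to your own service levels.
THRESHOLDS = {
    "cpu_percent": 85.0,
    "memory_percent": 90.0,
    "response_ms": 500.0,
}


def breached(samples, thresholds=THRESHOLDS, sustained=3):
    """Return metrics whose last `sustained` samples all exceed their limit."""
    alerts = []
    for metric, limit in thresholds.items():
        recent = samples.get(metric, [])[-sustained:]
        # Require a full window so a short history cannot trigger an alert.
        if len(recent) == sustained and all(value > limit for value in recent):
            alerts.append(metric)
    return alerts


if __name__ == "__main__":
    samples = {
        "cpu_percent": [70, 88, 91, 95],
        "memory_percent": [92, 80, 93, 95],
        "response_ms": [100, 120],
    }
    print(breached(samples))  # ['cpu_percent']
```

Monitoring platforms offer this kind of rule out of the box; the sketch just shows the logic you would be configuring.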

Step-by-Step Guide for Migrating a Legacy Application to a Virtualized Environment

Migrating legacy applications to a virtualized environment can be a complex process, requiring careful planning and execution. A phased approach minimizes disruption and allows for thorough testing at each stage.

- Assessment and Planning: Thoroughly assess the legacy application’s architecture, dependencies, and performance characteristics. Develop a detailed migration plan, including timelines, resources, and potential risks.

- Environment Setup: Create a virtualized environment that mirrors the production environment as closely as possible. This includes configuring virtual networks, storage, and security settings.

- Application Virtualization: Virtualize the legacy application, either through full virtualization or paravirtualization, depending on the application’s requirements and compatibility.

- Testing and Validation: Thoroughly test the virtualized application in the new environment, ensuring functionality, performance, and security are maintained. This includes performance testing and security vulnerability assessments.

- Deployment and Cutover: Deploy the virtualized application to the production environment, following a phased rollout approach to minimize disruption. Monitor the application closely during and after the cutover.

Strategies for Monitoring and Maintaining the Performance of Virtualized Applications

Continuous monitoring and performance optimization are crucial for maintaining the stability and responsiveness of virtualized applications. A proactive approach allows for early detection and resolution of performance bottlenecks.

- Real-time Monitoring Tools: Utilize tools like VMware vCenter, Microsoft System Center, or Nagios to monitor key performance indicators (KPIs) in real-time. These tools provide dashboards and alerts for critical thresholds.

- Performance Analysis: Regularly analyze performance data to identify trends and potential bottlenecks. This involves examining CPU utilization, memory usage, disk I/O, and network traffic.

- Capacity Planning: Proactively plan for future capacity needs based on historical performance data and projected growth. This helps avoid performance issues due to resource constraints.

- Resource Optimization Techniques: Implement techniques such as VM consolidation, memory ballooning, and storage optimization to improve resource utilization and reduce costs.
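For the capacity-planning item above, a simple starting point is to fit a straight line to historical usage and estimate when it crosses the available capacity. The sketch below uses an ordinary least-squares fit over evenly spaced samples. The function names and the sample figures are hypothetical, and real growth is rarely perfectly linear, so treat the result as a rough early warning.

```python
import math


def linear_trend(values):
    """Least-squares slope and intercept for evenly spaced samples."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def periods_until_full(values, capacity):
    """Periods after the last sample until the trend reaches capacity.

    Returns None when usage is flat or shrinking.
    """
    slope, intercept = linear_trend(values)
    if slope <= 0:
        return None
    crossing = (capacity - intercept) / slope
    return max(0, math.ceil(crossing - (len(values) - 1)))


if __name__ == "__main__":
    monthly_storage_gb = [400, 420, 440, 460, 480]
    print(periods_until_full(monthly_storage_gb, capacity=600))  # 6
```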

Checklist for Ensuring High Availability and Disaster Recovery in a Virtualized Environment

High availability and disaster recovery are paramount for minimizing downtime and ensuring business continuity. A comprehensive plan is crucial, incorporating redundancy and failover mechanisms.

| Aspect | Action Item |
|---|---|
| Virtual Machine Replication | Implement VM replication to a secondary data center or cloud environment. |
| Storage Redundancy | Utilize redundant storage arrays (RAID) and backups to protect against data loss. |
| Network Redundancy | Implement redundant network connections and switches to prevent network outages. |
| Failover Mechanisms | Configure automatic failover mechanisms to quickly switch to backup systems in case of failure. |
| Disaster Recovery Plan | Develop a comprehensive disaster recovery plan that outlines procedures for restoring systems and data in the event of a disaster. |
| Regular Testing | Regularly test the disaster recovery plan to ensure its effectiveness. |
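The "automatic failover" item in the checklist can be illustrated with a small state machine: switch to the standby only after several consecutive failed health checks, so that a single dropped probe does not trigger a failover. The class name, the node names, and the threshold of three are assumptions for this sketch. Production setups rely on the clustering features of the hypervisor or database rather than hand-written logic.

```python
class FailoverMonitor:
    """Promote the standby after N consecutive failed health checks."""

    def __init__(self, primary, standby, failure_threshold=3):
        self.active = primary
        self.standby = standby
        self.failure_threshold = failure_threshold
        self._failures = 0

    def record_check(self, healthy):
        """Feed in one health-check result; return the node now serving traffic."""
        if healthy:
            # Any success resets the streak of failures.
            self._failures = 0
        else:
            self._failures += 1
            if (self._failures >= self.failure_threshold
                    and self.standby is not None):
                # Promote the standby; no further standby remains afterwards.
                self.active, self.standby = self.standby, None
                self._failures = 0
        return self.active


if __name__ == "__main__":
    monitor = FailoverMonitor("db-primary", "db-replica")
    for result in [False, False, True, False, False, False]:
        active = monitor.record_check(result)
    print(active)  # db-replica
```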

Case Studies and Real-World Examples

Virtualizing middle-tier and back-end applications offers significant advantages, and real-world examples showcase the tangible benefits. By moving these components to virtual environments, organizations can achieve improved performance, scalability, and cost efficiency. Let’s examine some specific instances.

A Case Study: E-commerce Platform Virtualization

A large online retailer experienced significant performance bottlenecks during peak shopping seasons. Their monolithic e-commerce application struggled to handle the surge in traffic, resulting in slow response times and frustrated customers. To address this, they virtualized their middle-tier application servers (handling order processing and inventory management) and their back-end database servers using VMware vSphere. Each application component was deployed as a separate virtual machine, allowing for independent scaling.

During peak periods, they could easily add more virtual machines to handle the increased load. The result was a 40% reduction in average response time and a 25% increase in transaction throughput. The improved scalability also allowed them to handle unexpected traffic spikes without service disruptions. Furthermore, the ability to easily create and manage virtual machines reduced deployment time for new features and updates.

Industries Benefiting from Middle-Tier and Back-End Virtualization

Several industries derive substantial benefits from virtualizing their middle-tier and back-end applications. Financial services firms, for instance, rely heavily on robust and scalable systems to process transactions and manage data. Virtualization allows them to quickly provision resources for new services or to scale up during periods of high trading activity. Similarly, the healthcare industry benefits from the improved reliability and disaster recovery capabilities offered by virtualization.

Virtualized systems can be easily replicated and migrated to alternative data centers, ensuring business continuity in the event of an outage. The telecommunications industry also leverages virtualization to manage their complex network infrastructure and applications. The ability to dynamically allocate resources to meet fluctuating demand is crucial in this sector. Finally, the gaming industry benefits from the flexibility and scalability of virtualization to handle large numbers of concurrent users.

Impact of Virtualization on Scalability and Cost Efficiency

Virtualization significantly enhances the scalability and cost efficiency of middle-tier and back-end applications. The ability to easily create and manage virtual machines allows organizations to quickly scale their infrastructure up or down to meet changing demands. This eliminates the need for over-provisioning physical hardware, leading to significant cost savings. Moreover, virtualization simplifies resource management, reducing the need for dedicated IT staff to manage physical servers.

Consolidation of multiple applications onto fewer physical servers also reduces energy consumption and data center space requirements. For example, a company that previously used ten physical servers for its back-end applications might be able to consolidate those applications onto three or four physical servers using virtualization, resulting in considerable savings on hardware, power, and cooling costs. The flexibility to quickly provision resources also reduces the time to market for new applications and features, further enhancing cost-effectiveness.
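The ten-to-three-or-four estimate above can be reproduced with back-of-the-envelope arithmetic. The sketch below assumes the old servers each have 16 cores and average 15% CPU utilization, and that the new hosts are run at no more than 60% so there is headroom for peaks. All of these figures, along with the function name, are hypothetical inputs for illustration rather than data from a real migration.

```python
import math


def hosts_needed(server_count, cores_per_server, avg_utilization,
                 host_cores, target_utilization=0.6, spare_hosts=1):
    """Estimate virtualization hosts needed to absorb existing servers."""
    # Cores actually kept busy across the old fleet, on average.
    demand_cores = server_count * cores_per_server * avg_utilization
    # Cores we are willing to load on each new host.
    usable_cores_per_host = host_cores * target_utilization
    # Spare hosts cover failover and maintenance (N+1 style).
    return math.ceil(demand_cores / usable_cores_per_host) + spare_hosts


if __name__ == "__main__":
    print(hosts_needed(10, 16, 0.15, host_cores=16, spare_hosts=0))  # 3
    print(hosts_needed(10, 16, 0.15, host_cores=16))                 # 4
```

CPU is only one dimension; in practice memory and storage I/O often set the real consolidation limit, so the same calculation is worth repeating for each resource.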

Concluding Remarks

Virtualizing your middle tier and back end isn’t just a trend; it’s a necessity for building modern, scalable, and resilient applications. By leveraging the power of virtualization technologies, you can unlock significant improvements in performance, efficiency, and cost-effectiveness. This journey through the world of virtualization has hopefully illuminated the path to a more robust and adaptable application architecture.

Remember to carefully consider your specific needs and choose the technology that best aligns with your goals. The future of application deployment is virtual, and the benefits are undeniable.

FAQ

What are the biggest challenges in virtualizing legacy applications?

Migrating legacy applications can be challenging due to compatibility issues, potential data loss risks, and the need for thorough testing. Careful planning and a phased approach are crucial.

How does virtualization impact database performance?

Virtualization can impact database performance if not properly configured. Careful resource allocation and optimization are essential to avoid bottlenecks. In practice, fast storage and enough dedicated CPU and memory for the database VM matter most.

What are the security implications of virtualizing middle-tier and back-end applications?

Security is paramount. Virtualized environments require robust security measures, including access control, network segmentation, and regular security audits, to prevent vulnerabilities.

What’s the difference between hypervisors and containers?

Hypervisors create virtual machines (VMs), each with its own operating system. Containers share the host OS kernel, making them more lightweight and efficient but potentially less isolated.