Rubrik Offers $10M Ransomware Victim Compensation

Rubrik offers $10M ransomware compensation to victims: that’s a headline that grabbed my attention! This isn’t just another cybersecurity story; it’s a bold move by Rubrik, offering a significant financial lifeline to those who’ve suffered the devastating effects of ransomware attacks. It raises a lot of questions. Is this a game-changer in the fight against ransomware? Will it actually help victims recover financially and emotionally? And what’s the long-term impact on the cybersecurity landscape? Let’s dive in.

Rubrik’s $10 million program is designed to help organizations recover from ransomware attacks by compensating them for their losses. Eligibility criteria likely involve factors like the use of Rubrik’s products, the severity of the attack, and proof of the attack’s impact. The application process probably includes documentation submission and verification steps. This differs from other cybersecurity companies, which may offer insurance or incident response services but not direct financial compensation on this scale.

It’s a fascinating strategy with potentially far-reaching implications.

Rubrik’s Ransomware Compensation Program

Rubrik’s bold move to offer a $10 million ransomware compensation program represents a significant shift in the cybersecurity landscape. This initiative aims to directly support victims of ransomware attacks, particularly organizations that rely on Rubrik’s data management solutions. The program demonstrates a commitment to combating ransomware and underscores the financial burden these attacks place on individuals and organizations.

This program is designed to incentivize proactive cybersecurity measures and provide a much-needed safety net for those who fall victim.

The program’s key features include a substantial financial commitment, a defined application process, and a focus on supporting victims through recovery. Eligibility is based on specific criteria, and the program aims to compensate victims for verified ransomware-related expenses.

While details are subject to change, the program sets a new precedent for industry responsibility in the fight against ransomware.

Eligibility Criteria for Ransomware Compensation

To qualify for compensation, victims must meet several requirements. These typically include demonstrating that a ransomware attack occurred, providing evidence of the attack (such as law enforcement reports or forensic analysis), and detailing the financial losses incurred directly as a result of the attack. Critically, the program likely requires documentation of attempted recovery efforts and a clear link between the incurred losses and the ransomware event.

Furthermore, victims must agree to cooperate fully with Rubrik’s investigation and comply with the program’s terms and conditions. The specific requirements will be outlined in the official program guidelines.
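To make the requirements above concrete, here is a minimal sketch of an eligibility checklist. It assumes a hypothetical `Claim` record whose fields mirror the criteria described in this section; Rubrik has not published a data model or API for the program, so every name and check here is illustrative only.

```python
from dataclasses import dataclass


@dataclass
class Claim:
    """Hypothetical claim record; field names are illustrative, not Rubrik's form."""
    attack_confirmed: bool      # attack verified, e.g. by forensic analysis
    evidence_docs: list[str]    # law enforcement reports, forensic reports, ...
    documented_losses: float    # USD losses directly attributable to the attack
    recovery_attempted: bool    # records of attempted recovery efforts exist
    agrees_to_cooperate: bool   # applicant accepts the investigation and terms


def eligibility_gaps(claim: Claim) -> list[str]:
    """Return unmet requirements; an empty list means the claim can move to review."""
    gaps = []
    if not claim.attack_confirmed:
        gaps.append("no confirmed ransomware attack")
    if not claim.evidence_docs:
        gaps.append("no supporting evidence")
    if claim.documented_losses <= 0:
        gaps.append("no documented losses")
    if not claim.recovery_attempted:
        gaps.append("no record of recovery efforts")
    if not claim.agrees_to_cooperate:
        gaps.append("terms not accepted")
    return gaps


# Usage: a claim missing evidence and the cooperation agreement
claim = Claim(True, [], 250_000.0, True, False)
print(eligibility_gaps(claim))  # ['no supporting evidence', 'terms not accepted']
```

Returning the list of gaps, rather than a bare yes/no, reflects the idea that applicants might be asked for additional documentation instead of being rejected outright.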

The Application Process for Ransomware Compensation

The application process for Rubrik’s program likely involves submitting a comprehensive application detailing the ransomware attack, including dates, methods used, and financial losses. Supporting documentation such as police reports, forensic analysis reports, and invoices related to recovery efforts will be crucial. The application may also require a detailed description of the victim’s cybersecurity practices before the attack, potentially assessing the level of preparedness and the extent of preventative measures taken.

Rubrik will likely review applications thoroughly to verify the legitimacy of the claims and assess the extent of the damages. The program will probably involve multiple stages of review and may require additional information or documentation throughout the process.

Comparison to Other Cybersecurity Companies’ Initiatives

While Rubrik’s $10 million commitment is substantial, other cybersecurity companies have also implemented initiatives to support ransomware victims. Some companies offer similar compensation programs, although the amounts and eligibility criteria may vary significantly. Other companies focus on providing free incident response services or offering discounted security solutions to affected organizations. A direct comparison requires detailed knowledge of each program’s specifics.

However, Rubrik’s program stands out due to its substantial financial commitment and its focus on compensating victims directly, rather than solely offering technical assistance. This reflects a proactive and responsible stance toward the growing threat of ransomware.

Impact on Ransomware Victims

Rubrik’s bold move to offer $10 million in ransomware compensation to victims represents a significant shift in the cybersecurity landscape. This program has the potential to dramatically alter the experience of ransomware attacks, impacting both the financial and emotional well-being of the individuals and organizations involved. It’s a departure from the traditional reactive approach and signifies a proactive commitment to assisting those who fall victim to these devastating attacks.

This program’s impact on victims’ financial recovery is potentially substantial. Ransomware attacks often lead to significant financial losses, including the ransom payment itself, the cost of recovery efforts, lost productivity, and potential legal fees. Rubrik’s compensation, while not covering all potential losses, can provide a crucial lifeline, allowing victims to rebuild and recover more quickly. The availability of such substantial funding can significantly reduce the long-term financial burden, enabling businesses to resume operations and individuals to regain their financial stability.

Financial Recovery Assistance

The $10 million pool represents a substantial resource for victims. Imagine a small business hit by a ransomware attack that encrypts their critical client data. The ransom demand might be crippling, forcing them to choose between paying the ransom and facing bankruptcy. Rubrik’s program could cover a significant portion of the losses from such an attack, giving the business the financial footing to refuse the ransom demand and focus on data recovery through legitimate means, such as Rubrik’s own backup and recovery solutions.

Refusing to pay also avoids feeding the ransomware ecosystem. This shift from desperate financial struggle to a chance for recovery is transformative.

Alleviation of Psychological Effects

Ransomware attacks are not just financially devastating; they also inflict significant psychological harm. The feeling of helplessness, the violation of privacy, and the fear of reputational damage can lead to stress, anxiety, and even depression. Rubrik’s program offers a measure of relief by demonstrating that victims are not alone in their struggle. The financial assistance helps mitigate the immediate crisis, allowing victims to focus on rebuilding rather than being consumed by overwhelming financial stress.

This support can significantly improve mental well-being and speed up the recovery process.

Incentivizing Better Data Protection Practices

The program could act as a strong incentive for organizations to prioritize robust data protection strategies. If eligibility depends on demonstrating sound security practices, as the program’s likely requirements suggest, businesses have a concrete reason to invest more in cybersecurity measures such as regular backups, multi-factor authentication, and employee training. This proactive approach is far more effective and cost-efficient than reacting to an attack after it has occurred.

The program implicitly promotes a shift from a reactive to a proactive cybersecurity posture.

Hypothetical Successful Claim

Let’s consider a hypothetical scenario: “Acme Corp,” a mid-sized manufacturing company, suffers a ransomware attack that encrypts their production scheduling database. They immediately contact Rubrik, demonstrating they had implemented Rubrik’s recommended data protection best practices, including regular backups and security protocols. After a thorough investigation, Rubrik verifies the attack and determines that Acme Corp is eligible for compensation under the program.

Based on their documented losses, Rubrik awards Acme Corp $500,000 to cover the costs of data recovery, lost productivity, and incident response. This allows Acme Corp to quickly recover from the attack and resume normal operations without facing crippling financial hardship. This successful claim underscores the tangible benefits of the program.

Rubrik’s Business Strategy and Reputation

Rubrik’s decision to offer $10 million in ransomware compensation to victims is a bold strategic move with significant implications for its brand and market standing. This isn’t simply a charitable act; it’s a calculated risk designed to reshape the narrative around data protection and solidify Rubrik’s position in a fiercely competitive market. The program’s success hinges on a delicate balance between enhancing its reputation and managing potential financial and legal liabilities.

Rubrik’s motivations for the program are multifaceted. The primary driver is likely to differentiate itself from competitors. By directly addressing the financial burden of ransomware attacks, Rubrik positions itself as a proactive and customer-centric company, emphasizing the value of its data protection solutions. This strategy aims to build trust and loyalty, attracting customers who are increasingly concerned about the devastating consequences of ransomware. Secondary motivations might include improving market share and bolstering its brand image as a leader in cybersecurity.

The program can serve as a powerful marketing tool, showcasing Rubrik’s commitment to customer success and its confidence in the efficacy of its products.

Potential Benefits for Rubrik’s Brand Image and Market Position

The potential benefits are substantial. A successful compensation program can significantly enhance Rubrik’s reputation as a trustworthy and reliable partner in the fight against ransomware. Positive media coverage and word-of-mouth marketing could drive increased sales and market share. Moreover, the program could attract top talent within the cybersecurity industry, further strengthening Rubrik’s competitive edge. By demonstrating a willingness to bear some of the financial burden associated with ransomware attacks, Rubrik positions itself as a company that truly understands and addresses the challenges faced by its customers.

This builds a stronger emotional connection, fostering customer loyalty and potentially leading to higher customer lifetime value.

Potential Risks Associated with the Program

Despite the potential upsides, the program carries significant risks. The most pressing concern is the potential for a surge in claims, exceeding the $10 million budget. This could strain Rubrik’s resources and potentially damage its reputation if it’s perceived as failing to honor its commitment. Furthermore, the program could invite legal challenges. Determining eligibility for compensation and establishing fair compensation amounts will be complex and could lead to disputes.

The program’s success will depend heavily on Rubrik’s ability to effectively manage claims and navigate any legal complexities. The possibility of fraudulent claims also poses a significant risk, requiring robust verification processes to prevent abuse.

Pros and Cons of Rubrik’s Approach

| Pros | Cons |
|---|---|
| Enhanced brand reputation and customer loyalty | Potential for exceeding the compensation budget |
| Increased market share and competitive advantage | Risk of legal challenges and disputes |
| Improved customer acquisition and retention | Possibility of fraudulent claims |
| Attracting top talent in the cybersecurity industry | Complex claim processing and verification |

The Ransomware Landscape and Industry Response

The ransomware threat continues to evolve at an alarming rate, impacting businesses and individuals globally. The sheer financial cost and operational disruption caused by these attacks are forcing organizations to re-evaluate their security postures and prompting a wider industry response, including innovative solutions like Rubrik’s compensation program. However, understanding the broader landscape is crucial to effectively combatting this persistent threat.

Rubrik’s $10 million ransomware compensation program is a notable initiative, but it’s just one piece of a much larger puzzle. While many cybersecurity companies offer some level of support to clients after a ransomware attack, few offer direct financial compensation on this scale. This approach represents a significant shift in the industry, highlighting the increasing severity of ransomware attacks and the need for proactive measures beyond traditional security solutions. Other responses have focused more on incident response services, threat intelligence sharing, and improved security software.

A more holistic approach, combining preventative measures with post-attack support, is proving increasingly necessary.

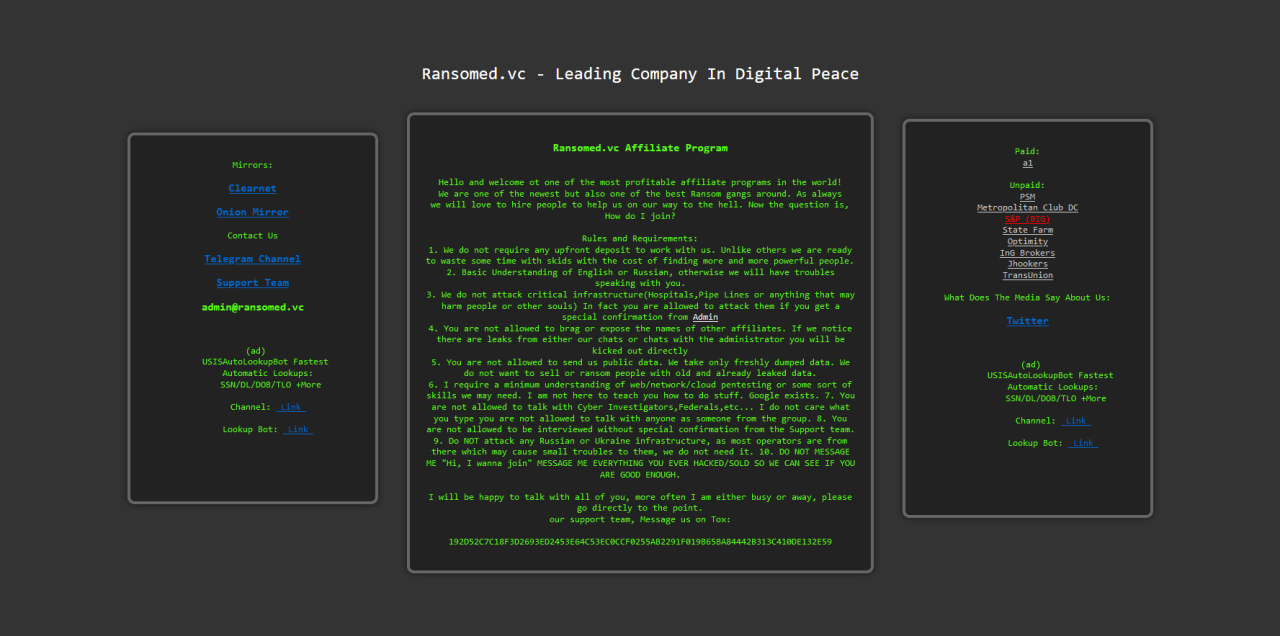

Current State of the Ransomware Landscape

The ransomware landscape is characterized by sophisticated attacks, constantly evolving tactics, and substantial financial impacts. Attack vectors range from phishing emails and exploiting software vulnerabilities to leveraging compromised credentials and supply chain attacks. The financial burden is immense: a single attack can cost an organization millions of dollars, and the collective toll runs into billions annually in ransom payments, recovery costs, business disruption, and reputational damage.

For example, the Colonial Pipeline attack in 2021 resulted in a reported $4.4 million ransom payment, in addition to significant operational disruption and legal repercussions. The average ransom demand has also steadily increased over the years, further emphasizing the severity of the threat. The increasing use of double extortion, where attackers steal data before encrypting it and threaten to release it publicly unless a ransom is paid, significantly increases the pressure on victims.

Ransomware Mitigation Best Practices

Organizations need a multi-layered approach to mitigate ransomware risk effectively. A comprehensive strategy should incorporate several key elements:

- Regularly update and patch software and operating systems to address known vulnerabilities.

- Implement strong access controls and multi-factor authentication (MFA) to limit unauthorized access to sensitive data.

- Conduct regular security awareness training for employees to educate them about phishing scams and other social engineering tactics.

- Implement robust data backup and recovery solutions that are regularly tested and are offline or immutable.

- Segment networks to limit the impact of a ransomware attack should one occur.

- Develop and regularly test an incident response plan to guide actions in the event of a ransomware attack.

- Employ endpoint detection and response (EDR) solutions to monitor for and detect malicious activity.

These best practices, when implemented effectively, can significantly reduce the likelihood and impact of a successful ransomware attack. Ignoring these precautions significantly increases the risk of becoming a victim.
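The “regularly tested” part of the backup recommendation is easy to state and easy to skip. As one hedged illustration, the sketch below records a SHA-256 checksum for every file in a backup and later reports files that are missing or altered. The function names and the JSON manifest format are assumptions for this example; it is a simple integrity check, not a substitute for a full restore test or for storing the manifest itself somewhere offline or immutable.

```python
import hashlib
import json
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in 1 MiB chunks so large backup files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def write_manifest(backup_dir: Path, manifest: Path) -> None:
    """At backup time, record a checksum for every file under backup_dir."""
    entries = {
        str(p.relative_to(backup_dir)): sha256_of(p)
        for p in sorted(backup_dir.rglob("*"))
        if p.is_file()
    }
    manifest.write_text(json.dumps(entries, indent=2))


def verify_backup(backup_dir: Path, manifest: Path) -> list[str]:
    """Report files that are missing or whose contents changed since the manifest."""
    expected = json.loads(manifest.read_text())
    problems = []
    for rel, checksum in expected.items():
        p = backup_dir / rel
        if not p.is_file():
            problems.append(f"missing: {rel}")
        elif sha256_of(p) != checksum:
            problems.append(f"modified: {rel}")
    return problems
```

Keep the manifest outside the backup directory, ideally on immutable storage; otherwise an attacker who can encrypt the backups can also rewrite the checksums.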

The Role of Cybersecurity Insurance

Cybersecurity insurance plays a crucial role in mitigating the financial impact of ransomware attacks. Policies can cover ransom payments (though this is often subject to limitations and conditions), data recovery costs, legal fees, and business interruption expenses. However, it’s essential to understand that insurance is not a replacement for robust security practices. Many insurers now require organizations to demonstrate a commitment to strong security measures as a condition for coverage.

Moreover, the cost of cybersecurity insurance can vary widely depending on the organization’s risk profile and the level of coverage sought. A comprehensive insurance policy, coupled with strong security practices, provides a more robust defense against ransomware attacks.

Legal and Ethical Considerations

Rubrik’s ambitious $10 million ransomware compensation program, while laudable in its intent, raises complex legal and ethical questions. The program’s structure, implementation, and potential consequences need careful consideration, particularly regarding liability, fairness, and the verification of claims. The potential for abuse and the program’s impact on the broader ransomware landscape also deserve scrutiny.

The program’s legal implications are multifaceted. Rubrik faces potential exposure from fraudulent claims and potential liability where the program’s criteria aren’t clearly defined or consistently applied. For example, disputes could arise over the definition of a “ransomware attack,” the verification of losses, or the extent of Rubrik’s responsibility for pre-existing vulnerabilities. The program’s terms and conditions must be meticulously drafted to minimize legal exposure and provide clear guidelines for victims.

Failure to do so could lead to costly litigation and damage Rubrik’s reputation.

Liability Issues in Rubrik’s Compensation Program

The potential for legal challenges is significant. Rubrik could face lawsuits from victims whose claims are denied, alleging breach of contract or misrepresentation. Furthermore, there’s a risk of class-action lawsuits if the program is perceived as unfairly excluding certain victims or groups. Successfully navigating these legal challenges requires robust internal processes for claim evaluation, clear communication with victims, and a transparent appeals process.

The program’s terms must be carefully crafted to define eligibility criteria, the types of losses covered, and the process for submitting and adjudicating claims, minimizing ambiguity and potential for dispute. The legal team will need to anticipate various scenarios and ensure the program operates within existing legal frameworks regarding contracts, fraud, and consumer protection laws.

Ethical Considerations of Financial Incentives for Ransomware Victims

Offering financial incentives to ransomware victims raises important ethical questions. Some argue that such programs could inadvertently reward malicious actors, for example if compensation ends up funding ransom payments, potentially encouraging more ransomware attacks. Others contend that it’s a necessary measure to alleviate the suffering of victims and could deter future attacks by helping victims recover without paying, which makes attacks less profitable. Balancing the potential benefits with the ethical concerns requires careful consideration.

A transparent and robust claims process is crucial to ensure fairness and prevent the program from being exploited. The program should also be designed to discourage future attacks, perhaps by incorporating requirements for victims to report incidents to law enforcement and cooperate in investigations.

Challenges in Verifying the Legitimacy of Claims

Verifying the legitimacy of claims presents a substantial challenge. Ransomware attacks can be complex, and victims may not always have complete documentation of their losses. Furthermore, there’s a risk of fraudulent claims, where individuals attempt to exploit the program for personal gain. To mitigate this risk, Rubrik needs a rigorous verification process involving independent audits, forensic analysis of systems, and potentially collaboration with cybersecurity experts and law enforcement.

This could involve detailed documentation requirements, interviews with victims, and potentially even independent investigations to validate the extent of the damages.
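One piece of that verification, checking claimed losses against supporting invoices, can be sketched as follows. This is a hypothetical illustration: the `LossItem` record, the 5% `tolerance`, and the category names are assumptions, not part of any published Rubrik process.

```python
from dataclasses import dataclass


@dataclass
class LossItem:
    """Hypothetical line item in a claim."""
    category: str       # e.g. "recovery", "lost productivity", "incident response"
    claimed: float      # USD amount the victim claims
    documented: float   # USD amount backed by invoices or audited records


def reconcile(items: list[LossItem], tolerance: float = 0.05) -> tuple[float, list[str]]:
    """Return the verifiable total and the categories whose claims exceed their
    documentation by more than `tolerance` (a fraction of the documented amount)."""
    verified_total = 0.0
    flagged = []
    for item in items:
        # Only the documented portion of each claim counts toward the verified total.
        verified_total += min(item.claimed, item.documented)
        if item.claimed > item.documented * (1 + tolerance):
            flagged.append(item.category)
    return verified_total, flagged
```

Flagged categories are not rejected automatically in this sketch; they would simply be candidates for the interviews or independent investigation mentioned above.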

Hypothetical Legal Challenge

Imagine a different scenario in which a company, “Acme Corp,” submits a claim to Rubrik’s program, claiming significant financial losses due to a ransomware attack. Acme Corp, however, failed to implement basic cybersecurity measures, such as regular backups and multi-factor authentication, which significantly contributed to the severity of the attack. Rubrik, after a thorough investigation, denies the claim, citing Acme Corp’s negligence as a contributing factor.

Acme Corp could then sue Rubrik, arguing that the program’s terms were unclear about the role of victim negligence in claim eligibility, leading to a breach of contract. This hypothetical lawsuit highlights the need for precise language in the program’s terms and conditions and a robust, transparent process for evaluating claims, minimizing the risk of future disputes.

Future Implications

Rubrik’s bold move to offer $10 million in ransomware compensation represents a significant shift in the cybersecurity landscape. This initiative isn’t just a PR stunt; it has the potential to reshape the industry’s approach to ransomware, influencing both victim behavior and attacker strategies. The long-term effects are complex and far-reaching, demanding careful consideration.

Ransomware compensation programs will likely become more prevalent, though with variations in approach. We may see more cybersecurity firms, insurance providers, and even governments exploring similar models, perhaps with different payout structures and eligibility criteria. The success of Rubrik’s program, measured by its impact on victim recovery and its influence on attacker behavior, will heavily influence this trend. We might see a tiered system emerge, with compensation amounts varying based on factors like the size of the organization, the type of data compromised, and the effectiveness of the victim’s security posture.
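To illustrate what such a tiered system might look like, the sketch below scales a payout by organization size and a security-posture score. Every tier boundary, dollar cap, and the 50% to 100% scaling rule are invented for illustration; no such schedule has been announced, and a real program would likely also weigh the type of data compromised, which this sketch omits.

```python
# Hypothetical size tiers: (max employees, per-claim cap in USD). Illustrative only.
SIZE_TIERS = [(50, 100_000), (500, 500_000), (5_000, 2_000_000)]
LARGE_ORG_CAP = 5_000_000  # hypothetical cap for organizations above every tier


def tiered_payout(verified_loss: float, employees: int, posture_score: float) -> float:
    """Scale compensation by organization size and security posture.

    posture_score is a hypothetical 0.0-1.0 rating of controls in place
    (backups, MFA, training); weaker postures recover a smaller share of losses.
    """
    if not 0.0 <= posture_score <= 1.0:
        raise ValueError("posture_score must be between 0 and 1")
    # First tier whose employee limit covers the organization sets the cap.
    cap = next((c for limit, c in SIZE_TIERS if employees <= limit), LARGE_ORG_CAP)
    share = 0.5 + 0.5 * posture_score  # 50% of the loss at worst, 100% at best
    return min(verified_loss * share, cap)
```

Tying the recoverable share to security posture is one way a tiered program could reward preparation rather than merely insure against its absence.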

A parallel trend could be the rise of “ransomware insurance” specifically designed to cover such payouts, further incentivizing companies to invest in robust cybersecurity measures.

The Influence on Ransomware Attack Strategies

Rubrik’s program could inadvertently influence attacker strategies. While it doesn’t directly deter attacks, it might subtly shift the targets. Attackers may prioritize organizations perceived as less likely to receive significant compensation, focusing on smaller businesses or those with weaker insurance coverage. Conversely, it might push attackers toward more sophisticated attacks on high-value data, such as double extortion, where the threat of a public leak keeps pressure on victims even when their recovery costs are covered.

This necessitates a proactive response from the cybersecurity community, emphasizing comprehensive security measures beyond relying solely on compensation programs as a deterrent. The success of this program, in part, will depend on its ability to shift the economic calculus of ransomware attacks, making them less profitable for attackers.

Potential Improvements to Rubrik’s Program

While Rubrik’s program is innovative, several improvements could enhance its effectiveness. Expanding eligibility criteria to include a wider range of victims, particularly smaller businesses, would significantly broaden its impact. Clarifying the process and streamlining the application procedure would reduce the burden on already-stressed victims. Adding a component focused on post-attack recovery support, beyond just financial compensation, would provide more holistic assistance.

This could involve incident response expertise, data recovery assistance, and legal guidance. Finally, a transparent reporting mechanism detailing the program’s successes and challenges would build trust and inform future iterations. Learning from the program’s implementation and adapting it based on feedback will be key to its long-term success.

Infographic Design: Rubrik’s Ransomware Compensation Program

The infographic would be visually appealing and easy to understand. The title, “Rubrik’s $10M Ransomware Compensation Program: Protecting Businesses from Cyberattacks,” would be prominently displayed. A central image could depict a shield protecting a business from ransomware attacks. The infographic would use a combination of icons, charts, and text. One section would highlight the key features of the program: the $10 million compensation pool, eligibility criteria (e.g., use of Rubrik’s products, documented recovery efforts), and the application process.

Another section would showcase the potential impact: reduced financial burden on victims, improved data recovery rates, and a potential deterrent to ransomware attacks. A final section could present statistics illustrating the growing ransomware threat landscape and the program’s contribution to mitigating it. The color scheme would be professional and reassuring, using blues and greens to convey security and stability.

A clear call to action – “Learn more about Rubrik’s commitment to ransomware protection” – would be included, linking to the program’s website.

Wrap-Up: Rubrik Offers $10M Ransomware Compensation to Victims

Rubrik’s $10 million ransomware compensation program is a bold and potentially groundbreaking initiative. While it faces challenges like verifying claims and potential legal issues, its impact on the ransomware landscape could be significant. It’s a high-stakes gamble, but one that could redefine the relationship between cybersecurity companies and their clients facing this growing threat. Will it deter attacks? Will it encourage better data protection? Only time will tell, but this is definitely a story to watch unfold.

Clarifying Questions

What types of ransomware attacks are covered by Rubrik’s program?

The specifics likely depend on the program’s fine print, but it probably covers a range of attacks impacting data availability and integrity.

Is there a limit to the amount of compensation a victim can receive?

While the total program is $10 million, individual payouts are likely capped at a certain amount per claim, based on factors like the extent of the damage.

What happens if Rubrik receives more claims than the $10 million allows?

This is a crucial question. They’ll probably have a system for prioritizing claims based on factors like severity and documentation. A first-come, first-served approach is also possible, but unlikely.
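The two answers above, a per-claim cap and prioritization when claims exceed the pool, can be combined in a short sketch. Only the $10 million pool size comes from the program announcement; the $1 million per-claim cap, the integer severity score, and first-come, first-served tie-breaking are hypothetical assumptions.

```python
POOL_USD = 10_000_000          # total program size, from the announcement
PER_CLAIM_CAP_USD = 1_000_000  # hypothetical per-claim cap; the real figure is not stated


def allocate(claims: list[tuple[str, float, int]]) -> dict[str, float]:
    """claims: (claim_id, verified_loss, severity), higher severity = higher priority.

    Equal-severity claims keep their submission order because sorted() is stable,
    so ties fall back to first come, first served.
    """
    remaining = POOL_USD
    awards = {}
    for claim_id, loss, _severity in sorted(claims, key=lambda c: -c[2]):
        # Each award is limited by the verified loss, the cap, and what is left.
        award = min(loss, PER_CLAIM_CAP_USD, remaining)
        awards[claim_id] = award
        remaining -= award
    return awards
```

Once the pool is exhausted, later claims receive zero in this sketch; a real program might instead pro-rate awards or queue claims for a later funding round.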

What kind of proof is required to make a successful claim?

Expect a rigorous process involving forensic reports, incident response documentation, and possibly even legal documentation related to the attack and resulting losses.